09_Introduction to Convolution Neural Networks CNN


1. Ch. 9: Introduction to Convolution Neural Networks (CNN). KH Wong. CNN. V8c 1

2. Introduction: CNNs are very popular. Toolboxes: TensorFlow, cuda-convnet and Caffe (user friendlier). A high-performance (multi-class) classifier, successful in object recognition, handwritten optical character recognition (OCR), image noise removal, etc. Easy to implement. Slow in learning, fast in classification.

3. Overview of this note. Prerequisite: fully connected back-propagation neural networks (BPNN), in http://www.cse.cuhk.edu.hk/~khwong/www2/cmsc5707/5707_08_neural_net.pptx. Convolution neural networks (CNN): Part A: feed forward of CNN; Part B: feed backward of CNN.

4. Overview of Convolution Neural Networks

5. An example: optical character recognition (OCR). Example test_example_CNN.m in http://www.mathworks.com/matlabcentral/fileexchange/38310-deep-learning-toolbox, based on a database (mnist_uint8, from http://yann.lecun.com/exdb/mnist/): 60,000 training examples (28x28 pixels each) and 10,000 testing samples (a different dataset). After training, given an unknown image, the network tells whether it is 0, 1, ..., or 9. Error rate 11% using 1 epoch (about 200 seconds of training); error rate 1.2% using 100 epochs (hours of training). Image source: http://andrew.gibiansky.com/blog/machine-learning/k-nearest-neighbors-simplest-machine-learning/

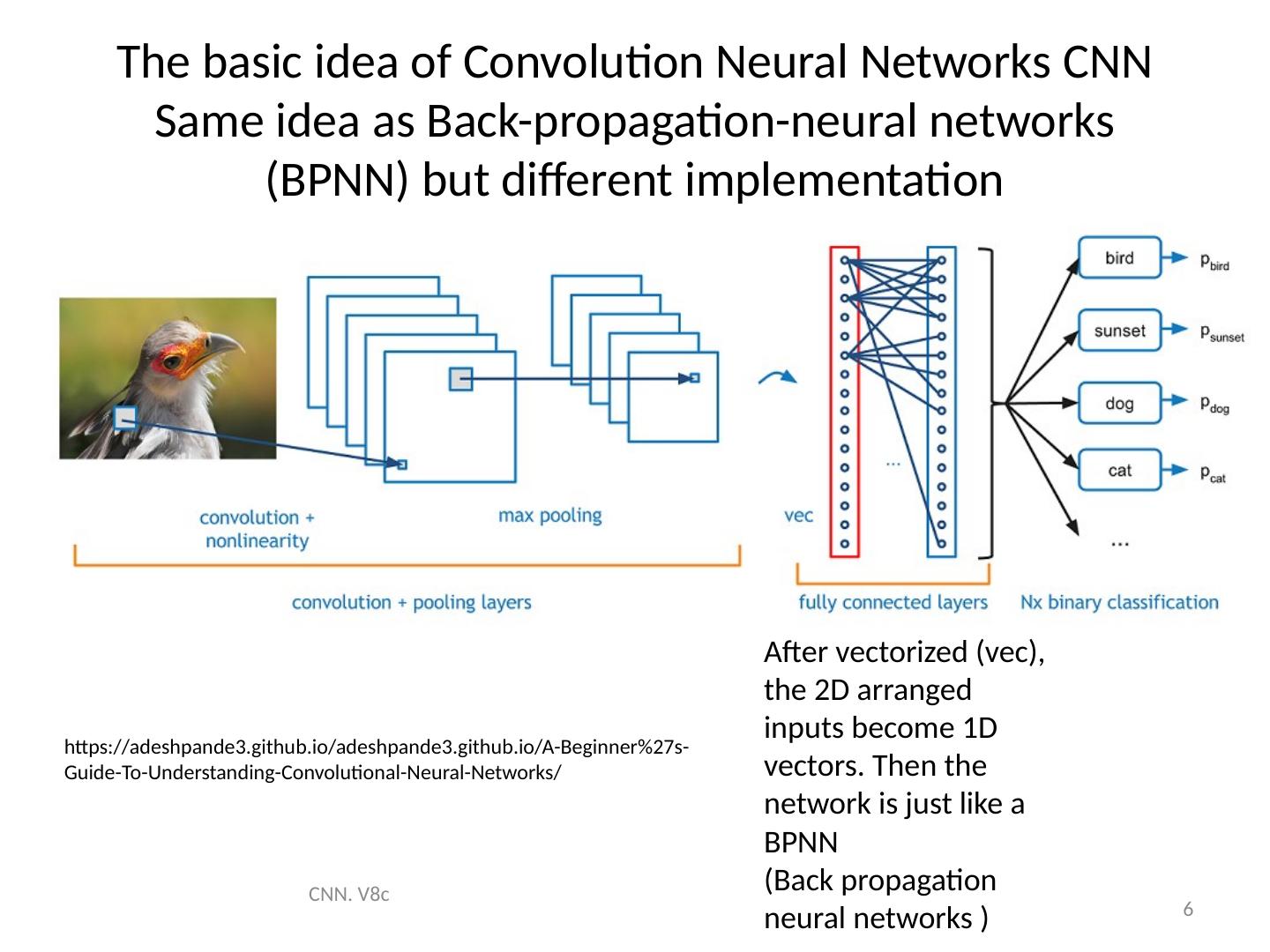

6. The basic idea of Convolution Neural Networks (CNN): the same idea as back-propagation neural networks (BPNN), but a different implementation. After vectorization (vec), the 2D-arranged inputs become 1D vectors; from then on the network is just like a BPNN. Source: https://adeshpande3.github.io/adeshpande3.github.io/A-Beginner%27s-Guide-To-Understanding-Convolutional-Neural-Networks/
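A minimal sketch of the vec step in plain Python, assuming feature maps stored as nested lists and flattened map by map, row by row (MATLAB's column-major order would give a different but equally valid ordering, as long as it is used consistently). The names `vec` and `fully_connected` and the example weights are hypothetical; the second function is one ordinary BPNN layer before its activation function.

```python
def vec(feature_maps):
    """Flatten a list of 2D feature maps into one 1D list (map by map, row by row)."""
    return [v for fmap in feature_maps for row in fmap for v in row]


def fully_connected(x, weights, biases):
    """One BPNN-style layer before activation: y[k] = sum_i weights[k][i] * x[i] + biases[k]."""
    if any(len(w_row) != len(x) for w_row in weights):
        raise ValueError("each weight row must match the input length")
    return [sum(w * xi for w, xi in zip(w_row, x)) + b
            for w_row, b in zip(weights, biases)]


# Hypothetical example: two 2x2 feature maps feeding two output neurons.
maps = [[[1, 2], [3, 4]],
        [[5, 6], [7, 8]]]
x = vec(maps)                          # [1, 2, 3, 4, 5, 6, 7, 8]
weights = [[1] * 8,                    # neuron 0 adds up every input
           [0, 0, 0, 0, 1, 1, 1, 1]]   # neuron 1 only looks at the second map
print(fully_connected(x, weights, biases=[0, -1]))  # [36, 25]
```

Once the maps are flattened, nothing in `fully_connected` knows the data was ever 2D, which is exactly why the last layers behave like a BPNN.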

7. Basic structure of CNN: the convolution layer. Here we see how convolution is used as a feature identifier.

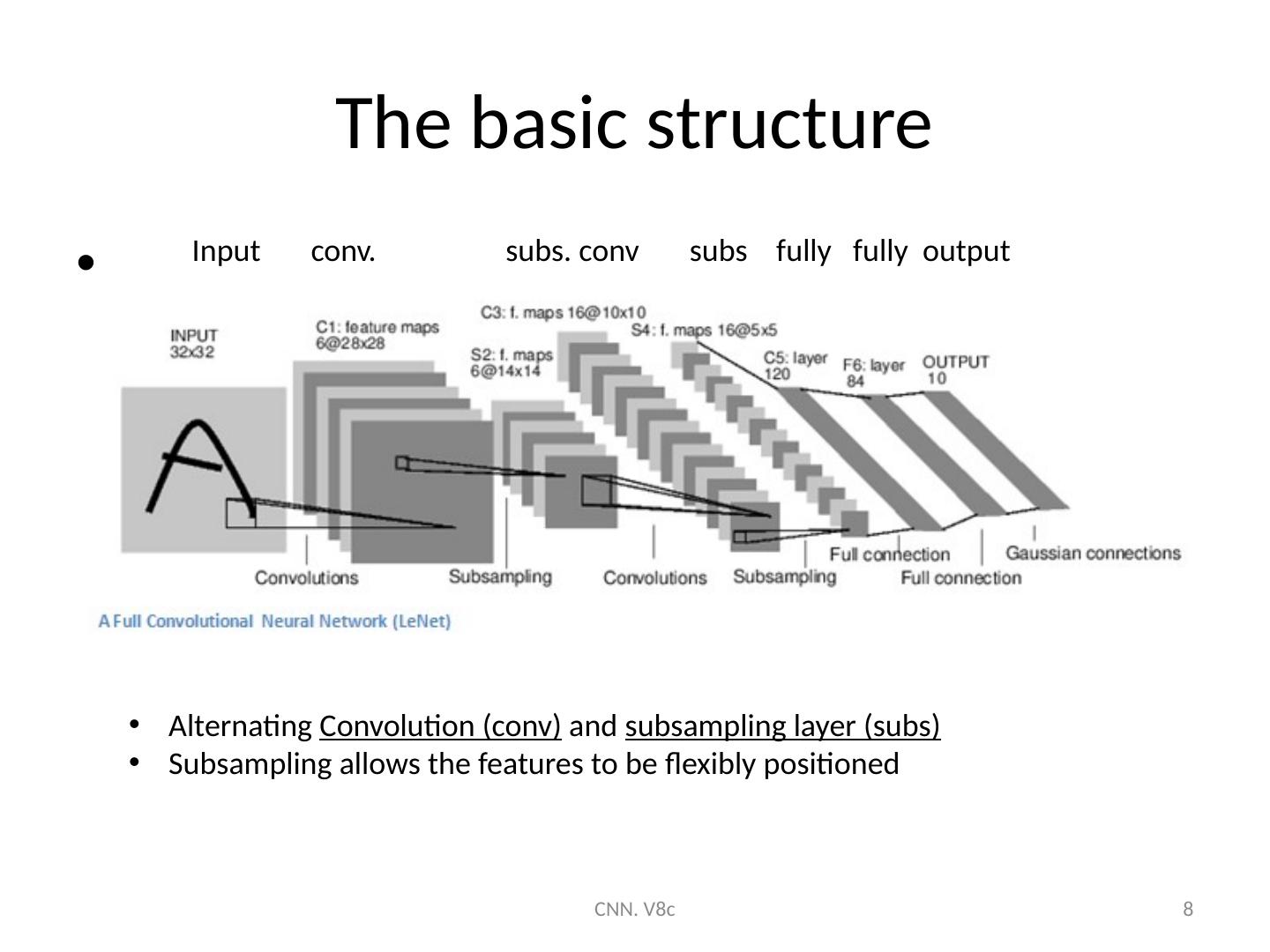

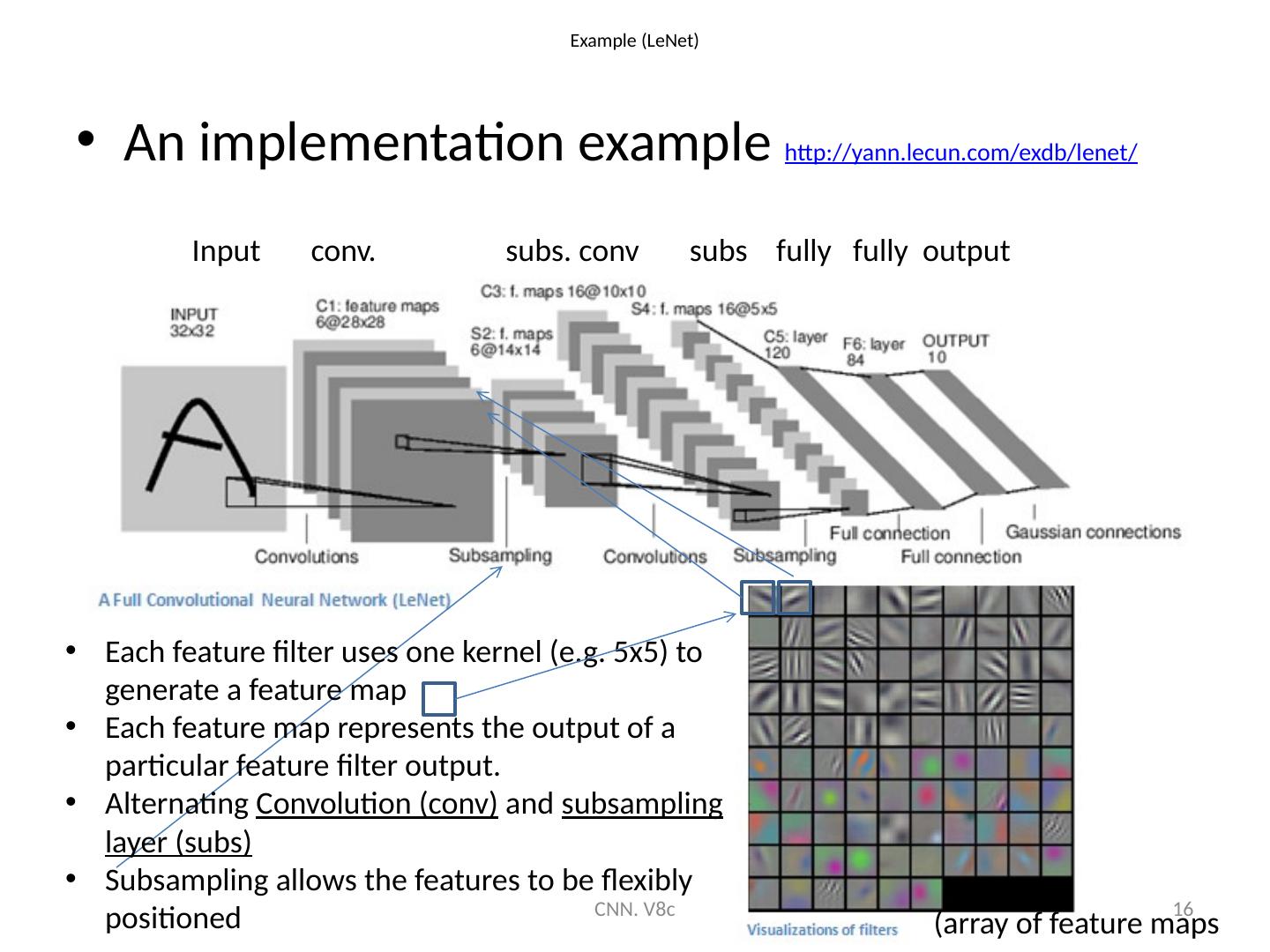

8. The basic structure: Input → conv. → subs. → conv. → subs. → fully connected → fully connected → output. Convolution (conv.) and subsampling (subs.) layers alternate; subsampling allows the features to be flexibly positioned.

9. Convolution (conv.) layer, example: from the input layer to the first hidden layer. The first hidden layer represents the filter outputs of a certain feature. So, what is a feature? The answer is in the next slide.

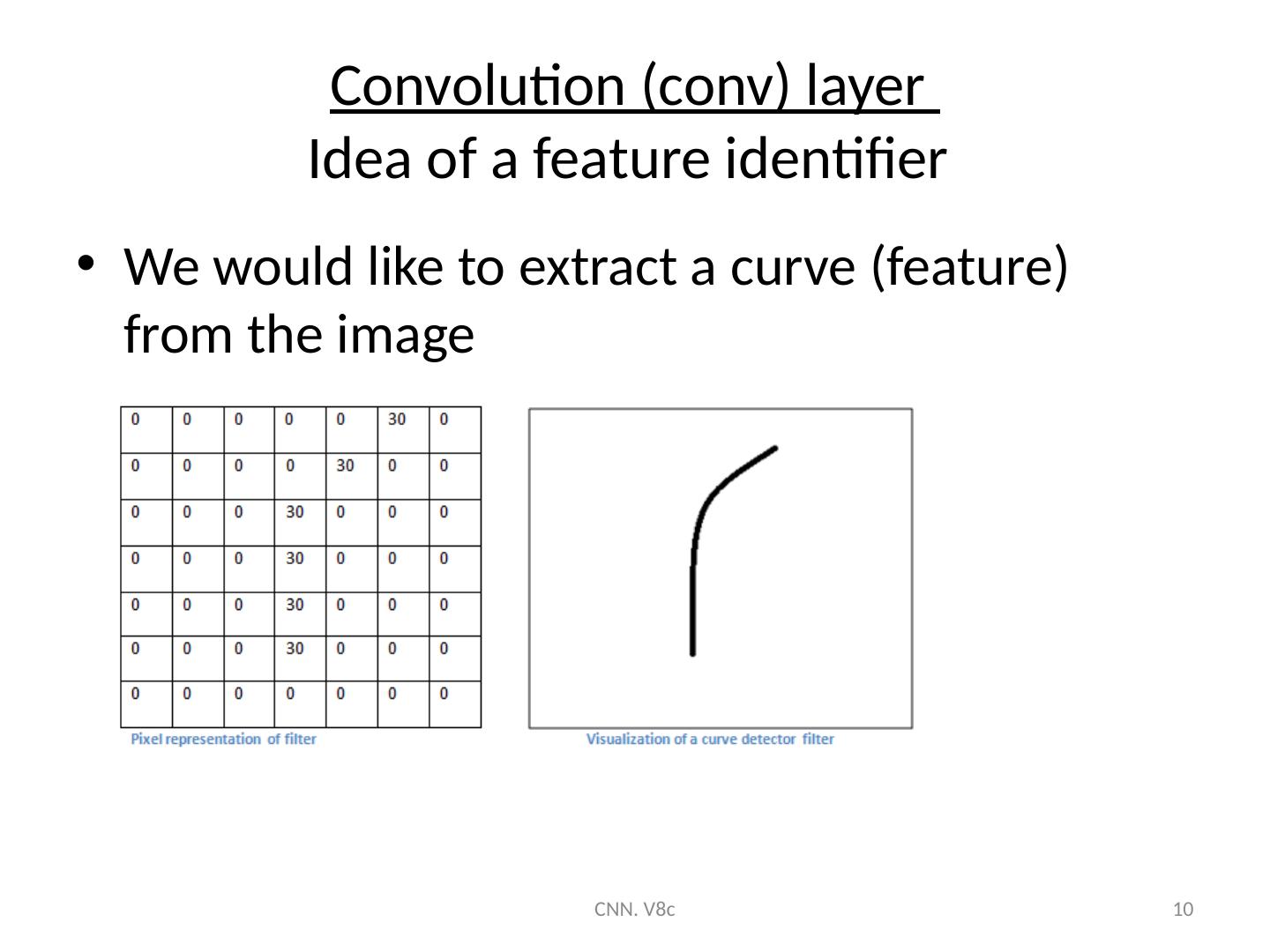

10. Convolution (conv.) layer, the idea of a feature identifier: we would like to extract a curve (feature) from the image.

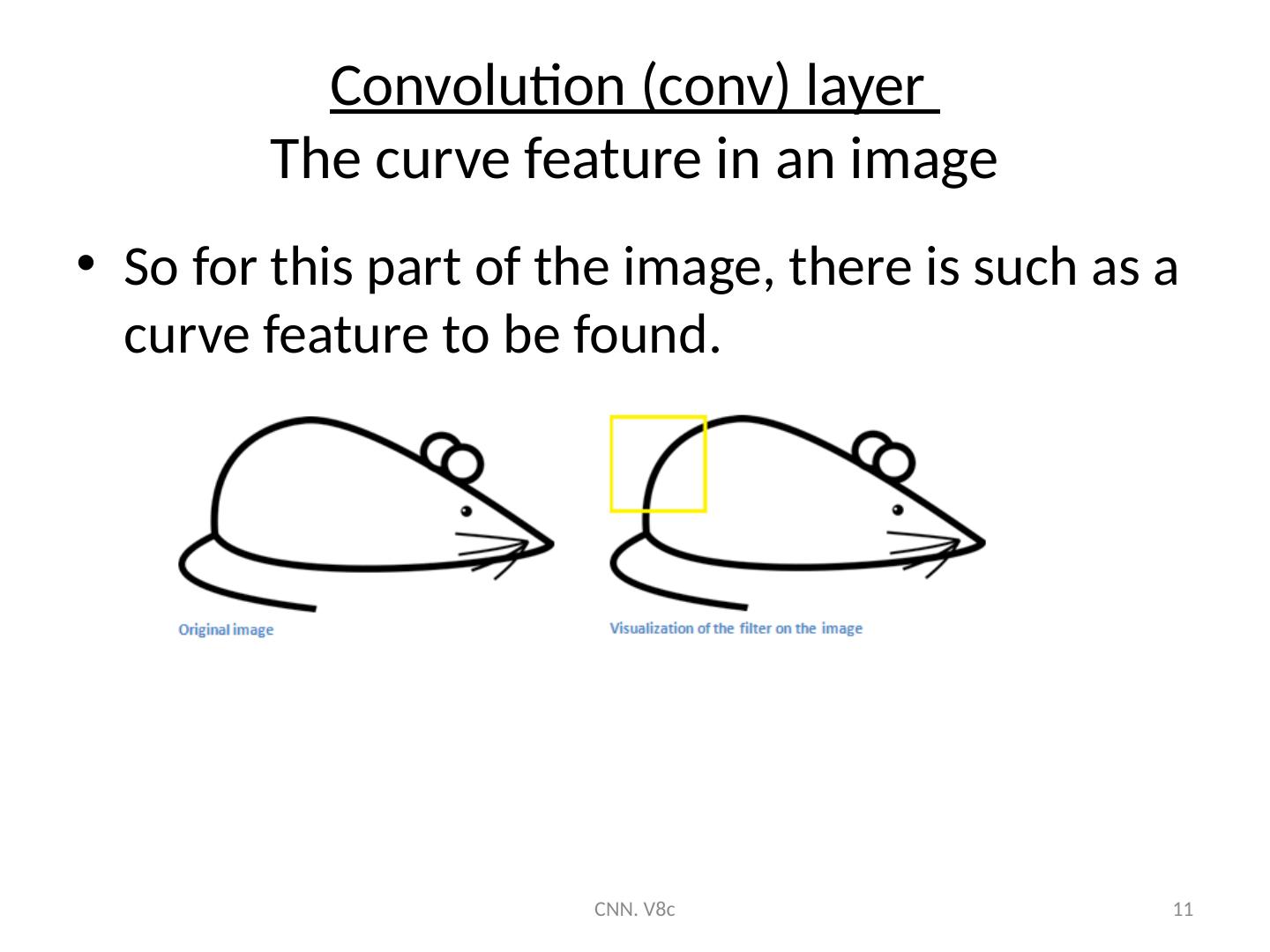

11. Convolution (conv.) layer, the curve feature in an image: this part of the image contains such a curve feature.

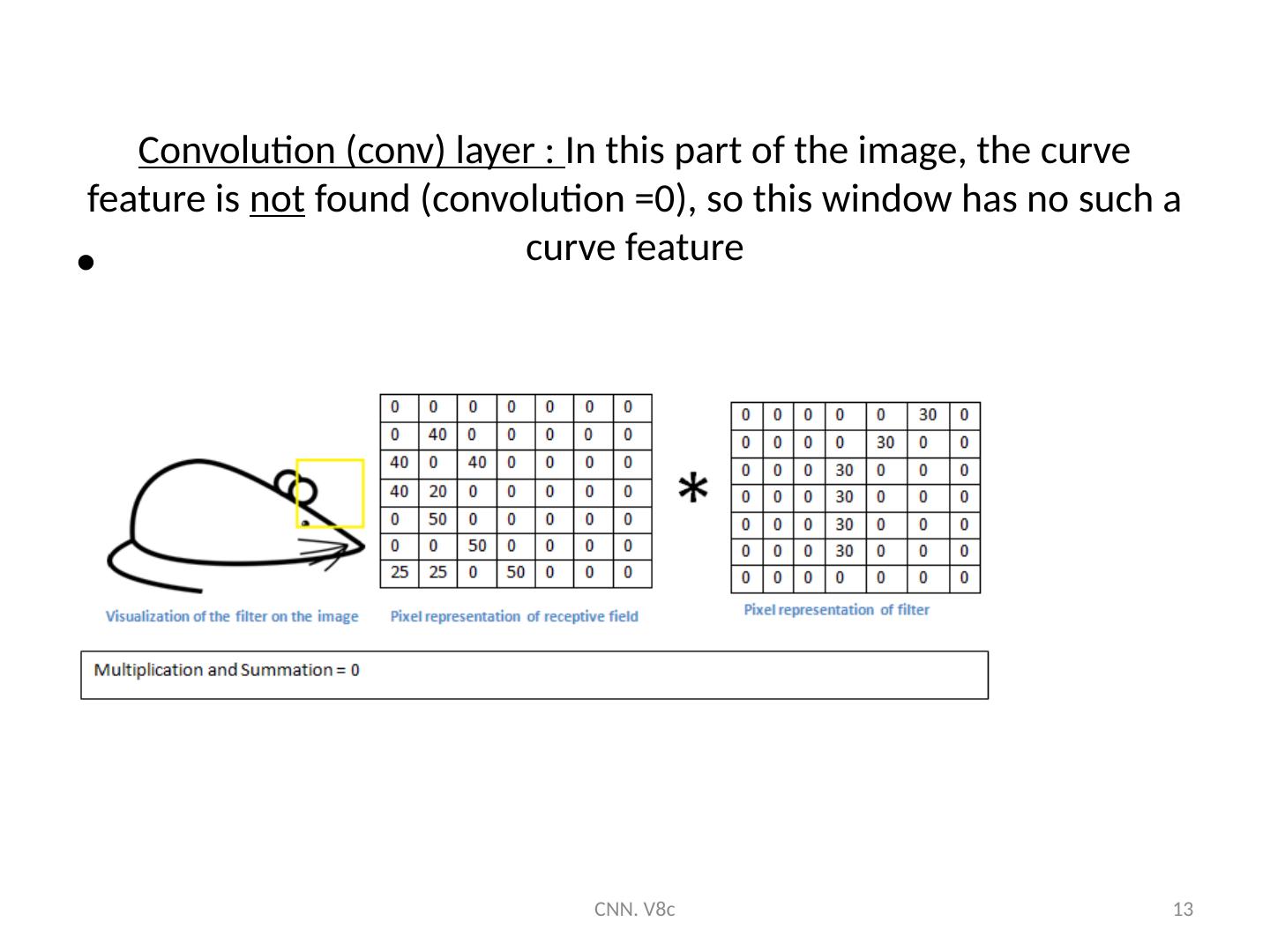

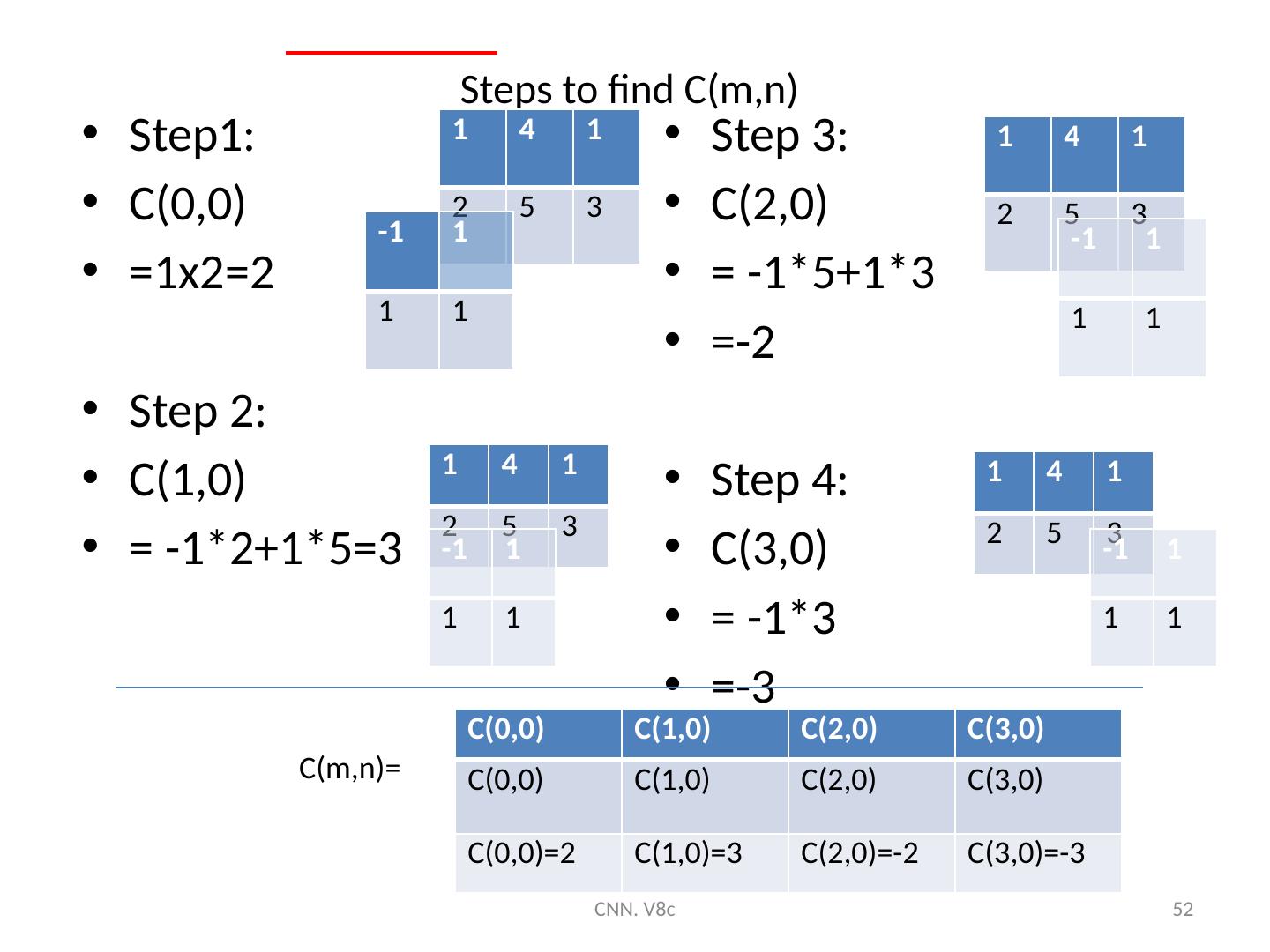

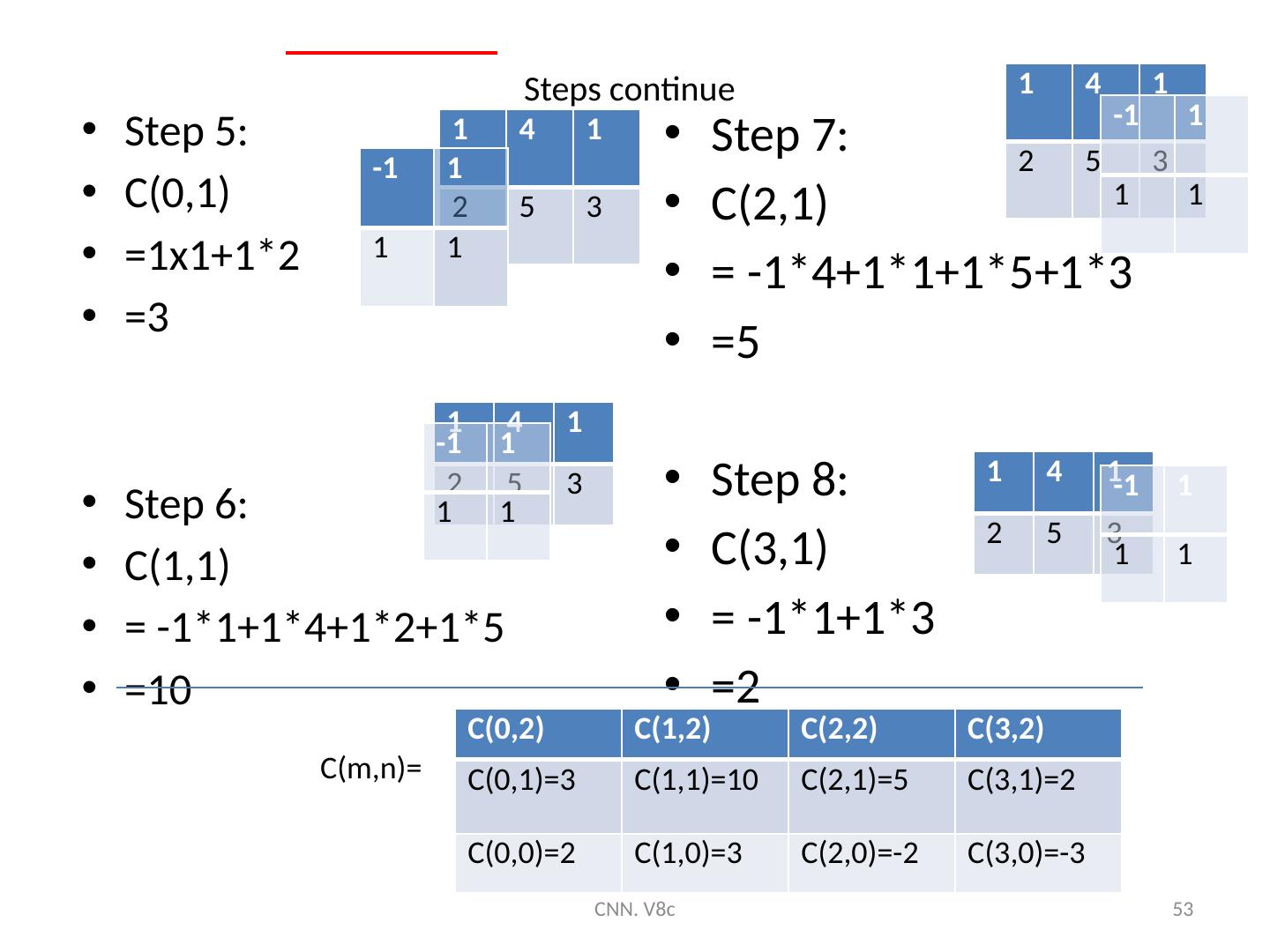

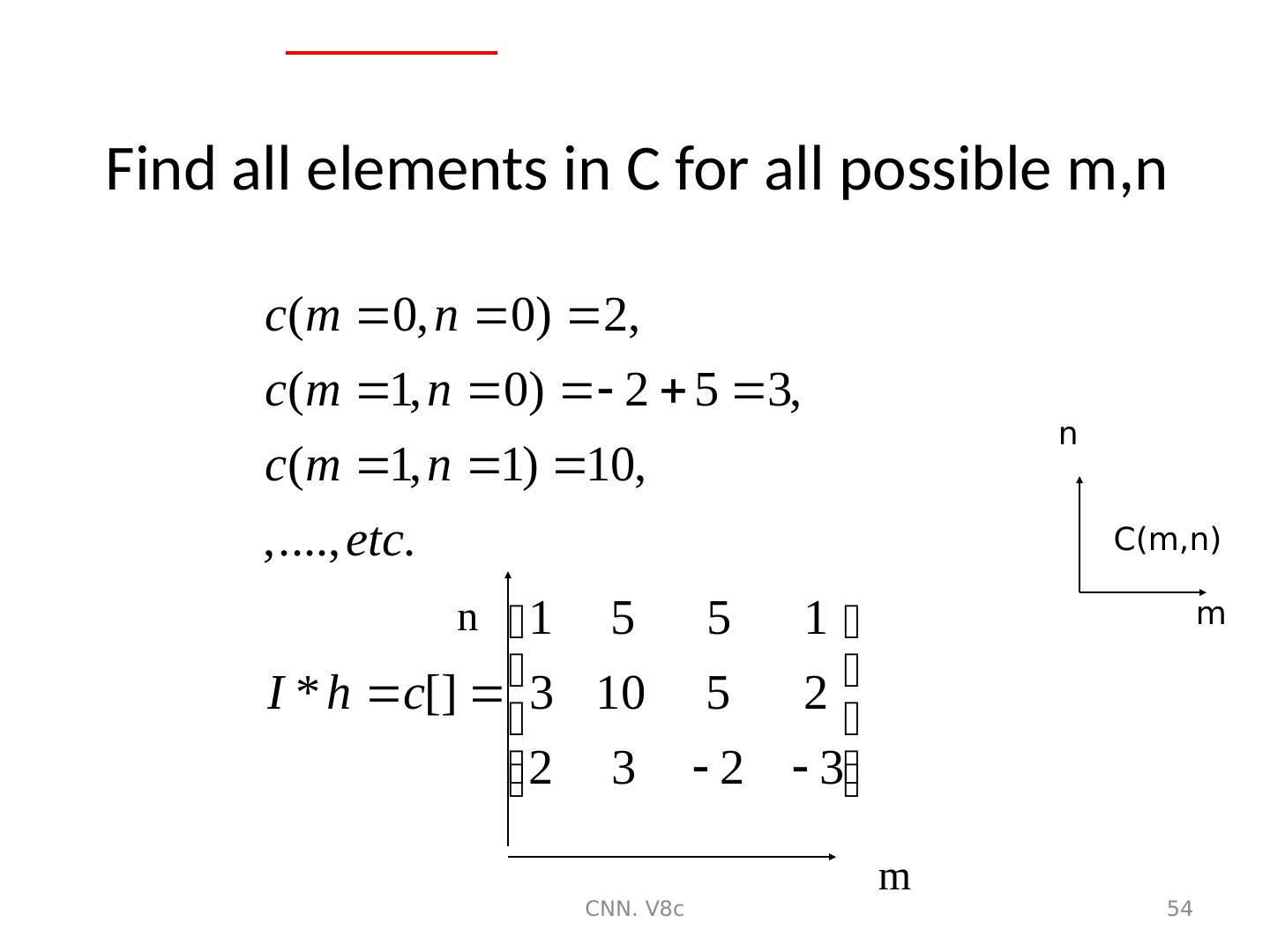

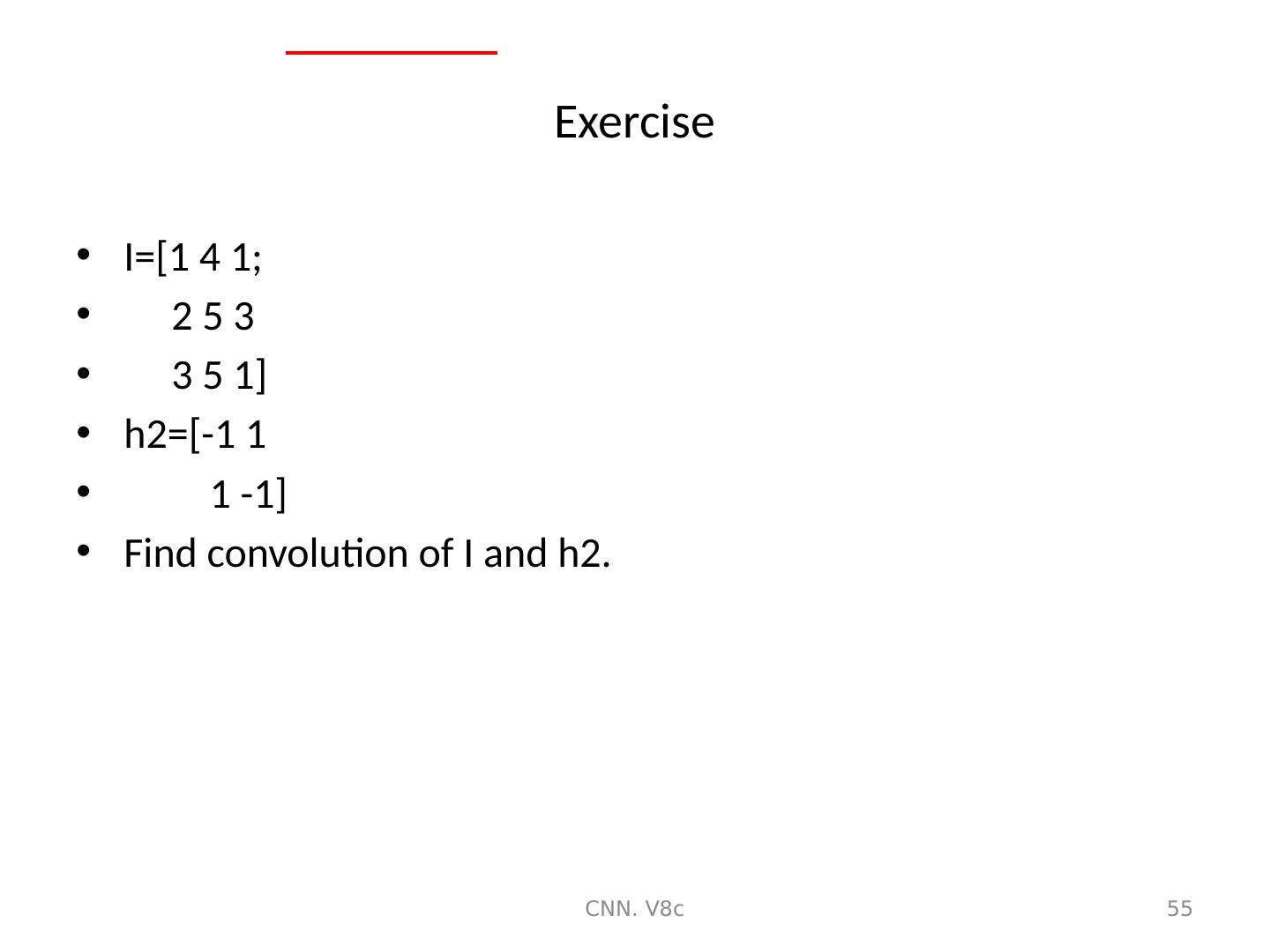

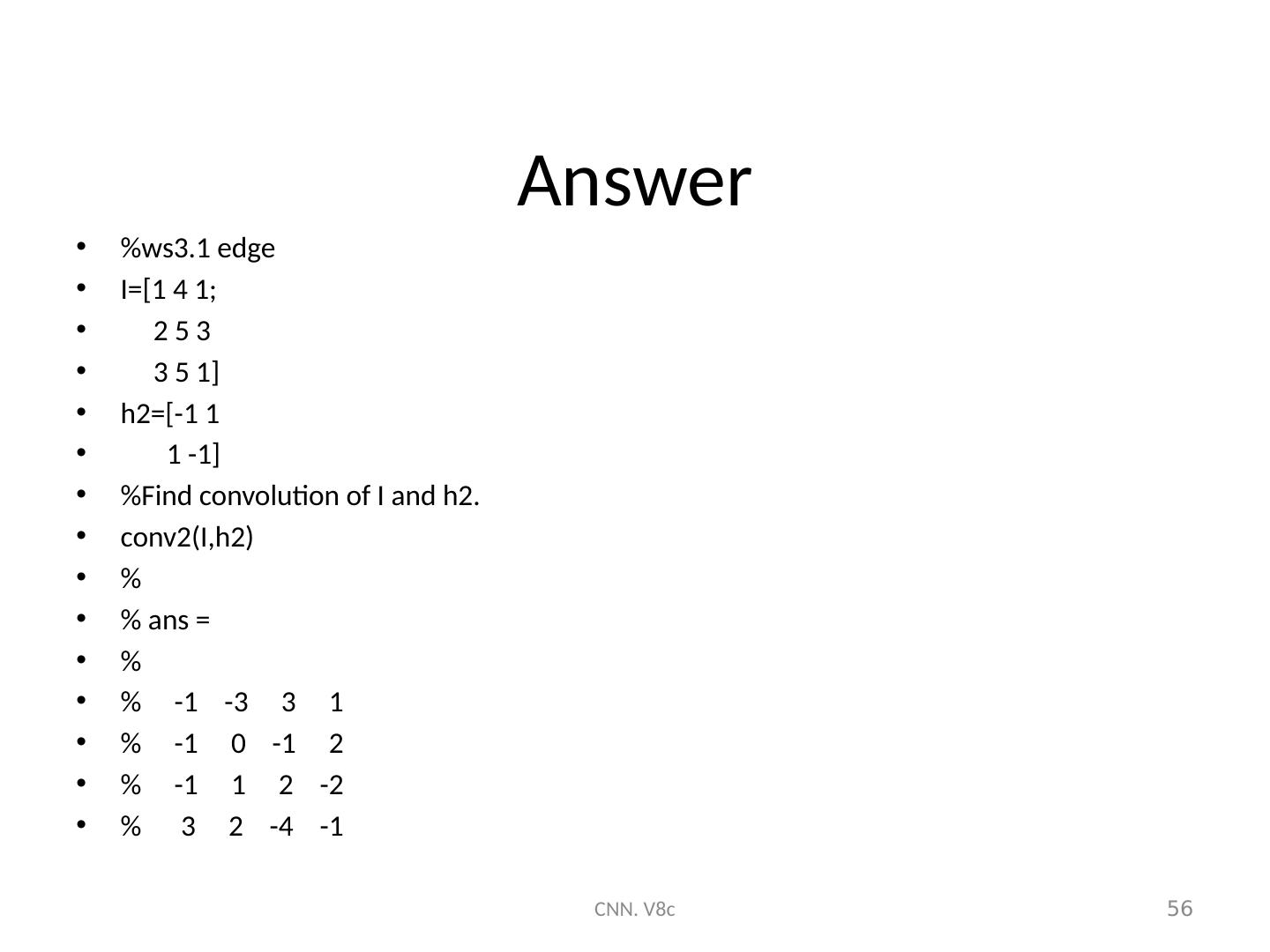

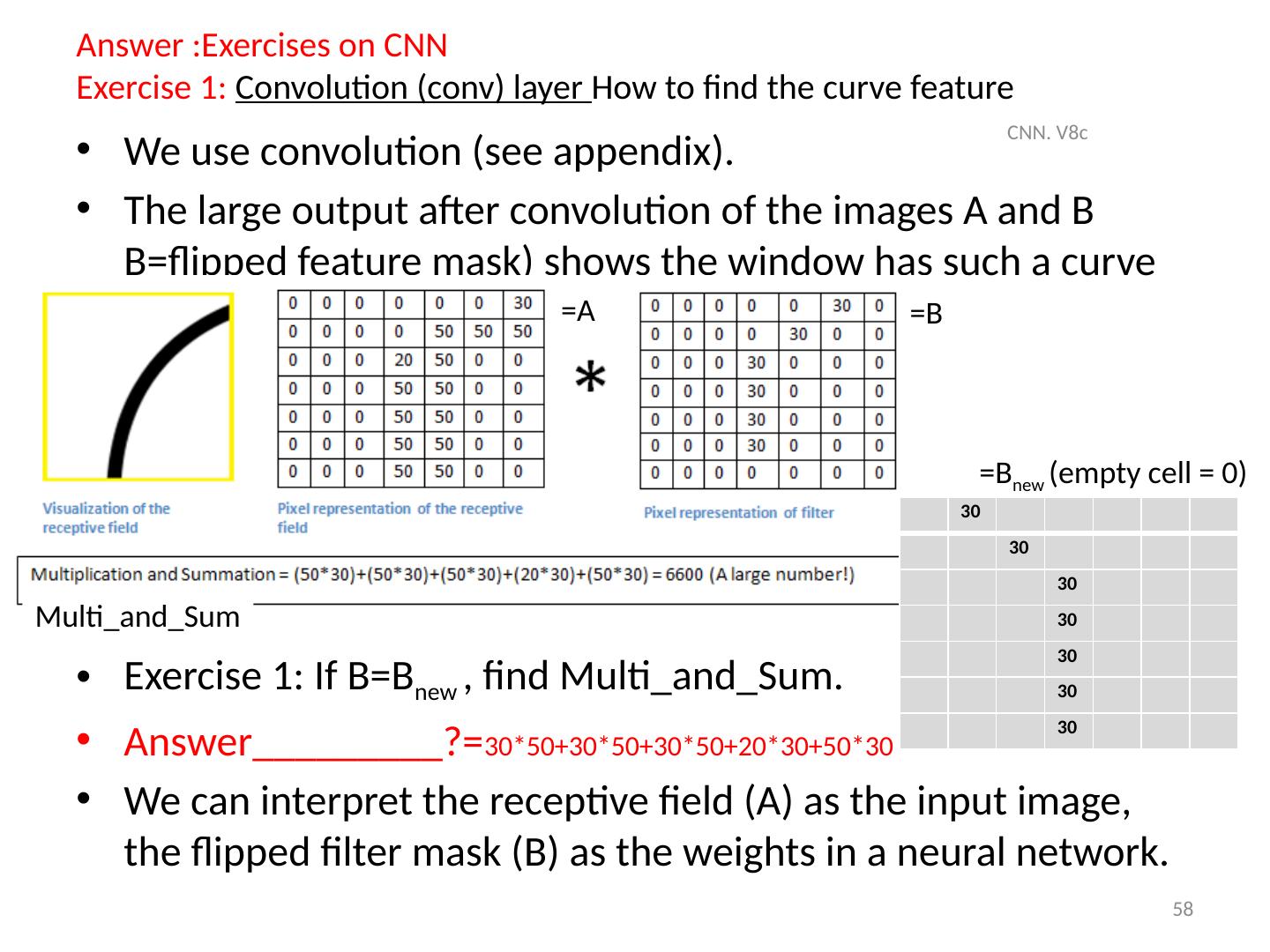

12. Exercises on CNN. Exercise 1: Convolution (conv.) layer. How do we find the curve feature? We use convolution (see appendix). A large output after convolving the image window A with B (B = the flipped feature mask) shows that the window contains such a curve. We can interpret the receptive field (A) as the input image and the flipped filter mask (B) as the weights in a neural network.
Exercise 1: If B = B_new, find Multi_and_Sum. Answer: _________?
(Figure labels: A, B and B_new; the nonzero mask cells are 30, empty cell = 0.)
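The Multi_and_Sum operation can be sketched in plain Python as follows, assuming each window and mask is a list of rows. The curve feature, the pixel value 50 and the helper names `flip` and `multi_and_sum` are made up for illustration (they are not the values in the exercise figure); the two windows mirror the "curve found" and "curve not found" cases of slides 12 and 13.

```python
def flip(mask):
    """Rotate a 2D mask by 180 degrees (reverse the rows and each row)."""
    return [row[::-1] for row in mask[::-1]]


def multi_and_sum(a, b):
    """Multiply two equal-sized 2D windows element by element and add up the products."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("A and B must have the same shape")
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


# Hypothetical 3x3 curve feature (30 on the curve, 0 elsewhere) and its flipped mask B.
feature = [[0, 0, 30],
           [0, 30, 0],
           [0, 30, 0]]
B = flip(feature)                  # [[0, 30, 0], [0, 30, 0], [30, 0, 0]]

A_curve = [[0, 50, 0],             # bright pixels lie exactly where B is nonzero
           [0, 50, 0],
           [50, 0, 0]]
A_blank = [[0, 0, 0],              # the only bright pixel misses the curve
           [0, 0, 0],
           [0, 0, 50]]

print(multi_and_sum(A_curve, B))   # 4500 -> large output: curve found
print(multi_and_sum(A_blank, B))   # 0    -> curve not found
```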

13. Convolution (conv.) layer: in this part of the image the curve feature is not found (the convolution output is 0), so this window does not contain such a curve feature.

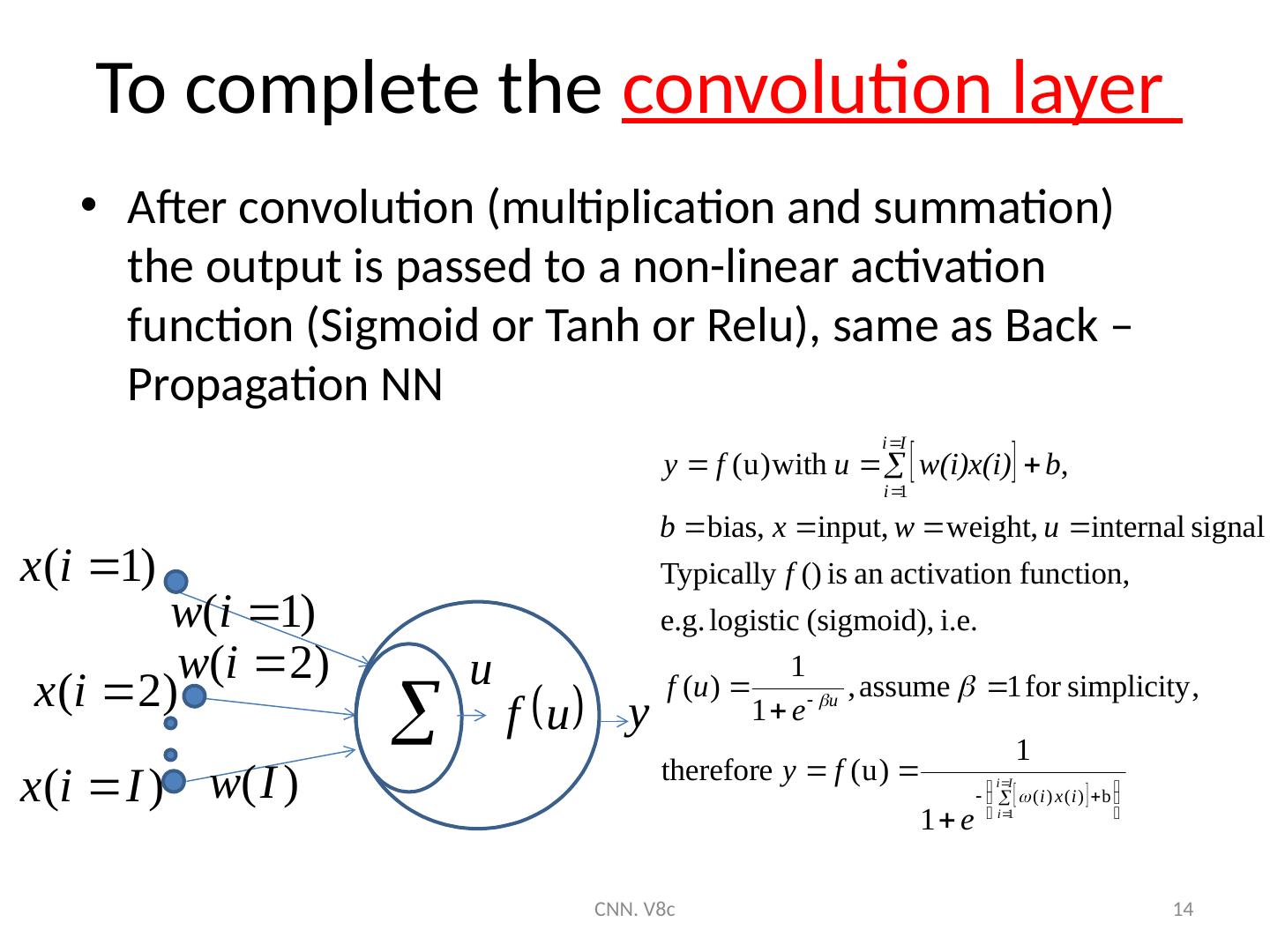

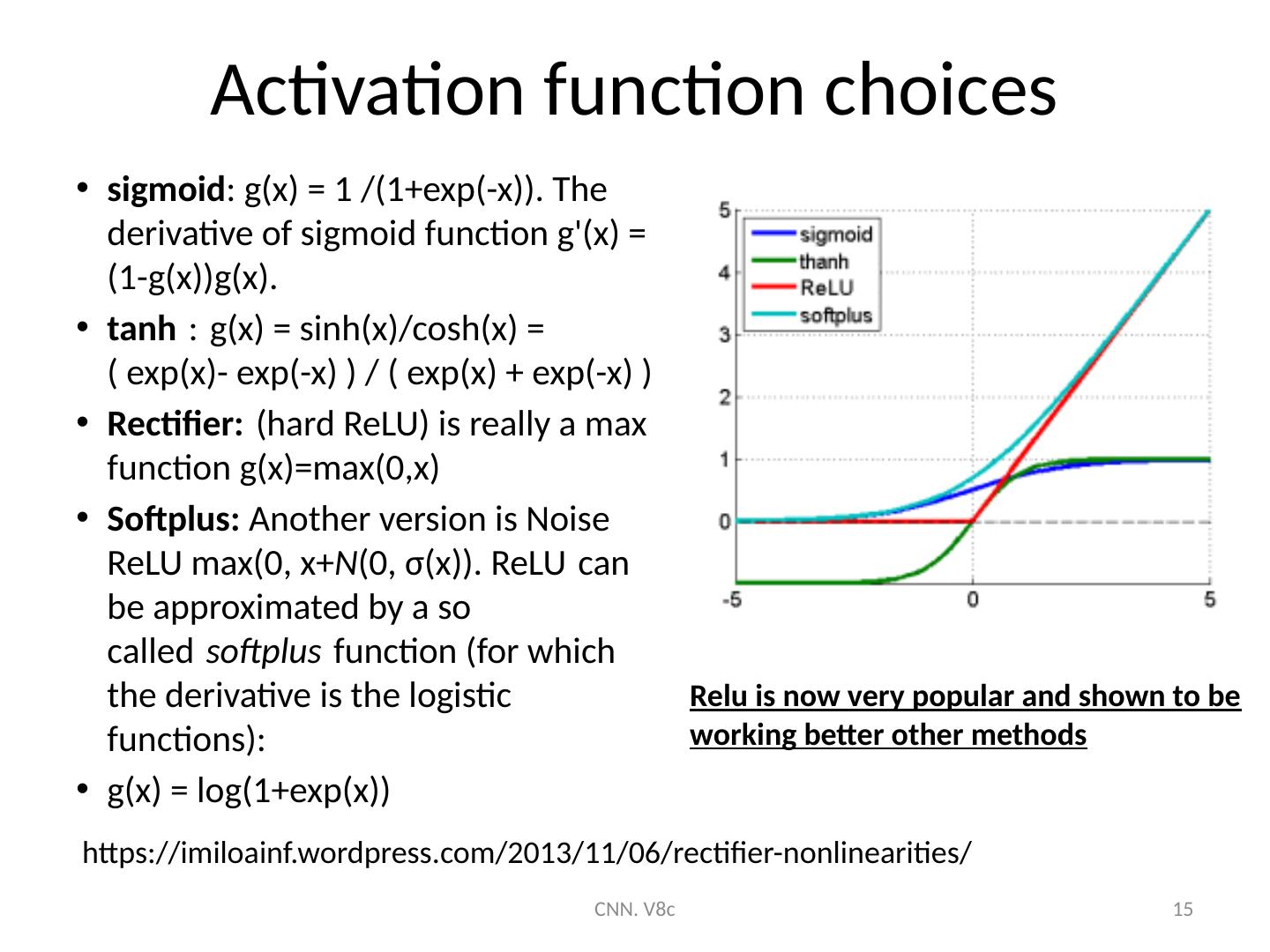

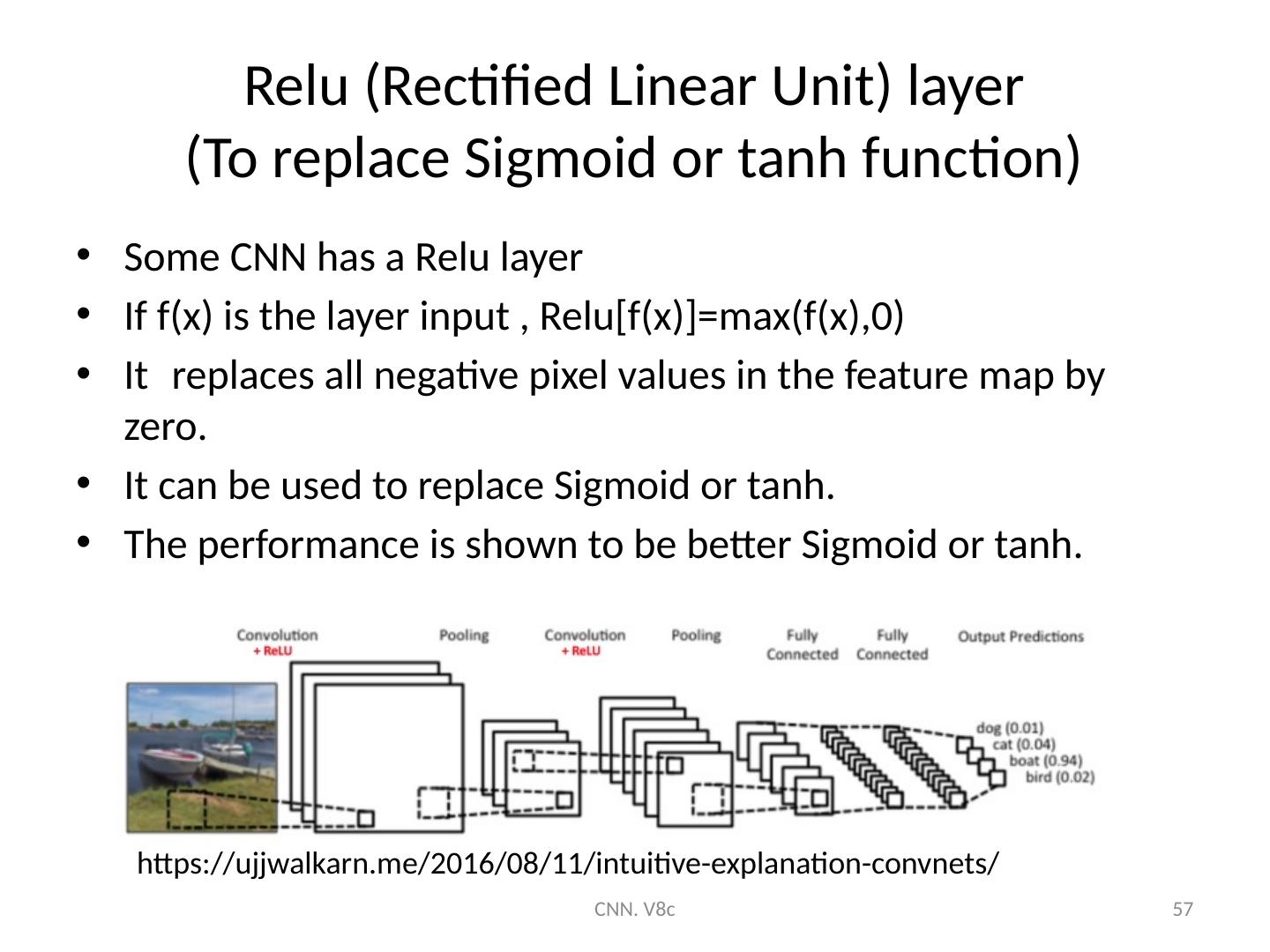

14. To complete the convolution layer: after convolution (multiplication and summation), the output is passed to a non-linear activation function (sigmoid, tanh or ReLU), the same as in a back-propagation NN.
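For illustration, the activation step can be sketched in plain Python with `math.exp` and `math.tanh` from the standard library; the `activate` helper and the sample feature map are hypothetical. A bias term is often added before the activation; it is omitted here for brevity.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    return max(0.0, float(z))


def activate(feature_map, fn):
    """Apply a non-linear activation function to every value of a 2D feature map."""
    return [[fn(v) for v in row] for row in feature_map]


# Hypothetical convolution output (after multiplication and summation).
conv_out = [[-2, 0],
            [1, 3]]
print(activate(conv_out, relu))       # [[0.0, 0.0], [1.0, 3.0]]
print(activate(conv_out, math.tanh))  # every value squashed into (-1, 1)
print(activate(conv_out, sigmoid))    # every value squashed into (0, 1); sigmoid(0) = 0.5
```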


16. Example (LeNet): an implementation example, http://yann.lecun.com/exdb/lenet/. Each feature filter uses one kernel (e.g. 5x5) to generate a feature map; each feature map in the array of feature maps is the output of one particular feature filter. Convolution (conv.) and subsampling (subs.) layers alternate: Input → conv. → subs. → conv. → subs. → fully connected → fully connected → output. Subsampling allows the features to be flexibly positioned.

17. Exercise 2 and demo (click the image to see the demo): http://deeplearning.stanford.edu/wiki/images/6/6c/Convolution_schematic.gif, https://link.springer.com/content/pdf/10.1007%2F978-3-642-25191-7.pdf
Convolution mask (kernel), 3x3: [1 0 1; 0 1 0; 1 0 1]. The flipped mask happens to equal the mask because the mask is symmetric. Convolving the input image with the mask gives a feature map; a different kernel generates a different feature map. (Figure labels: input image, feature map, cells X and Y.)
Exercise 2: (a) Find X and Y. Answer: X = _______? Y = _______? (b) Find X again if the convolution mask is [0 2 0; 2 0 2; 0 2 0]. Answer: X_new = ____?
This is a 3x3 mask for illustration purposes; note that the LeNet application above uses a 5x5 mask.
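A minimal plain-Python sketch of the sliding-window convolution in this exercise, assuming step size 1 and no padding ("valid" positions only). It flips the kernel, as convolution requires, then multiplies and sums over each window. The function names and the 4x4 test image are hypothetical, so running it does not give the exercise answers.

```python
def flip(kernel):
    """Rotate a kernel by 180 degrees, as true convolution requires."""
    return [row[::-1] for row in kernel[::-1]]


def conv2d_valid(image, kernel):
    """'Valid' 2D convolution with step size 1: only positions where the kernel fits fully."""
    k = flip(kernel)
    kh, kw = len(k), len(k[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * k[i][j] for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


mask = [[1, 0, 1],
        [0, 1, 0],
        [1, 0, 1]]
assert flip(mask) == mask          # symmetric mask: flipping changes nothing

# Hypothetical 4x4 binary image (not the image in the demo).
image = [[1, 0, 0, 1],
         [0, 1, 1, 0],
         [1, 1, 0, 0],
         [0, 0, 1, 1]]
print(conv2d_valid(image, mask))   # [[3, 3], [3, 2]]
```

For a non-symmetric kernel the flip matters: `conv2d_valid([[1, 2], [3, 4]], [[1, 0], [0, 0]])` picks the bottom-right pixel and returns `[[4]]`.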

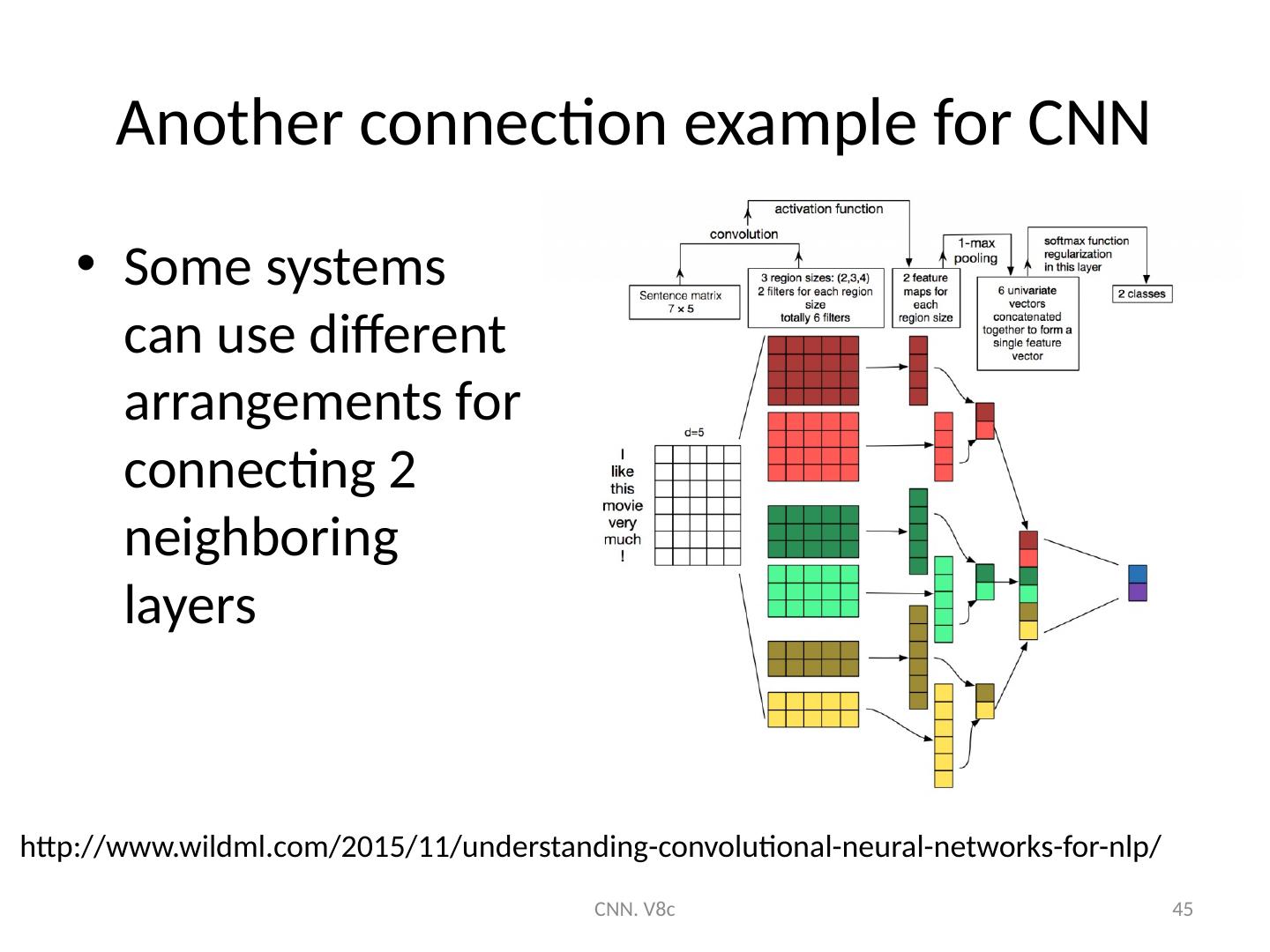

18. Description of the layers: subsampling; layer-to-layer connections.

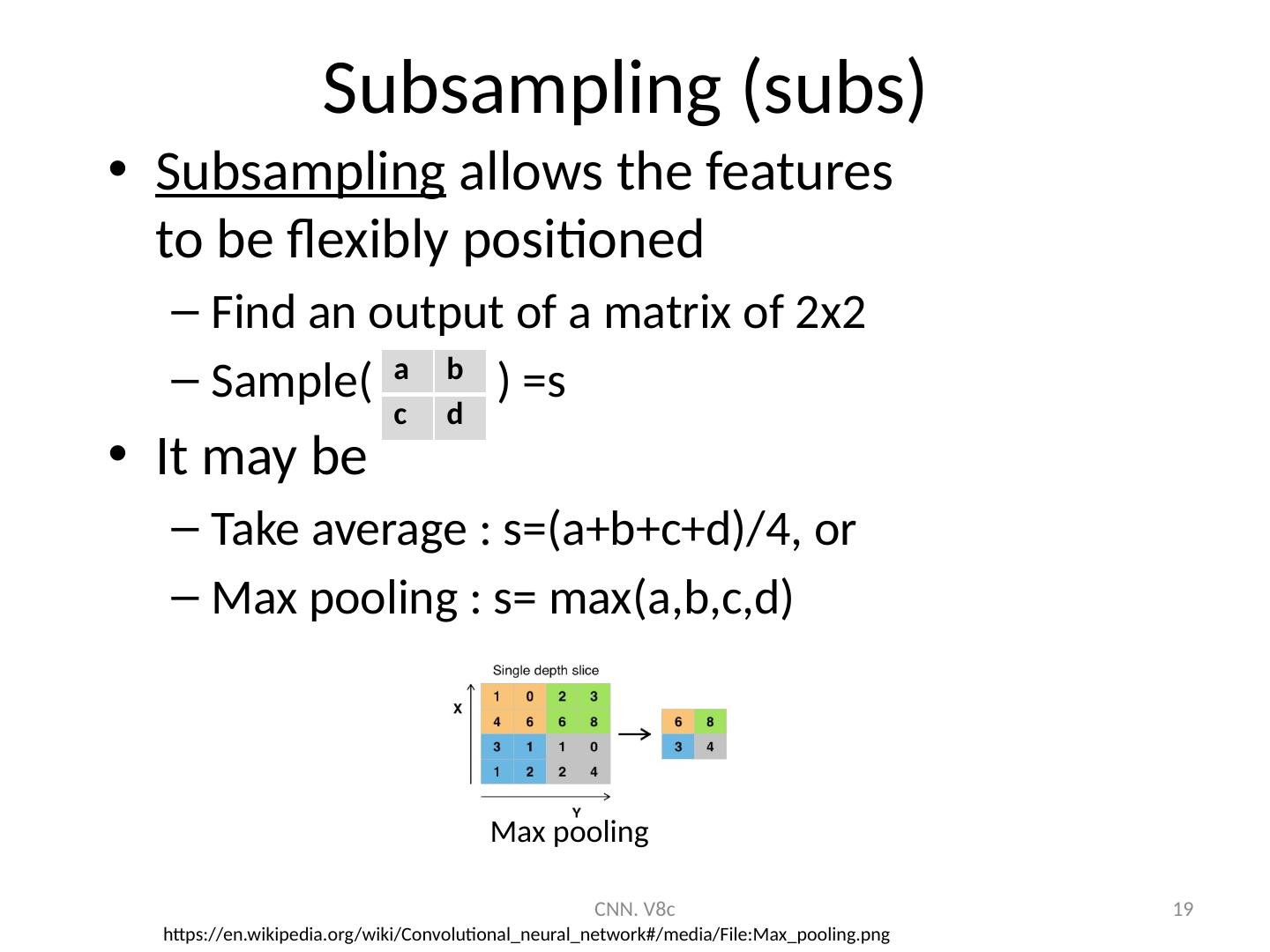

19. Subsampling (subs.): subsampling allows the features to be flexibly positioned. Each 2x2 block [a b; c d] of the input is reduced to one output s = Sample(a, b, c, d). It may be either the average, s = (a+b+c+d)/4, or max pooling, s = max(a, b, c, d). Max pooling figure: https://en.wikipedia.org/wiki/Convolutional_neural_network#/media/File:Max_pooling.png
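Both subsampling rules can be sketched in plain Python, assuming non-overlapping 2x2 blocks taken row by row (an odd last row or column is simply dropped); the function name `pool2x2`, the `mode` argument and the 4x4 example values are hypothetical.

```python
def pool2x2(feature_map, mode="max"):
    """Non-overlapping 2x2 subsampling: each block (a, b, c, d) becomes one value s."""
    out = []
    for r in range(0, len(feature_map) - 1, 2):
        out_row = []
        for col in range(0, len(feature_map[0]) - 1, 2):
            block = (feature_map[r][col], feature_map[r][col + 1],        # a, b
                     feature_map[r + 1][col], feature_map[r + 1][col + 1])  # c, d
            if mode == "max":
                out_row.append(max(block))            # max pooling
            elif mode == "average":
                out_row.append(sum(block) / 4)        # average pooling
            else:
                raise ValueError("mode must be 'max' or 'average'")
        out.append(out_row)
    return out


fmap = [[1, 1, 2, 4],
        [5, 6, 7, 8],
        [3, 2, 1, 0],
        [1, 2, 3, 4]]
print(pool2x2(fmap, "max"))      # [[6, 8], [3, 4]]
print(pool2x2(fmap, "average"))  # [[3.25, 5.25], [2.0, 2.0]]
```

Either way the map shrinks by half in each direction, so a feature that moves by one pixel inside a block still produces the same (max) or a similar (average) output.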

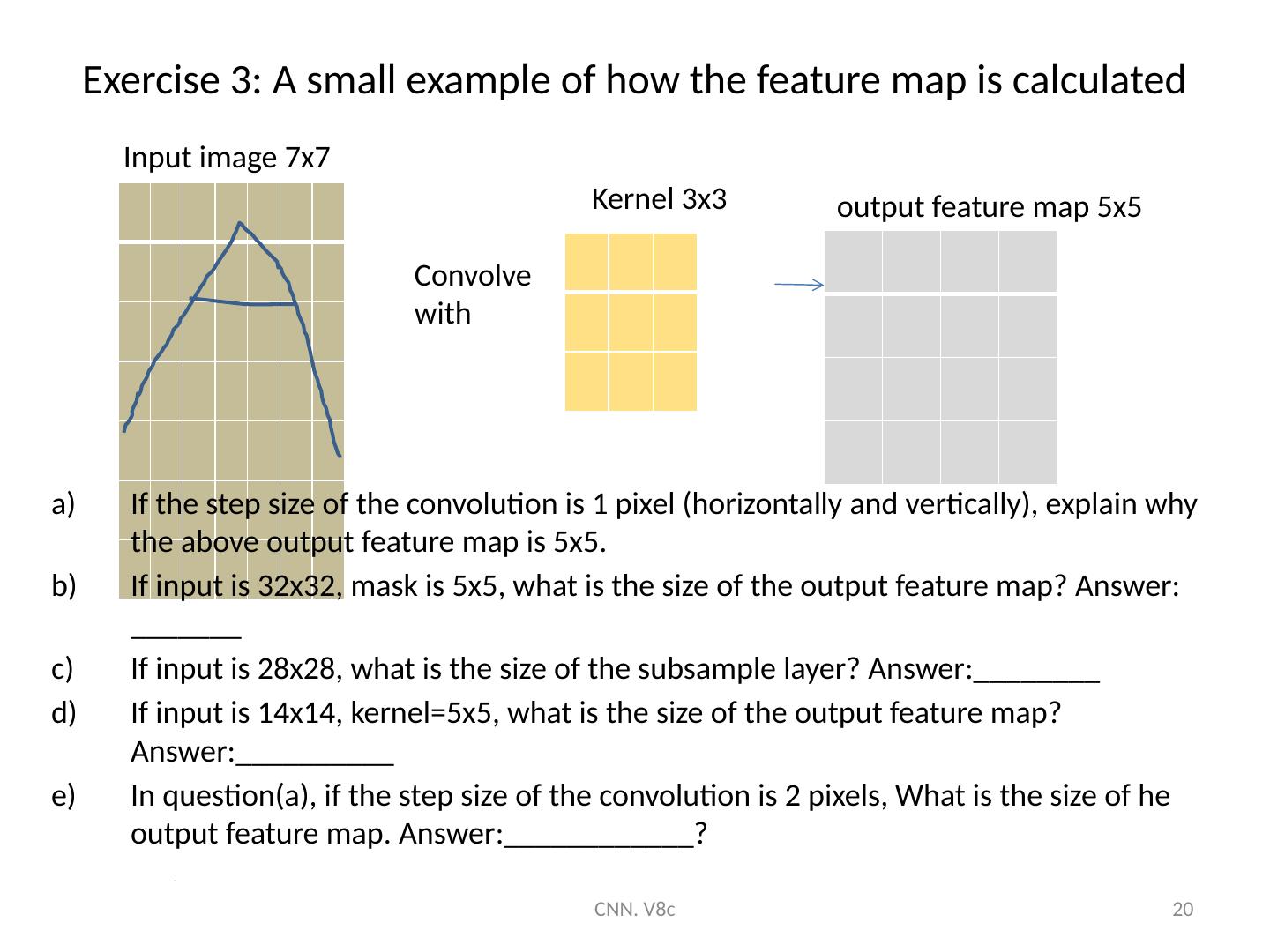

20. Exercise 3: a small example of how the feature map is calculated. (Figure: a 7x7 input image convolved with a 3x3 kernel gives a 5x5 output feature map.)
- (a) If the step size of the convolution is 1 pixel (horizontally and vertically), explain why the output feature map above is 5x5.
- (b) If the input is 32x32 and the mask is 5x5, what is the size of the output feature map? Answer: _______
- (c) If the input is 28x28, what is the size of the subsample layer? Answer: ________
- (d) If the input is 14x14 and the kernel is 5x5, what is the size of the output feature map? Answer: __________
- (e) In question (a), if the step size of the convolution is 2 pixels, what is the size of the output feature map? Answer: ____________?
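The size rule behind these questions can be written as a formula: for an n x n input, a k x k kernel, step size (stride) S and no padding, each side of the output is floor((n - k) / S) + 1. A plain-Python sketch follows; the function names are hypothetical, and the zero-padding parameter `pad` is an extra assumption not used in this exercise.

```python
def conv_output_size(n, k, stride=1, pad=0):
    """Side length of the feature map from an n x n input and a k x k kernel."""
    return (n + 2 * pad - k) // stride + 1


def subsample_output_size(n, scale=2):
    """Side length after non-overlapping scale x scale subsampling."""
    return n // scale


print(conv_output_size(7, 3))  # 5: the 7x7 input and 3x3 kernel of the figure
```

With step size 1 the kernel can start at positions 0, 1, ..., n - k along each axis, which is where the "+ 1" comes from.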

21. How to feed one feature layer to multiple feature layers: you can combine multiple feature maps of one layer into one feature map in the next layer. See the next slide for details. Source: https://link.springer.com/content/pdf/10.1007%2F978-3-642-25191-7.pdf (Figure: 6 feature maps; Layers 1 to 6.)
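A common way to combine maps is to convolve each input map with its own kernel and add the results, plus a bias, before the activation function. The plain-Python sketch below assumes step size 1 and connects every input map to the output map (LeNet-5 itself connects some maps to only a subset of the previous maps); the names and values are hypothetical.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2D convolution (kernel flipped by 180 degrees), step size 1."""
    k = [row[::-1] for row in kernel[::-1]]
    kh, kw = len(k), len(k[0])
    return [[sum(image[r + i][c + j] * k[i][j] for i in range(kh) for j in range(kw))
             for c in range(len(image[0]) - kw + 1)]
            for r in range(len(image) - kh + 1)]


def combine_maps(input_maps, kernels, bias=0.0):
    """One next-layer map: bias + sum over input maps of (map i convolved with kernel i)."""
    partial = [conv2d_valid(m, k) for m, k in zip(input_maps, kernels)]
    h, w = len(partial[0]), len(partial[0][0])
    return [[bias + sum(p[r][c] for p in partial) for c in range(w)] for r in range(h)]


# Hypothetical example: two 3x3 input maps, one 2x2 kernel per input map.
map1 = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
map2 = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
k1 = [[1, 0], [0, 0]]   # after flipping, picks the bottom-right pixel of each window
k2 = [[1, 1], [1, 1]]   # adds up each 2x2 window
print(combine_maps([map1, map2], [k1, k2], bias=0.5))  # [[9.5, 10.5], [12.5, 13.5]]
```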

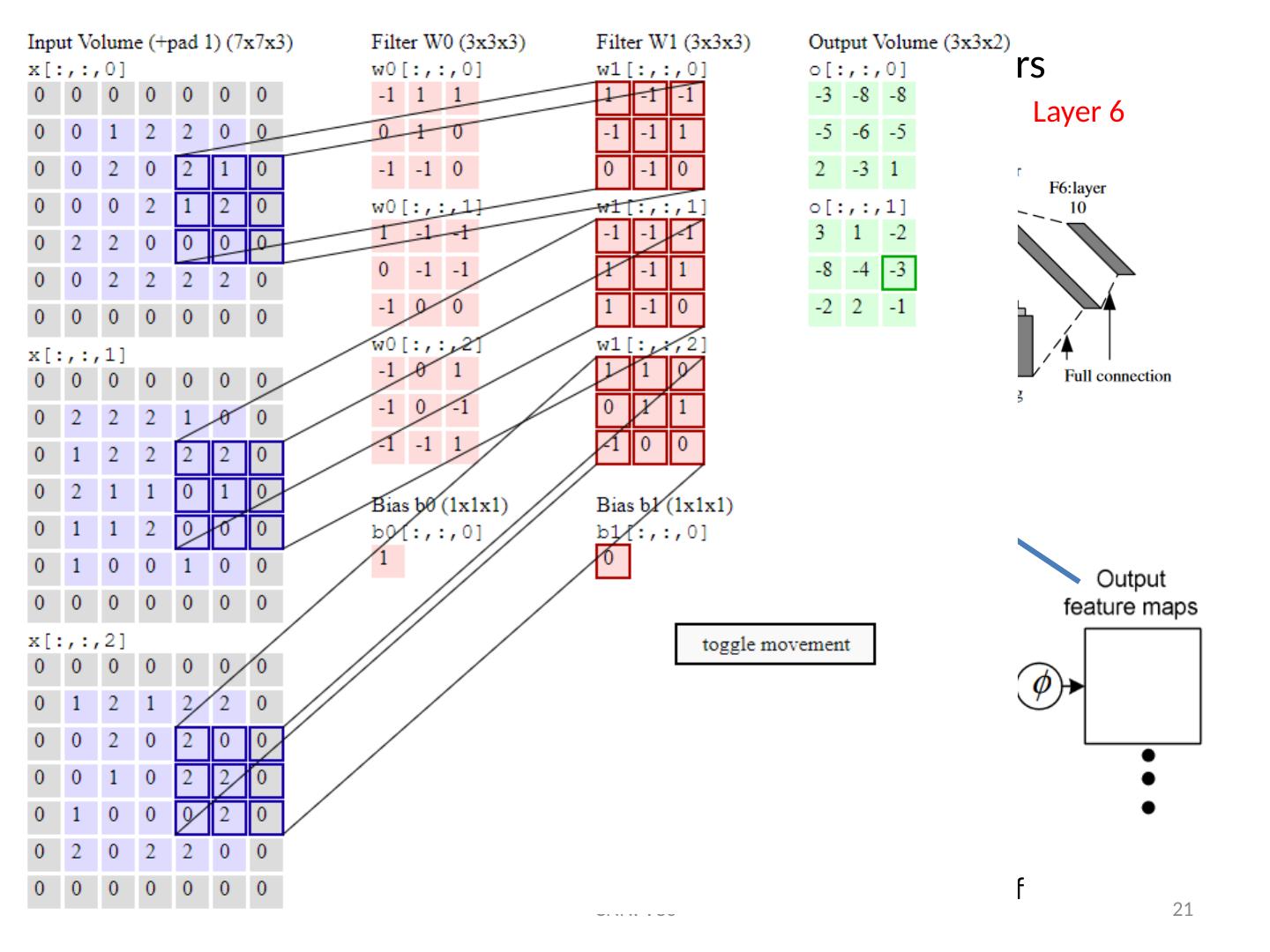

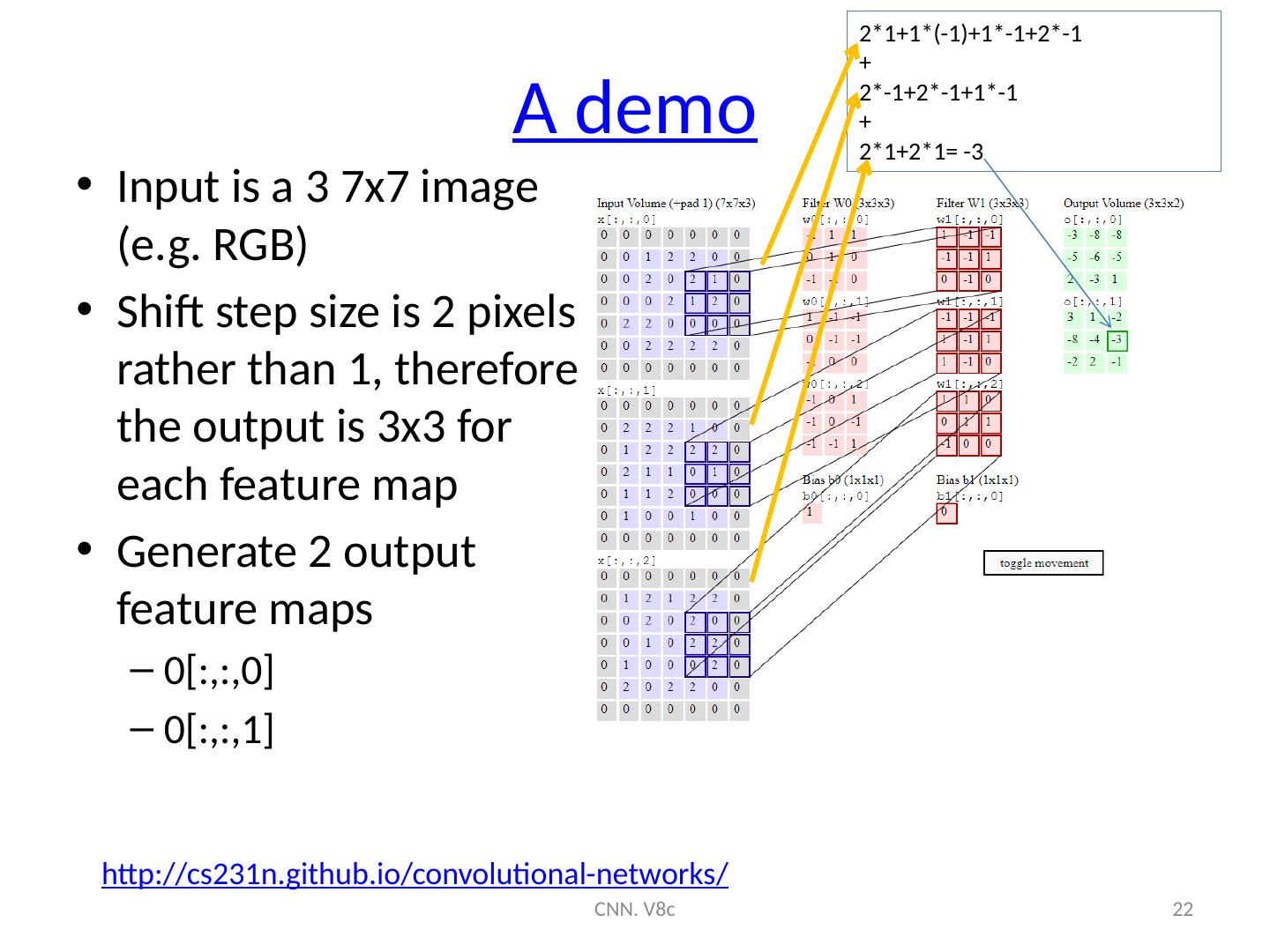

22. A demo: the input is a 7x7x3 image (e.g. RGB). The shift step size is 2 pixels rather than 1, so each output feature map is 3x3. Two output feature maps are generated, o[:,:,0] and o[:,:,1]. Source: http://cs231n.github.io/convolutional-networks/. Example calculation: 2*1 + 1*(-1) + 1*(-1) + 2*(-1) + 2*(-1) + 2*(-1) + 1*(-1) + 2*1 + 2*1 = -3

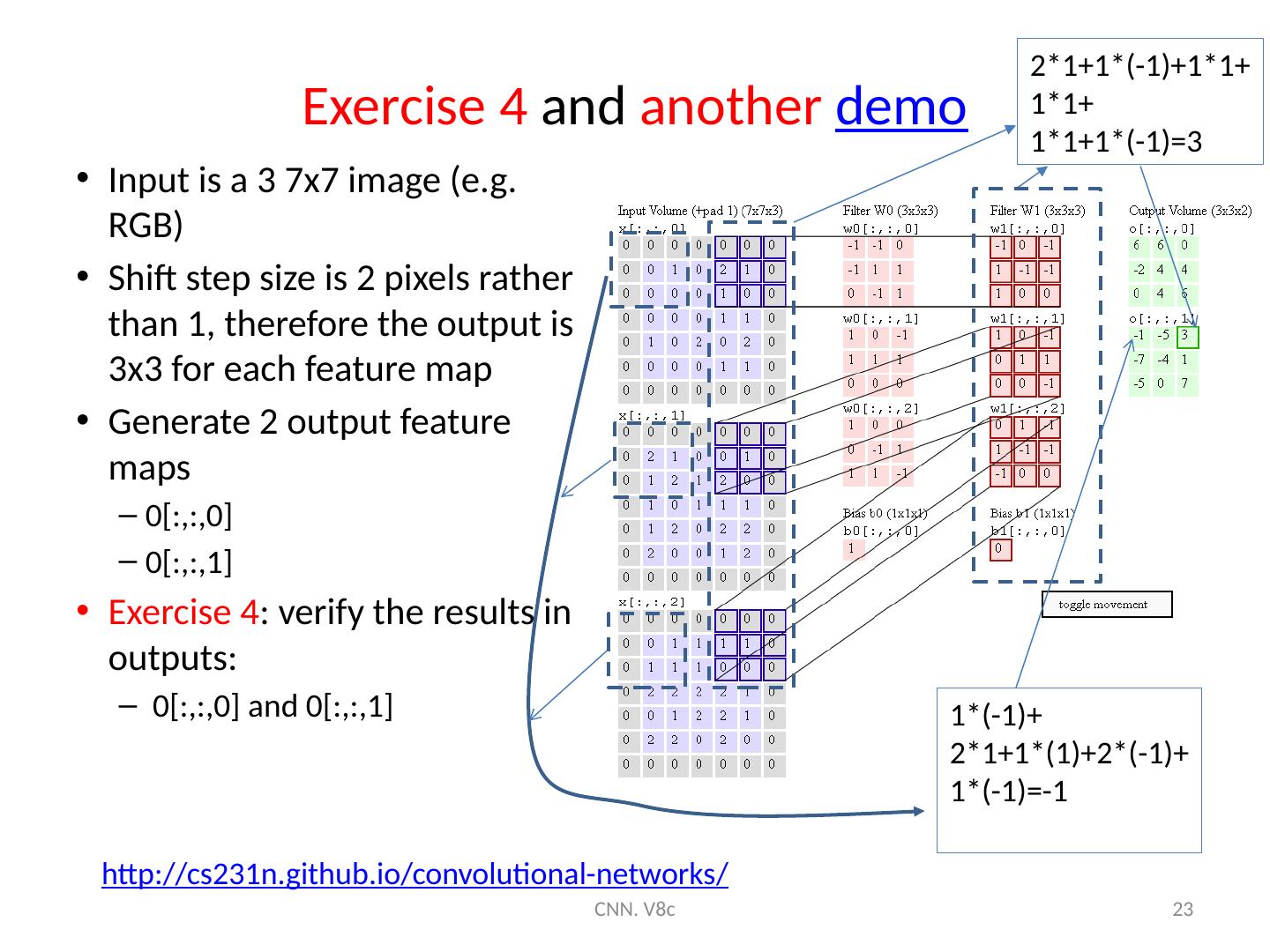

23. Exercise 4 and another demo: the input is a 7x7x3 image (e.g. RGB). The shift step size is 2 pixels rather than 1, so each output feature map is 3x3. Two output feature maps are generated, o[:,:,0] and o[:,:,1]. Exercise 4: verify the results in the outputs o[:,:,0] and o[:,:,1]. Source: http://cs231n.github.io/convolutional-networks/. Example calculations: 2*1 + 1*(-1) + 1*1 + 1*1 + 1*1 + 1*(-1) = 3 and 1*(-1) + 2*1 + 1*1 + 2*(-1) + 1*(-1) = -1
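The stride-2, multi-channel computation of this demo can be sketched in plain Python as below. Assumptions: the input is stored channel by channel as nested lists, each filter holds one 3x3 kernel per input channel, each filter adds one bias, and the all-ones input and ±1 filters are hypothetical values chosen so the result is easy to check by hand (they are not the numbers in the cs231n demo). Like the hand calculations on these slides, each weight multiplies the pixel at the same position, without flipping.

```python
def conv_volume(volume, filters, biases, stride=2):
    """Multi-channel convolution layer with a given step size.

    volume:  C channels, each an H x W list of rows (e.g. R, G, B).
    filters: F filters, each holding C kernels of size k x k (one per channel).
    Returns F output maps: bias + sum over channels and window of pixel * weight.
    """
    n_ch, height, width = len(volume), len(volume[0]), len(volume[0][0])
    k = len(filters[0][0])
    out_h = (height - k) // stride + 1
    out_w = (width - k) // stride + 1
    outputs = []
    for kernels, bias in zip(filters, biases):
        outputs.append([[bias + sum(volume[ch][r * stride + i][c * stride + j] * kernels[ch][i][j]
                                    for ch in range(n_ch) for i in range(k) for j in range(k))
                         for c in range(out_w)]
                        for r in range(out_h)])
    return outputs


# Hypothetical data: a 7x7x3 input of ones and two 3x3x3 filters.
volume = [[[1] * 7 for _ in range(7)] for _ in range(3)]
ones = [[[1] * 3 for _ in range(3)] for _ in range(3)]
minus_ones = [[[-1] * 3 for _ in range(3)] for _ in range(3)]
out = conv_volume(volume, [ones, minus_ones], biases=[1, 0], stride=2)
print(len(out), len(out[0]), len(out[0][0]))  # 2 3 3 -> two 3x3 output feature maps
print(out[0][0][0], out[1][0][0])             # 28 -27
```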

24. Example using a program

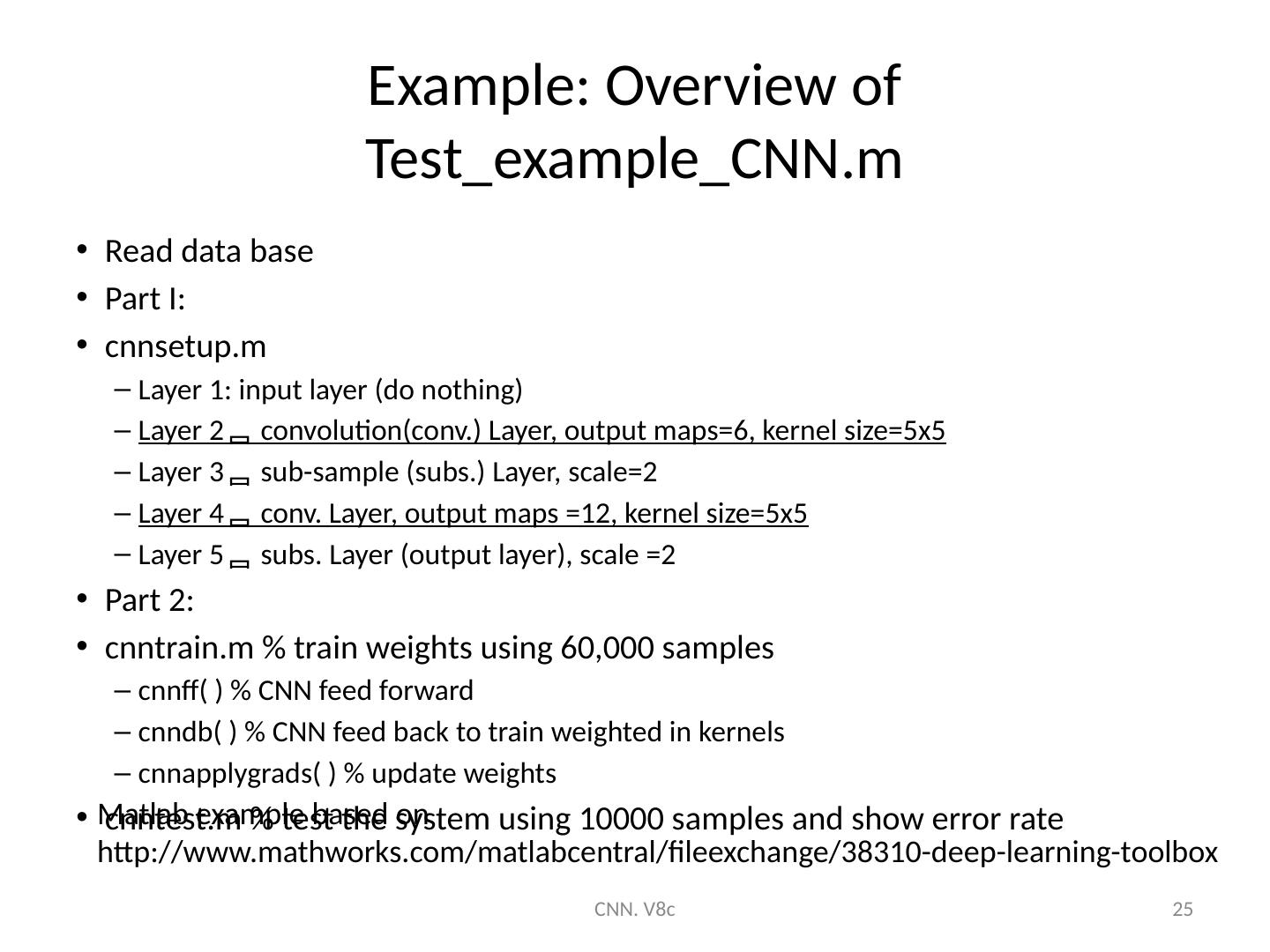

25. Example: overview of test_example_CNN.m (MATLAB example based on http://www.mathworks.com/matlabcentral/fileexchange/38310-deep-learning-toolbox)
- Read the database.
- Part 1: cnnsetup.m
  - Layer 1: input layer (does nothing)
  - Layer 2: convolution (conv.) layer, output maps = 6, kernel size = 5x5
  - Layer 3: sub-sample (subs.) layer, scale = 2
  - Layer 4: conv. layer, output maps = 12, kernel size = 5x5
  - Layer 5: subs. layer (output layer), scale = 2
- Part 2: cnntrain.m % train the weights using 60,000 samples
  - cnnff( ) % CNN feed forward
  - cnnbp( ) % CNN feed back (back-propagation) to train the weights in the kernels
  - cnnapplygrads( ) % update the weights
- cnntest.m % test the system using 10,000 samples and show the error rate

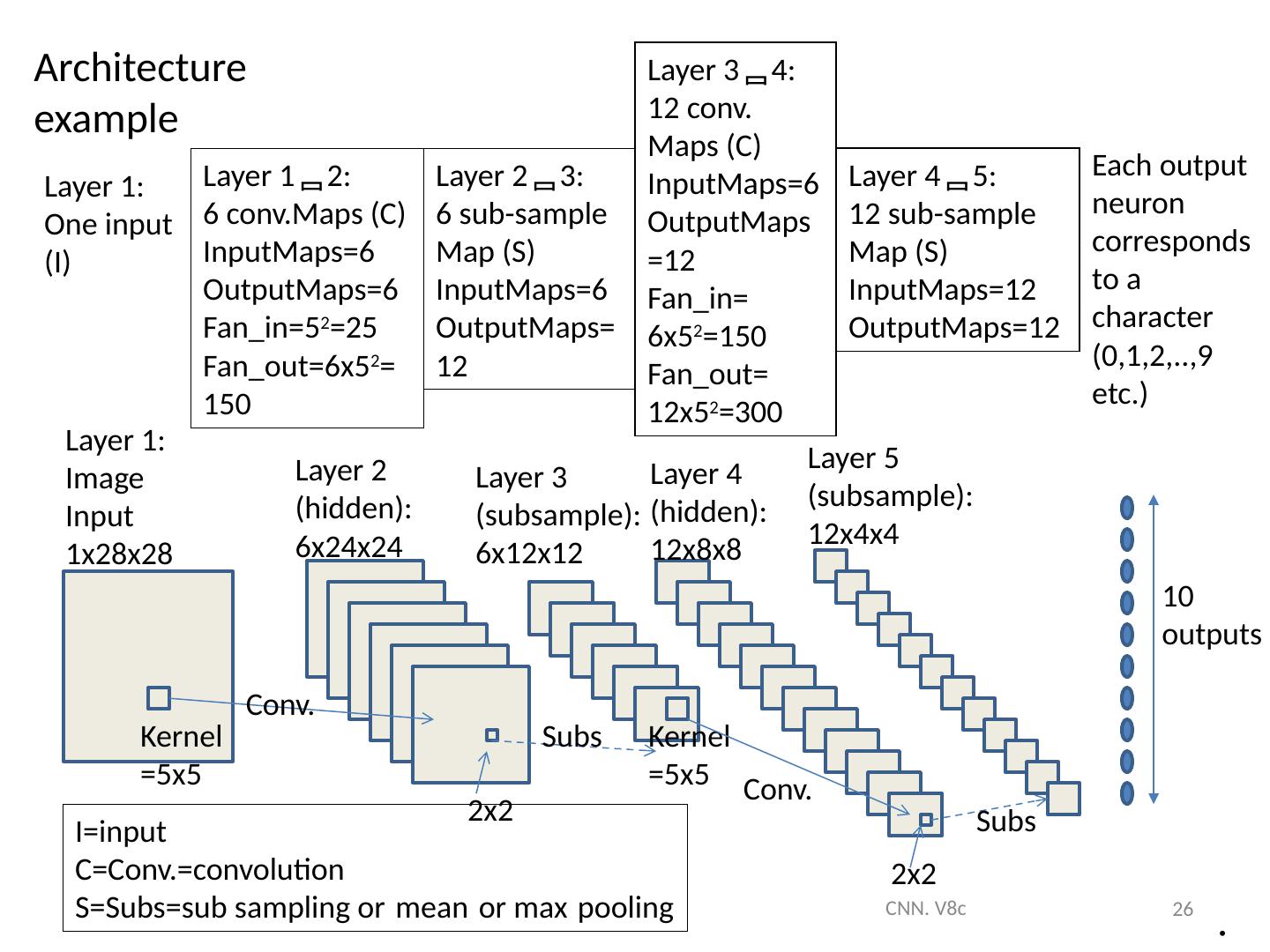

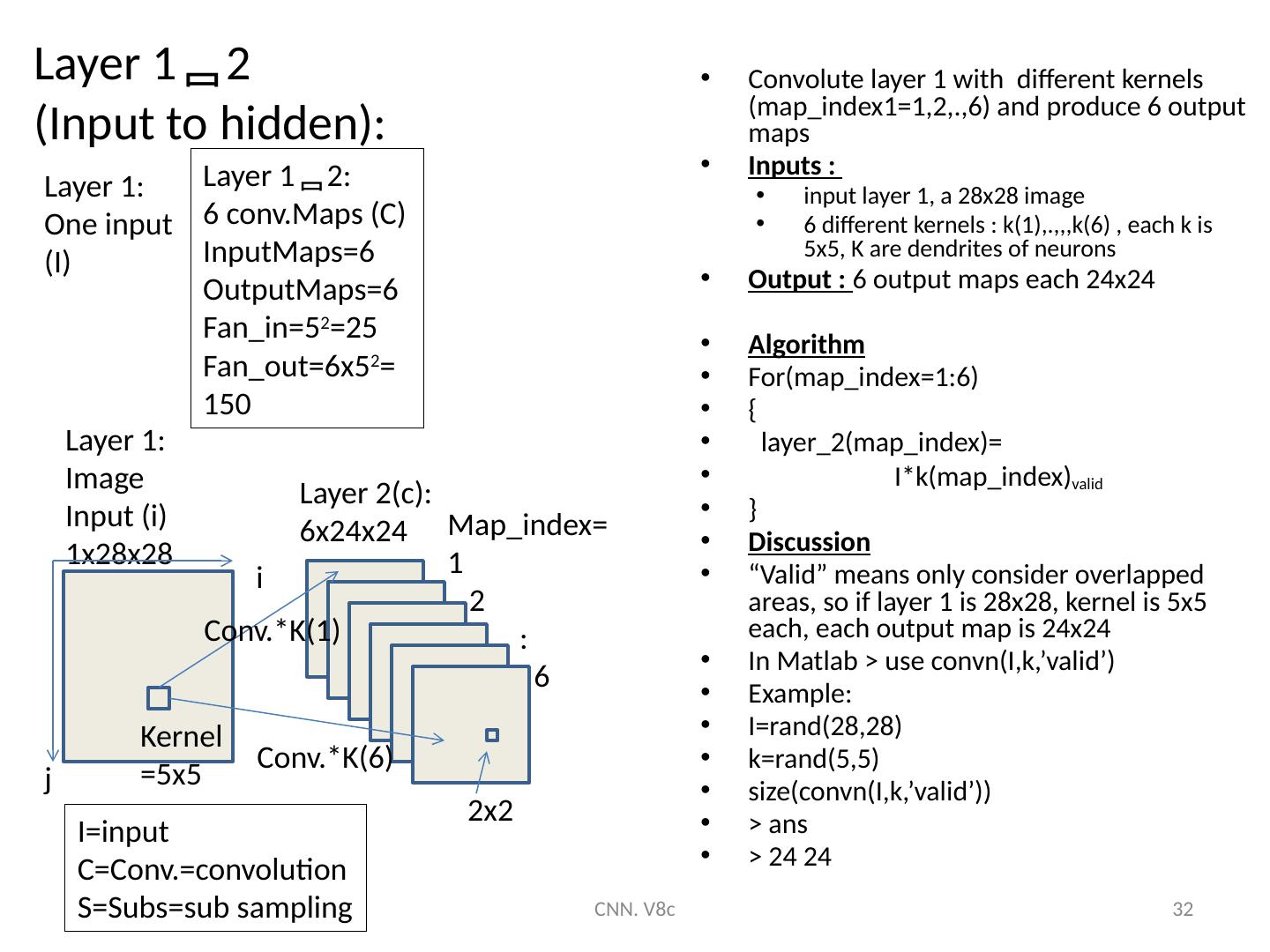

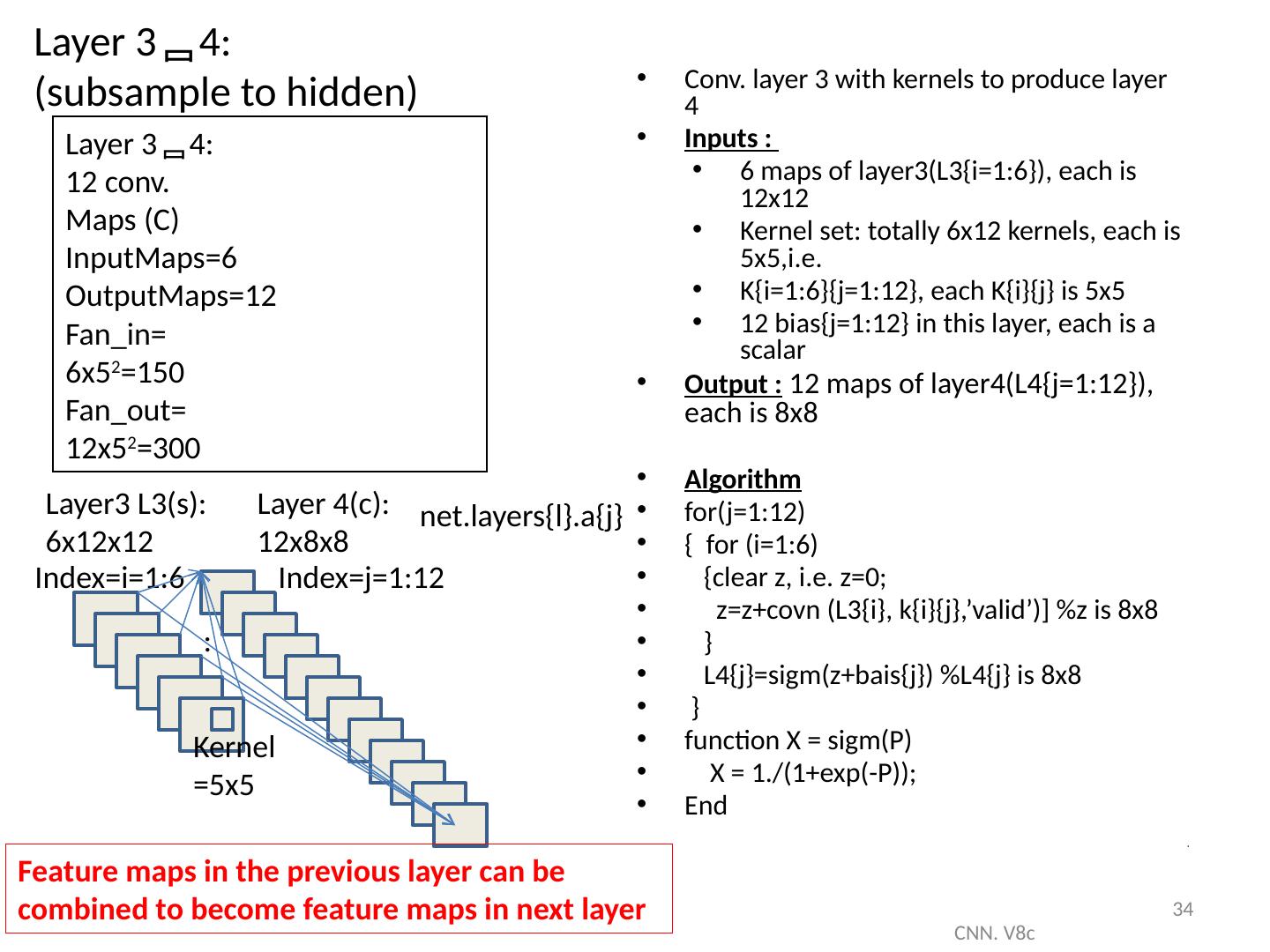

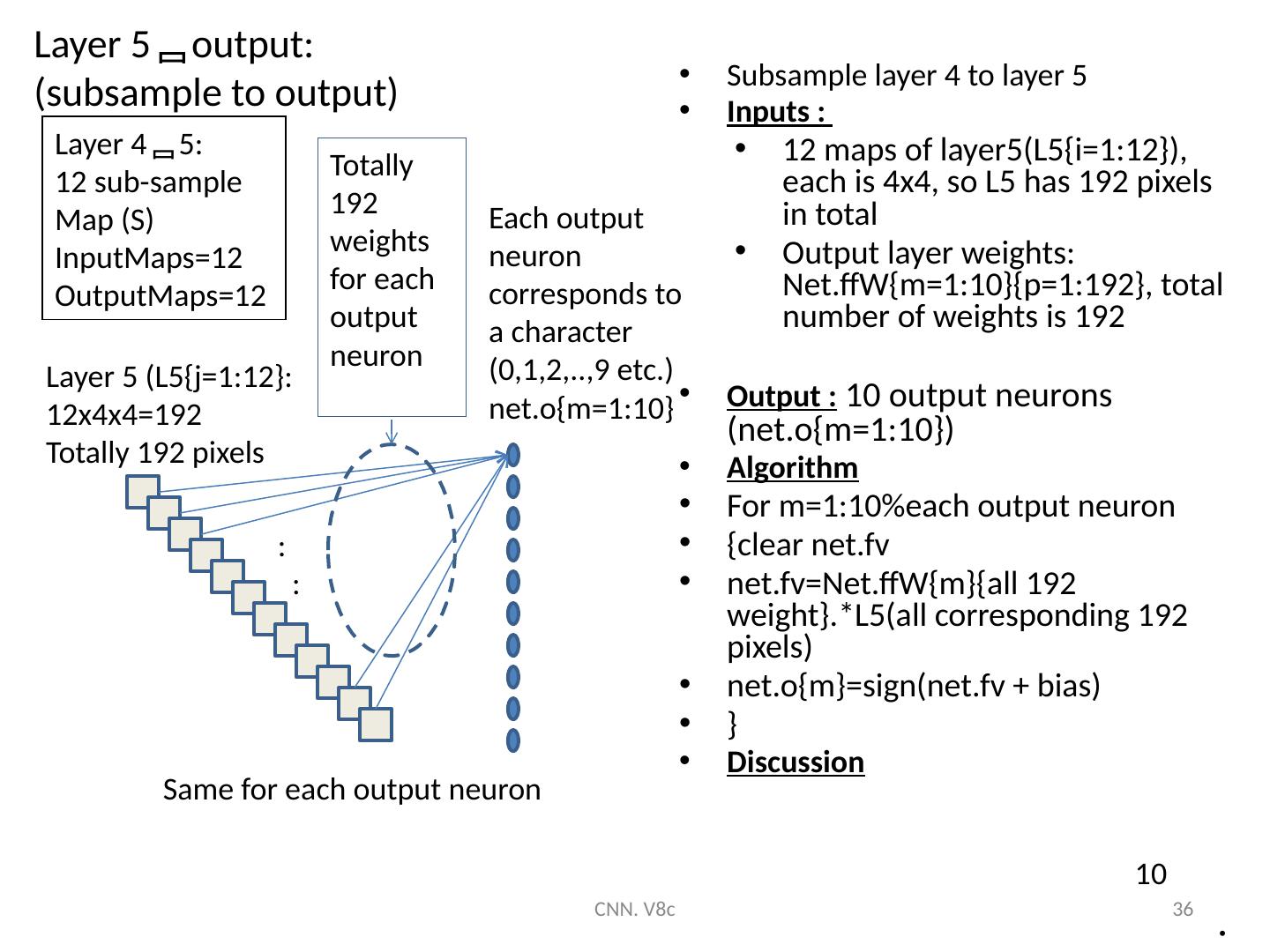

26. Architecture example: each output neuron corresponds to a character (0, 1, 2, ..., 9). Legend: I = input, C = conv. = convolution, S = subs = subsampling (mean or max pooling).
- Layer 1 (input, I): one image, 1x28x28.
- Layer 1→2 (conv., C, kernel 5x5): 6 conv. maps; InputMaps = 6, OutputMaps = 6; Fan_in = 5^2 = 25; Fan_out = 6x5^2 = 150. Layer 2 (hidden): 6x24x24.
- Layer 2→3 (subs., S, 2x2): 6 sub-sample maps; InputMaps = 6, OutputMaps = 12. Layer 3 (subsample): 6x12x12.
- Layer 3→4 (conv., C, kernel 5x5): 12 conv. maps; InputMaps = 6, OutputMaps = 12; Fan_in = 6x5^2 = 150; Fan_out = 12x5^2 = 300. Layer 4 (hidden): 12x8x8.
- Layer 4→5 (subs., S, 2x2): 12 sub-sample maps; InputMaps = 12, OutputMaps = 12. Layer 5 (subsample): 12x4x4, connected to the 10 outputs.
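To check the map sizes and fan-in/fan-out numbers of this architecture, the layer list can be traced with a short Python sketch. The dictionary layout loosely mirrors the layer list described for cnnsetup.m but is a hypothetical re-expression, not the toolbox's own code; it assumes square maps, "valid" convolution with step size 1, and non-overlapping subsampling. Fan-in is counted as (input maps) x (kernel area), so the first conv. layer, which sees the single input image, gets 1 x 5^2 = 25.

```python
LAYERS = [
    {"type": "i"},                                     # layer 1: input
    {"type": "c", "outputmaps": 6, "kernelsize": 5},   # layer 2: convolution
    {"type": "s", "scale": 2},                         # layer 3: subsampling
    {"type": "c", "outputmaps": 12, "kernelsize": 5},  # layer 4: convolution
    {"type": "s", "scale": 2},                         # layer 5: subsampling
]


def trace(layers, input_size=28, input_maps=1):
    """Return (maps, height, width, fan_in, fan_out) after each layer; fans are None for non-conv layers."""
    maps, size = input_maps, input_size
    rows = []
    for layer in layers:
        fan_in = fan_out = None
        if layer["type"] == "c":
            k = layer["kernelsize"]
            fan_in = maps * k * k                  # input maps x kernel area
            fan_out = layer["outputmaps"] * k * k  # output maps x kernel area
            size = size - k + 1                    # 'valid' convolution, step size 1
            maps = layer["outputmaps"]
        elif layer["type"] == "s":
            size //= layer["scale"]                # non-overlapping subsampling
        rows.append((maps, size, size, fan_in, fan_out))
    return rows


for row in trace(LAYERS):
    print(row)
# (1, 28, 28, None, None)
# (6, 24, 24, 25, 150)
# (6, 12, 12, None, None)
# (12, 8, 8, 150, 300)
# (12, 4, 4, None, None)
maps, h, w, _, _ = trace(LAYERS)[-1]
print(maps * h * w)  # 192 values feed the 10 output neurons
```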

27. Data used in training a neural network:
- Training set: around 60-70% of the total data, used to train the system.
- Validation set (optional): around 10-20% of the total data, used to tune the parameters of the model.
- Test set: around 10-20% of the total data, used to test the system.
The data in these sets must not overlap; the exact percentages depend on the application and your choice.
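A minimal sketch of such a split in plain Python, assuming the samples fit in a list and can be shuffled freely (for strongly unbalanced classes a stratified split may be preferable). The 70/15/15 default and the function name are hypothetical choices within the ranges above; shuffling once and then cutting the list guarantees that the three sets do not overlap.

```python
import random


def split_dataset(samples, train=0.7, val=0.15, seed=0):
    """Shuffle, then cut into disjoint training / validation / test sets (test gets the rest)."""
    if train < 0 or val < 0 or train + val > 1:
        raise ValueError("fractions must be non-negative and sum to at most 1")
    items = list(samples)
    random.Random(seed).shuffle(items)   # fixed seed makes the split reproducible
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


train_set, val_set, test_set = split_dataset(range(100))
print(len(train_set), len(val_set), len(test_set))  # 70 15 15
```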

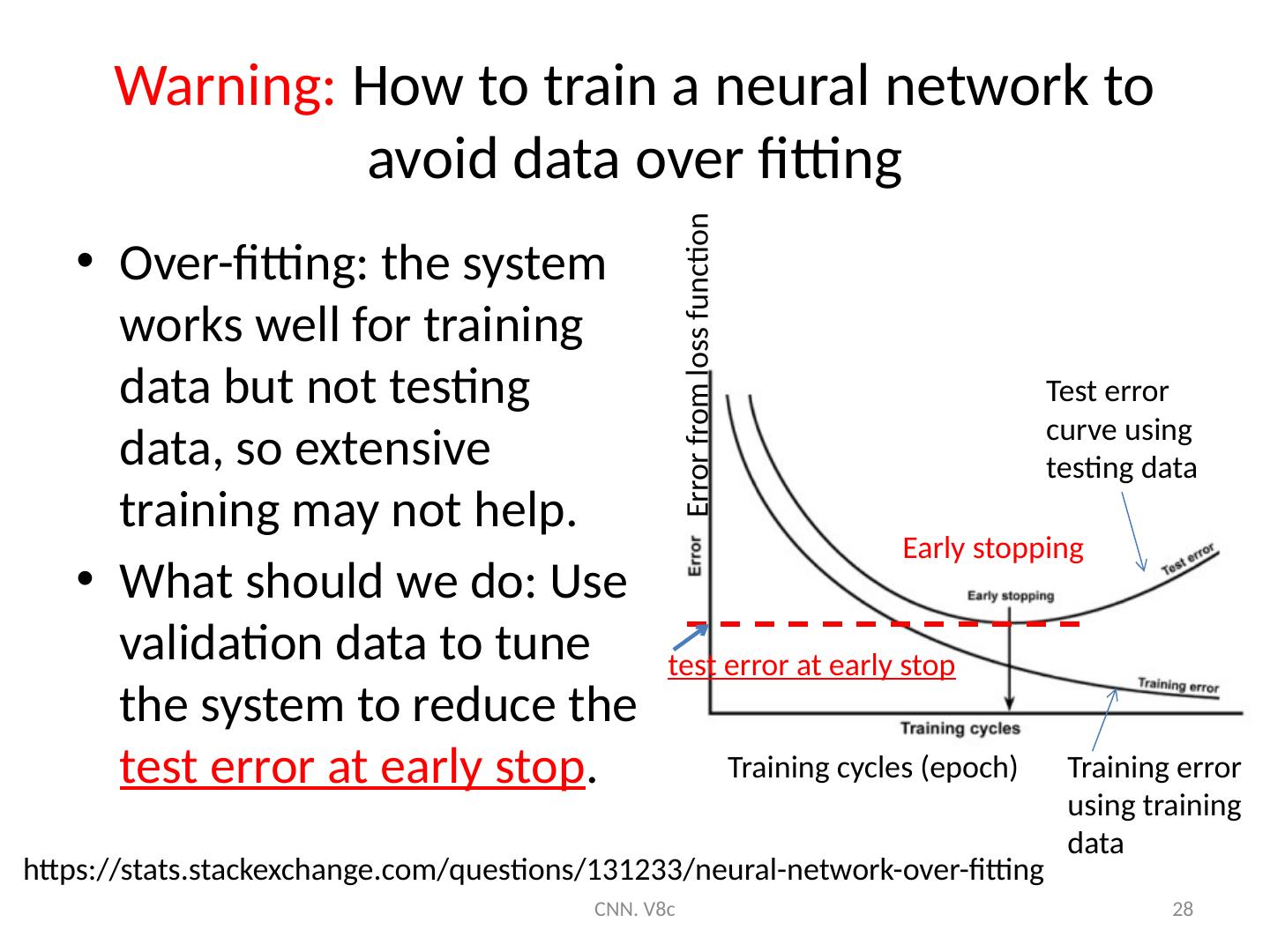

28. Warning: how to train a neural network while avoiding over-fitting. Over-fitting: the system works well on the training data but not on the test data, so extensive training may not help. What should we do? Use validation data to tune the system, and stop training early (early stopping) where the test error is lowest. Source: https://stats.stackexchange.com/questions/131233/neural-network-over-fitting (Figure: error from the loss function vs. training cycles (epochs); training error using training data, test error curve using testing data, and the test error at the early-stopping point.)
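The early-stopping rule can be sketched in plain Python, assuming the validation error is recorded after every epoch and that the weights from each epoch can be saved and restored. The `patience` setting (how many epochs to wait for an improvement) and the error values are hypothetical.

```python
def early_stopping_epoch(val_errors, patience=3):
    """Scan validation errors epoch by epoch; stop after `patience` epochs without
    improvement and return the (0-based) epoch with the lowest error seen so far."""
    best_epoch, best_err, waited = None, float("inf"), 0
    for epoch, err in enumerate(val_errors):
        if err < best_err:
            best_epoch, best_err, waited = epoch, err, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


# Hypothetical validation errors: they fall, then rise as over-fitting starts.
errors = [0.30, 0.20, 0.15, 0.14, 0.16, 0.18, 0.21, 0.25]
print(early_stopping_epoch(errors))  # 3 -> keep the weights saved after epoch 3
```

A larger `patience` tolerates noisy validation curves at the cost of a few wasted epochs.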

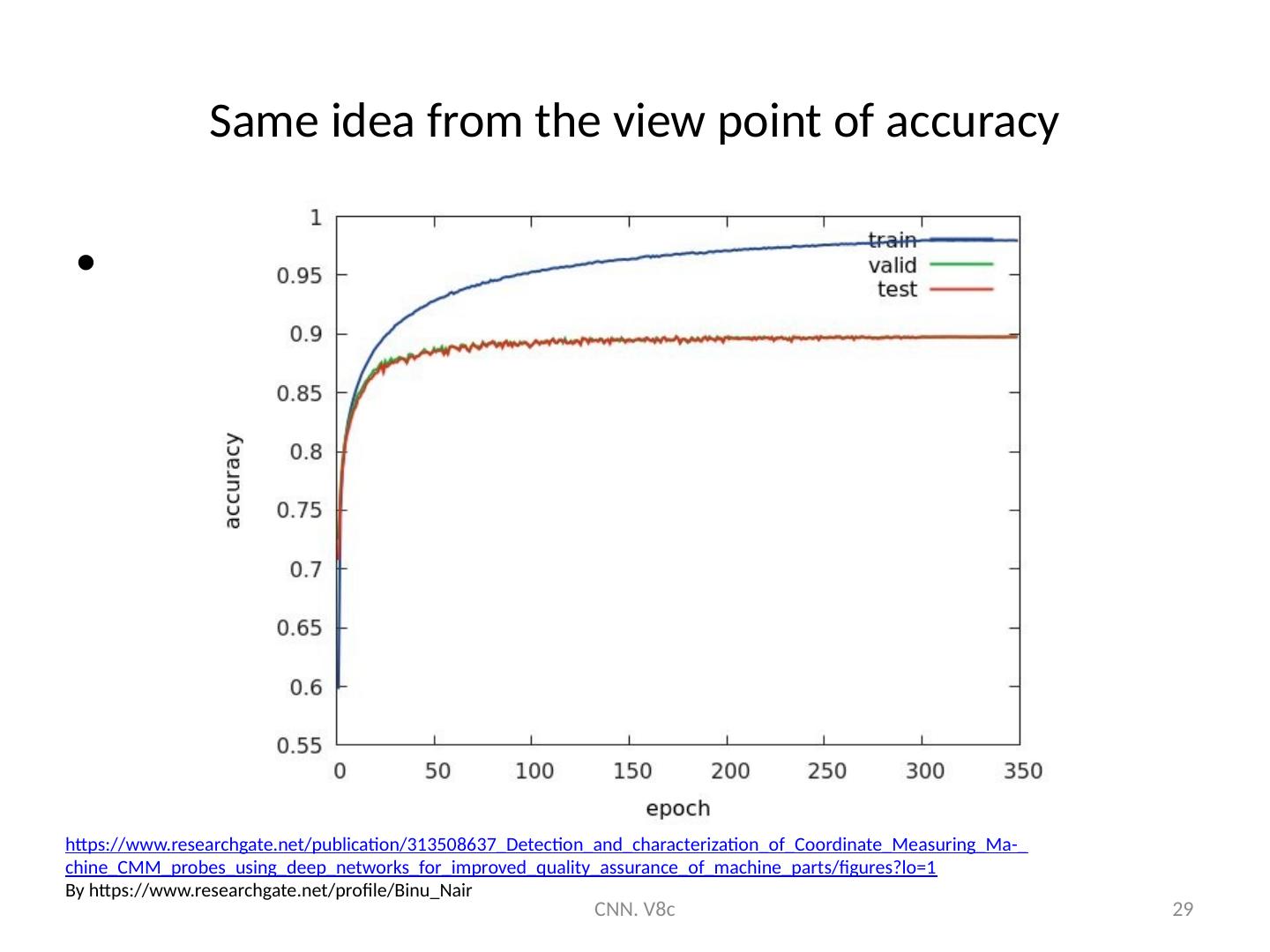

29. The same idea from the viewpoint of accuracy. Source: https://www.researchgate.net/publication/313508637_Detection_and_characterization_of_Coordinate_Measuring_Ma-_chine_CMM_probes_using_deep_networks_for_improved_quality_assurance_of_machine_parts/figures?lo=1, by https://www.researchgate.net/profile/Binu_Nair