- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Parallel and Distributed Systems in Machine Learning

展开查看详情

1 .Parallel and Distributed Systems in Machine Learning Haichuan Yang

2 .Outline Background: Machine Learning Data Parallelism Model Parallelism Examples Future Trends

3 .Background: Machine Learning Machine learning is a field of computer science that uses statistical techniques to give computer systems the ability to " learn”. ( From Wikipedia ) Simply can be understand as the program that: F eed in historical <data, label> pairs ( training set ); After some computation ( training ), it can provide a model that: Takes a new data item without label , predict its corresponding label ( inference ). “magic” function

4 .Background: Machine Learning Training set 1 Training 1 Caltech-256 dataset Model Baseball-bat Images, labels = train_data_load () model = NeuralNetwork () model.train (images, labels) n ew_label = model.inference ( new_image )

5 .Background: Machine Learning Problem: training process is usually time consuming, especially when training set / model size is large. What parallel and distributed systems are good at!

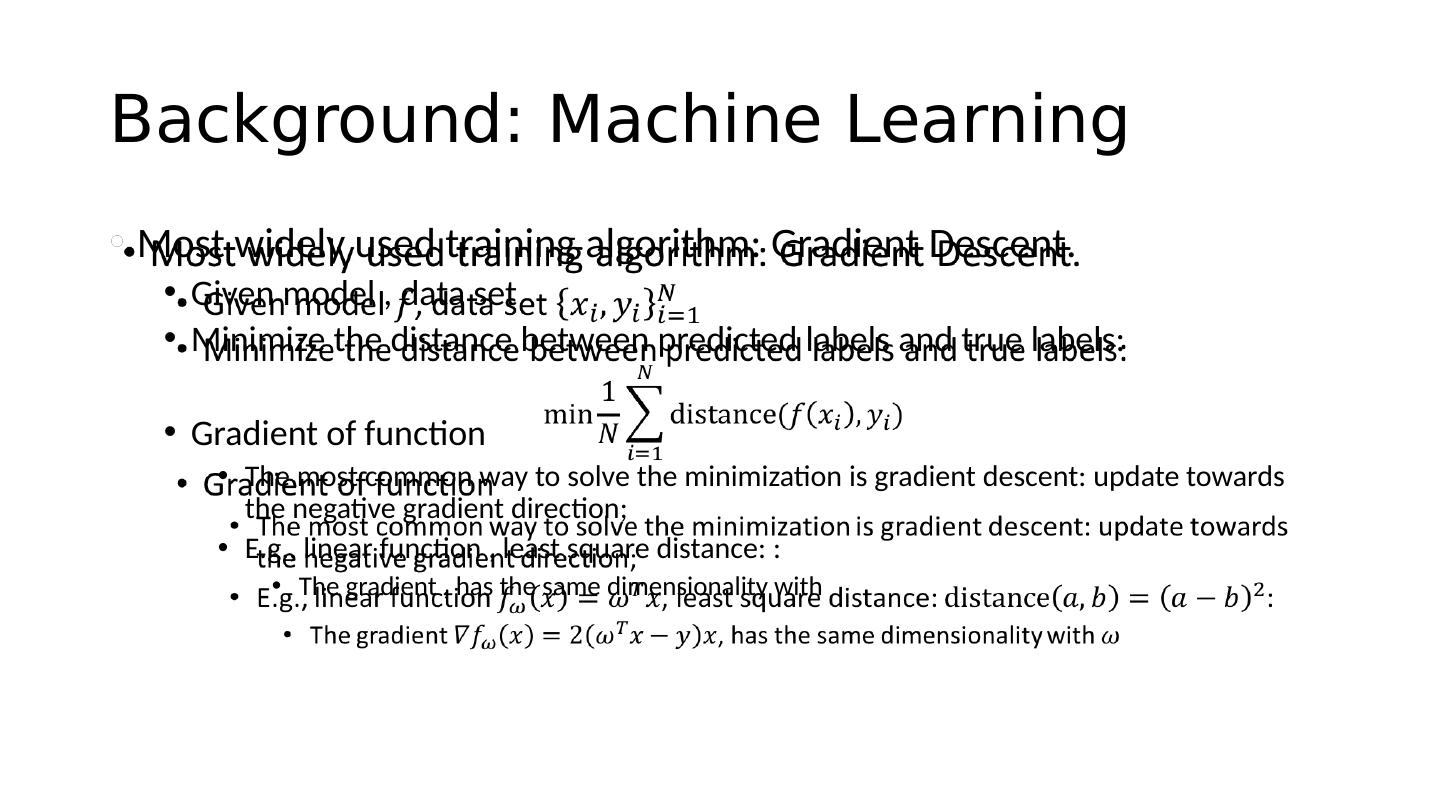

6 .Background: Machine Learning Most widely used training algorithm: Gradient Descent. Given model , data set Minimize the distance between predicted labels and true labels: Gradient of function The most common way to solve the minimization is gradient descent: update towards the negative gradient direction; E.g., linear function , least square distance: : The gradient , has the same dimensionality with

7 .Background: Machine Learning Gradient Descent: Model is parameterized by ; The gradient on : has the the same dimensionality , computing it dominates the cost of training . is the update rate FOR t = 1:T //compute update //apply update END Can be parallelized Synchronous method: Updates to do not break the dependency of the FOR loop. Asynchronous method: Updates to ignore the dependency of the FOR loop. Why it works?

8 .Background: Machine Learning Gradient Descent: Model is parameterized by ; The gradient on : has the the same dimensionality , computing it dominates the cost of training . is the update rate FOR t = 1:T //compute update //apply update END Can be parallelized Synchronous method: Updates to do not break the dependency of the FOR loop. Asynchronous method: Updates to ignore the dependency of the FOR loop. Why it works?

9 .Data Parallelism Figure from “AAAI 2017 Tutorial: Recent Advances in Distributed Machine Learning”

10 .Data Parallelism: Map-Reduce Map-Reduce to synchronize parameters among workers Only support synchronous update Example: Spark FOR t = 1:T PARFOR i = 1:N END END Figure from “AAAI 2017 Tutorial: Recent Advances in Distributed Machine Learning” //Sequential Version FOR t = 1:T //compute update //apply update END

11 .Data Parallelism: Map-Reduce Simplified method: Model Average PARFOR i = 1:N : //initialization FOR t = 1:T //local ops END END //Sequential Version FOR t = 1:T //compute update //apply update END

12 .Data Parallelism: Parameter Server 1. Support asynchronous update Flexible mechanisms for model aggregation Support model parallelism Figure from “AAAI 2017 Tutorial: Recent Advances in Distributed Machine Learning”

13 .Data Parallelism: Parameter Server Figure from “AAAI 2017 Tutorial: Recent Advances in Distributed Machine Learning”

14 .Data Parallelism: Parameter Server PARFOR i = 1:N: FOR t = 1:T //pull from PS (local copy) //local ops //push updates to PS END END Staleness could happen! Figure from “AAAI 2017 Tutorial: Recent Advances in Distributed Machine Learning” //Sequential Version FOR t = 1:T //compute update //apply update END

15 .Data Parallelism: Parameter Server PARFOR i = 1:N: FOR t = 1:T //pull from PS (local copy) //local ops //push updates to PS END END Staleness could happen! Figure from “AAAI 2017 Tutorial: Recent Advances in Distributed Machine Learning” //Sequential Version FOR t = 1:T //compute update //apply update END

16 .Model Parallelism The model parameters , where could be very large. We can also distribute the model: Synchronous model parallelization Asynchronous model slicing Model block scheduling Basically, store the parameters distributedly : Each machine has a subset of parameters ; Communication is necessary for both inference and training; Updates to different parameters are done in different machines.

17 .Model Parallelism Synchronous model parallelization High communication cost always need the most fresh intermediate data Sensitive to communication delay & machine failure Low efficient when machines have different speed

18 .Model Parallelism Asynchronous model slicing Intermediate data from other machine are cached locally; Periodically update the cached data;

19 .Model Parallelism Cons of the asynchronous method: Cache contains stale information, which hurt the accuracy of updates When we allow the stale information? If the update is very small (in magnitude) Strategy: Model block scheduling Synchronize the parameters in different frequency, based on the magnitude of update

20 .Model Parallelism Cons of the asynchronous method: Cache contains stale information, which hurt the accuracy of updates When we allow the stale information? If the update is very small (in magnitude) Strategy: Model block scheduling Synchronize the parameters in different frequency, based on the magnitude of update

21 .Examples MapReduce – Spark Mllib Machine learning library in Spark Provide implemented algorithms in classification, regression, recommendation, clustering, etc. Simple interface Only support synchronous method Only support data parallelism

22 .Examples Parameter Server – DMTK Distributed key-value store Multiple server nodes with multiple worker nodes Figure from “AAAI 2017 Tutorial: Recent Advances in Distributed Machine Learning”

23 .Examples Data Flow – TensorFlow Use graph to represent math ops and data flow; Support different hardwares , e.g. cpu , gpu ; Support data and model parallelism; Auto differential tool

24 .Examples: TensorFlow Represent math computation as a directed graph: Nodes represent math ops and variables; Edges represent the input/output of nodes;

25 .Examples: TensorFlow Node placement Manually assign to devices Done by programmer Automatically assignment Simulation based on cost estimation (computation, communication) Start with source node, assign each node to the device which can finish fastest

26 .Examples: TensorFlow Node placement Manually assign to devices Done by programmer Automatically assignment Simulation based on cost estimation (computation, communication) Start with source node, assign each node to the device which can finish fastest

27 .Future Trends Flexibility Friendly to programmer/data scientist; Extension to very customized task; Efficiency Optimization to specified task/framework; Consistent/optimal efficiency to different customized task; Accuracy Different to traditional deterministic computation task; The relation between accuracy and complexity (computation, communication, energy consumption, etc.)

28 .Reference Tie-Yan Liu, Wei Chen, Taifeng Wang. Recent Advances in Distributed Machine Learning, AAAI 2017 Tutorial. Lian X, Huang Y, Li Y, Liu J. Asynchronous parallel stochastic gradient for nonconvex optimization. In Advances in Neural Information Processing Systems 2015 (pp. 2737-2745). Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, Devin M, Ghemawat S, Irving G, Isard M, Kudlur M. TensorFlow : A System for Large-Scale Machine Learning. InOSDI 2016 Nov 2 (Vol. 16, pp. 265-283).