- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

14-High-level Parallel Programming Models

展开查看详情

1 .CSC2/458 Parallel and Distributed Systems High-level Parallel Programming Models Sreepathi Pai March 6, 2018 URCS

2 .Outline Higher Level Parallel Programming OpenMP Cilk

3 .Outline Higher Level Parallel Programming OpenMP Cilk

4 .Parallel Programming Models • What can be executed in parallel? • Parallelization • Where and when should be it executed? • Scheduling • Synchronization

5 .Low-level Parallel Programming • Parallelization • Threading • Multiprocessing • Scheduling • Manual (thread pinning, etc.) • Synchronization • Manual (locks, barriers, etc.)

6 .Higher-level Parallel Programming Models • Make parallel programming easy • for parallel programmers • Without ”overburdening” • Programming language designers • Compiler writers • Computer architects

7 .Building your own parallel programming models • Identify parallel patterns • Embarrassingly parallel • Reductions • Producer–Consumer • etc. • Provide structures for these parallel patterns • e.g. parallel for loop – forall • Programmers use these structures • Execute in parallel • Implement structures using lower-level programming models

8 .Outline Higher Level Parallel Programming OpenMP Cilk

9 .OpenMP • Aimed at shared memory multithreaded programming • Within a single process and single computer node • Usually coupled with Message Passing Interface (MPI) • MPI is used for parallelism across processes and machines • Not required for OpenMP programs, will discuss MPI in distributed systems

10 .OpenMP programming model • C/C++ and Fortran support • Must be supported by compiler • gcc/clang both support OpenMP • Supports programmer-created threads • But do not need to use them

11 .Vector Addition void vector_add(int *a, int *b, int *c, int N) { for(int i = 0; i < N; i++) { c[i] = a[i] + b[i] } } How would you parallelize this loop using pthreads?

12 .Parallel Programming Pattern in Vector Addition • Create T threads • Distribute N/T work to each thread • N is size of array (or work, or loop iterations, etc.) • Pattern • Embarrassingly Parallel • Data Decomposition • Also called ”map” pattern

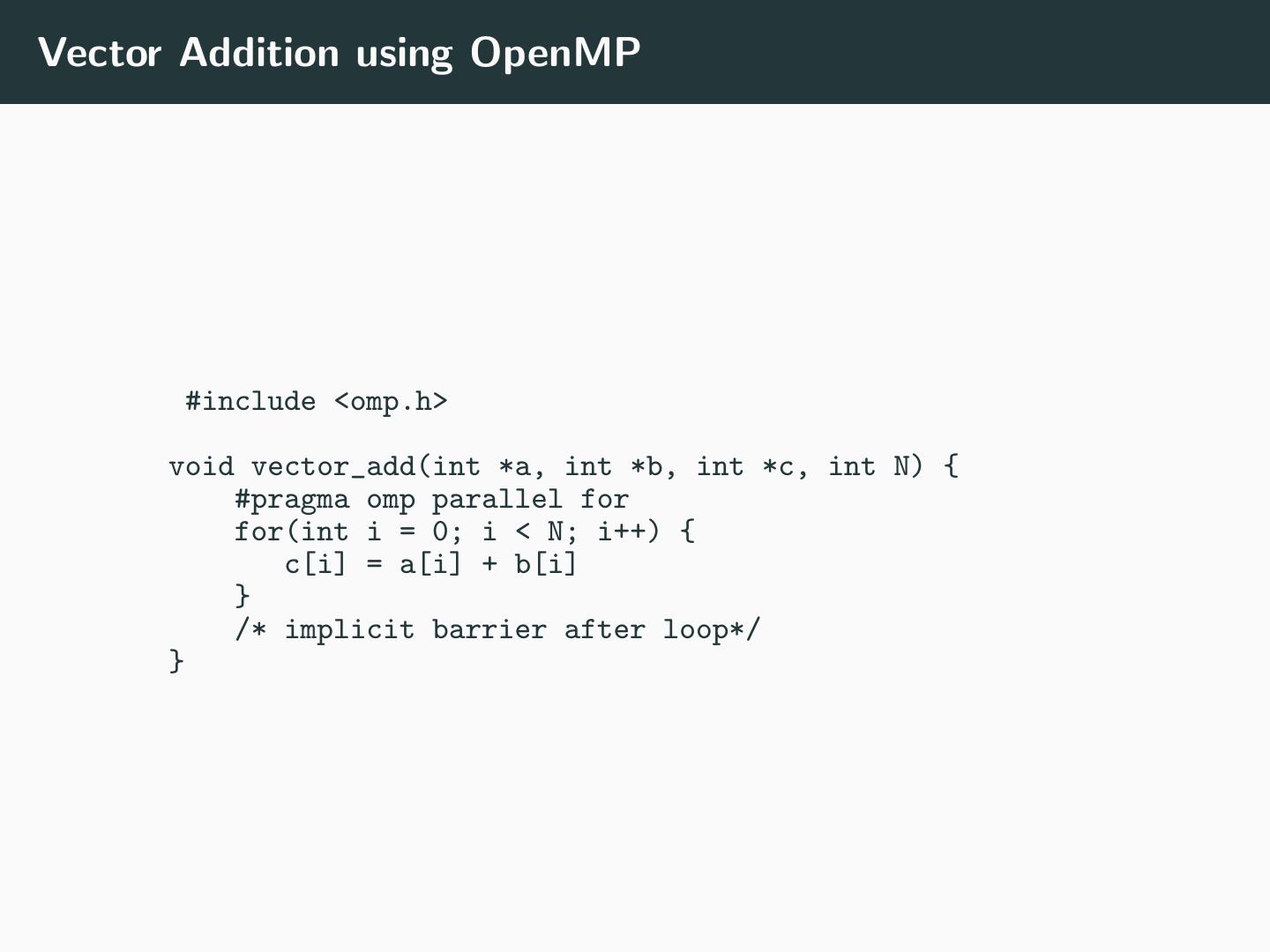

13 .Vector Addition using OpenMP #include <omp.h> void vector_add(int *a, int *b, int *c, int N) { #pragma omp parallel for for(int i = 0; i < N; i++) { c[i] = a[i] + b[i] } /* implicit barrier after loop*/ }

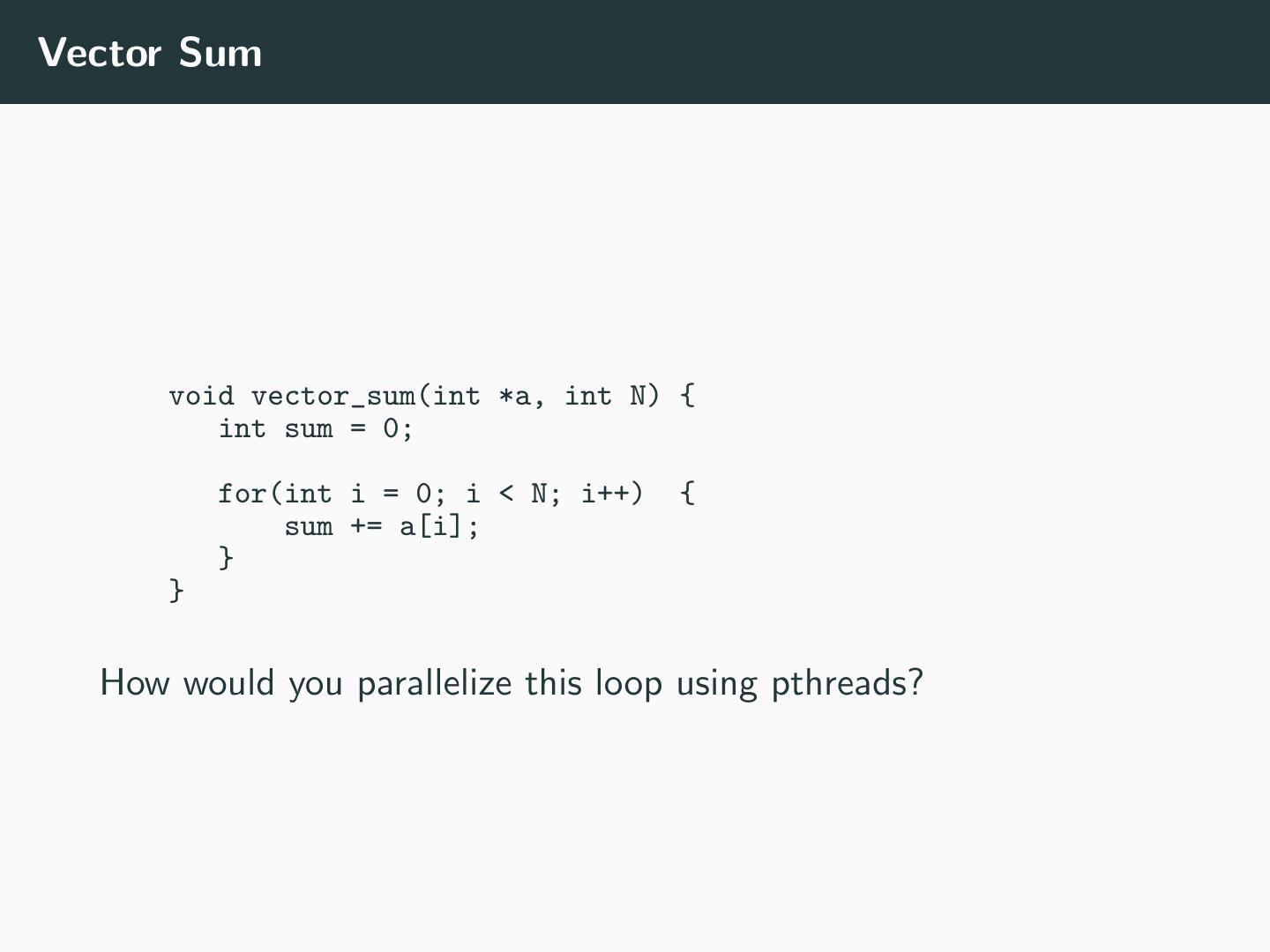

14 .Vector Sum void vector_sum(int *a, int N) { int sum = 0; for(int i = 0; i < N; i++) { sum += a[i]; } } How would you parallelize this loop using pthreads?

15 .Parallel Programming Pattern in Vector Sum • Create T threads • Distribute N/T work to each thread • N is size of array (or work, or loop iterations, etc.) • Wait until each thread computes sum • Compute the sum of these individual sums (recursively and in parallel) • Pattern • Reduction • Requires associative operator • Also called ”reduce”

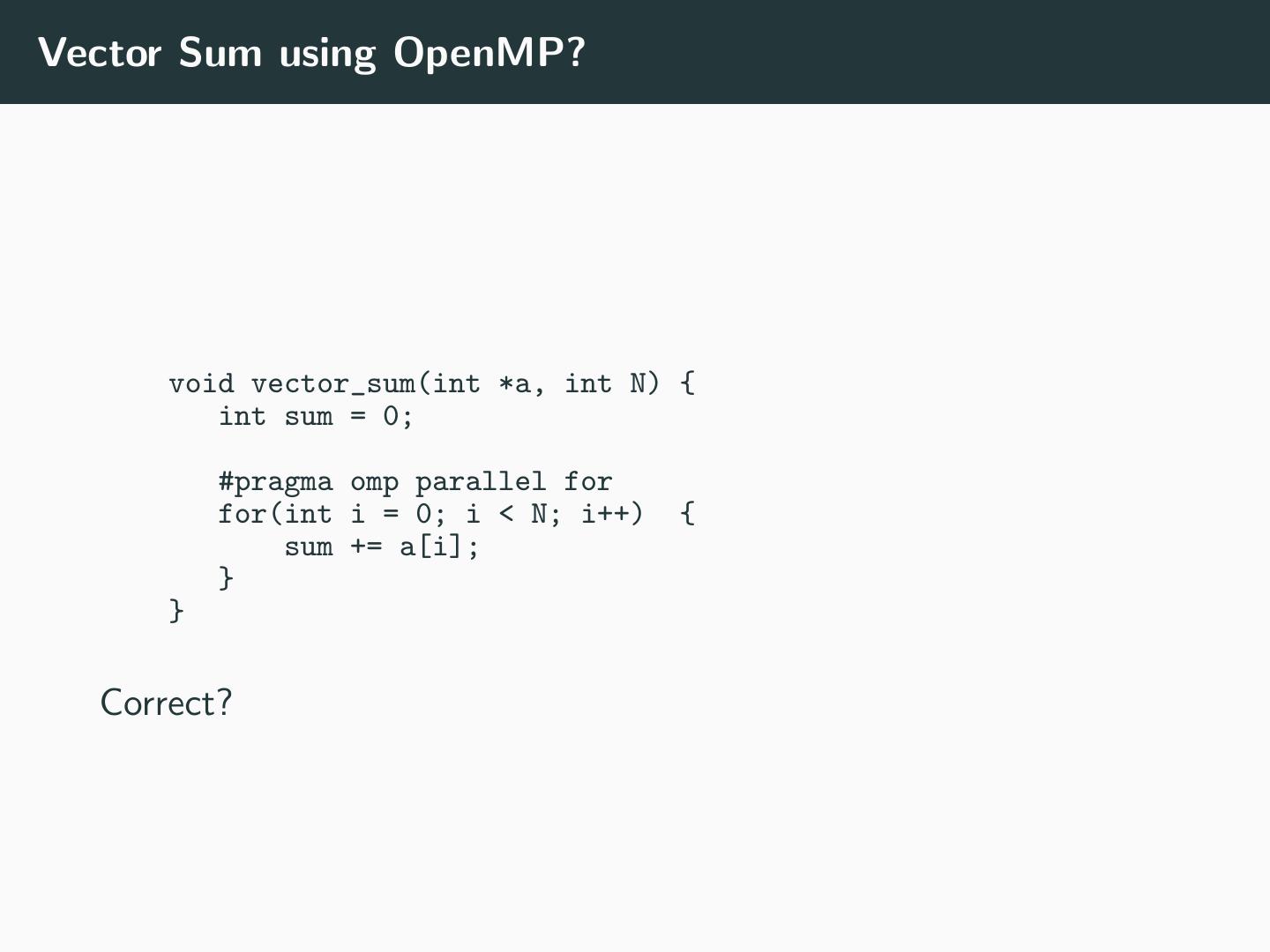

16 .Vector Sum using OpenMP? void vector_sum(int *a, int N) { int sum = 0; #pragma omp parallel for for(int i = 0; i < N; i++) { sum += a[i]; } } Correct?

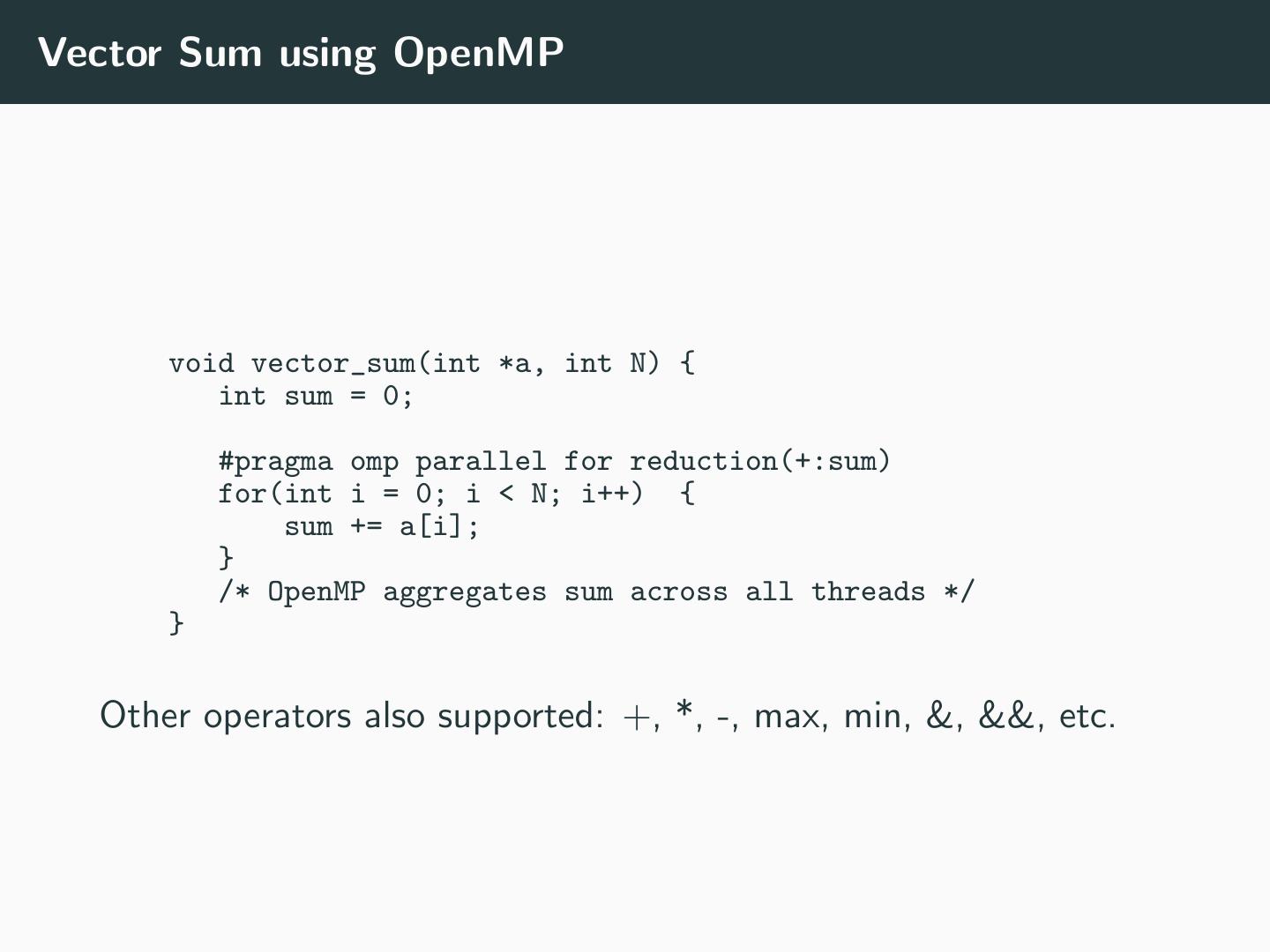

17 .Vector Sum using OpenMP void vector_sum(int *a, int N) { int sum = 0; #pragma omp parallel for reduction(+:sum) for(int i = 0; i < N; i++) { sum += a[i]; } /* OpenMP aggregates sum across all threads */ } Other operators also supported: +, *, -, max, min, &, &&, etc.

18 .Low-level constructs in OpenMP • Thread creation • #pragma omp parallel • (without for) • Critical Sections • #pragma omp critical • Many others • Usually better integrated with C/C++ than pthreads • Other ”task parallel” constructs too

19 .Outline Higher Level Parallel Programming OpenMP Cilk

20 .Cilk programming model • Also C/C++ • Two keywords: • cilk spawn • cilk sync

21 .Fibonacci calculation example int fib(int n) { if (n < 2) return n; int x = fib(n-1); int y = fib(n-2); return x + y; }

22 .Fibonacci calculation in Cilk int fib(int n) { if (n < 2) return n; int x = cilk_spawn fib(n-1); int y = fib(n-2); cilk_sync; return x + y; } • cilk spawn will allow fib(n-1) to execute in parallel with fib • cilk sync will wait for all spawns from current function to finish • All functions contain an implicit cilk sync at exit • cilk spawn is like fork, cilk sync is like join • However, these are suggestions, may not actually execute in parallel

23 .Scheduling in Cilk • cilk spawn creates two tasks • Actually one task, and one continuation • How are these tasks mapped to cores? • Tasks can produce other tasks

24 .Work stealing in Cilk - I • Assume each core has a deque (double-ended queue) • Tasks spawned (and their continuations) are added to the deque of a core • And pulled off the head of deque and executed • Will this keep all cores busy?

25 .Work stealing in Cilk - II • Assume core runs out of tasks • It randomly picks another core • And pulls off a task from the tail of the dequeue and executes it • Will this keep all cores busy?

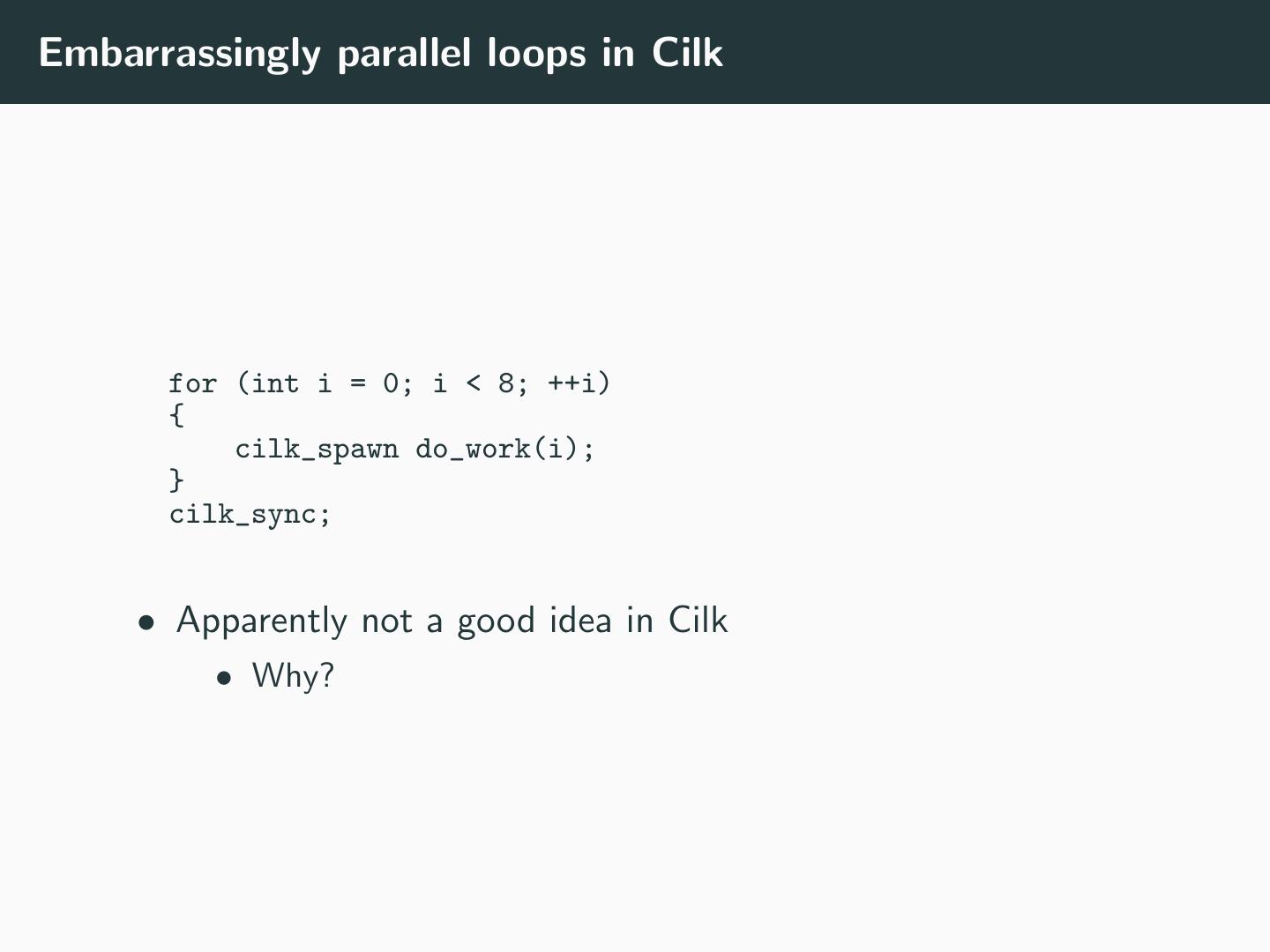

26 .Embarrassingly parallel loops in Cilk for (int i = 0; i < 8; ++i) { cilk_spawn do_work(i); } cilk_sync; • Apparently not a good idea in Cilk • Why?

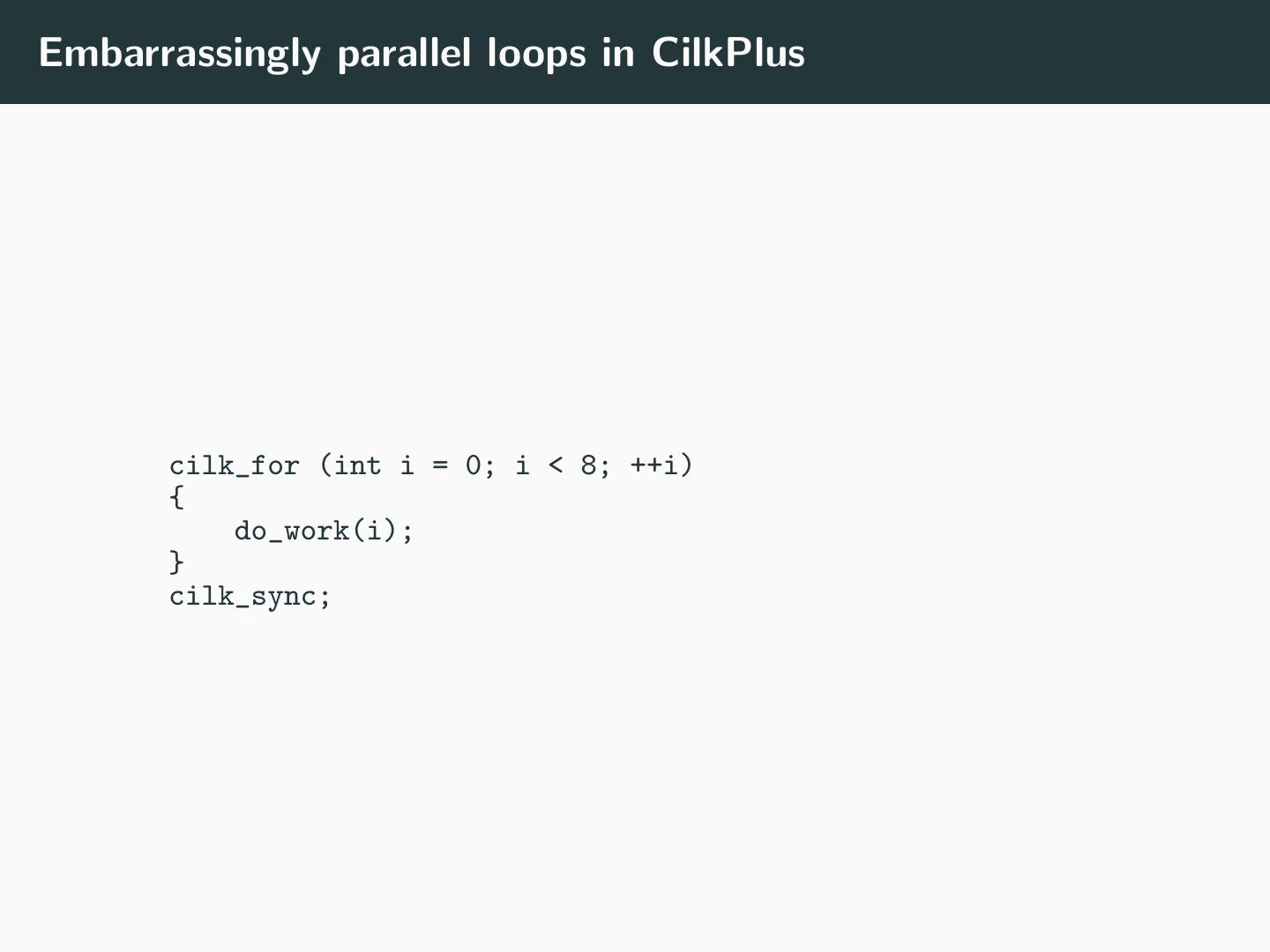

27 .Embarrassingly parallel loops in CilkPlus cilk_for (int i = 0; i < 8; ++i) { do_work(i); } cilk_sync;

28 .Acknowledgements Cilk/Cilkplus material from the Cilkplus tutorial