- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Effortless Data Exploration with zenvisage

展开查看详情

1 . Effortless Data Exploration with zenvisage: An Expressive and Interactive Visual Analytics System Tarique Siddiqui1 Albert Kim2 John Lee1 Karrie Karahalios1 Aditya Parameswaran1 1 University of Illinois (UIUC) 2 MIT {tsiddiq2,lee98,kkarahal,adityagp}@illinois.edu alkim@csail.mit.edu ABSTRACT Case Study 1: Engineering Data Analysis. Battery scientists at Data visualization is by far the most commonly used mechanism to Carnegie Mellon University perform visual exploration of datasets explore and extract insights from datasets, especially by novice data of solvent properties to design better batteries. A specific task may scientists. And yet, current visual analytics tools are rather limited involve finding solvents with desired behavior: e.g., those whose in their ability to operate on collections of visualizations—by com- solvation energy of Li+ vs. the boiling point is a roughly increas- posing, filtering, comparing, and sorting them—to find those that ing trend. To do this using current tools, these scientists manually depict desired trends or patterns. The process of visual data ex- examine the plot of Li+ solvation energy vs. boiling point for each ploration remains a tedious process of trial-and-error. We propose of the thousands of solvents, to find those that match the desired zenvisage, a visual analytics platform for effortlessly finding de- pattern of a roughly increasing trend. sired visual patterns from large datasets. We introduce zenvisage’s Case Study 2: Advertising Data Analysis. Advertisers at ad an- general purpose visual exploration language, ZQL ("zee-quel") for alytics firm Turn, Inc., often examine their portfolio of advertise- specifying the desired visual patterns, drawing from use-cases in a ments to see if their campaigns are performing as expected. For variety of domains, including biology, mechanical engineering, cli- instance, an advertiser may be interested in seeing if there are any mate science, and commerce. We formalize the expressiveness of keywords that are behaving unusually with respect to other key- ZQL via a visual exploration algebra—an algebra on collections of words in Asia—for example, maybe most keywords have a specific visualizations—and demonstrate that ZQL is as expressive as that trend for click-through rates (CTR) over time, while a small num- algebra. zenvisage exposes an interactive front-end that supports ber of them have a different trend. To do this using the current the issuing of ZQL queries, and also supports interactions that are tools available at Turn, the advertiser needs to manually examine “short-cuts” to certain commonly used ZQL queries. To execute the plots of CTR over time for each keyword (thousands of such these queries, zenvisage uses a novel ZQL graph-based query opti- plots), and remember what are the typical trends. mizer that leverages a suite of optimizations tailored to the goal of Case Study 3: Genomic Data Analysis. Clinical researchers at the processing collections of visualizations in certain pre-defined ways. NIH-funded genomics center at UIUC and Mayo Clinic are inter- Lastly, a user survey and study demonstrates that data scientists are ested in studying data from clinical trials. One such task involves able to effectively use zenvisage to eliminate error-prone and te- finding pairs of genes that visually explain the differences in clinical dious exploration and directly identify desired visualizations. trial outcomes (positive vs. negative)—visualized via a scatterplot with the x and y axes each referring to a gene, and each outcome depicted as a point in the scatterplot—with the positive outcomes 1. INTRODUCTION depicted in one color, and the negative ones as another. Current Interactive visualization tools, such as Tableau [4] and Spot- tools require the researchers to generate and manually evaluate tens fire [3], have paved the way for the democratization of data ex- of thousands of scatter plots of pairs of genes for whether the out- ploration and data science. These tools have witnessed an ever- comes can be clearly distinguished in the scatter plot. expanding user base—as a concrete example, Tableau’s revenues Thus, in these examples, the recurring theme is the manual exam- last year were in the hundreds of millions of US Dollars and is ex- ination of a large number of generated visualizations for a specific pected to reach tens of billions soon [7]. Using such tools, or even visual pattern. Indeed, we have found that in these scenarios, as tools like Microsoft Excel, the standard data analysis recipe is as well as others that we have encountered via other collaborators—in follows: the data scientists load a dataset into the tool, select visu- climate science, server monitoring, and mobile app analysis—data alizations to examine, study the results, and then repeat the process exploration can be a tedious and time-consuming process with cur- until they find ones that match their desired pattern or need. Thus, rent visualization tools. using this repeated process of manual examination, or trial-and- error, data scientists are able to formulate and test hypothesis, and Key Insight. The goal of this paper is to develop zenvisage, a derive insights. The key premise of this work is that to find desired visual analytics system that can automate the search for desired patterns in datasets, manual examination of each visualization in visual patterns. Our key insight in developing zenvisage is that a collection is simply unsustainable, especially on large, complex the data exploration needs in all of these scenarios can be captured datasets. Even on moderately sized datasets, a data scientist may within a common set of operations on collections of visualizations. need to examine as many as tens of thousands of visualizations, all These operations include: composing collections of visualizations, to test a single hypothesis, a severe impediment to data exploration. filtering visualizations, based on some conditions, comparing visu- To illustrate, we describe the challenges of several collaborator alizations, and sorting them based on some condition. The condi- tions include similarity or dissimilarity to a specific pattern, “typ- groups who have been hobbled by the ineffectiveness of current data exploration tools: ical” or anomalous behavior, or the ability to provide explanatory 1

2 .or discriminatory power. These operations and conditions form the impossible to write within native SQL, and would require custom kernel of a new data exploration language, ZQL ("zee-quel"), that UDFs—these UDFs would need to be hand-written for every ZQL forms the foundation upon which zenvisage is built. query. For the small space of queries where it is possible to write Key Challenges. We encountered many challenges in building the the queries within SQL these queries require non-standard con- zenvisage visual analytics platform, a substantial advance over the structs, and are both complex and cumbersome to write, even for manually-intensive visualization tools like Tableau and Spotfire; expert SQL users, and are optimized very poorly (see Section 7). these tools enable the examination of one visualization at a time, Statistical, data mining, and machine learning certainly provide without the ability to automatically identify relevant visualizations functionality beyond zenvisage in supporting prediction and statis- from a collection of visualizations. tics; these functionalities are exposed as “one-click” algorithms First, there were many challenges in developing ZQL, the under- that can be applied on data. However, no functionality is provided lying query language for zenvisage. Unlike relational query lan- for searching for desired patterns; no querying functionality beyond guages that operate directly on data, ZQL operates on collections the one-click algorithms, and no optimization. To use such tools for of visualizations, which are themselves aggregate queries on data. ZQL, many lines of code and hand-optimization is needed. Thus, in a sense ZQL is a query language that operates on other Outline. We first describe our query language for zenvisage, ZQL queries a a first class citizen. This leads to a number of challenges (Section 2), and the graph-based query translator and optimizer for that are not addressed in a relational query language context. For ZQL (Section 3). We then describe our initial prototype of zenvis- example, we had to develop a natural way to users to specify a age (Section 4). We describe our performance experiments (Sec- collection of visualizations to operate on, without having to explic- tion 5), and present a user survey and study focused on evaluating itly list them; even though the criteria on which the visualizations the effectiveness and usability of zenvisage (Section 6). In our were compared varied widely, we had to develop a small number extended technical report [2], we provide additional details that we of general mechanisms that capture all of these criteria; often, the weren’t able to fit into the paper. In particular, we formalize the no- visualizations that we operated on had to be modified in various tion of a visual exploration algebra, an analog of relational algebra, ways—e.g., we might be interested in visualizing the sales of a describing a core set of capabilities for any language that supports product whose profits have been dropping—composing these vi- visual data exploration, and demonstrate that ZQL is complete in sualizations from existing ones is not straightforward; and lastly, that it subsumes these capabilities. drilling down into specific visualizations from a collection also re- quired special care. Our ZQL language is a synthesis of desiderata 2. QUERY LANGUAGE after discussions with data scientists from a variety of domains, and zenvisage’s query language, ZQL, provides users with a power- has been under development for the past two years. To further show ful mechanism to operate on collections of visualizations. In fact, that ZQL is complete under a new visual exploration algebra that ZQL treats visualizations as a first-class citizen, enabling users to we develop, involved additional challenges. operate at a high level on collections of visualizations much like Second, in terms of front-end development, zenvisage, being an one would operate on relational data with SQL. For example, a interactive analytics tool, needs to support the ability for users to user may want to filter out all visualizations where the visualiza- interactively specify ZQL queries—specifically, interactive short- tion shows a roughly decreasing trend from a collection, or a user cuts for commonly used ZQL queries, as well as the ability to pose may want to create a collection of visualizations which are most extended ZQL queries for more complex needs. Identifying com- similar to a visualization of interest. Regardless of the query, ZQL mon interaction “idioms” for these needs took many months. provides an intuitive, yet flexible specification mechanism for users Third, an important challenge in building zenvisage is the back- to express the desired patterns of interest (in other words, their ex- end that supports the execution of ZQL. A single ZQL query can ploration needs) using a small number of ZQL lines. Overall, ZQL lead to the generation of 10000s of visualizations—executing each provides users the ability to compose collections of visualizations, one independently as an aggregate query, would take several hours, filter them, and sort and compare them in various ways. rendering the tool somewhat useless. zenvisage’s query optimizer ZQL draws heavy inspiration from the Query by Example (QBE) operates as a wrapper over any traditional relational database sys- language [33] and uses a similar table-based specification interface. tem. This query optimizer compiles ZQL queries down to a di- Although ZQL components are not fundamentally tied to the tab- rected acyclic graph of operations on collections of visualizations, ular interface, we found that our end-users feel more at home with followed with the optimizer using a combination of intelligent spec- it; many of them are non-programmers who are used to spreadsheet ulation and combination, to issue queries to the underlying database. tools like Microsoft Excel. Users may either directly write ZQL, or We also demonstrate that the underlying problem is NP-H ARD via they may use the zenvisage front-end, which supports interactions the PARTITION P ROBLEM. Our query optimizer leads to substan- that are transformed internally into ZQL. tial improvements over the naive schemes adopted within relational We now provide a formal introduction to ZQL in the rest of this database systems for multi-query optimization. section. We introduce many sample queries to make it easy to Related Work. There are a number of tools one could use for inter- follow along, and we use a relatable fictitious product sales-based active analysis; here, we briefly describe why those tools are inad- dataset throughout this paper in our query examples—we will re- equate for the important need of automating the search for desired veal attributes of this dataset as we go along. visual insights. We describe related work in detail in Section 7. To start, visualization tools like Tableau and Spotfire only gen- 2.1 Formalization erate and provide one visualization at a time, while zenvisage ana- For describing ZQL, we assume that we are operating on a single lyzes collections of visualizations at a time, and identifies relevant relation or a star schema where the attributes are unique (barring ones from that collection—making it substantially more powerful. key-foreign key joins), allowing ZQL to seamlessly support natural While we do use relational database systems as a computation joins. In general, ZQL could be applied to arbitrary collections of layer, it is cumbersome to near-impossible to express these user relations by letting the user precede an attribute A with the relation needs in SQL. As an example, finding visualizations of solvents name R, e.g., R.A. For ease of exposition, we focus on the single for whom a given property follows a roughly increasing trend is relation case. 2

3 . Sales (million $) 70 Name X Y Z Viz Process 60 Identifier Visualization Collection Operation 50 40 Table 3: ZQL query structure. 30 Name X Y Z Viz 2012 2013 2014 2015 2016 ... ... {‘sales’, ‘profit’} ... ... Figure 1: Sales over year visualization for the product chair. Table 4: Query for the sales and profit bar charts for the product Name X Y Z Viz *f1 ‘year’ ‘sales’ ‘product’.‘chair’ bar.(y=agg(‘sum’)) chair (missing values are the same as that in Table 1) Table 1: Query for the bar chart of sales over year for the product Name X Y Z Viz chair. ... {‘year’, ‘month’} {‘sales’, ‘profit’} ... ... Name X Y Z Viz Table 5: Query for the sales and profit bar charts over years and *f1 ‘year’ ‘sales’ ‘product’.* bar.(y=agg(‘sum’)) months for chairs (missing values are the same as in Table 1). Table 2: Query for the bar chart of sales over year for each product. Name X Y Z Z2 Viz ... ... ... ... ‘location’.‘US’ ... 2.1.1 Overview Table 6: Query which returns the overall sales bar chart for the The concept of visualizations. We start by defining the notion of chairs in US (all missing values are the same as that in Table 1). a visualization. We use a sample visualization in Figure 1 to guide our discussion, Of course, different visual analysis tasks may re- Thus, by repeatedly using the X, Y, Z, and Viz columns to com- quire different types of visualizations (instead of bar charts, we may pose visualizations and the Process column to process those visu- want scatter plots or trend lines), but across all types a visualization alizations, the user is able derive the exact set of visualizations she is defined by the following five main components: (i) the x-axis at- is looking for. Note that the result of a ZQL query is the data used tribute, (ii) the y-axis attribute, (iii) the subset of data used, (iv) the to generate visualizations. The zenvisage front-end then uses this type of visualization (e.g., bar chart, scatter plot), and (v) the bin- data to render the visualizations for the user to peruse. ning and aggregation functions for the x- and y- axes. Visualization collections in ZQL: ZQL has four columns to sup- 2.1.2 X, Y, and Z port the specification of visualizations that the five aforementioned The X and Y columns specify the attributes used for the x- and components map into: (i) X, (ii) Y, (iii) Z, and (iv) Viz. y- axes. For example, Table 1 dictates that the returned visual- Table 1 gives an example of a valid ZQL query that uses these ization should have ‘year’ for its x-axis and ‘sales’ for its y-axis. columns to specify a bar chart visualization of overall sales over As mentioned, the user may also specify a collection of values for the years for the product chair (i.e., the visualization in Figure 1)— the X and Y columns if they wish to refer to a collection of visu- ignore the Name column for now. The details for each of these alizations in one ZQL row. Table 4 refers the collection of both columns are presented subsequently. In short, the x axis (X) is the sales-over-years and profit-over-years bar charts for the chair—the attribute year, the y axis (Y) is the attribute sales, and the subset missing values in this query (“...”) are the same as Table 1. As we of data (Z) is the product chair, while the type of visualization is a can see, a collection is constructed using {}. If the user wishes to bar chart (bar), and the binning and aggregation functions indicate denote all possible values, the shorthand * symbol may be used, that the y axis is an aggregate (agg) — the sum of sales. as is shown by Table 2. In the case that multiple columns contain In addition to specifying a single visualization, users may often collections, a Cartesian product is performed, and visualizations want to retrieve multiple visualizations. ZQL supports this in two for every combination of values is returned. For example, Table 5 ways. Users may use multiple rows, and specify one visualization would return the collection of visualizations with specifications: per row. The user may also specify a collection of visualizations in {(X: ‘year’, Y: ‘sales’), (X: ‘year’, Y: ‘profit’), (X: ‘month’, a single row by iterating over a collection of values for one of the Y: ‘sales’), (X: ‘month’, Y: ‘profit’)}. X, Y, Z, and Viz columns. Table 2 gives an example of how one With the Z column, the user can select which subset of the data may iterate over all products (using the notation * to indicate that they wish to construct their visualizations from. ZQL uses the the attribute product can take on all values), returning a separate attribute . attribute-value notation to denote the selection of data. sales bar chart for each product. Consequently, the query in Table 1 declares that the user wishes High-level structure of ZQL. Starting from these two examples, to retrieve the sales bar chart only for the chair product. Collec- we can now move onto the general structure of ZQL queries. Over- tions are allowed for both the attribute and the attribute value in all, each ZQL query consists of multiple rows, where each row the Z column. Table 2 shows an example of using the * short- operates on collections of visualizations. Each row contains three hand to specify a collection of bar charts, one for each product. A sets of columns, as depicted in Table 3: (i) the first column corre- Z column which has a collection over attributes might look like: sponds to an identifier for a visualization collection, (ii) the second {‘location’, ‘product’}.* (i.e., a visualization for every product set of columns defines a visualization collection, while (iii) the last and a visualization for every location). In addition, the Z col- column corresponds to some operation on the visualization collec- umn allows users to specify predicate constraints using syntax like tion. All columns can be left empty if needed (in such cases, to save ‘weight’.[? < 10]; this specifies all items whose weight is less space, for convenience, we do not display these columns in our pa- than 10 lbs. To evaluate, the ? is replaced with the attribute and the per). For example, the last column may be empty if no operation is resulting expression is passed to SQL’s WHERE clause. to be performed, like it was in Table 1 and 2. We have already dis- ZQL supports multiple constraints on different attributes through cussed (ii); now we will briefly discuss (i) and (iii), corresponding the use of multiple Z columns. In addition to the basic Z column, to Name and Process respectively. the user may choose to add Z2, Z3, ... columns depending on how Identifiers and operations in ZQL. The Process column allows many constraints she requires. Table 6 gives an example of a query the user to operate on the defined collections of visualizations, ap- which looks at sales plots for chairs only in the US. Note that Z plying high-level filtering, sorting, and comparison. The Name col- columns are combined using conjunctive semantics. umn provides a way to label and combine specified collections of visualizations, so users may refer to them in the Process column. 2.1.3 Viz 3

4 . Name X Y Viz *f1 ‘weight’ ‘sales’ bin2d.(x=nbin(20), y=nbin(20)) seemingly unrelated weight attribute. The values in the X, Y, Z, Table 7: Query which returns the heat map of sales vs. weights and Viz columns all help to specify a particular collection of visu- across all transactions. alizations from a larger collection. When this collection is defined Name X Y Z via the Name column, we no longer need to fill in the values for X, f1 ‘year’ ‘sales’ ‘product’.‘chair’ Y, Z, or Viz, except to select from the collection—here, ZQL only f2 ‘year’ ‘profit’ ‘location’.‘US’ selects the items which satisfy the constraint, weight < 10. *f3 <– f1 + f2 ‘weight’.[? < 10] Table 8: Query which returns the sales for chairs or profits for US 2.1.5 Process visualizations for all items less than 10 lbs. The real power of ZQL as a query language comes not from The Viz column decides the visualization type, binning, and ag- its ability to effortlessly specify collections of visualizations, but gregation functions for the row. Elements in this column have the rather from its ability to operate on these collections somewhat format: type . bin+aggr . All examples so far have been bar declaratively. With ZQL’s processing capabilities, users can filter charts with no binning and SUM aggregation for the y-axis, but visualizations based on trend, search for similar-looking visualiza- other variants are supported. The visualization types are derived tions, identify representative visualizations, and determine outlier from the Grammar of Graphics [32] specification language, so all visualizations. Naturally, to operate on collections, ZQL must have plots from the geometric transformation layer of ggplot [31] (the a way to iterate over them; however, since different visual analysis tool that implements Grammar of Graphics) are supported. For in- tasks might require different forms of traversals over the collec- stance, scatter plots are requested with point and heat maps with tions, we expose the iteration interface to the user. bin2d. As for binning, binning based on bin width (bin) and num- Iterations over collections. Since collections may be composed ber of bins (nbin) are supported for numerical attributes—we may of varying values from multiple columns, iterating over the col- want to use binning, for example, when we are plotting the total lections is not straight-forward. Consider Table 9—the goal is to number of products whose prices lie within 0-10, 10-20, and so on. return profit by year visualizations for the top-10 products whose Finally, ZQL supports all the basic SQL aggregation functions profit by year visualizations look the most different from the sales such as AVG, COUNT, and MAX. Table 7 is an example of a by year visualizations. While we will describe this query in de- query which uses a different visualization type, heat map, and cre- tail below, at a high level the first row assembles the visualizations ates 20 bins for both x- and y- axes. for profit over year for all products (f1), the second row assembles The Viz column allows users powerful control over the structure the visualizations for sales over year for all products (f2), followed of the rendered visualization. However, there has been work from by operating (via the Process column) on these two collections by the visualization community which automatically tries to determine finding the top-10 products who sales over year is most different the most appropriate visualization type, binning, and aggregation from profit over year, while the third row displays the profit over for a dataset based on the x- and y- axis attributes [17, 21]. Thus, year for those top-10 products. A array-based representation of the we can frequently leave the Viz column blank and zenvisage will visualization collections f1 and f2, would look like the following: use these rules of thumb to automatically decide the appropriate X: ‘year’, Y: ‘profit’ X: ‘year’, Y: ‘sales’ Z: ‘product.chair’ Z: ‘product.chair’ setting for us. With this in mind, we omit the Viz column from Z: ‘product.table’ Z: ‘product.table’ the remaining examples with the assumption that zenvisage will f1 = Z: ‘product.stapler’ f2 = Z: ‘product.stapler’ determine the “best” visualization structure for us. . . . . . . 2.1.4 Name We would like to iterate over the products—the Z dimension values— Together, the values in the X, Y, Z, and Viz columns of each row of both f1 and f2 to make our comparisons. Furthermore, we must specify a collection of visualizations. The Name column allows us iterate over the products in the same order for both f1 and f2 to en- to label these collections so that they can be referred to be in the sure that a product’s profit visualization correctly matches with its Process column. For example, f1 is the label or identifier given to sales visualization. Using a single index for this would be compli- the collection of sales bar charts in Table 2. The * in front of f1 cated and need to take into account the sizes of each of the columns. signifies that the the collection is an output collection; that is, ZQL Instead, ZQL opts for a more powerful dimension-based iteration, should return this collection of visualizations to the user. which assigns each column (or dimension) a separate iterator called However, not all rows need to have a * associated with their an axis variable. This dimension-based iteration is a powerful Name identifier. A user may define intermediate collections of vi- idea that extends to any number of dimensions. As shown in Ta- sualizations if she wishes to further process them in the Process ble 9, axis variables are defined and assigned using the syntax: column before returning the final results. In the case of Table 8, f1 variable <– collection ; axis variable v1 is assigned to the Z and f2 are examples of intermediate collections. dimension of f1 and iterates over all product values. For cases in Also in Table 8, we have an example of how the Name column which multiple collections must traverse over a dimension in the allows us to perform high-level set-like operations to combine vi- same order, an axis variable must be shared across those collec- sualization collections directly. For example, f3 <– f1 + f2 as- tions for that dimension; in Table 9, f1 and f2 share v1 for their Z signs f3 to the collection which includes all visualizations in f1 and dimension, since we want to iterate over the products in lockstep. f2 (similar to set union). This can be useful if the user wishes to Operations on collections. With the axis variables defined, the combine variations of values without considering the full Cartesian user can then formulate the high-level operations on collections of product. Our example in Table 8, the user is able to combine the visualizations as an optimization function which maximizes/mini- sales for chairs plots with the profits for the US plots without also mizes for their desired pattern. Given that argmaxx [k = 10] g(x) having to consider the sales for the US plots or the profits for chairs returns the top-10 x values which maximizes the function g(x), and plots; she would have had to do so if she had used the specification: D(x, y) returns the “distance” between x and y, now consider the (Y: {‘sales’, ‘profit’}, Z: {‘product’.‘chair’, ‘location’.‘US’}). expression in the Process column for Table 9. Colloquially, the ex- An interesting aspect of Table 8 is that the X and Y columns of pression says to find the top-10 v1 values whose D( f 1, f 2) values the third row are devoid of values, and the Z column refer to the are the largest. The f 1 and f 2 in D( f 1, f 2) refer to the collections 4

5 . Name X Y Z Process f1 ‘year’ ‘profit’ v1 <– ‘product’.* f2 ‘year’ ‘sales’ v1 v2 <– argmaxv1 [k = 10]D( f 1, f 2) *f3 ‘year’ ‘profit’ v2 Table 9: Query which returns the top 10 profit visualizations for products which are most different from their sales visualizations. of visualizations in the first and second row and are bound to the may want to look at this set of products to see what makes them current value of the iteration for v1. In other words, for each prod- so special. Rows 1 and 2 specify the sales and profit visualizations uct v1’ in v1, retrieve the visualizations f1[z: v1’] from collection for all locations and categories respectively, and the processes for f1 and f2[z: v1’] from collection f2 and calculate the “distance” these rows filter down to the locations and categories which have between these visualizations; then, retrieve the 10 v1’ values for negative trends. Then rows 3 and 4 specify the sales and profit vi- which this distance is the largest—these are the products, and as- sualizations for products in these locations and categories, and the sign v2 to this collection. Subsequently, we can access this set of processes filter the visualizations down to the ones that have pos- products in Z column of the third line of Table 9. itive trends. Finally, row 5 takes the list of output products from Formal structure. More generally, the basic structure of the Pro- the processes in rows 3 and 4 and takes the intersection of the two cess column is: returning the sales and profits visualizations for these products. argopt axvar [ limiter ] expr where Pluggable functions. While the general structure of the Process column does cover the majority of the use cases requested by our expr → max | min | ∑ | ∏ expr axvar users, users may want to write their own functions to run in a ZQL → expr (+| − | × |÷) expr query. To support this, ZQL exposes a java-based API for users to → T ( nmvar ) write their own functions. In fact, we use this interface to imple- → D( nmvar , nmvar ) ment the k-means algorithm for ZQL. While the pluggable func- argopt → (argmax|argmin|argany) tions do allow virtually any capabilities to be implemented, it is limiter → (k = N | t > R | p = R) preferred that users write their queries using the syntax of the Pro- cess column; pluggable functions are considered black-boxes and where axvar refers to the axis variables, and nmvar refers cannot be automatically optimized by the ZQL compiler. to collections of visualizations. argopt may be one of argmax, argmin, or argany, which returns the values which have the largest, 2.2 Discussion of Capabilities and Limitations smallest, and any expressions. The limiter limits the number of Although ZQL can capture a wide range of visual exploration results: k = N returns only the top-k values; t > R returns only queries, it is not limitless. Here, we give a brief description of what values who are larger than a threshold value t (may also be smaller, ZQL can do. A more formal quantification can be found in [2]. greater than equal, etc.); p = R returns the top p-percentile values. ZQL’s primary goal is to support queries over visualizations— T and D are two simple functional primitives supported by ZQL which are themselves aggregate group-by queries on data. Using that can be applied to visualizations to find desired patterns: these queries, ZQL can compose a collection of visualizations, fil- • [T ( f ) → R]: T is a function which takes a visualization f ter them in various ways, compare them against benchmarks or and returns a real number measuring some visual property of against each other, and sort the results. The functions T and D, the trend of f . One such property is “growth”, which returns while intuitive, support the ability to perform a range of computa- a positive number if the overall trend is “upwards” and a nega- tions on visualization collections—for example, any filter predicate tive number otherwise; an example implementation might be to on a single visualization, checking for a specific visual property, measure the slope of a linear fit to the given input visualization can be captured under T . Then, via the dimension-based iterators, f . Other properties could measure the skewness, or the number ZQL supports the ability to chain these queries with each other and of peaks, or noisiness of visualizations. compose new visualization collections. These simple set of op- • [D( f , f ) → R]: D is a function which takes two visualiza- erations offer unprecedented power in being able to sift through tions f and f and measures the distance (or dissimilarity) be- visualizations to identify desired trends. tween these visualizations. Examples of distance functions may Since ZQL already operates one layer above the data—on the include pointwise distance functions like Euclidean distance, visualizations—it does not support the creation of new derived data: Earth Mover’s Distance, or the Kullback-Leibler Divergence. that is, ZQL does not support the generation of derived attributes or The distance D could also be measured using the difference in values not already present in the data. The new data that is gener- the number of peaks, or slopes, or some other property. ated via ZQL is limited to those from binning and aggregating via ZQL supports many different implementations for these two func- the Viz column. This limits ZQL’s ability to perform prediction— tional primitives, and the user is free to choose any one. If the user since feature engineering is an essential part of prediction; it also does not select one, zenvisage will automatically detect the “best” limits ZQL’s ability to compose visualizations on combinations of primitive based on the data characteristics. Furthermore, if ZQL attributes at a time, e.g., A1 A2 on the X axis. Among other drawbacks does not have an implementation of the T or D function that the of ZQL: ZQL does not support (i) recursion; (ii) any data modi- user is looking for, the user may write and use their own function. fication; (iii) non-foreign-key joins nor arbitrary nesting; (iv) di- Concrete examples. With just dimension-based iteration, the opti- mensionality reduction or other changes to the attributes; (v) other mization structure of the Process column, and the functional prim- forms of processing visualization collections not expressible via T , itives T and D, we found that we were able to support the majority D or the black box; (vi) merging of visualizations (e.g., by aggre- of the visual analysis tasks required by our users. Common patterns gating two visualizations); and (vii) statistical tests. include filtering based on overall trend (Table 10), searching for the most similar visualization (Table 11), and determining outlier visu- 3. QUERY EXECUTION alizations (Table 12). Table 13 features a realistic query inspired In zenvisage, ZQL queries are automatically parsed and exe- by one of our case studies. The overall goal of the query is to find cuted by the back-end. The ZQL compiler translates ZQL queries the products which have positive sales and profits trends in loca- into a combination of SQL queries to fetch the visualization collec- tions and categories which have overall negative trends; the user tions and processing tasks to operate on them. We present a basic 5

6 . Name X Y Z Process f1 ‘year’ ‘sales’ v1 <– ‘product’.* v2 <– argmaxv1 [t < 0]T ( f 1) *f2 ‘year’ ‘sales’ v2 Table 10: Query which returns the sales visualizations for all products which have a negative trend. Name X Y Z Process f1 ‘year’ ‘sales’ ‘product’.‘chair’ f2 ‘year’ ‘sales’ v1 <– ‘product’.(* - ‘chair’) v2 <– argminv1 [k = 10]D( f 1, f 2) *f3 ‘year’ ‘sales’ v2 Table 11: Query which returns the sales visualizations for the 10 products whose sales visualizations are the most similar to the sales visualization for the chair. f1 p1 f3 p3 refers to any additional constraints specified in the Z columns. The f5 ORDER BY is inserted to ensure that all rows corresponding to f2 p2 f4 p4 a visualization are grouped together, in order. As an example, the SQL query for the c-node for f1 in Table 12 would have the form: Figure 2: The query plan for the query presented in Table 13. SELECT year, SUM(sales), product graph-based translation for ZQL and then provide several optimiza- GROUP BY year, product ORDER BY year, product tions to the graph which reduce the overall runtime considerably. If a collection is specified for the y-axis, each attribute in the collec- 3.1 Basic Translation tion is appended to the SELECT clause. If a collection is specified Every valid ZQL query can be transformed into a query plan in for the x-axis, a separate query must be issued for every X attribute the form of a directed acyclic graph (DAG). The DAG contains c- in the collection. The results of the SQL query are then packed into nodes (or collection nodes) to represent the collections of visualiza- a m-dimensional array (each dimension in the array corresponding tions in the ZQL query and p-nodes (or process nodes) to represent to a dimension in the collection) and labeled with its f# tag. the optimizations (or processes) in the Process column. Directed p-node translation: At a high level, for p-nodes, depending on the edges are drawn between nodes that have a dependency relation- structure of the expression within the process, the appropriate pseu- ship. Using this query plan, the ZQL engine can determine at each docode is generated to operate on the visualizations. To illustrate, step which visualization collection to fetch from the database or say our process is trying to find the top-10 values for which a trend which process to execute. The full steps to build a query plan for is maximized/minimized with respect to various dimensions (using any ZQL query is as follows: (i) Create a c-node or collection node T ), and the process has the form: for every collection of visualizations (including singleton collec- tions). (ii) Create a p-node or processor node for every optimiza- argopt v0 [k =k] op1 v1 op2 v2 · · · opm vm T ( f 1) (1) tion (or process) in the Process column. (iii) For each c-node, if where argopt is one of argmax or argmin, and op refers to any of its axis variables are derived as a result of a process, con- one of (max | min | ∑ | ∏). Given this, the pseudocode which op- nect a directed edge from the corresponding p-node. (iv) For each timizes this process can automatically be generated based on the p-node, connect a directed edge from the c-node of each collec- actual values of argopt , op , and the number of operations. In tion which appears in the process. Following these steps, we can short, for each op or dimension traversed over, the ZQL engine translate our realistic query example in Table 13 to its query plan generates a new nested for loop. Within each for loop, we iterate presented in Figure 2. Here, the c-nodes are annotated with f#, over all values of that dimension, evaluate the inner expression, and and the p-nodes are annotated with p# (the ith p-node refers to the then eventually apply the overall operation (e.g., max, ∑). process in the ith row of the table). Further, f1 is a root node with no dependencies since it does not depend on any process, whereas 3.2 Optimizations f5 depends on the results of both p3 and p4 and have edges coming from both of them. Once the query plan has been constructed, the We now present several optimizations to the previously intro- ZQL engine can execute it using the simple algorithm presented in duced basic translator. In preliminary experiments, we found that in Algorithm 1. the SQL queries for the c-nodes took the majority of the runtime for ZQL queries, so we concentrate our efforts on reducing the cost of A LGORITHM 1. Algorithm to execute ZQL query plan: these c-nodes. However, we do present one p-node-based optimiza- 1. Search for a node with either no parents or one whose parents have all been marked as done. tion for process-intensive ZQL queries. We start with the simplest 2. Run the corresponding task for that node and mark the node as optimization schemes, and add more sophisticated variations later. done. 3. Repeat steps 1 and 2 until all nodes have been marked as done. 3.2.1 Parallelization One natural way to optimize the graph-based query plan is to For c-nodes, the corresponding task is to retrieve the data for take advantage of the multi-query optimization (MQO) [27] present visualization collection, while for p-nodes, the corresponding task in databases and issue in parallel the SQL queries for independent is to execute the process. c-nodes—the c-nodes for which there is no dependency between c-node translation: At a high level, for c-nodes, the appropriate them. With MQO, the database can receive multiple SQL queries SQL group-by queries are issued to the database to compose the at the same time and share the scans for those queries, thereby re- data for multiple visualizations at once. Specifically, for the sim- ducing the number of times the data needs to be read from disk. plest setting where there are no collections specified for X or Y, a To integrate this optimization, we make two simple modifica- SQL query in the form of: tions to Algorithm 1. In the first step, instead of searching for a SELECT X, A(Y), Z, Z2, ... WHERE C(X, Y, Z, Z2, ...) single node whose parents have all been marked done, search for GROUP BY X, Z, Z2, ... ORDER BY X, Z, Z2, ... all nodes whose parents have been marked as done. Then in step 2, is issued to the database, where X is the X column attribute, Y is the issue the SQL queries for all c-nodes which were found in step 1 in Y column attribute, A(Y) is the aggregation function on Y (spec- parallel at the same time. For example, the SQL queries for f1 and ified in the Viz column), Z, Z2, ... are the attributes/dimensions f2 could be issued at the same time in Figure 2, and once p1 and p2 we are iterating over in the Z columns, while C(X, Y, Z, Z2, ...) are executed, SQL queries for f3 and f4 can be issued in parallel. 6

7 . Name X Y Z Process f1 ‘year’ ‘sales’ v1 <– ‘product’.* f2 ‘year’ ‘sales’ v2 <– ‘product’.* v3 <– argmaxv1 [k = 10] ∑v2 D( f 1, f 2) *f3 ‘year’ ‘sales’ v3 Table 12: Query which returns the sales visualizations for the 10 products whose sales visualizations are the most different from the others. Name X Y Z Z2 Z3 Process f1 ‘year’ ‘sales’ v1 <– ‘location’.* v2 <– arganyv1 [t < 0]T ( f 1) f2 ‘year’ ‘profit’ v3 <– ‘category’.* v4 <– arganyv3 [t < 0]T ( f 2) f3 ‘year’ ‘profit’ v5 <– ‘product’.* ‘location’.[? IN v2] ‘category’.[? IN v4] v6 <– arganyv5 [t > 0]T ( f 3) f4 ‘year’ ‘sales’ v5 ‘location’.[? IN v2] ‘category’.[? IN v4] v7 <– arganyv5 [t > 0]T ( f 4) *f5 ‘year’ {‘profit’, ‘sales’} v6 ˆ v7 Table 13: Query which returns the profit and sales visualizations for products which have positive trends in profit and sales in locations and categories which have overall negative trends. 3.2.2 Speculation over the non-related groups for each query. For Q1 , we would select While parallelization gives the ZQL engine a substantial increase the data for which C1 holds, and for each (X1, Z1) pair, we would in performance, we found that many realistic ZQL queries intrin- aggregate over the X2, Z2, and C2 groups. sically have a high level of interdependence between the nodes in While query combining is an effective optimization, there are their query plans. To further optimize the performance, we use limitations. We found that the overall runtime also depends on the speculation, i.e., the ZQL engine pre-emptively issues SQL queries number of unique group-by values per query, and the number of to retrieve the superset of visualizations for each c-node, consider- unique group-by values for a combined query is the product of the ing all possible outcomes for the axis variables. Specifically, by number of unique group-by values of the constituent queries. Thus, tracing the provenance of each axis variable back to the root, we the number of average group-by values per query grows super- can determine the superset of all values for each axis variable; linearly with respect to the number of combinations. However, we then, by considering the cartesian products of these sets, we can found that as long as the combined query had less than MG unique determine a superset of the relevant visualization collection for a group-by values, it was more advantageous to combine than not c-node. After the SQL queries have returned, the ZQL engine pro- (e.g., for our settings of PostgreSQL, we found MG = 100k). ceeds through the graph as before, and once all parent p-nodes for Formulation. Given the above findings, we can now formulate a c-node have been evaluated, the ZQL engine isolates the correct the problem of deciding which queries to combine as an optimiza- subset of data for that c-node from the pre-fetched data. tion problem: Find the best combination of SQL queries that min- For example, in the query in Table 13, f3 depends on the results imizes: α×(total number of combined queries) + ∑i (number of of p1 and p2 since it has constraints based on v2 and v4; specif- unique group-by values in combined query i), such that no single ically v2 and v4 should be locations and categories for which f1 combination has more than MG unique group-by values. and f2 have a negative trend. However, we note that v2 and v4 are As we show in the technical report [2], this optimization problem derived as a result of v1 and v3, specified to take on all locations is NP-H ARD via a reduction from the PARTITION P ROBLEM. and categories in rows 1 and 2. So, a superset of f3, the set of profit Wrinkle and Solution. However, a wrinkle to the above formu- over year visualizations for various products for all locations and lation is that it assumes no two SQL queries share a group-by at- categories (as opposed to just those that satisfy p1 and p2), could tribute. If two queries have a shared group-by attribute, it may be be retrieved pre-emptively. Later, when the ZQL engine executes more beneficial to combine those two, since the number of group- p1 and p2, this superset can be filtered down correctly. by values does not go up on combining them. Overall, we devel- One downside of speculation is that a lot more data must be re- oped the metric EFGV or the effective increase in the number of trieved from the database, but we found that blocking on the re- group-by values to determine the utility of combining query Q to trieval of data was more expensive in runtime than retrieving ex- query Q: EFGVQ (Q ) = ∏g∈G(Q ) #(g)[[g∈G(Q)]] / where G(Q) is the tra data. Thus, speculation ends up being a powerful optimization set of group-by values in Q, #(g) calculates the number of unique which compounds the positive effects of parallelization. group-by values in g, and [[g ∈ / G(Q)]] returns 1 if g ∈ / G(Q) and 0 3.2.3 Query Combination otherwise. In other words, this calculates the product of group-by From extensive modeling of relational databases, we found that values of the attributes which are in Q but not Q. Using the EFGV the overall runtime of concurrently running issuing SQL queries is metric, we then apply a variant of agglomerative clustering [10] to heavily dependent on the number of queries being run in parallel. decide the best choice of queries to combine. As we show in the ex- Each additional query constituted a Tq increase in the overall run- periments section, this technique leads to very good performance. time (e.g., for our settings of PostgreSQL, we found Tq = ~900ms). 3.2.4 Cache-Aware Execution To reduce the total number of running queries, we use query com- Although the previous optimizations were all I/O-based opti- bination; that is, given two SQL queries Q1 and Q2 , we combine mizations for ZQL, there are cases in which optimizing the exe- these two queries into a new Q3 which returns the data for both Q1 cution of p-nodes is important as well. In particular, when a pro- and Q2 . In general, if we have Q1 (and Q2 ) in the form of: cess has multiple nested for loops, the cost of the p-node may SELECT X1, A(Y1), Z1 WHERE C1(X1, Y1, Z1) start to dominate the overall runtime. To address this problem, GROUP BY X, Z1 ORDER BY X, Z1 we adapt techniques developed in high-performance computing— we can produce a combined Q3 which has the form: specifically, cache-based optimizations similar to those used in ma- SELECT X1, A(Y1), Z1, C1, X2, A(Y2), Z2, C2 trix multiplication [13]. With cache-aware execution, the ZQL en- WHERE C1 or C2 gine partitions the iterated values in the for loops into blocks of GROUP BY X1, Z1, C1, X2, Z2, C2 data which fit into the L3 cache. Then, the ZQL engine reorders ORDER BY X1, Z1, C1, X2, Z2, C2 the order of iteration in the for loops to maximize the time that where C1 = C1(X1, Y1, Z1) and C2 is defined similarly. From each block of data remains in the L3 cache. This allows the system the combined query Q3 , it is possible to regenerate the data which to minimize the amount of data the cache needs to eject and thus would have been retrieved using queries Q1 and Q2 by aggregating the amount of data that needs to be copied from main memory to 7

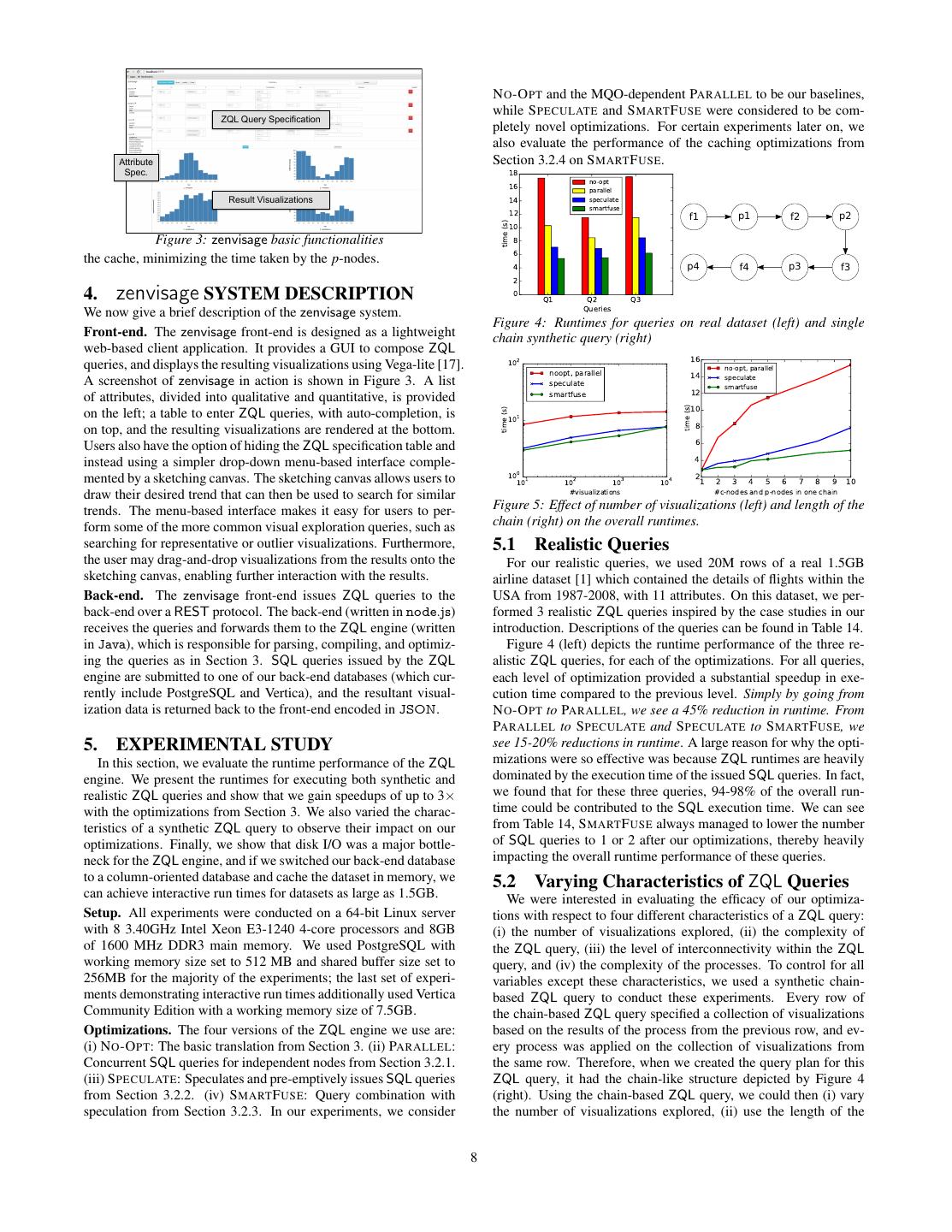

8 . N O -O PT and the MQO-dependent PARALLEL to be our baselines, while S PECULATE and S MART F USE were considered to be com- ZQL Query Specification pletely novel optimizations. For certain experiments later on, we also evaluate the performance of the caching optimizations from Attribute Section 3.2.4 on S MART F USE. Spec. 18 no-opt 16 parallel Result Visualizations 14 speculate smartfuse 12 f1 p1 f2 p2 10 time (s) Figure 3: zenvisage basic functionalities 8 6 the cache, minimizing the time taken by the p-nodes. 4 p4 f4 p3 f3 2 4. zenvisage SYSTEM DESCRIPTION 0 Q1 Q2 Q3 We now give a brief description of the zenvisage system. Queries Figure 4: Runtimes for queries on real dataset (left) and single Front-end. The zenvisage front-end is designed as a lightweight chain synthetic query (right) web-based client application. It provides a GUI to compose ZQL 102 16 queries, and displays the resulting visualizations using Vega-lite [17]. noopt, parallel no-opt, parallel 14 speculate A screenshot of zenvisage in action is shown in Figure 3. A list speculate smartfuse of attributes, divided into qualitative and quantitative, is provided smartfuse 12 10 time (s) time (s) on the left; a table to enter ZQL queries, with auto-completion, is 101 on top, and the resulting visualizations are rendered at the bottom. 8 Users also have the option of hiding the ZQL specification table and 6 instead using a simpler drop-down menu-based interface comple- 4 mented by a sketching canvas. The sketching canvas allows users to 100 1 10 102 103 104 21 2 3 4 5 6 7 8 9 10 draw their desired trend that can then be used to search for similar #visualizations #c-nodes and p-nodes in one chain trends. The menu-based interface makes it easy for users to per- Figure 5: Effect of number of visualizations (left) and length of the form some of the more common visual exploration queries, such as chain (right) on the overall runtimes. searching for representative or outlier visualizations. Furthermore, 5.1 Realistic Queries the user may drag-and-drop visualizations from the results onto the For our realistic queries, we used 20M rows of a real 1.5GB sketching canvas, enabling further interaction with the results. airline dataset [1] which contained the details of flights within the Back-end. The zenvisage front-end issues ZQL queries to the USA from 1987-2008, with 11 attributes. On this dataset, we per- back-end over a REST protocol. The back-end (written in node.js) formed 3 realistic ZQL queries inspired by the case studies in our receives the queries and forwards them to the ZQL engine (written introduction. Descriptions of the queries can be found in Table 14. in Java), which is responsible for parsing, compiling, and optimiz- Figure 4 (left) depicts the runtime performance of the three re- ing the queries as in Section 3. SQL queries issued by the ZQL alistic ZQL queries, for each of the optimizations. For all queries, engine are submitted to one of our back-end databases (which cur- each level of optimization provided a substantial speedup in exe- rently include PostgreSQL and Vertica), and the resultant visual- cution time compared to the previous level. Simply by going from ization data is returned back to the front-end encoded in JSON. N O -O PT to PARALLEL, we see a 45% reduction in runtime. From PARALLEL to S PECULATE and S PECULATE to S MART F USE, we 5. EXPERIMENTAL STUDY see 15-20% reductions in runtime. A large reason for why the opti- In this section, we evaluate the runtime performance of the ZQL mizations were so effective was because ZQL runtimes are heavily engine. We present the runtimes for executing both synthetic and dominated by the execution time of the issued SQL queries. In fact, realistic ZQL queries and show that we gain speedups of up to 3× we found that for these three queries, 94-98% of the overall run- with the optimizations from Section 3. We also varied the charac- time could be contributed to the SQL execution time. We can see teristics of a synthetic ZQL query to observe their impact on our from Table 14, S MART F USE always managed to lower the number optimizations. Finally, we show that disk I/O was a major bottle- of SQL queries to 1 or 2 after our optimizations, thereby heavily neck for the ZQL engine, and if we switched our back-end database impacting the overall runtime performance of these queries. to a column-oriented database and cache the dataset in memory, we 5.2 Varying Characteristics of ZQL Queries can achieve interactive run times for datasets as large as 1.5GB. We were interested in evaluating the efficacy of our optimiza- Setup. All experiments were conducted on a 64-bit Linux server tions with respect to four different characteristics of a ZQL query: with 8 3.40GHz Intel Xeon E3-1240 4-core processors and 8GB (i) the number of visualizations explored, (ii) the complexity of of 1600 MHz DDR3 main memory. We used PostgreSQL with the ZQL query, (iii) the level of interconnectivity within the ZQL working memory size set to 512 MB and shared buffer size set to query, and (iv) the complexity of the processes. To control for all 256MB for the majority of the experiments; the last set of experi- variables except these characteristics, we used a synthetic chain- ments demonstrating interactive run times additionally used Vertica based ZQL query to conduct these experiments. Every row of Community Edition with a working memory size of 7.5GB. the chain-based ZQL query specified a collection of visualizations Optimizations. The four versions of the ZQL engine we use are: based on the results of the process from the previous row, and ev- (i) N O -O PT: The basic translation from Section 3. (ii) PARALLEL: ery process was applied on the collection of visualizations from Concurrent SQL queries for independent nodes from Section 3.2.1. the same row. Therefore, when we created the query plan for this (iii) S PECULATE: Speculates and pre-emptively issues SQL queries ZQL query, it had the chain-like structure depicted by Figure 4 from Section 3.2.2. (iv) S MART F USE: Query combination with (right). Using the chain-based ZQL query, we could then (i) vary speculation from Section 3.2.3. In our experiments, we consider the number of visualizations explored, (ii) use the length of the 8

9 . # SQL # SQL # Visual- Query Description # c-nodes # p-nodes #T #D Queries: Queries: izations N O -O PT S MART F USE Plot the related visualizations for airports which have a correlation 6 3 670 93,000 18,642 6 1 1 between arrival delay and traveled distances for flights arriving there. Plot the delays for carriers whose delays have gone up at airports 5 4 1,000 0 11,608 4 1 2 whose average delays have gone down over the years. Plot the delays for the outlier years, outlier airports, and outlier 12 3 0 94,025 4,358 8 2 3 carriers with respect to delays. Table 14: Realistic queries for the airline dataset with the # of c-nodes, # of p-nodes, # of T functions calculated, # of D functions calculated, # of visualizations explored, # of SQL queries issued with N O -O PT, and # of SQL queries issued with S MART F USE per query. chain as a measure of complexity, (iii) introduce additional inde- number of SQL queries issued, they grew much slower compared pendent chains to decrease interconnectivity, and (iv) increase the to issuing those queries sequentially, thus leading to an almost flat number of loops in a p-node to control the complexity of processes. line in comparison to N O -O PT. To study these characteristics, we used a synthetic dataset with 10M Impact of process complexity. We increased the complexity of rows and 15 attributes (10 dimensional and 5 measure) with cardi- processes by increasing the number of loops in the first p-node from nalities of dimensional attributes varying from 10 to 10000. By 1 to 2. For the single loop, the p-node filtered based on a positive default, we set the input number of visualizations per chain to be trend via T , while for the double loop, the p-node found the out- 100, with 10 values for the X attribute, number of c-nodes per chain lier visualizations. Then, we varied the number of visualizations to as 5, the process as T (with a single for loop) with a selectivity of see how that affected the overall runtimes. Figure 6 (right) shows .50, and number of chains as 1. the results. For this experiment, we compared regular S MART F USE Impact of number of visualizations. Figure 5 (left) shows the with cache-aware S MART F USE to see how much of a cache-aware performance of N O -O PT, S PECULATE, and S MART F USE on our execution made. We observed that there was not much difference chain-based ZQL query as we increased the number of visualiza- between cache-aware S MART F USE and regular S MART F USE be- tions that the query operated on. The number of visualizations was low 5k visualizations when all data could fit in cache. After 5k increased by specifying larger collections of Z column values in the visualizations, not all the visualizations could be fit into the cache first c-node. We chose to omit PARALLEL here since it performs the same time, and thus the cache-aware execution of the p-node identically to N O -O PT. With the increase in visualizations, the had an improvement of 30-50% as the number of visualizations in- overall response time increased for all versions because the amount creased from 5k to 25k. This improvement, while substantial, is of processing per SQL query increased. S MART F USE showed bet- only a minor change in the overall runtime. ter performance than S PECULATE up to 10k visualizations due to reduction in the total number of SQL queries issued. However, at 5.3 Interactivity The previous figures showed that the overall execution times of 10k visualization, we reached the threshold of the number of unique ZQL queries took several seconds, even with S MART F USE, thus group-by values per combined query (100k for PostgreSQL), so it perhaps indicating ZQL is not fit for interactive use with large was less optimal to merge queries. At that point, S MART F USE be- datasets. However, we found that this was primarily due to the haved similarly to S PECULATE. 40 disk-based I/O bottleneck of SQL queries. In Figure 7 (left), we no-opt 101 35 single loop process show the S MART F USE runtimes of the 3 realistic queries from be- parallel two loops-block optimized process 30 speculate 100 two loops-no opt process fore on varying size subsets of the airline dataset, with the time that smartfuse 25 it takes to do a single group-by scan of the dataset. As we can see, time (s) time (s) 20 10-1 the runtimes of the queries and scan time are virtually the same, 15 indicating that S MART F USE comes very close to the optimal I/O 10 10-2 runtime (i.e., a “fundamental limit” for the system). 5 To further test our hypothesis, we ran our ZQL engine with Ver- 01 10-3 1 2 3 4 5 10 102 103 104 tica with a large working memory size to cache the data in mem- # chains of c-nodes and p-nodes #visualizations ory to avoid expensive disk I/O. The results, presented in Figure 7 Figure 6: Effect of number of independent chains (left) and the number of loops in a p-node (right) on the overall runtimes. (right), showed that there was a 50× speedup in using Vertica over Impact of the length of the chain. We varied the length of the PostgreSQL with these settings. Even with a large dataset of 1.5GB, chain in the query plan (or the number of rows in the ZQL query) to we were able to achieve sub-second response times for many queries. simulate a change in the complexity of the ZQL query and plotted Furthermore, for the dataset with 120M records (11GB, so only the results in Figure 5 (right). As the number of nodes in the query 70% could be cached), we were able to to reduce the overall re- plan grew, the overall runtimes for the different optimizations also sponse times from 100s of seconds to less than 10 seconds. Thus, grew. However, while the runtimes for both N O -O PT and S PEC - once again zenvisage returns results in a small multiple of the time ULATE grew at least linearly, the runtime for S MART F USE grew it takes to execute a single group-by query. sublinearly due to its query combining optimization. While the Overall, S MART F USE will be interactive on moderate sized datasets runtime for N O -O PT was much greater than for S PECULATE, since on PostgreSQL, or on large datasets that can be cached in mem- the overall runtime is linearly dependent on the number of SQL ory and operated on using a columnar database—which is standard queries run in parallel, we see a linear growth for S PECULATE. practice adopted by visual analytics tools [29]. Improving on inter- Impact of the number of chains. We increased the number of activity is impossible due to fundamental limits to the system; in independent chains from 1 to 5 to observe the effect on runtimes the future, we plan to explore returning approximate answers using of our optimizations; the results are presented in Figure 6 (left). samples, since even reading the entire dataset is prohibitive. While N O -O PT grew linearly as expected, all PARALLEL, S PEC - ULATE , and S MART F USE were close to constant with respect to 6. USER STUDY the number of independent chains. We found that while the over- We conducted a user study to evaluate the utility of zenvisage all runtime for concurrent SQL queries did grow linearly with the for data exploration versus two types of systems—first, visualiza- 9

10 . 103 101 singlegby singlegby query1 query1 2 query2 query2 Java; eight of them used spreadsheet software; and four of them 10 query3 query3 100 used Tableau. Data for other not as popular tools are not reported. time (s) time (s) 101 Baselines. For the purposes of our study, we explicitly wanted to 10-1 do a head-to-head qualitative and quantitative comparison with vi- 100 sual analytics tools, and thus we developed a baseline tool to com- pare zenvisage against directly. Further, via qualitative interviews, 10-1 2M 20M 120M 10-2 2M 20M 120M we compared zenvisage versus against other types of tools, such #rows #rows as databases, data mining, and programming tools. Our baseline Figure 7: S MART F USE on PostgreSQL (left) and Vertica (right) tool was developed by replicating the visualization selection capa- tion tools, similar to Tableau, and second, general database and data bilities of visual analytics tools with a styling scheme identical to mining tools, which also support interactive analytics to a certain zenvisage to control for external factors. The tool allowed users to extent. In preparation for the user study, we conducted interviews specify the X-axis, Y-axis, dimensions, and filters. The tool would with data analysts to identify the typical exploration tasks and tools then populate all visualizations meeting the specifications. used in their present workflow. Using these interviews, we identi- Dataset. We used a housing dataset from Zillow.com [6], consist- fied a set of tasks to be used in the user study for zenvisage. We ing of housing sales data for different cities, counties, and states describe these interviews first, followed by the user study details. from 2004-15, with over 245K rows, and 15 attributes. We selected 6.1 Analyst Interviews and Task Selection this dataset since participants could relate to the dataset and under- We hired seven data analysts via Upwork [5], a freelancing plat- stand the usefulness of the tasks. form—we found these analysts by searching for freelancers who Tasks. We designed the user study tasks with the case studies from had the keywords analyst or tableau in their profile. We con- Section 1 in mind, and translated them into the housing dataset. ducted one hour interviews with them to understand how they per- Further, we ensured that these tasks together evaluate eight of the form data exploration tasks. The interviewees had 3—10 years of exploration tasks described above—f, s, r, d, a, c, co, and v. One prior experience, and told about every step of their workflow; from task used in the user study is as follows: “Find three cities in the receiving the dataset to presenting the analysis to clients. The rough state of NY where the Sold Price vs Year trend is very different workflow of all interviewees identified was the following: first, data from the state's overall trend.” This query required the participants cleaning is performed; subsequently, the analysts perform data ex- to first retrieve the trend of NY (v) and characterize its distribution ploration; then, the analysts develop presentations using their find- (d), then separately filter to retrieve the cities of NY (f), compare ings. We then drilled down onto the data exploration step. the values to find a negative correlation (co), sort the results (s), We first asked the analysts what types of tools they use for data and report the top three cities on the list. exploration. Analysts reported nine different tools—the most pop- Study Protocol. The user study was conducted using a within- ular ones included Excel (5), Tableau (3), and SPSS (2). The rest of subjects study design [11], forming three phases. First, participants the tools were reported by just one analyst: Python, SQL, Alteryx, described their previous experience with data analytics tools. Next, Microsoft Visio, Microsoft BI, SAS. Perhaps not surprisingly, an- participants performed exploration tasks using zenvisage (Tool A) alysts use both visualization tools (Tableau, Excel, BI), program- and the baseline tool (Tool B), with the orders randomized to reduce ming languages (Python), statistical tools (SAS, SPSS), and rela- order effects. Participants were provided a 15-minute tutorial-cum- tional databases (SQL) for data exploration. practice session per tool to get familiarized before performing the Then, to identify the common tasks used in data exploration, we tasks. Finally, participants completed a survey that both measured used a taxonomy of abstract exploration tasks proposed by Amar their satisfaction levels and preferences, along with open-ended et al. [9]. Amar et al. developed their taxonomy through summa- questions on the strengths and weaknesses of zenvisage and the rizing the analytical questions that arose during the analysis of five baseline, when compared to other analytics tools they may have different datasets, independent of the capabilities of existing tools used. After the study, we reached out to participants with back- or interfaces. The exploration tasks in Amar et al. include: filter- grounds in data mining and programming, and asked if they could ing (f), sorting (s), determining range (r), characterizing distribu- complete a follow-up interview where they use their favorite ana- tion (d), finding anomalies (a), clustering (c), correlating attributes lytics tool for performing one of the tasks, via email. (co), retrieving value (v), computing derived value (dv), and find- Metrics. Using data that we recorded, we collected the follow- ing extrema (e). When we asked the data analysts which tasks they ing metrics: completion time, accuracy, and the usability ratings use in their workflow, the responses were consistent in that all of and satisfaction level from the survey results. In addition, we also them use all of these tasks, except for three exceptions—c, reported explicitly asked participants to compare zenvisage with tools that by four participants, and e, d, reported by six participants. they use in their workflow. For comparisons between zenvisage and Given these insights, we selected a small number of appropriate general database and data mining tools via follow-up interviews, tasks for our user study encompassing eight of the ten exploration we used the number of lines of code to evaluate the differences. tasks described above: f, s, r, d, a, c, co, v. The other two—dv Ground Truth. Two expert data analysts prepared the ground truth and e—finding derived values and computing extrema, are impor- for each the tasks in the form of ranked answers, along with score tant tasks in data analysis, but existing tools (e.g., Excel) already cut-offs on a 0 to 5 scale (5 highest). Their inter-rater agreement, provide adequate capabilities for these tasks, and we did not expect measured using Kendall’s Tau coefficient, was 0.854. We took the zenvisage to provide additional benefits. average of the two scores to rate the participants’ answers. 6.2 User Study Methodology The goal of our user study was to evaluate zenvisage with other tools, on its ability to effectively support data exploration. 6.3 Key Findings Participants. We recruited 12 graduate students as participants Three key findings emerged from the study and are described with varying degrees of expertise in data analytics. In short, half below. We use µ, σ , χ 2 to denote average, standard deviation, and of them used databases; eight of them used Matlab, R, Python or Chi-square test scores, respectively. 10

11 . with ranking as ( Finding 1: zenvisage enables faster and more accurate explo- with distances as ( ration than existing visualization tools. Since all of our tasks in- with distance_ product_year as ( volved generating multiple visualizations and comparing them to with aggregate_ product_year as ( select product, year, avg(profit) as avg_profit find desired ones, participants were not only able to complete the from table group by product, year) ) tasks faster—µ=115s, σ =51.6 for zenvisage vs. µ=172.5s, σ =50.5 select s. product as source, d. product as destination, s.year, for the baseline—but also more accurately—µ=96.3%, σ =5.82 for power(s.avg_profit - d.avg_profit,2) as distance_year from aggregate_ product_year s, aggregate_ product_year d zenvisage vs. µ=69.9%, σ =13.3 for the baseline. The baseline re- where s. product!=d. product and s.year=d.year ) quires considerable manual exploration to complete the same task, select source, destination, sum(distance_year) as distance from distance_ product_year groupby source, destination ) explaining the high task completion times; in addition, participants select source, destination, distance, frequently compromised by selecting suboptimal answers before rank() over (partition by source order by distance asc) browsing the entire list of results for better ones, explaining the rank from distances ) select source, destination, distance low accuracy. On the other hand, zenvisage is able to automate the from ranking where rank < 10; task of finding desired visualizations, considerably reducing man- Table 15: Verbose SQL query ual effort. Also of note is the fact that the accuracy with zenvisage is close to 100%—indicating that a short 15 minute tutorial on ZQL ing code may not be fair, the roughly order of magnitude difference was enough to equip users with the knowledge they needed to ad- demonstrates the power of zenvisage over existing systems. dress the tasks—and that too, within 2 minutes (on average). Finding 3: zenvisage can be improved. Participants outlined some When asked about using zenvisage vs. the baseline in their cur- areas for improvement: some requested drag-and-drop interactions rent workflow, 9 of the 12 participants stated that they would use to support additional operations, such as outlier finding; others zenvisage in their workflow, whereas only two participants stated wanted a more polished interface; and some desired bookmarking that they would use our baseline tool (χ 2 = 8.22, p<0.01). When and search history capabilities. the participants were asked how, one participant provided a specific scenario: “If I am doing my social science study, and I want to see some specific behavior among users, then I can use tool A [zenvis- 7. RELATED WORK age ] since I can find the trend I am looking for and easily see what We now discuss related prior work in a number of areas. We be- users fit into the pattern.” (P7). In response to the survey ques- gin with analytics tools — visualization tools, statistical packages tion “I found the tool to be effective in visualizing the data I want and programming libraries, and relational databases. Then, we talk to see”, the participants rated zenvisage higher (µ=4.27, σ =0.452) about other tools that overlap somewhat with zenvisage. than the baseline (µ=2.67, σ =0.890) on a five-point Likert scale. A Visual Analytics Tools. Visualization tools, such as ShowMe, participant experienced in Tableau commented: “In Tableau, there Spotfire, and Tableau [28, 22, 8], along with similar tools from is no pattern searching. If I see some pattern in Tableau, such as the database community [12, 19, 20, 18] have recently gained in a decreasing pattern, and I want to see if any other variable is de- popularity, catering to data scientists who lack programming skills. creasing in that month, I have to go one by one to find this trend. Using these tools, these scientists can select and view one visualiza- But here I can find this through the query table.” (P10). tion at a time. However, these tools do not operate on collections of Finding 2: zenvisage complements existing database and data visualizations at a time—and thus they are much less powerful and mining systems, and programming languages. When explicitly the optimization challenges are minimal. zenvisage, on the other asking participants about comparing zenvisage with the tools they hand, supports queries over collections of visualizations, returning use on a regular basis for data analysis, all participants acknowl- results not much slower than the time to execute a single query (See edged that zenvisage adds value in data exploration not encom- Section 5). Since these systems operate one visualization at a time, passed by their tools. ZQL augmented with inputs from the sketch- users are also not able to directly identify desired patterns or needs. ing canvas proved to be extremely effective. For example P8 stated: Statistical Packages and Programming Libraries: Statistical tools “you can just [edit] and draw to find out similar patterns. You'll (e.g., KNIME, RapidMiner, SAS, SPSS) support the easy applica- need to do a lot more through Matlab to do the same thing.” An- tion of data mining and statistical primitives—including prediction other experienced participant mentioned the benefits of not need- algorithms and statistical tests. While these tools support the se- ing to know much programming to accomplish certain tasks: “The lection of a prediction algorithm (e.g., decision trees) to apply, and obvious good thing is that you can do complicated queries, and the appropriate parameters, they offer no querying capabilities, and you don't have to write SQL queries... I can imagine a non-cs stu- as a result do not need extensive optimization. As a result, these dent [doing] this.” (P9). When asked about the specific tools they tools cannot support user needs like those describe in the exam- would use to solve the user study tasks, all participants reported ples in the introduction. Similarly, programming libraries such as a programming language like Matlab or Python. This is despite Weka [15] and Scikit-learn [24] embed machine learning within half of the participants reporting using a relational database regu- programs. However, manually translating the user desired patterns larly, and a smaller number of participants (2) reporting using a data into code that uses these libraries will require substantial user effort mining tool regularly. Additionally, multiple participants even with and hand-optimization. In addition, writing new code and hand- extensive programming experience reported that zenvisage would optimization will need to be performed every time the exploration take less time and fewer lines of code for certain data exploration needs change. Additionally, for both statistical tools and program- tasks. (Indeed, we found that all participants were able to complete ming libraries, there is a need for programming ability and under- the user study tasks in under 2 minutes.) In follow-up email inter- standing of machine learning and statistics to be useful—something views, we asked a few participants to respond with code from their we cannot expect all data scientists to possess. favorite data analytics tool for the user study tasks. Two partici- Relational Databases. Relational databases can certainly support pants responded — one with Matlab code, one with Python code. interactive analytics via SQL. In zenvisage, we use relational databases Both these code snippets were much longer than ZQL: as a con- as a backend computational component, augmented with an engine crete example, the participant accomplished the same task with 38 that uses S MART F USE to optimize accesses to the database, along lines of Python code compared to 4 lines of ZQL. While compar- with efficient processing code. Thus, one can certainly express 11