- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

TurKit: Human Computation Algorithms on Mechanical Turk

展开查看详情

1 . TurKit: Human Computation Algorithms on Mechanical Turk Greg Little1, Lydia B. Chilton2, Max Goldman1, Robert C. Miller1 1 2 MIT CSAIL University of Washington {glittle, maxg, rcm}@mit.edu hmslydia@cs.washington.edu ABSTRACT Mechanical Turk provides an on-demand source of human ideas = [] computation. This provides a tremendous opportunity to for (var i = 0; i < 5; i++) { explore algorithms which incorporate human computation idea = mturk.prompt( as a function call. However, various systems challenges "What’s fun to see in New York City? make this difficult in practice, and most uses of Mechanical Ideas so far: " + ideas.join(", ")) ideas.push(idea) Turk post large numbers of independent tasks. TurKit is a } toolkit for prototyping and exploring truly algorithmic hu- man computation, while maintaining a straight-forward ideas.sort(function (a, b) { imperative programming style. We present the crash-and- v = mturk.vote("Which is better?", [a, b]) rerun programming model that makes TurKit possible, return v == a ? ‐1 : 1 }) along with a variety of applications for human computation algorithms. We also present a couple case studies of TurKit Figure 1: Naturally, a programmer wants to write an used for real experiments outside our lab. algorithm to help them visit New York City. TurKit ACM Classification: H5.2 [Information interfaces and lets them use Mechanical Turk as a function call to presentation]: User Interfaces. - Prototyping. generate ideas and compare them. General terms: Algorithms, Design, Experimentation general, this paper considers human computation algo- Keywords: Human computation, Mechanical Turk, toolkit rithms, where an algorithm coordinates the contributions of INTRODUCTION humans toward some goal. Amazon’s Mechanical Turk (MTurk) is a popular web ser- Human computation and Mechanical Turk are already be- vice for paying people to do simple human computation ing explored and studied in the HCI community [7] [8] [9]. tasks. Workers on the system (turkers) are typically paid a We want to extend this study to explore algorithms involv- few cents for Human Intelligence Tasks (HITs) that can be ing humans, which is an HCI issue in itself. It requires done in under a minute. MTurk has already been used by knowing the right interface to present to each turker, as industry and academia for labeling images, categorizing well as the right information for the algorithm to pass from products, and tagging documents. one turker to the next. Currently, MTurk is largely used for independent tasks. Unfortunately, implementing algorithms on MTurk is not Task requesters post a group of HITs that can be done in easy. HITs cost money to create, and may take hours to parallel, such as labeling 1000 images. We want to explore complete. Algorithms involving many HITs may run for tasks that build on each other. Figure 1 shows a simple ex- days. These factors present a significant systems building ample of an algorithm that generates a list of suggestions challenge to programmers. Programmers must worry about for what to see in New York, and then sorts them. A more issues like: what if the machine running the program crash- sophisticated example might have turkers iteratively im- es? What if the program throws an exception after a bunch prove a passage of text, and vote on each other’s work. In of HITs have already been completed? These challenges Permission to make digital or hard copies of all or part of this work for are prohibitive enough to prevent easy prototyping and personal or classroom use is granted without fee provided that copies are exploration of human computation algorithms. not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, This paper introduces the crash-and-rerun programming to republish, to post on servers or to redistribute to lists, requires prior model to overcome these systems challenges. In this model, specific permission and/or a fee. a program can be executed many times, without repeating UIST’09, October 4–7, 2009, Victoria, British Columbia, Canada. costly work. Copyright 2009 ACM 978-1-60558-745-5/09/10...$10.00.

2 .TurKit is a toolkit for writing human computation algo- some for recursive algorithms like quicksort, which would rithms using the crash-and-rerun model. TurKit allows the require storing some representation of the call stack in the programmer to think about algorithmic tasks as simple database. straight-line imperative programs, where calls to MTurk The insight of crash-and-rerun programming is that if our appear as ordinary function calls. program crashes, it is cheap to rerun the entire program up This paper makes the following contributions: to the place it crashed, since local computation is cheap. Crash-and-Rerun Programming: A novel program- This is true as long as rerunning does not re-perform all of ming model suited to long running processes where lo- the costly external operations from the previous run. cal computation is cheap, and remote work is costly. The latter problem is solved by recording information in a database every time a costly operation is executed. Costly TurKit Script: An API for writing algorithmic MTurk operations are marked in a new primitive called once, tasks using crash-and-rerun programming. meaning they should only be executed once over all reruns TurKit Online: A public web GUI for running and of a program. Subsequent runs of the program check the managing TurKit scripts. database before performing operations marked with once Example Applications: Examples of algorithmic tasks to see if they have already been executed. explored in our lab, as well as algorithmic tasks ex- Note that this model requires the program to be determinis- plored by people outside our lab using TurKit. tic, since we are essentially storing complicated state in the Performance Evaluation: An evaluation of TurKit's logic of the program itself, rather than storing it explicitly performance drawn from a corpus of 20 scripts posting in the database. Hence, once is important in the following almost 30,000 tasks over the past year. conditions: CRASH-AND-RERUN PROGRAMMING Non-determinism. Since all calls to once need to hap- Consider a standard quicksort algorithm which outsources pen in the same order every time the program is exe- comparisons to Mechanical Turk (see Figure 2). This is a cuted, it is important that execution be deterministic. scenario where a local algorithm is making calls to an ex- Wrapping non-deterministic calls in once ensures that ternal system. Local computation is cheap, but the external their outcomes are the same in all subsequent runs of calls cost money, and must wait for humans to complete the program. work. The algorithm may need to run for a long time wait- High cost. The whole point of crash-and-rerun pro- ing on these results. gramming is to avoid incurring more cost than neces- The challenge in this scenario is managing state over a long sary. If a function is expensive (in terms of time or running process. This state can be kept in the heap, but this money), then it is important to wrap it in once so that is dangerous in case the machine reboots or the program the program only pays that cost the first time the pro- encounters an error. The error may be easy to fix, but all gram encounters the function call. Typical tasks posted the state up to that point is lost. to Mechanical Turk cost 1 to 10 cents, and take be- State can be managed in a database, but this complicates tween 30 seconds and an hour to complete. the programming model, since we need to think about how Side-effects. If functions have side-effects, then it may to record and restore state. This can be particularly cumber- be important to wrap them in once if invoking the quicksort(A) quicksort(A) if A.length > 0 if A.length > 0 pivot ← A.remove(A.randomIndex()) pivot ← A.remove(once A.randomIndex()) left ← new array left ← new array right ← new array right ← new array for x in A for x in A if compare(x, pivot) if compare(x, pivot) A left.add(x) left.add(x) else else right.add(x) right.add(x) quicksort(left) quicksort(left) quicksort(right) quicksort(right) A.set(left + pivot + right) A.set(left + pivot + right) A compare(a, b) compare(a, b) A hitId ← createHIT(...a...b...) hitId ← once createHIT(...a...b...) result ← getHITResult(hitId) result ← once getHITResult(hitId) return (result says a < b) return (result says a < b) A Figure 2: Standard quicksort algorithm that out- Figure 3: Standard quicksort augmented with the sources comparisons to Mechanical Turk. once primitive, to remember costly and non- deterministic operations for subsequent runs.

3 . side-effect multiple times will cause problems. For in- der to understand where it went wrong. This technique stance, accepting results from a HIT multiple times can also be used to retroactively extract data from an causes an error from Mechanical Turk. experiment, and print it to a file for analysis in an ex- We can add once to our quicksort algorithm by surround- ternal program, like Excel. ing the non-deterministic random pivot selection, as well as TURKIT SCRIPT the expensive MTurk calls (see Figure 3). These modifica- TurKit Script is built on top of JavaScript. Users have full tions maintain the imperative style of the algorithm. access to JavaScript, in addition to a set of APIs designed around crash-and-rerun programming and Mechanical If the program crashes at any point, then subsequent runs Turk. JavaScript was chosen because it is a common script- will encounter all calls to once in the same order as before. ing language, popularized primarily within webpages, but Any calls which succeeded on a previous run of the pro- general purpose enough for many prototyping applications. gram will have a result stored in the database, which will be returned immediately, rather than re-performing the costly Crash-and-Rerun or non-deterministic operation inside once. TurKit supports crash-and-rerun programming in JavaS- cript by providing the once function described in the pre- Since crashing is so inexpensive in this model, we can vious section. Once accepts another function as an argu- crash instead of blocking. For instance, we implement get- ment. It calls this function, and if it succeeds (i.e. it returns HITResult by crashing the program if the results are not without crashing), then it records the return value in the ready, rather than blocking until the results are ready. This database, and returns the result back to the caller. When works because once only stores results if the operation once is called on a subsequent run of the script, it checks succeeds. the database to see whether a return value has already been If the user needs to change an algorithm so that it is incom- stored. If so, it skips calling the argument function, but ra- patible with a recorded sequence of once calls, then they ther simply returns the stored value. For example: can clear this record in the database, and start afresh. Once var r = once(function () { also detects when the database is out of sync with the pro- return Math.random() gram by recording information about each operation, and }) ensuring that the same operation is performed on subse- The first time the script runs, the function is evaluated, quent runs. In such cases, the program crashes, and the user generating a new random number. This number is stored as is notified that the database and program no longer agree. the result for this call to once. The next time the script The benefits of the crash-and-rerun model include: runs, Math.random is not called, and the random number generated on the previous call is returned instead. Incremental Programming: When a crash-and-rerun program crashes, it is unloaded from the runtime sys- TurKit also provides a convenient way to crash a script. tem. This provides a convenient opportunity to modify The crash function throws a "crash" exception. Crash is the program before it is executed again, as long as the most commonly called when external data is not ready, modifications do not change the order of important op- e.g., tasks on MTurk are not complete. erations that have already been executed. TurKit pro- TurKit automatically reruns the script after an adjustable grammers can take advantage of this fact to write the time interval. Rerunning the script effectively polls Me- first part of an algorithm, run it, view the results, and chanical Turk every so often to see if any tasks have com- then decide what the rest of the program should do pleted. In addition, the online version of TurKit receives with these results. notifications from MTurk when tasks complete, and reruns Easy to Implement: Crash-and-rerun programming is any scripts waiting on these tasks. easy to implement, and does not require any special Parallelism runtime system, language support, threads or synchro- Although TurKit is single-threaded, and the programmer nization. All that is required is a database to store a se- does not need to worry about real concurrency in the sense quence of results from calls to once. of multiple paths of execution running at the same time, it does provide a mechanism for simulating simple parallel- Retroactive Print-Line-Debugging: In addition to add- ism. This is done using fork, which creates a new branch ing code to the end of a program, it is also possible to in the recorded execution trace. If crash is called inside add code to parts of a program which have already ex- this branch, the script resumes execution of the former ecuted. This is true because only expensive or non- deterministic operations are recorded. Innocuous oper- branch. Note that fork can be called within a fork to cre- ations, like printing debugging information, are not ate a tree of branches that the script will follow. recorded, since it is easy enough to simply re-perform Fork is useful in cases where a user wants to run several these operations on subsequent runs of the program. processes in parallel. They may want to run them in parallel This provides a cheap and convenient means of debug- for efficiency reasons, so they can post multiple HITs on ging in which the programmer adds print-line state- Mechanical Turk at the same time, and the script can make ments to a program which has already executed, in or-

4 .progress on whichever path gets a result first. For example, grammer’s perspective, it waits for the results to be ready consider the following code: before returning. a = createHITAndWait() // HIT A Voting b = createHITAndWait(...a...) // HIT B The crash-and-rerun programming model allows us to en- c = createHITAndWait() // HIT C capsulate human computation algorithms into functions, d = createHITAndWait(...c...) // HIT D which can be used as building blocks for more sophisticat- ed algorithms. Currently, HITs A and B must complete before HIT C is created, even though HIT C does not depend on the results One common building block is voting. We saw voting early from HITs A or B. We can instead create HIT A and C on on in Figure 1, but did not explain how it worked. Consider the first run of the script using fork as follows: a simple voting function, where we want a best 3-out-of-5 fork(function () { vote. This is possible using a single HIT with 5 assign- a = createHITAndWait() // HIT A ments (Amazon will ensure that each assignment is com- b = createHITAndWait(...a...) // HIT B pleted by a different turker). However, if we want to be }) even more cost efficient, we could ask for just 3 votes, and fork(function () { only ask for additional votes if the first 3 are not the same. c = createHITAndWait() // HIT C d = createHITAndWait(...c...) // HIT D This implies a simple algorithm: }) function vote(message, options) { // create comparison HIT The first time around, TurKit would get to the first fork, var h = mturk.createHITAndWait({ create HIT A, and try to wait for it. It would not be done, so ...message...options... it would crash that forked branch (rather than actually wait- assignments : 3}) ing), and then the next fork would create HIT C. So the // get enough votes first time the script runs, HITs A and C will be created, and while (...votes for best option < 3...) { each subsequent time it runs, it will check on both HITs to mturk.extendHIT(...add assignment...) see if they are done. h = mturk.waitForHIT(h) } TurKit also provides a join function, which ensures that a series of forks have all finished. The join function ensures // cleanup and return that all the previous forks along the current path did not mturk.deleteHIT(h) return ...best option... terminate prematurely. If any of them crashed, then join } itself crashes the current path. In our example above, we would use join if we had an additional HIT E that re- TurKit’s version of this function takes an optional third quired results from both HIT B and D: parameter to indicate the number of votes required for a single option. One could also imagine extending this func- fork(... b = ...) fork(... d = ...) tion to support different voting schemes. join() Sorting E = createHITAndWait(...b...d...) // HIT E Another building block is sorting. A first attempt at sorting Using Mechanical Turk is simple using the crash-and-rerun model. We just take The simplest way to use Mechanical Turk in TurKit is with JavaScript’s sort function and pass in our own comparator. the prompt function. This function shows a string of text to Recall from Figure 1: a turker, and returns their response: ideas.sort(function (a, b) { v = mturk.vote("Which is better?", [a, b]) print(mturk.prompt(“Where is UIST 2010?”)) return v == a ? ‐1 : 1 Prompt takes an optional argument specifying a number of }) responses to be returned as an array, so we can ask 100 One problem with this approach is that all of the compari- people for their favorite color like this: sons are performed serially, and there is no good way to get mturk.prompt("What is your favorite color?", 100) around this using JavaScript’s sort function because it requires knowing the results of each comparison before In addition to these high level functions, TurKit provides making additional comparisons. However, in TurKit we wrappers around Amazon’s MTurk REST API. These can implement a parallel quicksort, as shown in Figure 4. wrappers build on the crash-and-rerun library to make these This implementation is fairly straightforward, and shows calls safe, e.g., the createHIT function calls once inter- where TurKit’s parallel programming model succeeds. nally so that it only creates one HIT over all runs of a pro- Limits of this approach are discussed more in the discus- gram. These wrappers use the same naming conventions as sion section. MTurk, and handle the job of converting XML responses from Amazon into suitable JavaScript objects. TurKit also Creating Interfaces for Turkers provides a waitForHIT function which crashes unless the The high level functions described so far use Mechanical results are ready. It is called wait because from the pro- Turk’s custom language for creating interfaces for turkers. However, more complicated UIs involving JavaScript or

5 . a trace of once calls in an array. As the script runs, we quicksort(a) { if (a.length == 0) return maintain a pointer to the next location in this array. var pivot = a.remove(once(function () { When once is called, it checks the information stored at the return Math.floor(a.length * Math.random()) })) next location in the trace. If there is a return value there, it var left = [] returns this immediately. Otherwise, it calls the function var right = [] passed as a parameter to once. If the function succeeds, for (var i = 0; i < a.length; i++) { then it writes information about this call into the trace. Af- fork(function () { ter the call to once completes, the pointer moves to the if (vote("Which is best?", [a[i], pivot]) == a[i]) { next location in the trace. right.push(a[i]) Implementing fork requires managing a stack of instruc- } else { left.push(a[i]) tion pointers. Fork also consumes an element in the array } of once calls, except instead of storing a return value there, }) it stores another array of once calls. } join() The crash function is implemented by throwing a “crash” fork(function () { exception. This exception is caught internally by the fork quicksort(left) function, so that it can pop the forked branch off the stack }) fork(function () { of instruction pointers, and return. If crash is ever called, quicksort(right) even if it is caught by a fork, then TurKit will schedule a }) rerun of the script after some time interval. join() a.set(left.concat([pivot]).concat(right)) ONLINE WEB INTERFACE } Figure 5 shows the TurKit web-based user interface, an online IDE for writing TurKit scripts, running them, and Figure 4: A parallel quicksort in TurKit using fork automatically rerunning them. The interface also has facili- and join. ties for managing projects, editing files, viewing output, and managing the execution trace. CSS require custom webpages, which Mechanical Turk The run controls allow the user to run the project, and start will display to turkers in an iframe. and stop automatic rerunning of the script. This is neces- TurKit provides methods for generating webpages and sary in the crash-and-rerun programming model since the hosting them on TurKit’s server. Users may create script is likely to crash the first time it runs, after creating a webpages from raw HTML, or use templates provided by HIT and seeing that the results for the HIT are not ready TurKit to generate webpages with common features. yet. Starting automatic rerunning of the script will periodi- cally run the script, effectively polling Mechancial Turk One basic template feature is to disable all form elements until the results are ready. when a HIT is being previewed. MTurk provides a preview mode so that turkers can view HITs before deciding to There are also controls for switching between sandbox and work on them, but turkers may accidently fill out the form normal mode on Mechanical Turk, as well as clearing the in preview mode if they are not prevented from doing so. database. Together, these tools allow users to debug their scripts before letting them run unattended. Sandbox mode TurKit also provides a mechanism for blocking specific does not cost money, and is used for testing HITs. Users turkers from doing specific HITs. This is useful when an typically run a script in sandbox mode and complete the algorithm wants to prevent turkers who generated content HITs themselves in the MTurk sandbox. from voting on that content. This feature is implemented at the webpage level (in JavaScript) as a temporary fix until After the script appears to be working in the sandbox, the Amazon adds this functionality to their core API. programmer may reset the database. Resetting the database clears the execution trace, as well as deletes any outstand- Implementation ing HITs or webpages created by the script. The user may TurKit is written in Java, using Rhino1 to interpret JavaS- now run the script in normal mode, and it will create HITs cript code, and E4X2 to handle XML results from MTurk. again on the real MTurk without any memory of having State is persisted between runs of a TurKit script by serial- done so in the sandbox. Reseting the database is also useful izing a designated global variable as JSON. This variable is after correcting major errors in the script that invalidate the called db. recorded execution trace. The crash-and-rerun module makes use of db to store re- The execution trace panel shows a tree view representing sults between runs of the script. The basic idea is to record the recorded actions in previous runs of the script. Note that calling fork creates a new branch in this tree. Some items 1 are links, allowing the user to see the results for certain http://www.mozilla.org/rhino/ actions. In particular, createHIT has a link to the Mechan- 2 http://en.wikipedia.org/wiki/ECMAScript_for_XML

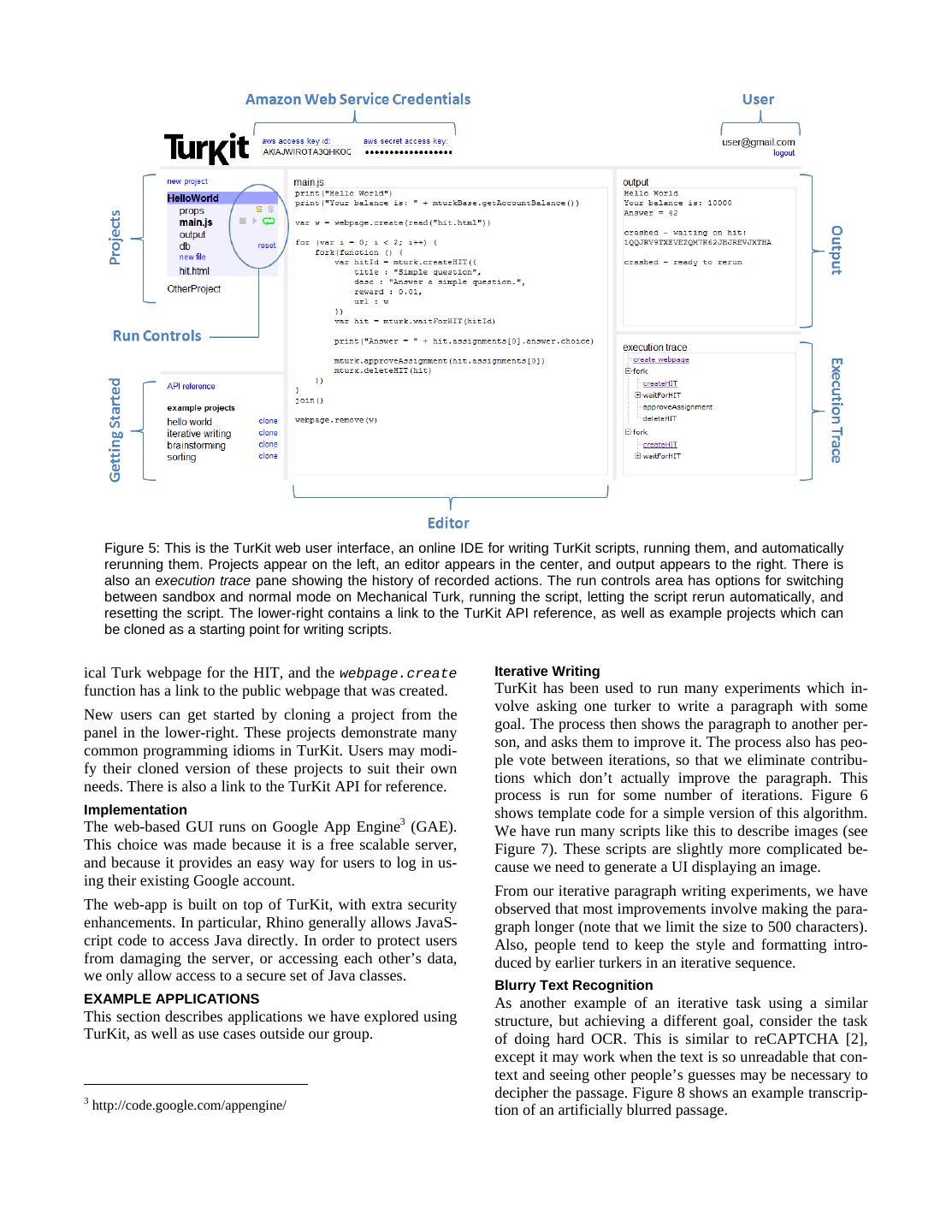

6 . Figure 5: This is the TurKit web user interface, an online IDE for writing TurKit scripts, running them, and automatically rerunning them. Projects appear on the left, an editor appears in the center, and output appears to the right. There is also an execution trace pane showing the history of recorded actions. The run controls area has options for switching between sandbox and normal mode on Mechanical Turk, running the script, letting the script rerun automatically, and resetting the script. The lower-right contains a link to the TurKit API reference, as well as example projects which can be cloned as a starting point for writing scripts. ical Turk webpage for the HIT, and the webpage.create Iterative Writing function has a link to the public webpage that was created. TurKit has been used to run many experiments which in- volve asking one turker to write a paragraph with some New users can get started by cloning a project from the goal. The process then shows the paragraph to another per- panel in the lower-right. These projects demonstrate many son, and asks them to improve it. The process also has peo- common programming idioms in TurKit. Users may modi- ple vote between iterations, so that we eliminate contribu- fy their cloned version of these projects to suit their own tions which don’t actually improve the paragraph. This needs. There is also a link to the TurKit API for reference. process is run for some number of iterations. Figure 6 Implementation shows template code for a simple version of this algorithm. The web-based GUI runs on Google App Engine3 (GAE). We have run many scripts like this to describe images (see This choice was made because it is a free scalable server, Figure 7). These scripts are slightly more complicated be- and because it provides an easy way for users to log in us- cause we need to generate a UI displaying an image. ing their existing Google account. From our iterative paragraph writing experiments, we have The web-app is built on top of TurKit, with extra security observed that most improvements involve making the para- enhancements. In particular, Rhino generally allows JavaS- graph longer (note that we limit the size to 500 characters). cript code to access Java directly. In order to protect users Also, people tend to keep the style and formatting intro- from damaging the server, or accessing each other’s data, duced by earlier turkers in an iterative sequence. we only allow access to a secure set of Java classes. Blurry Text Recognition EXAMPLE APPLICATIONS As another example of an iterative task using a similar This section describes applications we have explored using structure, but achieving a different goal, consider the task TurKit, as well as use cases outside our group. of doing hard OCR. This is similar to reCAPTCHA [2], except it may work when the text is so unreadable that con- text and seeing other people’s guesses may be necessary to 3 decipher the passage. Figure 8 shows an example transcrip- http://code.google.com/appengine/ tion of an artificially blurred passage.

7 . // generate a description of X // and iterate it N times var text = "" for (var i = 0; i < N; i++) { // generate new text var newText = mturk.prompt( "Please write/improve this paragraph describing " + X + ": " + text) // decide whether to keep it if (vote(“Which describes " + X + " better?", [text, newText]) == newText) { text = newText } Iteration 4: TV is* *festival ____ was *two *me ____ , *but ____ } *is ____ ____ TV ____ . I *two ____ tv ____ ____ ____ *festival , ____ I ____ ____ is* ____ it ____ *festival . Figure 6: Template for a simple iterative text im- Iteration 6: TV is supposed to be bad for you , but I ____ watching provement algorithm. some TV *shows . I think some TV shows are *really *advertising , and I ____ ____ is good for the ____ Iteration 12: TV is supposed to be bad for you , but I am watching some TV shows . I think some TV shows are really entertaining , and I think it is good to be entertained . Figure 8: Blurry text recognition. Errors are shown in red. The error in iteration 12 should be “like” in- stead of “am”, according to ground truth. decision problem where each person in a sequence must make a decision given information about the decision made by the previous person in the sequence. Dia wanted to test how well humans matched the theoretical optimal strategies for a particular decision problem: Iteration 1: Lightening strike in a blue sky near a tree and a building. Consider a sequence of N numbers, each chosen randomly Iteration 2: The image depicts a strike of fork lightening, striking a between -10 and 10. The goal of the participants is to guess blue sky over a silhoutted building and trees. (4/5 votes) whether the sum of the N numbers is positive or negative. Iteration 3: The image depicts a strike of fork lightning, against a Each person is provided two options, “negative” or “posi- blue sky with a few white clouds over a silhouetted building and trees. (5/5 votes) tive”, and sometimes a third option “I don’t know”. Person Iteration 4: The image depicts a strike of fork lightning, against a x in the sequence is given three pieces of information: blue sky- wonderful capture of the nature. (1/5 votes) Iteration 5: This image shows a large white strike of lightning com- the fact that they are person x in the sequence ing down from a blue sky with the tops of the trees and rooftop the xth number of the N numbers peaking from the bottom. (3/5 votes) Iteration 6: This image shows a large white strike of lightning com- the decision of the (x – 1)th person, if x > 1 ing down from a blue sky with the silhouettes of tops of the trees and rooftop peeking from the bottom. The sky is a dark blue and TurKit was used to simulate this setup using real humans the lightening is a contrasting bright white. The lightening has on Mechanical Turk, and run 50 trials of this problem for many arms of electricity coming off of it. (4/5 votes) two conditions: with and without the option “I don’t know”. The first condition replicated the findings of prior Figure 7: Iterative text improvement of an image. results which used classroom studies, and the second condi- tion found some interesting deviations in human behavior from the theoretical optimal strategy. We can see the guesses evolve over several iterations, and the final result is almost perfect. We have had good success Dia found TurKit helpful for coordinating the iterative na- getting turkers to translate difficult passages, though there ture of these experiments. However, she used an early ver- is room for improvement. For instance, if one turker early sion of TurKit, and had difficulty discovering the parallel- in the process makes poor guesses, these guesses can lead ization features in that version. subsequent turkers astray. Psychophysics Experimentation Phillip Isola, a PhD student in Brain and Cognitive Science, Decision Theory Experimentation is using TurKit to explore psychophysics. He is interested TurKit has been used to coordinate a user study in a Mas- in having turkers collaboratively sort, compare, and classify ter’s thesis outside our lab by Manal Dia: “On Decision various stimuli, in order to uncover salient dimensions in Making in Tandem Networks” [4]. The thesis presents a

8 .those stimuli. For instance, if turkers naturally sort a set of images from lightest to darkest, then we might guess that brightness is a salient dimension for classifying images. This work is related to the staircase-method in psychophys- ics, where experimenters may iteratively adjust stimuli until it is on the threshold of what a subject can perceive [3]. His current experiments involve using TurKit to run genetic algorithms where humans perform both the mutation and selection steps. For instance, he has evolved pleasant color Figure 9: Average time until the first assignment is palettes by having some turkers change various colors in completed after posting a HIT with 1, 2, 5, or 10 randomly generated palettes, and other turkers select the cents reward. Error bars show standard error. best from a small set of color palettes. He has also applied genetic algorithms to sorting. In one experiment, he shows users a list of animals, and asks them to reposition one of the animals in the list. Other users se- lect the best ordering from several candidates. Users are not told how they should sort the animals. In one instance, the result is an alphabetical sorting. Isola found TurKit to be the right tool for these tasks, since he needed to embed calls to MTurk in a larger algorithm. However, he also used an early version of TurKit, and had Figure 10: Time until the first assignment is com- difficulty discovering the parallelization features. This is- pleted for 2648 HITs with 1 cent reward. Five com- sue is discussed more in the Discussion section below. pleted within 10 seconds. PERFORMANCE EVALUATION This paper claims that the programming model is good for prototyping algorithmic tasks on MTurk, and that it sacri- fices efficiency for programming usability. One question to ask is whether the overhead is really as inconsequential as we claim, and where it breaks down. We consider a corpus of 20 TurKit experiments run over the past year, including: iterative writing, blurry text recog- nition, website clustering, brainstorming, and photo sorting. Figure 11: Time and space requirements for 20 These experiments paid turkers a total of $364.85 for TurKit scripts, given the number of HITs created by 29,731 assignments across 3,829 HITs. each script. Figure 9 and Figure 10 give a sense for how long tasks take to complete once they are posted on MTurk. Figure 9 this measure is more correlated to space and time require- shows the round-trip time for the first assignment to com- ments than “calls to once” or “assignments created”. Note plete after posting HITs with various payoffs. Part of this that for every HIT created, there is an average of 6 calls to time is spent waiting for turkers to accept each task, and the once, and 7.8 assignments created. rest is spent waiting for turkers to perform the work. Our The largest script in our corpus creates 956 HITs. It takes higher paying tasks are typically more difficult, so we ex- 10.6 seconds to rerun a full trace, and the database file is pect them to take longer to perform. However, if we sub- 7.1MBs. It takes Rhino 0.91 seconds to parse and load the tract this time, the chart still increases, meaning it takes database into memory, where the database expands to longer for turkers to start the higher paying tasks. One ex- 25.8MBs. planation is that turkers sort by reward, and 10-cent tasks are not on the first page of results. Another explanation is This means that waiting for a single human takes an order of magnitude longer than running most of our scripts, that turkers are looking for quick and easy tasks. which suggests that crash-and-rerun programming is suita- Figure 10 gives a better picture of the round-trip time-to- ble for many applications. The slowest script is faster than completion for the 1-cent tasks. The average is 4 minutes, 99% of our hit-completion times. Note that making the where 82% take between 30 seconds and 5 minutes. About script 10x slower would only be faster than 70% of hit- 0.1% complete within 10 seconds. The fastest is 7 seconds. completion times. For such a slow script, it may be worth Figure 11 gives a sense for how long TurKit scripts take to investigating options beyond the crash-and-rerun model. rerun given a fully recorded execution trace, in addition to DISCUSSION how much memory they consume. Both of these charts are We have iterated on TurKit for over a year, and received in terms of the number of HITs created by a script, since feedback from a number of users (four in our group, and

9 .two outside our group, noted above). This section discusses cannot be reused if the script changes. This means that us- what we’ve learned, including some limitations of TurKit, ers could not incrementally modify their code between runs and areas for future work. of a program, or use retroactive print-line debugging. Usability Parallel Programming Model The TurKit crash-and-rerun programming model makes it Parallel programming in the crash-and-rerun model is not easy to write simple scripts, but users have uncovered a completely general. For instance, we proposed a parallel number of usability issues. First, even when users know version of quicksort that performs the partition in parallel, that a script will be rerun many times, it is not obvious that and then sorts each sublist in parallel. However, it joins it needs to be deterministic. In particular, it is not clear that between partitioning the elements, and sorting the sublists. Math.random is dangerous, and must be wrapped in once. In theory, this is not necessary. Once we have a few ele- This led us to override Math.random with a wrapper that ments for a given sublist, we should be able to start sorting uses a random seed the first time the script executes, and it right away (provided that we chose a pivot from among uses the same seed on subsequent runs (until the database is the elements that we have so far). Doing so is possible in reset). TurKit by storing extra state information in the database, Users were also often unclear about which aspects of a but is infeasible using once, fork and join alone. TurKit script were stored in the execution trace, and which Experimental Replication parts could be modified or re-ordered. This was due primar- The crash-and-rerun programming model offers a couple of ily to the fact that many functions in TurKit call once in- interesting benefits for experimental replication using Me- ternally (such as createHIT and waitForHIT). We miti- chanical Turk. First, it is possible to give someone the gated this problem by adding a view of the execution trace source code for a completed experiment, along with the to the GUI, making clear which aspects of the script were database file. This allows them to rerun the experiment recorded. This also allows users to delete records from the without actually making calls to Mechanical Turk. In this execution trace for fine-grained control of their script. Do- way, people can investigate the methodology of an experi- ing this before required advanced knowledge of how the ment in great detail, and even introduce print-line state- trace was stored in the database. ments retroactively to reveal more information. Finally, many early TurKit users did not know about the Second, users can use the source code alone to rerun the parallel features of TurKit. Multiple users asked to be able experiment. This provides an exciting potential for experi- to create multiple HITs in a single run, and were surprised mental replication where human subjects are involved, to learn that they already could. The relevant function used since the experimental design is encoded as a program. We to be called attempt, a poor naming choice based on im- post most of our experiments on the Deneme4 blog, along plementation details, rather than the user’s mental model. with the TurKit code and database needed to rerun them. We renamed this function to fork. We also added join, RELATED WORK since most uses of the original attempt function would Programming Model employ code to check that all of the attempts had been suc- Crash-and-rerun programming is related to early work on cessful before moving on. reversible execution [11], as well as more recent work on Scalability the Java Whyline which can answer causality questions The crash-and-rerun model favors usability over efficiency, about a program after it has already executed [10]. Our but does so at an inherent cost in scalability. Whereas a implementation is more light weight, and does not require conventional program could create HITs and wait for them instrumenting a virtual machine. Crash-and-rerun pro- in an infinite loop, a crash-and-rerun program cannot. The gramming is also similar to web application programming. crash-and-rerun program will need to rerun all previous Web servers typically generate HTML for the user and then iterations of the loop every time it re-executes, and eventu- “crash” (forget their state) until the next request. The server ally the space required to store this list of actions in the preserves state between requests in a database. The differ- database will be too large. Alternatively, the time it takes to ence is that crash-and-rerun programming uses an impera- replay all of these actions will grow longer than the time it tive programming model, whereas web applications must takes to wait for a HIT to complete, in which case it may be be written using an event-driven state-machine model. better to poll inside the script, rather than rerun it. Some innovative web application frameworks allow for an One way to overcome this barrier is to use continuations imperative model, including Struts Flow5 and stateful djan- and coroutines. Rhino supports first-class continuations, go6. These and similar systems serialize continuations be- which provide the ability to save and serialize the state of a tween requests in order to preserve state, which means they running script, even along multiple paths of execution. do not share many of the important benefits of crash-and- Continuations could be saved after all important calls (like rerun programming, including incremental programming createHIT), and a try-catch block around the entire script would catch any exceptions and store all the continuations 4 http://bit.ly/deneme-blog in a database. The main drawback of this approach is that a 5 http://struts.apache.org/struts-sandbox/struts-flow/index.html 6 serialized continuation includes the code of the script, so it http://code.google.com/p/django-stateful/

10 .and retroactive debugging. This is less of an issue for web and members of the UID group. This work was supported services since the preserved state generally deals with a in part by Xerox, by the National Science Foundation under single user over a small time-span, whereas TurKit scripts award number IIS-0447800, by Quanta Computer as part of may involve hundreds of people over several days. the TParty project, and by the MIT Center for Collective Human Computation Intelligence. Any opinions, findings, conclusions or rec- Human computation systems generally involve many ommendations expressed in this publication are those of the workers making small contributions toward a goal. Quinn authors and do not necessarily reflect the views of the and Bederson give a good overview of distributed human sponsors. computation systems [16]. Individual systems have also REFERENCES been studied and explored in academic literature, including 1. Luis von Ahn. Games With A Purpose. IEEE Computer Mag- Games with a Purpose [1], Wikipedia [9] [15], and Me- azine, June 2006. Pages 96-98. chanical Turk [7] [8] [12] [13] [14]. 2. Luis von Ahn, Ben Maurer, Colin McMillen, David Abraham and Manuel Blum. reCAPTCHA: Human-Based Character Human Computation Algorithms Recognition via Web Security Measures. Science, September Many human computation systems are embarrassingly par- 12, 2008. pp 1465-1468. allel, where tasks to not depend on each other. Human 3. Cornsweet, T.N. The Staircase-Method in Psychophysics. The computation algorithms involve more complicated orches- American Journal of Psychology, Vol. 75, No. 3 (Sep., 1962), tration of human effort, where workers build on each oth- pp. 485-491 er’s work. Kosorukoff uses humans in genetic algorithms 4. Manal A. Dia. "On Decision Making in Tandem Networks". [11]. Wikipedia itself may be viewed as a human computa- M.Eng. Thesis at MIT. 2009. 5. Dai, P., Mausam, Weld, D.S. Decision-Theoretic Control of tion algorithm. Each article involves many humans adding, Crowd-Sourced Workflows. AAAI 2010. improving and moderating content. 6. Feldman, S. I. and Brown, C. B. 1988. IGOR: a system for TurKit is a toolkit for exploring human computation algo- program debugging via reversible execution. In Proceedings rithms. Human genetic algorithms, and processes within of the 1988 ACM SIGPLAN and SIGOPS Workshop on Pa- Wikipedia can be encoded as TurKit scripts and tested on rallel and Distributed Debugging. Mechanical Turk. The applications and algorithms present- 7. Heer, J., Bostock, M. Crowdsourcing Graphical Perception: Using Mechanical Turk to Assess Visualization Design. CHI ed in this paper are merely first attempts at exploring this 2010. space. Already Dai, Mausam and Weld propose decision- 8. Kittur, A., Chi, E. H., and Suh, B. 2008. Crowdsourcing user theoretic improvements to algorithms proposed in this pa- studies with MTurk. CHI 2008. per [5], which we could encode and test empirically using 9. Kittur, A. and Kraut, R. E. 2008. Harnessing the wisdom of TurKit. crowds in wikipedia: quality through coordination. CSCW CONCLUSION '08. ACM, New York, NY, 37-46 10. Ko, A. J. and Myers, B. A. Finding causes of program output TurKit is a toolkit for exploring human computation algo- with the Java Whyline. CHI 2009. rithms on Mechanical Turk. We introduce the crash-and- 11. Kosorukoff A. Human based genetic algorithm. IlliGAL re- rerun programming model for writing fault-tolerant scripts. port no. 2001004. 2001, University of Illinois, Urbana- Using this model, TurKit allows users to write algorithms Champaign. in a straight-forward imperative programming style, ab- 12. Mason, W. and Watts, D. J. Financial incentives and the "per- stracting Mechanical Turk as a function call. We present a formance of crowds". KDD-HCOMP 2009. variety of applications for TurKit, including real-world use 13. Snow, R., O'Connor, B., Jurafsky, D., and Ng, A. Y. Cheap cases from outside our lab. and fast---but is it good?: evaluating non-expert annotations for natural language tasks. EMNLP 2008. The online version of TurKit is available now, as well as 14. Sorokin, A. and D. Forsyth, "Utility data annotation with Am- the source code: turkit-online.appspot.com. In addition to azon MTurk," Computer Vision and Pattern Recognition enhancing the TurKit UI and API, we are actively using Workshops, Jan 2008. TurKit to continue exploring the field of human computa- 15. Susan L. Bryant, et al. Becoming Wikipedian: transformation tion algorithms as future work. of participation in a collaborative online encyclopedia. GROUP 2005. ACKNOWLEDGMENTS 16. Quinn, A. J., Bederson, B. B. A Taxonomy of Distributed We would like to thank everyone who contributed to this Human Computation. Technical Report HCIL-2009-23 (Uni- work, including Mark Ackerman, Michael Bernstein, Jef- versity of Maryland, College Park, 2009). frey P. Bigham, Thomas W. Malone, Robert Laubacher, Manal Dia, Phillip Isola, everyone who has tried TurKit, The columns on the last page should be of approximately equal length.