- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

ORPHEUSDB: Bolt-on Versioning for Relational Databases

展开查看详情

1 . O RPHEUS DB: Bolt-on Versioning for Relational Databases Silu Huang1 , Liqi Xu1 , Jialin Liu1 , Aaron J. Elmore2 , Aditya Parameswaran1 1 2 University of Illinois (UIUC) University of Chicago {shuang86,liqixu2,jialin2,adityagp}@illinois.edu aelmore@cs.uchicago.edu ABSTRACT storage, as well as manual supervision and maintenance to track Data science teams often collaboratively analyze datasets, gener- versions. As a worse alternative, they only store the most recent ating dataset versions at each stage of iterative exploration and versions—thereby losing the ability to retrieve the original datasets analysis. There is a pressing need for a system that can support or trace the provenance of the new versions. dataset versioning, enabling such teams to efficiently store, track, A concrete example of this phenomena occurs with biologists and query across dataset versions. We introduce O RPHEUS DB, a who operate on shared datasets, such as a protein-protein interac- dataset version control system that “bolts on” versioning capabili- tion dataset [41] or a gene annotation dataset [16], both of which ties to a traditional relational database system, thereby gaining the are rapidly evolving, by periodically checking out versions, per- analytics capabilities of the database “for free”. We develop and forming local analysis, editing, and cleaning operations, and com- evaluate multiple data models for representing versioned data, as mitting these versions into a branched network of versions. This well as a light-weight partitioning scheme, LYRE S PLIT, to further network of versions is also often repeatedly explored and queried optimize the models for reduced query latencies. With LYRE S PLIT, for global statistics and differences (e.g., the aggregate count of O RPHEUS DB is on average 103 × faster in finding effective (and protein-protein tuples with confidence in interaction greater than better) partitionings than competing approaches, while also reduc- 0.9, for each version) and for versions with specific properties (e.g., ing the latency of version retrieval by up to 20× relative to schemes versions with a specific gene annotation record, or versions with “a without partitioning. LYRE S PLIT can be applied in an online fash- bulk delete”, ones with more than 100 tuples deleted from their ion as new versions are added, alongside an intelligent migration parents). scheme that reduces migration time by 10× on average. While recent work has outlined a vision for collaborative data an- alytics and versioning [12], and has developed solutions for dataset versioning from the ground up [33, 13], these papers offer partial 1. INTRODUCTION solutions, require redesigning the entire database stack, and as such When performing data science, teams of data scientists repeat- cannot benefit from the querying capabilities that exist in current edly transform their datasets in many ways, by normalizing, clean- database systems. Similarly, while temporal databases [42, 21, 36, ing, editing, deleting, and updating one or more data items at a 27] offer functionality to revisit instances at various time intervals time; the New York Times defines data science as a step-by-step on a linear chain of versions, they do not support the full-fledged process of experimentation on data [5]. The dataset versions gener- branching and merging essential in a collaborative data analytics ated, often into the hundreds or thousands, are stored in an ad-hoc context, and the temporal functionalities offered and concerns are manner, typically via copying and naming conventions in shared very different. We revisit related work in Section 6. (networked) file systems [12]. This makes it impossible to effec- The question we ask in this paper is: can we have the best of tively manage, make sense of, or query across these versions. One both worlds—advanced querying capabilities, plus effective and alternative is to use a source code version control system like git efficient versioning in a mature relational database? More specifi- or svn to manage dataset versions. However, source code version cally, can traditional relational databases be made to support ver- control systems are both inefficient at storing unordered structured sioning for collaborative data analytics effectively and efficiently? datasets, and do not support advanced querying capabilities, e.g., To answer this question we develop a system, O RPHEUS DB1 , to querying for versions that satisfy some predicate, performing joins “bolt-on” versioning capabilities to a traditional relational database across versions, or computing some aggregate statistics across ver- system that is unaware of the existence of versions. By doing sions [12]. Therefore, when requiring advanced (SQL-like) query- so, we seamlessly leverage the analysis and querying capabilities ing capabilities, data scientists typically store each of the dataset that come “for free” with a database system, along with efficient versions as independent tables in a traditional relational database. versioning capabilities. Developing O RPHEUS DB comes with a This approach results in massive redundancy and inefficiencies in host of challenges, centered around the choice of the representa- tion scheme or the data model used to capture versions within a database, as well as effectively balancing the storage costs with the costs for querying and operating on versions: This work is licensed under the Creative Commons Attribution- Challenges in Representation. One simple approach of capturing NonCommercial-NoDerivatives 4.0 International License. To view a copy dataset versions would be to represent the dataset as a relation in of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/. For a database, and add an extra attribute corresponding to the version any use beyond those covered by this license, obtain permission by emailing 1 info@vldb.org. Orpheus is a musician and poet from ancient Greek mythology with the ability to Proceedings of the VLDB Endowment, Vol. 10, No. 10 raise the dead with his music, much like O RPHEUS DB has the ability to retrieve old Copyright 2017 VLDB Endowment 2150-8097/17/06. (“dead”) dataset versions on demand. 1130

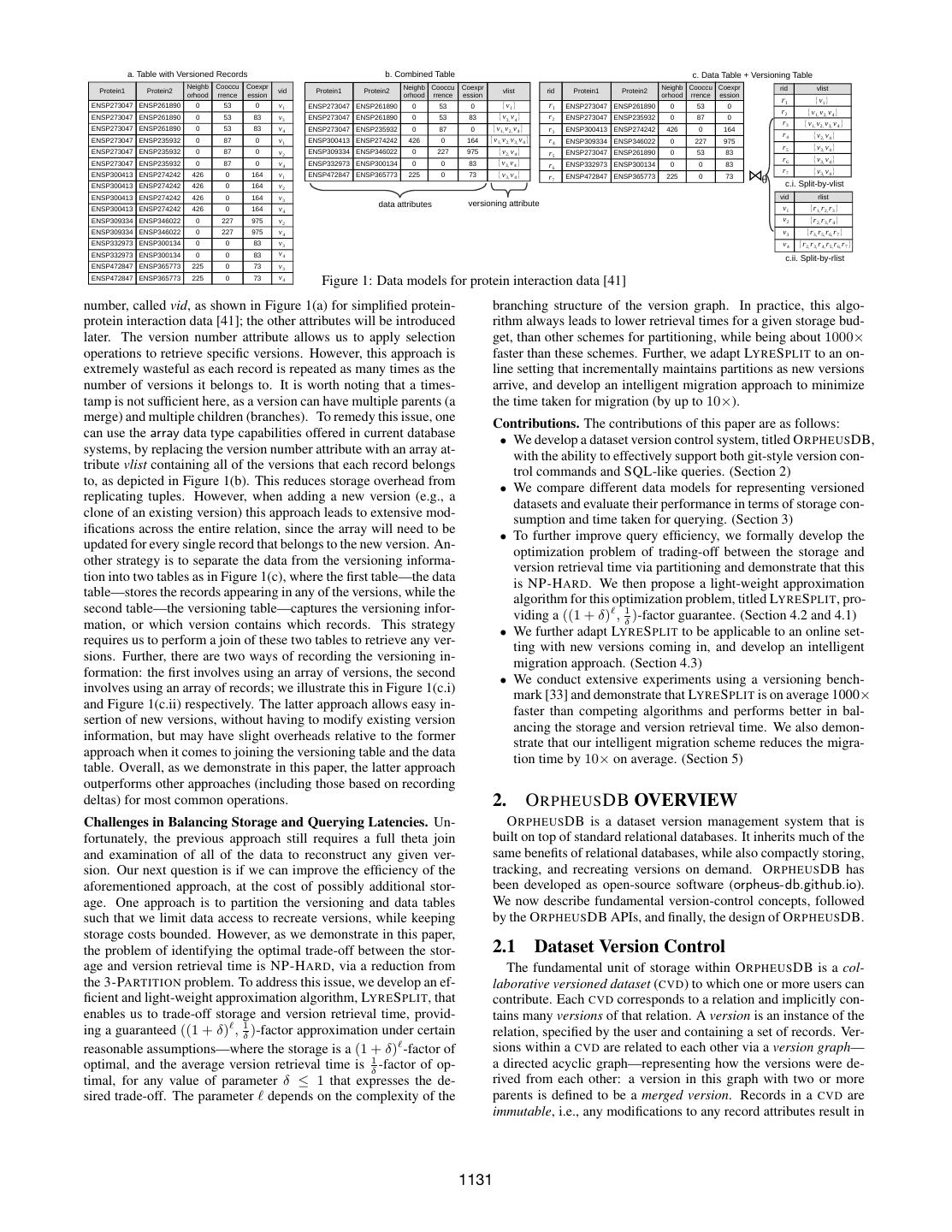

2 . a. Table with Versioned Records b. Combined Table c. Data Table + Versioning Table Neighb Cooccu Coexpr Neighb Cooccu Coexpr Neighb Cooccu Coexpr rid vlist Protein1 Protein2 vid Protein1 Protein2 vlist rid Protein1 Protein2 orhood rrence ession orhood rrence ession orhood rrence ession r1 {v 1 } ENSP273047 ENSP261890 0 53 0 v1 ENSP273047 ENSP261890 0 53 0 {v 1 } r1 ENSP273047 ENSP261890 0 53 0 r2 {v 1, v 2, v 4 } ENSP273047 ENSP261890 0 53 83 v3 ENSP273047 ENSP261890 0 53 83 {v 3, v 4 } r2 ENSP273047 ENSP235932 0 87 0 r3 {v 1, v 2, v 3, v 4 } ENSP273047 ENSP261890 0 53 83 v4 ENSP273047 ENSP235932 0 87 0 {v 1, v 2, v 4 } r3 ENSP300413 ENSP274242 426 0 164 r4 {v 2, v 4 } ENSP273047 ENSP235932 0 87 0 v1 ENSP300413 ENSP274242 426 0 164 {v 1, v 2, v 3, v 4 } r4 ENSP309334 ENSP346022 0 227 975 r5 {v 3, v 4 } ENSP273047 ENSP235932 0 87 0 v2 ENSP309334 ENSP346022 0 227 975 {v 2, v 4 } r5 ENSP273047 ENSP261890 0 53 83 {v 3, v 4 } r6 {v 3, v 4 } ENSP273047 ENSP235932 0 87 0 v4 ENSP332973 ENSP300134 0 0 83 r6 ENSP332973 ENSP300134 0 0 83 r7 {v 3, v 4 } ENSP300413 ENSP274242 426 0 164 v1 ENSP472847 ENSP365773 225 0 73 {v 3, v 4 } r7 ENSP472847 ENSP365773 225 0 73 ENSP300413 ENSP274242 426 0 164 v2 c.i. Split-by-vlist ENSP300413 ENSP274242 426 0 164 v3 vid rlist data attributes versioning attribute ENSP300413 ENSP274242 426 0 164 v4 v1 {r 1, r 2, r 3 } ENSP309334 ENSP346022 0 227 975 v2 v2 {r 2, r 3, r 4 } ENSP309334 ENSP346022 0 227 975 v4 v3 {r 3, r 5, r 6, r 7 } ENSP332973 ENSP300134 0 0 83 v3 v4 {r 2, r 3, r 4, r 5, r 6, r 7 } ENSP332973 ENSP300134 0 0 83 v4 c.ii. Split-by-rlist ENSP472847 ENSP365773 225 0 73 v3 ENSP472847 ENSP365773 225 0 73 v4 Figure 1: Data models for protein interaction data [41] number, called vid, as shown in Figure 1(a) for simplified protein- branching structure of the version graph. In practice, this algo- protein interaction data [41]; the other attributes will be introduced rithm always leads to lower retrieval times for a given storage bud- later. The version number attribute allows us to apply selection get, than other schemes for partitioning, while being about 1000× operations to retrieve specific versions. However, this approach is faster than these schemes. Further, we adapt LYRE S PLIT to an on- extremely wasteful as each record is repeated as many times as the line setting that incrementally maintains partitions as new versions number of versions it belongs to. It is worth noting that a times- arrive, and develop an intelligent migration approach to minimize tamp is not sufficient here, as a version can have multiple parents (a the time taken for migration (by up to 10×). merge) and multiple children (branches). To remedy this issue, one Contributions. The contributions of this paper are as follows: can use the array data type capabilities offered in current database • We develop a dataset version control system, titled O RPHEUS DB, systems, by replacing the version number attribute with an array at- with the ability to effectively support both git-style version con- tribute vlist containing all of the versions that each record belongs trol commands and SQL-like queries. (Section 2) to, as depicted in Figure 1(b). This reduces storage overhead from • We compare different data models for representing versioned replicating tuples. However, when adding a new version (e.g., a datasets and evaluate their performance in terms of storage con- clone of an existing version) this approach leads to extensive mod- sumption and time taken for querying. (Section 3) ifications across the entire relation, since the array will need to be • To further improve query efficiency, we formally develop the updated for every single record that belongs to the new version. An- optimization problem of trading-off between the storage and other strategy is to separate the data from the versioning informa- version retrieval time via partitioning and demonstrate that this tion into two tables as in Figure 1(c), where the first table—the data is NP-H ARD. We then propose a light-weight approximation table—stores the records appearing in any of the versions, while the algorithm for this optimization problem, titled LYRE S PLIT, pro- second table—the versioning table—captures the versioning infor- viding a ((1 + δ) , 1δ )-factor guarantee. (Section 4.2 and 4.1) mation, or which version contains which records. This strategy • We further adapt LYRE S PLIT to be applicable to an online set- requires us to perform a join of these two tables to retrieve any ver- ting with new versions coming in, and develop an intelligent sions. Further, there are two ways of recording the versioning in- migration approach. (Section 4.3) formation: the first involves using an array of versions, the second • We conduct extensive experiments using a versioning bench- involves using an array of records; we illustrate this in Figure 1(c.i) mark [33] and demonstrate that LYRE S PLIT is on average 1000× and Figure 1(c.ii) respectively. The latter approach allows easy in- faster than competing algorithms and performs better in bal- sertion of new versions, without having to modify existing version ancing the storage and version retrieval time. We also demon- information, but may have slight overheads relative to the former strate that our intelligent migration scheme reduces the migra- approach when it comes to joining the versioning table and the data tion time by 10× on average. (Section 5) table. Overall, as we demonstrate in this paper, the latter approach outperforms other approaches (including those based on recording deltas) for most common operations. 2. O RPHEUS DB OVERVIEW Challenges in Balancing Storage and Querying Latencies. Un- O RPHEUS DB is a dataset version management system that is fortunately, the previous approach still requires a full theta join built on top of standard relational databases. It inherits much of the and examination of all of the data to reconstruct any given ver- same benefits of relational databases, while also compactly storing, sion. Our next question is if we can improve the efficiency of the tracking, and recreating versions on demand. O RPHEUS DB has aforementioned approach, at the cost of possibly additional stor- been developed as open-source software (orpheus-db.github.io). age. One approach is to partition the versioning and data tables We now describe fundamental version-control concepts, followed such that we limit data access to recreate versions, while keeping by the O RPHEUS DB APIs, and finally, the design of O RPHEUS DB. storage costs bounded. However, as we demonstrate in this paper, the problem of identifying the optimal trade-off between the stor- 2.1 Dataset Version Control age and version retrieval time is NP-H ARD, via a reduction from The fundamental unit of storage within O RPHEUS DB is a col- the 3-PARTITION problem. To address this issue, we develop an ef- laborative versioned dataset (CVD) to which one or more users can ficient and light-weight approximation algorithm, LYRE S PLIT, that contribute. Each CVD corresponds to a relation and implicitly con- enables us to trade-off storage and version retrieval time, provid- tains many versions of that relation. A version is an instance of the ing a guaranteed ((1 + δ) , 1δ )-factor approximation under certain relation, specified by the user and containing a set of records. Ver- reasonable assumptions—where the storage is a (1 + δ) -factor of sions within a CVD are related to each other via a version graph— optimal, and the average version retrieval time is 1δ -factor of op- a directed acyclic graph—representing how the versions were de- timal, for any value of parameter δ ≤ 1 that expresses the de- rived from each other: a version in this graph with two or more sired trade-off. The parameter depends on the complexity of the parents is defined to be a merged version. Records in a CVD are immutable, i.e., any modifications to any record attributes result in 1131

3 .a new record, and are stored and treated separately within the CVD. table to the parent versions. If any records were added or modified Overall, there is a many-to-many relationship between records and these records are treated as new records and added to the CVD. An versions: each record can belong to many versions, and each ver- alternative is to compare the new records with all of the existing sion can contain many records. Each version has a unique version records in the CVD to check if any of the new records have existed id, vid, and each record has its unique record id, rid. The record ids in any version in the past, which would take longer to execute. At are used to identify immutable records within the CVD and are not the same time, the latter approach would identify records that were visible to end-users of O RPHEUS DB. In addition, the relation cor- deleted then re-added later. Since we believe that this is not a com- responding to the CVD may have primary key attribute(s); this im- mon case, we opt for the former approach, which would only lead plies that for any version no two records can have the same values to modest additional storage at the cost of much less computation for the primary key attribute(s). O RPHEUS DB can support multiple during commit. We call this the no cross-version diff implementa- CVDs at a time. However, in order to better convey the core ideas tion rule. Lastly, if the schema of the table that is being committed of O RPHEUS DB, in the rest of the paper, we focus our discussion is different from the CVD it derived from, we alter the CVD to in- on a single CVD. corporate the new schema; we discuss this in Section 3.3. In order to support data science workflows, we additionally sup- 2.2 O RPHEUS DB APIs port the use of checkout and commit into and from csv (comma sep- Users interact with O RPHEUS DB via the command line, using arated value) files via slightly different flags: -f for csv instead of both SQL queries, as well as git-style version control commands. -t. The csv file can be processed in external tools and programming In our companion demo paper, we also describe an interactive user languages such as Python or R, not requiring that users perform the interface depicting the version graph, for users to easily explore modifications and analysis using SQL. However, during commit, and operate on dataset versions [44]. To make modifications to ver- the user is expected to also provide a schema file via a -s flag so sions, users can either use SQL operations issued to the relational that O RPHEUS DB can make sure that the columns are mapped in database that O RPHEUS DB is built on top of, or can alternatively the correct manner. Internally, O RPHEUS DB also tracks the name operate on them using programming or scripting languages. We of the csv file as being derived from one or more versions of the begin by describing the version control commands. CVD , just like it does with the materialized tables. Version control commands. Users can operate on CVDs much like In addition to checkout and commit, O RPHEUS DB also supports they would with source code version control. The first operation is other commands, described very briefly here: (a) diff: a standard checkout: this command materializes a specific version of a CVD differencing operation that compares two versions and outputs the as a newly created regular table within a relational database that records in one but not the other. (b) init: initialize either an ex- O RPHEUS DB is connected to. The table name is specified within ternal csv file or a database table as a new CVD in O RPHEUS DB. the checkout command, as follows: (c) create_user, config, whoami: allows users to register, login, and checkout [cvd] -v [vid] -t [table name] view the current user name. (d) ls, drop: list all the CVDs or drop a particular CVD. (e) optimize: as we will see later, O RPHEUS DB Here, the version with id vid is materialized as a new table [table can benefit from intelligent incremental partitioning schemes (en- name] within the database, to which standard SQL statements can abling operations to process much less data). Users can setup the be issued, and which can later be added to the CVD as a new ver- corresponding parameters (e.g., storage threshold, tolerance factor, sion. The version from which this table was derived (i.e., vid) is described later) via the command line; the O RPHEUS DB backend referred to as the parent version for the table. will periodically invoke the partitioning optimizer to improve the Instead of materializing one version at a time, users can mate- versioning performance. rialize multiple versions, by listing multiple vids in the command above, essentially merging multiple versions to give a single table. SQL commands. O RPHEUS DB supports the use of SQL com- When merging, the records in the versions are added to the table in mands on CVDs via the command line using the run command, the precedence order listed after -v: for any record being added, if which either takes a SQL script as input or the SQL statement as a another record with the same primary key has already been added, string. Instead of materializing a version (or versions) as a table via it is omitted from the table. This ensures that the eventual ma- the checkout command and explicitly applying SQL operations on terialized table also respects the primary key property. There are that table, O RPHEUS DB also allows users to directly execute SQL other conflict-resolution strategies, such as letting users resolve queries on a specific version, using special keywords VERSION, conflicted records manually; for simplicity, we use a precedence OF, and CVD via syntax based approach. Internally, the checkout command records the ver- SELECT ... FROM VERSION [vid] OF CVD [cvd], ... sions that this table was derived from, along with the table name. Note that only the user who performed the checkout operation is without having to materialize it. Further, by using renaming, users permitted access to the materialized table, so they can perform any can operate directly on multiple versions (each as a relation) within analysis and modification on this table without interference from a single SQL statement, enabling operations such as joins across other users, only making these modifications visible when they use multiple versions. the commit operation, described next. However, listing each version individually as described above The commit operation adds a new version to the CVD, by mak- may be cumbersome for some types of queries that users wish to ing the local changes made by the user on their materialized table run, e.g., applying an aggregate across a collection of versions, visible to others. The commit command has the following format: or identifying versions that satisfy some property. For this, O R - commit -t [table name] -m [commit message] PHEUS DB also supports constructs that enable users to issue ag- The command does not need to specify the intended CVD since O R - gregate queries across CVDs grouped by version ids, or select ver- PHEUS DB internally maintains a mapping between the table name sion ids that satisfy certain constraints. Internally, these constructs and the original CVD. In addition, since the versions that the table are translated into regular SQL queries that can be executed by the was derived from originally during checkout are internally known underlying database system. In addition, O RPHEUS DB provides to O RPHEUS DB, the table is added to the CVD as a new version shortcuts for several types of queries that operate on the version with those versions as parent versions. During the commit opera- graph, e.g., listing the descendant or ancestors of a specific ver- tion, O RPHEUS DB compares the (possibly) modified materialized sion, or querying the metadata, e.g., identify the last modification 1132

4 .(in time) to the CVD. The details of the query syntax, translation, as For example, the tuple of <ENSP273047, ENSP261890, 0, 53, 83> well as examples can be found in our companion demo paper [44]. in Figure 1(a) exists in two versions: v3 and v4 . (Note that even though <protein1, protein2> is the primary key, it is only the pri- 2.3 System Architecture mary key for any single version and not across all versions.) How- SQL Version Control ever, this approach implies that we would need to duplicate each Command Command SQL Command Client record as many times as the number of versions it is in, leading to severe storage overhead due to redundancy, as well as inefficiency Query Translator Access Controller Partition Optimizer for several operations, including checkout and commit. We focus on alternative approaches that are more space efficient and dis- Record Manager Version Manager Provenance Manager cuss how they can support the two most fundamental operations— SQLs commit and checkout—on a single version at a time. Considera- Translation Layer tions for multiple version checkout is similar to that for a single Database Communicator DBMS version; our findings generalize to that case as well. Approach 1: The Combined Table Approach. Our first approach Partition Checkout Tables CVDs Information of representing the data and versioning information for a CVD is the combined table approach. As before, we augment the schema with Figure 2: O RPHEUS DB Architecture an additional versioning attribute, but now, the versioning attribute We implement O RPHEUS DB as a middleware layer or wrapper is of type array and is named vlist (short for version list) as shown between end-users (or application programs) and a traditional re- in Figure 1(b). For each record the vlist is the ordered list of ver- lational database system—in our case, PostgreSQL. PostgreSQL sion ids that the record is present in, which serves as an inverted is completely unaware of the existence of versioning, as version- index for each record. Returning to our example, there are two ver- ing is handled entirely within the middleware. Figure 2 depicts the sions of records corresponding to <ENSP273047, ENSP261890>, overall architecture of O RPHEUS DB. O RPHEUS DB consists of six with coexpression 0 and 83 respectively—these two versions are core modules: the query translator is responsible for parsing in- depicted as the first two records, with an array corresponding to v1 put and translating it into SQL statements understandable by the for the first record, and v3 and v4 for the second. underlying database system; the access controller monitors user Even though array is a non-atomic data type, it is commonly sup- permissions to various tables and files within O RPHEUS DB; the ported in many database systems [8, 3, 1]; thus O RPHEUS DB can partition optimizer is responsible for periodically reorganizing and be built with any of these systems as the back-end database. As our optimizing the partitions via a partitioning algorithm LYRE S PLIT implementation uses PostgreSQL, we focus on this system for the along with a migration engine to migrate data from one partition- rest of the discussion, even though similar considerations apply to ing scheme to another, and is the focus of Section 4; the record the rest of the databases listed. manager is in charge of recording and retrieving information about For the combined table approach, committing a new version to records in CVDs; the version manager is in charge of recording the CVD is time-consuming due to the expensive append operation and retrieving versioning information, including the rids each ver- for every record present in the new version. Consider the scenario sion contains as well as the metadata for each version; and the where the user checks out version vi into a materialized table T provenance manager is responsible for the metadata of uncommit- and then immediately commits it back as a new version vj . The ted tables or files, such as their parent version(s) and the creation query translator parses the user commands and generates the cor- time. At the backend, a traditional DBMS, we maintain CVDs that responding SQL queries for checkout and commit as shown in Ta- consist of versions, along with the records they contain, as well as ble 1. When checking out vi into a materialized table T , the array metadata about versions. In addition, the underlying DBMS con- containment operator ‘ARRAY[vi ] <@ vlist’ first examines whether tains a temporary staging area consisting of all of the materialized vi is contained in vlist for each record in CVD, then all records that tables that users can directly manipulate via SQL without going satisfy that condition are added to the materialized table T . Next, through O RPHEUS DB. Understanding how to best represent and when T is committed back to the CVD as a new version vj , for operate on these CVDs within the underlying DBMS is an impor- each record in the CVD, if it is also present in T (i.e., the WHERE tant challenge—this is the focus of the next section. clause), we append vj to the attribute vlist (i.e., vlist=vlist+vj ). In this case, since there are no new records that are added to the CVD, 3. DATA MODELS FOR CVDs no new records are added to the combined table. However, even In this section, we consider and compare methods to represent this process of appending vj to vlist can be expensive especially and operate on CVDs within a backend relational database, starting when the number of records in vj is large, as we will demonstrate. with the data within versions, and then the metadata about versions. Approach 2: The Split-by-vlist Approach. Our second approach addresses the limitations of the expensive commit operation for the 3.1 Versions and Data: The Models combined table approach. We store two tables, keeping the version- To explore alternative storage models, we consider the array- ing information separate from the data information, as depicted in based data models, shown in Figure 1, and compare them to a delta- Figure 1(c)—the data table and the versioning table. The data ta- based data model, which we describe later. The table(s) in Figure 1 ble contains all of the original data attributes along with an extra displays simplified protein-protein interaction data [41], and has a primary key rid, while the versioning table maintains the mapping composite primary key <protein1, protein2>, along with numerical between versions and rids. The rid attribute was not needed in the attributes indicating sources and strength of interactions: neighbor- previous approach since it was not necessary to associate identifiers hood represents how frequently the two proteins occur close to each with the immutable records. There are two ways we can store the other in runs of genes, cooccurrence reflects how often the two pro- versioning data. The first approach is to store the rid along with teins co-occur in the species, and coexpression refers to the level to the vlist, as depicted in Figure 1(c.i). We call this approach split- which genes are co-expressed in the species. by-vlist. Split-by-vlist has a similar SQL translation as combined- One approach, as described in the introduction, is to augment table for commit, while it incurs the overhead of joining the data the CVD’s relational schema with an additional versioning attribute. table with the versioning table for checkout. Specifically, we select 1133

5 . Table 1: SQL Queries for Checkout and Commit Commands with Different Data Models Command SQL Translation with combined-table SQL Translation with Split-by-vlist SQL Translation with Split-by-rlist SELECT * into T’ FROM dataTable, SELECT * into T’ FROM dataTable, (SELECT rid AS rid_tmp (SELECT unnest(rlist) AS rid_tmp SELECT * into T’ FROM T CHECKOUT FROM versioningTable FROM versioningTable WHERE ARRAY[vi ] <@ vlist WHERE ARRAY[vi ] <@ vlist) AS tmp WHERE vid = vi ) AS tmp WHERE rid = rid_tmp WHERE rid = rid_tmp UPDATE versioningTable UPDATE T SET vlist=vlist+vj INSERT INTO versioningTable SET vlist=vlist+vj COMMIT WHERE rid in VALUES (vj , WHERE rid in (SELECT rid FROM T’) ARRAY[SELECT rid FROM T’]) (SELECT rid FROM T’) the rids that are in the version to be checked out and store it in the 3.2 Versions and Data: The Comparison table tmp, followed by a join with the data table. We perform an experimental evaluation between the approaches Approach 3: The Split-by-rlist Approach. Alternatively, we can described in the previous section on storage size, and commit and organize the versioning table with a primary key as vid (version checkout time. We focus on the commit and checkout times since id), and another attribute rlist, containing the array of the records they are the primitive versioning operations on which the other present in that particular version, as in Figure 1(c.ii). We call this more complex operations and queries are built on. It is important approach the split-by-rlist approach. When committing a new ver- that these operations are efficient, because data scientists checkout sion vj from the materialized table T , we only need to add a single a version to start working on it immediately, and often commit a tuple in the versioning table with vid equal to vj , and rlist equal to version to have their changes visible to other data scientists who the list of record ids in T . This eliminates the expensive array may be waiting for them. appending operations that are part of the previous two approaches, In our evaluation, we use four versioning benchmark datasets making the commit command much more efficient. For the check- SCI_1M, SCI_2M, SCI_5M and SCI_8M, each with 1M , 2M , 5M out command for version vi , we first extract the record ids associ- and 8M records respectively, that will be described in detail in Sec- ated with vi from the versioning table, by applying the unnesting tion 5.1. For split-by-vlist, a physical primary key index is built on operation: unnest(rlist), following which we join the rids with the rid in both the data table and the versioning table; for split-by-rlist, data table to identify all of the relevant records. a physical primary key index is built on rid in the data table and on vid in the versioning table. When calculating the total storage So far, all our models support convenient rewriting of arbitrary size, we count the index size as well. Our experiment involves first and complex versioning queries into SQL queries understood by checking out the latest version vi into a materialized table T and the backend database; see details in our demo paper [44]. However, then committing T back into the CVD as a new version vj . We our delta-based model, discussed next, does not support convenient depict the experimental results in Figure 3. rewritings for some of the more advanced queries, e.g., “find ver- sions where the total count of tuples with protein1 as ENSP273047 Storage. From Figure 3(a), we can see that a-table-per-version is greater than 50”: in such cases, delta-based model essentially takes 10× more storage than the other data models. This is be- needs to recreate all of the versions, and/or perform extensive and cause each record exists on average in 10 versions. Compared to expensive computation outside of the database. Thus, even though a-table-per-version and combined-table, split-by-vlist and split-by- this model does not support advanced analytics capabilities “for rlist deduplicate the common records across versions and therefore free”, we include it in our comparison to contrast its performance have roughly similar storage. In particular, split-by-vlist and split- to the array-based models. by-rlist share the same data table, and thus the difference can be at- tributed to the difference in the size of the versioning table. For the Approach 4: Delta-based Approach. Here, each version records delta-based approach, the storage size is similar to or even slightly the modifications (or deltas) from its precedent version(s). Specifi- smaller than split-by-vlist and split-by-rlist. This is because our cally, each version is stored as a separate table, with an added tomb- versioning benchmark contains only a few deleted tuples (opting stone boolean attribute indicating the deletion of a record. In ad- instead for updates or inserts); in other cases, where deleted tuples dition, we maintain a precedent metadata table with a primary key are more prevalent, the storage in the delta-based approach is worse vid and an attribute base indicating from which version vid stores than split-by-vlist/rlist, since the deleted records will be repeated. the delta. When committing a new version vj , a new table stores the delta from its previous version vi . If vj has multiple parents, Commit. From Figure 3(b), we can see that the combined-table we will store vj as the modification from the parent that shares the and split-by-vlist take multiple orders of magnitude more time than largest common number of records with vj . (Storing deltas from split-by-rlist for commit. We also notice that the commit time when multiple parents would make reconstruction of a version compli- using combined-table is almost 104 s as the dataset size increases: cated, since we would need to trace back multiple paths in the ver- when using combined-table, we need to add vj to the attribute vlist sion graph. Here, we opt for the simpler solution.) A new record for each record in the CVD that is also present in T . Similarly, for is then inserted into the metadata table, with vid as vj and base as split-by-vlist, we need to perform an append operation for several vi . For the checkout command for version vi , we trace the version tuples in the versioning table. On the contrary, when using split- lineage (via the base attribute) all the way back to the root. If an by-rlist, we only need to add one tuple to the versioning table, thus incoming record has occurred before, it is discarded; otherwise, if getting rid of the expensive array appending operations. A-table- it is marked as “insert”, we insert it into the checkout table T . per-version also has higher latency for commit than split-by-rlist since it needs to insert all the records in T into the CVD. For the Approach 5: The A-Table-Per-Version Approach. Our final array- delta-based approach, the commit time is small since the new ver- based data model is impractical due to excessive storage, but is use- sion vj is exactly the same as its precedent version vi . It only needs ful from a comparison standpoint. In this approach, we store each to update the precedent metadata table, and create a new empty ta- version as a separate table. We include a-table-per-version in our ble. The commit time of the delta-based approach is not small in comparison; we do not include the approach in Figure 1a, contain- general when there are extensive modifications to T , as illustrated ing a table with duplicated records, since it would do similarly in by other experiments (not displayed); For instance, for a committed terms of storage and commit times to a-table-per-version, but worse in terms of checkout times. 1134

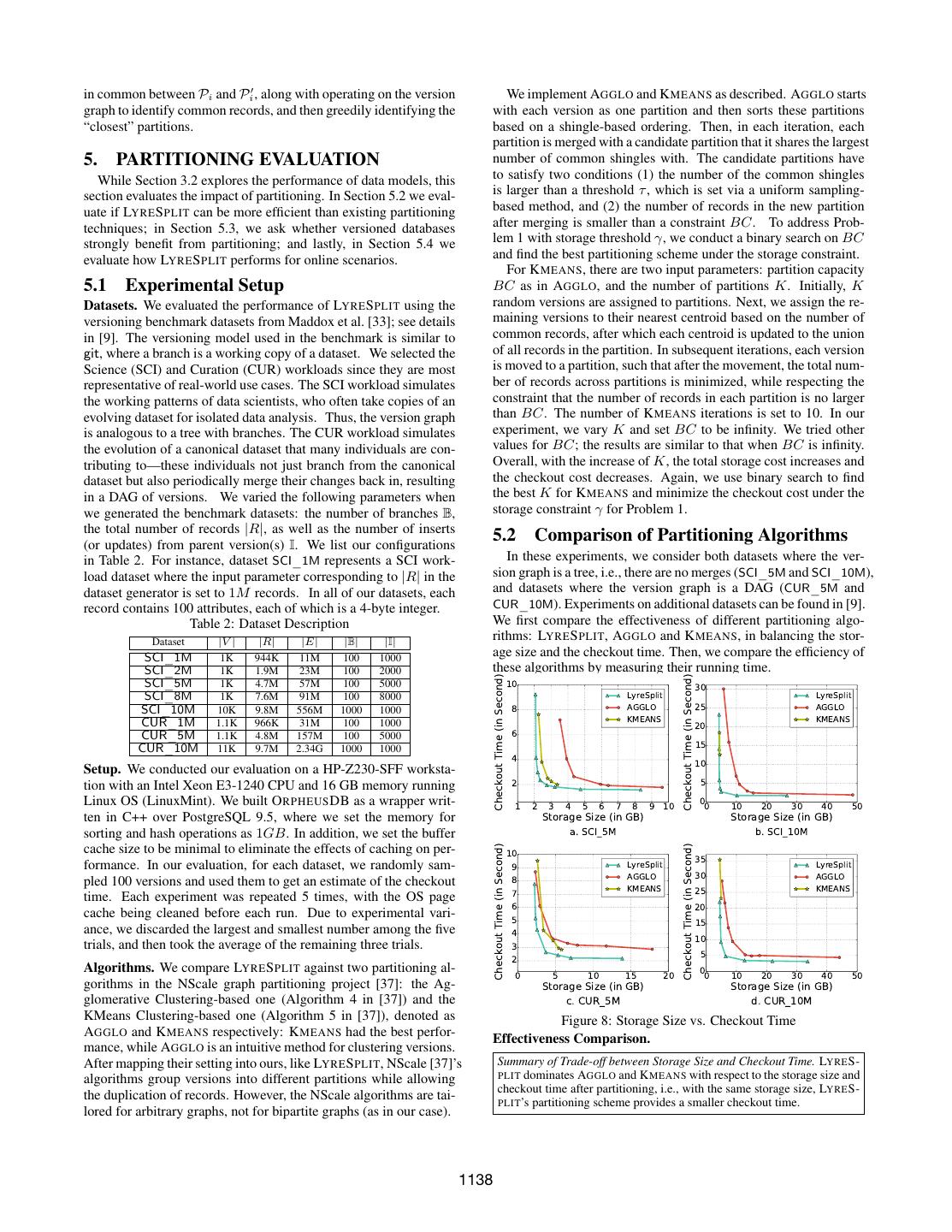

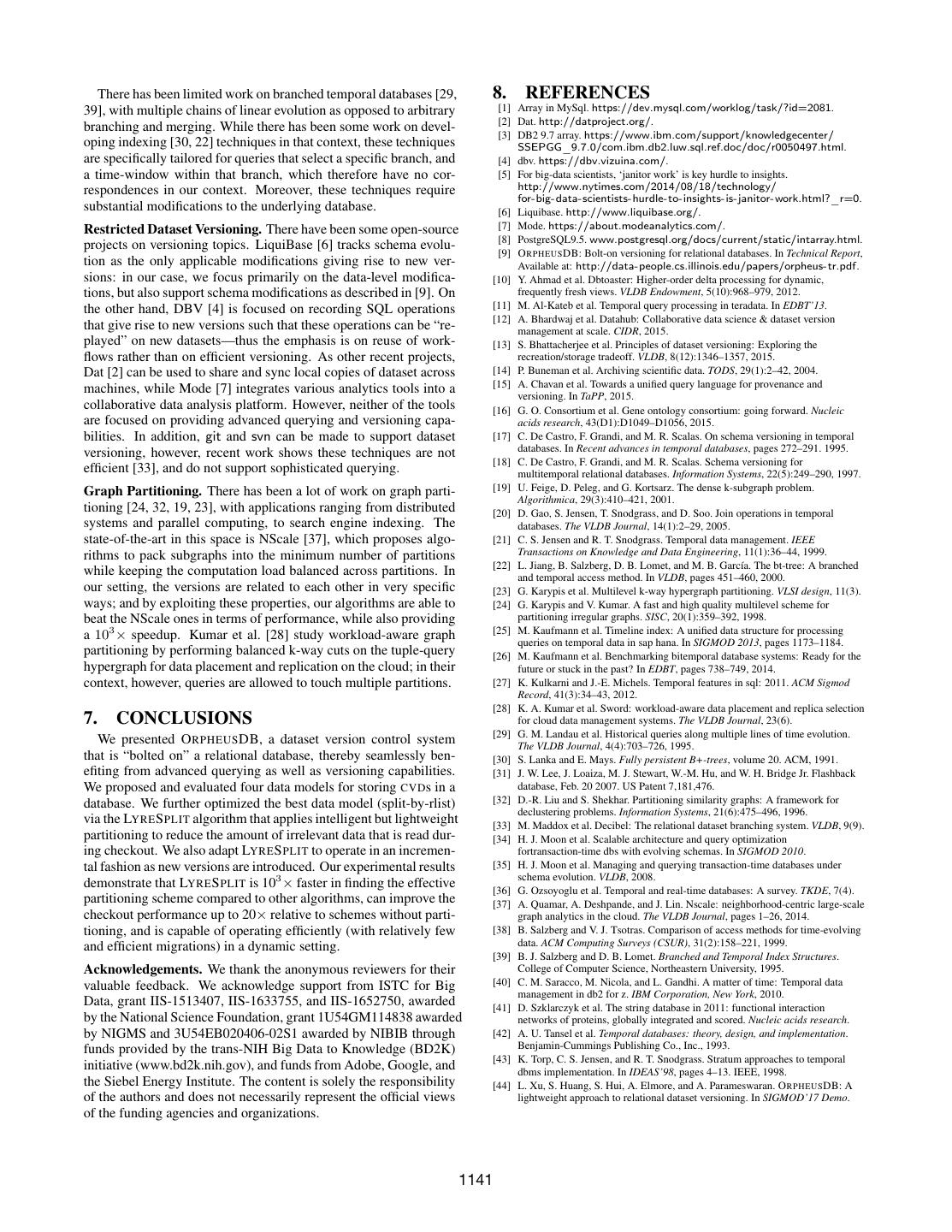

6 . A-table-per-version Combined-table Split-by-vlist Split-by-rlist Delta-based 40 104 60 Checkout Time (in Second) 35 Commit Time (in Second) 50 103 Storage Size (in GB) 30 25 40 20 102 30 15 20 101 10 5 10 100 0 SCI_1M SCI_2M SCI_5M SCI_8M SCI_1M SCI_2M SCI_5M SCI_8M 0 SCI_1M SCI_2M SCI_5M SCI_8M a. Storage Size Comparison b. Commit Time Comparison c. Checkout Time Comparison Figure 3: Comparison Between Different Data Models version with 250K records of which 30% of the records are modi- parent/child versions, creation time, commit time, a commit mes- fied, delta-based takes 8.16s, while split-by-rlist takes 4.12s. sage, and an array of attributes present in the version. Using the Checkout. From Figure 3 (c), we can see that split-by-rlist is a bit data contained in this table, users can easily query for the prove- faster than combined-table and split-by-vlist for checkout. Not sur- nance of versions and for other metadata. In addition, using the prisingly, a-table-per-version is the best for this operation since it attribute parents we can obtain each version’s derivation informa- simply requires retrieving all the records in a specific table (corre- tion and visualize it as directed acyclic graph that we call a version sponding to the desired version). Combined-table requires one full graph. Each node in the version graph is a version and each di- scan over the combined table to check whether each record is in ver- rected edge points from a version to one of its children version(s). sion vi . On the other hand, split-by-vlist needs to first scan the ver- An example is depicted in Figure 4(b), where version v2 and v3 are sioning table to retrieve the rids in version vi , and then join the rids merged into version v4 . with the data table. Lastly, split-by-rlist retrieves the rids in version vid parents checkoutT commitT msg attributes v1 3 2 1 vi using the primary key index on vid in the versioning table, and v1 NULL NULL t1 ... {a1, a2, a3, a 4, a6 } v2 t2 ... 3 v2 4 v3 then joins the rids with the data table. For both split-by-vlist and {v 1 } t3 {a1, a2, a3, a 4, a6 } split-by-rlist, we used a hash-join, which was the most efficient2 , v3 {v 1 } t2 t4 ... {a1, a2, a3, a 4, a6 } 3 4 where a hash table on rids is first built, followed by a sequential v 4 {v 2, v 3 } t5 t6 ... {a1, a2, a3, a 4, a6 } v4 6 scan on the data table by probing each record in the hash table. a. Metadata Table b. Version Graph Overall, combined-table, split-by-vlist, and split-by-rlist all require Figure 4: Metadata Table and Version Graph (Fixed Schema) a full scan on the combined table or the data table, and even though split-by-rlist introduces the overhead of building a hash table, it re- Schema Changes. During a commit, if the schema of the table duces the expensive array operation for containment checking as being committed is different from the schema of the CVD it was in combined-table and split-by-vlist. For the delta-based approach, derived from, we update the schema of CVD to incorporate the the checkout time is large since it needs to probe into a number changes. We describe details in our technical report [9]. All of our of tables, tracing all the way back to the root, remembering which array-based models can adapt to changes in the set of attributes: a records were seen. simple solution for new attributes is so use the ALTER command to add any new attributes to the model, assigning NULLs to the Takeaways. Overall, considering the space consumption, the com- records from the previous versions that do not possess these new mit and checkout time, plus the fact that delta-based models are in- attributes. Attribute deletions only require an update in the ver- efficient in supporting advanced queries as discussed in Section 3.1, sion metadata table. This simple mechanism is similar to the single we claim that split-by-rlist is preferable to the other data models in pool method proposed in a temporal schema versioning context by supporting versioning within a relational database. Thus, we pick De Castro et al. [17]. Compared to the multi pool method where split-by-rlist as our data model for representing CVDs. That said, any schema change results in the new version being stored sepa- from Figure 3(c), we notice that the checkout time for split-by-rlist rately, the single pool method has fewer records with duplicated grows with dataset size. For instance, for dataset SCI_8M with 8M attributes and therefore has less storage consumption overall. Even records in the data table, the checkout time is as high as 30 sec- though ALTER TABLE is indeed a costly operation, due to the onds. On the other hand, a-table-per-version has very low checkout partitioning schemes we describe later, we only need to ALTER a times on all datasets; it only needs to access the relevant records smaller partition of the CVD rather than a giant CVD, and conse- instead of all records as in split-by-rlist. This motivates the need quently the cost of an ALTER operation is substantially mitigated. for the partition optimizer module in O RPHEUS DB, which tries to In our technical report, we describe how our partitioning schemes attain the best of both worlds by adopting a hybrid representation (described next) can adapt to the single pool mechanism with com- of split-by-rlist and a-table-per-version, described in Section 4. parable guarantees; for ease of exposition, for the rest of this paper, we focus on the static schema case, which is still important and 3.3 Version Derivation Metadata challenging. There has been some work on developing schema ver- Version Provenance. As discussed in Section 2.3, the version sioning schemes [18, 35, 34] and we plan to explore these and other manager in O RPHEUS DB keeps track of the derivation relation- schema evolution mechanisms (including hybrid single/multi-pool ships among versions and maintains metadata for each version. methods) as future work. We store version-level provenance information in a separate table called the metadata table; Figure 4(a) depicts the metadata table for the example in Figure 1. It contains attributes including version id, 4. PARTITION OPTIMIZER 2 In this section, we introduce the concept of partitioning a CVD We also tried alternative join methods—the findings were unchanged; we will discuss by breaking up the data and versioning tables, in order to reduce this further in Section 4.1. We also tried using an additional secondary index for vlist for split-by-vlist which reduced the time for checkout but increased the time for the number of irrelevant records during checkout. All of our proofs commit even further. can be found in our technical report [9]. 1135

7 .4.1 Problem Overview across all partitions—this is the cost of checking out all of the ver- The Partitioning Notion. Let V = {v1 , v2 , ..., vn } be the n ver- sions, equivalent to n i=1 Ci . sions and R = {r1 , r2 , ..., rm } be the m records in a CVD. We can Formal Problem. Our two metrics S and Cavg interfere with each represent the presence of records in versions using a version-record other: if we want a small Cavg , then we need more storage, and if bipartite graph G = (V, R, E), where E is the set of edges—an we want the storage to be small, then Cavg will be large. Typically, edge between vi and rj exists if the version vi contains the record storage is under our control; thus, our problem can be stated as: rj . The bipartite graph in Figure 5(a) captures the relationships P ROBLEM 1 (M INIMIZE C HECKOUT C OST ). Given a stor- between records and versions in Figure 1. v1 r1 v1 r1 age threshold γ and a version-record bipartite graph G = (V, R, E), r2 r2 find a partitioning of G that minimizes Cavg such that S ≤ γ. Ρ1 v2 r3 v2 r3 We can show that Problem 1 is NP-H ARD using a reduction from r4 r4 the 3-PARTITION problem, whose goal is to decide whether a given r5 set of n integers can be partitioned into n3 sets with equal sum. 3- v3 v3 r5 PARTITION is known to be strongly NP-H ARD. r6 Ρ2 r6 v4 v4 T HEOREM 1. Problem 1 is NP- HARD. r7 r7 a. Bipartite Graph b. Illustration of Partitioning We now clarify one complication between our formalization so Figure 5: Version-Record Bipartite Graph & Partitioning far and our implementation. O RPHEUS DB uses the no cross-version diff rule: that is, while performing a commit operation, to minimize The goal of our partitioning problem is to partition G into smaller computation, O RPHEUS DB does not compare the committed ver- subgraphs, denoted as Pk . We let Pk = (Vk , Rk , Ek ), where Vk , sion against all of the ancestor versions, instead only comparing it Rk and Ek represent the set of versions, records and bipartite graph to its parents. Therefore, if some records are deleted and then re- edges in partition Pk respectively. Note that ∪k Ek = E, where E added later, these records would be assigned different rids, and are is the set of edges in the original version-record bipartite graph G. treated as different. As it turns out, Problem 1 is still NP-H ARD We further constrain each version in the CVD to exist in only one when the space of instances are those that can be generated when partition, while each record can be duplicated across multiple par- this rule is applied. For the rest of this section, we will use the for- titions. In this manner, we only need to access one partition when malization with the no cross-version diff rule in place, since that checking out a version, consequently simplifying the checkout pro- relates more closely to practice. cess by reducing the overhead from accessing multiple partitions. Thus, our partition problem is equivalent to partitioning V , such 4.2 Partitioning Algorithm that each partition (Pk ) stores all of the records corresponding to Given a version-record C avg all of the versions assigned to that partition. Figure 5(b) illustrates bipartite graph G = (V, R, E), single partition a possible partitioning strategy for Figure 5(a). Partition P1 con- |R| there are two extreme cases tains version v1 and v2 , while partition P2 contains version v3 and for partitioning. At one v4 . Note that records r2 , r3 and r4 are duplicated in P1 and P2 . n partitions extreme, we can minimize |E| Metrics. We consider two criteria while partitioning: the storage the checkout cost by stor- |V| S cost and the time for checkout. Recall that the time for commit is ing each version in the CVD |R| |E| fixed and small—see Figure 3(b), so we only focus on checkout. as one partition; there are in Figure 6: Extreme Schemes The overall storage costs involves the cost of storing all of the total K = |V | = n parti- n partitions of the data and the versioning table. However, we observe tions, and the storage cost is S = k=1 |Rk | = |E| and the that the versioning table simply encodes the bipartite graph, and as 1 n checkout cost is Cavg = n k=1 (|Vk ||Rk |) = |V |E| . At another | a result, its cost is fixed. Furthermore, since all of the records in extreme, we can minimize the storage by storing all versions in one the data table have the same (fixed) number of attributes, so instead single partition; the storage cost is S = |R| and Cavg = |R|. We of optimizing the actual storage we will optimize for the number of illustrate these schemes in Figure 6. records in the data table across all the partitions. Thus, we define the storage cost, S, to be the following: Version Graph Concept. Our goal in designing our partition- ing algorithm, LYRE S PLIT3 , is to trade-off between these two ex- K tremes. Instead of operating on the version-record bipartite graph, S= |Rk | (4.1) which may be very large, LYRE S PLIT operates on the much smaller k=1 version graph instead, which makes it a lot more lightweight. We Next, we note that the time taken for checking out version vi is denote a version graph as G = (V, E), where each vertex v ∈ V proportional to the size of the data table in the partition Pk that is a version and each edge e ∈ E is a derivation relationship. Note contains version vi , which in turn is proportional to the number of that V is essentially the same as V in the version-record bipartite records present in that data table partition. We theoretically and graph. An edge from vertex vi to a vertex vj indicates that vi is a empirically justify this in our technical report [9]. So we define the parent of vj ; this edge has a weight w(vi , vj ) equals the number checkout cost of a version vi , Ci , to be Ci = |Rk |, where vi ∈ Vk . of records in common between vi and vj . We use p(vi ) to denote The checkout cost, denoted as Cavg , is defined to be the average of the parent versions of vi . For the special case when there are no i Ci Ci , i.e., Cavg = n . While we focus on the average case, which merge operations, |p(vi )| ≤ 1, ∀i, and the version graph is a tree, assumes that each version is checked out with equal frequency, our denoted as T = (V, E). Lastly, we use R(vi ) to be the set of all algorithms generalize to the weighted case [9]. On rewriting the records in version vi , and l(vi ) to be the depth of vi in the version expression for Cavg above, we get: graph G in a topological sort4 of the graph—the root has depth 1. K |Vk ||Rk | For example, in Figure 4, version v2 has |R(v2 )| = 3 since it has k=1 Cavg = (4.2) n 3 A lyre was the musical instrument of choice for Orpheus. The numerator is simply sum of the number of records in each 4 In each iteration r, topological sorting algorithm finds vertices V with in-degree partition, multiplied by the number of versions in that partition, equals 0, removes V , and updates in-degree of other vertices. l(vi ) = r, ∀vi ∈ V . 1136

8 .three records, and is at level l(v2 ) = 2. Further, v2 has a single performance in terms of these quantities: an algorithm has an ap- parent p(v2 ) = v1 , and shares two records with its parent, i.e., proximation ratio of (X, Y ) if its storage cost S is no larger than w(v1 , v2 ) = 2. Next, we describe the algorithm for LYRE S PLIT |E| X · R while its checkout cost Cavg is no larger than Y · |V | . We when the version graph is a tree (i.e., no merge operations). first study the impact of a single split edge. The Version Tree Case. Our algorithm is based on the following L EMMA 2. Given a bipartite graph G = (V, R, E), a version lemma, which intuitively states that if every version vi shares a tree T = (V, E) and a parameter δ, let e∗ ∈ E be the edge that is large number of records with its parent version, then the checkout split in LYRE S PLIT, then after splitting the storage cost S must be |E| |E| cost is small, and bounded by some factor of |V | , where |V | is the within (1 + δ)|R|. lower bound on the optimal checkout cost. Now, overall, we have: L EMMA 1. Given a bipartite graph G = (V, R, E), a version T HEOREM 2. Given a parameter δ, LYRE S PLIT results in a tree T = (V, E), and a parameter δ ≤ 1, if the weight of every ((1 + δ) , 1δ )-approximation for partitioning. edge in E is larger than δ|R|, then the checkout cost Cavg when all |E| Complexity. The total time complexity is O(n ), where is the of the versions are in one single partition is less than 1δ · |V | . recursion level number when the algorithm terminates. |E| Lemma 1 indicates that when Cavg ≥ 1δ · |V | , there must ex- Generalizations. We can naturally extend our algorithms for the ist some version vj that only shares a small number of common case where the version graph is a DAG: in short, we first construct records with its parent version vi , i.e., w(vi , vj ) ≤ δ|R|; oth- ˆ based on the original version graph G, then apply |E| a version tree T erwise Cavg < 1δ · |V | . Intuitively, such an edge (vi , vj ) with LYRE S PLIT on the constructed version tree T. ˆ We describe the w(vi , vj ) ≤ δ|R| is a potential edge for splitting since the overlap details for this algorithm in our technical report [9]. between vi and vj is small. LYRE S PLIT Illustration. We describe a version of LYRE S PLIT 4.3 Incremental Partitioning LYRE S PLIT can be explicitly invoked by users or by O RPHEUS DB that accepts as input a parameter δ, and then recursively applies |E| when there is a need to improve performance or a lull in activity. partitioning until the overall Cavg < 1δ · |V | ; we will adapt this We now describe how the partitioning identified by LYRE S PLIT is to Problem 1 later. The pseudocode is provided in the technical incrementally maintained during the course of normal operation, report [9], and we illustrate its execution on an example in Figure 7. and how we reduce the migration time when LYRE S PLIT identi- As before, we are given a version tree T = (V, E). We start fies a new partitioning. We only describe the high-level ideas here; with all of the versions in one partition. We first check whether details and guarantees can be found in the technical report [9]. |R||V | < |E| . If yes, then we terminate; otherwise, we pick one δ Online Maintenance. When a new version vi is committed, O R - edge e∗ with weight e∗ .w ≤ δ|R| to cut in order to split the par- PHEUS DB applies the same intuition as LYRE S PLIT to determine tition into two. According to Lemma 1, if |R||V | ≥ |E| δ , there whether to add vi to an existing partition, or to create a new par- must exist some edge whose weight is no larger than δ|R|. The tition: if vi shares a large number of records in common with algorithm does not prescribe a method for picking this edge if there one of its parent versions vj , then vi is added to the partition Pk are multiple; the guarantees hold independent of this method. For that parent is in, or else a new partition is created. Specifically, if instance, we can pick the edge with the smallest weight; or the one w(vi , vj ) ≤ δ ∗ |R| and S < γ, where δ ∗ was the splitting param- such that after splitting, the difference in the number of versions eter used during the last invocation of LYRE S PLIT, then we create in the two partitions is minimized. In our experiments, we use the a new version. This way, the added storage cost is minimized, and latter. In our example in Figure 7(a), we pick the red edge to split the added checkout cost is guaranteed to be small, as in Lemma 1. the version tree T into two partitions—as shown in Figure 7(b), we get one partition P1 with the blue nodes (versions) and another P2 Even with the proposed online maintenance scheme, the checkout with the red nodes (versions). cost tends to diverge from the best checkout cost that LYRE S PLIT After each edge split, we update the number of records, versions can identify under the current constraints. This is because LYRE S- PLIT performs global partitioning using the full version graph as in- and bipartite edges, and then we recursively call the algorithm on each partition. In the example, we terminate for P2 but we split put, while online maintenance makes small changes to the existing the edge (v2 , v4 ) for P1 , and then terminate with three partitions— partitioning. To maintain the checkout performance, O RPHEUS DB Figure 7(c). We define be the recursion level number. In Figure 7 allows for a tolerance factor µ on the current checkout cost (users ∗ (a) (b) and (c), = 0, = 1 and = 2 respectively. We will use can also set µ explicitly). We let Cavg and Cavg be the current this notation in the performance analysis next. checkout cost and the best checkout cost identified by LYRE S PLIT ∗ respectively. If Cavg > µCavg , the migration engine is triggered, v1 7 v1 7 v1 7 and we reorganize the partitions by migrating data from the old par- 6 4 6 6 titions to the new ones; until then, we perform online maintenance. 8 v2 v 3 10 8 v2 v 3 10 8 v2 v 3 10 In general, when µ is small, the migration engine is invoked more frequently. Next, we discuss how migration is performed. 6 8 6 7 6 8 6 7 8 6 7 v4 v5 v6 v7 v4 v5 v6 v7 v4 v5 v6 v7 Migration Approach. Given the existing partitioning P = {P1 , 30 12 10 8 30 12 10 8 30 12 10 8 P2 , . . . , Pα } and the new partitioning P = {P1 , P2 , ..., Pβ } iden- a. ℓ=0 b. ℓ=1 c. ℓ=2 tified by LYRE S PLIT, we need an algorithm to efficiently migrate Figure 7: Illustration of LYRE S PLIT (δ = 0.5) the data from P to P without dropping all existing tables and Now that we have an algorithm for the δ case, we can simply recreating the partitions from scratch, which could be very costly. apply binary search on δ and obtain the best δ for Problem 1. We To do so, O RPHEUS DB needs to identify, for every Pi ∈ P , the can show that for two δ such that one is smaller than the other, the closest partition Pj ∈ P , in terms of modification cost, defined edges cut in the former is a superset of the latter. as |Ri \ Rj | + |Rj \ Ri |, where Ri \ Rj and Rj \ Ri are the records needed to be inserted and deleted respectively to transform Performance Analysis. Overall, the lowest storage cost is |R| and Pj to Pi . Since this is expensive to calculate, O RPHEUS DB in- |E| the lowest checkout cost is |V | respectively. We now analyze the stead approximates this quantity by using the number of versions 1137

9 .in common between Pi and Pi , along with operating on the version We implement AGGLO and K MEANS as described. AGGLO starts graph to identify common records, and then greedily identifying the with each version as one partition and then sorts these partitions “closest” partitions. based on a shingle-based ordering. Then, in each iteration, each partition is merged with a candidate partition that it shares the largest 5. PARTITIONING EVALUATION number of common shingles with. The candidate partitions have While Section 3.2 explores the performance of data models, this to satisfy two conditions (1) the number of the common shingles section evaluates the impact of partitioning. In Section 5.2 we eval- is larger than a threshold τ , which is set via a uniform sampling- uate if LYRE S PLIT can be more efficient than existing partitioning based method, and (2) the number of records in the new partition techniques; in Section 5.3, we ask whether versioned databases after merging is smaller than a constraint BC. To address Prob- strongly benefit from partitioning; and lastly, in Section 5.4 we lem 1 with storage threshold γ, we conduct a binary search on BC evaluate how LYRE S PLIT performs for online scenarios. and find the best partitioning scheme under the storage constraint. For K MEANS, there are two input parameters: partition capacity 5.1 Experimental Setup BC as in AGGLO, and the number of partitions K. Initially, K Datasets. We evaluated the performance of LYRE S PLIT using the random versions are assigned to partitions. Next, we assign the re- versioning benchmark datasets from Maddox et al. [33]; see details maining versions to their nearest centroid based on the number of in [9]. The versioning model used in the benchmark is similar to common records, after which each centroid is updated to the union git, where a branch is a working copy of a dataset. We selected the of all records in the partition. In subsequent iterations, each version Science (SCI) and Curation (CUR) workloads since they are most is moved to a partition, such that after the movement, the total num- representative of real-world use cases. The SCI workload simulates ber of records across partitions is minimized, while respecting the the working patterns of data scientists, who often take copies of an constraint that the number of records in each partition is no larger evolving dataset for isolated data analysis. Thus, the version graph than BC. The number of K MEANS iterations is set to 10. In our is analogous to a tree with branches. The CUR workload simulates experiment, we vary K and set BC to be infinity. We tried other the evolution of a canonical dataset that many individuals are con- values for BC; the results are similar to that when BC is infinity. tributing to—these individuals not just branch from the canonical Overall, with the increase of K, the total storage cost increases and dataset but also periodically merge their changes back in, resulting the checkout cost decreases. Again, we use binary search to find in a DAG of versions. We varied the following parameters when the best K for K MEANS and minimize the checkout cost under the we generated the benchmark datasets: the number of branches B, storage constraint γ for Problem 1. the total number of records |R|, as well as the number of inserts (or updates) from parent version(s) I. We list our configurations 5.2 Comparison of Partitioning Algorithms in Table 2. For instance, dataset SCI_1M represents a SCI work- In these experiments, we consider both datasets where the ver- load dataset where the input parameter corresponding to |R| in the sion graph is a tree, i.e., there are no merges (SCI_5M and SCI_10M), dataset generator is set to 1M records. In all of our datasets, each and datasets where the version graph is a DAG (CUR_5M and record contains 100 attributes, each of which is a 4-byte integer. CUR_10M). Experiments on additional datasets can be found in [9]. Table 2: Dataset Description We first compare the effectiveness of different partitioning algo- Dataset |V | |R| |E| |B| |I| rithms: LYRE S PLIT, AGGLO and K MEANS, in balancing the stor- SCI_1M 1K 944K 11M 100 1000 age size and the checkout time. Then, we compare the efficiency of SCI_2M 1K 1.9M 23M 100 2000 these algorithms by measuring their running time. Checkout Time (in Second) SCI_5M 1K 4.7M 57M 100 5000 10 LyreSplit 30 Checkout Time (in Second) LyreSplit SCI_8M 1K 7.6M 91M 100 8000 SCI_10M 10K 9.8M 556M 1000 1000 8 AGGLO 25 AGGLO KMEANS KMEANS CUR_1M 1.1K 966K 31M 100 1000 20 CUR_5M 1.1K 4.8M 157M 100 5000 6 CUR_10M 11K 9.7M 2.34G 1000 1000 15 4 10 Setup. We conducted our evaluation on a HP-Z230-SFF worksta- tion with an Intel Xeon E3-1240 CPU and 16 GB memory running 2 5 Linux OS (LinuxMint). We built O RPHEUS DB as a wrapper writ- 1 2 3 4 5 6 7 8 9 10 00 10 20 30 40 50 ten in C++ over PostgreSQL 9.5, where we set the memory for Storage Size (in GB) Storage Size (in GB) sorting and hash operations as 1GB. In addition, we set the buffer a. SCI_5M b. SCI_10M Checkout Time (in Second) Checkout Time (in Second) cache size to be minimal to eliminate the effects of caching on per- 10 35 formance. In our evaluation, for each dataset, we randomly sam- 9 LyreSplit LyreSplit pled 100 versions and used them to get an estimate of the checkout 8 AGGLO 30 AGGLO 7 KMEANS 25 KMEANS time. Each experiment was repeated 5 times, with the OS page 6 20 cache being cleaned before each run. Due to experimental vari- 5 15 ance, we discarded the largest and smallest number among the five 4 10 trials, and then took the average of the remaining three trials. 3 2 5 Algorithms. We compare LYRE S PLIT against two partitioning al- 0 5 10 15 20 00 10 20 30 40 50 gorithms in the NScale graph partitioning project [37]: the Ag- Storage Size (in GB) Storage Size (in GB) glomerative Clustering-based one (Algorithm 4 in [37]) and the c. CUR_5M d. CUR_10M KMeans Clustering-based one (Algorithm 5 in [37]), denoted as Figure 8: Storage Size vs. Checkout Time AGGLO and K MEANS respectively: K MEANS had the best perfor- Effectiveness Comparison. mance, while AGGLO is an intuitive method for clustering versions. After mapping their setting into ours, like LYRE S PLIT, NScale [37]’s Summary of Trade-off between Storage Size and Checkout Time. LYRE S- algorithms group versions into different partitions while allowing PLIT dominates AGGLO and K MEANS with respect to the storage size and checkout time after partitioning, i.e., with the same storage size, LYRE S- the duplication of records. However, the NScale algorithms are tai- PLIT ’s partitioning scheme provides a smaller checkout time. lored for arbitrary graphs, not for bipartite graphs (as in our case). 1138

10 . In order to trade-off between S and Cavg , we vary δ for LYRE - Furthermore, K MEANS can only finish the binary search process S PLIT, BC for AGGLO and K for K MEANS to obtain the overall within 10 hours for SCI_1M. Thus our proposed LYRE S PLIT is trend between the storage size and the checkout time. The results much more scalable than AGGLO and K MEANS. Even if K MEANS are shown in Figure 8, where the x-axis depicts the total storage is closer to LYRE S PLIT in performance (as seen in the previous size for the data table in gigabytes (GB) and the y-axis depicts the experiments), it is impossible to use in practice. average checkout time in seconds for the 100 randomly selected versions. Each point in Figure 8 represents a partitioning scheme 5.3 Benefits of Partitioning obtained by one algorithm with a specific input parameter value. Summary of Checkout Time Comparison with and without Partitioning: We terminated the execution of K MEANS when its running time With only a 2× increase on the storage, we can achieve a substantial 3×, exceeded 10 hours for each K, which is why there are only two 10× and 21× reduction on checkout time for SCI_1M, SCI_5M, and points with star markers in Figure 8(b) and 8(d) respectively. The SCI_10M respectively. overall trend for AGGLO, K MEANS, and LYRE S PLIT is that with We now study the impact of partitioning and demonstrate that the increase in storage size, the average checkout time first de- with a relatively small increase in storage, the checkout time can creases and then tends to a constant value—the average checkout be substantially reduced. We conduct two sets of experiments with time when each version is stored as a separate table. the storage threshold as γ = 1.5 × |R| and γ = 2 × |R| respec- Furthermore, LYRE S PLIT has better performance than the other tively, and compare the average checkout time with and without two algorithms in both the SCI and CUR datasets in terms of the partitioning. Figure 10(a) illustrates the comparison on the check- storage size and the checkout time, as shown in Figure 8. For in- out time for different datasets, and Figure 10(b) displays the cor- stance, in Figure 8(a), with 2.3GB storage budget, LYRE S PLIT can responding storage size comparison. Each collection of bars in provide a partitioning scheme taking 2.9s for checkout on average, Figure 10 corresponds to one dataset. Consider SCI_5M in Fig- while both K MEANS and AGGLO give schemes taking more than ure 10 as an example: the checkout time without partitioning is 7s. Thus, with equal or lesser storage size, the partitioning scheme 16.6s while the storage size is 2.04GB; when the storage thresh- selected by LYRE S PLIT achieves much less checkout time than the old is set to be γ = 2 × |R|, the checkout time after partitioning ones proposed by AGGLO and K MEANS, especially when the stor- is 1.71s and the storage size is 3.97GB. As illustrated in Figure 10, age budget is small. The reason is that LYRE S PLIT takes a “global” with only 2× increase in the storage size, we can achieve 3× reduc- perspective to partitioning, while AGGLO and K MEANS take a “lo- tion on SCI_1M, 10× reduction on SCI_5M, and 21× reduction on cal” perspective. Specifically, each split in LYRE S PLIT is decided SCI_10M for the average checkout time compared to that without based on the derivation structure and similarity between various partitioning. Thus, with partitioning, we can eliminate the time for versions, as opposed to greedily merging partitions with partitions accessing irrelevant records. Consequently, the checkout time re- in AGGLO, and moving versions between partitions in K MEANS. mains small even for large datasets. The results for CUR is similar LyreSplit AGGLO KMEANS and can be found in the technical report [9]. 109 109 108 cutoff 5.4h 108 cutoff Without-partitioning LyreSplit (γ =1.5|R|) LyreSplit (γ =2|R|) Running Time (in ms) Running Time (in ms) 10h 10h 3.7h 10h 45 10 Checkout Time (in Second) 107 0.8h 107 0.5h 106 106 40 8.17 Storage Size (in GB) 35.99 105 10 5 119s 35 8 18s 30 104 7s 104 6s 6 6.19 103 0.3s 103 0.7s 25 102 33ms 102 53ms 20 16.60 4 3.97 4.24 17ms 15 101 101 3ms 3ms 2.99 100 SCI_1M 100 SCI_1M 10 2 2.04 SCI_5M SCI_10M SCI_5M SCI_10M 5 4.21 1.26 1.21 (a) Total Running Time (b) Running Time Per Iteration 1.81 1.71 1.82 1.68 0.41 0.56 0.73 0 SCI_1M SCI_5M SCI_10M 0 SCI_1M SCI_5M SCI_10M Figure 9: Algorithms’ Running Time Comparison (SCI_*) (a) Checkout Time (SCI_*) (b) Storage Size (SCI_*) Efficiency Comparison. Figure 10: Comparison With and Without Partitioning Summary of Comparison of Running Time of Partitioning Algorithms. 5.4 Maintenance and Migration When minimizing the checkout time under a storage constraint (Prob- We now evaluate the performance of O RPHEUS DB’s partition- lem 1), LYRE S PLIT is on average 103 × faster than AGGLO, and more than 105 × faster than K MEANS for all SCI_* and CUR_* datasets. ing optimizer over the course of an extended period with many ver- sions being committed to the system. We employ our SCI_10M In this experiment, we set the storage threshold as γ = 2|R|, and dataset, which contains the largest number of versions (i.e. 10k). terminate the binary search process when the resulting storage cost Here, the versions are streaming in continuously; as each version S meets the constraint: 0.99γ ≤ S ≤ γ. We discuss the results commits, we perform online maintenance based on the mechanism C for the SCI datasets: the CUR dataset performance is similar [9]. described in Section 4.3. When Cavg ∗ reaches the tolerance factor avg Figure 9a shows the total running time during the end-to-end binary µ, the migration engine is automatically invoked, and starts to per- search process, while Figure 9b shows the running time per binary form the migration of data from the old partitions to the new ones search iteration. Again, we terminate K MEANS and AGGLO when identified by LYRE S PLIT. We first examine how our online main- the running time exceeds 10 hours. Consider the largest dataset tenance performs, and how frequently migration is invoked. Next, SCI_10M in Figure 9 as an example: with LYRE S PLIT the entire we test the latency of our proposed migration approach. The stor- binary search procedure and each binary search iteration took 0.3s age threshold is set to be γ = 1.5|R|. Similar results on γ = 2|R| and 53ms respectively; AGGLO takes 50 minutes in total; while can be found in our technical report [9]. K MEANS does not even finish a single iteration in 10 hours. Overall, LYRE S PLIT is 102 × faster than AGGLO for SCI_1M, Online Maintenance. 103 × faster for SCI_5M, and 104 × faster for SCI_10M respec- Summary of Online Maintenance Compared to LYRE S PLIT. With our tively, and more than 105 × faster than K MEANS for all datasets. proposed online maintenance mechanism, the checkout cost Cavg di- ∗ verges slowly from the best checkout cost Cavg identified by LYRE S PLIT. This is mainly because LYRE S PLIT only needs to operate on the version graph while AGGLO and K MEANS operate on the version- When µ = 1.5, our migration engine is triggered only 7 times across a total of 10,000 committed versions . record bipartite graph, which is much larger than the version graph. 1139