- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Gestural Query Specification

展开查看详情

1 . Gestural Query Specification Arnab Nandi Lilong Jiang Michael Mandel Computer Science & Engineering The Ohio State University {arnab,jianglil,mandelm}@cse.osu.edu ABSTRACT CHINOOK Album Direct, ad-hoc interaction with databases has typically been per- Album Artist AlbumId formed over console-oriented conversational interfaces using query languages such as SQL. With the rise in popularity of gestural user Artist ArtistId ⋈ ArtistId 1:2 interfaces and computing devices that use gestures as their exclusive Name: Black Sabbath n=2 Title modes of interaction, database query interfaces require a fundamen- Customer tal rethinking to work without keyboards. We present a novel query 16 Black Sabbath 12 12 Black Sabbath 17 Black Sabbath Vol. 4 (Remaster) 12 12 Black Sabbath specification system that allows the user to query databases using a Employee series of gestures. We present a novel gesture recognition system that uses both the interaction and the state of the database to classify gestural input into relational database queries. We conduct exhaus- Figure 1: Query Specification using Multi-touch Gestures. The tive systems performance tests and user studies to demonstrate that query task from our motivating example (Section 1.1) is speci- our system is not only performant and capable of interactive laten- fied using a series of gestures. As two tables are brought close cies, but it is also more usable, faster to use and more intuitive than to each other, the attributes are presented in an arc such that existing systems. they are amenable to be joined. The preview of most likely join is presented as feedback to the user for guidance. 1. INTRODUCTION Next-generation computing devices such as tablets, smartphones, using a finger, hand, head or input device, denoting an action to be motion capture-based systems such as the Kinect, and eye-tracking- performed on the database query interface. Gestures encompass based systems such as Google Glass have ushered us into a new age finger-based multitouch points (e.g., input from the iPad) includ- of natural user interaction with data. The number of smartphones ing pressure for each finger, skeletal motion tracking (e.g., Kinect), and tablets sold in 2011 was 1.5 times the number of desktops, and more. We label databases that support such querying gestural laptops, and netbooks combined [9] for the same period; and in databases. In this paper, we articulate the challenges in building the last quarter of that year, this ratio jumped to 1.9 times. In a query interface that supports gestural querying from such inputs, a more recent study [29], sales of tablet devices are expected to and study a novel system that allows for gestural query specification. surpass those of portable computers in 2013, and surpass all personal Gestural user interfaces have become a popular mode of inter- computers in 2015. Based on these trends, it is clear that both the action with a wide variety of touch-based, motion-tracking, or size and the heterogeneity of non-keyboard interaction is growing eye-tracking devices. Given the rising popularity of such devices, rapidly, and soon will be a dominant mode of interaction. domain-specific applications have come up with mappings between End-user-friendly interaction with databases is a well-motivated standard gestures and actions pertinent to the system. The onus of problem [31]. As discussed in the related work section (Section 6), gesture recognition is on the user interface layer, that identifies the there has been a wide variety of work in query interfaces in both gesture as one of a set of gestures predefined by the operating sys- domain-specific and domain-independent use cases. However, cur- tem, independent of the application state. The gesture type, along rent efforts are based on keyboard or mouse-driven interaction, and with metadata parameters such as coordinates and pressure, are are therefore unsuitable for gestures. sent to the application, which then uses them to infer actions. This We capture the heterogeneous modes of natural, movement-based mapping of interpreted gestures to a domain-specific action can be interaction with data under a common terminology, gestural query- considered as a classification problem. ing. Each gesture is a single movement – performed by the user Unlike domain-specific applications, in the context of ad-hoc, open-domain querying of relational databases, the use of gestures as the sole mode of interaction faces several challenges. First, the This work is licensed under the Creative Commons Attribution- space of possible actions is large1 – the action depends on the NonCommercial-NoDerivs 3.0 Unported License. To view a copy of this li- underlying database query language (e.g., SQL), the schema of cense, visit http://creativecommons.org/licenses/by-nc-nd/3.0/. Obtain per- the database (the tables and the attributes for each table), and the mission prior to any use beyond those covered by the license. Contact copyright holder by emailing info@vldb.org. Articles from this volume data contained in it (the unique values for each attribute, and the were invited to present their results at the 40th International Conference on individual tuples for each table). One solution to this problem is Very Large Data Bases, September 1st - 5th 2014, Hangzhou, China. to present a modal interface allowing the user to first pick the type Proceedings of the VLDB Endowment, Vol. 7, No. 4 1 Copyright 2013 VLDB Endowment 2150-8097/13/12. When considering n-ary joins, this space can be infinite. 289

2 .of query, and then drill down the parameters of the desired query. Contributions & Outline: In this paper, we make the following Such a modal interface goes against the desiderata of fluidity and contributions: direct manipulability. Further, if the user is unsure about the exact • We introduce and define the problem of gestural query specifica- query [44] itself, the database system will need to guide the user to tion, a critical task in database querying that is gaining importance the exact query intent, and the intended query result. with the proliferation of purely gesture-oriented interfaces. Thus, to provide for an effective querying experience, there is a strong need for the system to effectively aid the user in articulat- • We propose a novel querying framework that allows the user to ing the intended query using gestures. This problem of gestural effectively specify relational queries using a series of gestures. query specification relies on both gestural input and the state of the database to recognize and articulate queries. • We detail the architecture and implementation of a novel gestural query specification system, G ESTURE Q UERY, that provides feed- 1.1 Motivating Example back to the user during the query process, and detail the various systems considerations and optimizations involved in the building To better explain the system, we motivate our problem with a of the system. simple ad-hoc querying task. Consider a relational database about music, which contains information about music artists, albums and • We evaluate the effectiveness of our system by performing exhaus- performances. From this database, the user is tasked to find the titles tive usability studies and systems evaluation using real workloads. of all the albums created by the artist “Black Sabbath”. While the We show that G ESTURE Q UERY is not only performant to use for task is simple to express, it embodies many of the challenges users real-world use cases, but also significantly more usable compared have with querying the system – discovering the schema and data, to existing querying methods. understanding the query language and interface, and then interacting with the system to extract the required information. The paper is organized as follows. In the following section, we Figure 1 demonstrates this task using one possible frontend for describe the various terminologies and preliminary concepts that gestural querying – a multitouch interface. The user first browses are central to our work. In Section 3, we describe a novel Gestural the schema and data by dragging elements from the database tray Query Language, that allows the user to specify relational queries into the workspace, and then peeks into them by pinching out on using an intuitive set of gestures. Section 4 details the underlying each table. The artist “Black Sabbath” is filtered by using a simple architecture and implementation considerations of our Query Spec- gesture: dragging the “Black Sabbath” cell to the attribute header, ification System. In Section 5 we provide a detailed performance followed by a join operation, performed by bringing the ARTIST analysis and discuss the results of an exhaustive usability study. We and ALBUM close to each other. The interface fluidly guides the end with related work, conclusions, and future work. user by responding to each touch and gestural movement, providing previews and feedback of the results to the most likely query. It 2. MODELS AND PRELIMINARIES should be noted that the interaction is done directly on the data itself, Data Model: It should be noted that while our paper uses the and is composable – queries can be constructed piecemeal, and the relational model as its underlying data model, our contributions ordering of the filter clause and the join could be interchanged. The are independent of this choice, and can be applied to any other gestural query paradigm allows for the user to intuitively specify an data model. From a presentation standpoint, we additionally allow articulate query by interacting with the system without having to relations to have ORDER BY attributes. invest time learning about the schema, data, or the query language. As we will see in our experimental evaluation, our novel query Query Model: Our system allows for both read and write queries, specification system outperforms existing methods in usability. at the data and the schema level. Specifically, we allow for selec- tion, projection, union, join, and groupby/aggregate operations over 1.2 Challenges and Opportunities relations. Selections are constructed as conjunctions of clauses, and The use of gestures to support ad-hoc database interaction brings disjunctions can be created using the union operator. As we will forth a set of challenges and opportunities. Interaction is continu- describe in Section 3, each query operation takes one or more rela- ous – unlike traditional keyboard interaction where the user types tion as input, and outputs one relation. In addition, each operation out a string of characters (denoting a query) and then pressing enter generates feedback for the user during the gesture articulation phase to execute it, gestural interaction is expected to be fluid. Users to guide them. expect to see feedback during the articulation of the gesture itself. Gestural Articulation: A gesture is defined as a movement of the This leads us to observe the emergence of a new database query hand or body. In our system, a gesture G is represented as a set paradigm: in place of the traditional Query→Result interaction, of time-series of points; pi = t, l, m where t is the timestamp we now have a continuous loop of interaction, where the user re- of that point, l is locational information in the interface, and m ceives constant feedback from the database during the specification is the metadata information associated with that point and time of the query itself. Second, touch-based, augmented reality, and and |p| is the number of points. For example, in the context of motion-oriented interfaces have popularized the notion of a direct a capacitive 2-dimensional multi-touch interface that supports 10 manipulation [27] interface. In the context of database querying, fingers at a touch sampling rate of 30Hz (such as those found in users expect to manage, query, and manipulate data by directly inter- most tablet devices), there would be a time-series of up to ten 2- acting with it – a contrast from the current paradigm of constructing dimensional points, at 30 sets per second: l = { x, y } and |l| ≤ 10. declarative queries and then applying them to the data. Finally, new m in this example could represent pressure and size of the finger patterns of interaction are likely to overwhelm the user – unlike touch. Our model of gestural input allows for a wide variety of traditional keyboard interaction with a limited number of keys to input: for 3-D depth-capture devices such as the Microsoft Kinect, press and keywords to generate, there can be an infinite number of l = { x, y, z }. The user interacts with the system in a series of gestures, and implied actions from these gestures. Thus, it becomes articulations, individual fluid motions. Each gesture articulation the responsibility of the query specification system to guide the typically lasts a few seconds, going from an ambiguous gesture user during the database interaction process. at the start (the user has not provided any point information yet) 290

3 .to an articulate gesture (the user has made a discernible gesture, Example Walkthrough: To familiarize the reader with the terms encoded as hundreds of timestamped point instances), followed by presented, we walk through the user interaction in the motivating the completion of the gesture. It should be noted that for gestural example. The user has the query specification task of representing interfaces, the user expects feedback from the system during the a FILTER (i.e., selection) followed by a JOIN. This is done using articulation of the gesture as well – it is not sufficient to only react a series of six quick gestures – the user first performs a gesture to at the end of the articulation. This is because the nature of feedback drag the A RTIST relation into the workspace, putting it in the query might affect the gesture the user performs. For example, a user context, followed by a pinch-out gesture to PREVIEW the relation. who is trying to perform a UNION on two relations may notice The same pair of gestures is performed on the A LBUM relation. The that the schema of the two relations are in fact incompatible, and user then performs a FILTER gesture on A RTIST, selecting only thus consider performing a JOIN instead, changing from a stacking “Black Sabbath”. Due to the closed algebra, all direct manipulations gesture (one relation on top of another) to a composing gesture (two on presented data result in relations as well, thus allowing subse- relations side by side) in the same fluid finger movement. quent actions on the data. The user then articulates a JOIN gesture Query Specification: Given a database query task, the user per- by bringing the relations close to each other, considering each pair forms a series of interactions to query the database. The process of attributes. During the gesture articulation, the query intent is of interacting with the database to go from a conceptual task to initially equally spread across all the valid JOINs – for tables with specifying the correct structured query is called query specification. M and N attributes, this can yield M × N possible queries. The Query specification solves a query task, and comprises one or more user is provided with intent feedback, a preview of the most likely query intent transitions. join result and join statistics. The user then uses this feedback to complete the articulation of the query, bringing the most desirable Query Intent: Users issue queries by focusing on their query intent, pair of attributes close to each other. This articulation completes going from a vague information need to an exact query formulation. the query intent transition – the intent has gone from a uniform The query intent is a probability distribution over a space of valid distribution over M × N possible queries to exactly one possible relational queries. Initially, the user provides no information to join query. This completes the articulation of the gesture, and corre- the system, thus the query intent is a uniform distribution over all spondingly, the intent transition and the overall specification of the possible queries to the database. Given sufficient information from tasked query. Ultimately, the user is left with the desired result. the gesture and the query context (described below), the system can narrow down this distribution, bounding the space of possible 3. A GESTURAL QUERY LANGUAGE queries. At the completion of a gesture, the query intent space is that of a single query with 100% probability: an explicit query. The As depicted in Figure 1, the database query interface allows users process of narrowing the query space and arriving at an explicit to directly manipulate results by interacting with them in a sequence query is called a query intent transition. A single user session will of gestures. The interface is divided into three parts: the header, tray, comprise multiple query intent transitions, each building on the and workspace. The header displays the database information and previous one. allows users to pick another database. The tray shows a list of tables available in the selected database, along with tuple cardinalities. Query Context: All interactions take place within a query specifica- The tray also acts as a source for database table interface objects. tion session. Due to the directly manipulable nature of the interface, Tables are dragged to the workspace from the database tray. Each the system catalogs all query intent transitions and all recent inter- table in the workspace represents a view of the table, i.e., cloned mediate results and feedback, termed the query context. This allows representation of the data, and can be directly manipulated. Each the system to infer and narrow the space of possible queries. Further, gesture denotes a single manipulation action and impacts only the the query context can be used to prioritize the surfacing of feedback cloned instance – not the original database. There are a finite number to the user, as discussed in the forthcoming paragraphs. of intuitive gestures that the user can learn, each of which when Query Gesture: Each set of user gestures is codified as a search performed on the workspace can correspond to an action. Users pattern, with a “likelihood score”. Each gesture maps to one or can undo each action to return to the previous workspace state. many parameterized queries. When the likelihood score for a certain Since actions directly correspond to relational queries, all actions gesture goes above a fixed threshold, the system attempts to pop- manipulate one or more relations into another relation. Thus, actions ulate the parameters of the parameterized query using the gesture are composable and can be performed in sequence, manipulating information (e.g., which relations are being dragged) and the query relations in the workspace till the desired result is achieved. context, building a query template. This query template is used to narrow the query intent space (described above). In the event that 3.1 Desiderata multiple gestures are inferred at the same time, the intent space is The development of a gestural query language is a critical task the union of all corresponding query templates. – the language needs to capture the nuances of the new generation Intent Feedback: The goal of the user interface is to accelerate the of user interfaces, while at the same time, provide the user with the narrowing of the intent space, allowing the user to quickly reach an expressiveness and capabilities of existing querying methods. As explicit query. To do this, the system provides feedback to the user a process, we detail the desired features of an ideal gestural query during the entire interaction loop. Since the amount of feedback language. As we will see in the following sections, we leverage possible is quite large, and there is a cost of overburdening the user a combination of algebraic, algorithmic, and design-based solu- with too much information. This leads to an interesting problem: tions to meet these needs, and come up with a usable and efficient How do we rank feedback such that it causes the user to narrow the way to specify queries using gestures. intent space? While the presentation of feedback is central to the user interaction, the generation of feedback itself can leverage prior Direct Manipulation: Modern user interfaces have brought forth a work in areas such as result diversification [55] and is beyond the paradigm shift in user input and output. In place of indirect interac- scope of this paper. In this paper, we focus on the query language, tion via textual commands or mouse movements, users now expect to touch, move, and interact directly with the data and interface ele- interaction paradigm, and query specification system. ments. Therefore, our system needs to allow for direct manipulation: 291

4 .Users should not be expected to construct abstract queries, but to in- the workspace, the PREVIEW action is issued on the target table. teract with the data directly. Thus, queries are constructed implicitly This is issued to the database as a SQL query SELECT * FROM – each gestural operation by the user transforms the existing data, TARGET TABLE LIMIT 6;, presenting the first six rows of the iteratively specifying the intended query in steps. table on screen2 . Closure and Composition: The iterative and directly manipulable SORT: By swiping the attribute header right/left, users can sort the nature of the system implies that the data presented to the user can data according to the attribute in ascending/descending order. The be perceived as a view of a query expression over the database. For sorted attribute is highlighted. Ordering by multiple attributes can interaction to be intuitive, all gestures (and thus the corresponding be performed by successively swiping headers. query operations) should be available to the user at all times. There- fore, we require that our gestural query operators be closed under AGGREGATE: This action works on a single table. The user first relational algebra, and thus all gesture operations are performed on needs to drag the grouping attribute to the table header to bring one or more relations, and yield new relation as the final output, up a popup menu of aggregate functions. The user then drags the allowing for composition of operations. aggregated attribute through the desired aggregation functions in the menu. Feedback: While each gesture is performed on a relation and yields a relation upon completion of the gesture, it is imperative that the REARRANGE: By dragging the attribute header into a different place user be guided through the space of possible queries and results. in the attribute list, the user can change the relative positions of the As mentioned in the previous section, this is increasingly important attributes. This allows positional operations such as JOINS to be in the context of gestural interfaces where the space of possible performed more easily. gestures is unbounded and there are few constraints provided to FILTER: This action works on a single table. By tapping and the user (e.g., finite number of keys on a keyboard versus a touch holding a table cell, a free-floating copy of the cell shows up under interface with no explicit targets). Unlike the final result of each the user’s finger. The user can drag this copy into the attribute header operation, feedback need not always be a relation – it can be any to filter the preview. If the user release his finger immediately after piece of information that aids the user in articulating their intended the copy overlaps with the attribute header, the table will be filtered query. From a usability perspective, we observe that modeling by equality to the selected value. If the user holds the copy on the feedback on the most likely query result is most useful to the user. attribute header, a range slider will appear and the user can filter the Expressivity: It is important that our language should not hold the table by adjusting the range on the slider. user back in terms of their ability to specify queries. In the following JOIN: Two tables can be joined by moving them close to each subsection, we present our gesture vocabulary that allows users to other. The JOIN action represents the inner equijoin SQL query, perform both schema and data-level queries, providing a mapping representing combinations of rows that have the same value for the between gestures and most relational algebra operations. Further, it attributes that are being joined upon. When two tables are brought is important for the system to let the user express queries efficiently close to each other, their attribute lists curve so that the user can – thus, most gestures allow taking advantage of ideas such as multi- bring the desired pair of attributes closest to each other. The design touch to perform complex operations (e.g., conjunctive selections considerations for this curvature are described in Section 3.3. can be performed by filtering on multiple values and attributes, one per finger, that can then be UNIONed together to return a disjunction UNION: Two tables can be unified into a single table if their at- of conjunctive selections). tributes are compatible; i.e., they have the same number of attributes, and each pair of attributes is of the same data type. To unify tables, 3.2 A Gesture Vocabulary the user drags one table onto another from the top, in a stacking In this section, we describe the vocabulary of gestures used in gesture. A preview of compatible columns is presented as color our system. Our vocabulary works on the relational data model coded feedback. and assumes the use of multi-point gestures, as per our gesture INSERT: In order to insert one new tuple, the user can doubletap definition. It should be noted that while the existence of a gesture the bottom of the table. A blank tuple will be added into the end of vocabulary is critical to our system, the gesture itself is not important the table that the user can edit. – users are welcome to come up with their own creative and intuitive gestures. Custom gestures, when encoded as feature functions for UPDATE: To update the value of a table cell, the user uses two- the gesture classifier will work similar to the gestures proposed fingers to swipe the cell. If the cell is numeric, scrolling up will below. However, as discussed in Section 3.3, our gesture vocabulary increase the value while scrolling down will decrease the value. If has been carefully thought through and has been through several the user holds their finger on the cell, a distribution slider will pop up iterations of design. As part of the overall system, this set of gestures and the user can update the value through the slider. If the value is has been formally evaluated to be highly usable and intuitive as per categorical, a menu will appear allowing the selection of a category. the evaluations performed in Section 5. Visual representations of If the value is alphanumeric, text input with autocompletion will be most actions are presented in Figure 2. triggered, based on prior gestural text input work [56]. UNDO: This is a universal action, performed by swiping the workspace ALTER: There are two ways of changing the table schema. One way to the left. This restores the workspace to the previous state, un- is to change the attribute layout using the REARRANGE action. The doing the prior gesture. This (non-operator) query is implemented other way is to scroll the attribute header using two fingers, which by maintaining a stack of prior queries; and is extremely useful for allows the user to change the datatype or name of the attribute. exploration / trial-and-error query specification tasks. PREVIEW: This action works on a single table. When dragged from the database tray, each table is represented by the name of the table 2 We skip more complex variants of preview for conciseness and to and its attributes. By making the pinch-out gesture on the table in focus on the gesture recognition challenges. 292

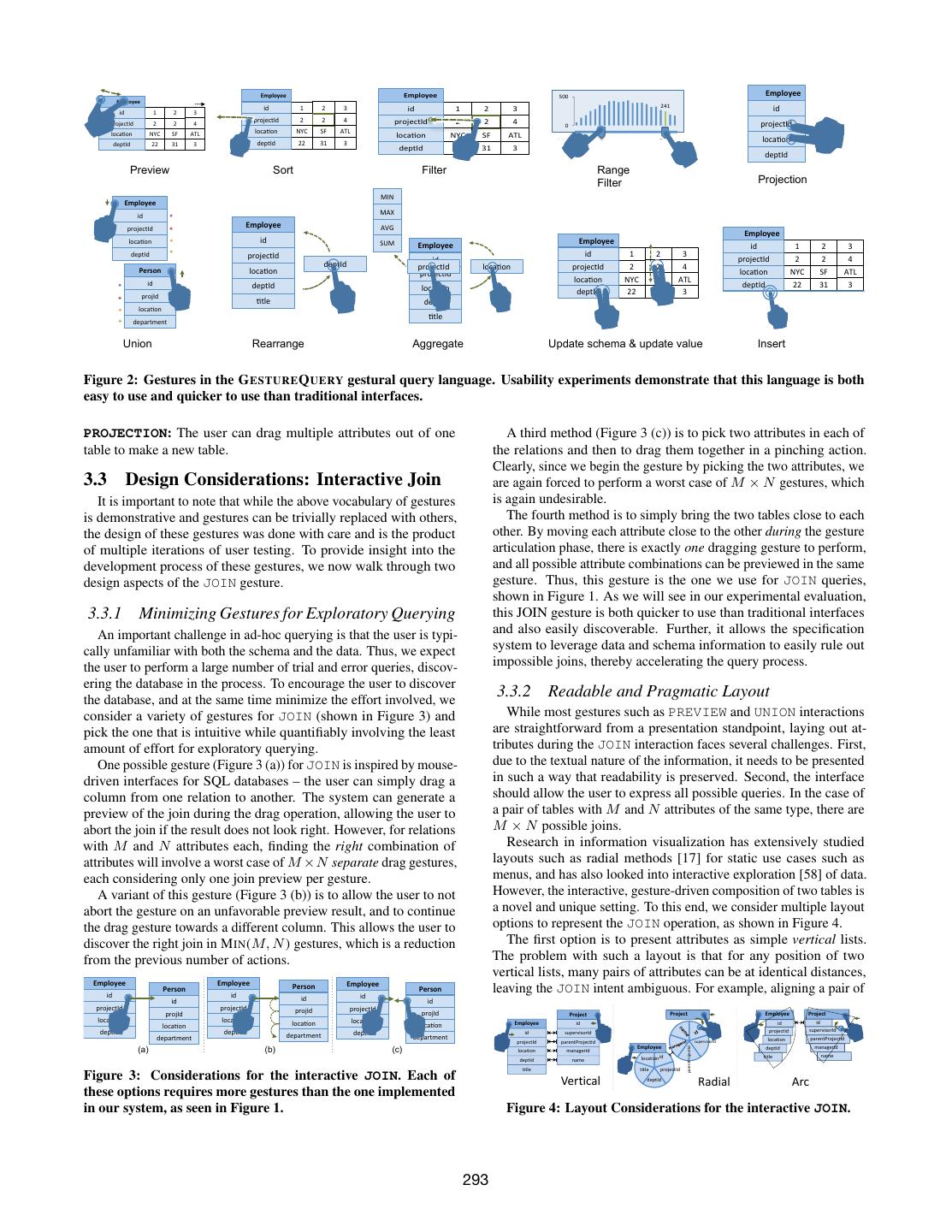

5 . Employee" Employee" &%%$ Employee Employee" " " " " " " " " "" "" !"#$ "" id " 1" 2" 3" id 1 2 3 id 1 2 3 distribution id "" projectId" 2" 2" 4" " 2" "" 2" 4" of values " 2" 2" 4" "" "" "" projectId projectId in column! projectId "" "" %$ loca:on" NYC" SF" ATL" loca:on" NYC" SF" ATL" "" loca:on" NYC" SF" ATL" " " "" " " " " " " loca.on deptId 22 31 3 " " " " WHERE emp.age > 60 deptId 22 31 3 deptId 22 31 3 deptId Preview Sort Filter Range Filter Projection MIN !! Employee! !! id ! MAX !!!!!! Employee ! ! projectId ! AVG Employee loca.on! id SUM Employee Employee id 1 2 3 deptId ! projectId id id 1 2 3 projectId 2 2 4 deptId Person ! !! loca.on projectId projectId loca.on projectId 2 2 4 loca.on NYC SF ATL id ! deptId loca.on NYC SF ATL deptId 22 31 3 !! !! !!!! loca.on deptId 22 31 3 projId ! ! ! .tle deptId loca.on! .tle department ! Union Rearrange Aggregate Update schema & update value Insert Figure 2: Gestures in the G ESTURE Q UERY gestural query language. Usability experiments demonstrate that this language is both easy to use and quicker to use than traditional interfaces. PROJECTION: The user can drag multiple attributes out of one A third method (Figure 3 (c)) is to pick two attributes in each of table to make a new table. the relations and then to drag them together in a pinching action. Clearly, since we begin the gesture by picking the two attributes, we 3.3 Design Considerations: Interactive Join are again forced to perform a worst case of M × N gestures, which It is important to note that while the above vocabulary of gestures is again undesirable. is demonstrative and gestures can be trivially replaced with others, The fourth method is to simply bring the two tables close to each the design of these gestures was done with care and is the product other. By moving each attribute close to the other during the gesture of multiple iterations of user testing. To provide insight into the articulation phase, there is exactly one dragging gesture to perform, development process of these gestures, we now walk through two and all possible attribute combinations can be previewed in the same design aspects of the JOIN gesture. gesture. Thus, this gesture is the one we use for JOIN queries, shown in Figure 1. As we will see in our experimental evaluation, 3.3.1 Minimizing Gestures for Exploratory Querying this JOIN gesture is both quicker to use than traditional interfaces An important challenge in ad-hoc querying is that the user is typi- and also easily discoverable. Further, it allows the specification cally unfamiliar with both the schema and the data. Thus, we expect system to leverage data and schema information to easily rule out the user to perform a large number of trial and error queries, discov- impossible joins, thereby accelerating the query process. ering the database in the process. To encourage the user to discover the database, and at the same time minimize the effort involved, we 3.3.2 Readable and Pragmatic Layout consider a variety of gestures for JOIN (shown in Figure 3) and While most gestures such as PREVIEW and UNION interactions pick the one that is intuitive while quantifiably involving the least are straightforward from a presentation standpoint, laying out at- amount of effort for exploratory querying. tributes during the JOIN interaction faces several challenges. First, One possible gesture (Figure 3 (a)) for JOIN is inspired by mouse- due to the textual nature of the information, it needs to be presented driven interfaces for SQL databases – the user can simply drag a in such a way that readability is preserved. Second, the interface column from one relation to another. The system can generate a should allow the user to express all possible queries. In the case of preview of the join during the drag operation, allowing the user to a pair of tables with M and N attributes of the same type, there are abort the join if the result does not look right. However, for relations M × N possible joins. with M and N attributes each, finding the right combination of Research in information visualization has extensively studied attributes will involve a worst case of M ×N separate drag gestures, layouts such as radial methods [17] for static use cases such as each considering only one join preview per gesture. menus, and has also looked into interactive exploration [58] of data. A variant of this gesture (Figure 3 (b)) is to allow the user to not However, the interactive, gesture-driven composition of two tables is abort the gesture on an unfavorable preview result, and to continue a novel and unique setting. To this end, we consider multiple layout the drag gesture towards a different column. This allows the user to options to represent the JOIN operation, as shown in Figure 4. discover the right join in M IN (M, N ) gestures, which is a reduction The first option is to present attributes as simple vertical lists. from the previous number of actions. The problem with such a layout is that for any position of two vertical lists, many pairs of attributes can be at identical distances, Employee ! ! Employee ! Person ! Employee ! ! ! Person ! ! Person leaving the JOIN intent ambiguous. For example, aligning a pair of id !! id ! id !! id ! id !! !! id ! projectId ! !! !! ! projectId ! !! !! projId ! projectId ! ! !! projId !! !! !! !! projId Project Employee Project !! !! !!!! !! !! Project loca.on! loca.on! !! loca.on! !! !! !! !! loca.on! Employee id id loca.on! !! !! !! !!loca.on! id deptId ! department ! deptId ! department ! deptId ! department ! id supervisorId projectId supervisorId parentProjectId projectId parentProjectId supervisorId location Employee managerId (a) (b) (c) deptId parentProjectId location managerId location id title name deptId name title title projectId Figure 3: Considerations for the interactive JOIN. Each of Vertical deptId Radial Arc these options requires more gestures than the one implemented in our system, as seen in Figure 1. Figure 4: Layout Considerations for the interactive JOIN. 293

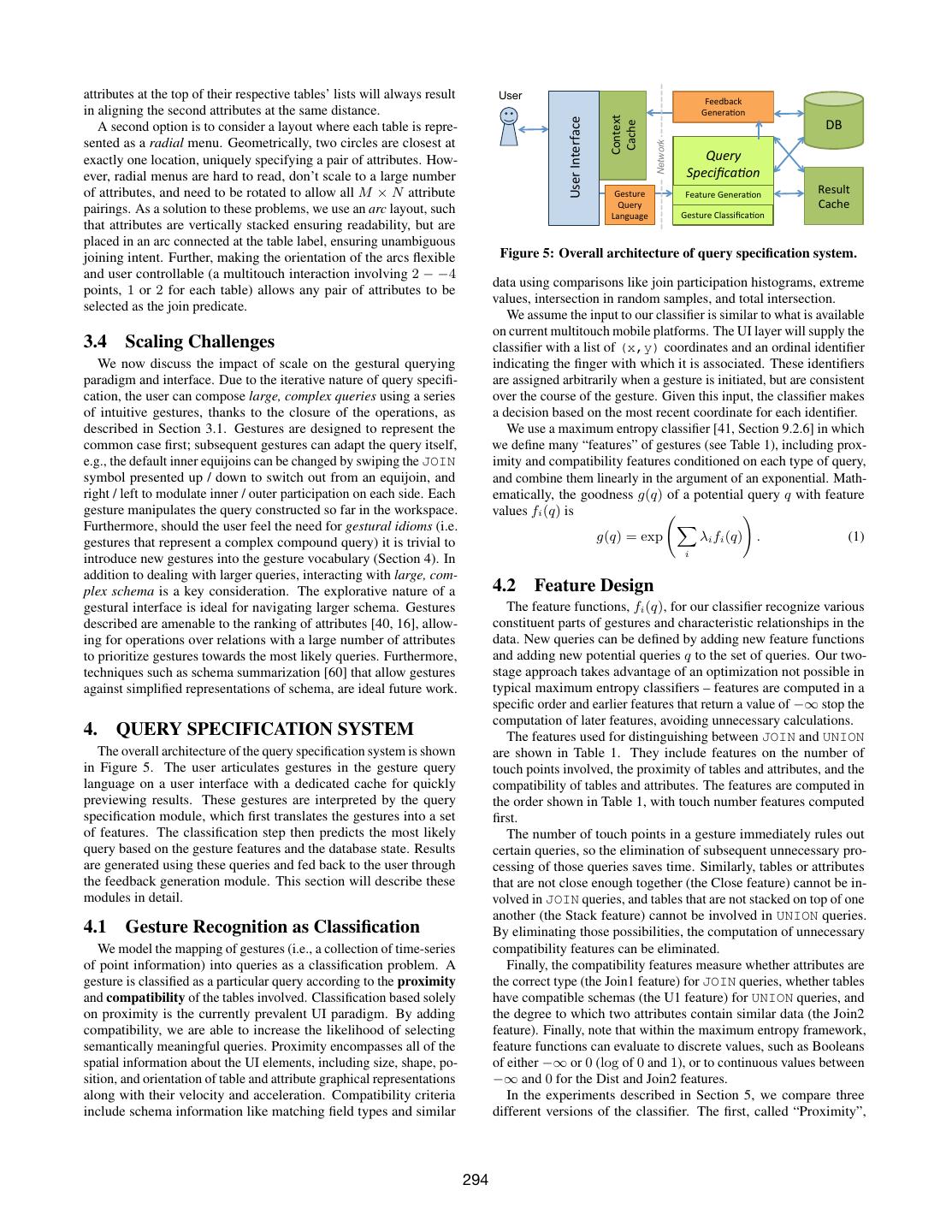

6 .attributes at the top of their respective tables’ lists will always result User Feedback in aligning the second attributes at the same distance. Genera,on Context User Interface DB Cache A second option is to consider a layout where each table is repre- sented as a radial menu. Geometrically, two circles are closest at Network exactly one location, uniquely specifying a pair of attributes. How- Query ever, radial menus are hard to read, don’t scale to a large number Specifica-on of attributes, and need to be rotated to allow all M × N attribute Gesture Feature G enera,on Result pairings. As a solution to these problems, we use an arc layout, such Query Cache Language Gesture Classifica,on that attributes are vertically stacked ensuring readability, but are placed in an arc connected at the table label, ensuring unambiguous joining intent. Further, making the orientation of the arcs flexible Figure 5: Overall architecture of query specification system. and user controllable (a multitouch interaction involving 2 − −4 data using comparisons like join participation histograms, extreme points, 1 or 2 for each table) allows any pair of attributes to be values, intersection in random samples, and total intersection. selected as the join predicate. We assume the input to our classifier is similar to what is available on current multitouch mobile platforms. The UI layer will supply the 3.4 Scaling Challenges classifier with a list of (x,y) coordinates and an ordinal identifier We now discuss the impact of scale on the gestural querying indicating the finger with which it is associated. These identifiers paradigm and interface. Due to the iterative nature of query specifi- are assigned arbitrarily when a gesture is initiated, but are consistent cation, the user can compose large, complex queries using a series over the course of the gesture. Given this input, the classifier makes of intuitive gestures, thanks to the closure of the operations, as a decision based on the most recent coordinate for each identifier. described in Section 3.1. Gestures are designed to represent the We use a maximum entropy classifier [41, Section 9.2.6] in which common case first; subsequent gestures can adapt the query itself, we define many “features” of gestures (see Table 1), including prox- e.g., the default inner equijoins can be changed by swiping the JOIN imity and compatibility features conditioned on each type of query, symbol presented up / down to switch out from an equijoin, and and combine them linearly in the argument of an exponential. Math- right / left to modulate inner / outer participation on each side. Each ematically, the goodness g(q) of a potential query q with feature gesture manipulates the query constructed so far in the workspace. values fi (q) is Furthermore, should the user feel the need for gestural idioms (i.e. gestures that represent a complex compound query) it is trivial to g(q) = exp λi fi (q) . (1) i introduce new gestures into the gesture vocabulary (Section 4). In addition to dealing with larger queries, interacting with large, com- plex schema is a key consideration. The explorative nature of a 4.2 Feature Design gestural interface is ideal for navigating larger schema. Gestures The feature functions, fi (q), for our classifier recognize various described are amenable to the ranking of attributes [40, 16], allow- constituent parts of gestures and characteristic relationships in the ing for operations over relations with a large number of attributes data. New queries can be defined by adding new feature functions to prioritize gestures towards the most likely queries. Furthermore, and adding new potential queries q to the set of queries. Our two- techniques such as schema summarization [60] that allow gestures stage approach takes advantage of an optimization not possible in against simplified representations of schema, are ideal future work. typical maximum entropy classifiers – features are computed in a specific order and earlier features that return a value of −∞ stop the computation of later features, avoiding unnecessary calculations. 4. QUERY SPECIFICATION SYSTEM The features used for distinguishing between JOIN and UNION The overall architecture of the query specification system is shown are shown in Table 1. They include features on the number of in Figure 5. The user articulates gestures in the gesture query touch points involved, the proximity of tables and attributes, and the language on a user interface with a dedicated cache for quickly compatibility of tables and attributes. The features are computed in previewing results. These gestures are interpreted by the query the order shown in Table 1, with touch number features computed specification module, which first translates the gestures into a set first. of features. The classification step then predicts the most likely The number of touch points in a gesture immediately rules out query based on the gesture features and the database state. Results certain queries, so the elimination of subsequent unnecessary pro- are generated using these queries and fed back to the user through cessing of those queries saves time. Similarly, tables or attributes the feedback generation module. This section will describe these that are not close enough together (the Close feature) cannot be in- modules in detail. volved in JOIN queries, and tables that are not stacked on top of one another (the Stack feature) cannot be involved in UNION queries. 4.1 Gesture Recognition as Classification By eliminating those possibilities, the computation of unnecessary We model the mapping of gestures (i.e., a collection of time-series compatibility features can be eliminated. of point information) into queries as a classification problem. A Finally, the compatibility features measure whether attributes are gesture is classified as a particular query according to the proximity the correct type (the Join1 feature) for JOIN queries, whether tables and compatibility of the tables involved. Classification based solely have compatible schemas (the U1 feature) for UNION queries, and on proximity is the currently prevalent UI paradigm. By adding the degree to which two attributes contain similar data (the Join2 compatibility, we are able to increase the likelihood of selecting feature). Finally, note that within the maximum entropy framework, semantically meaningful queries. Proximity encompasses all of the feature functions can evaluate to discrete values, such as Booleans spatial information about the UI elements, including size, shape, po- of either −∞ or 0 (log of 0 and 1), or to continuous values between sition, and orientation of table and attribute graphical representations −∞ and 0 for the Dist and Join2 features. along with their velocity and acceleration. Compatibility criteria In the experiments described in Section 5, we compare three include schema information like matching field types and similar different versions of the classifier. The first, called “Proximity”, 294

7 . of results, not too many and not too few. The data compatibility Table 1: Features used in maximum entropy classifier. feature, termed Join2, is designed to capture this intuition. Columns are the feature Name, whether it applies to Union To estimate the data similarity between two attributes, the number and/or Join queries (U/J), the Type of feature (Num: number of times that each value from one attribute appears in the other of tables, Prox: proximity, Comp: compatibility), a description is counted and a histogram is constructed from the counts for all of when it is “On”, the entities provided to it as input (1T: one of the values. This histogram is then converted to a multinomial table, 2T: two tables, 2A: two attributes), and its output type distribution and its probability is measured under a dirichlet distri- (Bool: {−∞, 0}, Cont: (−∞, 0]). bution. In addition, to avoid zero probabilities, we provide a small Laplace prior on the histogram bin counts, so that every bin has 0.01 of a count added to it. This has a large effect on bins with Name U/J Type On when In Out zero counts, but an insignificant effect on bins with non-zero counts. Tch1 — Num 1 simultaneous touch 1T Bool If the histogram bin values are hi , and the normalized counts are, Tch2a — Num 2 simultaneous touches 1T Bool xi = hihj , then the dirichlet likelihood with parameters αi is j Tch2b UJ Num 2 simultaneous touches 2T Bool Tch3 J Num 3 simultaneous touches 2T Bool Γ( i αi ) i −1 dir(x, α) = xα i (2) Tch4 J Num 4 simultaneous touches 2T Bool i Γ(α i) i Close UJ Prox Attrs. are close enough 2A Bool where Γ(x) is the gamma function. This dirichlet likelihood is Dist UJ Prox Negated distance 2A Cont scaled by a reasonable λi to make it comparable to the other good- Stack U Prox Tables are stacked 2T Bool nesses. In the current experiments, we define the α parameters and Join1 J Comp Attrs. are the same type 2A Bool the scaling exponent by hand. We define αi to be Join2 J Comp Data sim. btwn. attrs. 2A Cont 1 1≤i≤5 U1 U Comp Tables share schema 2T Bool 1 U2 U Comp Attr. types match, not ord. 2T Bool αi = 6 ≤ i ≤ 20 (3) i−4 0 otherwise In the future, these parameters could be learned from pairs of com- does not use any of the compatibility features. The second, called patible attributes, although for these experiments we did not have “Proximity + Schema” uses the proximity features and compatibil- enough pairs to perform such an estimation. ity features Join1, U1, and U2, but not Join2. The third version, All of the parameters of the classifier, λi , can be learned across called “Proximity + Schema + Data” uses all of the proximity and a collection of recorded training gestures to tune the quality of compatibility features. the classifier. For the preliminary experiments in Section 5, these parameters are instead set manually. Learning these tunings from data will allow future quality improvements. We plan to learn 4.3 Classification Example these parameters by treating all UI states in a gesture as training To illustrate how the classifier works, consider the example query instances with the query at which the user eventually arrives as the and gesture described in Section 1.1 with the table layout shown correct query, much in the same way that search engines learn spell in Figure 1. Before the user begins the gesture, there are no ac- checking [13] and search suggestions from search sessions. tive touches, so all of the TchX feature functions are −∞, making g(q) = 0 for all queries q and obviating the need for further pro- 4.5 Context Caching cessing. When the user touches the headers of the Artist and Album Note that some of the computations performed in evaluating the tables, the Tch2b feature function becomes 0 because there are two feature functions can be shared between different feature functions. simultaneous touches on two separate tables while the other TchX For example, the computation of distances between attributes is feature functions are still −∞. Thus g(q) = 0 for queries not necessary for the Stack, Dist, and Close features. Similarly, attribute involving two touches on two different tables, but processing pro- compatibilities are necessary for computing table compatibilities, so ceeds on the remaining queries to evaluate g(q). At the beginning computations would be repeated by the U1, U2, and Join1 features. of the gesture, all pairs of attributes are far away from each other In order to further speed up the system, these computations can be and the Close feature function is −∞, making g(q) = 0 for all memoized such that they are only computed at most once and only queries. Once the tables get close enough together, the Close feature if necessary, with the result cached for subsequent feature functions. becomes 0 and the Dist features are computed between the six pairs The speedup due to this caching is shown in Section 5.4. of attributes in the two tables. Because the tables are side-by-side, the Stacked feature is −∞, and only JOIN queries have non-zero goodness. After identifying compatible attribute pairs with the Join1 5. EXPERIMENTS AND EVALUATION and Join2 feature functions, the closest compatible pair of attributes We perform experiments both from the user perspective, evalu- having the highest goodness is shown as a preview. ating the usability of the system, and from a systems perspective, evaluating the performance of the system and sharing insights from 4.4 Implementation Details the impact of caching strategies employed in the gesture classifier. In these experiments we test an iPad implementation of the G ES - In addition to the proximity and schema-based compatibility fea- TURE Q UERY system. The system comprises a frontend written ture functions, our system uses data similarity as a feature to aid in Javascript and HTML on the iPad and a backend based on Tor- multi-attribute queries such as joins. This feature is based on a nado3 running on a Linux PC. We use publicly available datasets, probability distribution over histograms of join participation counts. Chinook (11 tables, 64 columns,15K tuples, 1MB)4 and TPC-H (9 The motivation for such a measure is an intuition that the user would 3 typically only issue queries that could usefully be displayed on our http://www.tornadoweb.org 4 interface, and thus each value should participate in a small number http://chinookdatabase.codeplex.com 295

8 . 70 tables, 76 columns, 86K tuples, 15MB)5 as the test databases6 . We performed three user studies under procedures approved by our 60 Console-‐based SQL institutional review board7 . 50 Visual Query Builder Predic'on (s) User Study Setup: We adopt a within-subjects experimental design 40 GestureQuery since the compared systems were already highly discernible (e.g., 30 C ONSOLE - BASED SQL vs. G ESTURE Q UERY) and catching-on effects were not applicable. 20 Each experiment was performed over 30 users from the student 10 body of the authors’ university, a sample size motivated by prior 0 research in user studies [37, 19, 23]. We report mean and stan- Preview Filter Sort Group by & Join Union dard errors for all metrics to demonstrate consistency across users, Aggregate and perform 1-tailed t-tests to establish statistical significance. All studies were carefully designed to avoid bias [37]. Users were re- Figure 6: Average completion time for six query types using cruited from the student body at the university and care was taken three different interfaces: Users completed tasks using G ES - TURE Q UERY quicker than the other two interfaces. Error bars to ensure an even distribution of proficiency by asking objective post-interview questions about their degree major and prior expe- show standard error of the mean. rience with databases; 15 of the 30 students were confirmed to be proficient at data-related tasks and the other 15 were classified as Statistical Significance: In order to determine whether these dif- naive users. For consistency each user was given an identical tuto- ferences were statistically significant, we performed 1-tailed t-tests rial (read off a written script) on the use of each interface and had a on the results. The actions, null hypotheses and p-values are shown chance to practice each task before their actions were recorded. In in Appendix A. We can clearly see that users construct queries order to avoid bias from learning effects on the user, the order of significantly more quickly using G ESTURE Q UERY than the other the tasks was randomized, as was the order of the systems within two systems, with the significance level under 0.01. each task. Fatigue effects were avoided by restricting the length of User Survey: Results of the subjective survey questions are shown each experiment and scheduling breaks into the study. Carryover in Table 2. Each user cast a single vote for each question, and users effects were avoided by restricting the number of similar tasks, and who selected multiple systems for an answer had their votes divided by counterbalancing (i.e., varying the order of both the systems between those systems. As we can see from the table, most users evaluated and the individual tasks for each user). find G ESTURE Q UERY easier to use by a large margin except for the Systems and Studies: The interfaces compared were a C ONSOLE - JOIN action, where V ISUAL Q UERY B UILDER received 15 votes BASED SQL (SQLite), the V ISUAL Q UERY B UILDER of Active compared to G ESTURE Q UERY’s 12 for usability. In addition, users Query Builder8 which represents a state-of-the-art keyboard-and- think that G ESTURE Q UERY is easier to learn than console-based mouse query interface, and our system, G ESTURE Q UERY. We SQL and V ISUAL Q UERY B UILDER except for the JOIN operation, performed three studies, Completion Time, Discoverability, and where G ESTURE Q UERY and V ISUAL Q UERY B UILDER received 14 Anticipation, each with their own set of objective and subjective and 15 votes respectively. In contrast, for AGGREGATE, a similarly metrics, as described below. complex operation, G ESTURE Q UERY was preferred by most for both usability and learnability. 5.1 Completion Time Drilling down further into the survey results uncovers more in- sights for the JOIN gesture – in terms of usability, for proficient We first compare the time it takes users to specify queries on users, 9 out of 15 preferred V ISUAL Q UERY B UILDER and 4 pre- three different interfaces. Each student was given six SQL queries ferred G ESTURE Q UERY, in contrast to naive users, where G ES - to perform on each interface, for example “A LBUM JOIN A RTIST TURE Q UERY received 8 votes and V ISUAL Q UERY B UILDER re- ON A LBUM .A RTIST I D = A RTIST.A RTIST I D”. ceived 6. In terms of learnability, for proficient users, 9.5 voted for The objective metrics of performance in this study are completion V ISUAL Q UERY B UILDER and 5.5 voted for G ESTURE Q UERY (.5 time, the total time it takes, on average, to complete each task on votes for ties), in contrast to naive users, where G ESTURE Q UERY re- each interface, and interactivity for GestureQuery, the fraction ceived 8.5 votes and V ISUAL Q UERY B UILDER received 5.5. Revis- of touches that are classified within a predefined interactive latency iting individual subjects’ interactions reveals that several proficient threshold. The subjects also completed a survey questionnaire af- users prefer to perform JOINs by combining two attributes (e.g. by ter finishing the study, providing details on their majors of study, pinching two attributes together), while naive users have a prefer- relevant coursework and prior experience working with databases. ence of gesturing compositions at the relation level (by pinching two Subjects were also surveyed on subjective metrics; usability (how relations together). Thus, the JOIN gesture in GestureQuery easy it was to use each system) and learnability (how easy it was seems to work better with naive users. This again motivates our to learn to each system). ability to customize the gesture vocabulary at the classifier level, Figure 6 shows the average completion time for each action in discussed in Section 4. three different systems. G ESTURE Q UERY’s average completion times are lower and have less variance than both C ONSOLE - BASED System Performance: Table 3 shows the interactivity results, the SQL and V ISUAL Q UERY B UILDER. fraction of gestural inputs (movement of multitouch points on the device) that are classified within 33 ms, a threshold chosen based on 5 the sampling rate of the multitouch sensor, which runs at 30 Hz. No- http://www.tpc.org/tpch 6 tice that the interactivity results demonstrate a highly fluid interface. It should be noted that the goal of our experiments is to compare the feasibility of gestural query specification against existing methods; larger, more complex datasets There are some gestural inputs that are slower than the interactive can dealt with using existing summarization methods [50, 60] threshold. This is caused by the initial loading of schema informa- 7 OSU IRB #2013B0084 tion into the frontend’s Context Cache, which can be improved by 8 http://activequerybuilder.com prepopulation of relevant information, an area for future work. 296

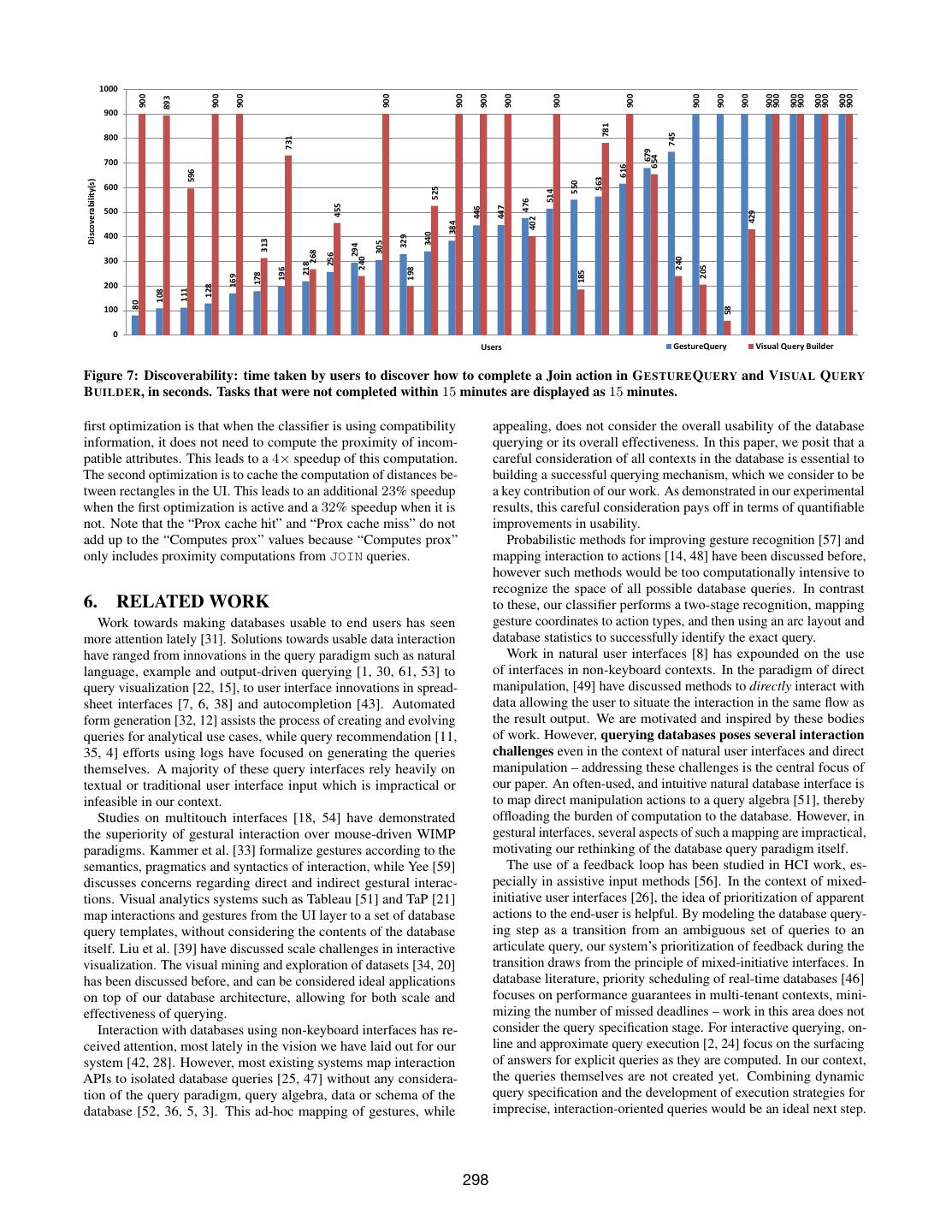

9 . 1.0 Table 2: Number of users selecting, based on a written sur- Proximity 0.9 vey after the tasks, each system as easiest to use (usability) and 0.8 Proximity + Schema Proximity + Schema + Data learn (learnability) for each query type. Systems are C ONSOLE - 0.7 Anticipation BASED SQL, V ISUAL Q UERY B UILDER (VQB), and G ES - 0.6 TURE Q UERY (GQ – our system). For users claiming equal 0.5 0.4 preference for multiple systems, their votes were split equally 0.3 amongst the systems. 0.2 0.1 Survey Action Console VQB GQ 0.0 Join1A Join1R Join2A Join2R Union1 Union2 Usability PREVIEW 1 5 24 Usability FILTER 5 1.5 23.5 Figure 8: Average anticipation scores for six queries performed Usability SORT 0 2 28 by 30 subjects with standard errors. 1.0 represents correct clas- Usability AGGREGATE 5 4 21 sification at the start of the gesture articulation, and 0 repre- Usability JOIN 3 15 12 sents an incorrect classification even after completion of the ges- Usability UNION 2 0 28 ture. Information from the schema and data in the database al- lows our classifier to better predict the intended database query Learnability PREVIEW 1.66 2.16 26.16 for ambiguous gestures. Learnability FILTER 5 4.5 20.5 Learnability SORT 1.33 1.33 27.33 5.3 Anticipation Learnability AGGREGATE 2 10.5 17.5 As our third study, we compare the ability of three different Learnability JOIN 1 15 14 classifiers to anticipate a user’s intent. Thirty of the subjects who Learnability UNION 3 0 27 participated in the first two studies were recruited to perform two JOIN queries and two UNION queries using G ESTURE Q UERY. The Joins were performed in two different ways, once by re-arranging Table 3: Interactivity: percentage of touch classifications that the attributes and then dragging the two tables together, and once by take less than 33 ms. adjusting the attribute arc before dragging the two tables together, giving a total of 180 gestures, demonstrating the versatility of the PREVIEW FILTER SORT AGGREGATE JOIN UNION classifier over different gestures. We compared three different ver- 95.1 98.8 97.8 99.6 98.4 98.0 sions of our classifier on recordings of these gestures. The first uses only proximity of the UI elements to predict the desired query. The second uses proximity and schema compatibility of attributes and the third uses proximity, schema compatibility, and data com- 5.2 Discoverability patibility, as described in Section 4. Gestures were recorded until Another aspect of evaluating ease of use of interaction is to assess a classifier was able to recognize the correct query. Results are how intuitive or discoverable the interactions of a system are. Thus, measured as anticipation, the fraction of the gesture articulation our second study compares V ISUAL Q UERY B UILDER to G ES - time remaining after a classifier first recognizes the correct query. TURE Q UERY in terms of the discoverability of the JOIN action, This number is 1.0 for gestures that are recognized correctly the i.e., whether an untrained user is able to intuit how to successfully moment the articulation process begins, and 0 for gestures that are perform gestural interaction from the interface and its usability affor- never recognized. dances [45]. We recruited thirty students for this study, distributed Results of the anticipation study are presented in Figure 8. Using across both proficient and naive users similar to the Completion both proximity and schema compatibility features is better than just Time study discussed in the previous section. It should be noted proximity in the all JOIN queries. Considering proximity, schema that care was taken to avoid training effects – subjects who had and data compatibility together helps improve anticipation scores for previously performed the Completion Time study were not allowed all but the first JOIN, for which data compatibility in fact negatively to perform the Discoverability study. Each subject was provided impacts anticipation scores. Schema and data compatibility are not a task described in natural language, and asked to figure out and helpful for the UNION queries because the subjects only perform complete the query task on each system within 15 minutes. The them on tables that are already fully schema compatible. order of the systems was randomized across subjects to avoid biases Table 4: Cache performance of three versions of classifier on from learning effects. The task involved a PREVIEW, FILTER, and 180 recorded gestures. “Skips prox” and “Prox cache hit” both JOIN, described to the subjects as answering the question, “What allow computation to be avoided. are the titles of the albums created by the artist ‘Black Sabbath’?” Before the experiment, we provided a tutorial to each subject on Prox Schema Schema+Data performing the PREVIEW and FILTER actions in both systems, but left it to the user to discover the JOIN action. Computes prox 333,315 81,583 81,583 Figure 7 shares the results of the discoverability experiment. Skips prox 0 251,732 251,732 Twenty-three of the thirty subjects successfully figured out how Prox cache miss 299,846 74,951 74,951 to complete the task using G ESTURE Q UERY while only seventeen Prox cache hit 143,750 21,944 21,944 students were able to complete the task using V ISUAL Q UERY B UILDER. In addition, for those who did complete the task, the average completion time using V ISUAL Q UERY B UILDER was more 5.4 Optimization Performance than 19% of that for G ESTURE Q UERY. If we consider a time-out In addition to user-facing numbers, we study the speedup of using completion time for the students who don’t complete the task as context caching, described in Section 4.5, as shown in Table 4 for 15 min, the percentage is increased to 31%. the three versions of the classifier compared in our experiments. The 297

10 . 1000 900 900 900 900 900 900 900 900 900 900 900 900 900 900 900 900 900 900 900 900 893 900 781 745 800 731 679 654 700 616 596 563 Discoverability(s) 550 600 525 514 476 455 447 446 500 429 402 384 340 400 329 313 305 294 268 256 240 240 300 218 205 198 196 185 178 169 200 128 111 108 80 58 100 0 Users GestureQuery Visual Query Builder Figure 7: Discoverability: time taken by users to discover how to complete a Join action in G ESTURE Q UERY and V ISUAL Q UERY B UILDER, in seconds. Tasks that were not completed within 15 minutes are displayed as 15 minutes. first optimization is that when the classifier is using compatibility appealing, does not consider the overall usability of the database information, it does not need to compute the proximity of incom- querying or its overall effectiveness. In this paper, we posit that a patible attributes. This leads to a 4× speedup of this computation. careful consideration of all contexts in the database is essential to The second optimization is to cache the computation of distances be- building a successful querying mechanism, which we consider to be tween rectangles in the UI. This leads to an additional 23% speedup a key contribution of our work. As demonstrated in our experimental when the first optimization is active and a 32% speedup when it is results, this careful consideration pays off in terms of quantifiable not. Note that the “Prox cache hit” and “Prox cache miss” do not improvements in usability. add up to the “Computes prox” values because “Computes prox” Probabilistic methods for improving gesture recognition [57] and only includes proximity computations from JOIN queries. mapping interaction to actions [14, 48] have been discussed before, however such methods would be too computationally intensive to recognize the space of all possible database queries. In contrast 6. RELATED WORK to these, our classifier performs a two-stage recognition, mapping Work towards making databases usable to end users has seen gesture coordinates to action types, and then using an arc layout and more attention lately [31]. Solutions towards usable data interaction database statistics to successfully identify the exact query. have ranged from innovations in the query paradigm such as natural Work in natural user interfaces [8] has expounded on the use language, example and output-driven querying [1, 30, 61, 53] to of interfaces in non-keyboard contexts. In the paradigm of direct query visualization [22, 15], to user interface innovations in spread- manipulation, [49] have discussed methods to directly interact with sheet interfaces [7, 6, 38] and autocompletion [43]. Automated data allowing the user to situate the interaction in the same flow as form generation [32, 12] assists the process of creating and evolving the result output. We are motivated and inspired by these bodies queries for analytical use cases, while query recommendation [11, of work. However, querying databases poses several interaction 35, 4] efforts using logs have focused on generating the queries challenges even in the context of natural user interfaces and direct themselves. A majority of these query interfaces rely heavily on manipulation – addressing these challenges is the central focus of textual or traditional user interface input which is impractical or our paper. An often-used, and intuitive natural database interface is infeasible in our context. to map direct manipulation actions to a query algebra [51], thereby Studies on multitouch interfaces [18, 54] have demonstrated offloading the burden of computation to the database. However, in the superiority of gestural interaction over mouse-driven WIMP gestural interfaces, several aspects of such a mapping are impractical, paradigms. Kammer et al. [33] formalize gestures according to the motivating our rethinking of the database query paradigm itself. semantics, pragmatics and syntactics of interaction, while Yee [59] The use of a feedback loop has been studied in HCI work, es- discusses concerns regarding direct and indirect gestural interac- pecially in assistive input methods [56]. In the context of mixed- tions. Visual analytics systems such as Tableau [51] and TaP [21] initiative user interfaces [26], the idea of prioritization of apparent map interactions and gestures from the UI layer to a set of database actions to the end-user is helpful. By modeling the database query- query templates, without considering the contents of the database ing step as a transition from an ambiguous set of queries to an itself. Liu et al. [39] have discussed scale challenges in interactive articulate query, our system’s prioritization of feedback during the visualization. The visual mining and exploration of datasets [34, 20] transition draws from the principle of mixed-initiative interfaces. In has been discussed before, and can be considered ideal applications database literature, priority scheduling of real-time databases [46] on top of our database architecture, allowing for both scale and focuses on performance guarantees in multi-tenant contexts, mini- effectiveness of querying. mizing the number of missed deadlines – work in this area does not Interaction with databases using non-keyboard interfaces has re- consider the query specification stage. For interactive querying, on- ceived attention, most lately in the vision we have laid out for our line and approximate query execution [2, 24] focus on the surfacing system [42, 28]. However, most existing systems map interaction of answers for explicit queries as they are computed. In our context, APIs to isolated database queries [25, 47] without any considera- the queries themselves are not created yet. Combining dynamic tion of the query paradigm, query algebra, data or schema of the query specification and the development of execution strategies for database [52, 36, 5, 3]. This ad-hoc mapping of gestures, while imprecise, interaction-oriented queries would be an ideal next step. 298

11 .7. CONCLUSION AND FUTURE WORK [2] S. Acharya, P. B. Gibbons, and V. Poosala. Aqua: A Fast Interacting with databases using gestures is challenging due to Decision Support Systems Using Approximate Query the large number of possible database queries. In this paper, we Answers. VLDB, 1999. present a novel query specification architecture that allows users to [3] S. Agrawal, S. Chaudhuri, and G. Das. DBXplorer: A System query databases with gestures. We present a novel, well-designed for Keyword-Based Search over Relational Databases. ICDE, gestural query language to express queries. We build a maximum- 2002. entropy classifier-based query recognition system that uses not only [4] J. Akbarnejad, G. Chatzopoulou, M. Eirinaki, S. Koshy, multitouch coordinates, but also database state such as schema and S. Mittal, D. On, N. Polyzotis, and J. S. V. Varman. SQL data statistics to correctly map multitouch gestures to relational QueRIE Recommendations. VLDB, 2010. database queries. When compared against conventional coordinate- [5] S. Amer-Yahia, L. V. Lakshmanan, and S. Pandit. FleXPath: based gesture mapping, our classifier is more accurate and predictive Flexible Structure and Full-Text Querying for XML. at identifying intended database queries, and performs well within SIGMOD, 2004. interactive latency constraints. [6] E. Bakke and E. Benson. The Schema-Independent Database We evaluate the efficiency, usability and learnability of our system UI. CIDR, 2011. to users at multiple levels of proficiency in querying databases and [7] E. Bakke, D. Karger, and R. Miller. A Spreadsheet-Based our gestural interface. Our detailed user studies demonstrate that our User Interface for Managing Plural Relationships in G ESTURE Q UERY system significantly outperforms current methods Structured Data. CHI, 2011. on multiple usability metrics for all classes of users. [8] A. Cˆamara. Natural User Interfaces. INTERACT, 2011. Going forward, there are multiple challenges that need to be [9] Canalys. Worldwide Smartphone and Client PC Shipment addressed. Some areas of expansion are at the implementation level, Estimates. 2012. such as dealing with textual input in a gesture-oriented interface, [10] S. K. Card, J. D. Mackinlay, and B. Schneiderman. Readings which has been well-studied in prior user interface and accessibility in Information Visualization: Using Vision to Think literature [56]. Gestural interaction with databases is currently a (Interactive Technologies). Morgan Kaufmann, 1999. completely unexplored area of research, and hence is ripe with a wide variety of possible extensions and follow-up problems. [11] G. Chatzopoulou, M. Eirinaki, and N. Polyzotis. Query As discussed in Section 3.4, scaling the Gestural Query process to Recommendations for Interactive Database Exploration. more complex queries and to larger and more complex datasets is an SSDBM, 2009. immediate next step. For larger datasets, the (possibly approximate) [12] K. Chen, H. Chen, N. Conway, J. M. Hellerstein, and T. S. execution of queries within interactive response times requires a fun- Parikh. Usher: Improving Data Quality with Dynamic Forms. damental rethinking of the query execution infrastructure. Further, TKDE, 2011. the direct manipulation of complex schema through summarized [13] S. Cucerzan and E. Brill. Spelling correction as an iterative representations would require extensions to the vocabulary of gestu- process that exploits the collective knowledge of web users. ral operations. A detailed study focusing on scaling challenges is EMNLP, 2004. ideal follow-up work. [14] S. Damaraju and A. Kerne. Multitouch Gesture Learning and Another area of study is the feedback generation used in our Recognition System. Tabletops and Interactive Surfaces, system. Intuitively, the feedback presented to the user is meant to aid 2008. them in quickly disambiguating the space of possible query intents, [15] J. Danaparamita and W. Gatterbauer. QueryViz: Helping while maximizing the entropy of the query results. The interactive Users Understand SQL Queries and their Patterns. EDBT, summarization of results [50] is significant for constrained display 2011. contexts such as mobile devices, and can be leveraged by our system. [16] G. Das, V. Hristidis, N. Kapoor, and S. Sudarshan. Ordering Further, generating and presenting such feedback within interactive the Attributes of Query Results. SIGMOD, 2006. latencies is a unique systems challenge, which can be solved using a [17] G. M. Draper, Y. Livnat, and R. F. Riesenfeld. A Survey of combination of information visualization methods [10], multi-layer Radial Methods for Information Visualization. IEEE VCG, caching techniques and online query execution strategies inspired 2009. by Hellerstein et al. [24]. [18] S. M. Drucker, D. Fisher, R. Sadana, J. Herron, et al. A final area of interest is that of using the gesture information TouchViz: A Case Study Comparing Two Interfaces for Data from existing interaction logs to tune the parameters of our classifier, Analytics on Tablets. CHI, 2013. which can then be evaluated to measure the benefits (completion time [19] L. Faulkner. Beyond the five-user assumption: Benefits of and anticipation) and generality (across both users and queries) of increased sample sizes in usability testing. Behavior Research user training, allowing us to investigate areas such as personalization Methods, Instruments, & Computers, 2003. of gestures and tuning of the gesture prediction itself. [20] M. C. Ferreira de Oliveira and H. Levkowitz. From Visual Data Exploration to Visual Data Mining: A Survey. IEEE 8. ACKNOWLEDGEMENTS VCG, 2003. We would like to thank the paper reviewers and Azza Abouzeid [21] S. Fl¨oring and T. Hesselmann. TaP: Towards Visual Analytics for their insights and feedback, which have helped significantly on Interactive Surfaces. CoVIS, 2010. improve the quality of this paper. [22] W. Gatterbauer. Databases will Visualize Queries too. VLDB, 2011. [23] A. Griffin and J. R. Hauser. The Voice of the Customer. 9. REFERENCES Marketing science, 1993. [1] A. Abouzied, J. Hellerstein, and A. Silberschatz. DataPlay: [24] J. M. Hellerstein, M. J. Franklin, S. Chandrasekaran, Interactive Tweaking and Example-driven Correction of A. Deshpande, et al. Adaptive Query Processing: Technology Graphical Database Queries. UIST, 2012. in Evolution. IEEE Data Engineering Bulletin, 2000. 299

12 .[25] S. Hirte, A. Seifert, S. Baumann, D. Klan, and K. Sattler. [53] Q. T. Tran, C.-Y. Chan, and S. Parthasarathy. Query by Output. Data3 – A Kinect Interface for OLAP using Complex Event SIGMOD, 2009. Processing. ICDE, 2012. [54] B. Ullmer and H. Ishii. Emerging Frameworks for Tangible [26] E. Horvitz. Principles of Mixed-Initiative User Interfaces. User Interfaces. IBM Sys. Journal, 2000. CHI, 1999. [55] M. R. Vieira, H. L. Razente, M. C. N. Barioni, [27] E. L. Hutchins, J. D. Hollan, and D. A. Norman. Direct M. Hadjieleftheriou, D. Srivastava, C. Traina, and V. J. Manipulation Interfaces. Human–Computer Interaction, 1985. Tsotras. On Query Result Diversification. ICDE, 2011. [28] S. Idreos and E. Liarou. dbTouch: Analytics at your [56] D. J. Ward, A. F. Blackwell, and D. J. MacKay. Dasher – a Fingertips. CIDR, 2013. Data Entry Interface Using Continuous Gestures and [29] International Data Corporation. Worldwide Quarterly Tablet Language Models. UIST, 2000. Tracker. 2013. [57] D. Weir, S. Rogers, R. Murray-Smith, and M. L¨ochtefeld. A [30] Y. Ishikawa, R. Subramanya, and C. Faloutsos. MindReader: User-Specific Machine Learning Approach for Improving Querying databases through multiple examples. VLDB, 1998. Touch Accuracy on Mobile Devices. UIST, 2012. [31] H. Jagadish, A. Chapman, A. Elkiss, M. Jayapandian, Y. Li, [58] G. J. Wills. NicheWorks – Interactive Visualization of Very A. Nandi, and C. Yu. Making Database Systems Usable. Large Graphs. Computational and Graphical Statistics, 1999. SIGMOD, 2007. [59] W. Yee. Potential Limitations of Multi-touch Gesture [32] M. Jayapandian and H. Jagadish. Automating the Design and Vocabulary: Differentiation, Adoption, Fatigue. Construction of Query Forms. TKDE, 2009. Human-Computer Interaction: Novel Interaction Methods and [33] D. Kammer, J. Wojdziak, M. Keck, R. Groh, and S. Taranko. Techniques, 2009. Towards a Formalization of Multi-touch Gestures. ITS, 2010. [60] C. Yu and H. Jagadish. Schema Summarization. VLDB, 2006. [34] D. A. Keim. Visual Exploration of Large Data Sets. CACM, [61] M. Zloof. Query by Example. NCCE, 1975. 2001. [35] N. Khoussainova, Y. Kwon, M. Balazinska, and D. Suciu. APPENDIX SnipSuggest: Context-Aware Autocompletion for SQL. anticipationc represents the anticipation time of the console-based VLDB, 2010. SQL, anticipationv represents the anticipation time of the visual [36] B. Kimelfeld and Y. Sagiv. Finding and Approximating Top-k query builder and anticipationg represents the anticipation time Answers in Keyword Proximity Search. PODS, 2006. of our system. [37] J. Lazar, J. H. Feng, and H. Hochheiser. Research Methods in Human-Computer Interaction. Wiley. com, 2010. [38] B. Liu and H. Jagadish. A Spreadsheet Algebra for a Direct A. T-TEST RESULT Data Manipulation Query Interface. ICDE, 2009. [39] Z. Liu, B. Jiang, and J. Heer. imMens: Real-time Visual Action Null hypothesis P-value Querying of Big Data. EuroVis, 2013. Preview anticipationc ≤ anticipationg 1.93E-12 [40] M. Miah, G. Das, V. Hristidis, and H. Mannila. Standing Out Preview anticipationv ≤ anticipationg 2.20E-16 in a Crowd: Selecting Attributes for Maximum Visibility. Filter anticipationc ≤ anticipationg 1.14E-12 ICDE, 2008. Filter anticipationv ≤ anticipationg 6.79E-09 [41] K. P. Murphy. Machine Learning: A Probabilistic Perspective Sort anticipationc ≤ anticipationg 4.50E-10 (Adaptive Computation and Machine Learning series). MIT Sort anticipationv ≤ anticipationg 1.29E-05 Press, 2012. Group by & anticipationc ≤ anticipationg 1.66E-09 [42] A. Nandi. Querying Without Keyboards. CIDR, 2013. Aggregate [43] A. Nandi and H. Jagadish. Assisted Querying using Group by & anticipationv ≤ anticipationg 1.26E-05 Instant-response Interfaces. SIGMOD, 2007. Aggregate [44] A. Nandi and H. Jagadish. Guided Interaction: Rethinking the Join anticipationc ≤ anticipationg 1.54E-12 Query-Result Paradigm. VLDB, 2011. Join anticipationv ≤ anticipationg 0.007703 Union anticipationc ≤ anticipationg 2.76E-10 [45] D. A. Norman. Affordance, Conventions, and Design. Union anticipationv ≤ anticipationg 8.90E-06 Interactions, 1999. [46] H. Pang, M. J. Carey, and M. Livny. Multiclass Query Scheduling in Real-Time Database Systems. TKDE, 1995. [47] S. Patney et al. SQL Server Kinection. PASS, 2011. [48] J. Schwarz, J. Mankoff, and S. Hudson. Monte Carlo Methods for Managing Interactive State, Action and Feedback Under Uncertainty. UIST, 2011. [49] B. Shneiderman, C. Williamson, and C. Ahlberg. Dynamic Queries: Database Searching by Direct Manipulation. CHI, 1992. [50] M. Singh, A. Nandi, and H. Jagadish. Skimmer: Rapid Scrolling of Relational Query Results. SIGMOD, 2012. [51] C. Stolte. Visual Interfaces to Data. SIGMOD, 2010. [52] A. Termehchy and M. Winslett. Using Structural Information in XML Keyword Search Effectively. TODS, 2011. 300