- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Graphical Database Queries

展开查看详情

1 . DataPlay: Interactive Tweaking and Example-driven Correction of Graphical Database Queries Azza Abouzied Joseph M. Hellerstein Avi Silberschatz Yale University Univ. of California, Berkeley Yale University azza@cs.yale.edu hellerstein@cs.berkeley.edu avi@cs.yale.edu ABSTRACT queries. Of all query tasks, users find non-trivial quan- Writing complex queries in SQL is a challenge for users. tification to be most difficult [20, 19]. Contemporary Prior work has developed several techniques to ease query query specification paradigms, such as query-by-example, specification but none of these techniques are applicable to a visualization-driven querying and faceted search offer help particularly difficult class of queries: quantified queries. Our with specifying simple query blocks, but they offer very hypothesis is that users prefer to specify quantified queries in- little assistance for precisely those queries that are most teractively by trial-and-error. We identify two impediments difficult to specify — quantified queries. For those queries, to this form of interactive trial-and-error query specification users are stuck with the powerful but difficult database query in SQL: (i) changing quantifiers often requires global syntac- language: SQL. tical query restructuring, and (ii) the absence of non-answers Quantified queries evaluate constraints over sets of tuples from SQL’s results makes verifying query correctness diffi- rather than individual tuples, to determine whether a set as cult. We remedy these issues with DataPlay, a query tool a whole satisfies the query. Inherent in these queries are (i) with an underlying graphical query language, a unique data the grouping of tuples into sets, and (ii) the binding of con- model and a graphical interface. DataPlay provides two in- straints with either existential or universal quantifiers. Exis- teraction features that support trial-and-error query specifi- tential quantifiers ensure that some tuple in the set satisfies the cation. First, DataPlay allows users to directly manipulate constraint, while universal quantifiers ensure that all tuples in a graphical query by changing quantifiers and modifying de- the set satisfy the constraint. pendencies between constraints. Users receive real-time feed- back in the form of updated answers and non-answers. Sec- People engage in casual quantified-query specification with ond, DataPlay can auto-correct a user’s query, based on user one another on a regular basis. For example, when buying feedback about which tuples to keep or drop from the answers flowers, we might request a bouquet of ‘some red and white and non-answers. We evaluated the effectiveness of each in- roses’. Despite this request being somewhat underspecified, teraction feature with a user study and we found that direct the florist will still try putting together a bouquet. He may query manipulation is more effective than auto-correction for add some red and white roses and then add a few lilies. If we simple queries but auto-correction is more effective than di- wanted only roses, we clarify the misunderstanding and say rect query manipulation for more complex queries. ‘only roses’. If we wanted only red or white roses, we might allow the lilies but object to pink roses. Such casual inter- Author Keywords actions suggest that we are accustomed to specifying quan- Quantification; Query Specification; Query Correction; tified queries by trial-and-error, where we adjust our initial Semantic fine-tuning query specification by modifying quantifiers — only roses — or modifying dependencies between constraints — only red ACM Classification Keywords or white roses but no other rose colors1 . H.5.2 Information interfaces and presentation: User Inter- What makes SQL — the de facto query language for faces - Graphical user interfaces. quantified querying — challenging is that it discourages this trial-and-error approach to querying. Effective trial-and-error General Terms query specification depends on (i) the facility to incremen- Design, Human Factors, Languages tally refine an incorrect query through small tweaks and (ii) the ability to understand the effect of these tweaks. SQL, INTRODUCTION however, does not facilitate incremental query refinement Despite decades of work on language and interface design, because it lacks syntax locality: semantically small tweaks, many database users find it difficult to specify complex such as changing a quantifier from existential to universal, usually result in large changes in the structure and syntax of a SQL query. Moreover, SQL does not present the complete effects of a query. While SQL presents answers or tuples that Permission to make digital or hard copies of all or part of this work for satisfy a query, it does not present non-answers or tuples that personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies do not satisfy the query. Non-answers and answers together bear this notice and the full citation on the first page. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific 1 permission and/or a fee. The dependency here is between a constraint on flower type and a UIST’12, October 7–10, 2012, Cambridge, Massachusetts, USA. constraint on flower color. Copyright 2012 ACM 978-1-4503-1580-7/12/10...$15.00.

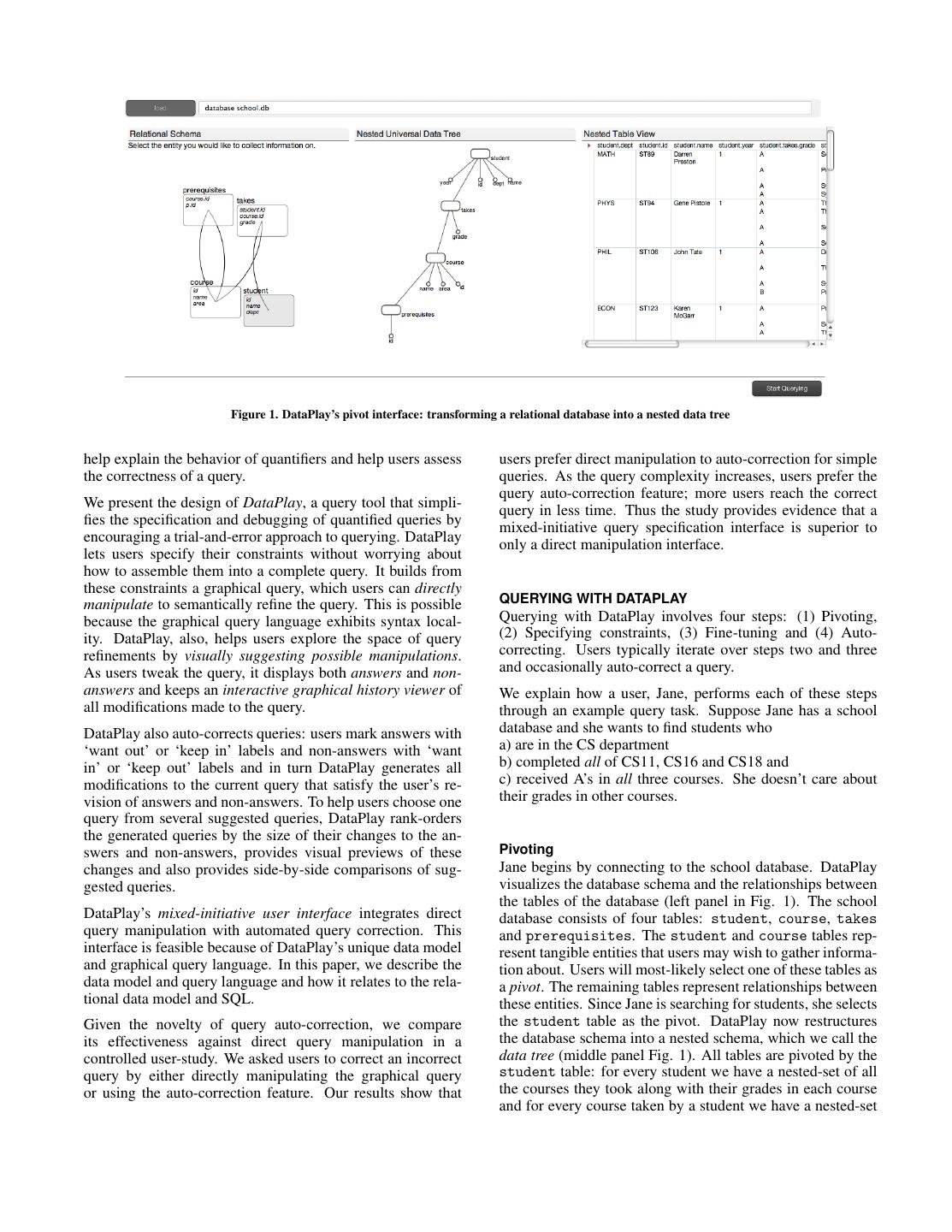

2 . Figure 1. DataPlay’s pivot interface: transforming a relational database into a nested data tree help explain the behavior of quantifiers and help users assess users prefer direct manipulation to auto-correction for simple the correctness of a query. queries. As the query complexity increases, users prefer the query auto-correction feature; more users reach the correct We present the design of DataPlay, a query tool that simpli- query in less time. Thus the study provides evidence that a fies the specification and debugging of quantified queries by mixed-initiative query specification interface is superior to encouraging a trial-and-error approach to querying. DataPlay only a direct manipulation interface. lets users specify their constraints without worrying about how to assemble them into a complete query. It builds from these constraints a graphical query, which users can directly manipulate to semantically refine the query. This is possible QUERYING WITH DATAPLAY because the graphical query language exhibits syntax local- Querying with DataPlay involves four steps: (1) Pivoting, ity. DataPlay, also, helps users explore the space of query (2) Specifying constraints, (3) Fine-tuning and (4) Auto- refinements by visually suggesting possible manipulations. correcting. Users typically iterate over steps two and three As users tweak the query, it displays both answers and non- and occasionally auto-correct a query. answers and keeps an interactive graphical history viewer of We explain how a user, Jane, performs each of these steps all modifications made to the query. through an example query task. Suppose Jane has a school DataPlay also auto-corrects queries: users mark answers with database and she wants to find students who ‘want out’ or ‘keep in’ labels and non-answers with ‘want a) are in the CS department in’ or ‘keep out’ labels and in turn DataPlay generates all b) completed all of CS11, CS16 and CS18 and modifications to the current query that satisfy the user’s re- c) received A’s in all three courses. She doesn’t care about vision of answers and non-answers. To help users choose one their grades in other courses. query from several suggested queries, DataPlay rank-orders the generated queries by the size of their changes to the an- swers and non-answers, provides visual previews of these Pivoting changes and also provides side-by-side comparisons of sug- Jane begins by connecting to the school database. DataPlay gested queries. visualizes the database schema and the relationships between the tables of the database (left panel in Fig. 1). The school DataPlay’s mixed-initiative user interface integrates direct database consists of four tables: student, course, takes query manipulation with automated query correction. This and prerequisites. The student and course tables rep- interface is feasible because of DataPlay’s unique data model resent tangible entities that users may wish to gather informa- and graphical query language. In this paper, we describe the tion about. Users will most-likely select one of these tables as data model and query language and how it relates to the rela- a pivot. The remaining tables represent relationships between tional data model and SQL. these entities. Since Jane is searching for students, she selects Given the novelty of query auto-correction, we compare the student table as the pivot. DataPlay now restructures its effectiveness against direct query manipulation in a the database schema into a nested schema, which we call the controlled user-study. We asked users to correct an incorrect data tree (middle panel Fig. 1). All tables are pivoted by the query by either directly manipulating the graphical query student table: for every student we have a nested-set of all or using the auto-correction feature. Our results show that the courses they took along with their grades in each course and for every course taken by a student we have a nested-set

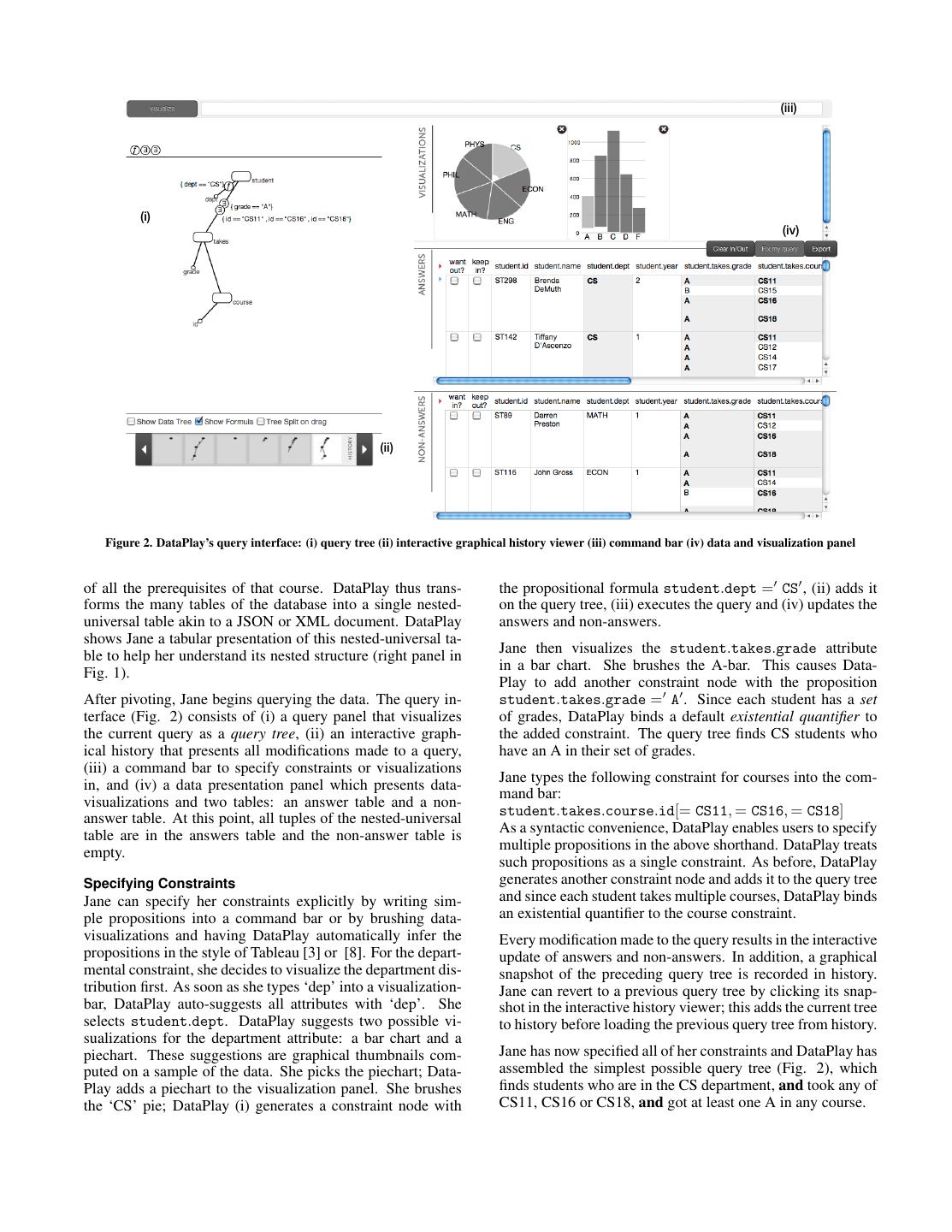

3 . (iii) (i) (iv) (ii) Figure 2. DataPlay’s query interface: (i) query tree (ii) interactive graphical history viewer (iii) command bar (iv) data and visualization panel of all the prerequisites of that course. DataPlay thus trans- the propositional formula student.dept = CS , (ii) adds it forms the many tables of the database into a single nested- on the query tree, (iii) executes the query and (iv) updates the universal table akin to a JSON or XML document. DataPlay answers and non-answers. shows Jane a tabular presentation of this nested-universal ta- ble to help her understand its nested structure (right panel in Jane then visualizes the student.takes.grade attribute in a bar chart. She brushes the A-bar. This causes Data- Fig. 1). Play to add another constraint node with the proposition After pivoting, Jane begins querying the data. The query in- student.takes.grade = A . Since each student has a set terface (Fig. 2) consists of (i) a query panel that visualizes of grades, DataPlay binds a default existential quantifier to the current query as a query tree, (ii) an interactive graph- the added constraint. The query tree finds CS students who ical history that presents all modifications made to a query, have an A in their set of grades. (iii) a command bar to specify constraints or visualizations Jane types the following constraint for courses into the com- in, and (iv) a data presentation panel which presents data- mand bar: visualizations and two tables: an answer table and a non- student.takes.course.id[= CS11, = CS16, = CS18] answer table. At this point, all tuples of the nested-universal As a syntactic convenience, DataPlay enables users to specify table are in the answers table and the non-answer table is empty. multiple propositions in the above shorthand. DataPlay treats such propositions as a single constraint. As before, DataPlay Specifying Constraints generates another constraint node and adds it to the query tree Jane can specify her constraints explicitly by writing sim- and since each student takes multiple courses, DataPlay binds ple propositions into a command bar or by brushing data- an existential quantifier to the course constraint. visualizations and having DataPlay automatically infer the Every modification made to the query results in the interactive propositions in the style of Tableau [3] or [8]. For the depart- update of answers and non-answers. In addition, a graphical mental constraint, she decides to visualize the department dis- snapshot of the preceding query tree is recorded in history. tribution first. As soon as she types ‘dep’ into a visualization- Jane can revert to a previous query tree by clicking its snap- bar, DataPlay auto-suggests all attributes with ‘dep’. She shot in the interactive history viewer; this adds the current tree selects student.dept. DataPlay suggests two possible vi- to history before loading the previous query tree from history. sualizations for the department attribute: a bar chart and a piechart. These suggestions are graphical thumbnails com- Jane has now specified all of her constraints and DataPlay has puted on a sample of the data. She picks the piechart; Data- assembled the simplest possible query tree (Fig. 2), which Play adds a piechart to the visualization panel. She brushes finds students who are in the CS department, and took any of the ‘CS’ pie; DataPlay (i) generates a constraint node with CS11, CS16 or CS18, and got at least one A in any course.

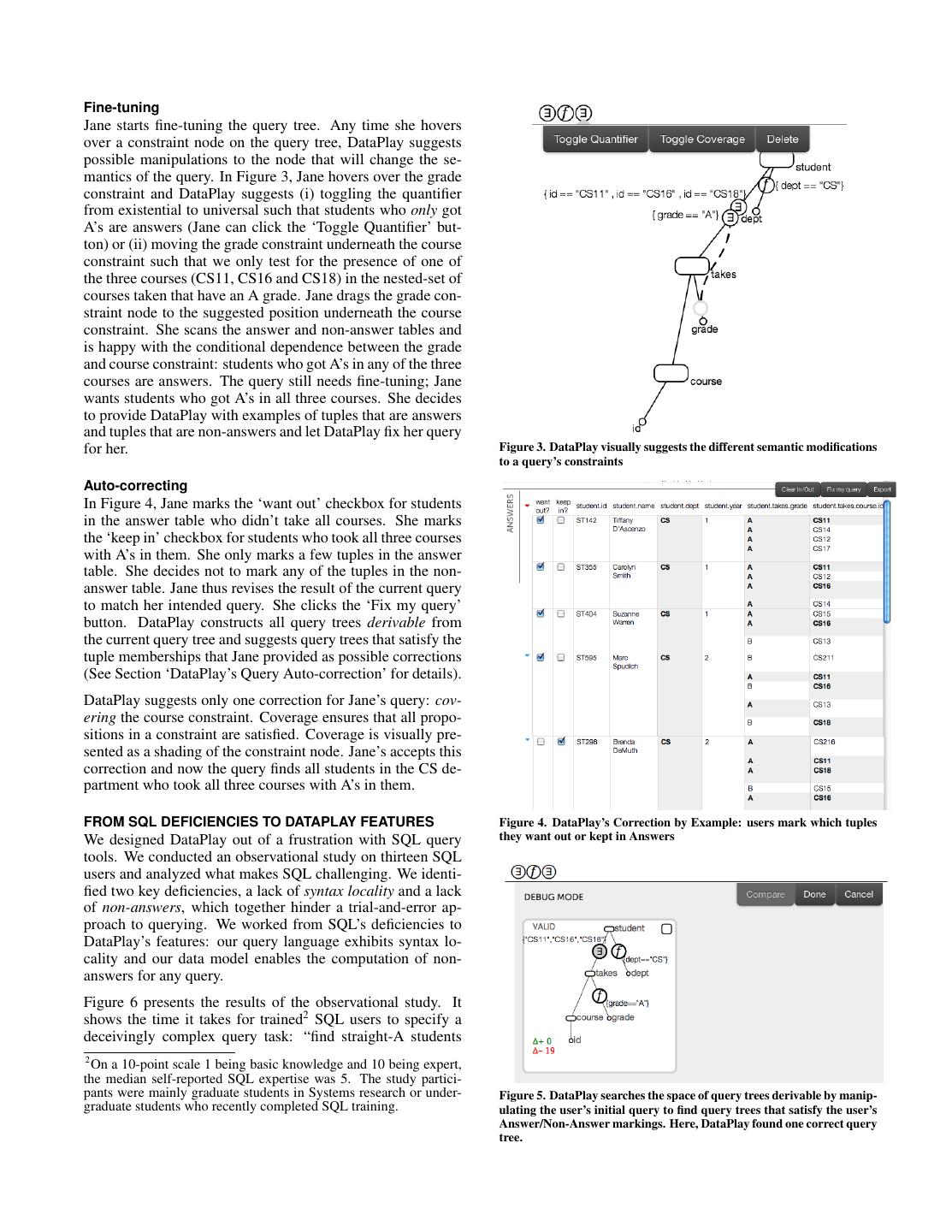

4 .Fine-tuning Jane starts fine-tuning the query tree. Any time she hovers over a constraint node on the query tree, DataPlay suggests possible manipulations to the node that will change the se- mantics of the query. In Figure 3, Jane hovers over the grade constraint and DataPlay suggests (i) toggling the quantifier from existential to universal such that students who only got A’s are answers (Jane can click the ‘Toggle Quantifier’ but- ton) or (ii) moving the grade constraint underneath the course constraint such that we only test for the presence of one of the three courses (CS11, CS16 and CS18) in the nested-set of courses taken that have an A grade. Jane drags the grade con- straint node to the suggested position underneath the course constraint. She scans the answer and non-answer tables and is happy with the conditional dependence between the grade and course constraint: students who got A’s in any of the three courses are answers. The query still needs fine-tuning; Jane wants students who got A’s in all three courses. She decides to provide DataPlay with examples of tuples that are answers and tuples that are non-answers and let DataPlay fix her query for her. Figure 3. DataPlay visually suggests the different semantic modifications to a query’s constraints Auto-correcting In Figure 4, Jane marks the ‘want out’ checkbox for students in the answer table who didn’t take all courses. She marks the ‘keep in’ checkbox for students who took all three courses with A’s in them. She only marks a few tuples in the answer table. She decides not to mark any of the tuples in the non- answer table. Jane thus revises the result of the current query to match her intended query. She clicks the ‘Fix my query’ button. DataPlay constructs all query trees derivable from the current query tree and suggests query trees that satisfy the tuple memberships that Jane provided as possible corrections (See Section ‘DataPlay’s Query Auto-correction’ for details). DataPlay suggests only one correction for Jane’s query: cov- ering the course constraint. Coverage ensures that all propo- sitions in a constraint are satisfied. Coverage is visually pre- sented as a shading of the constraint node. Jane’s accepts this correction and now the query finds all students in the CS de- partment who took all three courses with A’s in them. FROM SQL DEFICIENCIES TO DATAPLAY FEATURES Figure 4. DataPlay’s Correction by Example: users mark which tuples We designed DataPlay out of a frustration with SQL query they want out or kept in Answers tools. We conducted an observational study on thirteen SQL users and analyzed what makes SQL challenging. We identi- fied two key deficiencies, a lack of syntax locality and a lack of non-answers, which together hinder a trial-and-error ap- proach to querying. We worked from SQL’s deficiencies to DataPlay’s features: our query language exhibits syntax lo- cality and our data model enables the computation of non- answers for any query. Figure 6 presents the results of the observational study. It shows the time it takes for trained2 SQL users to specify a deceivingly complex query task: “find straight-A students 2 On a 10-point scale 1 being basic knowledge and 10 being expert, the median self-reported SQL expertise was 5. The study partici- pants were mainly graduate students in Systems research or under- Figure 5. DataPlay searches the space of query trees derivable by manip- graduate students who recently completed SQL training. ulating the user’s initial query to find query trees that satisfy the user’s Answer/Non-Answer markings. Here, DataPlay found one correct query tree.

5 . two of the study’s successful users wrote additional queries to check that their query was correct (Data Check phase in Figure 6): they arbitrarily chose a couple of students from the query result and wrote another query to view all the grades of these students to ensure that they indeed had no other non-A grades. Answers Non-Answers Nina Simone BLUS101 A Bill Withers CLAS101 C Existential Nina Simone JAZZ101 A Louis Armstrong REGA101 B Nina Simone SOUL101 A Bob Marley BLUS101 C Figure 6. SQL query specification behavior of 13 SQL users. Bill Withers BLUS101 A Bob Marley RYTM101 C Bill Withers RYTH101 A Bob Marley JAZZ101 C (a) (b) (students who got A’s in all their courses)”. Only 4 of the 13 users successfully specified the query within 10 minutes! Answers Non-Answers Most users (11 of 13) began with the simpler existential vari- Nina Simone BLUS101 A Bill Withers BLUS101 A ant of this query, i.e., they found students with at least one A. Nina Simone JAZZ101 A Bill Withers RYTH101 A Universal This ‘existential phase’ was followed by a ‘universal phase’: Nina Simone SOUL101 A Bill Withers CLAS101 C users attempted to rewrite the existential query to get to the Frank Sinatra CLAS101 A Louis Armstrong JAZZ101 A Frank Sinatra MELD101 A Louis Armstrong REGA101 B universal query. The prevalence of this two-phase querying (c) (d) behavior hints at the intrinsic trial-and-error nature of quanti- fied querying. Table 1. Answers and Non-Answers for queries that find students with at least one A and students with all A’s In the following sections, we explain how each SQL defi- ciency discourages trial-and-error querying. Tables 1a and 1c are example results of executing the existen- tial and the universal SQL queries for finding students who Syntax Locality got at least one A and students who got all As respectively. The following two SQL queries find students who got at least one A and students who got all A’s respectively. Without any additional information, it is impossible to deter- mine which query produced which of the sample results in SELECT * FROM student s, takes t, WHERE t.grade = ’A’ AND t.student_id = s.id; Tables 1a and 1c. SQL interfaces usually provide us with the answers or the tuples that satisfy our query, but they do not SELECT * FROM student s, takes t provide us with the answers’ complement: the non-answers WHERE t.student_id = s.id AND s.id NOT IN or tuples that do not satisfy our query. Answers alone, how- (SELECT student_id FROM takes WHERE grade != ’A’); ever, may not help us fully understand a query. It is only when A key observation about the SQL queries is that their struc- we see ‘Bill Withers’ in both the answers (Table 1a) and the tures are very different. The simple change of the quantifier non-answers (Table 1b), that we can deduce that Table 1a is from existential to universal appears to have global impact the result of the at least one A query. Similarly, it is only on the query syntax. For this reason we say that SQL often when we see A’s in the non-answers (Table 1d), that we can exhibits poor syntax locality with respect to changes in quan- deduce that Table 1c is the result of the straight-A query. tification. The inability to localize the details of the quanti- Without non-answers, a user looking for straight-A students fier make the language confusing to users. Poor syntax local- can mistakenly believe that an existentially-quantified query ity discourages trial-and-error tuning of query specifications: is correct just by examining the answers. Therefore, SQL small changes to query semantics can require large changes interfaces that do not present non-answers hinder query inter- to query syntax. pretation and ultimately correct query specification. Moreover, SQL users often feel stuck after formulating an in- Unfortunately, this problem is not amenable to a quick inter- correct query in SQL. Complex SQL queries tend to follow face fix: complements are not commonplace in SQL inter- templates or tricks, which users learn with experience: two faces because the pure relational query and data model make of the four users who wrote a correct SQL query were di- it hard to define and compute complements. Consider the fol- rectly applying a learned template; they skipped the ‘exis- lowing poorly constructed yet valid query: tential phase’. Users who haven’t yet learned such templates cannot brute-force their way out of incorrect queries by ap- SELECT * FROM student, takes WHERE student.id = x AND takes.student_id = x; plying different modifications. In this query it is difficult to determine the universe from Non-answers which we complement answers to form non-answers. It is As users write SQL queries, they look at the results to affirm likely that the user’s perspective of the universe is student that the written SQL matches their intended query. The pre- takes, which represents the courses taken by the student. sentation of a SQL query’s results, however, is insufficient: However, the query has no apparent join and one can infer the

6 .universe to be student × takes, which represents all possi- DATAPLAY’S GRAPHICAL QUERY LANGUAGE ble combinations of student tuples with takes tuples. If we A query in our language is called a query tree. The class infer the second universe, we will compute a much larger and of queries captured by query trees are Boolean conjunctive an incomprehensible non-answer set. quantified queries over nested relations. Each quantified ex- pression consists of conjunctions or Horn3 statements over DataPlay’s data model enables the presentation of non- Boolean functions (these are the constraints) and only con- answers and its graphical query language exhibits syntax junctions of quantified expressions are allowed. locality. We describe the data model and query language in the following sections. We describe how the language’s syn- (a) (b) tax locality and the presentation of answers and non-answers student student enable query auto-correction. We then discuss the inner grade = A grade = A workings of the query auto-correction feature. takes takes DATAPLAY’S DATA MODEL DataPlay restricts the relational data model to a nested uni- grade grade versal relation: while this limits the expressive power of Dat- Existential Quantifier Universal Quantifier aPlay compared to SQL to a degree, it enables a far more ef- fective specification interface for a large class of sophisticated queries. We describe the data model, the features it enables (c) (d) and its limitations. student student id {= CS11, =CS12} id {= CS11, =CS12} DataPlay uses a user-specified pivot to assemble a single nested universal table from the many tables of a database. We takes takes refer to the schema of this table as the data tree. Any rela- grade = A f grade = A f tional schema can be transformed into a data tree: we map the grade schema of the relational database into a graph where nodes f grade f course mark > 90 represent relations and edges represent primary-foreign key mark > 90 course mark id relationships between relations. We call this graph the key- mark id graph. The data tree is a rooted spanning-tree of the key- Nested Query-Trees Coverage graph with the pivot as the root. Starting with the pivot, we add it as the root of our data tree. We add all its attributes, Figure 7. DataPlay’s Graphical Query Language excluding any foreign keys, as leaves. We then traverse all edges outgoing from the pivot in the key-graph in a depth- The example query trees in Figure 7 comprehensively de- first fashion. For each relation that we add as a node to the scribe all aspects of DataPlay’s graphical query language. data tree, we add all its attributes as leaves excluding foreign Query trees are data trees overlaid with constraints. Every keys. In the data tree, every attribute is identified by its path relation node is a query tree that maps its tuples to either an- from the pivot. We populate the nested universal table by tak- swers or non-answers. An empty query tree — one without ing the join of a child relation with a parent relation in the constraints — maps all its tuples to answers. The trees are data tree. conjunctive in nature, they take the conjunction of all con- straints on their descendants —attributes or answer tuples The nested universal table is an abstract view on top of the from a nested query tree. relational database; we do need not to physically restructure the database or materialize multiple nested universal tables, In Figure 7a, the empty query tree at takes maps all its tu- one for every pivot. ples to answers as it has no constraints. For every student, the query tree at the pivot, student, determines whether there While the nested universal table is not a new concept [13], it is exists a tuple in the nested set of answer-tuples in takes a largely forgotten one. As the name suggests, it merges uni- where grade is A. Those students are answers. The remaining versal relations [14] with nested data models, such as JSON students are non-answers. In, Figure 7b, the query tree at the or XML. This buys us the following: pivot, student, determines whether all grades of a student in 1. A natural grouping hierarchy for a large class of quantified- the nested set of answer-tuples in takes are A. queries: For these queries, users are relieved from two Note that when a constraint node operates over a set of tuples, cumbersome SQL steps: join specification and group con- it has one of the following quantifier symbols in it: ∀ for the struction through sub-queries. universal quantifier or ∃ for the existential quantifier. If the 2. A closed-world: Querying in DataPlay is simply the par- constraint node operates over an individual tuple and not a set titioning of the tuples of nested universal table into an- of tuples, it requires no quantifier, and instead has the symbol swers or non-answers. If tuples of the pivot satisfy all f in it. our constraints then they are answers, otherwise, they are In Figure 7c, the query tree at takes maps tuples where grade non-answers. In contrast, non-answers, in the traditional is A and mark is above 90 to answers. The query tree at the relational-model, are neither well defined nor easy to com- pute as we discussed in the previous section. 3 e.g. a ∧ b → c

7 .student pivot, now tests if all of the nested answer tuples in Users validate a query’s correctness by examining its answers takes are either CS11 or CS12 and no other courses. The and non-answers and searching for misplaced tuples. En- universally-quantified course constraint here only examines abling users to label offending tuples in answers with ‘want the nested answer tuples of takes ignoring the nested non- out’ and actual answers misplaced in non-answers with ‘want answer tuples. in’ and in turn correcting the query for the user reduces the gulf of execution [17] of a query specification tool as users The query tree of Figure 7d illustrates a special feature of combine validation and debugging in a single process. In the language: coverage. Coverage allows users to ensure that DataPlay, we also allow user to label correctly placed answer all of a constraint’s propositions (assuming it has more than and non-answer tuples with ‘keep in’ and ‘keep out’ respec- one) are true for a set of tuples4 . The query tree of Figure tively. We refer to the user-specified mapping of tuples into 7d ensures that a student has taken both CS11 and CS12 with answers or non-answers as tuple memberships. Users only A’s and marks above 90. Coverage is denoted by a shading provide partial mappings of all database tuples to answers or of the constraint node. Technically, coverage is a redundant non-answers: unmapped tuples are free. Automated query- feature of the language as one can write separate constraints correction must support such partial mappings as no user will for every course and the conjunctive nature of DataPlay will map all tuples of a database to answers or non-answers. ensure coverage. However, users may conceptualize several propositions as a single self-contained constraint. Also, when DataPlay generates the space of all possible query trees deriv- brushing dense-visualizations, if we generate one constraint able from the current query tree by (i) toggling quantifiers, for every brushed area or glyph, rather than a list of proposi- (ii) toggling coverage or (iii) changing the positions of con- tions for a single constraint, we might over-crowd the query straints. DataPlay suggests all query trees in this space that tree. satisfy the given tuple memberships. If users provide a few or not enough selective tuple memberships the space of sug- The graphical query language is expressive enough to de- gested query trees can be large and it might be difficult for scribe a large class of quantified queries succinctly and with users to understand the semantic differences between the sug- syntax locality: compare the query trees of Figure 7a and gested query trees. To reduce this gulf of evaluation [17]: 7b with their equivalent SQL queries (see section on SQL ‘Syntax Locality’). Notice that to change the semantics of 1. We preserve tuple memberships across several iterations of a constraint from existential to universal, only a single sym- automated query correction. This allows users to pick any bol change is required. Users can tweak the properties of a one of the suggested query trees and provide more tuple constraint independently of other constraints and without re- memberships if the selected query tree turns out to be in- creating a new query tree. Since each constraint supports a correct. Users can explicitly clear tuple memberships by fixed set of manipulations (toggle quantifier, toggle coverage clicking a ‘Clear In/Out’ button. or change position), DataPlay visually suggests these manip- ulations to users. Users, no longer have to feel stuck: they can 2. We provide visual-previews of the effect of a suggested try out different manipulations until they reach their intended query tree: when users mouse-over over a suggested query query. tree, DataPlay shades tuples that are non-answers for that query tree. Moreover, the language ensures that for a given collection of constraints, there is a finite and searchable space of possi- 3. We allow users to diff suggested query trees; DataPlay ble query trees: this enables auto-correction. Instead of di- opens up a comparison window containing tuples on which rectly manipulating a query tree to achieve a certain partition the query trees disagree on whether they are answers or of tuples into answers or non-answers, users can simply spec- non-answers. ify this partition by marking tuples as either answers or non- answers and DataPlay will search the space of query trees to DataPlay rank-orders its query correction suggestions by the find one that achieves the required partition. number of tuples that change membership from answers to non-answers or vice-versa. We chose this order under the Finally, compared to a textual query representation, query assumption that users might directly manipulate a query to trees better reflect the hierarchical structure of the nested uni- get close to their intended answers/non-answers and then use versal table and better illustrate the dependencies between auto-correction to fix the membership of a few problematic constraints; nesting is visually awkward in a linear represen- tuples. Thus, we order query trees that drastically change tation. answers and non-answers last. This order, however, does not guarantee that the user’s intended query is at the top of all the DATAPLAY’S QUERY AUTO-CORRECTION query tree suggestions. We built the auto-correction feature because as the complex- ity of a query increases, the more difficult it becomes to debug EVALUATING DATAPLAY’S INTERFACE and fix a query by directly manipulating it as we empirically As an initial evaluation of our mixed-initiative user interface, demonstrate in the following section. we conducted a comparative user study of DataPlay’s novel 4 query specification features: direct query manipulation and A list of propositions is different from specifying a boolean con- junction or disjunction of these propositions: a list operates at the automated query correction. Our goal was to observe task set level, while the disjunction or conjunction operates at an individ- completion times for each feature to determine which feature ual tuple-value. users were more effective at debugging queries with.

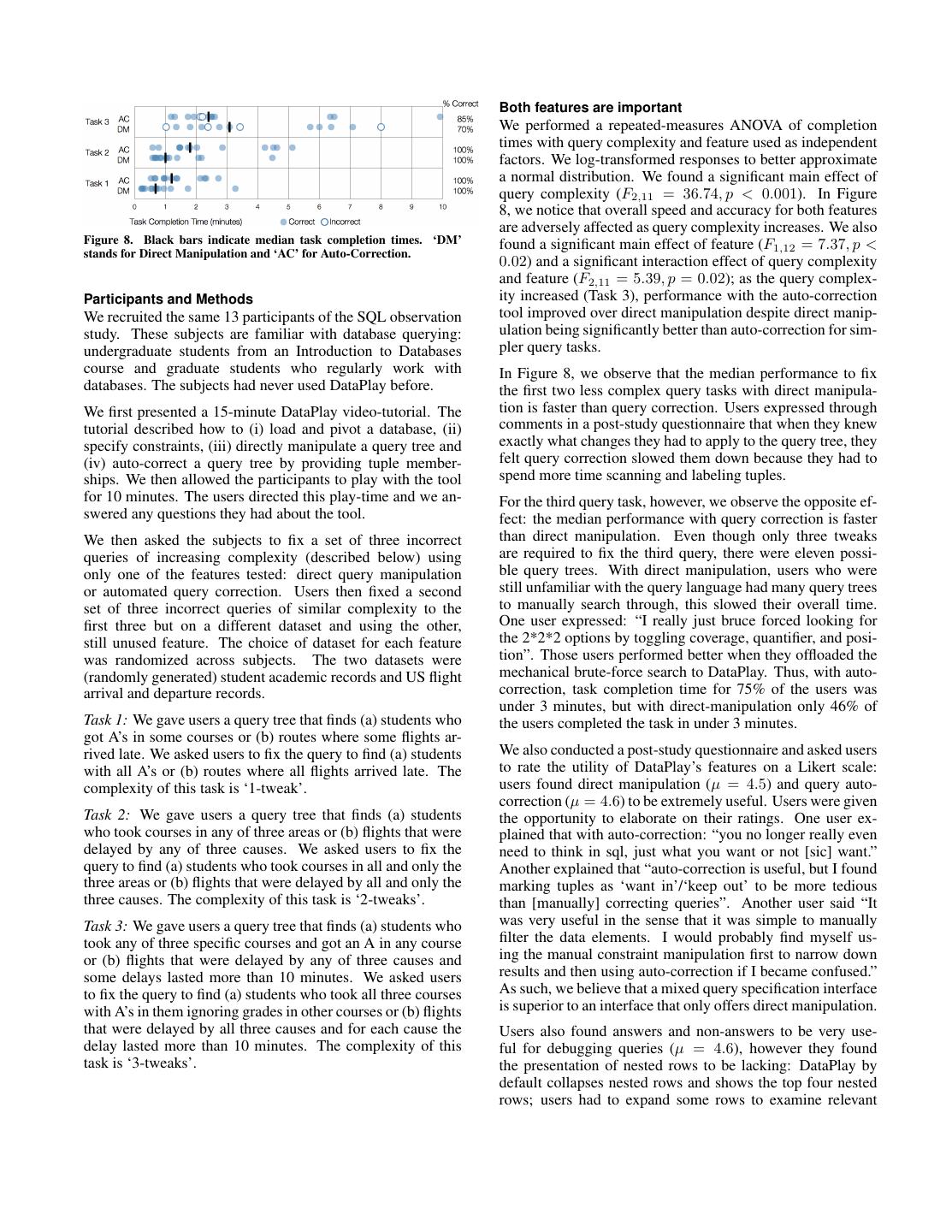

8 . Both features are important We performed a repeated-measures ANOVA of completion times with query complexity and feature used as independent factors. We log-transformed responses to better approximate a normal distribution. We found a significant main effect of query complexity (F2,11 = 36.74, p < 0.001). In Figure 8, we notice that overall speed and accuracy for both features are adversely affected as query complexity increases. We also Figure 8. Black bars indicate median task completion times. ‘DM’ found a significant main effect of feature (F1,12 = 7.37, p < stands for Direct Manipulation and ‘AC’ for Auto-Correction. 0.02) and a significant interaction effect of query complexity and feature (F2,11 = 5.39, p = 0.02); as the query complex- Participants and Methods ity increased (Task 3), performance with the auto-correction We recruited the same 13 participants of the SQL observation tool improved over direct manipulation despite direct manip- study. These subjects are familiar with database querying: ulation being significantly better than auto-correction for sim- undergraduate students from an Introduction to Databases pler query tasks. course and graduate students who regularly work with In Figure 8, we observe that the median performance to fix databases. The subjects had never used DataPlay before. the first two less complex query tasks with direct manipula- We first presented a 15-minute DataPlay video-tutorial. The tion is faster than query correction. Users expressed through tutorial described how to (i) load and pivot a database, (ii) comments in a post-study questionnaire that when they knew specify constraints, (iii) directly manipulate a query tree and exactly what changes they had to apply to the query tree, they (iv) auto-correct a query tree by providing tuple member- felt query correction slowed them down because they had to ships. We then allowed the participants to play with the tool spend more time scanning and labeling tuples. for 10 minutes. The users directed this play-time and we an- For the third query task, however, we observe the opposite ef- swered any questions they had about the tool. fect: the median performance with query correction is faster We then asked the subjects to fix a set of three incorrect than direct manipulation. Even though only three tweaks queries of increasing complexity (described below) using are required to fix the third query, there were eleven possi- only one of the features tested: direct query manipulation ble query trees. With direct manipulation, users who were or automated query correction. Users then fixed a second still unfamiliar with the query language had many query trees set of three incorrect queries of similar complexity to the to manually search through, this slowed their overall time. first three but on a different dataset and using the other, One user expressed: “I really just bruce forced looking for still unused feature. The choice of dataset for each feature the 2*2*2 options by toggling coverage, quantifier, and posi- was randomized across subjects. The two datasets were tion”. Those users performed better when they offloaded the (randomly generated) student academic records and US flight mechanical brute-force search to DataPlay. Thus, with auto- arrival and departure records. correction, task completion time for 75% of the users was under 3 minutes, but with direct-manipulation only 46% of Task 1: We gave users a query tree that finds (a) students who the users completed the task in under 3 minutes. got A’s in some courses or (b) routes where some flights ar- rived late. We asked users to fix the query to find (a) students We also conducted a post-study questionnaire and asked users with all A’s or (b) routes where all flights arrived late. The to rate the utility of DataPlay’s features on a Likert scale: complexity of this task is ‘1-tweak’. users found direct manipulation (µ = 4.5) and query auto- correction (µ = 4.6) to be extremely useful. Users were given Task 2: We gave users a query tree that finds (a) students the opportunity to elaborate on their ratings. One user ex- who took courses in any of three areas or (b) flights that were plained that with auto-correction: “you no longer really even delayed by any of three causes. We asked users to fix the need to think in sql, just what you want or not [sic] want.” query to find (a) students who took courses in all and only the Another explained that “auto-correction is useful, but I found three areas or (b) flights that were delayed by all and only the marking tuples as ‘want in’/‘keep out’ to be more tedious three causes. The complexity of this task is ‘2-tweaks’. than [manually] correcting queries”. Another user said “It Task 3: We gave users a query tree that finds (a) students who was very useful in the sense that it was simple to manually took any of three specific courses and got an A in any course filter the data elements. I would probably find myself us- or (b) flights that were delayed by any of three causes and ing the manual constraint manipulation first to narrow down some delays lasted more than 10 minutes. We asked users results and then using auto-correction if I became confused.” to fix the query to find (a) students who took all three courses As such, we believe that a mixed query specification interface with A’s in them ignoring grades in other courses or (b) flights is superior to an interface that only offers direct manipulation. that were delayed by all three causes and for each cause the Users also found answers and non-answers to be very use- delay lasted more than 10 minutes. The complexity of this ful for debugging queries (µ = 4.6), however they found task is ‘3-tweaks’. the presentation of nested rows to be lacking: DataPlay by default collapses nested rows and shows the top four nested rows; users had to expand some rows to examine relevant

9 .data. Users found DataPlay’s highlighting of values that sat- Student Grade, Course Area Pattern isfy a constraint to be a useful feature when scanning answers John A, Systems True, True and non-answers for correctness. B, Design False, False David C, Design False, False We note that the first query task in this study is as complex as B, Systems False, True the query task used in the SQL observational study. A qual- ! With three constraints on attributes two nesting levels deep, itative argument in support of DataPlay can be made here: while only 2 of 11 users successfully transformed an exis- the data space is 256 tuples and with four constraints, it tential SQL query to a universal one, all 13 users successfully is 65536 tuples. The double-exponential size of the data transformed an existential query to a universal one in less than space means that many semantically-different query trees 3.5 minutes. will satisfy the small sample of tuple memberships that users provide. Convergence to the one correct query may require Reducing errors: DATAPLAY v2.0 an unreasonably large sample of tuple memberships: no user will provide tuple memberships for the entire space. As query complexity increased, the likelihood of errors also Worse, the actual database may not be rich enough in its increased: 15% and 30% of users submitted incorrect queries content and only contain a small sample of the data space. for the third query task when using query auto-correction and We are currently exploring techniques from learning theory direct manipulation respectively (See Fig. 8). We examined to build an alternate interaction mode of query correction, screen-recordings of users who failed the third query task and where DataPlay constructs sample tuples in addition to found the following two issues: using existing database tuples to interactively ask users tuple 1. When users quickly scanned answers or non-answers, they membership questions that guarantee convergence to one sometimes missed tuples that indicate query-specification correct query tree within a reasonable number questions. errors, especially if those tuples are not within the first few Depending on the task, users provided as low as one tuple tuples. membership and as high as 19 tuple-memberships. The median number of tuple-memberships provided for each task 2. When correcting queries, users may provide a few or not was 4, 6 and 6. This data might help us define the bounds of enough selective tuple-memberships: this results in Data- what is reasonable. Play generating a large set of query trees that satisfy the In addition to reducing query specification errors, we would given tuple-memberships. DataPlay’s simple ordering of like to create more scalable query and data tree visualizations. suggested query trees may not bring forward the user’s In particular, we need to employ techniques such as interac- intended query. Users, who submitted incorrect queries, tive tree navigation and tree summarization to effectively vi- provided a few non-selective tuple-memberships, and then sualize deeply-nested and bushy data trees (tables with many picked the highest ranked query tree from DataPlay’s sug- attributes). gestions and hastily concluded that they fixed the query. The first issue can be resolved if we order answers and non- RELATED WORK answers such that they surface query errors or at least cluster DataPlay is influenced by and builds upon years of research tuples that have identical data with respect to the constraints on database data models and query languages. Our data and present one tuple from each cluster, hence increasing the model combines the universal relational model [14] with the diversity of the top-k presented tuples. Alternatively, we can nested relational model [13]. Our data model closely resem- allow users to resample the top-k results, thus extracting more bles that of the LORE database and our query language re- tuples from the database for further query verification. sembles LOREL, a non-graphical query language for nested data models [15]. The second issue is more complex and poses an exciting research challenge. Query trees map an extremely large DataPlay uses techniques from visualization-driven query data space to answers and non-answers, user-specified tuple tools [3, 18, 8]: users can brush visualizations in DataPlay to memberships only map a small random sampling of the data specify constraints. Similar to tools like Polaris [21] (and its space to answers and non-answers. To illustrate, with two commercial successor Tableau [3]) and Orion [9], DataPlay constraints over two attributes, for example, grade = A and transforms user-interface actions into formal data-processing course.area = Systems, we have 22 or 4 possible satisfiability specifications. Polaris maps drag-and-drop operations on combinations for a two-attribute tuple: data variables into database queries and visualizations over single data tables [21]. Orion maps user-interface actions Grade = A Area = Systems Example tuples to data transformation statements and visualizations over True True [A, Systems] network data [9]. True False [A, Design] False True [B, Systems] The visual representation of queries in DataPlay as query trees is influenced in part by the success of code interfaces False False [C, Theory] like Code Bubbles [4] and Stacksplorer [11] in enhancing ! code readability. These interfaces overlay a graphical ‘call- If the attributes are two nesting levels deep then our data graph’ representation over code. space! is the number of subsets containing elements from the ! four possible two-attribute tuples, i.e 24 or 16. For example: Recent research on query causality directed our attention

10 .towards the presentation of non-answers. Query causality queries. We can extend DataPlay such that, like SnipSuggest, provides users with explanations for a particular query re- it suggests popular constraints and it orders query trees by sult [16]. Users pose questions such as “why is a tuple a popularity during query correction. non-answer?” and in return the database traces the behavior of the query to identify features of the tuple that make it a CONCLUSION non-answer[5, 16]. It is from this research that we borrow the In this paper, we introduced DataPlay, a query tool that sim- nomenclature of non-answers. We view query correction as plifies the specification of quantified queries. We conducted a the dual of query causality: while causality finds the data that study to determine what makes quantified querying challeng- caused a given result set, query correction finds the query that ing in SQL. We observed that SQL hinders a trial and error brings about a particular result. approach to querying because it lacks syntax locality and it lack non-answers. Automated query correction is a novel feature of DataPlay. It applies techniques from example-driven program synthesis. We designed DataPlay bearing in mind SQL’s deficiencies. While such techniques are currently used for data cleaning DataPlay’s data model — a nested universal table — enables and integration [10, 7], we are not aware of any tool that the computation of non-answers for any query and DataPlay’s applies such techniques for correcting a database query from query language exhibits syntax locality. DataPlay’s unique example answers and non-answers. data model and query language help support several inter- action features: (i) Users specify simple constraints with- We point out that Query-By-Example (QBE) [22] is not the out worrying about how to assemble these constraints into a same as automated query correction. QBE is a graphical lan- whole query (ii) Users semantically tweak a query by directly guage for relational data. It allows users to write queries manipulating a graphical query representation. The syntax lo- by creating example table layouts and specifying constraints cality of the language ensures that such tweaks are equivalent called condition boxes in the columns of these tables. Users to small manipulations. (iii) Users asses the correctness of a find it difficult to specify quantified queries in QBE [19]; like query by examining answers and non-answers. Every query SQL, QBE does not exhibit syntax locality and does not pro- modification interactively updates answers and non-answers. vide non-answers. DataPlay provides automated query correction: users provide Commercial and open-source SQL graphical editors like tuple memberships (examples of answer and non-answer tu- Postgres’ pgAdmin [2] and MS Access [1] enable users to ples) and DataPlay suggests the possible manipulations to a build a graphical SQL query and directly manipulate it. With given query that will satisfy the user’s tuple memberships. these editors, users physically draw the query by dropping We compared the effectiveness of direct manipulation with tables into a drawing board and then connecting the tables auto-correction through a user study. The study demonstrates with edges to represent joins. Direct manipulation is limited that for simple queries, users perform better with direct ma- to removing or adding a table, selecting attributes to project nipulation, but as query complexity increases, users perform and clicking edges to edit joins. Constraints are specified better with auto-correction. separately and not presented on the drawing board. In MS Access, constraints are specified using QBE. Users then REFERENCES execute the query to view answers only. These graphical 1. Microsoft access. editors do not address SQL’s lack of syntax locality, thus office.microsoft.com/en-us/access/. users still need to learn SQL tricks and templates to specify quantified queries. DataPlay differs from these editors in 2. pgadmin: Postgresql administration and management many ways. DataPlay reverses the steps of query specifi- tools. www.pgadmin.org. cation: users begin querying by directly specifying their 3. Tableau software. www.tableausoftware.com. constraints and DataPlay assembles the constraints into a query tree. DataPlay also interactively updates answers and 4. Bragdon, A., et al. Code bubbles: a working set-based non-answers and maintains a graphical history of all query interface for code understanding and maintenance. In modifications. CHI (2010). Like DataPlay, two recent research query tools are targeting 5. Chapman, A., and Jagadish, H. V. Why not? In the apparent gap in usable query tools for complex queries. SIGMOD (2009). QueryViz, translates SQL into an equivalent graphical lan- 6. Danaparamita, J., and Gatterbauer, W. Queryviz: guage [6] to help users better understand a query, it does helping users understand sql queries and their patterns. not enable direct query manipulation and it does not visually In EDBT/ICDT (2011). expose possible semantic modifications. SnipSuggest [12] 7. Gulwani, S., Harris, W. R., and Sing, R. Spreadsheet helps users specify queries by auto-suggesting SQL clauses data manipulation using examples. In CACM (2012). in a manner similar to code-assist tools in IDEs. SnipSug- gest learns a variety of SQL templates from database users 8. Heer, J., Agrawala, M., and Willett, W. Generalized of a particular domain to provide context-aware suggestions selection via interactive query relaxation. CHI (2008). ordered by popularity. SnipSuggest helps users of heavily- 9. Heer, J., and Perer, A. Orion: A system for modeling, queried databases such as scientific data repositories by lever- transformation and visualization of multidimensional aging the work of the few initial users who write the complex heterogeneous networks. In VAST (2011).

11 .10. Kandel, S., Paepcke, A., Hellerstein, J., and Heer, J. answers and non-answers. Proc. VLDB Endow. 4, 1 Wrangler: Interactive visual specification of data (Oct. 2010). transformation scripts. In CHI (2011). 17. Norman, D. A. The Design of Everyday Things, reprint 11. Karrer, T., et al. Stacksplorer: call graph navigation paperback ed. Basic Books, New York, 2002. helps increasing code maintenance efficiency. In UIST ’11 (2011). 18. Olston, C., Stonebraker, M., Aiken, A., Aiken, E., and Hellerstein, J. M. Viqing: Visual interactive querying, 12. Khoussainova, N., Kwon, Y., Balazinska, M., and Suciu, 1998. D. Snipsuggest: context-aware autocompletion for sql. Proc. VLDB Endow. 4, 1 (2010). 19. Reisner, P. Human factors studies of database query languages: A survey and assessment. ACM Comput. 13. Levene, M. The nested universal relation database Surv. 13, 1 (1981), 13–31. model. Lecture notes in computer science. Springer-Verlag, 1990. 20. Reisner, P., Boyce, R. F., and Chamberlin, D. D. Human factors evaluation of two data base query languages: 14. Maier, D., Ullman, J. D., and Vardi, M. Y. On the square and sequel. In AFIPS ’75, ACM (New York, NY, foundations of the universal relation model. ACM Trans. USA, 1975), 447–452. Database Syst. 9, 2 (June 1984). 21. Stolte, C., and Hanrahan, P. Polaris: A system for query, 15. McHugh, J., Abiteboul, S., Goldman, R., Quass, D., and analysis and visualization of multi-dimensional Widom, J. Lore: a database management system for relational databases. In INFOVIS (2000). semistructured data. SIGMOD Rec. 26, 3 (Sept. 1997). 16. Meliou, A., Gatterbauer, W., Moore, K. F., and Suciu, D. 22. Zloof, M. M. Query by example. In AFIPS National The complexity of causality and responsibility for query Computer Conference (1975), 431–438.