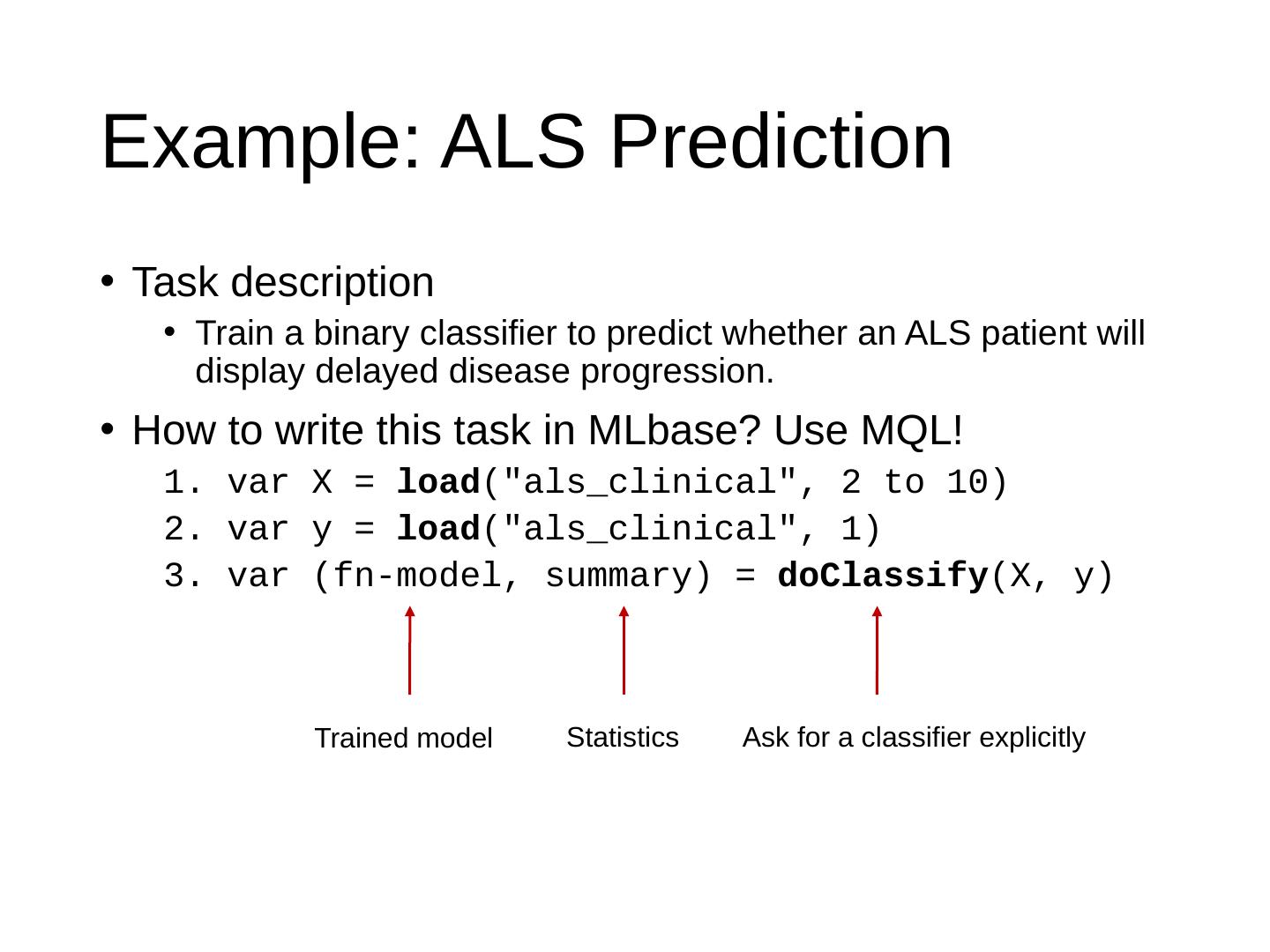

MLbase: A Distributed Machine-learning System

1. MLbase: A Distributed Machine-learning System

Jialin Liu

2. To perform an ML task

When you have a dataset in your field...

- What you want: train a classifier to perform some analysis
- What you actually need to do:
  - Learn the internals of ML: classification algorithms, sampling, feature selection, cross-validation, ...
  - Learn Spark (with cluster deployment) if you know your data is big enough
  - Implement some of the classification algorithms
  - Implement grid search to find parameters
  - Implement validation algorithms
  - Experiment with different sample sizes, algorithms, features, ...
- You need to take CS446 before you can do this!

3. Machine Learning is hard...

- The complexity of machine learning algorithms
  - Choice of algorithms
  - Trade-offs, parameterization, scaling, ...
- Writing a non-faulty workflow
  - Programming language/API barriers
- Performance issues
  - Running time
  - Large dataset/model

4. What does MLbase provide to tackle these problems?

- A declarative programming interface
- A novel optimizer to select learning algorithms
- A set of high-level operators
- A new run-time optimized for the data-access patterns of these operators

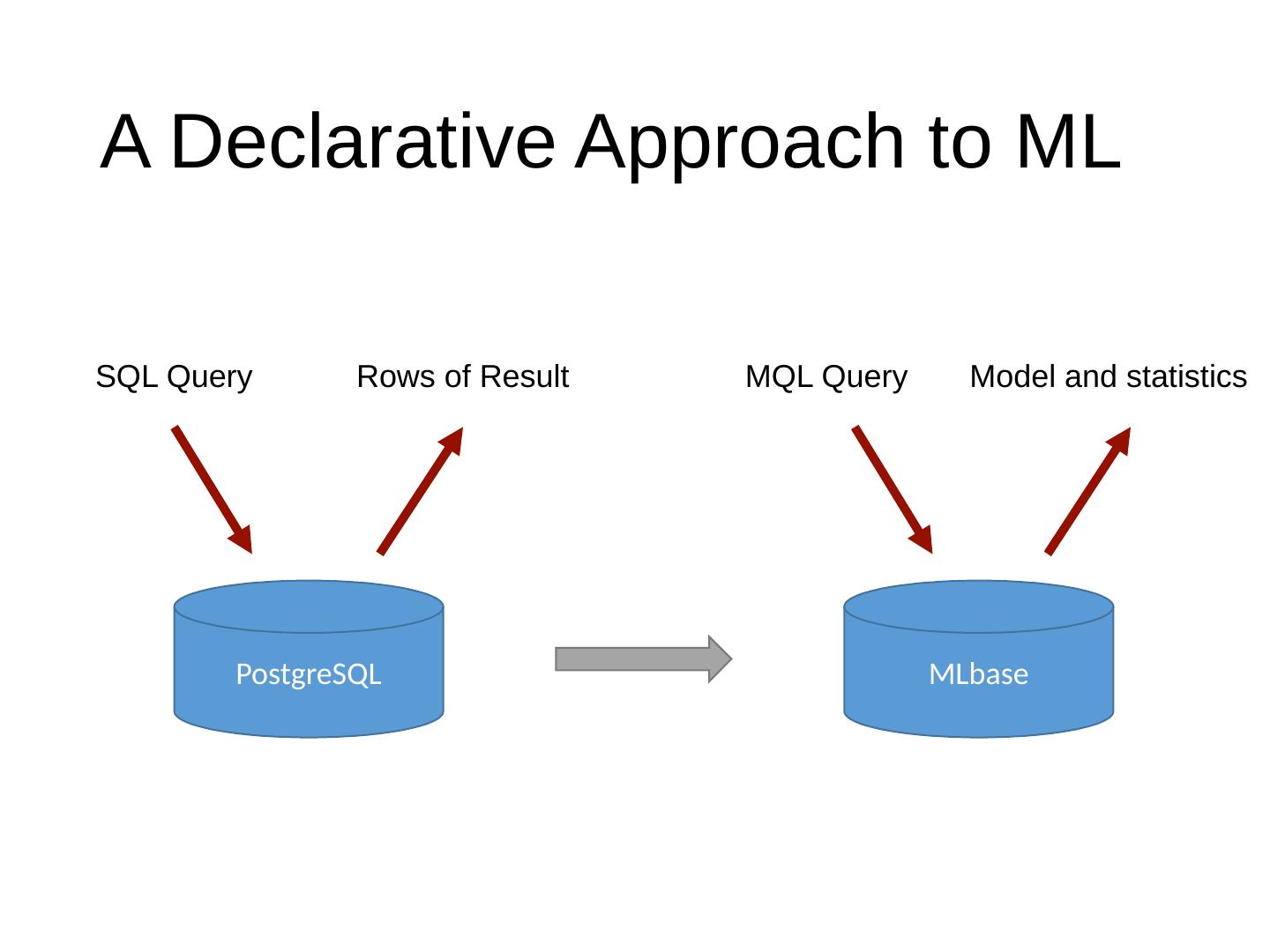

5. A Declarative Approach to ML

- PostgreSQL: SQL query → rows of result
- MLbase: MQL query → model and statistics

6. Example: ALS Prediction

Task description: train a binary classifier to predict whether an ALS patient will display delayed disease progression.

How to write this task in MLbase? Use MQL!

    var X = load("als_clinical", 2 to 10)
    var y = load("als_clinical", 1)
    var (fn-model, summary) = doClassify(X, y)

- `fn-model`: the trained model
- `summary`: statistics
- `doClassify`: asks for a classifier explicitly
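
To make concrete what a single `doClassify` call hides, here is a minimal Python sketch. It is not MLbase code: `do_classify`, the two toy learners, and the hold-out scoring are all hypothetical stand-ins, assuming the system tries several candidate algorithms and returns the best model together with a summary.

```python
from statistics import mean

def majority_classifier(X, y):
    """Baseline learner: always predict the most common training label."""
    label = max(set(y), key=y.count)
    return lambda row: label

def nearest_centroid(X, y):
    """Learner that predicts the label whose class mean is closest."""
    centroids = {}
    for label in set(y):
        rows = [row for row, lab in zip(X, y) if lab == label]
        centroids[label] = [mean(col) for col in zip(*rows)]

    def predict(row):
        def dist(label):
            return sum((a - b) ** 2 for a, b in zip(row, centroids[label]))
        return min(centroids, key=dist)

    return predict

def holdout_accuracy(train, X, y, n_test):
    """Fit on all but the last n_test rows, score on the last n_test rows."""
    model = train(X[:-n_test], y[:-n_test])
    hits = sum(model(row) == lab for row, lab in zip(X[-n_test:], y[-n_test:]))
    return hits / n_test

def do_classify(X, y, n_test=2):
    """Hypothetical stand-in for doClassify: returns (model, summary)."""
    candidates = {
        "majority": majority_classifier,
        "nearest_centroid": nearest_centroid,
    }
    scores = {name: holdout_accuracy(train, X, y, n_test)
              for name, train in candidates.items()}
    best = max(scores, key=scores.get)
    # Retrain the winning learner on all rows before handing it back.
    return candidates[best](X, y), {"chosen": best, "accuracy": scores}
```

The caller only states the task and receives a model plus statistics; the choice among learners and the validation step stay inside the function, which is the point of the declarative interface.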

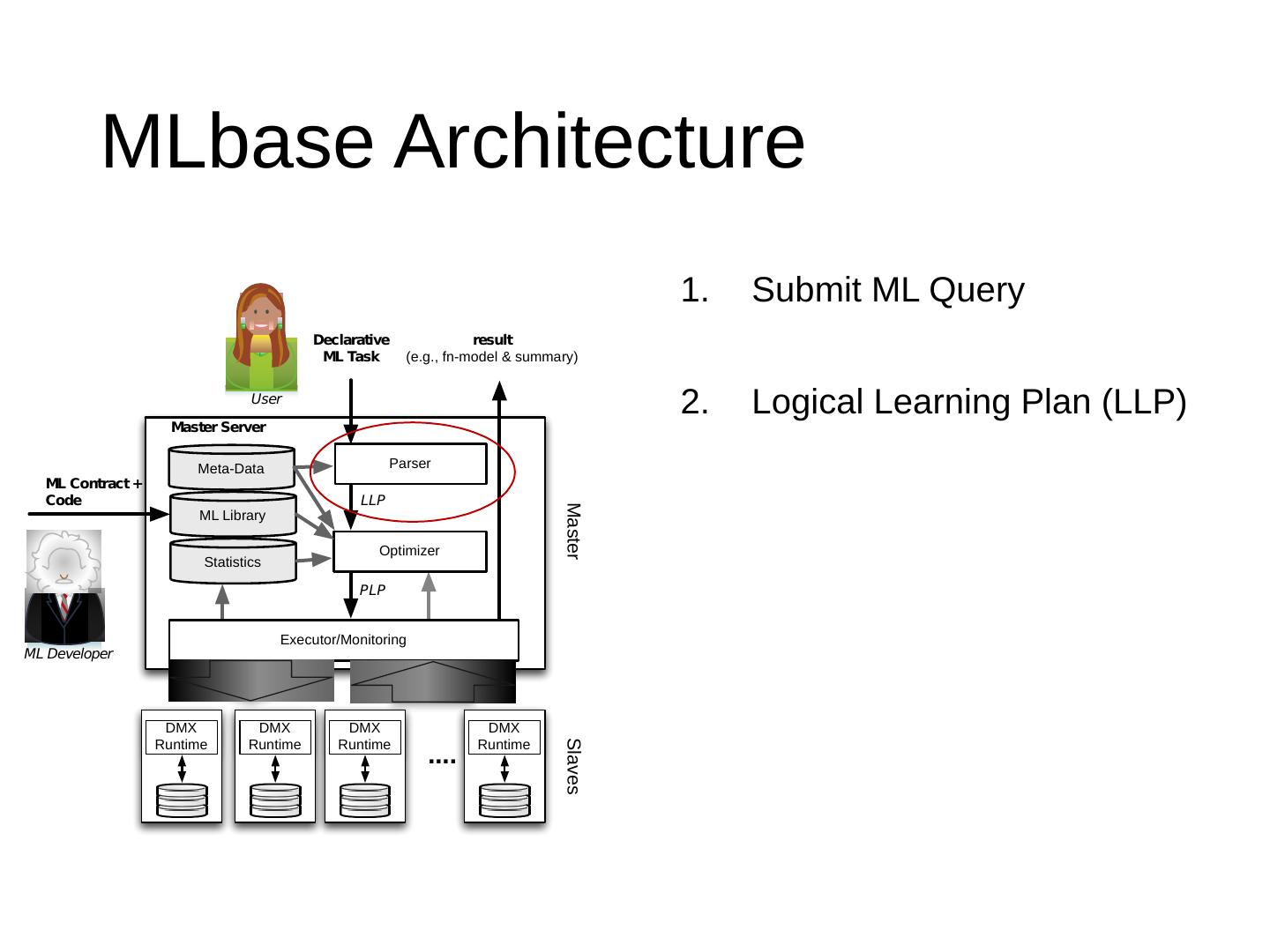

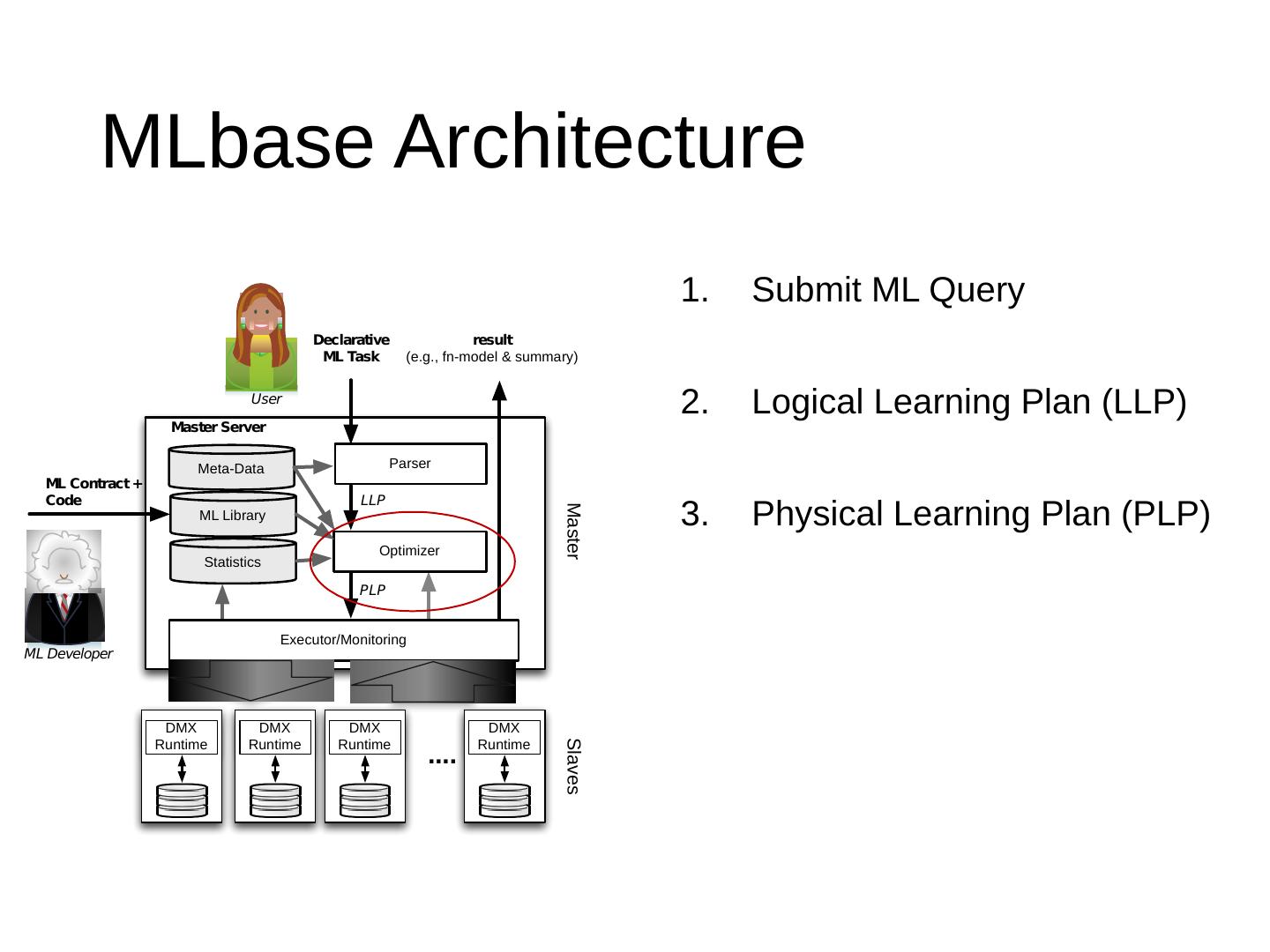

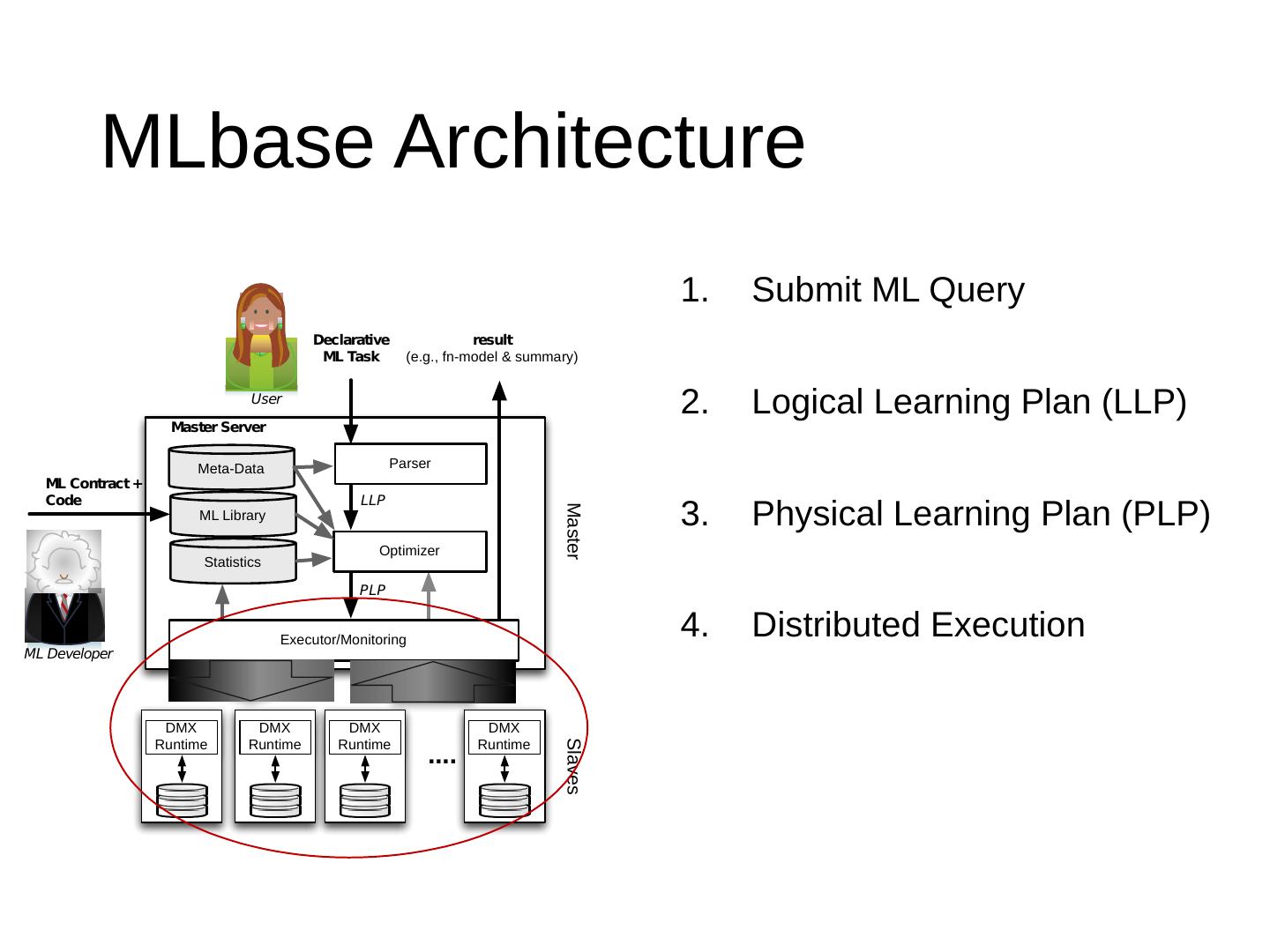

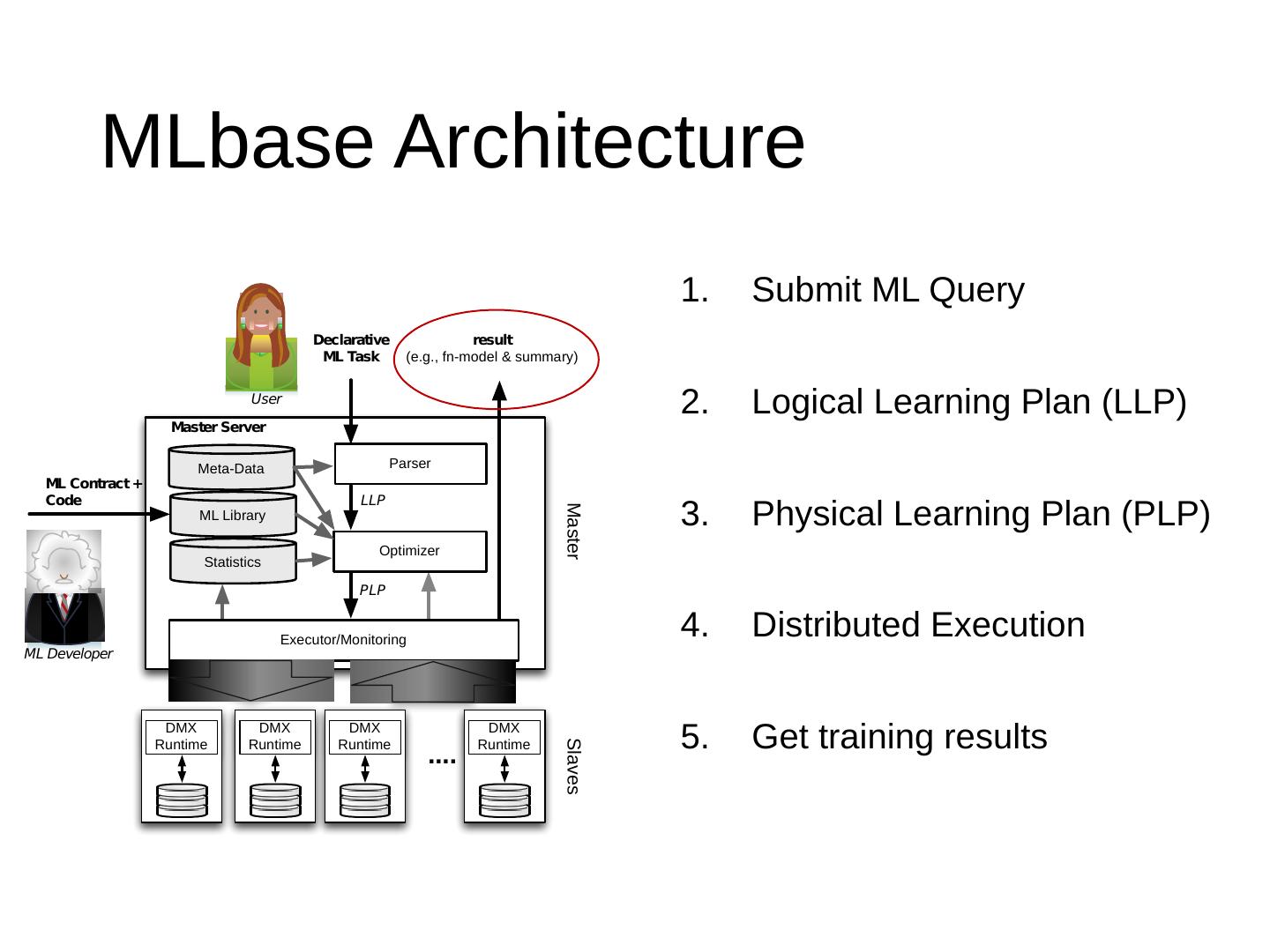

7-11. MLbase Architecture

- Submit ML query
- Logical Learning Plan (LLP)
- Physical Learning Plan (PLP)
- Distributed execution
- Get training results

12. Step 1: Translation

(1) MQL

    var X = load("als_clinical", 2 to 10)
    var y = load("als_clinical", 1)
    var (fn-model, summary) = doClassify(X, y)

(2) Generic Logical Plan

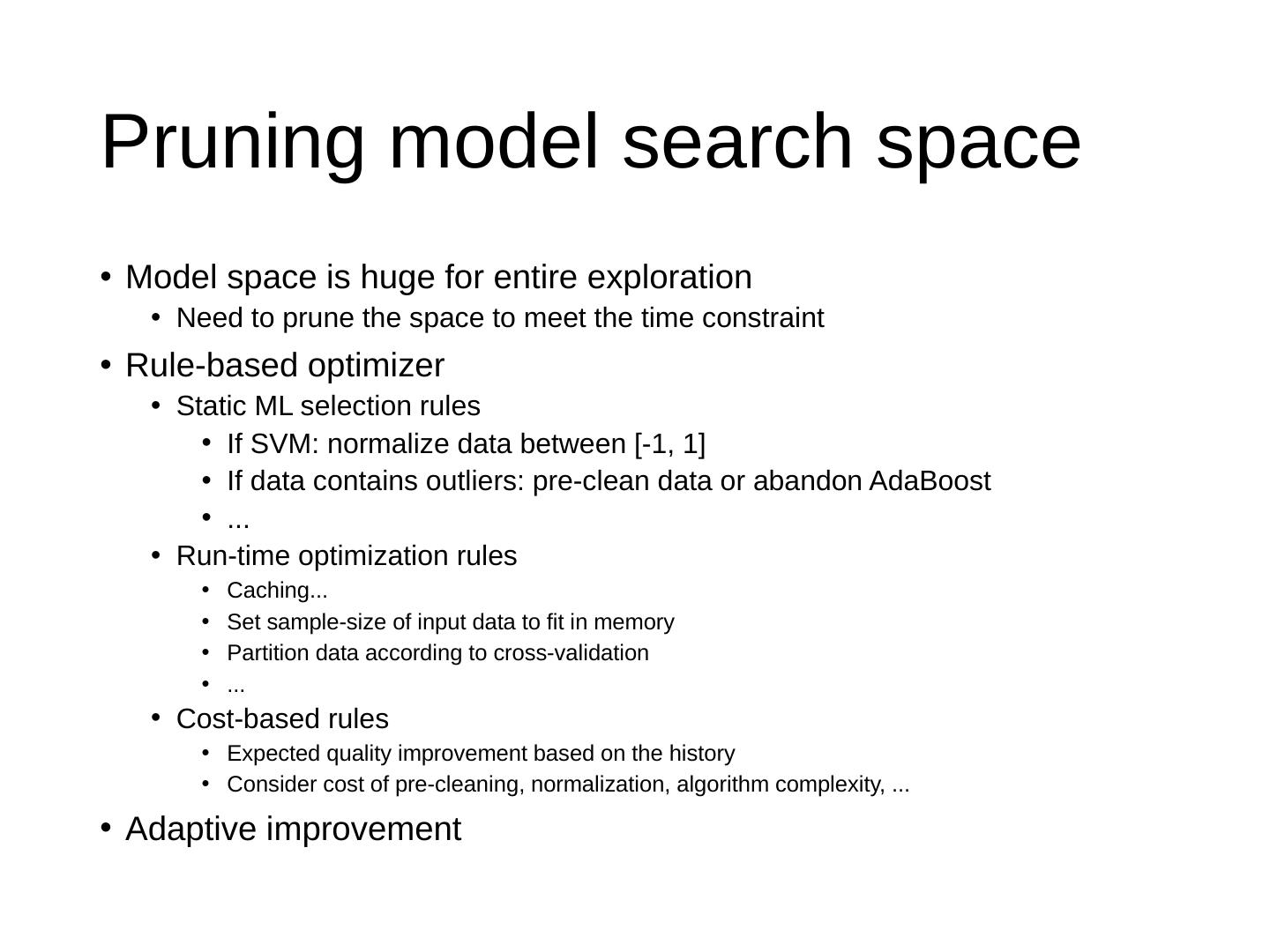

14. Pruning the model search space

- The model space is too large to explore exhaustively
- Need to prune the space to meet the time constraint
- Rule-based optimizer
  - Static ML selection rules
    - If SVM: normalize data to [-1, 1]
    - If data contains outliers: pre-clean data or abandon AdaBoost
    - ...
  - Run-time optimization rules
    - Caching ...
    - Set the sample size of the input data to fit in memory
    - Partition data according to cross-validation
    - ...
  - Cost-based rules
    - Expected quality improvement based on the history
    - Consider the cost of pre-cleaning, normalization, algorithm complexity, ...
- Adaptive improvement
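
The static and run-time rules above can be sketched as a small plan generator. This is an illustrative Python sketch, not the MLbase optimizer: `prune_plans`, `normalize`, and the step names are hypothetical, and only the rules named on the slide are encoded.

```python
def normalize(column):
    """Rescale a list of numbers linearly into [-1, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [2 * (v - lo) / (hi - lo) - 1 for v in column]

def prune_plans(algorithms, has_outliers, n_rows, rows_in_memory):
    """Apply the slide's rules and return the surviving plans as step lists."""
    plans = []
    for algo in algorithms:
        # Static rule: abandon AdaBoost when the data contains outliers.
        if algo == "AdaBoost" and has_outliers:
            continue
        steps = []
        # Static rule: SVM input is normalized to [-1, 1].
        if algo == "SVM":
            steps.append("normalize[-1,1]")
        # Run-time rule: cap the sample size so the input fits in memory.
        steps.append(f"sample({min(n_rows, rows_in_memory)})")
        steps.append(f"train({algo})")
        plans.append(steps)
    return plans
```

For example, `prune_plans(["SVM", "AdaBoost"], True, 1000, 200)` keeps only the SVM plan, with a normalization step and a 200-row sample placed before training.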

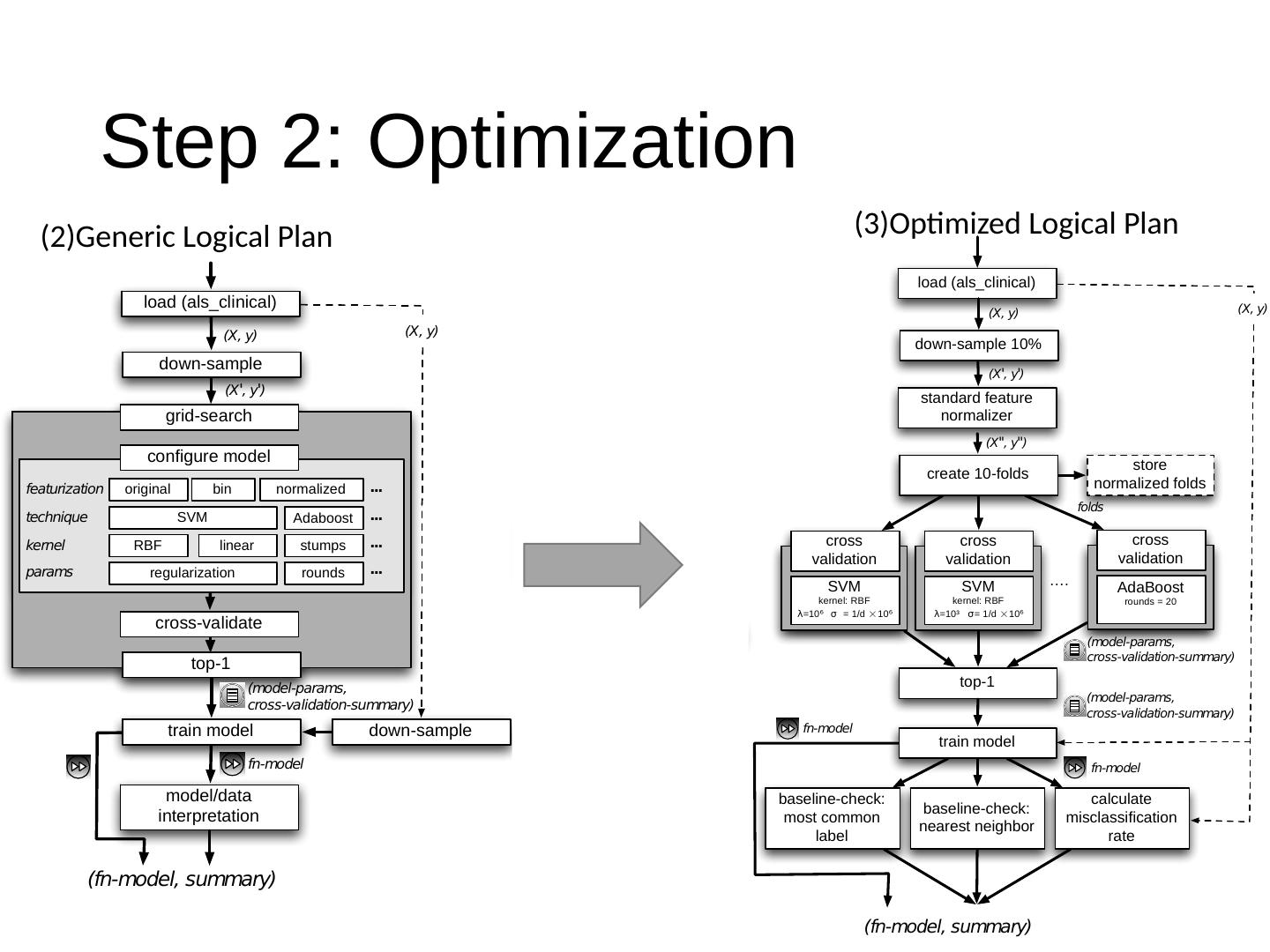

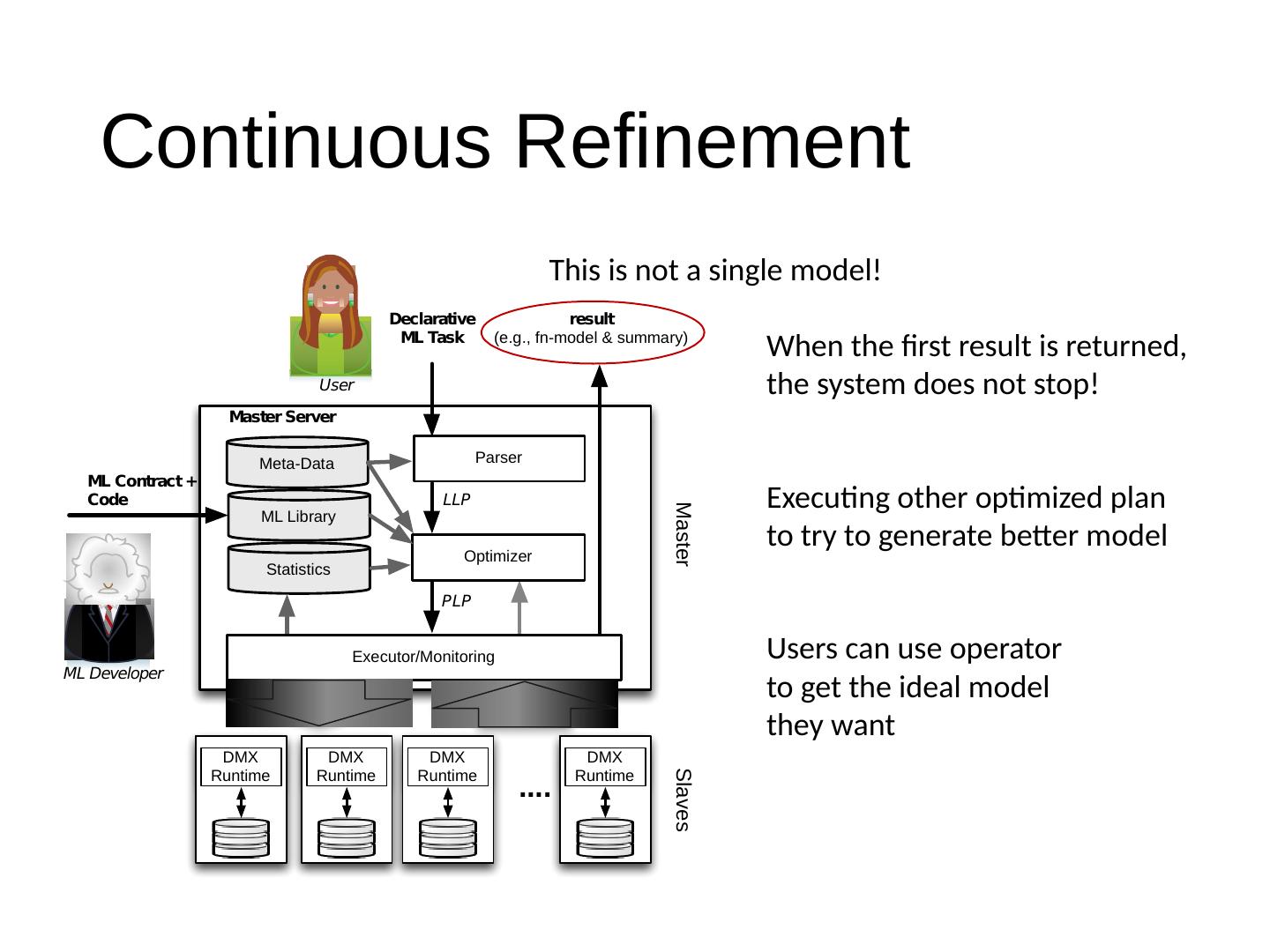

15. Step 2: Optimization

(2) Generic Logical Plan → (3) Optimized Logical Plan

16. Adaptive Optimizer

The optimizer also receives feedback from the monitor to improve its estimates.
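
One simple way to picture this feedback loop is a running average of observed quality per algorithm. The Python sketch below is an assumption for illustration only: `QualityEstimator`, its prior, and the averaging scheme are hypothetical and are not taken from the MLbase design.

```python
class QualityEstimator:
    """Keeps a running mean of the accuracy reported for each algorithm."""

    def __init__(self, prior=0.5):
        self.prior = prior   # estimate used before any feedback arrives
        self.totals = {}
        self.counts = {}

    def record(self, algo, observed_accuracy):
        """Feedback from the monitor: one finished run and its accuracy."""
        self.totals[algo] = self.totals.get(algo, 0.0) + observed_accuracy
        self.counts[algo] = self.counts.get(algo, 0) + 1

    def estimate(self, algo):
        """Expected quality: the mean of past runs, or the prior if none."""
        if algo not in self.counts:
            return self.prior
        return self.totals[algo] / self.counts[algo]

    def rank(self, algos):
        """Order candidate algorithms from most to least promising."""
        return sorted(algos, key=self.estimate, reverse=True)
```

Each call to `record` shifts the estimate for that algorithm, so later calls to `rank` reflect what earlier executions actually achieved.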

17. Optimizer Examples

- Use 6 datasets from the LIBSVM website
- Compare performance across different parameter combinations of SVM and AdaBoost
- Parameter settings
  - Number of rounds in AdaBoost
  - Regularization and RBF scale for SVM
  - d is the number of features in the dataset

18. Accuracy comparison (1)

*Other parameters are tuned for each dataset

19. Accuracy comparison (2)

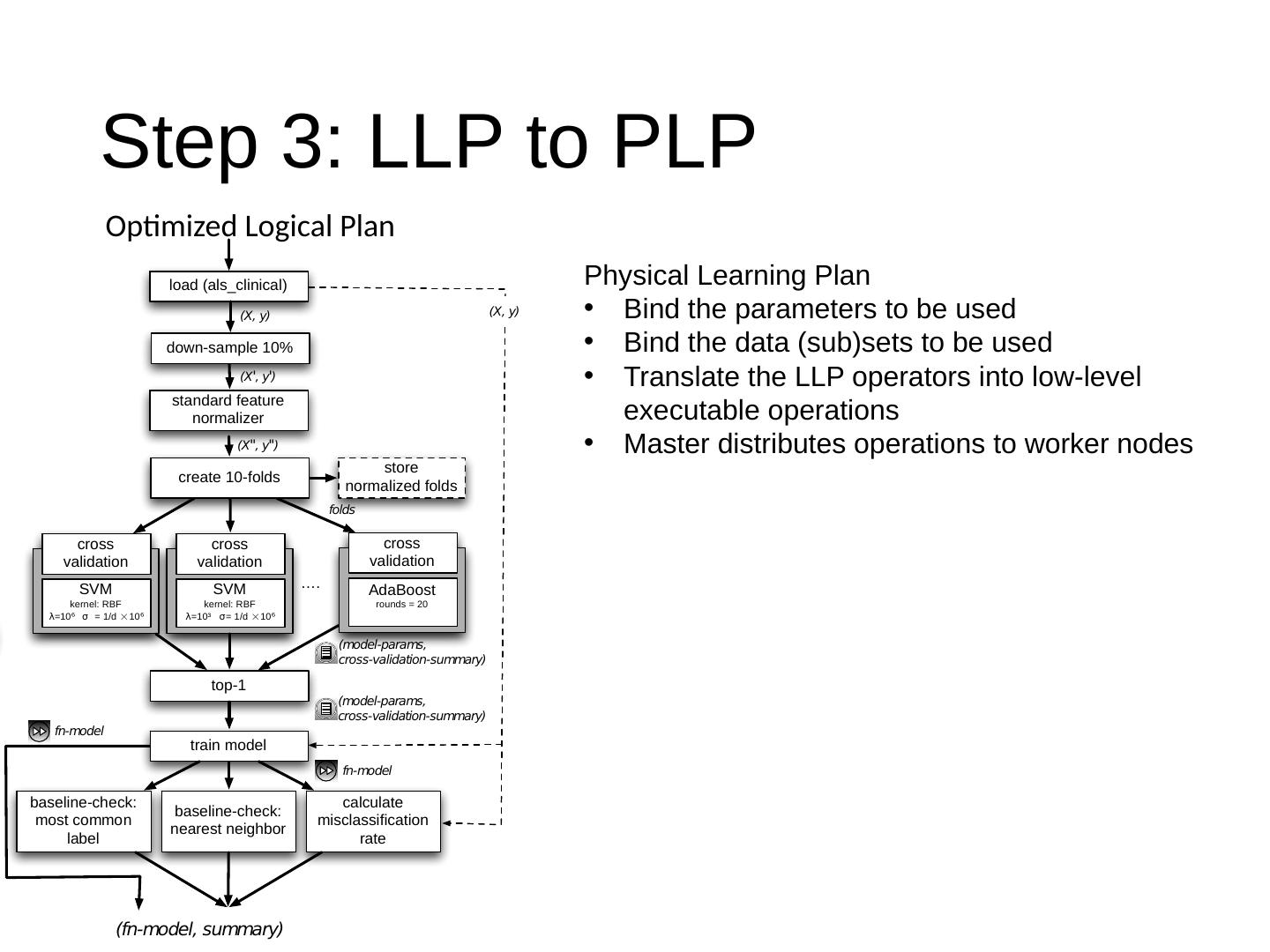

20. Step 3: LLP to PLP

Optimized Logical Plan → Physical Learning Plan

- Bind the parameters to be used
- Bind the data (sub)sets to be used
- Translate the LLP operators into low-level executable operations
- The master distributes operations to worker nodes
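
The binding step can be illustrated with a toy translation from logical operators to per-worker tasks. This Python sketch is hypothetical throughout: the operator dictionaries, `to_physical_plan`, and the round-robin partitioning are assumptions chosen for brevity, not MLbase's actual plan format.

```python
def to_physical_plan(logical_plan, params, data, n_workers):
    """Bind parameters and data partitions to every logical operator.

    logical_plan: list of {"name": str, "needs": [parameter names]}
    params:       chosen parameter values, keyed by name
    data:         list of rows, split round-robin across workers
    """
    partitions = [data[i::n_workers] for i in range(n_workers)]
    tasks = []
    for op in logical_plan:
        # Bind only the parameters this operator declares it needs.
        bound = {name: params[name] for name in op["needs"]}
        # One executable task per worker, each with its own data subset.
        for worker, rows in enumerate(partitions):
            tasks.append({"worker": worker, "op": op["name"],
                          "params": bound, "rows": rows})
    return tasks
```

The returned task list is what a master node could hand out: each entry names the worker, the operation, the bound parameters, and the rows it operates on.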

21. MLbase Runtime

- Some algorithms (e.g. gradient descent) can tolerate stale gradient updates
- This gives the system freedom to use asynchronous techniques
- Example: the alternating least squares algorithm
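
The tolerance for stale updates can be seen in a single-process simulation. The Python sketch below uses plain gradient descent rather than alternating least squares, and `stale_gradient_descent` with its fixed delay is a hypothetical model of asynchrony: each step applies a gradient computed from parameters that are `staleness` steps old.

```python
from collections import deque

def stale_gradient_descent(grad, w0, lr, staleness, steps):
    """Gradient descent where each gradient is evaluated on an old parameter.

    history holds the last staleness + 1 parameter values; its leftmost
    entry is the value from `staleness` steps ago.
    """
    history = deque([w0] * (staleness + 1), maxlen=staleness + 1)
    w = w0
    for _ in range(steps):
        stale_w = history[0]            # parameter seen by a lagging worker
        w = w - lr * grad(stale_w)      # update the current parameter
        history.append(w)               # oldest value drops off the left
    return w

def quadratic_grad(w):
    """Gradient of f(w) = (w - 3)^2, which is minimized at w = 3."""
    return 2 * (w - 3)
```

With a small enough learning rate, for instance `stale_gradient_descent(quadratic_grad, 0.0, 0.05, 3, 200)`, the iterate still approaches the minimizer at 3 despite the three-step delay. A larger delay or step size can make the same loop diverge, so the freedom to run asynchronously depends on the algorithm and its settings.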

22. Continuous Refinement

- The result is not a single model!
- When the first result is returned, the system does not stop!
- It executes other optimized plans to try to generate a better model
- Users can use an operator to get the model they want

23. Stream Data Model

Plain query:

    var X = load("als_clinical", 2 to 10)
    var y = load("als_clinical", 1)
    var (fn-model, summary) = doClassify(X, y)

Query with `top`:

    var X = load("als_clinical", 2 to 10)
    var y = load("als_clinical", 1)
    var (fn-model, summary) = top(doClassify(X, y), 10min)

- The query produces a stream of models: model1, model2, model3, ...
- `top` selects model2, which is what you get in the end as `fn-model`
- But MLbase does not stop here!
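
The behaviour of `top(doClassify(X, y), 10min)` can be approximated as a time-budgeted search that keeps the best model seen so far. The Python sketch below is an assumption about the semantics, not MLbase's implementation: this `top`, its `evaluate` callback, and the injectable `clock` are hypothetical names.

```python
import time

def top(candidates, evaluate, budget_seconds, clock=time.monotonic):
    """Return the best (name, score) found before the time budget runs out.

    candidates: iterable of model names, in the order they become available
    evaluate:   function mapping a model name to its quality score
    clock:      time source in seconds, replaceable for testing
    """
    deadline = clock() + budget_seconds
    best_name, best_score = None, float("-inf")
    for name in candidates:
        if clock() >= deadline:
            break                       # budget exhausted: stop considering models
        score = evaluate(name)
        if score > best_score:
            best_name, best_score = name, score
    return best_name, best_score
```

A model that would have scored higher but arrives after the deadline is not returned, which matches the slide's picture of `top` yielding model2 even though model3 exists in the stream.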

24. From the “summary”

25. ML Algorithm Extension

26-27. Q: What is missing in this system?

- The system is unaware of the original dataset
  - Data cleaning
  - Feature engineering

28. Thanks!