Naïve Bayes Classifiers

1. Naïve Bayes Classifiers

Jonathan Lee and Varun Mahadevan

2. Independence

Recap. Definition: Two events X and Y are independent if and only if P(XY) = P(X)P(Y).

Equivalently, if P(Y) > 0, then P(X|Y) = P(X).

3. Conditional Independence

Definition: Two events A and B are conditionally independent given C if and only if P(AB|C) = P(A|C)P(B|C).

Equivalently, if P(B) > 0 and P(C) > 0, then P(A|BC) = P(A|C).

4. Example: Randomly choose a day of the week

- A = { It is not a Monday }
- B = { It is a Saturday }
- C = { It is the weekend }

A and B are dependent events: P(A) = 6/7, P(B) = 1/7, P(AB) = 1/7, so P(AB) ≠ P(A)P(B) = 6/49.

Now condition both A and B on C: P(A|C) = 1, P(B|C) = ½, P(AB|C) = ½.

P(AB|C) = P(A|C)P(B|C), so A and B are conditionally independent given C.
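The numbers in this example can be checked by brute-force counting over the seven days. The following is only a sketch: the helper `prob` and the day abbreviations are names chosen for illustration, and each day is assumed equally likely.

```python
from fractions import Fraction

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]

A = {d for d in DAYS if d != "Mon"}  # it is not a Monday
B = {"Sat"}                          # it is a Saturday
C = {"Sat", "Sun"}                   # it is the weekend


def prob(event, given=None):
    """P(event | given) when every day is equally likely (hypothetical helper)."""
    space = set(DAYS) if given is None else set(given)
    return Fraction(len(set(event) & space), len(space))


# Unconditionally, A and B are dependent.
print(prob(A & B) == prob(A) * prob(B))           # False

# Conditioned on C, the product rule holds.
print(prob(A & B, C) == prob(A, C) * prob(B, C))  # True
```

Using `Fraction` keeps the comparison exact, so the equality tests are not affected by floating point rounding.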

5. Conditional Independence

Conditional independence does not imply independence.

Suppose X and Y are conditionally independent given Z, and X and Y are conditionally independent given Z^C. Are X and Y independent?

6. Conditional Independence

Conditional independence does not imply independence.

We have two coins, one fair and one two-headed. We pick one of the coins at random, each with probability ½, and flip it twice.

- Let X be the event that the first flip is a Head.
- Let Y be the event that the second flip is a Head.
- Let Z be the event that we choose the fair coin.

7. Conditional Independence

Conditional independence does not imply independence.

P(X|Z) = ½, P(Y|Z) = ½, P(XY|Z) = ¼

Since P(X|Z)P(Y|Z) = P(XY|Z), X and Y are conditionally independent given Z.

You can convince yourself that X and Y are conditionally independent given Z^C using a similar argument.

8. Conditional Independence

Conditional independence does not imply independence.

P(X) = ¾ and P(Y) = ¾

P(XY) = P(XY|Z)P(Z) + P(XY|Z^C)P(Z^C) = ¼ × ½ + 1 × ½ = 5/8

P(X)P(Y) = 9/16 ≠ 5/8 = P(XY), so X and Y are not independent.
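The coin calculation can be reproduced with exact fractions by applying the Law of Total Probability over the choice of coin. This is a small sketch; the dictionary names `p_coin` and `p_heads` are made up for illustration, and each coin is assumed to be chosen with probability ½, as in the calculation above.

```python
from fractions import Fraction

half = Fraction(1, 2)

# P(coin chosen) and P(Head on one flip | coin); names are illustrative.
p_coin = {"fair": half, "two_headed": half}
p_heads = {"fair": half, "two_headed": Fraction(1)}

# Law of Total Probability over which coin was chosen.
p_x = sum(p_coin[c] * p_heads[c] for c in p_coin)        # first flip is a Head
p_y = p_x                                                # second flip, same reasoning
# Given the coin, the two flips are independent, so P(XY | coin) = P(Head | coin)^2.
p_xy = sum(p_coin[c] * p_heads[c] ** 2 for c in p_coin)

print(p_x, p_y, p_xy)     # 3/4 3/4 5/8
print(p_xy == p_x * p_y)  # False
```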

9. Programming Project: Spam Filter

- Implement a Naïve Bayes classifier for classifying emails as either spam or ham (= non-spam).
- You may use Java or Python 3. We’ve provided starter code in both.
- Read Jonathan Lee’s notes on the course web, start early, and ask for help if you get stuck!

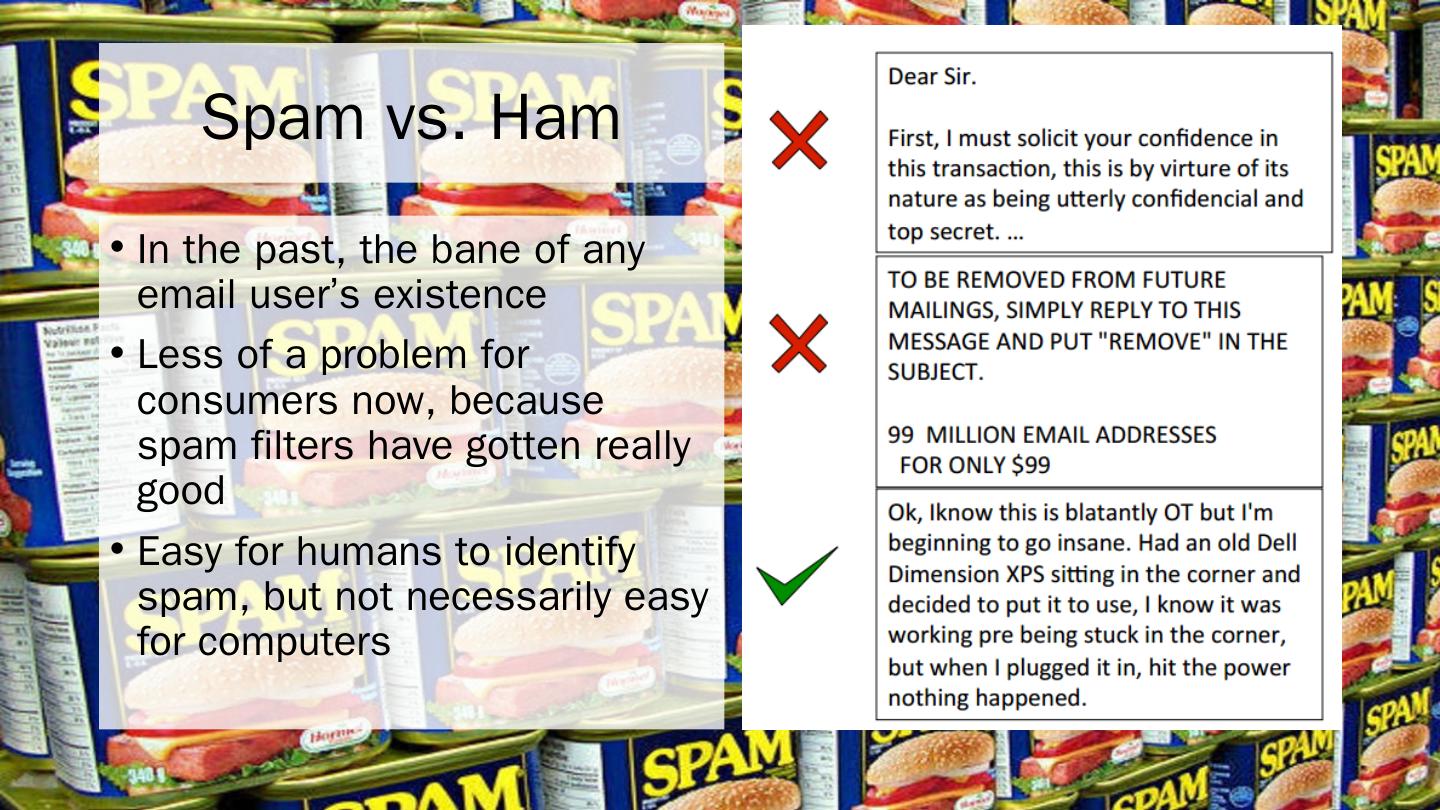

10. Spam vs. Ham

- In the past, the bane of any email user’s existence
- Less of a problem for consumers now, because spam filters have gotten really good
- Easy for humans to identify spam, but not necessarily easy for computers

11. The spam classification problem

- Input: collection of emails, already labeled spam or ham
  - Someone has to label these by hand
  - Called the training data
- Use this data to train a model that “understands” what makes an email spam or ham
  - We’re using a Naïve Bayes classifier, but there are other approaches
  - This is a Machine Learning problem (take CSE 446 for more)
- Test your model on emails whose label isn’t provided, and see how well it does
  - Called the test data

12. Naïve Bayes in the real world

- One of the oldest, simplest methods for classification
- Powerful and still used in the real world/industry
  - Identifying credit card fraud
  - Identifying fake Amazon reviews
  - Identifying vandalism on Wikipedia
  - Still used (with modifications) by Gmail to prevent spam
  - Facial recognition
  - Categorizing Google News articles
  - Even used for medical diagnosis!

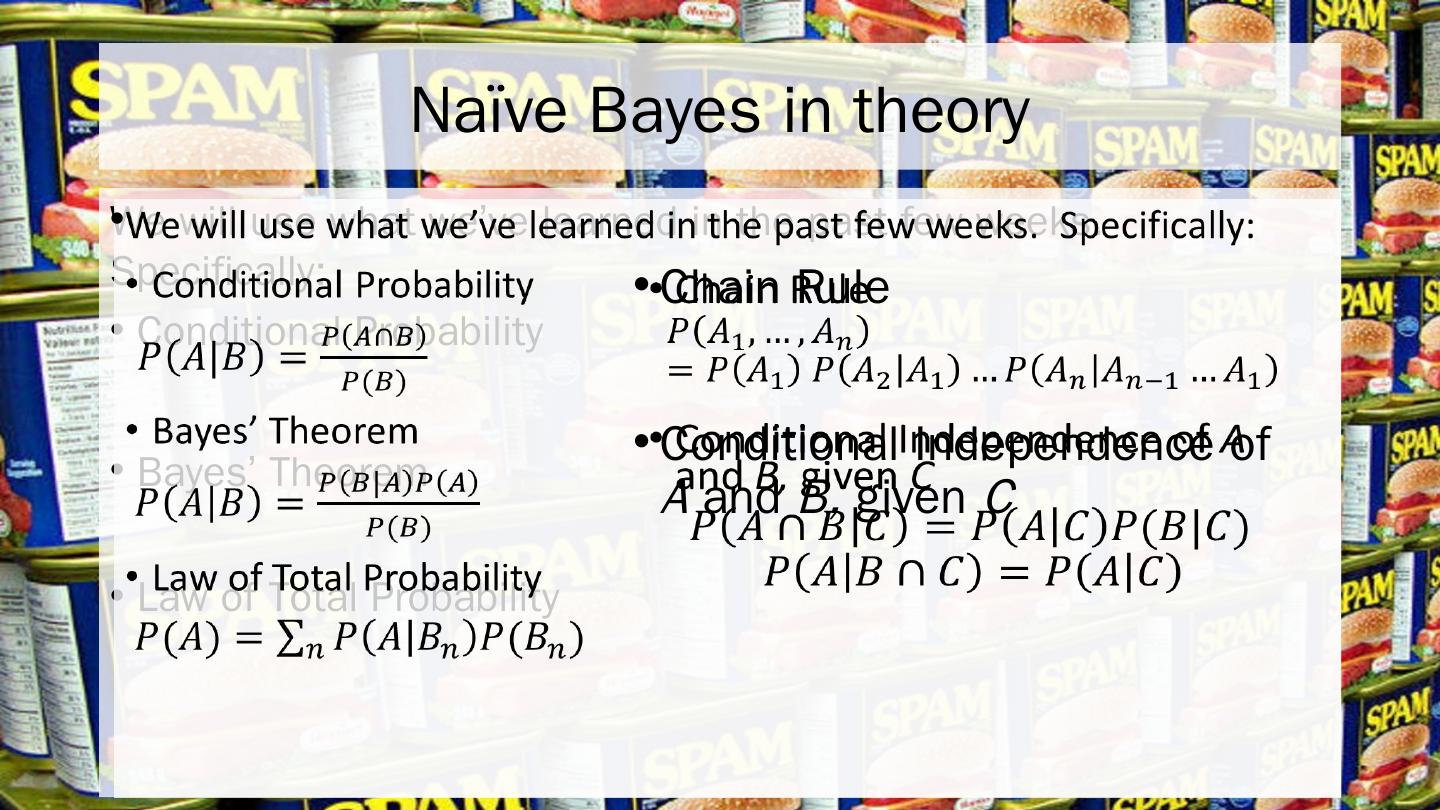

13. Naïve Bayes in theory

We will use what we’ve learned in the past few weeks. Specifically:

- Conditional Probability
- Bayes’ Theorem
- Law of Total Probability
- Chain Rule
- Conditional Independence of A and B, given C

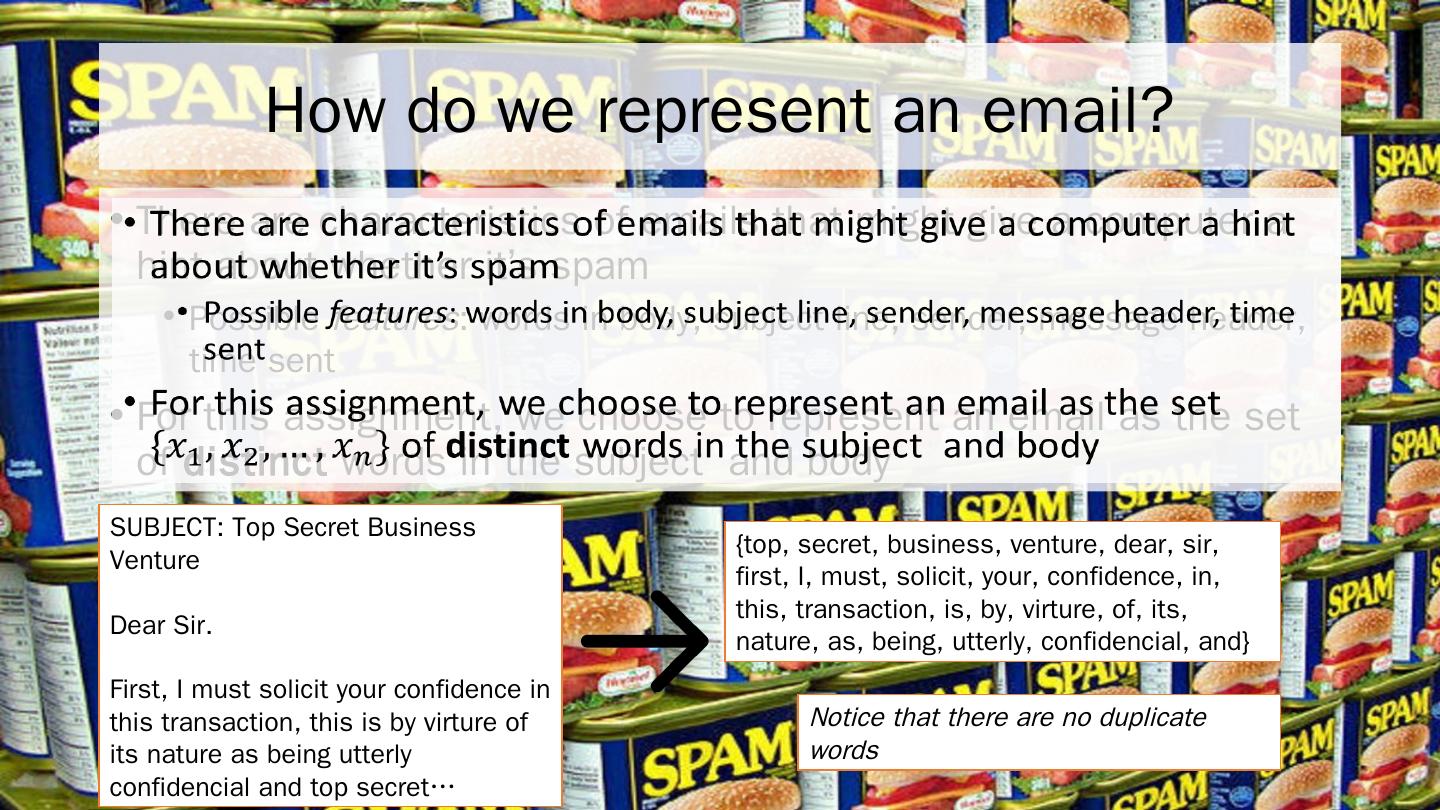

14. How do we represent an email?

SUBJECT: Top Secret Business Venture

Dear Sir. First, I must solicit your confidence in this transaction, this is by virture of its nature as being utterly confidencial and top secret…

{top, secret, business, venture, dear, sir, first, I, must, solicit, your, confidence, in, this, transaction, is, by, virture, of, its, nature, as, being, utterly, confidencial, and}

- There are characteristics of emails that might give a computer a hint about whether it’s spam
  - Possible features: words in body, subject line, sender, message header, time sent
- For this assignment, we choose to represent an email as the set of distinct words in the subject and body
- Notice that there are no duplicate words

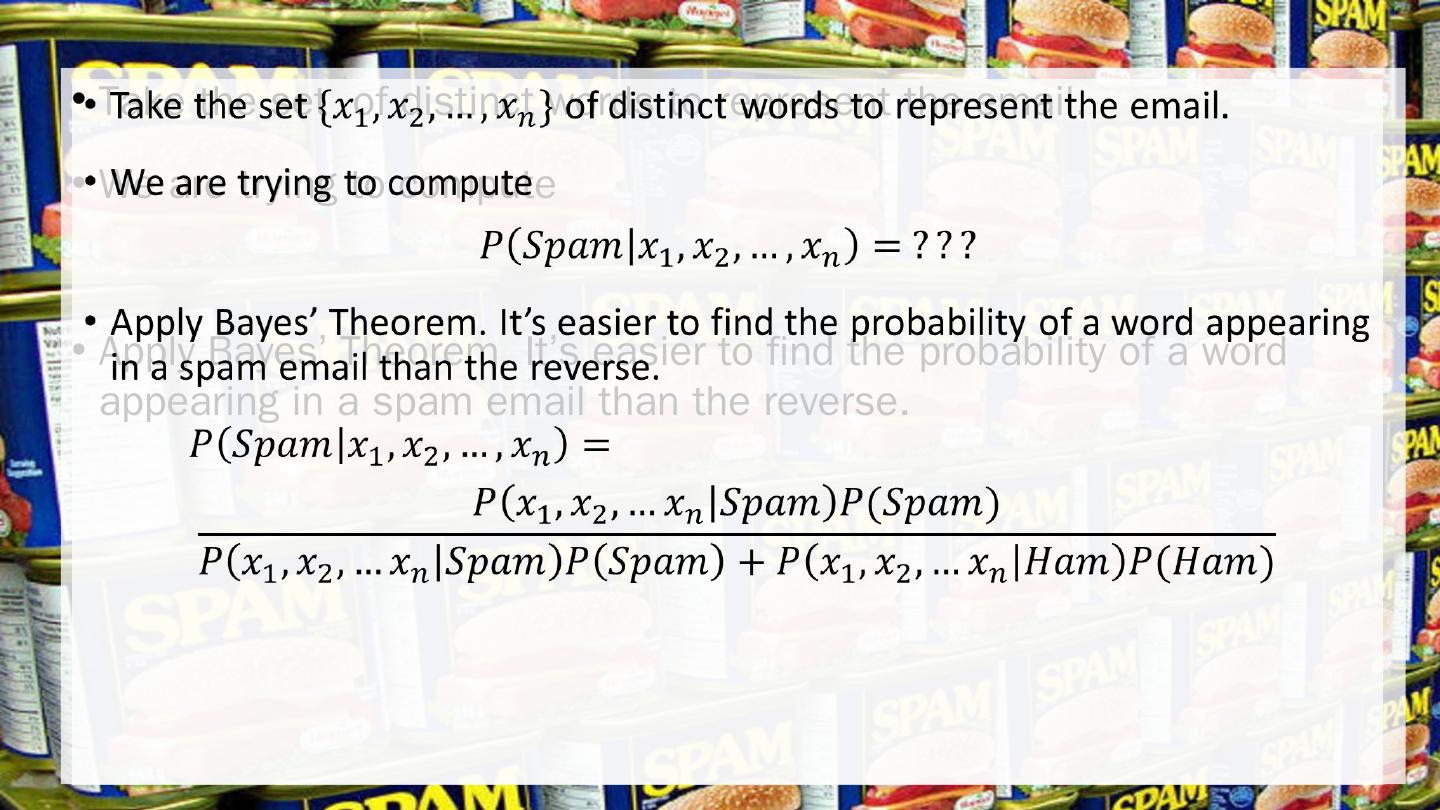
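As a rough illustration of the set-of-distinct-words representation, the sketch below turns a subject and body into a Python `set`. The function name `email_to_word_set`, the lowercasing, and the regular expression used to split words are all assumptions made for this example; the course starter code may tokenize differently.

```python
import re


def email_to_word_set(subject, body):
    """Return the set of distinct words in the subject and body.

    Assumption for this sketch: words are lowercased and consist of
    letters, digits, and apostrophes; everything else is a separator.
    """
    text = f"{subject} {body}".lower()
    return set(re.findall(r"[a-z0-9']+", text))


subject = "Top Secret Business Venture"
body = (
    "Dear Sir. First, I must solicit your confidence in this transaction, "
    "this is by virture of its nature as being utterly confidencial and top secret"
)

words = email_to_word_set(subject, body)
print(sorted(words))
```

Because the result is a set, a word such as "this" that occurs twice in the text is stored only once, which matches the "no duplicate words" observation.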

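15. Programming Project

Take the set of distinct words $\{x_1, x_2, \dots, x_n\}$ to represent the email. We are trying to compute $P(\text{Spam} \mid x_1, x_2, \dots, x_n)$.

Apply Bayes’ Theorem:

$$P(\text{Spam} \mid x_1, \dots, x_n) = \frac{P(x_1, \dots, x_n \mid \text{Spam})\,P(\text{Spam})}{P(x_1, \dots, x_n \mid \text{Spam})\,P(\text{Spam}) + P(x_1, \dots, x_n \mid \text{Ham})\,P(\text{Ham})}$$

It’s easier to find the probability of a word appearing in a spam email than the reverse.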

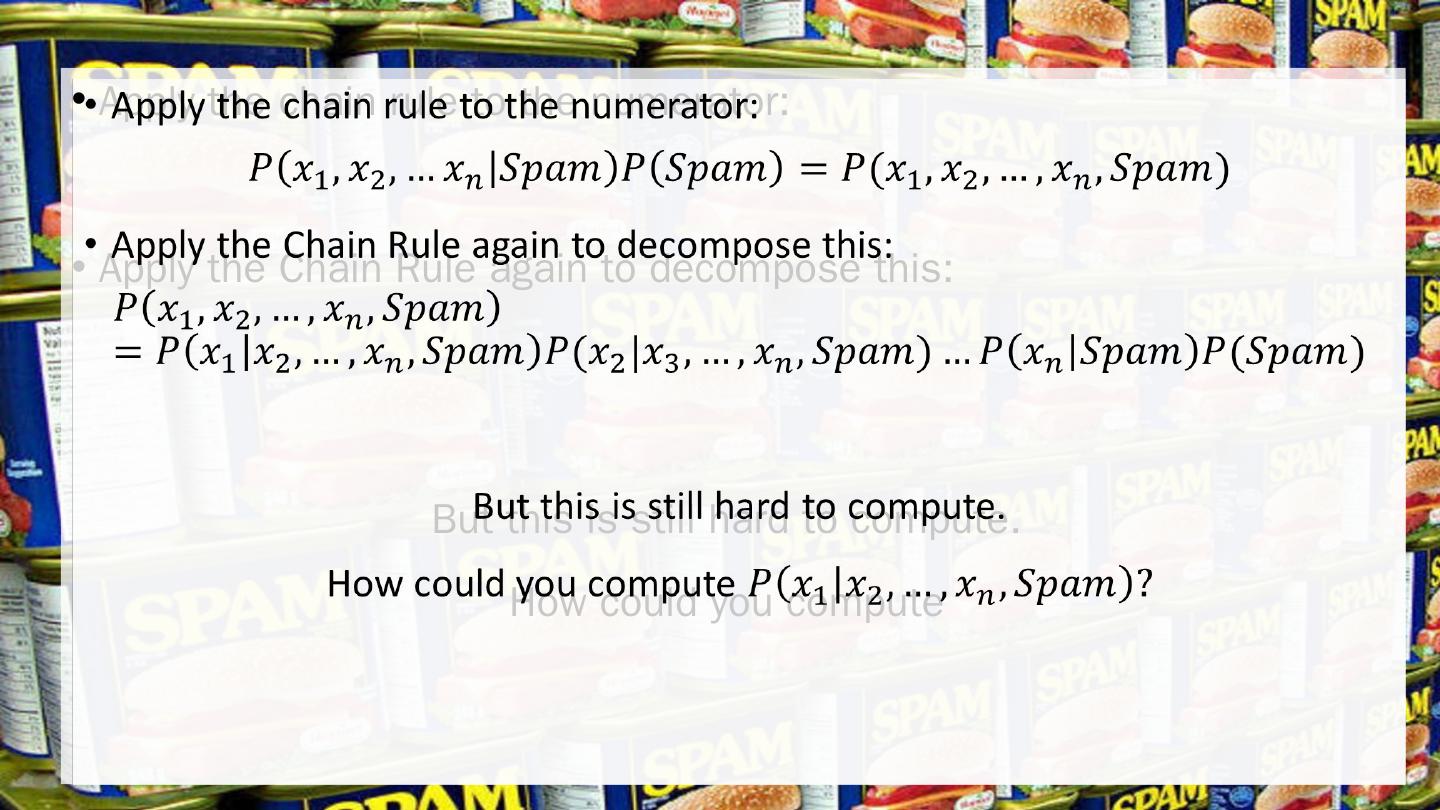

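16. Programming Project

Apply the Chain Rule to the numerator, for an email with words $x_1, \dots, x_n$:

$$P(x_1, \dots, x_n \mid \text{Spam})\,P(\text{Spam}) = P(x_1, \dots, x_n, \text{Spam})$$

Apply the Chain Rule again to decompose this:

$$P(x_1, \dots, x_n, \text{Spam}) = P(x_1 \mid x_2, \dots, x_n, \text{Spam})\,P(x_2 \mid x_3, \dots, x_n, \text{Spam}) \cdots P(x_n \mid \text{Spam})\,P(\text{Spam})$$

But this is still hard to compute. How could you compute $P(x_1 \mid x_2, \dots, x_n, \text{Spam})$?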

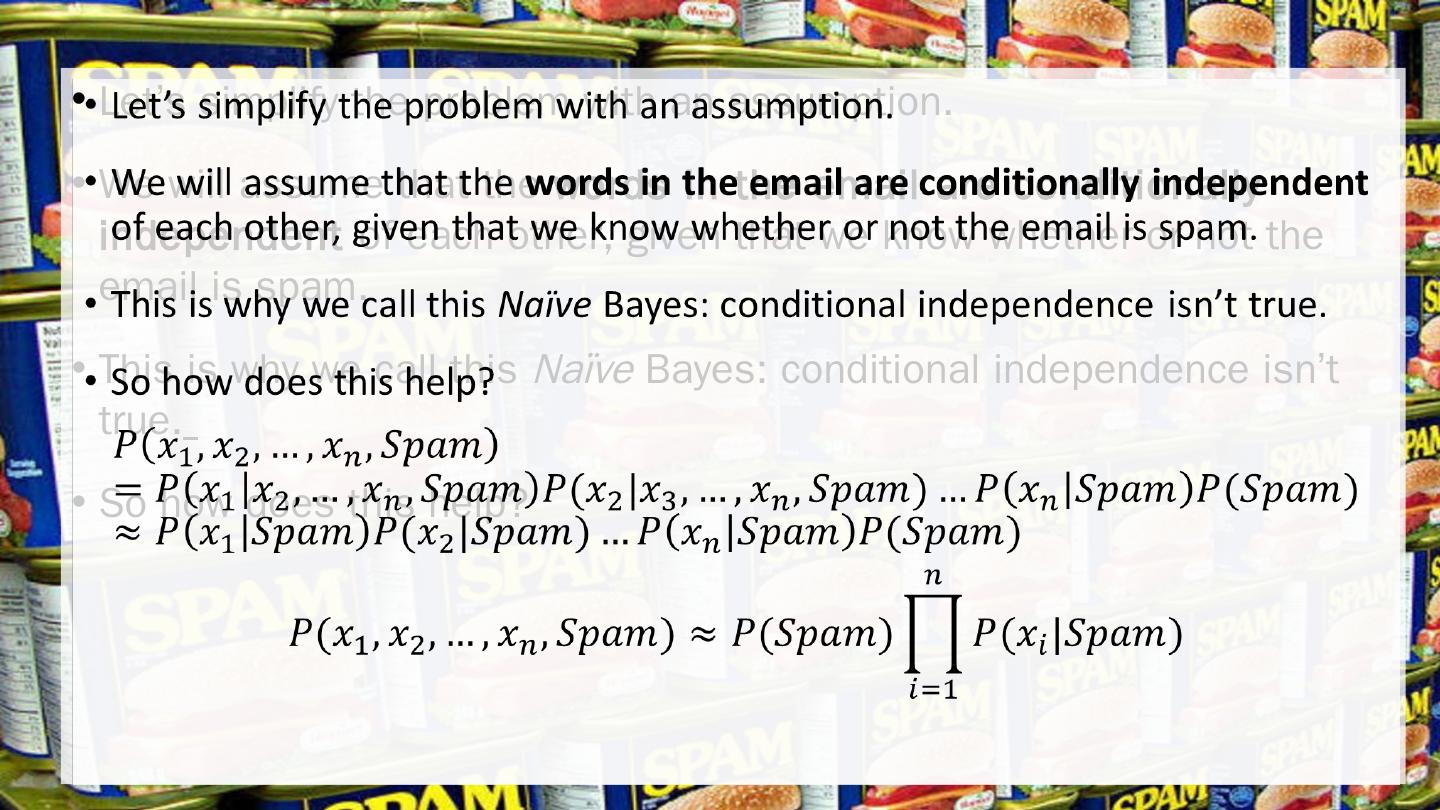

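17. Let’s simplify the problem with an assumption.

We will assume that the words in the email are conditionally independent of each other, given that we know whether or not the email is spam.

This is why we call this Naïve Bayes: the conditional independence assumption does not actually hold for real emails.

So how does this help? For an email with words $x_1, \dots, x_n$, every factor that conditions on the other words collapses to a single-word probability:

$$P(x_1, \dots, x_n, \text{Spam}) \approx P(\text{Spam}) \prod_{i=1}^{n} P(x_i \mid \text{Spam})$$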

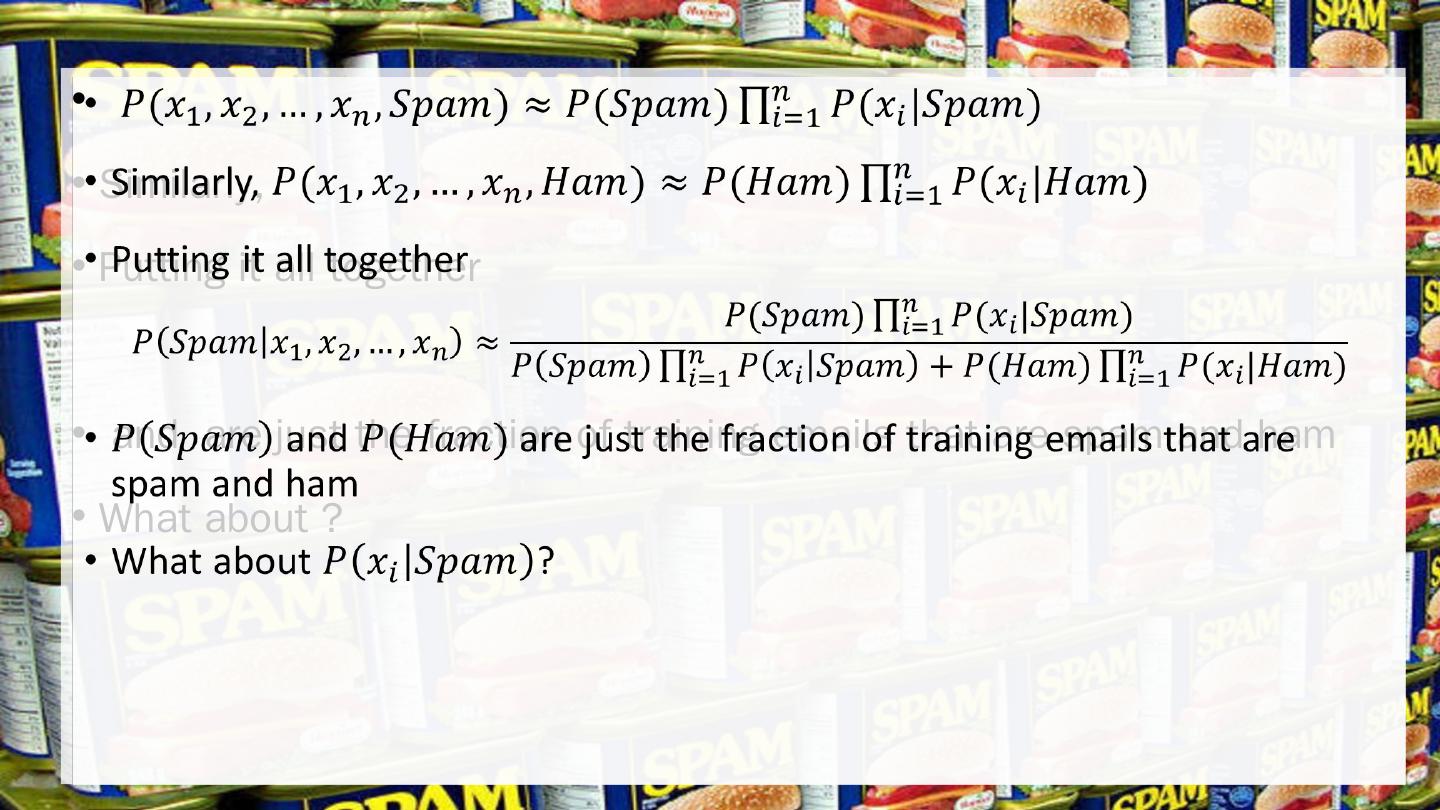

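18. Programming Project

Similarly, for an email with words $x_1, \dots, x_n$,

$$P(x_1, \dots, x_n, \text{Ham}) \approx P(\text{Ham}) \prod_{i=1}^{n} P(x_i \mid \text{Ham})$$

Putting it all together:

$$P(\text{Spam} \mid x_1, \dots, x_n) \approx \frac{P(\text{Spam}) \prod_{i=1}^{n} P(x_i \mid \text{Spam})}{P(\text{Spam}) \prod_{i=1}^{n} P(x_i \mid \text{Spam}) + P(\text{Ham}) \prod_{i=1}^{n} P(x_i \mid \text{Ham})}$$

$P(\text{Spam})$ and $P(\text{Ham})$ are just the fraction of training emails that are spam and ham.

What about $P(x_i \mid \text{Spam})$?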

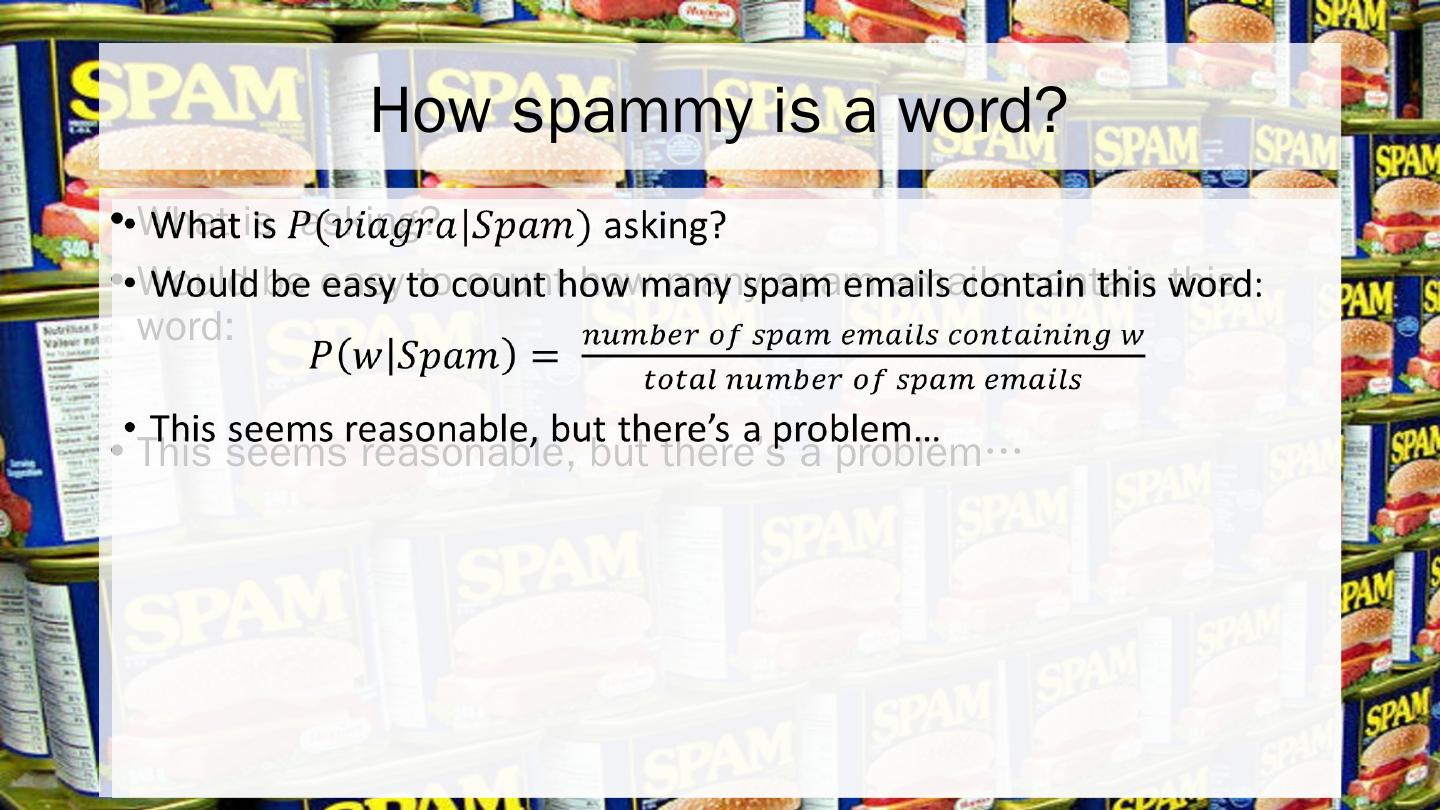
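To make the combined expression concrete, here is a minimal sketch that evaluates Bayes' Theorem with the conditional independence assumption applied: the prior times the product of per-word probabilities, normalized over spam and ham. The function name `spam_posterior` and all of the probabilities below are invented for illustration and do not come from any training data.

```python
from math import prod


def spam_posterior(words, p_spam, p_word_given_spam, p_word_given_ham):
    """Approximate P(Spam | words) under the Naive Bayes assumption."""
    p_ham = 1.0 - p_spam
    spam_score = p_spam * prod(p_word_given_spam[w] for w in words)
    ham_score = p_ham * prod(p_word_given_ham[w] for w in words)
    return spam_score / (spam_score + ham_score)


# Made-up numbers, purely to exercise the formula.
p_word_given_spam = {"cash": 0.5, "meeting": 0.1}
p_word_given_ham = {"cash": 0.05, "meeting": 0.4}

posterior = spam_posterior({"cash", "meeting"}, 0.4, p_word_given_spam, p_word_given_ham)
print(posterior)  # approximately 0.625
```

With these numbers the spam score is 0.4 × 0.5 × 0.1 = 0.02 and the ham score is 0.6 × 0.05 × 0.4 = 0.012, so the posterior is 0.02 / 0.032.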

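19. How spammy is a word?

What is $P(w \mid \text{Spam})$ asking? It is the probability that a spam email contains the word $w$.

It would be easy to count how many spam emails contain this word:

$$P(w \mid \text{Spam}) = \frac{\#\text{ spam emails containing } w}{\#\text{ spam emails}}$$

This seems reasonable, but there’s a problem…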

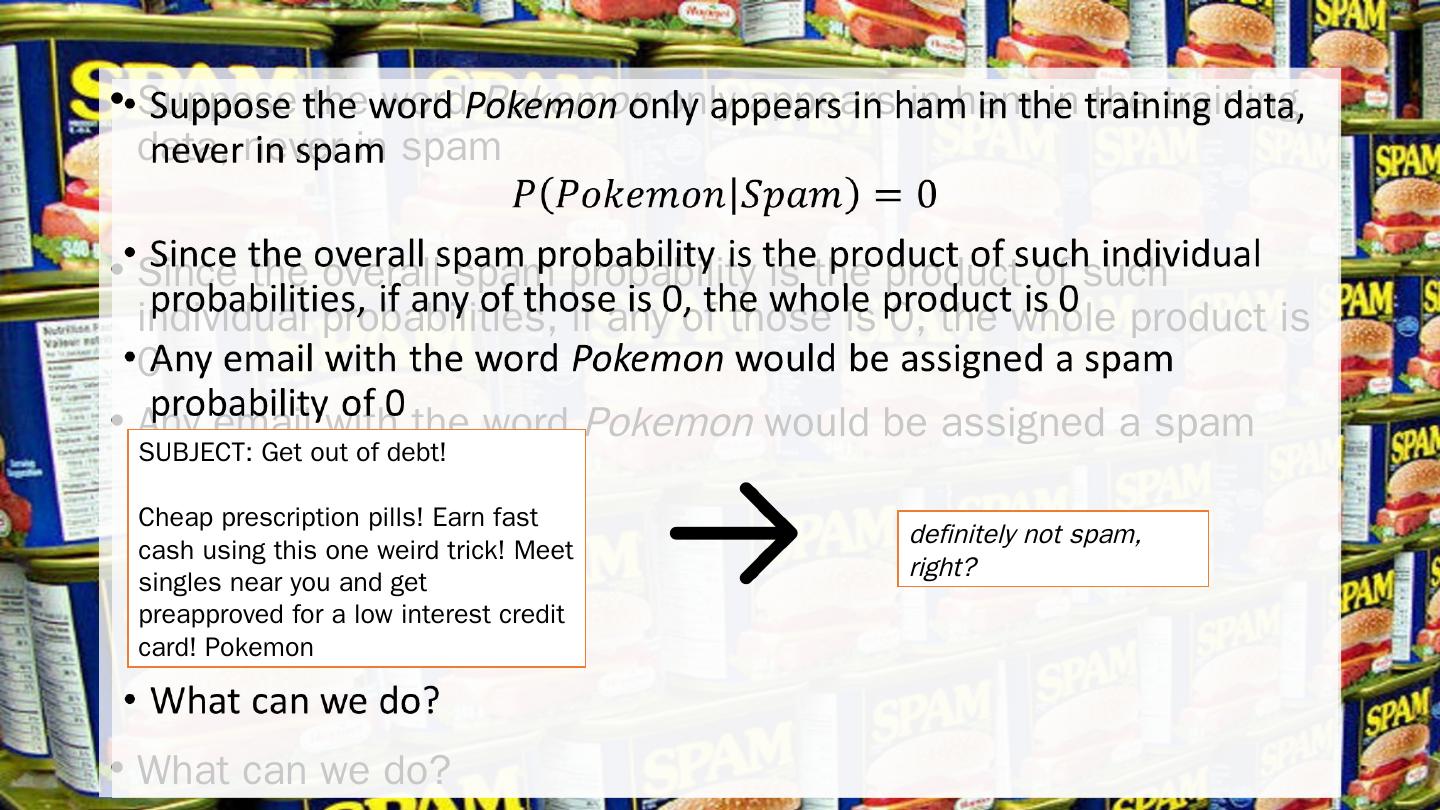

20. Suppose the word Pokemon only appears in ham in the training data, never in spam

- Since the overall spam probability is the product of such individual probabilities, if any of those is 0, the whole product is 0
- Any email with the word Pokemon would be assigned a spam probability of 0
- What can we do?

SUBJECT: Get out of debt! Cheap prescription pills! Earn fast cash using this one weird trick! Meet singles near you and get preapproved for a low interest credit card!

Pokemon definitely not spam, right?

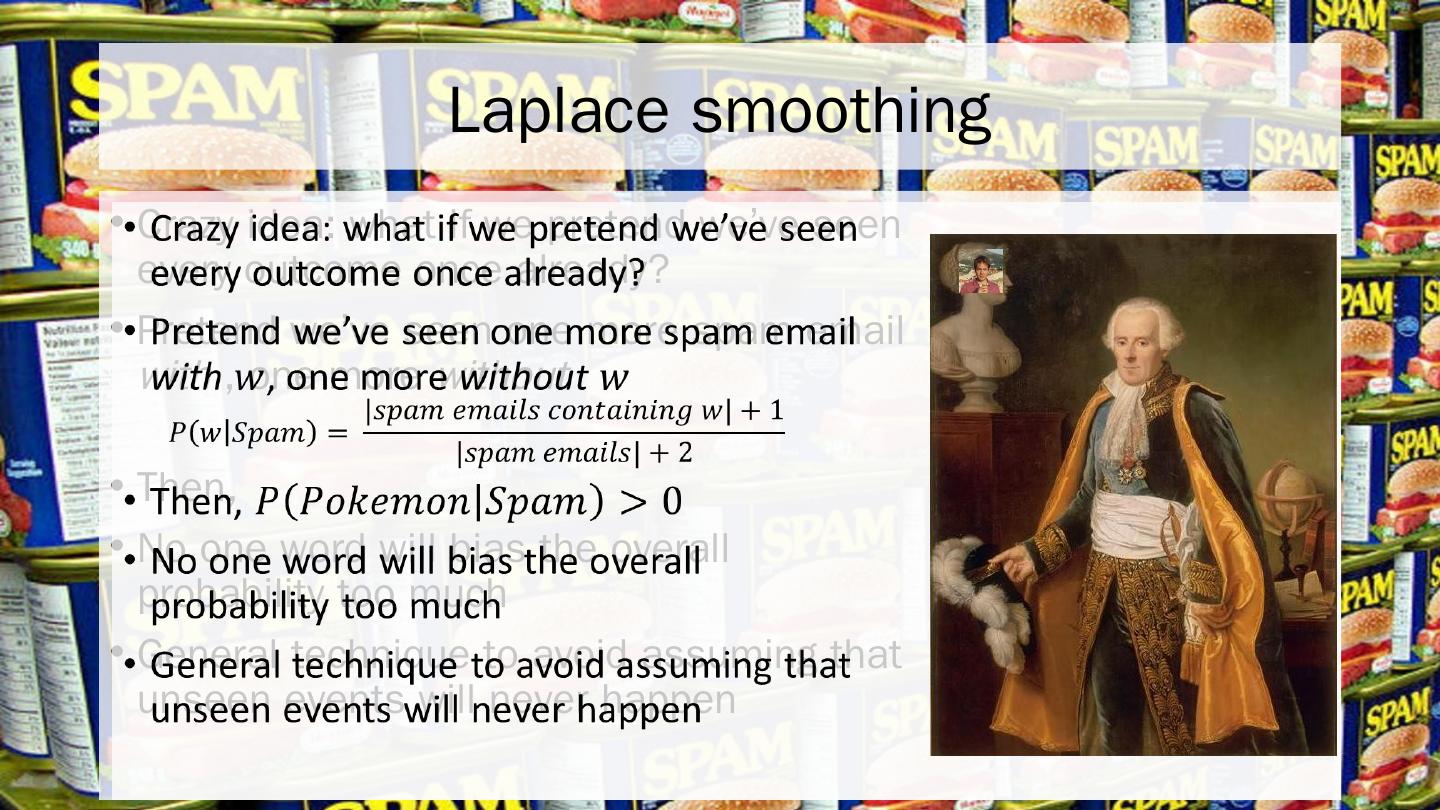

21. Laplace smoothing

- Crazy idea: what if we pretend we’ve seen every outcome once already?
- Pretend we’ve seen one more spam email with $w$, one more without. Then,

$$P(w \mid \text{Spam}) = \frac{(\#\text{ spam emails containing } w) + 1}{(\#\text{ spam emails}) + 2}$$

- No one word will bias the overall probability too much
- General technique to avoid assuming that unseen events will never happen

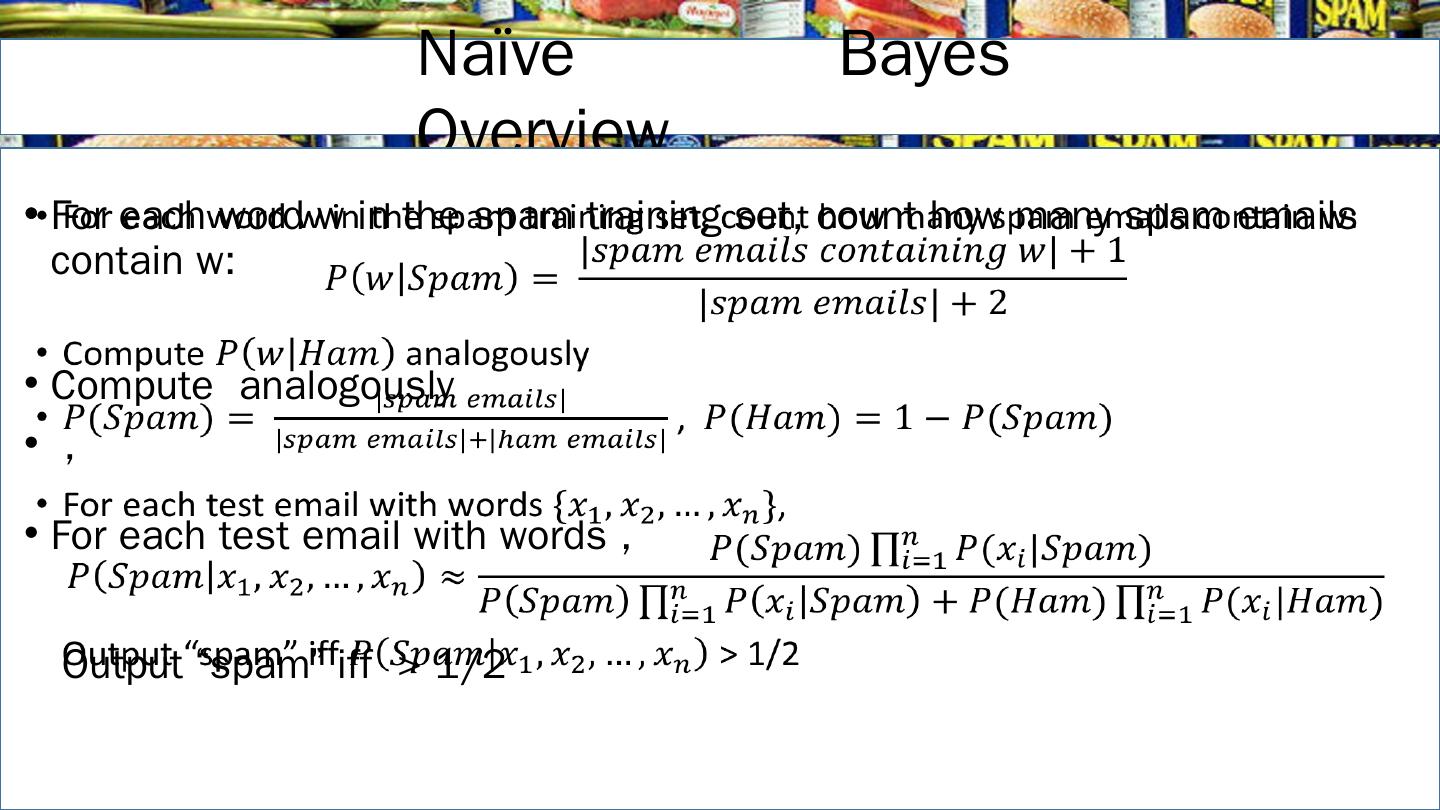
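The "one more with, one more without" idea amounts to adding 1 to the count and 2 to the total. A minimal sketch follows; the function name `smoothed_word_probability` and the counts are hypothetical.

```python
def smoothed_word_probability(emails_with_word, total_emails):
    """Laplace-smoothed estimate of P(word | class).

    Pretends we saw one extra email containing the word and one extra
    email without it, so the estimate is never exactly 0 or 1.
    """
    return (emails_with_word + 1) / (total_emails + 2)


# Hypothetical counts: "pokemon" appears in 0 of 100 spam emails.
unsmoothed = 0 / 100
smoothed = smoothed_word_probability(0, 100)
print(unsmoothed, smoothed)
```

Without smoothing the estimate is 0, which would force the whole product to 0; with smoothing it becomes 1/102, small but nonzero.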

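22. Naïve Bayes Overview

For each word $w$ in the spam training set, count how many spam emails contain $w$:

$$P(w \mid \text{Spam}) = \frac{(\#\text{ spam emails containing } w) + 1}{(\#\text{ spam emails}) + 2}$$

Compute $P(w \mid \text{Ham})$ analogously, and take $P(\text{Spam})$ and $P(\text{Ham})$ to be the fractions of training emails that are spam and ham.

For each test email with words $\{x_1, \dots, x_n\}$, compute

$$P(\text{Spam} \mid x_1, \dots, x_n) \approx \frac{P(\text{Spam}) \prod_{i=1}^{n} P(x_i \mid \text{Spam})}{P(\text{Spam}) \prod_{i=1}^{n} P(x_i \mid \text{Spam}) + P(\text{Ham}) \prod_{i=1}^{n} P(x_i \mid \text{Ham})}$$

Output “spam” iff $P(\text{Spam} \mid x_1, \dots, x_n) > 1/2$.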
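The overview can be sketched end to end in a few lines of Python. Everything here is an illustration rather than the project's starter code: the function names `train`, `word_prob`, and `classify`, the dictionary layout of the model, and the toy emails are all assumptions. Words never seen in training are simply given the smoothed probability; the course notes may handle them differently.

```python
from collections import Counter
from math import prod


def train(spam_emails, ham_emails):
    """Count, for each word, how many emails of each class contain it.

    Each email is a set of distinct words, so a word is counted at most
    once per email.
    """
    return {
        "spam_counts": Counter(w for email in spam_emails for w in email),
        "ham_counts": Counter(w for email in ham_emails for w in email),
        "n_spam": len(spam_emails),
        "n_ham": len(ham_emails),
    }


def word_prob(model, word, label):
    """Laplace-smoothed P(word | label), where label is 'spam' or 'ham'."""
    return (model[f"{label}_counts"][word] + 1) / (model[f"n_{label}"] + 2)


def classify(model, email):
    """Return 'spam' iff the approximate P(Spam | words) exceeds 1/2."""
    n_total = model["n_spam"] + model["n_ham"]
    spam_score = model["n_spam"] / n_total * prod(
        word_prob(model, w, "spam") for w in email
    )
    ham_score = model["n_ham"] / n_total * prod(
        word_prob(model, w, "ham") for w in email
    )
    return "spam" if spam_score / (spam_score + ham_score) > 0.5 else "ham"


# Toy training data, invented for this example.
spam = [{"cheap", "pills"}, {"fast", "cash"}, {"cheap", "cash", "now"}]
ham = [{"lunch", "tomorrow"}, {"project", "meeting", "tomorrow"}]

model = train(spam, ham)
print(classify(model, {"cheap", "cash"}))        # spam
print(classify(model, {"meeting", "tomorrow"}))  # ham
```

This direct product is fine for a handful of words but can underflow on long emails, which is the issue the notes address.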

23. Read the Notes!

Before starting, read Jonathan Lee’s Naïve Bayes Notes on the course web for precise technical details.

The notes describe how to avoid floating point underflow in formulas such as $\prod_{i=1}^{n} P(x_i \mid \text{Spam})$.
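One common way to sidestep underflow, which may or may not match the approach in the notes, is to compare sums of logarithms instead of products. Since the posterior exceeds 1/2 exactly when the spam score exceeds the ham score, comparing the two log scores gives the same decision. The function name `is_spam_log_space` and the probabilities below are made up for illustration, and the per-word probabilities are assumed to be smoothed so that none is 0.

```python
import math


def is_spam_log_space(words, p_spam, p_word_given_spam, p_word_given_ham):
    """Decide spam vs. ham by comparing log scores instead of raw products."""
    log_spam = math.log(p_spam) + sum(math.log(p_word_given_spam[w]) for w in words)
    log_ham = math.log(1.0 - p_spam) + sum(math.log(p_word_given_ham[w]) for w in words)
    return log_spam > log_ham


# 200 hypothetical words, each fairly rare in spam and rarer still in ham.
words = [f"w{i}" for i in range(200)]
p_word_given_spam = {w: 0.01 for w in words}
p_word_given_ham = {w: 0.001 for w in words}

# The direct product underflows to exactly 0.0 in double precision...
direct_product = math.prod(p_word_given_spam[w] for w in words)
print(direct_product)

# ...while the log-space comparison still gives a usable answer.
print(is_spam_log_space(words, 0.5, p_word_given_spam, p_word_given_ham))
```

Here both direct products would be 0.0, so the ratio in the posterior formula would be 0/0; the log scores stay finite and can be compared safely.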