Deep contextualized word representations

Matthew E. Peters†, Mark Neumann†, Mohit Iyyer†, Matt Gardner† {matthewp,markn,mohiti,mattg}@allenai.org

Christopher Clark∗, Kenton Lee∗, Luke Zettlemoyer†∗ {csquared,kentonl,lsz}@cs.washington.edu

† Allen Institute for Artificial Intelligence

∗ Paul G. Allen School of Computer Science & Engineering, University of Washington

arXiv:1802.05365v2 [cs.CL] 22 Mar 2018

Abstract

We introduce a new type of deep contextualized word representation that models both (1) complex characteristics of word use (e.g., syntax and semantics), and (2) how these uses vary across linguistic contexts (i.e., to model polysemy). Our word vectors are learned functions of the internal states of a deep bidirectional language model (biLM), which is pretrained on a large text corpus. We show that these representations can be easily added to existing models and significantly improve the state of the art across six challenging NLP problems, including question answering, textual entailment and sentiment analysis. We also present an analysis showing that exposing the deep internals of the pre-trained network is crucial, allowing downstream models to mix different types of semi-supervision signals.

1 Introduction

Pre-trained word representations (Mikolov et al., 2013; Pennington et al., 2014) are a key component in many neural language understanding models. However, learning high quality representations can be challenging. They should ideally model both (1) complex characteristics of word use (e.g., syntax and semantics), and (2) how these uses vary across linguistic contexts (i.e., to model polysemy). In this paper, we introduce a new type of deep contextualized word representation that directly addresses both challenges, can be easily integrated into existing models, and significantly improves the state of the art in every considered case across a range of challenging language understanding problems.

Our representations differ from traditional word type embeddings in that each token is assigned a representation that is a function of the entire input sentence. We use vectors derived from a bidirectional LSTM that is trained with a coupled language model (LM) objective on a large text corpus. For this reason, we call them ELMo (Embeddings from Language Models) representations. Unlike previous approaches for learning contextualized word vectors (Peters et al., 2017; McCann et al., 2017), ELMo representations are deep, in the sense that they are a function of all of the internal layers of the biLM. More specifically, we learn a linear combination of the vectors stacked above each input word for each end task, which markedly improves performance over just using the top LSTM layer.

Combining the internal states in this manner allows for very rich word representations. Using intrinsic evaluations, we show that the higher-level LSTM states capture context-dependent aspects of word meaning (e.g., they can be used without modification to perform well on supervised word sense disambiguation tasks) while lower-level states model aspects of syntax (e.g., they can be used to do part-of-speech tagging). Simultaneously exposing all of these signals is highly beneficial, allowing the learned models to select the types of semi-supervision that are most useful for each end task.

Extensive experiments demonstrate that ELMo representations work extremely well in practice. We first show that they can be easily added to existing models for six diverse and challenging language understanding problems, including textual entailment, question answering and sentiment analysis. The addition of ELMo representations alone significantly improves the state of the art in every case, including up to 20% relative error reductions. For tasks where direct comparisons are possible, ELMo outperforms CoVe (McCann et al., 2017), which computes contextualized representations using a neural machine translation encoder. Finally, an analysis of both ELMo and CoVe reveals that deep representations outperform
those derived from just the top layer of an LSTM. Our trained models and code are publicly available, and we expect that ELMo will provide similar gains for many other NLP problems.¹

¹ http://allennlp.org/elmo

2 Related work

Due to their ability to capture syntactic and semantic information of words from large scale unlabeled text, pretrained word vectors (Turian et al., 2010; Mikolov et al., 2013; Pennington et al., 2014) are a standard component of most state-of-the-art NLP architectures, including for question answering (Liu et al., 2017), textual entailment (Chen et al., 2017) and semantic role labeling (He et al., 2017). However, these approaches for learning word vectors only allow a single context-independent representation for each word.

Previously proposed methods overcome some of the shortcomings of traditional word vectors by either enriching them with subword information (e.g., Wieting et al., 2016; Bojanowski et al., 2017) or learning separate vectors for each word sense (e.g., Neelakantan et al., 2014). Our approach also benefits from subword units through the use of character convolutions, and we seamlessly incorporate multi-sense information into downstream tasks without explicitly training to predict predefined sense classes.

Other recent work has also focused on learning context-dependent representations. context2vec (Melamud et al., 2016) uses a bidirectional Long Short Term Memory (LSTM; Hochreiter and Schmidhuber, 1997) to encode the context around a pivot word. Other approaches for learning contextual embeddings include the pivot word itself in the representation and are computed with the encoder of either a supervised neural machine translation (MT) system (CoVe; McCann et al., 2017) or an unsupervised language model (Peters et al., 2017). Both of these approaches benefit from large datasets, although the MT approach is limited by the size of parallel corpora. In this paper, we take full advantage of access to plentiful monolingual data, and train our biLM on a corpus with approximately 30 million sentences (Chelba et al., 2014). We also generalize these approaches to deep contextual representations, which we show work well across a broad range of diverse NLP tasks.

Previous work has also shown that different layers of deep biRNNs encode different types of information. For example, introducing multi-task syntactic supervision (e.g., part-of-speech tags) at the lower levels of a deep LSTM can improve overall performance of higher level tasks such as dependency parsing (Hashimoto et al., 2017) or CCG super tagging (Søgaard and Goldberg, 2016). In an RNN-based encoder-decoder machine translation system, Belinkov et al. (2017) showed that the representations learned at the first layer in a 2-layer LSTM encoder are better at predicting POS tags than the second layer. Finally, the top layer of an LSTM for encoding word context (Melamud et al., 2016) has been shown to learn representations of word sense. We show that similar signals are also induced by the modified language model objective of our ELMo representations, and it can be very beneficial to learn models for downstream tasks that mix these different types of semi-supervision.

Dai and Le (2015) and Ramachandran et al. (2017) pretrain encoder-decoder pairs using language models and sequence autoencoders and then fine tune with task specific supervision. In contrast, after pretraining the biLM with unlabeled data, we fix the weights and add additional task-specific model capacity, allowing us to leverage large, rich and universal biLM representations for cases where downstream training data size dictates a smaller supervised model.

3 ELMo: Embeddings from Language Models

Unlike most widely used word embeddings (Pennington et al., 2014), ELMo word representations are functions of the entire input sentence, as described in this section. They are computed on top of two-layer biLMs with character convolutions (Sec. 3.1), as a linear function of the internal network states (Sec. 3.2). This setup allows us to do semi-supervised learning, where the biLM is pretrained at a large scale (Sec. 3.4) and easily incorporated into a wide range of existing neural NLP architectures (Sec. 3.3).

3.1 Bidirectional language models

Given a sequence of $N$ tokens, $(t_1, t_2, \ldots, t_N)$, a forward language model computes the probability of the sequence by modeling the probability of token $t_k$ given the history $(t_1, \ldots, t_{k-1})$:

$$p(t_1, t_2, \ldots, t_N) = \prod_{k=1}^{N} p(t_k \mid t_1, t_2, \ldots, t_{k-1}).$$

Recent state-of-the-art neural language models (Józefowicz et al., 2016; Melis et al., 2017; Merity et al., 2017) compute a context-independent token representation $x_k^{LM}$ (via token embeddings or a CNN over characters) then pass it through $L$ layers of forward LSTMs. At each position $k$, each LSTM layer outputs a context-dependent representation $\overrightarrow{h}_{k,j}^{LM}$ where $j = 1, \ldots, L$. The top layer LSTM output, $\overrightarrow{h}_{k,L}^{LM}$, is used to predict the next token $t_{k+1}$ with a Softmax layer.

A backward LM is similar to a forward LM, except it runs over the sequence in reverse, predicting the previous token given the future context:

$$p(t_1, t_2, \ldots, t_N) = \prod_{k=1}^{N} p(t_k \mid t_{k+1}, t_{k+2}, \ldots, t_N).$$

It can be implemented in an analogous way to a forward LM, with each backward LSTM layer $j$ in an $L$-layer deep model producing representations $\overleftarrow{h}_{k,j}^{LM}$ of $t_k$ given $(t_{k+1}, \ldots, t_N)$.

A biLM combines both a forward and backward LM. Our formulation jointly maximizes the log likelihood of the forward and backward directions:

$$\sum_{k=1}^{N} \Big( \log p(t_k \mid t_1, \ldots, t_{k-1}; \Theta_x, \overrightarrow{\Theta}_{LSTM}, \Theta_s) + \log p(t_k \mid t_{k+1}, \ldots, t_N; \Theta_x, \overleftarrow{\Theta}_{LSTM}, \Theta_s) \Big).$$

We tie the parameters for both the token representation ($\Theta_x$) and Softmax layer ($\Theta_s$) in the forward and backward direction while maintaining separate parameters for the LSTMs in each direction. Overall, this formulation is similar to the approach of Peters et al. (2017), with the exception that we share some weights between directions instead of using completely independent parameters. In the next section, we depart from previous work by introducing a new approach for learning word representations that are a linear combination of the biLM layers.

3.2 ELMo

ELMo is a task specific combination of the intermediate layer representations in the biLM. For each token $t_k$, an $L$-layer biLM computes a set of $2L + 1$ representations

$$R_k = \{x_k^{LM}, \overrightarrow{h}_{k,j}^{LM}, \overleftarrow{h}_{k,j}^{LM} \mid j = 1, \ldots, L\} = \{h_{k,j}^{LM} \mid j = 0, \ldots, L\},$$

where $h_{k,0}^{LM}$ is the token layer and $h_{k,j}^{LM} = [\overrightarrow{h}_{k,j}^{LM}; \overleftarrow{h}_{k,j}^{LM}]$ for each biLSTM layer.

For inclusion in a downstream model, ELMo collapses all layers in $R$ into a single vector, $\mathbf{ELMo}_k = E(R_k; \Theta_e)$. In the simplest case, ELMo just selects the top layer, $E(R_k) = h_{k,L}^{LM}$, as in TagLM (Peters et al., 2017) and CoVe (McCann et al., 2017). More generally, we compute a task specific weighting of all biLM layers:

$$\mathbf{ELMo}_k^{task} = E(R_k; \Theta^{task}) = \gamma^{task} \sum_{j=0}^{L} s_j^{task} \, h_{k,j}^{LM}. \tag{1}$$

In (1), $s^{task}$ are softmax-normalized weights and the scalar parameter $\gamma^{task}$ allows the task model to scale the entire ELMo vector. $\gamma$ is of practical importance to aid the optimization process (see supplemental material for details). Considering that the activations of each biLM layer have a different distribution, in some cases it also helped to apply layer normalization (Ba et al., 2016) to each biLM layer before weighting.

3.3 Using biLMs for supervised NLP tasks

Given a pre-trained biLM and a supervised architecture for a target NLP task, it is a simple process to use the biLM to improve the task model. We simply run the biLM and record all of the layer representations for each word. Then, we let the end task model learn a linear combination of these representations, as described below.

First consider the lowest layers of the supervised model without the biLM. Most supervised NLP models share a common architecture at the lowest layers, allowing us to add ELMo in a consistent, unified manner. Given a sequence of tokens $(t_1, \ldots, t_N)$, it is standard to form a context-independent token representation $x_k$ for each token position using pre-trained word embeddings and optionally character-based representations. Then, the model forms a context-sensitive representation $h_k$, typically using either bidirectional RNNs, CNNs, or feed forward networks.

To add ELMo to the supervised model, we first freeze the weights of the biLM and then
concatenate the ELMo vector $\mathbf{ELMo}_k^{task}$ with $x_k$ and pass the ELMo enhanced representation $[x_k; \mathbf{ELMo}_k^{task}]$ into the task RNN. For some tasks (e.g., SNLI, SQuAD), we observe further improvements by also including ELMo at the output of the task RNN by introducing another set of output specific linear weights and replacing $h_k$ with $[h_k; \mathbf{ELMo}_k^{task}]$. As the remainder of the supervised model remains unchanged, these additions can happen within the context of more complex neural models. For example, see the SNLI experiments in Sec. 4 where a bi-attention layer follows the biLSTMs, or the coreference resolution experiments where a clustering model is layered on top of the biLSTMs.

Finally, we found it beneficial to add a moderate amount of dropout to ELMo (Srivastava et al., 2014) and in some cases to regularize the ELMo weights by adding $\lambda \lVert \mathbf{w} \rVert_2^2$ to the loss. This imposes an inductive bias on the ELMo weights to stay close to an average of all biLM layers.

3.4 Pre-trained bidirectional language model architecture

The pre-trained biLMs in this paper are similar to the architectures in Józefowicz et al. (2016) and Kim et al. (2015), but modified to support joint training of both directions and add a residual connection between LSTM layers. We focus on large scale biLMs in this work, as Peters et al. (2017) highlighted the importance of using biLMs over forward-only LMs and large scale training.

To balance overall language model perplexity with model size and computational requirements for downstream tasks while maintaining a purely character-based input representation, we halved all embedding and hidden dimensions from the single best model CNN-BIG-LSTM in Józefowicz et al. (2016). The final model uses $L = 2$ biLSTM layers with 4096 units and 512 dimension projections and a residual connection from the first to second layer. The context insensitive type representation uses 2048 character n-gram convolutional filters followed by two highway layers (Srivastava et al., 2015) and a linear projection down to a 512 representation. As a result, the biLM provides three layers of representations for each input token, including those outside the training set due to the purely character input. In contrast, traditional word embedding methods only provide one layer of representation for tokens in a fixed vocabulary.

After training for 10 epochs on the 1B Word Benchmark (Chelba et al., 2014), the average of the forward and backward perplexities is 39.7, compared to 30.0 for the forward CNN-BIG-LSTM. Generally, we found the forward and backward perplexities to be approximately equal, with the backward value slightly lower.

Once pretrained, the biLM can compute representations for any task. In some cases, fine tuning the biLM on domain specific data leads to significant drops in perplexity and an increase in downstream task performance. This can be seen as a type of domain transfer for the biLM. As a result, in most cases we used a fine-tuned biLM in the downstream task. See supplemental material for details.

4 Evaluation

Table 1 shows the performance of ELMo across a diverse set of six benchmark NLP tasks. In every task considered, simply adding ELMo establishes a new state-of-the-art result, with relative error reductions ranging from 6-20% over strong base models. This is a very general result across a diverse set of model architectures and language understanding tasks. In the remainder of this section we provide high-level sketches of the individual task results; see the supplemental material for full experimental details.

Question answering. The Stanford Question Answering Dataset (SQuAD) (Rajpurkar et al., 2016) contains 100K+ crowd sourced question-answer pairs where the answer is a span in a given Wikipedia paragraph. Our baseline model (Clark and Gardner, 2017) is an improved version of the Bidirectional Attention Flow model in Seo et al. (BiDAF; 2017). It adds a self-attention layer after the bidirectional attention component, simplifies some of the pooling operations and replaces the LSTMs with gated recurrent units (GRUs; Cho et al., 2014). After adding ELMo to the baseline model, test set F1 improved by 4.7% from 81.1% to 85.8%, a 24.9% relative error reduction over the baseline, improving the overall single model state of the art by 1.4%. An 11 member ensemble pushes F1 to 87.4, the overall state of the art at time of submission to the leaderboard.² The increase of 4.7% with ELMo is also significantly larger than the 1.8% improvement from adding CoVe to a baseline model (McCann et al., 2017).

² As of November 17, 2017.
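The joint objective in Sec. 3.1 adds, for every token, a forward and a backward log-probability. The sketch below illustrates only that bookkeeping, and it simplifies heavily. Add-one-smoothed bigram counts stand in for the paper's character CNN and LSTM stack, so each direction conditions on a single neighbouring token rather than the full history. The two directions also share no parameters, whereas the paper ties $\Theta_x$ and $\Theta_s$. The names `train_bigram` and `bilm_log_likelihood` are hypothetical.

```python
import math
from collections import Counter

BOS, EOS = "<s>", "</s>"


def train_bigram(sentences, reverse=False):
    """Add-one-smoothed bigram LM, a toy stand-in for one LSTM direction.

    reverse=False estimates p(t_k | t_{k-1}) (forward LM);
    reverse=True reads each sentence right to left and estimates
    p(t_k | t_{k+1}) (backward LM).
    """
    vocab = {tok for s in sentences for tok in s} | {EOS}
    pairs, contexts = Counter(), Counter()
    for s in sentences:
        seq = [BOS] + (list(reversed(s)) if reverse else list(s)) + [EOS]
        for ctx, tok in zip(seq, seq[1:]):
            pairs[(ctx, tok)] += 1
            contexts[ctx] += 1
    v = len(vocab)

    def prob(tok, ctx):
        return (pairs[(ctx, tok)] + 1) / (contexts[ctx] + v)

    return prob


def bilm_log_likelihood(sentence, fwd, bwd):
    """Sum over k of log p_fwd(t_k | left ctx) + log p_bwd(t_k | right ctx)."""
    total = 0.0
    n = len(sentence)
    for k, tok in enumerate(sentence):
        left = sentence[k - 1] if k > 0 else BOS
        # The backward model reads right to left, so BOS precedes the last token.
        right = sentence[k + 1] if k + 1 < n else BOS
        total += math.log(fwd(tok, left)) + math.log(bwd(tok, right))
    return total


corpus = [["the", "cat", "sat"], ["the", "dog", "sat"]]
fwd = train_bigram(corpus)
bwd = train_bigram(corpus, reverse=True)
print(bilm_log_likelihood(["the", "cat", "sat"], fwd, bwd))
```

A word order seen in training scores higher than a scrambled one, because both directions contribute evidence about every token.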

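Eq. (1) and the integration steps of Sec. 3.3 reduce to a few lines of arithmetic per token. The sketch below is a minimal pure-Python illustration and rests on several assumptions:

- The biLM layer activations $h_{k,0}, \ldots, h_{k,L}$ are already computed and given as equal-length lists.
- All function names are illustrative.
- The optional layer normalization omits any learned gain or bias.
- `w` holds the unnormalized per-layer weights. These are assumed to be both softmax-normalized into $s^{task}$ and penalized by $\lambda \lVert \mathbf{w} \rVert_2^2$.

```python
import math


def softmax(weights):
    """Numerically stable softmax over a list of scalars."""
    m = max(weights)
    exps = [math.exp(w - m) for w in weights]
    total = sum(exps)
    return [e / total for e in exps]


def layer_norm(vec, eps=1e-6):
    """Zero-mean, unit-variance normalization of one vector (no learned gain/bias)."""
    mean = sum(vec) / len(vec)
    var = sum((x - mean) ** 2 for x in vec) / len(vec)
    return [(x - mean) / math.sqrt(var + eps) for x in vec]


def elmo_vector(layers, w, gamma, normalize=False):
    """Eq. (1) for one token: gamma * sum_j softmax(w)_j * h_{k,j}.

    layers: the L+1 vectors h_{k,0}, ..., h_{k,L}, all of the same size.
    w: one unnormalized scalar per layer (s^task = softmax(w)).
    gamma: the task-specific scale gamma^task.
    """
    if len(layers) != len(w):
        raise ValueError("need one weight per biLM layer")
    s = softmax(w)
    if normalize:
        layers = [layer_norm(h) for h in layers]
    dim = len(layers[0])
    return [gamma * sum(s_j * h[i] for s_j, h in zip(s, layers)) for i in range(dim)]


def weight_penalty(w, lam):
    """lam * ||w||_2^2, added to the task loss; pulls w toward 0 (uniform s)."""
    return lam * sum(x * x for x in w)


def task_input(x_k, elmo_k):
    """[x_k; ELMo_k]: the ELMo-enhanced representation fed to the task RNN."""
    return list(x_k) + list(elmo_k)


layers = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # toy h_{k,0}, h_{k,1}, h_{k,2}
elmo_k = elmo_vector(layers, w=[0.0, 0.0, 0.0], gamma=1.0)
print(elmo_k, task_input([0.5], elmo_k))
```

With all entries of `w` equal, the softmax gives uniform weights and Eq. (1) becomes $\gamma$ times the plain layer average. This is why a large λ (e.g., λ=1 in Table 2) effectively averages the layers. In a real model, `w` and `gamma` would be trained with the task network while the biLM stays frozen.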
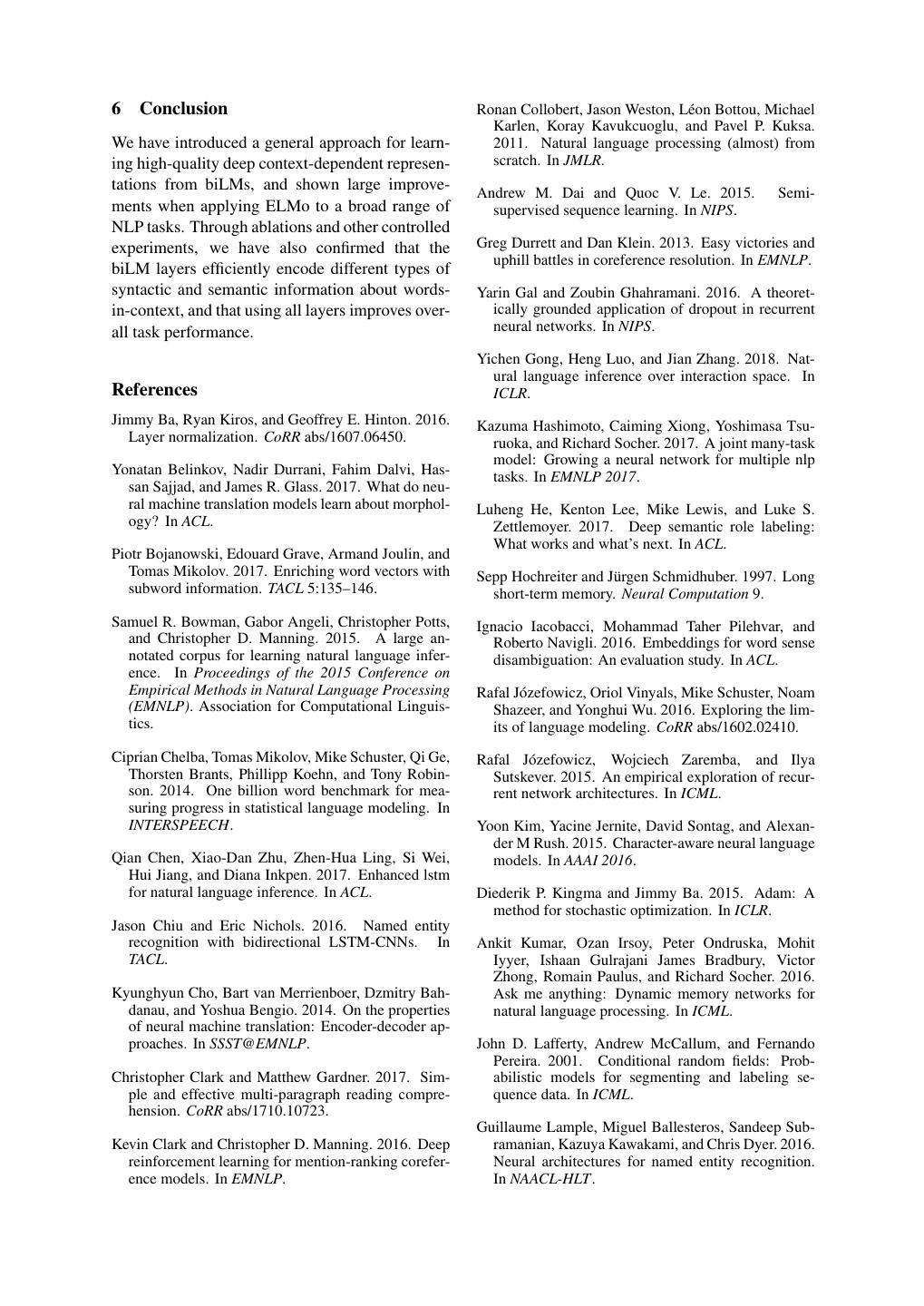
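The relative error reductions in Table 1, and the 98% figure in Sec. 5.4, follow from simple arithmetic. The sketch below assumes scores are percentages with a ceiling of 100, so a model's error is 100 minus its score. It recomputes the relative column from the rounded baseline and ELMo entries of Table 1.

```python
def relative_error_reduction(baseline, improved, max_score=100.0):
    """Fraction of the baseline's error removed; scores on a 0-100 scale."""
    return (improved - baseline) / (max_score - baseline)


def relative_decrease(before, after):
    """Fractional drop, e.g. in the number of epochs needed."""
    return (before - after) / before


# (our baseline, ELMo + baseline) test scores copied from Table 1.
TABLE_1 = {
    "SQuAD": (81.1, 85.8),
    "SNLI": (88.0, 88.7),
    "SRL": (81.4, 84.6),
    "Coref": (67.2, 70.4),
    "NER": (90.15, 92.22),
    "SST-5": (51.4, 54.7),
}

for task, (base, elmo) in TABLE_1.items():
    rel = 100 * relative_error_reduction(base, elmo)
    print(f"{task}: +{elmo - base:.2f} absolute, {rel:.1f}% relative")

print(f"SRL epochs, 486 -> 10: {100 * relative_decrease(486, 10):.0f}% fewer")
```

Rounded to one decimal, this reproduces the relative column (24.9, 5.8, 17.2, 9.8, 21.0 and 6.8%). From the rounded entries, the NER absolute gain comes out as 2.07 instead of the listed 2.06, presumably a rounding effect. `relative_decrease(486, 10)` is about 0.979, matching the 98% reduction in epochs quoted for SRL.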

5 . I NCREASE O UR ELM O + TASK P REVIOUS SOTA ( ABSOLUTE / BASELINE BASELINE RELATIVE ) SQuAD Liu et al. (2017) 84.4 81.1 85.8 4.7 / 24.9% SNLI Chen et al. (2017) 88.6 88.0 88.7 ± 0.17 0.7 / 5.8% SRL He et al. (2017) 81.7 81.4 84.6 3.2 / 17.2% Coref Lee et al. (2017) 67.2 67.2 70.4 3.2 / 9.8% NER Peters et al. (2017) 91.93 ± 0.19 90.15 92.22 ± 0.10 2.06 / 21% SST-5 McCann et al. (2017) 53.7 51.4 54.7 ± 0.5 3.3 / 6.8% Table 1: Test set comparison of ELMo enhanced neural models with state-of-the-art single model baselines across six benchmark NLP tasks. The performance metric varies across tasks – accuracy for SNLI and SST-5; F1 for SQuAD, SRL and NER; average F1 for Coref. Due to the small test sizes for NER and SST-5, we report the mean and standard deviation across five runs with different random seeds. The “increase” column lists both the absolute and relative improvements over our baseline. Textual entailment Textual entailment is the and attention mechanism to first compute span task of determining whether a “hypothesis” is representations and then applies a softmax men- true, given a “premise”. The Stanford Natu- tion ranking model to find coreference chains. In ral Language Inference (SNLI) corpus (Bowman our experiments with the OntoNotes coreference et al., 2015) provides approximately 550K hypoth- annotations from the CoNLL 2012 shared task esis/premise pairs. Our baseline, the ESIM se- (Pradhan et al., 2012), adding ELMo improved the quence model from Chen et al. (2017), uses a biL- average F1 by 3.2% from 67.2 to 70.4, establish- STM to encode the premise and hypothesis, fol- ing a new state of the art, again improving over the lowed by a matrix attention layer, a local infer- previous best ensemble result by 1.6% F1 . ence layer, another biLSTM inference composi- Named entity extraction The CoNLL 2003 tion layer, and finally a pooling operation before NER task (Sang and Meulder, 2003) consists of the output layer. 
Overall, adding ELMo to the newswire from the Reuters RCV1 corpus tagged ESIM model improves accuracy by an average of with four different entity types (PER, LOC, ORG, 0.7% across five random seeds. A five member MISC). Following recent state-of-the-art systems ensemble pushes the overall accuracy to 89.3%, (Lample et al., 2016; Peters et al., 2017), the base- exceeding the previous ensemble best of 88.9% line model uses pre-trained word embeddings, a (Gong et al., 2018). character-based CNN representation, two biLSTM Semantic role labeling A semantic role label- layers and a conditional random field (CRF) loss ing (SRL) system models the predicate-argument (Lafferty et al., 2001), similar to Collobert et al. structure of a sentence, and is often described as (2011). As shown in Table 1, our ELMo enhanced answering “Who did what to whom”. He et al. biLSTM-CRF achieves 92.22% F1 averaged over (2017) modeled SRL as a BIO tagging problem five runs. The key difference between our system and used an 8-layer deep biLSTM with forward and the previous state of the art from Peters et al. and backward directions interleaved, following (2017) is that we allowed the task model to learn a Zhou and Xu (2015). As shown in Table 1, when weighted average of all biLM layers, whereas Pe- adding ELMo to a re-implementation of He et al. ters et al. (2017) only use the top biLM layer. As (2017) the single model test set F1 jumped 3.2% shown in Sec. 5.1, using all layers instead of just from 81.4% to 84.6% – a new state-of-the-art on the last layer improves performance across multi- the OntoNotes benchmark (Pradhan et al., 2013), ple tasks. even improving over the previous best ensemble Sentiment analysis The fine-grained sentiment result by 1.2%. 
classification task in the Stanford Sentiment Tree- Coreference resolution Coreference resolution bank (SST-5; Socher et al., 2013) involves select- is the task of clustering mentions in text that re- ing one of five labels (from very negative to very fer to the same underlying real world entities. Our positive) to describe a sentence from a movie re- baseline model is the end-to-end span-based neu- view. The sentences contain diverse linguistic ral model of Lee et al. (2017). It uses a biLSTM phenomena such as idioms and complex syntac-

6 . All layers 5.1 Alternate layer weighting schemes Task Baseline Last Only λ=1 λ=0.001 There are many alternatives to Equation 1 for com- SQuAD 80.8 84.7 85.0 85.2 bining the biLM layers. Previous work on con- SNLI 88.1 89.1 89.3 89.5 textual representations used only the last layer, SRL 81.6 84.1 84.6 84.8 whether it be from a biLM (Peters et al., 2017) or Table 2: Development set performance for SQuAD, an MT encoder (CoVe; McCann et al., 2017). The SNLI and SRL comparing using all layers of the biLM choice of the regularization parameter λ is also (with different choices of regularization strength λ) to important, as large values such as λ = 1 effec- just the top layer. tively reduce the weighting function to a simple average over the layers, while smaller values (e.g., Input Input & Output λ = 0.001) allow the layer weights to vary. Task Only Output Only Table 2 compares these alternatives for SQuAD, SQuAD 85.1 85.6 84.8 SNLI and SRL. Including representations from all SNLI 88.9 89.5 88.7 layers improves overall performance over just us- SRL 84.7 84.3 80.9 ing the last layer, and including contextual rep- resentations from the last layer improves perfor- Table 3: Development set performance for SQuAD, SNLI and SRL when including ELMo at different lo- mance over the baseline. For example, in the cations in the supervised model. case of SQuAD, using just the last biLM layer im- proves development F1 by 3.9% over the baseline. Averaging all biLM layers instead of using just the tic constructions such as negations that are diffi- last layer improves F1 another 0.3% (comparing cult for models to learn. Our baseline model is “Last Only” to λ=1 columns), and allowing the the biattentive classification network (BCN) from task model to learn individual layer weights im- McCann et al. (2017), which also held the prior proves F1 another 0.2% (λ=1 vs. λ=0.001). A state-of-the-art result when augmented with CoVe small λ is preferred in most cases with ELMo, al- embeddings. 
Replacing CoVe with ELMo in the though for NER, a task with a smaller training set, BCN model results in a 1.0% absolute accuracy the results are insensitive to λ (not shown). improvement over the state of the art. The overall trend is similar with CoVe but with smaller increases over the baseline. For SNLI, av- 5 Analysis eraging all layers with λ=1 improves development accuracy from 88.2 to 88.7% over using just the This section provides an ablation analysis to vali- last layer. SRL F1 increased a marginal 0.1% to date our chief claims and to elucidate some inter- 82.2 for the λ=1 case compared to using the last esting aspects of ELMo representations. Sec. 5.1 layer only. shows that using deep contextual representations 5.2 Where to include ELMo? in downstream tasks improves performance over previous work that uses just the top layer, regard- All of the task architectures in this paper include less of whether they are produced from a biLM or word embeddings only as input to the lowest layer MT encoder, and that ELMo representations pro- biRNN. However, we find that including ELMo at vide the best overall performance. Sec. 5.3 ex- the output of the biRNN in task-specific architec- plores the different types of contextual informa- tures improves overall results for some tasks. As tion captured in biLMs and uses two intrinsic eval- shown in Table 3, including ELMo at both the in- uations to show that syntactic information is better put and output layers for SNLI and SQuAD im- represented at lower layers while semantic infor- proves over just the input layer, but for SRL (and mation is captured a higher layers, consistent with coreference resolution, not shown) performance is MT encoders. It also shows that our biLM consis- highest when it is included at just the input layer. tently provides richer representations then CoVe. 
One possible explanation for this result is that both Additionally, we analyze the sensitivity to where the SNLI and SQuAD architectures use attention ELMo is included in the task model (Sec. 5.2), layers after the biRNN, so introducing ELMo at training set size (Sec. 5.4), and visualize the ELMo this layer allows the model to attend directly to the learned weights across the tasks (Sec. 5.5). biLM’s internal representations. In the SRL case,

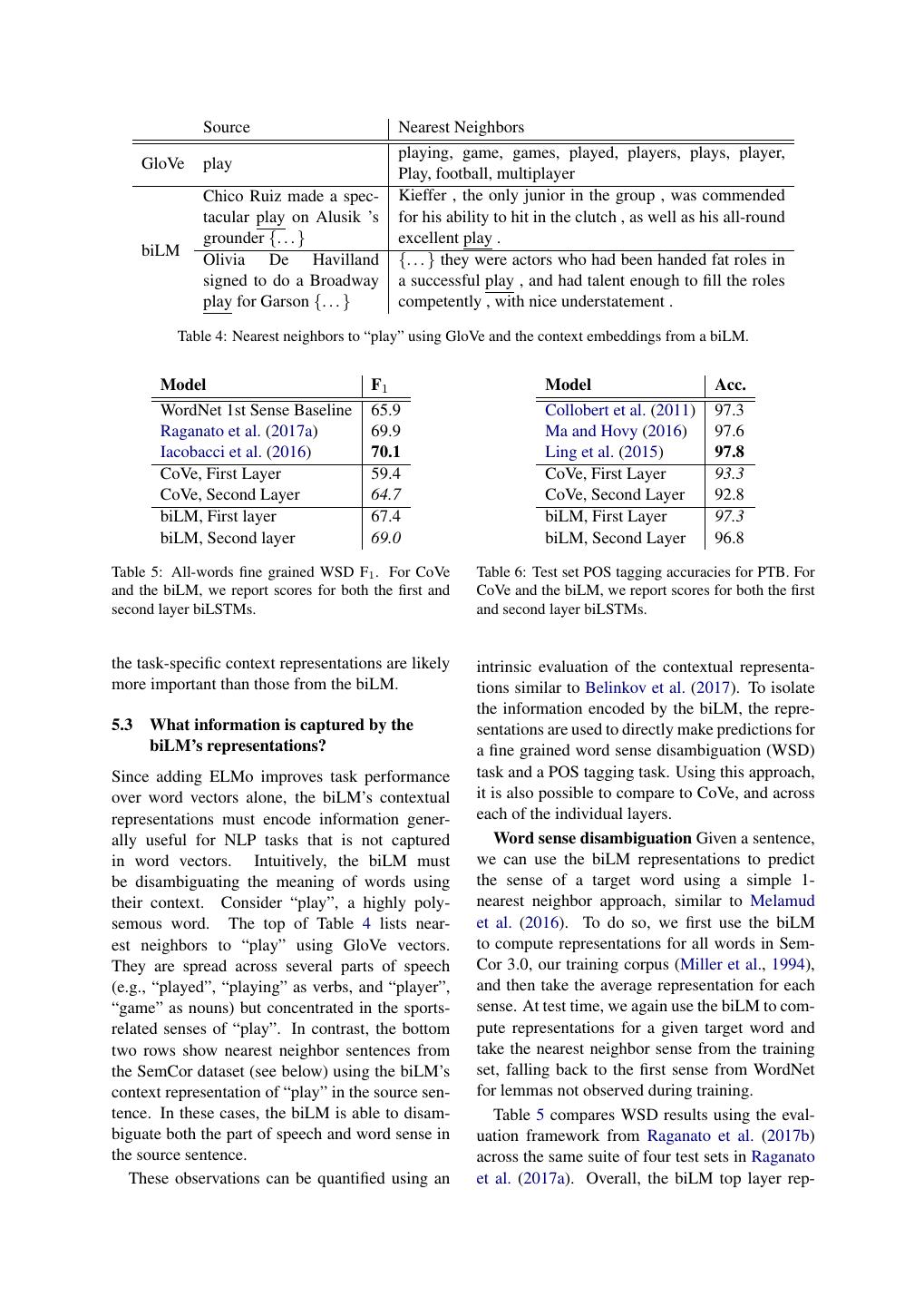

the task-specific context representations are likely more important than those from the biLM.

5.3 What information is captured by the biLM's representations?

Since adding ELMo improves task performance over word vectors alone, the biLM's contextual representations must encode information generally useful for NLP tasks that is not captured in word vectors. Intuitively, the biLM must be disambiguating the meaning of words using their context. Consider "play", a highly polysemous word. The top of Table 4 lists nearest neighbors to "play" using GloVe vectors. They are spread across several parts of speech (e.g., "played", "playing" as verbs, and "player", "game" as nouns) but concentrated in the sports-related senses of "play". In contrast, the bottom two rows show nearest neighbor sentences from the SemCor dataset (see below) using the biLM's context representation of "play" in the source sentence. In these cases, the biLM is able to disambiguate both the part of speech and word sense in the source sentence.

| | Source | Nearest neighbors |
|---|---|---|
| GloVe | play | playing, game, games, played, players, plays, player, Play, football, multiplayer |
| biLM | Chico Ruiz made a spectacular play on Alusik's grounder {...} | Kieffer, the only junior in the group, was commended for his ability to hit in the clutch, as well as his all-round excellent play. |
| biLM | Olivia De Havilland signed to do a Broadway play for Garson {...} | {...} they were actors who had been handed fat roles in a successful play, and had talent enough to fill the roles competently, with nice understatement. |

Table 4: Nearest neighbors to "play" using GloVe and the context embeddings from a biLM.

These observations can be quantified using an intrinsic evaluation of the contextual representations similar to Belinkov et al. (2017). To isolate the information encoded by the biLM, the representations are used to directly make predictions for a fine grained word sense disambiguation (WSD) task and a POS tagging task. Using this approach, it is also possible to compare to CoVe, and across each of the individual layers.

Word sense disambiguation. Given a sentence, we can use the biLM representations to predict the sense of a target word using a simple 1-nearest neighbor approach, similar to Melamud et al. (2016). To do so, we first use the biLM to compute representations for all words in SemCor 3.0, our training corpus (Miller et al., 1994), and then take the average representation for each sense. At test time, we again use the biLM to compute representations for a given target word and take the nearest neighbor sense from the training set, falling back to the first sense from WordNet for lemmas not observed during training.

Table 5 compares WSD results using the evaluation framework from Raganato et al. (2017b) across the same suite of four test sets in Raganato et al. (2017a). Overall, the biLM top layer representations have F1 of 69.0 and are better at WSD than the first layer. This is competitive with a state-of-the-art WSD-specific supervised model using hand crafted features (Iacobacci et al., 2016) and a task specific biLSTM that is also trained with auxiliary coarse-grained semantic labels and POS tags (Raganato et al., 2017a). The CoVe biLSTM layers follow a similar pattern to those from the biLM (higher overall performance at the second layer compared to the first); however, our biLM outperforms the CoVe biLSTM, which trails the WordNet first sense baseline.

| Model | F1 |
|---|---|
| WordNet 1st Sense Baseline | 65.9 |
| Raganato et al. (2017a) | 69.9 |
| Iacobacci et al. (2016) | 70.1 |
| CoVe, First Layer | 59.4 |
| CoVe, Second Layer | 64.7 |
| biLM, First Layer | 67.4 |
| biLM, Second Layer | 69.0 |

Table 5: All-words fine grained WSD F1. For CoVe and the biLM, we report scores for both the first and second layer biLSTMs.

POS tagging. To examine whether the biLM captures basic syntax, we used the context representations as input to a linear classifier that predicts POS tags with the Wall Street Journal portion of the Penn Treebank (PTB) (Marcus et al., 1993). As the linear classifier adds only a small amount of model capacity, this is a direct test of the biLM's representations. Similar to WSD, the biLM representations are competitive with carefully tuned, task specific biLSTMs (Ling et al., 2015; Ma and Hovy, 2016). However, unlike WSD, accuracies using the first biLM layer are higher than the top layer, consistent with results from deep biLSTMs in multi-task training (Søgaard and Goldberg, 2016; Hashimoto et al., 2017) and MT (Belinkov et al., 2017). CoVe POS tagging accuracies follow the same pattern as those from the biLM, and just like for WSD, the biLM achieves higher accuracies than the CoVe encoder.

| Model | Acc. |
|---|---|
| Collobert et al. (2011) | 97.3 |
| Ma and Hovy (2016) | 97.6 |
| Ling et al. (2015) | 97.8 |
| CoVe, First Layer | 93.3 |
| CoVe, Second Layer | 92.8 |
| biLM, First Layer | 97.3 |
| biLM, Second Layer | 96.8 |

Table 6: Test set POS tagging accuracies for PTB. For CoVe and the biLM, we report scores for both the first and second layer biLSTMs.

Implications for supervised tasks. Taken together, these experiments confirm that different layers in the biLM represent different types of information and explain why including all biLM layers is important for the highest performance in downstream tasks. In addition, the biLM's representations are more transferable to WSD and POS tagging than those in CoVe, helping to illustrate why ELMo outperforms CoVe in downstream tasks.

5.4 Sample efficiency

Adding ELMo to a model increases the sample efficiency considerably, both in terms of number of parameter updates to reach state-of-the-art performance and the overall training set size. For example, the SRL model reaches a maximum development F1 after 486 epochs of training without ELMo. After adding ELMo, the model exceeds the baseline maximum at epoch 10, a 98% relative decrease in the number of updates needed to reach the same level of performance.

In addition, ELMo-enhanced models use smaller training sets more efficiently than models without ELMo. Figure 1 compares the performance of baseline models with and without ELMo as the percentage of the full training set is varied from 0.1% to 100%. Improvements with ELMo are largest for smaller training sets and significantly reduce the amount of training data needed to reach a given level of performance. In the SRL case, the ELMo model with 1% of the training set has about the same F1 as the baseline model with 10% of the training set.

Figure 1: Comparison of baseline vs. ELMo performance for SNLI and SRL as the training set size is varied from 0.1% to 100%.

5.5 Visualization of learned weights

Figure 2 visualizes the softmax-normalized learned layer weights. At the input layer, the task model favors the first biLSTM layer. For coreference and SQuAD, this is strongly favored, but the distribution is less peaked for the other tasks. The output layer weights are relatively balanced, with a slight preference for the lower layers.

Figure 2: Visualization of softmax normalized biLM layer weights across tasks and ELMo locations. Normalized weights less than 1/3 are hatched with horizontal lines and those greater than 2/3 are speckled.
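The WSD probe in Sec. 5.3 has three steps. Average the biLM vectors of each sense seen in SemCor. At test time, pick the nearest sense. For unseen lemmas, fall back to WordNet's first sense. The sketch below follows those steps with some assumptions. The paper does not say which similarity is used, so cosine similarity here is an assumption. The `first_sense` dictionary is a hypothetical stand-in for a WordNet lookup, and the sense labels and 2-D vectors in the usage lines are made up.

```python
import math
from collections import defaultdict


def cosine(u, v):
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def sense_centroids(examples):
    """examples: iterable of (lemma, sense, vector) from a sense-tagged corpus.

    Returns {lemma: {sense: average vector}}.
    """
    sums = defaultdict(dict)
    counts = defaultdict(lambda: defaultdict(int))
    for lemma, sense, vec in examples:
        acc = sums[lemma].setdefault(sense, [0.0] * len(vec))
        for i, x in enumerate(vec):
            acc[i] += x
        counts[lemma][sense] += 1
    return {
        lemma: {s: [x / counts[lemma][s] for x in acc] for s, acc in senses.items()}
        for lemma, senses in sums.items()
    }


def predict_sense(lemma, vec, centroids, first_sense):
    """1-nearest-neighbour sense; first_sense[lemma] for lemmas unseen in training."""
    if lemma not in centroids:
        return first_sense[lemma]
    senses = centroids[lemma]
    return max(senses, key=lambda s: cosine(vec, senses[s]))


# Hypothetical toy data: two senses of "play" with 2-D "biLM" vectors.
train = [
    ("play", "play%theatre", [1.0, 0.0]),
    ("play", "play%theatre", [0.8, 0.2]),
    ("play", "play%sport", [0.0, 1.0]),
]
centroids = sense_centroids(train)
print(predict_sense("play", [0.1, 0.9], centroids, first_sense={}))
```

Averaging per sense keeps the lookup small, with one vector per observed sense. The fallback means coverage never depends on SemCor containing the lemma.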

6 Conclusion

We have introduced a general approach for learning high-quality deep context-dependent representations from biLMs, and shown large improvements when applying ELMo to a broad range of NLP tasks. Through ablations and other controlled experiments, we have also confirmed that the biLM layers efficiently encode different types of syntactic and semantic information about words-in-context, and that using all layers improves overall task performance.

References

- Jimmy Ba, Ryan Kiros, and Geoffrey E. Hinton. 2016. Layer normalization. CoRR abs/1607.06450.
- Yonatan Belinkov, Nadir Durrani, Fahim Dalvi, Hassan Sajjad, and James R. Glass. 2017. What do neural machine translation models learn about morphology? In ACL.
- Piotr Bojanowski, Edouard Grave, Armand Joulin, and Tomas Mikolov. 2017. Enriching word vectors with subword information. TACL 5:135–146.
- Samuel R. Bowman, Gabor Angeli, Christopher Potts, and Christopher D. Manning. 2015. A large annotated corpus for learning natural language inference. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing (EMNLP). Association for Computational Linguistics.
- Ciprian Chelba, Tomas Mikolov, Mike Schuster, Qi Ge, Thorsten Brants, Phillipp Koehn, and Tony Robinson. 2014. One billion word benchmark for measuring progress in statistical language modeling. In INTERSPEECH.
- Qian Chen, Xiao-Dan Zhu, Zhen-Hua Ling, Si Wei, Hui Jiang, and Diana Inkpen. 2017. Enhanced LSTM for natural language inference. In ACL.
- Jason Chiu and Eric Nichols. 2016. Named entity recognition with bidirectional LSTM-CNNs. In TACL.
- Kyunghyun Cho, Bart van Merrienboer, Dzmitry Bahdanau, and Yoshua Bengio. 2014. On the properties of neural machine translation: Encoder-decoder approaches. In SSST@EMNLP.
- Christopher Clark and Matthew Gardner. 2017. Simple and effective multi-paragraph reading comprehension. CoRR abs/1710.10723.
- Kevin Clark and Christopher D. Manning. 2016. Deep reinforcement learning for mention-ranking coreference models. In EMNLP.
- Ronan Collobert, Jason Weston, Léon Bottou, Michael Karlen, Koray Kavukcuoglu, and Pavel P. Kuksa. 2011. Natural language processing (almost) from scratch. In JMLR.
- Andrew M. Dai and Quoc V. Le. 2015. Semi-supervised sequence learning. In NIPS.
- Greg Durrett and Dan Klein. 2013. Easy victories and uphill battles in coreference resolution. In EMNLP.
- Yarin Gal and Zoubin Ghahramani. 2016. A theoretically grounded application of dropout in recurrent neural networks. In NIPS.
- Yichen Gong, Heng Luo, and Jian Zhang. 2018. Natural language inference over interaction space. In ICLR.
- Kazuma Hashimoto, Caiming Xiong, Yoshimasa Tsuruoka, and Richard Socher. 2017. A joint many-task model: Growing a neural network for multiple NLP tasks. In EMNLP 2017.
- Luheng He, Kenton Lee, Mike Lewis, and Luke S. Zettlemoyer. 2017. Deep semantic role labeling: What works and what's next. In ACL.
- Sepp Hochreiter and Jürgen Schmidhuber. 1997. Long short-term memory. Neural Computation 9.
- Ignacio Iacobacci, Mohammad Taher Pilehvar, and Roberto Navigli. 2016. Embeddings for word sense disambiguation: An evaluation study. In ACL.
- Rafal Józefowicz, Oriol Vinyals, Mike Schuster, Noam Shazeer, and Yonghui Wu. 2016. Exploring the limits of language modeling. CoRR abs/1602.02410.
- Rafal Józefowicz, Wojciech Zaremba, and Ilya Sutskever. 2015. An empirical exploration of recurrent network architectures. In ICML.
- Yoon Kim, Yacine Jernite, David Sontag, and Alexander M Rush. 2015. Character-aware neural language models. In AAAI 2016.
- Diederik P. Kingma and Jimmy Ba. 2015. Adam: A method for stochastic optimization. In ICLR.
- Ankit Kumar, Ozan Irsoy, Peter Ondruska, Mohit Iyyer, Ishaan Gulrajani, James Bradbury, Victor Zhong, Romain Paulus, and Richard Socher. 2016. Ask me anything: Dynamic memory networks for natural language processing. In ICML.
- John D. Lafferty, Andrew McCallum, and Fernando Pereira. 2001. Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In ICML.
- Guillaume Lample, Miguel Ballesteros, Sandeep Subramanian, Kazuya Kawakami, and Chris Dyer. 2016. Neural architectures for named entity recognition. In NAACL-HLT.

- Kenton Lee, Luheng He, Mike Lewis, and Luke S. Zettlemoyer. 2017. End-to-end neural coreference resolution. In EMNLP.
- Wang Ling, Chris Dyer, Alan W. Black, Isabel Trancoso, Ramon Fermandez, Silvio Amir, Luís Marujo, and Tiago Luís. 2015. Finding function in form: Compositional character models for open vocabulary word representation. In EMNLP.
- Xiaodong Liu, Yelong Shen, Kevin Duh, and Jianfeng Gao. 2017. Stochastic answer networks for machine reading comprehension. arXiv preprint arXiv:1712.03556.
- Xuezhe Ma and Eduard H. Hovy. 2016. End-to-end sequence labeling via bi-directional LSTM-CNNs-CRF. In ACL.
- Mitchell P. Marcus, Beatrice Santorini, and Mary Ann Marcinkiewicz. 1993. Building a large annotated corpus of English: The Penn Treebank. Computational Linguistics 19:313–330.
- Bryan McCann, James Bradbury, Caiming Xiong, and Richard Socher. 2017. Learned in translation: Contextualized word vectors. In NIPS 2017.
- Oren Melamud, Jacob Goldberger, and Ido Dagan. 2016. context2vec: Learning generic context embedding with bidirectional LSTM. In CoNLL.
- Gábor Melis, Chris Dyer, and Phil Blunsom. 2017. On the state of the art of evaluation in neural language models. CoRR abs/1707.05589.
- Stephen Merity, Nitish Shirish Keskar, and Richard Socher. 2017. Regularizing and optimizing LSTM language models. CoRR abs/1708.02182.
- Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg S Corrado, and Jeff Dean. 2013. Distributed representations of words and phrases and their compositionality. In NIPS.
- George A. Miller, Martin Chodorow, Shari Landes, Claudia Leacock, and Robert G. Thomas. 1994. Using a semantic concordance for sense identification. In HLT.
- Tsendsuren Munkhdalai and Hong Yu. 2017. Neural tree indexers for text understanding. In EACL.
- Arvind Neelakantan, Jeevan Shankar, Alexandre Passos, and Andrew McCallum. 2014. Efficient non-parametric estimation of multiple embeddings per word in vector space. In EMNLP.
- Martha Palmer, Paul Kingsbury, and Daniel Gildea. 2005. The proposition bank: An annotated corpus of semantic roles. Computational Linguistics 31:71–106.
- Jeffrey Pennington, Richard Socher, and Christopher D. Manning. 2014. GloVe: Global vectors for word representation. In EMNLP.
- Matthew E. Peters, Waleed Ammar, Chandra Bhagavatula, and Russell Power. 2017. Semi-supervised sequence tagging with bidirectional language models. In ACL.
- Sameer Pradhan, Alessandro Moschitti, Nianwen Xue, Hwee Tou Ng, Anders Björkelund, Olga Uryupina, Yuchen Zhang, and Zhi Zhong. 2013. Towards robust linguistic analysis using OntoNotes. In CoNLL.
- Sameer Pradhan, Alessandro Moschitti, Nianwen Xue, Olga Uryupina, and Yuchen Zhang. 2012. CoNLL-2012 shared task: Modeling multilingual unrestricted coreference in OntoNotes. In EMNLP-CoNLL Shared Task.
- Alessandro Raganato, Claudio Delli Bovi, and Roberto Navigli. 2017a. Neural sequence learning models for word sense disambiguation. In EMNLP.
- Alessandro Raganato, Jose Camacho-Collados, and Roberto Navigli. 2017b. Word sense disambiguation: A unified evaluation framework and empirical comparison. In EACL.
- Pranav Rajpurkar, Jian Zhang, Konstantin Lopyrev, and Percy Liang. 2016. SQuAD: 100,000+ questions for machine comprehension of text. In EMNLP.
- Prajit Ramachandran, Peter Liu, and Quoc Le. 2017. Improving sequence to sequence learning with unlabeled data. In EMNLP.
- Erik F. Tjong Kim Sang and Fien De Meulder. 2003. Introduction to the CoNLL-2003 shared task: Language-independent named entity recognition. In CoNLL.
- Min Joon Seo, Aniruddha Kembhavi, Ali Farhadi, and Hannaneh Hajishirzi. 2017. Bidirectional attention flow for machine comprehension. In ICLR.
- Richard Socher, Alex Perelygin, Jean Y Wu, Jason Chuang, Christopher D Manning, Andrew Y Ng, and Christopher Potts. 2013. Recursive deep models for semantic compositionality over a sentiment treebank. In EMNLP.
- Anders Søgaard and Yoav Goldberg. 2016. Deep multi-task learning with low level tasks supervised at lower layers. In ACL 2016.
- Nitish Srivastava, Geoffrey E. Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. 2014. Dropout: a simple way to prevent neural networks from overfitting. Journal of Machine Learning Research 15:1929–1958.
- Rupesh Kumar Srivastava, Klaus Greff, and Jürgen Schmidhuber. 2015. Training very deep networks. In NIPS.
- Joseph P. Turian, Lev-Arie Ratinov, and Yoshua Bengio. 2010. Word representations: A simple and general method for semi-supervised learning. In ACL.

- Wenhui Wang, Nan Yang, Furu Wei, Baobao Chang, and Ming Zhou. 2017. Gated self-matching networks for reading comprehension and question answering. In ACL.
- John Wieting, Mohit Bansal, Kevin Gimpel, and Karen Livescu. 2016. Charagram: Embedding words and sentences via character n-grams. In EMNLP.
- Sam Wiseman, Alexander M. Rush, and Stuart M. Shieber. 2016. Learning global features for coreference resolution. In HLT-NAACL.
- Matthew D. Zeiler. 2012. Adadelta: An adaptive learning rate method. CoRR abs/1212.5701.
- Jie Zhou and Wei Xu. 2015. End-to-end learning of semantic role labeling using recurrent neural networks. In ACL.
- Peng Zhou, Zhenyu Qi, Suncong Zheng, Jiaming Xu, Hongyun Bao, and Bo Xu. 2016. Text classification improved by integrating bidirectional LSTM with two-dimensional max pooling. In COLING.

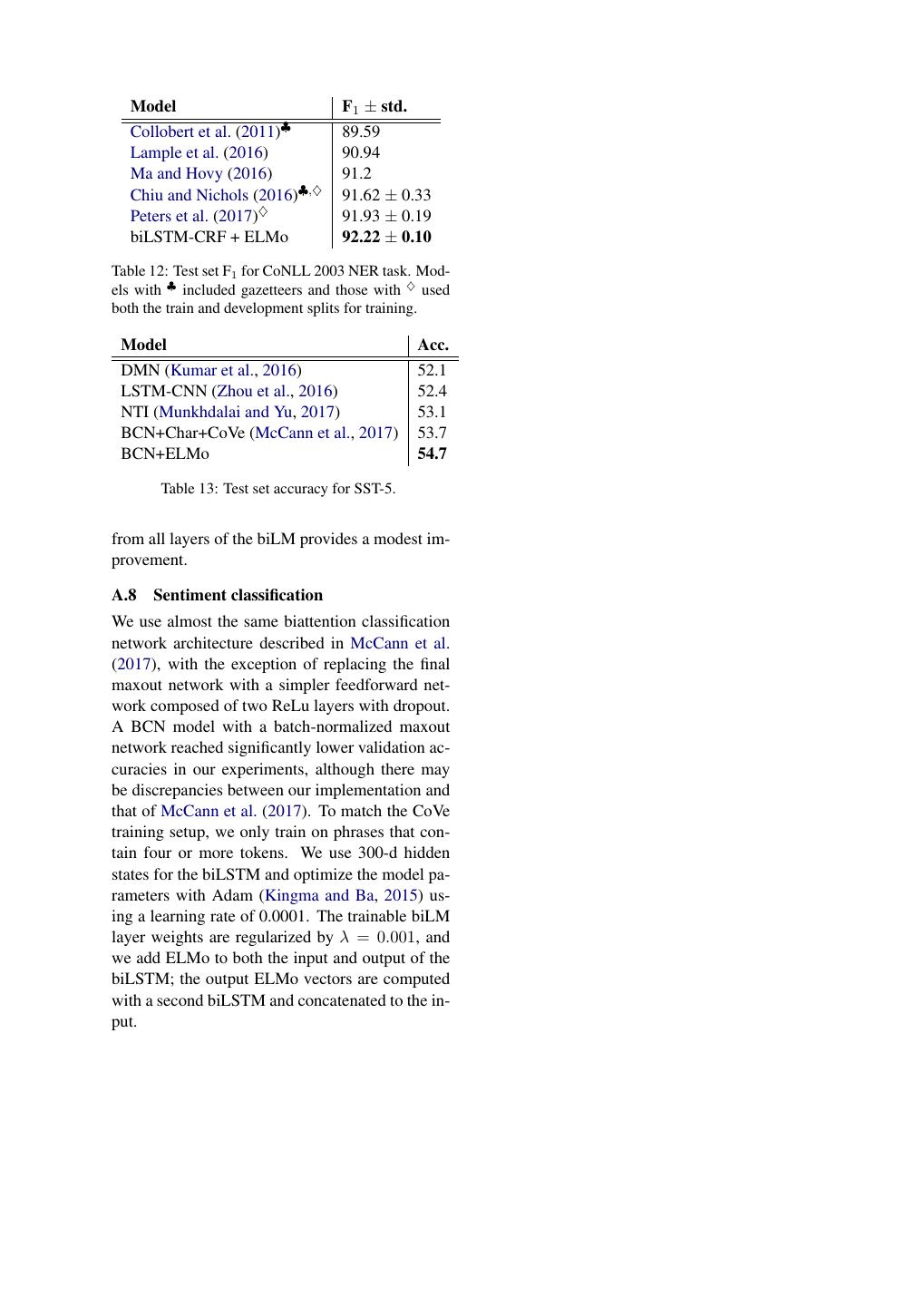

12 .A Supplemental Material to accompany Before After Dataset Deep contextualized word tuning tuning representations SNLI 72.1 16.8 CoNLL 2012 (coref/SRL) 92.3 - This supplement contains details of the model ar- CoNLL 2003 (NER) 103.2 46.3 chitectures, training routines and hyper-parameter Context 99.1 43.5 choices for the state-of-the-art models in Section SQuAD Questions 158.2 52.0 4. SST 131.5 78.6 All of the individual models share a common ar- chitecture in the lowest layers with a context inde- Table 7: Development set perplexity before and after pendent token representation below several layers fine tuning for one epoch on the training set for vari- of stacked RNNs – LSTMs in every case except ous datasets (lower is better). Reported values are the the SQuAD model that uses GRUs. average of the forward and backward perplexities. A.1 Fine tuning biLM added 50% variational dropout (Gal and Ghahra- As noted in Sec. 3.4, fine tuning the biLM on task mani, 2016) to the input of each LSTM layer and specific data typically resulted in significant drops 50% dropout (Srivastava et al., 2014) at the input in perplexity. To fine tune on a given task, the to the final two fully connected layers. All feed supervised labels were temporarily ignored, the forward layers use ReLU activations. Parame- biLM fine tuned for one epoch on the training split ters were optimized using Adam (Kingma and Ba, and evaluated on the development split. Once fine 2015) with gradient norms clipped at 5.0 and ini- tuned, the biLM weights were fixed during task tial learning rate 0.0004, decreasing by half each training. time accuracy on the development set did not in- Table 7 lists the development set perplexities for crease in subsequent epochs. The batch size was the considered tasks. In every case except CoNLL 32. 2012, fine tuning results in a large improvement in The best ELMo configuration added ELMo vec- perplexity, e.g., from 72.1 to 16.8 for SNLI. 
tors to both the input and output of the lowest The impact of fine tuning on supervised perfor- layer LSTM, using (1) with layer normalization mance is task dependent. In the case of SNLI, and λ = 0.001. Due to the increased number of fine tuning the biLM increased development accu- parameters in the ELMo model, we added 2 reg- racy 0.6% from 88.9% to 89.5% for our single best ularization with regularization coefficient 0.0001 model. However, for sentiment classification de- to all recurrent and feed forward weight matrices velopment set accuracy is approximately the same and 50% dropout after the attention layer. regardless whether a fine tuned biLM was used. Table 8 compares test set accuracy of our sys- tem to previously published systems. Overall, A.2 Importance of γ in Eqn. (1) adding ELMo to the ESIM model improved ac- The γ parameter in Eqn. (1) was of practical im- curacy by 0.7% establishing a new single model portance to aid optimization, due to the differ- state-of-the-art of 88.7%, and a five member en- ent distributions between the biLM internal rep- semble pushes the overall accuracy to 89.3%. resentations and the task specific representations. It is especially important in the last-only case in A.4 Question Answering Sec. 5.1. Without this parameter, the last-only Our QA model is a simplified version of the model case performed poorly (well below the baseline) from Clark and Gardner (2017). It embeds to- for SNLI and training failed completely for SRL. kens by concatenating each token’s case-sensitive 300 dimensional GloVe word vector (Penning- A.3 Textual Entailment ton et al., 2014) with a character-derived embed- Our baseline SNLI model is the ESIM sequence ding produced using a convolutional neural net- model from Chen et al. (2017). Following the work followed by max-pooling on learned char- original implementation, we used 300 dimensions acter embeddings. 
The token embeddings are for all LSTM and feed forward layers and pre- passed through a shared bi-directional GRU, and trained 300 dimensional GloVe embeddings that then the bi-directional attention mechanism from were fixed during training. For regularization, we BiDAF (Seo et al., 2017). The augmented con-

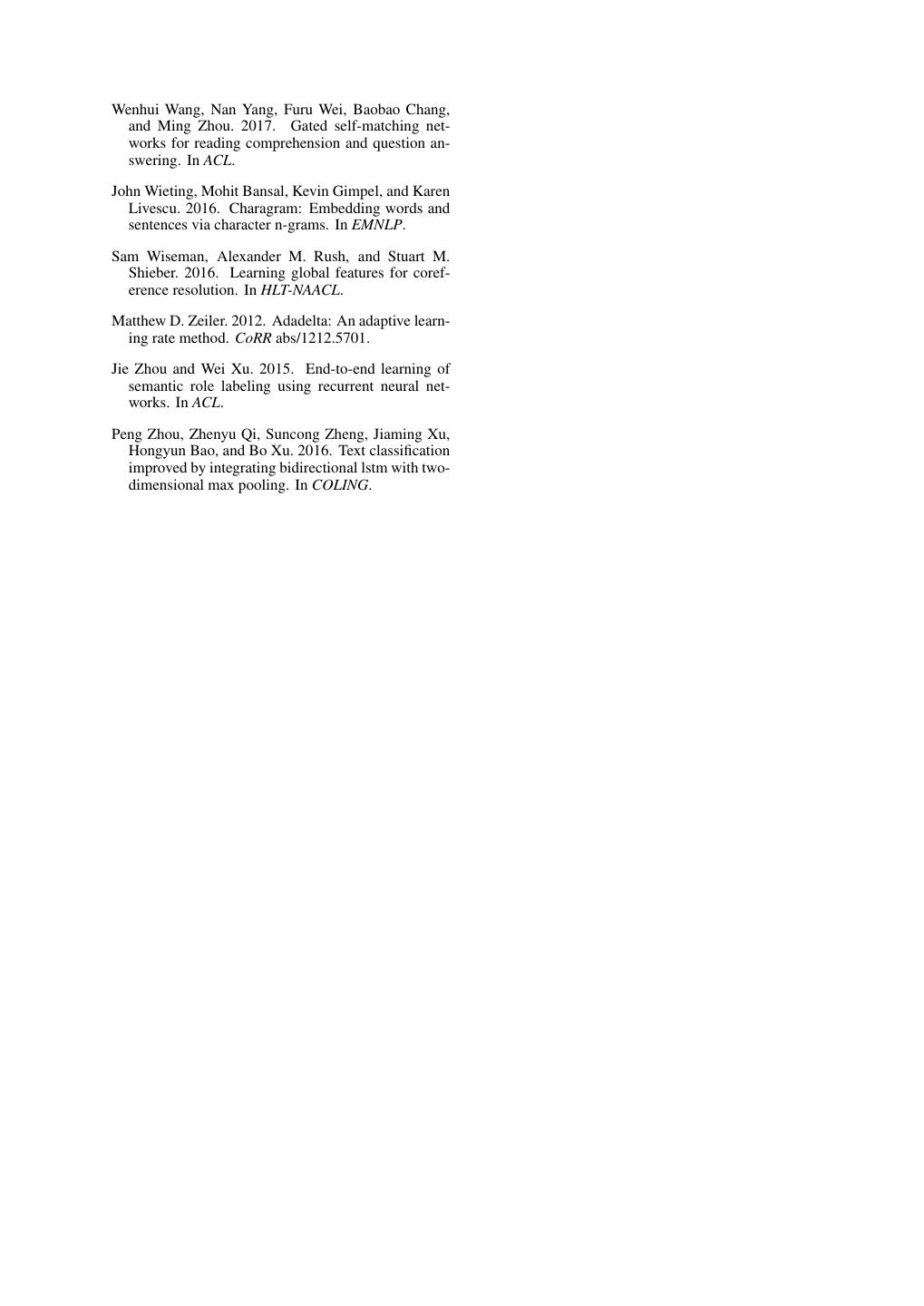

13 . Model Acc. Feature based (Bowman et al., 2015) 78.2 DIIN (Gong et al., 2018) 88.0 BCN+Char+CoVe (McCann et al., 2017) 88.1 ESIM (Chen et al., 2017) 88.0 ESIM+TreeLSTM (Chen et al., 2017) 88.6 ESIM+ELMo 88.7 ± 0.17 DIIN ensemble (Gong et al., 2018) 88.9 ESIM+ELMo ensemble 89.3 Table 8: SNLI test set accuracy.3 Single model results occupy the portion, with ensemble results at the bottom. text vectors are then passed through a linear layer bedding. This 200 dimensional token represen- with ReLU activations, a residual self-attention tation is then passed through an 8 layer “inter- layer that uses a GRU followed by the same atten- leaved” biLSTM with a 300 dimensional hidden tion mechanism applied context-to-context, and size, in which the directions of the LSTM layers another linear layer with ReLU activations. Fi- alternate per layer. This deep LSTM uses High- nally, the results are fed through linear layers to way connections (Srivastava et al., 2015) between predict the start and end token of the answer. layers and variational recurrent dropout (Gal and Variational dropout is used before the input to Ghahramani, 2016). This deep representation is the GRUs and the linear layers at a rate of 0.2. A then projected using a final dense layer followed dimensionality of 90 is used for the GRUs, and by a softmax activation to form a distribution over 180 for the linear layers. We optimize the model all possible tags. Labels consist of semantic roles using Adadelta with a batch size of 45. At test from PropBank (Palmer et al., 2005) augmented time we use an exponential moving average of the with a BIO labeling scheme to represent argu- weights and limit the output span to be of at most ment spans. During training, we minimize the size 17. We do not update the word vectors during negative log likelihood of the tag sequence using training. Adadelta with a learning rate of 1.0 and ρ = 0.95 Performance was highest when adding ELMo (Zeiler, 2012). 
At test time, we perform Viterbi without layer normalization to both the input and decoding to enforce valid spans using BIO con- output of the contextual GRU layer and leaving the straints. Variational dropout of 10% is added to ELMo weights unregularized (λ = 0). all LSTM hidden layers. Gradients are clipped if Table 9 compares test set results from the their value exceeds 1.0. Models are trained for 500 SQuAD leaderboard as of November 17, 2017 epochs or until validation F1 does not improve for when we submitted our system. Overall, our sub- 200 epochs, whichever is sooner. The pretrained mission had the highest single model and ensem- GloVe vectors are fine-tuned during training. The ble results, improving the previous single model final dense layer and all cells of all LSTMs are ini- result (SAN) by 1.4% F1 and our baseline by tialized to be orthogonal. The forget gate bias is 4.2%. A 11 member ensemble pushes F1 to initialized to 1 for all LSTMs, with all other gates 87.4%, 1.0% increase over the previous ensemble initialized to 0, as per (J´ozefowicz et al., 2015). best. Table 10 compares test set F1 scores of our ELMo augmented implementation of (He et al., A.5 Semantic Role Labeling 2017) with previous results. Our single model Our baseline SRL model is an exact reimplemen- score of 84.6 F1 represents a new state-of-the-art tation of (He et al., 2017). Words are represented result on the CONLL 2012 Semantic Role Label- using a concatenation of 100 dimensional vector ing task, surpassing the previous single model re- representations, initialized using GloVe (Penning- sult by 2.9 F1 and a 5-model ensemble by 1.2 F1. ton et al., 2014) and a binary, per-word predicate feature, represented using an 100 dimensional em- A.6 Coreference resolution 3 A comprehensive comparison can be found at https: Our baseline coreference model is the end-to-end //nlp.stanford.edu/projects/snli/ neural model from Lee et al. (2017) with all hy-

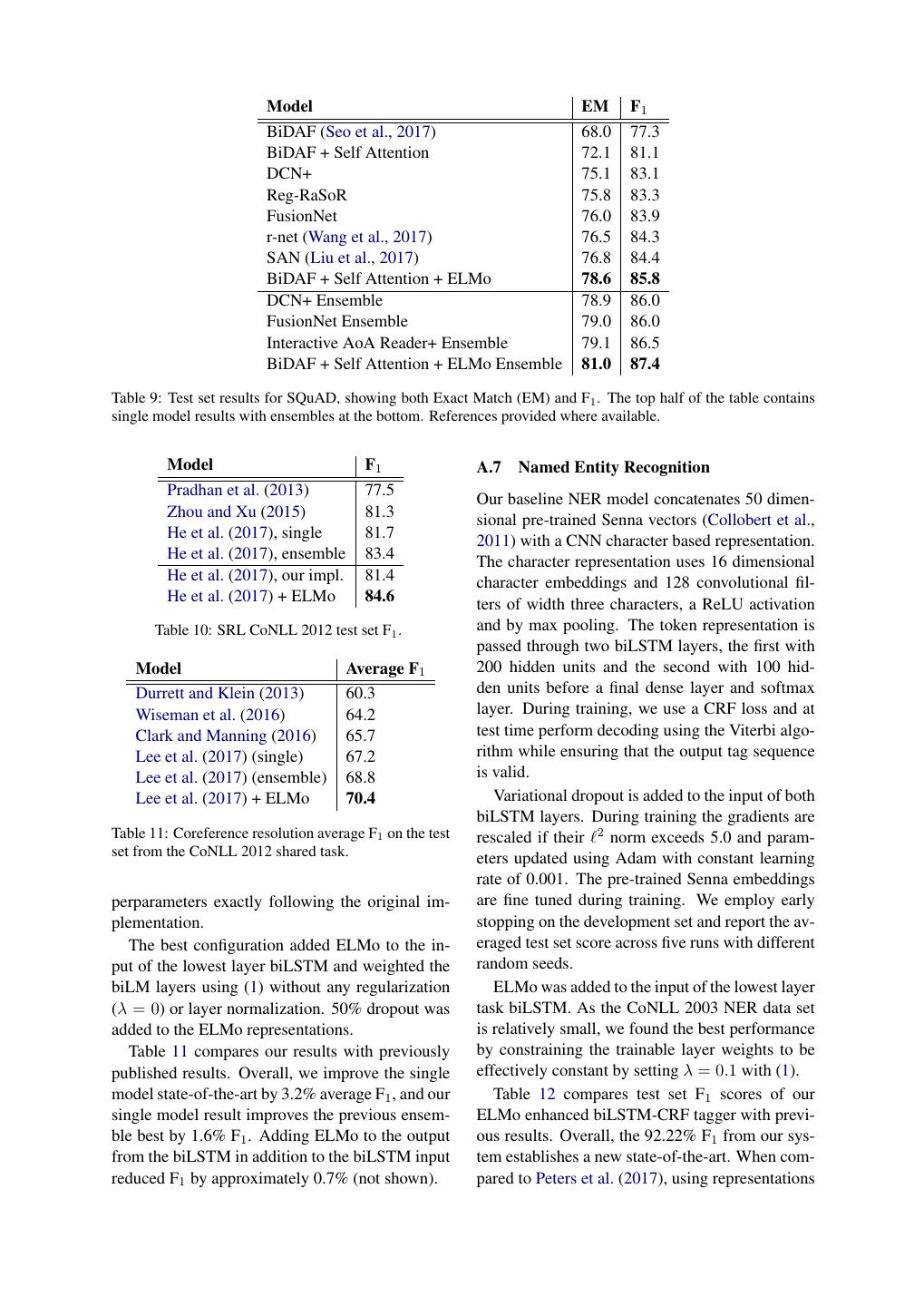

14 . Model EM F1 BiDAF (Seo et al., 2017) 68.0 77.3 BiDAF + Self Attention 72.1 81.1 DCN+ 75.1 83.1 Reg-RaSoR 75.8 83.3 FusionNet 76.0 83.9 r-net (Wang et al., 2017) 76.5 84.3 SAN (Liu et al., 2017) 76.8 84.4 BiDAF + Self Attention + ELMo 78.6 85.8 DCN+ Ensemble 78.9 86.0 FusionNet Ensemble 79.0 86.0 Interactive AoA Reader+ Ensemble 79.1 86.5 BiDAF + Self Attention + ELMo Ensemble 81.0 87.4 Table 9: Test set results for SQuAD, showing both Exact Match (EM) and F1 . The top half of the table contains single model results with ensembles at the bottom. References provided where available. Model F1 A.7 Named Entity Recognition Pradhan et al. (2013) 77.5 Our baseline NER model concatenates 50 dimen- Zhou and Xu (2015) 81.3 sional pre-trained Senna vectors (Collobert et al., He et al. (2017), single 81.7 2011) with a CNN character based representation. He et al. (2017), ensemble 83.4 The character representation uses 16 dimensional He et al. (2017), our impl. 81.4 character embeddings and 128 convolutional fil- He et al. (2017) + ELMo 84.6 ters of width three characters, a ReLU activation Table 10: SRL CoNLL 2012 test set F1 . and by max pooling. The token representation is passed through two biLSTM layers, the first with Model Average F1 200 hidden units and the second with 100 hid- Durrett and Klein (2013) 60.3 den units before a final dense layer and softmax Wiseman et al. (2016) 64.2 layer. During training, we use a CRF loss and at Clark and Manning (2016) 65.7 test time perform decoding using the Viterbi algo- Lee et al. (2017) (single) 67.2 rithm while ensuring that the output tag sequence Lee et al. (2017) (ensemble) 68.8 is valid. Lee et al. (2017) + ELMo 70.4 Variational dropout is added to the input of both biLSTM layers. During training the gradients are Table 11: Coreference resolution average F1 on the test rescaled if their 2 norm exceeds 5.0 and param- set from the CoNLL 2012 shared task. eters updated using Adam with constant learning rate of 0.001. 
The pre-trained Senna embeddings perparameters exactly following the original im- are fine tuned during training. We employ early plementation. stopping on the development set and report the av- The best configuration added ELMo to the in- eraged test set score across five runs with different put of the lowest layer biLSTM and weighted the random seeds. biLM layers using (1) without any regularization ELMo was added to the input of the lowest layer (λ = 0) or layer normalization. 50% dropout was task biLSTM. As the CoNLL 2003 NER data set added to the ELMo representations. is relatively small, we found the best performance Table 11 compares our results with previously by constraining the trainable layer weights to be published results. Overall, we improve the single effectively constant by setting λ = 0.1 with (1). model state-of-the-art by 3.2% average F1 , and our Table 12 compares test set F1 scores of our single model result improves the previous ensem- ELMo enhanced biLSTM-CRF tagger with previ- ble best by 1.6% F1 . Adding ELMo to the output ous results. Overall, the 92.22% F1 from our sys- from the biLSTM in addition to the biLSTM input tem establishes a new state-of-the-art. When com- reduced F1 by approximately 0.7% (not shown). pared to Peters et al. (2017), using representations

from all layers of the biLM provides a modest improvement.

| Model | F1 ± std. |
|---|---|
| Collobert et al. (2011)♣ | 89.59 |
| Lample et al. (2016) | 90.94 |
| Ma and Hovy (2016) | 91.2 |
| Chiu and Nichols (2016)♣,♦ | 91.62 ± 0.33 |
| Peters et al. (2017)♦ | 91.93 ± 0.19 |
| biLSTM-CRF + ELMo | 92.22 ± 0.10 |

Table 12: Test set F1 for CoNLL 2003 NER task. Models with ♣ included gazetteers and those with ♦ used both the train and development splits for training.

| Model | Acc. |
|---|---|
| DMN (Kumar et al., 2016) | 52.1 |
| LSTM-CNN (Zhou et al., 2016) | 52.4 |
| NTI (Munkhdalai and Yu, 2017) | 53.1 |
| BCN+Char+CoVe (McCann et al., 2017) | 53.7 |
| BCN+ELMo | 54.7 |

Table 13: Test set accuracy for SST-5.

A.8 Sentiment classification

We use almost the same biattention classification network architecture described in McCann et al. (2017), with the exception of replacing the final maxout network with a simpler feedforward network composed of two ReLU layers with dropout. A BCN model with a batch-normalized maxout network reached significantly lower validation accuracies in our experiments, although there may be discrepancies between our implementation and that of McCann et al. (2017). To match the CoVe training setup, we only train on phrases that contain four or more tokens. We use 300-d hidden states for the biLSTM and optimize the model parameters with Adam (Kingma and Ba, 2015) using a learning rate of 0.0001. The trainable biLM layer weights are regularized by λ = 0.001, and we add ELMo to both the input and output of the biLSTM; the output ELMo vectors are computed with a second biLSTM and concatenated to the input.
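Every subsection of this appendix configures the same layer-weighting step differently: with or without layer normalization, with λ = 0 (QA, coreference), λ = 0.001 (SNLI, sentiment) or λ = 0.1 (NER). The sketch below illustrates how those knobs interact. It assumes Eqn. (1) has the form ELMo_k = γ · Σ_j s_j · h_{k,j} with s = softmax(w), that the λ regularizer is the penalty λ‖w‖²₂ added to the task loss, and that layer normalization is a parameter-free per-layer standardization. Those forms and all function names are assumptions for illustration, and plain Python lists stand in for tensors.

```python
import math
from typing import List, Sequence


def softmax(raw: Sequence[float]) -> List[float]:
    """Numerically stable softmax over raw per-layer scalars w."""
    m = max(raw)
    exps = [math.exp(v - m) for v in raw]
    total = sum(exps)
    return [e / total for e in exps]


def layer_norm(x: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Parameter-free layer normalization of one vector (zero mean, unit variance)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def elmo_mix(layers: Sequence[Sequence[float]], raw_weights: Sequence[float],
             gamma: float, use_layer_norm: bool = False) -> List[float]:
    """gamma * sum_j softmax(raw_weights)_j * h_j for a single token position."""
    if len(layers) != len(raw_weights):
        raise ValueError("expected one raw weight per biLM layer")
    s = softmax(raw_weights)
    dim = len(layers[0])
    mixed = [0.0] * dim
    for s_j, h_j in zip(s, layers):
        h = layer_norm(h_j) if use_layer_norm else h_j
        for i in range(dim):
            mixed[i] += s_j * h[i]
    return [gamma * v for v in mixed]


def weight_penalty(raw_weights: Sequence[float], lam: float) -> float:
    """lam * ||w||_2^2; a large lam pulls w toward 0, i.e. s toward uniform."""
    return lam * sum(v * v for v in raw_weights)


if __name__ == "__main__":
    # Hypothetical 2-dimensional activations for (token layer, biLSTM 1, biLSTM 2).
    h = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(elmo_mix(h, [0.0, 0.0, 0.0], gamma=1.0))  # uniform weights: layer average
    print(elmo_mix(h, [0.0, 0.0, 0.0], gamma=2.0))  # gamma rescales the whole vector
    print(elmo_mix(h, [0.0, 0.0, 0.0], gamma=1.0, use_layer_norm=True))
    print(weight_penalty([0.5, -0.5, 0.0], lam=0.001))  # lambda as in A.8
```

Under these assumptions, a large λ (the NER setting) keeps w near zero, so s stays close to a uniform average of the layers, which matches the description of the weights being "effectively constant"; λ = 0 lets the task pick a peaked mixture freely.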