- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

使用变体索引改进查询性能

展开查看详情

1 . Improved Query Performance with Variant Indexes Patrick O’Neil Dallan Quass Department of Mathematics and Computer Science Department of Computer Science University of Massachusetts at Boston Stanford University Boston, MA 02125-3393 Stanford, CA 94305 poneil@cs.umb.edu quass@cs.stanford.edu Abstract: The read-mostly environment of data warehousing the query at hand. The Sybase IQ product currently provides makes it possible to use more complex indexes to speed up both variant index types [EDEL95, FREN95], and recommends queries than in situations where concurrent updates are present. multiple indexes per column in some cases. The current paper presents a short review of current indexing technology, including row-set representation by Bitmaps, and Late in the paper, we introduce a new indexing approach to then introduces two approaches we call Bit-Sliced indexing and support OLAP-type queries, commonly used in Data Projection indexing. A Projection index materializes all values Warehouses. Such queries are called Datacube queries in of a column in RID order, and a Bit-Sliced index essentially [GBLP96]. OLAP query performance depends on creating a set takes an orthogonal bit-by-bit view of the same data. While of summary tables to efficiently evaluate an expected set of some of these concepts started with the MODEL 204 product, queries. The summary tables pre-materialize needed aggregates, and both Bit-Sliced and Projection indexing are now fully real- an approach that is possible only when the expected set of ized in Sybase IQ, this is the first rigorous examination of such queries is known in advance. Specifically, the OLAP approach indexing capabilities in the literature. We compare algorithms addresses queries that group by different combinations of that become feasible with these variant index types against algo- columns, known as dimensions. rithms using more conventional indexes. The analysis demon- strates important performance advantages for variant indexes in Example 1.1. Assume that we are given a star-join schema, some types of SQL aggregation, predicate evaluation, and consisting of a central fact table Sales, containing sales data, and grouping. The paper concludes by introducing a new method dimension tables known as Stores (where the sales are made), whereby multi-dimensional group-by queries, reminiscent of Time (when the sales are made), Product (involved in the sales), OLAP/Datacube queries but with more flexibility, can be very and Promotion (method of promotion being used). (See efficiently performed. [KIMB96], Chapter 2, for a detailed explanation of this schema. A comparable Star schema is pictured in Figure 5.1.) Using pre- 1. Introduction calculated summary tables based on these dimensions, OLAP systems can answer some queries quickly, such as the total dol- Data warehouses are large, special-purpose databases that con- lar sales that were made for a brand of products in a store on the tain data integrated from a number of independent sources, sup- East coast during the past 4 weeks with a sales promotion based porting clients who wish to analyze the data for trends and on price reduction. The dimensions by which the aggregates are anomalies. The process of analysis is usually performed with "sliced and diced" result in a multi-dimensional crosstabs calcu- queries that aggregate, filter, and group the data in a variety of lation (Datacube) where some or all of the cells may be precalcu- ways. Because the queries are often complex and the warehouse lated and stored in summary tables. But if we want to perform database is often very large, processing the queries quickly is a some selection criterion that has not been precalculated, such as critical issue in the data warehousing environment. repeating the query just given, but only for sales that occurred on days where the temperature reached 90, the answer could not Data warehouses are typically updated only periodically, in a be supplied quickly if summary tables with dimensions based batch fashion, and during this process the warehouse is un- upon temperature did not exist. And there is a limit to the num- available for querying. This means a batch update process can ber of dimensions that can be represented in precalculated sum- reorganize data and indexes to a new optimal clustered form, in mary tables, since all combinations of such dimensions must be a manner that would not work if the indexes were in use. In this precalculated in order to achieve good performance at runtime. simplified situation, it is possible to use specialized indexes This suggests that queries requiring rich selection criteria must and materialized aggregate views (called summary tables in be evaluated by accessing the base data, rather than precalcu- data warehousing literature), to speed up query evaluation. lated summary tables. u This paper reviews current indexing technology, including row- The paper explores indexes for efficient evaluation of OLAP- set representation by Bitmaps, for speeding up evaluation of style queries with such rich selection criteria. complex queries. It then introduces two indexing structures, which we call Bit-Sliced indexes and Projection indexes. We Paper outline: We define Value-List, Projection, and Bit- show that these indexes each provide significant performance Sliced indexes and their use in query processing in Section 2. advantages over traditional Value-List indexes for certain Section 3 presents algorithms for evaluating aggregate functions classes of queries, and argue that it may be desirable in a data using the index types presented in Section 2. Algorithms for warehousing environment to have more than one type of index evaluating Where Clause conditions, specifically range predi- available on a column, so that the best index can be chosen for cates, are presented in Section 4. In Section 5, we introduce an index method whereby OLAP-style queries that permit non-di- Permission to copy without fee all or part of this material is mensional selection criteria can be efficiently performed. The granted provided that the copies are not made or distributed for method combines Bitmap indexing and physical row clustering, direct commercial advantage, the ACM copyright notice and the two features which provide important advantage for OLAP- title of the publication and its date appear, and notice is given that copying is by permission of the Association of Computing style queries. Our conclusions are given in Section 6. Machinery. To copy otherwise, or to republish, requires a fee and/or specific permission. SIGMOD '97 5/97, Tucson, Arizona, USA -1-

2 .2. Indexing Definitions Note that while there are n rows in T = {r1 , r2 , . . . rn }, it is not necessarily true that the maximum row number M is the same as In this section we examine traditional Value-List indexes and n, since a method is commonly used to associate a fixed number show how Bitmap representations for RID-lists can easily be of rows p with each disk page for fast lookup. Thus for a given used. We then introduce Projection and Bit-Sliced indexes. row r with row number j, the table page number accessed to re- trieve row r is j/p and the page slot is (in C terms) j%p. This 2.1 Traditional Value-List Indexes means that rows will be assigned row numbers in disk clustered sequence, a valuable property. Since the rows might have vari- Database indexes provided today by most database systems use able size and we may not always be able to accommodate an B +-tree1 indexes to retrieve rows of a table with specified values equal number of rows on each disk page, the value p must be a involving one or more columns (see [COMER79]). The leaf chosen as a maximum, so some integers in Z[M] might be wasted. level of the B-tree index consists of a sequence of entries for in- They will correspond to non-existent slots on pages that cannot dex keyvalues. Each keyvalue reflects the value of the indexed accommodate the full set of p rows. (And we may find that m-1(j) column or columns in one or more rows in the table, and each for some row numbers j in Z[M] is undefined.) keyvalue entry references the set of rows with that value. Since all rows of an indexed relational table are referenced exactly once A "Bitmap" B is defined on T as a sequence of M bits. If a Bitmap in the B-tree, the rows are partitioned by keyvalue. However, B is meant to list rows in T with a given property P, then for object-relational databases allow rows to have multi-valued at- each row r with row number j that has the property P, we set bit tributes, so that in the future the same row may appear under j in B to one; all other bits are set to zero. A Bitmap index for a many keyvalues in the index. We therefore refer to this type of column C with values v1, v2, . . ., vk, is a B-tree with entries hav- index simply as a Value-List index. ing these keyvalues and associated data portions that contain Bitmaps for the properties C = v1, . . ., C = vk. Thus Bitmaps in Traditionally, Value-List (B-tree) indexes have referenced each this index are just a new way to specify lists of RIDs for specific row individually as a RID, a Row IDentifier, specifying the disk column values. See Figure 2.1 for an Example. Note that a series position of the row. A sequence of RIDs, known as a RID-list, of successive Bitmap Fragments make up the entry for "depart- is held in each distinct keyvalue entry in the B-tree. In indexes ment = 'sports'". with a relatively small number of keyvalues compared to the number of rows, most keyvalues will have a large number of as- sociated RIDs and the potential for compression arises by list- B-tree Root Node for department ing a keyvalue once, at the head of what we call a RID-list index Fragment, containing a long list of RIDs for rows with this 'clothes''china'... 'sports' ... keyvalue. For example, MVS DB2 provides this kind of com- 'tools' pression, (see [O'NEI96], Figure 7.19). Keyvalues with RID- lists that cross leaf pages require multiple Fragments. We as- sume in what follows that RID-lists (and Bitmaps, which fol- low) are read from disk in multiples of Fragments. With this amortization of the space for the keyvalue over multiple 4-byte RIDs of a Fragment, the length in bytes of the leaf level of the B- tree index can be approximated as 4 times the number of rows in ' spor t s 101101 . . . ' spor t s 01011 . . the table, divided by the average fullness of the leaf nodes. In what follows, we assume that we are dealing with data that is ' ' . updated infrequently, so that B-tree leaf pages can be completely Figure 2.1. Example of a Bitmap Index on department, filled, reorganized during batch updates. Thus the length in a column of the SALES table bytes of the leaf level of a B-tree index with a small number of keyvalues is about 4 times the number of table rows. We say that Bitmaps are dense if the proportion of one-bits in the Bitmap is large. A Bitmap index for a column with 32 values 2.1.1 Bitmap Indexes will have Bitmaps with average density of 1/32. In this case the disk space to hold a Bitmap column index will be comparable to Bitmap indexes were first developed for database use in the the disk space needed for a RID-list index (which requires about Model 204 product from Computer Corporation of America (see 32 bits for each RID present). While the uncompressed Bitmap [O'NEI87]). A Bitmap is an alternate form for representing RID- index size is proportional to the number of column values, a lists in a Value-List index. Bitmaps are more space-efficient than RID-list index is about the same size for any number of values RID-lists when the number of keyvalues for the index is low. (as long as we can continue to amortize the keysize with a long Furthermore, we will show that Bitmaps are usually more CPU- block of RIDs). For a column index with a very small number of efficient as well, because of the simplicity of their representation. values, the Bitmaps will have high densities (such as 50% for To create Bitmaps for the n rows of a table T = {r1, r2, . . . rn}, we predicates such as GENDER = 'M' or GENDER = 'F'), and the start with a 1-1 mapping m from rows of T to Z[M], the first M disk savings is enormous. On the other hand, when average positive integers. In what follows we avoid frequent reference to Bitmap density for a Bitmap index becomes too low, methods ex- the mapping m. When we speak of the row number of a row r of ist for compressing a Bitmap. The simplest of these is to trans- T, we will mean the value m(r). late the Bitmap back to a RID list, and we will assume this in what follows. 2.1.2 Bitmap Index Performance 1B+-trees are commonly referred to simply as B-trees in database An important consideration for database query performance is the fact that Boolean operations, such as AND, OR, and NOT are documentation, and we will follow this convention. -2-

3 .extremely fast for Bitmaps. Given Bitmaps B1 and B2, we can [2.1] SELECT K10, K25, COUNT(*) FROM BENCH calculate a new Bitmap B3, B3 = B1 AND B2, by treating all GROUP BY K10, K25; bitmaps as arrays of long ints and looping through them, using the & operation of C: A 1995 benchmark on a 66 MHz Power PC of the Praxis Omni Warehouse, a C language version of MODEL 204, demonstrated for (i = 0; i < len(B1); i++) an elapsed time of 19.25 seconds to perform this query. The /* Note: len(B1)=len(B2)=len(B3) */ query plan was to read Bitmaps from the indexes for all values of B3[i] = B1[i] & B2[i]; K10 and K25, perform a double loop through all 250 pairs of /* B3 = B1 AND B2 */ values, AND all pairs of Bitmaps, and COUNT the results. The 250 ANDs and 250 COUNTs of 1,000,000 bit Bitmaps required We would not normally expect the entire Bitmap to be memory only 19.25 seconds on a relatively weak processor. By compar- resident, but would perform a loop to operate on Bitmaps by ison, MVS DB2 Version 2.3, running on an IBM 9221/170 used reading them in from disk in long Fragments. We ignore this an algorithm that extracted and wrote out all pairs of (K10, K25) loop here. Using a similar approach, we can calculate B3 = B1 values from the rows, sorted by value pair, and counted the re- OR B2. But calculating B3 = NOT(B1) requires an extra step. sult in groups, taking 248 seconds of elapsed time and 223 sec- Since some bit positions can correspond to non-existent rows, onds of CPU. (See [O'NEI96] for more details.) u we postulate an Existence Bitmap (designated EBM) which has exactly those 1 bits corresponding to existing rows. Now when 2.1.3 Segmentation we perform a NOT on a Bitmap B, we loop through a long int ar- ray performing the ~ operation of C, then AND the result with To optimize Bitmap index access, Bitmaps can be broken into the corresponding long int from EBM. Fragments of equal sizes to fit on single fixed-size disk pages. Corresponding to these Fragments, the rows of a table are parti- for (i = 0; i < len(B1); i++) tioned into Segments, with an equal number of row slots for B3[i] = ~B1[i] & EBM[i]; each segment. In MODEL 204 (see [M204, O'NEI87]), a Bitmap /* B3 = NOT(B1)for rows that exist */ Fragment fits on a 6 KByte page, and contains about 48K bits, so the table is broken into segments of about 48K rows each. Typical Select statements may have a number of predicates in This segmentation has two important implications. their Where Clause that must be combined in a Boolean manner. The resulting set of rows, which is then retrieved or aggregated The first implication involves RID-lists. When Bitmaps are suf- in the Select target-list, is called a Foundset in what follows. ficiently sparse that they need to be converted to RID-lists, the Sometimes, the rows filtered by the Where Clause must be further RID-list for a segment is guaranteed to fit on a disk page (1/32 of grouped, due to a group-by clause, and we refer to the set of 48K is about 1.5K; MODEL 204 actually allows sparser rows restricted to a single group as a Groupset. Bitmaps than 1/32, so several RID lists might fit on a single disk page). Furthermore, RIDs need only be two bytes in Finally, we show how the COUNT function for a Bitmap of a length, because they only specify the row position within the Foundset can be efficiently performed. First, a short int array segment (the 48K rows of a segment can be counted in a short shcount[ ] is declared, with entries initialized to contain the int). At the beginning of each RID-list, the segment number will number of bits in the entry subscript. Given this array, we can specify the higher order bits of a longer RID (4 byte or more), loop through a Bitmap as an array of short int values, to get the but the segment-relative RIDs only use two bytes each. This is count of the total Bitmap as shown in Algorithm 2.1. Clearly an important form of prefix RID compression, which greatly the shcount[ ] array is used to provide parallelism in calculating speeds up index range search. the COUNT on many bits at once. The second implication of segmentation involves combining Algorithm 2.1. Performing COUNT with a Bitmap predicates. The B-tree index entry for a particular value in /* Assume B1[ ] is a short int array MODEL 204 is made up of a number of pointers by segment to overlaying a Foundset Bitmap */ Bitmap or RID-list Fragments, but there are no pointers for seg- count = 0; ments that have no representative rows. In the case of a clus- for (i = 0; i < SHNUM; i++) tered index, for example, each particular index value entry will count += shcount[B1[i]]; have pointers to only a small set of segments. Now if several /* add count of bits for next short int */ predicates involving different column indexes are ANDed, the u evaluation takes place segment-by-segment. If one of the predi- cate indexes has no pointer to a Bitmap Fragment for a segment, Loops for Bitmap AND, OR, NOT, or COUNT are extremely fast then the segment Fragments for the other indexes can be ignored compared to loop operations on RID lists, where several opera- as well. Queries like this can turn out to be very common in a tions are required for each RID, so long as the Bitmaps involved workload, and the I/O saved by ignoring I/O for these index have reasonably high density (down to about 1%). Fragments can significantly improve performance. Example 2.1. In the Set Query benchmark of [O'NEI91], the In some sense, Bitmap representations and RID-list representa- results from one of the SQL statements in Query Suite Q5 gives tions are interchangeable: both provide a way to list all rows a good illustration of Bitmap performance. For a table named with a given index value or range of values. It is simply the case BENCH of 1,000,000 rows, two columns named K10 and K25 that, when the Bitmap representations involved are relatively have cardinalities 10 and 25, respectively, with all rows in the dense, Bitmaps are much more efficient than RID-lists, both in table equally likely to take on any valid value for either column. storage use and efficiency of Boolean operations. Indeed a Thus the Bitmap densities for indexes on this column are 10% Bitmap index can contain RID-lists for some entry values or and 4% respectively. One SQL statement from the Q5 Suite is: even for some Segments within a value entry, whenever the number of rows with a given keyvalue would be too sparse in -3-

4 .the segment for a Bitmap to be efficiently used. In what follows, they provide an efficient means to calculate aggregates of we will assume that a Bitmapped index combines Bitmap and Foundsets. We begin our definition of Bit-Sliced indexes with RID-list representations where appropriate, and continue to re- an example. fer to the hybrid form as a Value-List Index. When we refer to the Bitmap for a given value v in the index, this should be under- Example 2.2. Consider a table named SALES which contains stood to be a generic name: it may be a Bitmap or it may be a rows for all sales that have been made during the past month by RID-list, or a segment-by-segment combination of the two forms. individual stores belonging to some large chain. The SALES table has a column named dollar_sales, which represents for each 2.2 Projection Indexes row the dollar amount received for the sale. Assume that C is a column of a table T; then the Projection in- Now interpret the dollar_sales column as an integer number of dex on C consists of a stored sequence of column values from C, pennies, represented as a binary number with N+1 bits. We de- in order by the row number in T from which the values are ex- fine a function D(n, i), i = 0, . . . , N, for row number n in SALES, tracted. (Holes might exist for unused row numbers.) If the col- to have value 0, except for rows with a non-null value for dol- umn C is 4 bytes in length, then we can fit 1000 values from C lar_sales, where the value of D(n, i) is defined as follows: on each 4 KByte disk page (assuming no holes), and continue to do this for successive column values, until we have constructed D(n, 0) = 1 if the 1 bit for dollar_sales in row number n is on the Projection index. Now for a given row number n = m(r) in D(n, 1) = 1 if the 2 bit for dollar_sales in row number n is on the table, we can access the proper disk page, p, and slot, s, to re- . . . trieve the appropriate C value with a simple calculation: p = D(n, i) = 1 if the 2i bit for dollar_sales in row number n is on n/1000 and s = n%1000. Furthermore, given a C value in a given position of the Projection index, we can calculate the row Now for each value i, i = 0 to N, such that D(n, i) > 0 for some number easily: n = 1000*p + s. row in SALES, we define a Bitmap Bi on the SALES table so that bit n of Bitmap Bi is set to D(n, I). Note that by requiring If the column values for C are variable length instead of fixed that D(n, i) > 0 for some row in SALES, we have guaranteed that length, there are two alternatives. We can set a maximum size we do not have to represent any Bitmap of all zeros. For a real and place a fixed number of column value on each page, as before, table such as SALES, the appropriate set of Bitmaps with non- or we can use a B-tree structure to access the column value C by zero bits can easily be determined at Create Index time. u a lookup of the row number n. The case of variable-length val- ues is obviously somewhat less efficient than fixed-length, and The definitions of Example 2.1 generalize to any column C in a we will assume fixed-length C values in what follows. table T, where the column C is interpreted as a sequence of bits, from least significant (i = 0) to most significant (i = N). The Projection index turns out to be quite efficient in certain cases where column values must be retrieved for all rows of a Definition 2.1: Bit-Sliced Index. The Bit-Sliced index on the Foundset. For example, if the density of the Foundset is 1/50 C column of table T is the set of all Bitmaps Bi as defined analo- (no clustering, so the density is uniform across all table seg- gously for dollar_sales in Example 2.2. Since a null value in the ments), and the column values are 4 bytes in length, as above, C column will not have any bits set to 1, it is clear that only then 1000 values will fit on a 4 KByte page, and we expect to rows with non-null values appear as 1-bits in any of these pick up 20 values per Projection index page. In contrast, if the Bitmaps. Each individual Bitmap Bi is called a Bit-Slice of the rows of the table were retrieved, then assuming 200-byte rows column. We also define the Bit-Sliced index to have a Bitmap only 20 rows will fit on a 4 KByte page, and we expect to pick B nn representing the set of rows with non-null values in column up only 1 row per page. Thus reading the values from a C, and a Bitmap Bn representing the set of rows with null values. Projection index requires only 1/20 the number of disk page ac- cess as reading the values from the rows. The Sybase IQ product Clearly Bn can be derived from Bnn and the Existence Bitmap is the first one to have utilized the Projection index heavily, EBM, but we want to save this effort in algorithms below. In under the name of "Fast Projection Index" [EDEL95, FREN95]. fact, the Bitmaps Bnn and Bn are so useful that we assume from now on that Bnn exists for Value-List Bitmap indexes (clearly Bn The definition of a Projection index is reminiscent of vertically already exists, since null is a particular value). u partitioning the columns of a table. Vertical partitioning is a good strategy for workloads where small numbers of columns are In the algorithms that follow, we will normally be assuming that retrieved by most Select statements, but it is a bad idea when the column C is numeric, either an integer or a floating point most queries retrieve many most of the columns. Vertical parti- value. In using Bit-Sliced indexes, it is necessary that different tioning is actually forbidden by the TPC-D benchmark, presum- values have matching decimal points in their binary representa- ably on the theory that the queries chosen have not been suffi- tions. Depending on the variation in size of the floating point ciently tuned to penalize this strategy. But Projection indexes numbers, this could lead to an exceptionally large number of are not the same as vertical partitioning. We assume that rows of slices when values differ by many orders of magnitude. Such an the table are still stored in contiguous form (the TPC-D require- eventuality is unlikely in business applications, however. ment) and the Projection indexes are auxiliary aids to retrieval efficiency. Of course this means that column values will be du- A user-defined method to bit-slice aggregate quantities was plicated in the index, but in fact all traditional indexes duplicate used by some MODEL 204 customers and is defined on page 48 column values in this same sense. of [O'NEI87]. Sybase IQ currently provides a fully realized Bit- Sliced index, which is known to the query optimizer and trans- 2.3. Bit-Sliced Indexes parent to SQL users. Usually, a Bit-Sliced index for a quantity of the kind in Example 2.2 will involve a relatively small num- A Bit-Sliced index stores a set of "Bitmap slices" which are "or- ber of Bitmaps (less than the maximum significance), although thogonal" to the data held in a Projection index. As we will see, there is no real limit imposed by the definition. Note that 20 -4-

5 .Bitmaps, 0 . . .19, for the dollar_sales column will represent The time to perform such a sequence of I/Os, assuming one disk quantities up to 220 - 1 pennies, or $10,485.75, a large sale by arm retrieves 100 disk pages per second in relatively close se- most standards. If we assume normal sales range up to $100.00, quence on disk, is 16,484 seconds, or more than 4 hours of disk it is very likely that nearly all values under $100.00 will occur arm use. We estimate 25 instructions needed to retrieve the for some row in a large SALES table. Thus, a Value-List index proper row and column value from each buffer resident page, and would have nearly 10,000 different values, and row-sets with this occurs 2,000,000 times, but in fact the CPU utilization as- these values in a Value-List index would almost certainly be sociated with reading the proper page into buffer is much more represented by RID-lists rather than Bitmaps. The efficiency of significant. Each disk page I/O is generally assumed to require performing Boolean Bitmap operations would be lost with a several thousand instructions to perform (see, for example, Value-List index, but not with a Bit-Sliced index, where all val- [PH96], Section 6.7, where 10,000 instructions are assumed). ues are represented with about 20 Bitmaps. Query Plan 2: Calculating SUM with a Projection index. It is important to realize that these index types are all basically We can use the Projection index to calculate the sum by access- equivalent. ing each dollar_sales value in the index corresponding to a row number in the Foundset; these row numbers will be provided in Theorem 2.1. For a given column C on a table T, the informa- increasing order. We assume as in Example 2.2 that the dol- tion in a Bit-sliced index, Value-List index, or Projection index lar_sales Projection index will contain 1000 values per 4 can each be derived from either of the others. KByte disk page. Thus the Projection index will require 100,000 disk pages, and we can expect all of these pages to be Proof. With all three types of indexes, we are able to determine accessed in sequence when the values for the 2,000,000 row the values of columns C for all rows in T, and this information is Foundset are retrieved. This implies we will have 100,000 disk sufficient to create any other index. u page I/Os, with elapsed time 1000 seconds (roughly 17 min- utes), given the same I/O assumptions as in Query Plan 1. In ad- Although the three index types contain the same information, dition to the I/O, we will use perhaps 10 instructions to convert they provide different performance advantages for different opera- the Bitmap row number into a disk page offset, access the appro- tions. In the next few sections of the paper we explore this. priate value, and add this to the SUM. 3. Comparing Index types for Aggregate Evaluation Query Plan 3: Calculating SUM with a Value-List index. Assuming we have a Value-List index on dollar_sales, we can In this section we give algorithms showing how Value-List in- calculate SUM(dollar_sales) for our Foundset by ranging dexes, Projection indexes, and Bit-Sliced indexes can be used to through all possible values in the index and determining the speed up the evaluation of aggregate functions in SQL queries. rows with each value, then determining how many rows with We begin with an analysis evaluating SUM on a single column. each value are in the Foundset, and finally multiplying that Other aggregate functions are considered later. count by the value and adding to the SUM. In pseudo code, we have Algorithm 3.1 below. 3.1 Evaluating Single-Column Sum Aggregates Algorithm 3.1. Evaluating SUM(C) with a Value-List Index Example 3.1. Assume that the SALES table of Example 2.2 has If (COUNT(Bf AND Bnn) == 0) /* no non-null values * / 100 million rows which are each 200 bytes in length, stored 20 Return null; to a 4 KByte disk page, and that the following Select statement SUM = 0.00; has been submitted: For each non-null value v in the index for C { Designate the set of rows with the value v as Bv [3.1] SELECT SUM(dollar_sales) FROM SALES SUM += v * COUNT(Bf AND Bv); WHERE condition; } Return SUM; The condition in the Where clause that restricts rows of the u SALES table will result in a Foundset of rows. We assume in what follows that the Foundset has already been determined, Our earlier analysis counted about 10,000 distinct values in and is represented by a Bitmap Bf, it contains 2 million rows and this index, so the Value-List index evaluation of SUM(C) re- the rows are not clustered in a range of disk pages, but are quires 10,000 Bitmap ANDs and 10,000 COUNTs. If we make spread out evenly across the entire table. We vary these as- the assumption that the Bitmap B f is held in memory sumptions later. The most likely case is that determining the (100,000,000 bits, or 12,500,000 bytes) while we loop through Foundset was easily accomplished by performing Boolean oper- the values, and that the sets Bv for each value v are actually RID- ations on a few indexes, so the resources used were relatively lists, this will entail 3125 I/Os to read in Bf , 100,000 I/Os to insignificant compared to the aggregate evaluation to follow. read in the index RID-lists for all values (100,000,000 RIDs of 4 bytes each, assuming all pages are completely full), and a loop of Query Plan 1: Direct access to rows to calculate SUM. several instructions to translate 100,000,000 RIDs to bit posi- Each disk page contains only 20 rows, so there must be a total tions and test if they are on in Bf. of 5,000,000 disk pages occupied by the SALES table. Since 2,000,000 rows in the Foundset Bf represent only 1/50 of all Note that this algorithm gains an enormous advantage by as- rows in the SALES table, the number of disk pages that the suming Bf is a Bitmap (rather than a RID-list), and that it can be Foundset occupies can be estimated (see [O'NEI96], Formula [7.6.4]) as: held in memory, so that RIDs from the index can be looked up quickly. If Bf were held as a RID-list instead, the lookup would 5 ,000,000(1 - e-2,000,000/5,000,000) = 1,648,400 disk pages be a good deal less efficient, and would probably entail a sort by RID value of values from the index, followed by a merge-inter- -5-

6 .sect with the RID-list Bf. Even with the assumption that Bf is a submitted at a rate of once each 1,000 seconds, the most expen- Bitmap in memory, the loop through 100,000,000 RIDs is ex- sive plan, "Add from rows", will keep 13.41 disks busy at a cost tremely CPU intensive, especially if the translation from RID to of $8046 purchase. We calculate the number of CPU instruc- bit ordinal entails a complex lookup in a memory-resident tree to tions needed for I/O for the various plans, with the varying as- determine the extent containing the disk page of the RID and the sumptions in Table 3.2 of how many instructions are needed to corresponding RID number within the extent. With optimal as- perform an I/O. Adding the CPU cost for algorithmic loops to sumptions, Plan 3 seems to require 103,125 I/Os and a loop of the I/O cost, we determine the total dollar cost ($Cost) to sup- length 100,000,000, with a loop body of perhaps 10 instruc- port the method. For example, for the "Add from Rows" plan, as- tions. Even so, Query Plan 3 is probably superior to Query suming one submission each 1000 seconds, if an I/O uses (2K, Plan 1, which requires I/O for 1,340,640 disk pages. 5K, 10K) instructions, the CPU cost is ($32.78, $81.06, $161.52). The cost for disk access ($8046) clearly swamps the Query Plan 4: Calculating SUM with a Bit-Sliced index. cost of CPU in this case, and in fact the relative cost of I/O com- Assuming we have a Bit-Sliced index on dollar_sales as defined pared to CPU holds for all methods. Table 3.2 shows that the in Example 2.2, we can calculate SUM(dollar_sales) with the Bit-sliced index is the most efficient for this problem, with the pseudo code of Algorithm 3.2. Projection index and Value-List index a close second and third. The Projection index is so much better than the fourth ranked Algorithm 3.2. Evaluating SUM(C) with a Bit-Sliced Index plan of accessing the rows that one would prefer it even if thir- / * We are given a Bit-Sliced index for C, containing bitmaps teen different columns were to be summed, notwithstanding the Bi, i = 0 to N (N = 19), Bn and Bnn, as in Example 2.2 savings to be achieved by summing all the different columns and Definition 2.1. */ from the same memory-resident row. If (COUNT(Bf AND Bnn) == 0) Return null; Method $Cost for $Cost for $Cost for SUM = 0.00 2K ins 5K ins 10K ins For i = 0 to N per I/O per I/O per I/O SUM += 2i * COUNT(Bi AND Bf); Add from Rows $8079 $8127 $8207 Return SUM; Projection index $603 $606 $612 u Value-List index $632 $636 $642 Bit-Sliced index $418 $421 $425 With Algorithm 3.2, we can calculate a SUM by performing 21 ANDs and 21 COUNTs of 100,000,000 bit Bitmaps. Each Bitmap is 12.5 MBytes in length, requiring 3125 I/Os, but we Table 3.2. Dollar costs of four plans for SUM assume that Bf can remain in memory after the first time it is read. Therefore, we need to read a total of 22 Bitmaps from disk, using 3.1.2 Varying Foundset Density and Clustering 22*3125 = 68,750 I/Os, a bit over half the number needed in Query Plan 2. For CPU, we need to AND 21 pairs of Bitmaps, Changing the number of rows in the Foundset has little effect on which is done by looping through the Bitmaps in long int the Value-List index or Bit-Sliced index algorithms, because the chunks, a total number of loop passes on a 32-bit machine equal entire index must still be read in both cases. However, the algo- to: 21*(100,000,000/32) = 65,625,000. Then we need to per- rithms Add from rows and using a Projection index entail work form 21 COUNTs, looping through Bitmaps in half-word proportional to the number of rows in the foundset. We stop chunks, with 131,250,000 passes. However, all these considering the plan to Add from rows in what follows. 196,875,000 passes to perform ANDs and COUNTs are single instruction loops, and thus presumably take a good deal less Suppose the Foundset contains kM (k million) rows, clustered time than the 100,000,000 multi-instruction loops of Plan 2. on a fraction f of the disk space. Both the Projection and Bit- Sliced index algorithms can take advantage of the clustering. 3.1.1 Comparing Algorithm Performance The table below shows the comparison between the three index algorithms. Table 3.1 compares the above four Query Plans to calculate SUM, in terms of I/O and factors contributing to CPU. Method I/O CPU contributions Projection index f . 100K I/O + kM . (10 ins) Method I/O CPU contributions Value-List index 103K I/O + 100M . (10 ins) Add from Rows 1,341K I/O + 2M*(25 ins) Bit-Sliced index f . 69K I/O + f .197M . (1 ins) Projection index 100K I/O + 2M *(10 ins) Value-List index 103K I/O + 100M *(10 ins) Table 3.3. Costs of four plans, I/O and CPU factors, with Bit-Sliced index 69K I/O + 197M *(1 ins) kM rows and clustering fraction f Clearly there is a relationship between k and f in Table 3.3, since Table 3.1. I/O and CPU factors for the four plans for k = 100, 100M rows sit on a fraction f = 1.0 of the table, we must have k ≤ f.100. Also, if f becomes very small compared to We can compare the four query plans in terms of dollar cost by k/100, we will no longer pick up every page in the Projection converting I/O and CPU costs to dollar amounts, as in [GP87]. or Bit-Sliced index. In what follows, we assume that f is suffi- In 1997, a 2 GB hard disk with a 10 ms access time costs ciently large that the I/O approximations in Table 3.3 are valid. roughly $600. With the I/O rate we have been assuming, this is approximately $6.00 per I/O per second. A 200 MHz Pentium The dollar cost of I/O continues to dominate total dollar cost of computer, which processes approximately 150 MIPS (million in- the plans when each plan is submitted once every 1000 seconds. structions per second), costs roughly $1800, or approximately For the Projection index, the I/O cost is f.$600. The CPU cost, $12.00 per MIPS. If we assume that each of the plans above is -6-

7 .assuming that I/O requires 10K instructions is: To calculate MEDIAN(C) with C a keyvalue in a Value-List in- ((f.100 .10,000+k .1000 .10)/1,000,000).$12. Since k ≤ f.100, the dex, one loops through the non-null values of C in decreasing formula f.100.10,000 + k.1000.10 ≤ f.100.10,000 + f.100.1000.10 (or increasing) order, keeping a count of rows encountered, until = f.2,000,000. Thus, the total CPU cost is bounded above by for the first time with some value v the number of rows encoun- f.$24, which is still cheap compared to an I/O cost of f.$600. Yet tered so far is greater than COUNT(Bf AND Bnn )/2. Then v is this is the highest cost we assume for CPU due to I/O, which is the MEDIAN. Projection indexes are not useful for evaluating the dominant CPU term. In Table 3.4, we give the maximum dol- MEDIAN, unless the number of rows in the Foundset is very lar cost for each index approach. small, since all values have to be extracted and sorted. Surprisingly, a Bit-Sliced index can also be used to determine Method $Cost for 10K the MEDIAN, in about the same amount of time as it takes to de- ins per I/O termine SUM (see [O'NQUA]). Projection index f.$624 The N-TILE aggregate function finds values v1 , v2 , . . ., vN-1 , Value-List index $642 which partition the rows in Bf into N sets of (approximately) Bit-Sliced index f.$425 equal size based on the interval in which their C value falls: C <= v1, v1 < C <= v2, . . ., vN-1 < C. MEDIAN equals 2-TILE. Table 3.4. Costs of the four plans in dollars, with kM rows and clustering fraction f An example of a COLUMN-PRODUCT aggregate function is one which involves the product of different columns. In the The clustered case clearly affects the plans by making the TPC-D benchmark, the LINEITEM table has columns Projection and Bit-Sliced indexes more efficient compared to the L_EXTENDEDPRICE and L_DISCOUNT. A large number of Value-List index. queries in TPC-D retrieve the aggregate: SUM(L_EXTENDEDPRICE*(1-L_DISCOUNT)), usually with 3.2 Evaluating Other Column Aggregate Functions the column alias "REVENUE". The most efficient method for cal- culating Column-Product Aggregates uses Projection indexes We consider aggregate functions of the form in [3.2], where for the columns involved. It is possible to calculate products of AGG is an aggregate function, such as COUNT, MAX, MIN, etc. columns using Value-List or Bit-Sliced indexes, with the sort of algorithm that was used for SUM, but in both cases, Foundsets [3.2] SELECT AGG(C) FROM T WHERE condition; of all possible cross-terms of values must be formed and counted, so the algorithm are terribly inefficient. Table 3.5 lists a group of aggregate functions and the index types to evaluate these functions. We enter the value "Best" in a 4. Evaluating Range Predicates cell if the given index type is the most efficient one to have for this aggregation, "Slow" if the index type works but not very ef- Consider a Select statement of the following form: ficiently, etc. Note that Table 3.5 demonstrates how different in- dex types are optimal for different aggregate situations. [4.1] SELECT target-list FROM T WHERE C-range AND <condition>; Aggregate Value-List Projection Bit-Sliced Index Index Index Here, C is a column of T, and <condition> is a general search- COUNT Not needed Not needed Not needed condition resulting in a Foundset Bf. The C-range represents a SUM Not bad Good Best range predicate, {C > c1, C >= c1, C = c1, C >= c1, C > c1, C be- AVG ( SUM/COUNT) Not bad Good Best tween c1 and c2}, where c1 and c2 are constant values. We will MAX and MIN Best Slow Slow demonstrate below how to further restrict the Foundset Bf, creat- MEDIAN, N-TILE Usually Not Useful Sometimes ing a new Foundset BF, so that the compound predicate "C-range Best Best 2 AND <condition>" holds for exactly those rows contained in Column-Product Very Slow Best Very Slow B F. We do this with varying assumptions regarding index types on the column C. Table 3.5. Tabulation of Performance by Index Type for Evaluating Aggregate Functions Evaluating the Range using a Projection Index. If there is a Projection index on C, we can create BF by accessing each C The COUNT and SUM aggregates have already been covered. value in the index corresponding to a row number in Bf and test- COUNT requires no index, and AVG can be evaluated as ing whether it lies within the specified range. SUM/COUNT, with performance determined by SUM. Evaluating the Range using a Value-List Index. With a The MAX and MIN aggregate functions are best evaluated with Value-List index, evaluation the C-range restriction of [4.1] uses a Value-List index. To determine MAX for a Foundset Bf, one an algorithm common in most database products, looping loops from the largest value in the Value-List index down to the through the index entries for the range of values. We vary smallest, until finding a row in Bf. To find MAX and MIN using slightly by accumulating a Bitmap Br as an OR of all row sets in a Projection index, one must loop through all values stored. the index for values that lie in the specified range, then AND The algorithm to evaluate MAX or MIN using a Bit-Sliced index this result with Bf to get BF. See Algorithm 4.1. is given in our extended paper, [O'NQUA], together with other algorithms not detailed in this Section. Note that for Algorithm 4.1 to be efficiently performed, we must find some way to guarantee that the Bitmap Br remains in memory 2Best only if there is a clustering of rows in B in a local at all times as we loop through the values v in the range. This region, a fraction f of the pages, f ≤ 0.755. requires some forethought in the Query Optimizer if the table T -7-

8 .being queried is large: 100 million rows will mean that a to test each value and, if the row passes the range test, to turn on Bitmap Br of 12.5 MBytes must be kept resident. the appropriate bit in a Foundset. Algorithm 4.1. Range Predicate with a Value-List Index As we have just seen, it is possible to determine the Foundset of Br = the empty set rows in a range using Bit-Sliced indexes. We can calculate the For each entry v in the index for C that satisfies the range predicate c2 >= C >= c1 using a Bit-Sliced index by calcu- range specified lating BGE for c1 and BLE for c2, then ANDing the two. Once Designate the set of rows with the value v as Bv again the calculation is generally comparable in cost to calculat- Br = Br OR Bv ing a SUM aggregate, as seen in Fig. 3.2. BF = Bf AND Br u With a Value-List index, algorithmic effort is proportional to the width of the range, and for a wide range, it is comparable to the Evaluating the Range using a Bit-Sliced Index. Rather sur- effort needed to perform SUM for a large Foundset. Thus for wide prisingly, it is possible to evaluate range predicates efficiently ranges the Projection and Bit-Sliced indexes have a performance using a Bit-Sliced index. Given a Foundset Bf, we demonstrate advantage. For short ranges the work to perform the Projection and Bit-Sliced algorithms remain nearly the same (assuming the in Algorithm 4.2 how to evaluate the set of rows BGT such that range variable is not a clustering value), while the work to per- C > c1, BGE such that C >= c1, BEQ such that C = c1, BLE such form the Value-List algorithm is proportional to the number of that C <= c1, BLT such that C < c1. rows found in the range. Eventually as the width of the range decreases the Value-List algorithm is the better choice. These In use, we can drop Bitmap calculations in Algorithm 4.2 that considerations are summarized in Table 4.1. do not evaluate the condition we seek. If we only need to eval- uate C >= c1, we don't need steps that evaluate BLE or BLT. Range Evaluation Value-List Projection Bit-Sliced Index Index Index Algorithm 4.2. Range Predicate with a Bit-Sliced Index Narrow Range Best Good Good BGT = BLT = the empty set; BEQ = Bnn Wide Range Not bad Good Best For each Bit-Slice Bi for C in decreasing significance If bit i is on in constant c1 BLT = BLT OR (BEQ AND NOT(Bi)) Table 4.1. Range Evaluation Performance by Index Type BEQ = BEQ AND Bi 4.2 Range Predicate with a Non-Binary Bit-Sliced Index else BGT = BGT OR (BEQ AND Bi) Sybase IQ was the first product to demonstrate in practice that BEQ = BEQ AND NOT(Bi) the same Bit-Sliced index, called the "High NonGroup Index" BEQ = BEQ AND Bf; [EDEL95], could be used both for evaluating range predicates BGT = BGT AND Bf; BLT = BLT AND Bf (Algorithm 4.2) and performing Aggregates (Algorithm 3.2, et BLE = BLT OR BEQ; BGE = BGT OR BEQ al). For many years, MODEL 204 has used a form of indexing to u evaluate range predicates, known as "Numeric Range" [M204]. Numeric Range evaluation is similar to Bit-Sliced Algorithm Proof that BEQ BGT and BGE are properly evaluated. The 4.2, except that numeric quantities are expressed in a larger base method to evaluate BEQ clearly determines all rows with C = c1, (base 10). It turns out that the effort of performing a range re- since it requires that all 1-bits on in c1 be on and all 0-bits 0 in trieval can be reduced if we are willing to store a larger number c1 be off for all rows in BEQ. Next, note that BGT is the OR of a of Bitmaps. In [O'NQUA] we show how Bit-Sliced Algorithm 4.2 can be generalized to base 8, where the Bit-Slices represent set of Bitmaps with certain conditions, which we now describe. sets of rows with octal digit Oi ≥ c, c a non-zero octal digit. Assume that the bit representation of c1 is bN b N-1 . . .b1 b 0 , and This is a generalization of Binary Bit-Slices, which represent sets of rows with binary digit Bi ≥ 1. that the bit representation of C for some row r in the database is rNrN-1. . .r1r0. For each bit position i from 0 to N with bit bi off in 5. Evaluating OLAP-style Queries c1, a row r will be in BGT if bit ri is on and bits rNrN-1. . .r1ri+1 are all equal to bits bN b N-1 . . .bi+1 . It is clear that C > c1 for any Figure 5.1 pictures a star-join schema with a central fact table, such row r in BGT . Furthermore for any value of C > c1, there SALES, containing sales data, together with dimension tables must be some bit position i such that the i-th bit position in c1 known as TIME (when the sales are made), PRODUCT (product is off, the i-th bit position of C is on, and all more-significant sold), and CUSTOMER (purchaser in the sale). Most OLAP bits in the two values are identical. Therefore, Algorithm 4.2 products do not express their queries in SQL, but much of the properly evaluates BGT. u work of typical OLAP queries could be represented in SQL [GBLP96] (although more than one query might be needed). 4.1 Comparing Algorithm Performance [5.1] SELECT P.brand, T.week, C.city, SUM(S.dollar_sales) Now we compare performance of these algorithms to evaluate a FROM SALES S, PRODUCT P, CUSTOMER C, TIME T range predicate, "C between c1 and c2". We assume that C val- WHERE S.day = T.day and S.cid = C.cid ues are not clustered on disk. The cost of evaluating a range and S.pid = P.pid and P.brand = :brandvar predicate using a Projection index is similar to evaluating SUM and T.week >= :datevar and C.state in using a Projection index, as seen in Fig. 3.2. We need the I/O to ('Maine', 'New Hampshire', 'Vermont', access each of the index pages with C values plus the CPU cost 'Massachusetts', 'Connecticut', 'Rhode Island') GROUP BY P.brand, T.week, C.city; -8-

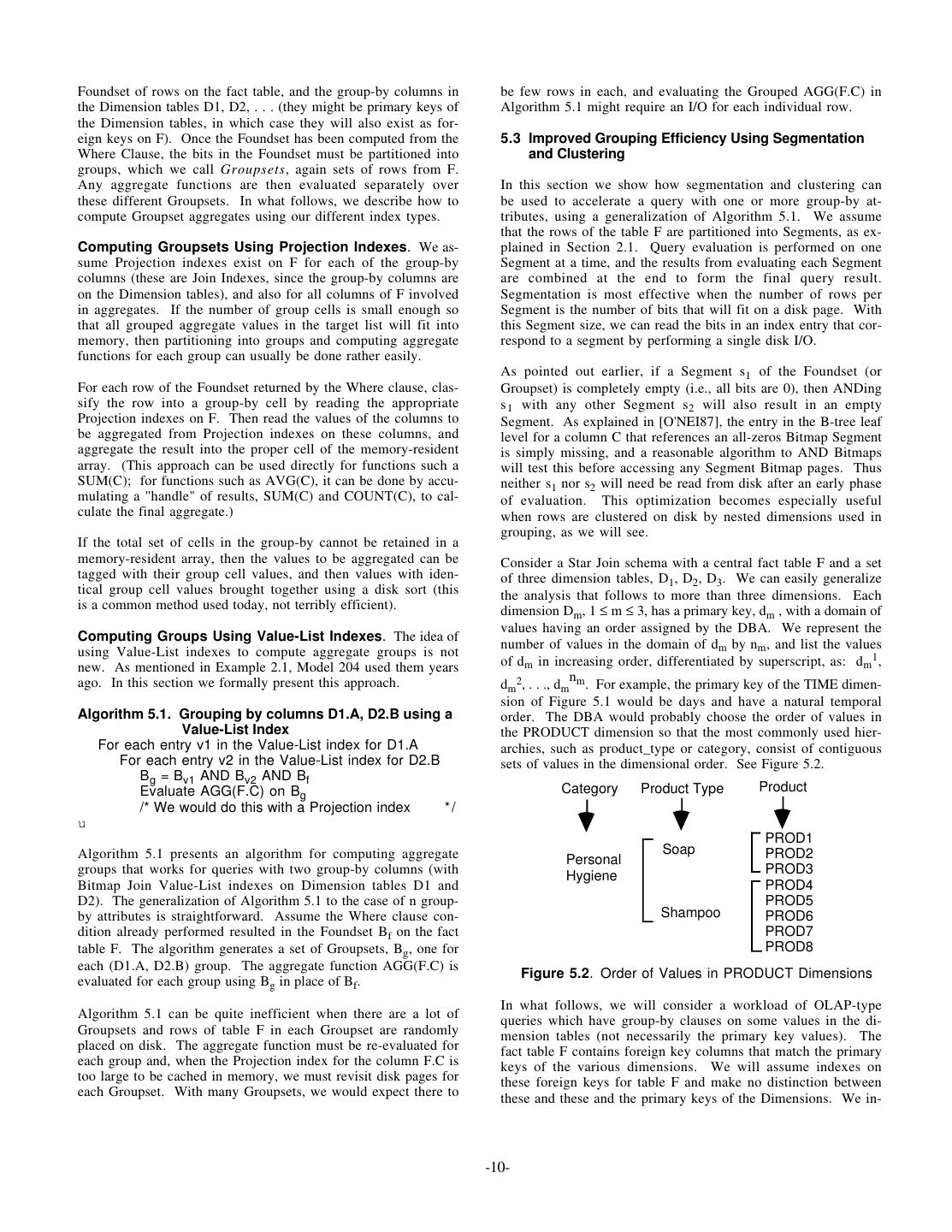

9 .Query [5.1] retrieves total dollar sales that were made for a prod- of data in the summary tables grows as the product of the number uct brand during the past 4 weeks to customers in New of values in the independent dimensions (counting values of hi- England. erarchies within each dimension), it soon becomes impossible to provide dimensions for all possible restrictions. The goal of this section is to describe and analyze a variant indexing ap- CUS TO ME R P RO DUCT proach that is useful for evaluating OLAP-style queries Dime ns ion Dime ns ion quickly, even when the queries cannot make use of preaggrega- tion. To begin, we need to explain Join indexes. cid pid gender SKU 5.1 Join Indexes city S ALE S Fa c t brand Definition 5.1. Join Index. A Join index is an index on one state size table for a quantity that involves a column value of a different cid weight table through a commonly encountered join u zip pid package_type hobby day Join indexes can be used to avoid actual joins of tables, or to dollar_sales greatly reduce the volume of data that must be joined, by per- TI ME dollar_cost forming restrictions in advance. For example, the Star Join index Dime ns io — invented a number of years ago — concatenates ordinal en- unit_sales codings of column values from different dimension tables of a n Star schema, and lists RIDs in the central fact table for each con- day catenated value. The Star Join index was the best approach week known in its day, but there is a problem with it, comparable to the problem with summary tables. If there are numerous columns month used for restrictions in each dimension table, then the number of year Star Join indexes needed to be able to combine arbitrary column holiday_flg restrictions from each dimension table is a product of the number weekday_flg of columns in each dimension. Thus, there will be a "combinato- rial explosion" of Join Indexes in terms of the number of inde- pendent columns. Figure 5.1. Star Join Schema of SALES, CUSTOMER, PRODUCT, and TIME The Bitmap join index, defined in [O'NGG95], addresses this problem. In its simplest form, this is an index on a table T based An important advantage of OLAP products is evaluating such on a single column of a table S, where S commonly joins with T queries quickly, even though the fact tables are usually very in a specified way. For example, in the TPC-D benchmark large. The OLAP approach precalculates results of some database, the O_ORDERDATE column belongs to the ORDER Grouped queries and stores them in what we have been calling table, but two TPC-D queries need to join ORDER with summary tables. For example, we might create a summary table LINEITEM to restrict LINEITEM rows to a range of where sums of Sales.dollar_sales and sums of Sales.unit_sales O_ORDERDATE. This can better be accomplished by creating are precalculated for all combination of values at the lowest an index for the value ORDERDATE on the LINEITEM table. level of granularity for the dimensions, e.g., for C.cid values, This doesn't change the design of the LINEITEM table, since T.day values, and P.pid values. Within each dimension there are the index on ORDERDATE is for a virtual column through a also hierarchies sitting above the lowest level of granularity. A join. The number of indexes of this kind increases linearly with week has 7 days and a year has 52 weeks, and so on. Similarly, a the number of useful columns in all dimension tables. We de- customer exists in a geographic hierarchy of city and state. pend on the speed of combining Bitmapped indexes to create ad- When we precalculate a summary table at the lowest dimen- hoc combinations, and thus the explosion of Star Join indexes sional level, there might be many rows of detail data associated because of different combinations of dimension columns is not a with a particular cid, day, and pid (a busy product reseller cus- problem. Another way of looking at this is that Bitmap join in- tomer), or there might be none. A summary table, at the lowest dexes are Recombinant, whereas Star join indexes are not. level of granularity, will usually save a lot of work, compared to detailed data, for queries that group by attributes at higher lev- The variant indexes of the current paper lead to an important els of the dimensional hierarchy, such as city (of customers), point, that Join indexes can be of any type: Projection, Value- week, and brand. We would typically create many summary ta- List, or Bit-Sliced. To speed up Query [5.1], we use Join in- bles, combining various levels of the dimensional hierarchies. dexes on the SALES fact table for columns in the dimensions. If The higher the dimensional levels, the fewer elements in the appropriate join indexes exist for all dimension table columns summary table, but there are a lot of possible combinations of hi- mentioned in the queries, then explicit joins with dimension ta- erarchies. Luckily, we don't need to create all possible summary bles may no longer be necessary at all. Using Value-List or Bit- tables in order to speed up the queries a great deal. For more de- Sliced join indexes we can evaluate the selection conditions in tails, see [STG95, HRU96]. the Where Clause to arrive at a Foundset on SALES, and using Projection join indexes we can then retrieve the dimensional By doing the aggregation work beforehand, summary tables pro- values for the Query [5.1] target-list, without any join needed. vide quick response to queries, so long as all selection condi- tions are restrictions on dimensions that have been foreseen in 5.2 Calculating Groupset Aggregates advance. But, as we pointed out in Example 1.1, if some restric- tions are non-dimensional, such as temperature, then summary We assume that in star-join queries like [5.1], an aggregation is tables sliced by dimensions will be useless. And since the size performed on columns of the central fact table, F. There is a -9-

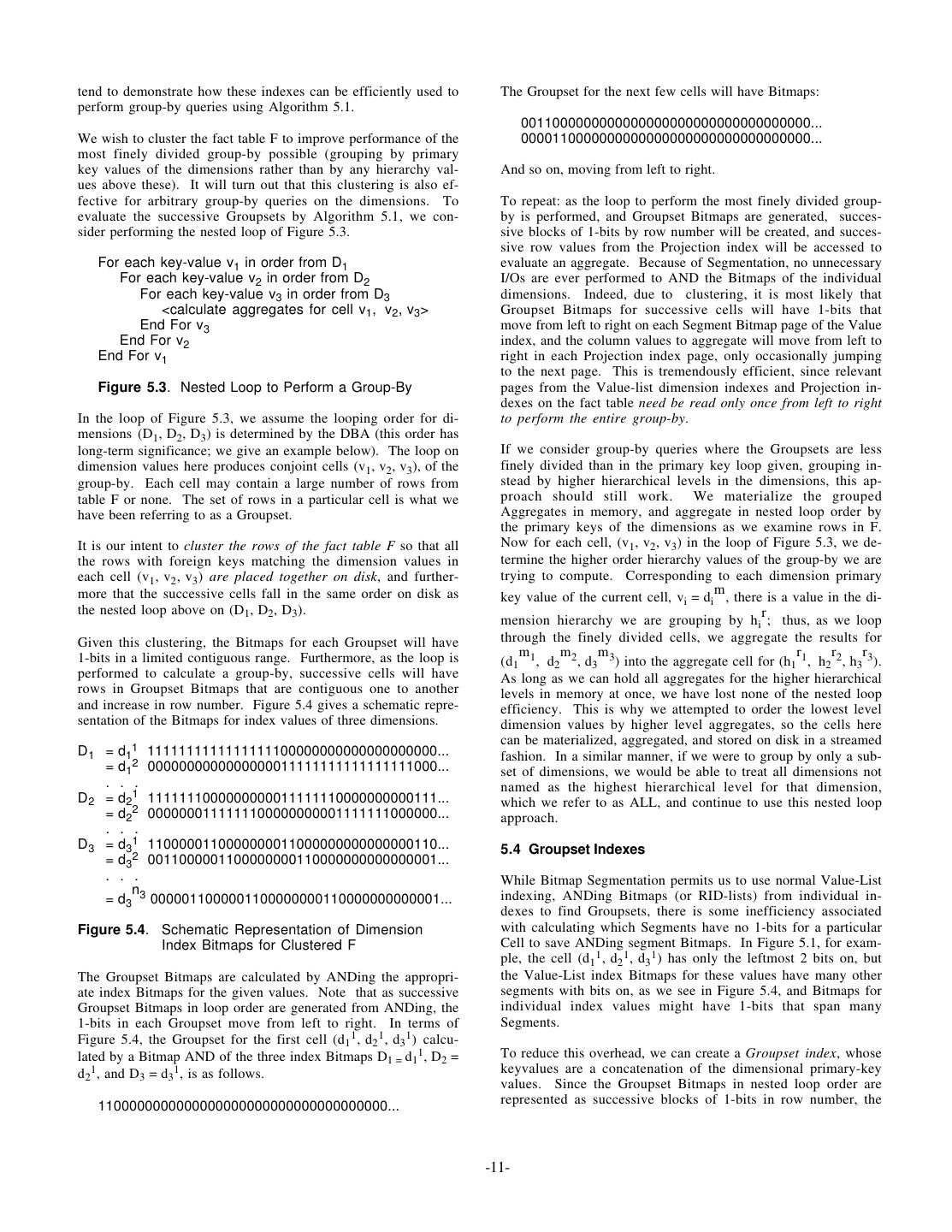

10 .Foundset of rows on the fact table, and the group-by columns in be few rows in each, and evaluating the Grouped AGG(F.C) in the Dimension tables D1, D2, . . . (they might be primary keys of Algorithm 5.1 might require an I/O for each individual row. the Dimension tables, in which case they will also exist as for- eign keys on F). Once the Foundset has been computed from the 5.3 Improved Grouping Efficiency Using Segmentation Where Clause, the bits in the Foundset must be partitioned into and Clustering groups, which we call Groupsets, again sets of rows from F. Any aggregate functions are then evaluated separately over In this section we show how segmentation and clustering can these different Groupsets. In what follows, we describe how to be used to accelerate a query with one or more group-by at- compute Groupset aggregates using our different index types. tributes, using a generalization of Algorithm 5.1. We assume that the rows of the table F are partitioned into Segments, as ex- Computing Groupsets Using Projection Indexes. We as- plained in Section 2.1. Query evaluation is performed on one sume Projection indexes exist on F for each of the group-by Segment at a time, and the results from evaluating each Segment columns (these are Join Indexes, since the group-by columns are are combined at the end to form the final query result. on the Dimension tables), and also for all columns of F involved Segmentation is most effective when the number of rows per in aggregates. If the number of group cells is small enough so Segment is the number of bits that will fit on a disk page. With that all grouped aggregate values in the target list will fit into this Segment size, we can read the bits in an index entry that cor- memory, then partitioning into groups and computing aggregate respond to a segment by performing a single disk I/O. functions for each group can usually be done rather easily. As pointed out earlier, if a Segment s1 of the Foundset (or For each row of the Foundset returned by the Where clause, clas- Groupset) is completely empty (i.e., all bits are 0), then ANDing sify the row into a group-by cell by reading the appropriate s 1 with any other Segment s2 will also result in an empty Projection indexes on F. Then read the values of the columns to Segment. As explained in [O'NEI87], the entry in the B-tree leaf be aggregated from Projection indexes on these columns, and level for a column C that references an all-zeros Bitmap Segment aggregate the result into the proper cell of the memory-resident is simply missing, and a reasonable algorithm to AND Bitmaps array. (This approach can be used directly for functions such a will test this before accessing any Segment Bitmap pages. Thus SUM(C); for functions such as AVG(C), it can be done by accu- neither s1 nor s2 will need be read from disk after an early phase mulating a "handle" of results, SUM(C) and COUNT(C), to cal- of evaluation. This optimization becomes especially useful culate the final aggregate.) when rows are clustered on disk by nested dimensions used in grouping, as we will see. If the total set of cells in the group-by cannot be retained in a memory-resident array, then the values to be aggregated can be Consider a Star Join schema with a central fact table F and a set tagged with their group cell values, and then values with iden- of three dimension tables, D1, D2, D3. We can easily generalize tical group cell values brought together using a disk sort (this the analysis that follows to more than three dimensions. Each is a common method used today, not terribly efficient). dimension Dm, 1 ≤ m ≤ 3, has a primary key, dm , with a domain of values having an order assigned by the DBA. We represent the Computing Groups Using Value-List Indexes. The idea of number of values in the domain of dm by nm , and list the values using Value-List indexes to compute aggregate groups is not new. As mentioned in Example 2.1, Model 204 used them years of dm in increasing order, differentiated by superscript, as: dm 1, n ago. In this section we formally present this approach. dm2, . . ., dm m. For example, the primary key of the TIME dimen- sion of Figure 5.1 would be days and have a natural temporal Algorithm 5.1. Grouping by columns D1.A, D2.B using a order. The DBA would probably choose the order of values in Value-List Index the PRODUCT dimension so that the most commonly used hier- For each entry v1 in the Value-List index for D1.A archies, such as product_type or category, consist of contiguous For each entry v2 in the Value-List index for D2.B sets of values in the dimensional order. See Figure 5.2. B g = Bv1 AND Bv2 AND Bf Evaluate AGG(F.C) on Bg Category Product Type Product /* We would do this with a Projection index */ u PROD1 Algorithm 5.1 presents an algorithm for computing aggregate Soap PROD2 Personal groups that works for queries with two group-by columns (with Hygiene PROD3 Bitmap Join Value-List indexes on Dimension tables D1 and PROD4 D2). The generalization of Algorithm 5.1 to the case of n group- PROD5 by attributes is straightforward. Assume the Where clause con- Shampoo PROD6 dition already performed resulted in the Foundset Bf on the fact PROD7 table F. The algorithm generates a set of Groupsets, Bg, one for PROD8 each (D1.A, D2.B) group. The aggregate function AGG(F.C) is Figure 5.2. Order of Values in PRODUCT Dimensions evaluated for each group using Bg in place of Bf. In what follows, we will consider a workload of OLAP-type Algorithm 5.1 can be quite inefficient when there are a lot of queries which have group-by clauses on some values in the di- Groupsets and rows of table F in each Groupset are randomly mension tables (not necessarily the primary key values). The placed on disk. The aggregate function must be re-evaluated for fact table F contains foreign key columns that match the primary each group and, when the Projection index for the column F.C is keys of the various dimensions. We will assume indexes on too large to be cached in memory, we must revisit disk pages for these foreign keys for table F and make no distinction between each Groupset. With many Groupsets, we would expect there to these and these and the primary keys of the Dimensions. We in- -10-

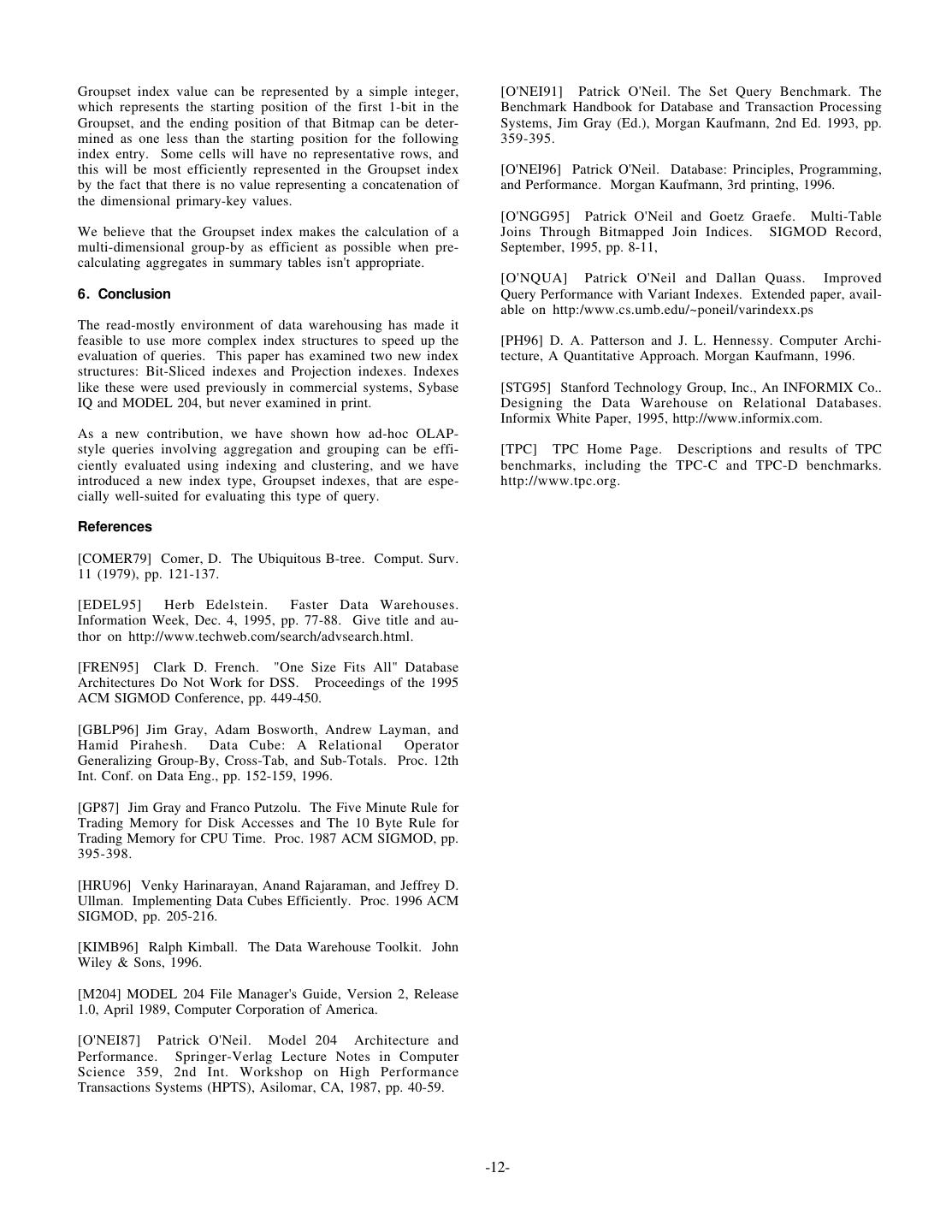

11 .tend to demonstrate how these indexes can be efficiently used to The Groupset for the next few cells will have Bitmaps: perform group-by queries using Algorithm 5.1. 0011000000000000000000000000000000000... We wish to cluster the fact table F to improve performance of the 0000110000000000000000000000000000000... most finely divided group-by possible (grouping by primary key values of the dimensions rather than by any hierarchy val- And so on, moving from left to right. ues above these). It will turn out that this clustering is also ef- fective for arbitrary group-by queries on the dimensions. To To repeat: as the loop to perform the most finely divided group- evaluate the successive Groupsets by Algorithm 5.1, we con- by is performed, and Groupset Bitmaps are generated, succes- sider performing the nested loop of Figure 5.3. sive blocks of 1-bits by row number will be created, and succes- sive row values from the Projection index will be accessed to For each key-value v1 in order from D1 evaluate an aggregate. Because of Segmentation, no unnecessary For each key-value v2 in order from D2 I/Os are ever performed to AND the Bitmaps of the individual For each key-value v3 in order from D3 dimensions. Indeed, due to clustering, it is most likely that <calculate aggregates for cell v1, v2, v3> Groupset Bitmaps for successive cells will have 1-bits that End For v3 move from left to right on each Segment Bitmap page of the Value End For v2 index, and the column values to aggregate will move from left to End For v1 right in each Projection index page, only occasionally jumping to the next page. This is tremendously efficient, since relevant Figure 5.3. Nested Loop to Perform a Group-By pages from the Value-list dimension indexes and Projection in- dexes on the fact table need be read only once from left to right In the loop of Figure 5.3, we assume the looping order for di- to perform the entire group-by. mensions (D1, D2, D3) is determined by the DBA (this order has long-term significance; we give an example below). The loop on If we consider group-by queries where the Groupsets are less dimension values here produces conjoint cells (v1, v2, v3), of the finely divided than in the primary key loop given, grouping in- group-by. Each cell may contain a large number of rows from stead by higher hierarchical levels in the dimensions, this ap- table F or none. The set of rows in a particular cell is what we proach should still work. We materialize the grouped have been referring to as a Groupset. Aggregates in memory, and aggregate in nested loop order by the primary keys of the dimensions as we examine rows in F. It is our intent to cluster the rows of the fact table F so that all Now for each cell, (v1 , v2 , v3 ) in the loop of Figure 5.3, we de- the rows with foreign keys matching the dimension values in termine the higher order hierarchy values of the group-by we are each cell (v1 , v2 , v3 ) are placed together on disk, and further- trying to compute. Corresponding to each dimension primary more that the successive cells fall in the same order on disk as m key value of the current cell, vi = di , there is a value in the di- the nested loop above on (D1, D2, D3). r mension hierarchy we are grouping by hi ; thus, as we loop Given this clustering, the Bitmaps for each Groupset will have through the finely divided cells, we aggregate the results for 1-bits in a limited contiguous range. Furthermore, as the loop is m m m r r r (d1 1, d2 2, d3 3) into the aggregate cell for (h1 1, h2 2, h3 3). performed to calculate a group-by, successive cells will have As long as we can hold all aggregates for the higher hierarchical rows in Groupset Bitmaps that are contiguous one to another levels in memory at once, we have lost none of the nested loop and increase in row number. Figure 5.4 gives a schematic repre- efficiency. This is why we attempted to order the lowest level sentation of the Bitmaps for index values of three dimensions. dimension values by higher level aggregates, so the cells here can be materialized, aggregated, and stored on disk in a streamed D 1 = d11 1111111111111111100000000000000000000... fashion. In a similar manner, if we were to group by only a sub- = d12 0000000000000000011111111111111111000... set of dimensions, we would be able to treat all dimensions not . . . named as the highest hierarchical level for that dimension, D 2 = d21 1111111000000000011111110000000000111... which we refer to as ALL, and continue to use this nested loop = d22 0000000111111100000000001111111000000... approach. . . . D 3 = d31 1100000110000000011000000000000000110... 5.4 Groupset Indexes = d32 0011000001100000000110000000000000001... . . . While Bitmap Segmentation permits us to use normal Value-List n indexing, ANDing Bitmaps (or RID-lists) from individual in- = d3 3 0000011000001100000000110000000000001... dexes to find Groupsets, there is some inefficiency associated Figure 5.4. Schematic Representation of Dimension with calculating which Segments have no 1-bits for a particular Index Bitmaps for Clustered F Cell to save ANDing segment Bitmaps. In Figure 5.1, for exam- ple, the cell (d1 1 , d2 1 , d3 1 ) has only the leftmost 2 bits on, but The Groupset Bitmaps are calculated by ANDing the appropri- the Value-List index Bitmaps for these values have many other ate index Bitmaps for the given values. Note that as successive segments with bits on, as we see in Figure 5.4, and Bitmaps for Groupset Bitmaps in loop order are generated from ANDing, the individual index values might have 1-bits that span many 1-bits in each Groupset move from left to right. In terms of Segments. Figure 5.4, the Groupset for the first cell (d1 1 , d2 1 , d3 1 ) calcu- lated by a Bitmap AND of the three index Bitmaps D1 = d11, D2 = To reduce this overhead, we can create a Groupset index, whose d21, and D3 = d31, is as follows. keyvalues are a concatenation of the dimensional primary-key values. Since the Groupset Bitmaps in nested loop order are 1100000000000000000000000000000000000... represented as successive blocks of 1-bits in row number, the -11-

12 .Groupset index value can be represented by a simple integer, [O'NEI91] Patrick O'Neil. The Set Query Benchmark. The which represents the starting position of the first 1-bit in the Benchmark Handbook for Database and Transaction Processing Groupset, and the ending position of that Bitmap can be deter- Systems, Jim Gray (Ed.), Morgan Kaufmann, 2nd Ed. 1993, pp. mined as one less than the starting position for the following 359-395. index entry. Some cells will have no representative rows, and this will be most efficiently represented in the Groupset index [O'NEI96] Patrick O'Neil. Database: Principles, Programming, by the fact that there is no value representing a concatenation of and Performance. Morgan Kaufmann, 3rd printing, 1996. the dimensional primary-key values. [O'NGG95] Patrick O'Neil and Goetz Graefe. Multi-Table We believe that the Groupset index makes the calculation of a Joins Through Bitmapped Join Indices. SIGMOD Record, multi-dimensional group-by as efficient as possible when pre- September, 1995, pp. 8-11, calculating aggregates in summary tables isn't appropriate. [O'NQUA] Patrick O'Neil and Dallan Quass. Improved 6. Conclusion Query Performance with Variant Indexes. Extended paper, avail- able on http:/www.cs.umb.edu/~poneil/varindexx.ps The read-mostly environment of data warehousing has made it feasible to use more complex index structures to speed up the [PH96] D. A. Patterson and J. L. Hennessy. Computer Archi- evaluation of queries. This paper has examined two new index tecture, A Quantitative Approach. Morgan Kaufmann, 1996. structures: Bit-Sliced indexes and Projection indexes. Indexes like these were used previously in commercial systems, Sybase [STG95] Stanford Technology Group, Inc., An INFORMIX Co.. IQ and MODEL 204, but never examined in print. Designing the Data Warehouse on Relational Databases. Informix White Paper, 1995, http://www.informix.com. As a new contribution, we have shown how ad-hoc OLAP- style queries involving aggregation and grouping can be effi- [TPC] TPC Home Page. Descriptions and results of TPC ciently evaluated using indexing and clustering, and we have benchmarks, including the TPC-C and TPC-D benchmarks. introduced a new index type, Groupset indexes, that are espe- http://www.tpc.org. cially well-suited for evaluating this type of query. References [COMER79] Comer, D. The Ubiquitous B-tree. Comput. Surv. 11 (1979), pp. 121-137. [EDEL95] Herb Edelstein. Faster Data Warehouses. Information Week, Dec. 4, 1995, pp. 77-88. Give title and au- thor on http://www.techweb.com/search/advsearch.html. [FREN95] Clark D. French. "One Size Fits All" Database Architectures Do Not Work for DSS. Proceedings of the 1995 ACM SIGMOD Conference, pp. 449-450. [GBLP96] Jim Gray, Adam Bosworth, Andrew Layman, and Hamid Pirahesh. Data Cube: A Relational Operator Generalizing Group-By, Cross-Tab, and Sub-Totals. Proc. 12th Int. Conf. on Data Eng., pp. 152-159, 1996. [GP87] Jim Gray and Franco Putzolu. The Five Minute Rule for Trading Memory for Disk Accesses and The 10 Byte Rule for Trading Memory for CPU Time. Proc. 1987 ACM SIGMOD, pp. 395-398. [HRU96] Venky Harinarayan, Anand Rajaraman, and Jeffrey D. Ullman. Implementing Data Cubes Efficiently. Proc. 1996 ACM SIGMOD, pp. 205-216. [KIMB96] Ralph Kimball. The Data Warehouse Toolkit. John Wiley & Sons, 1996. [M204] MODEL 204 File Manager's Guide, Version 2, Release 1.0, April 1989, Computer Corporation of America. [O'NEI87] Patrick O'Neil. Model 204 Architecture and Performance. Springer-Verlag Lecture Notes in Computer Science 359, 2nd Int. Workshop on High Performance Transactions Systems (HPTS), Asilomar, CA, 1987, pp. 40-59. -12-