- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Concurrency

展开查看详情

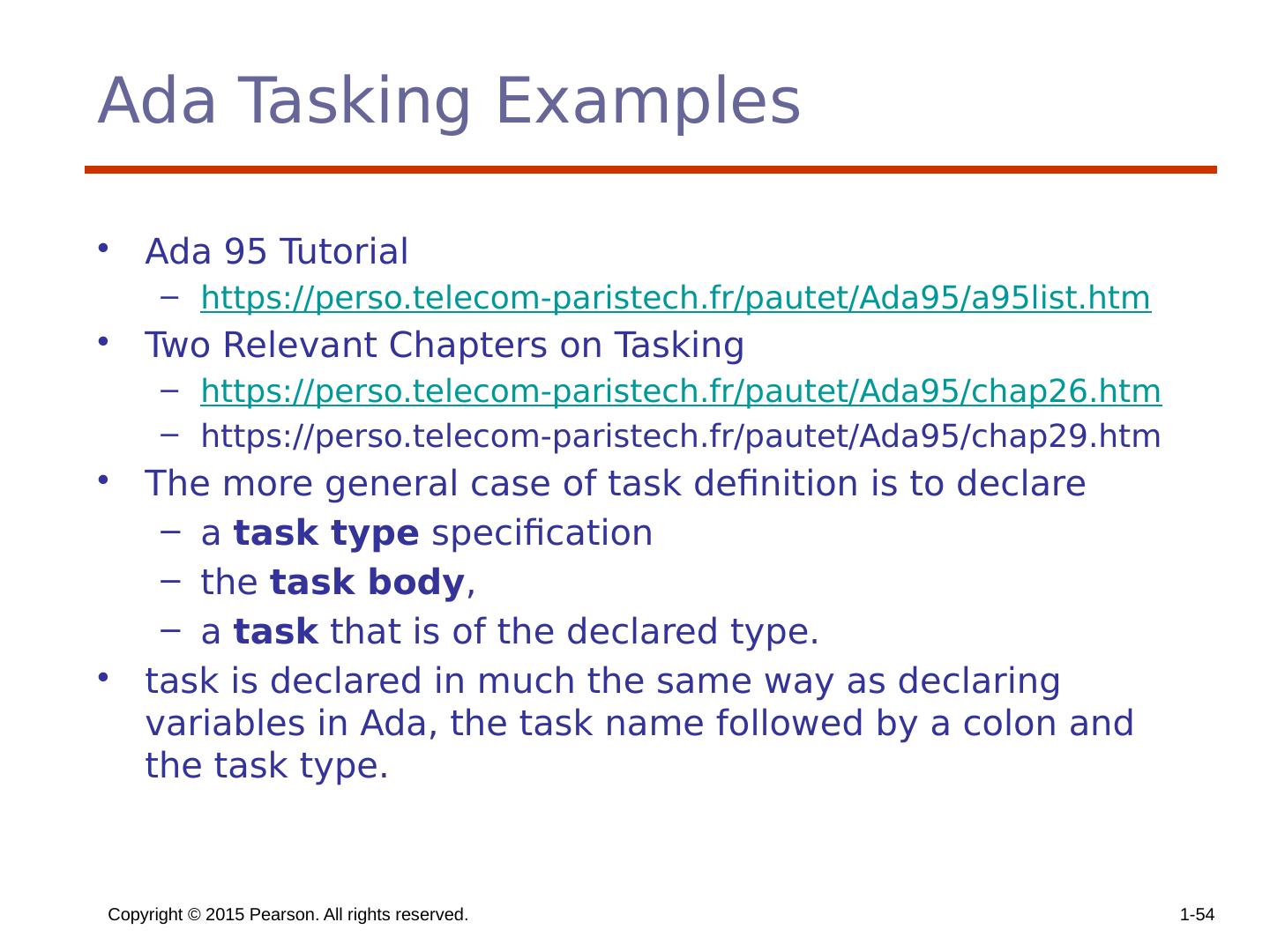

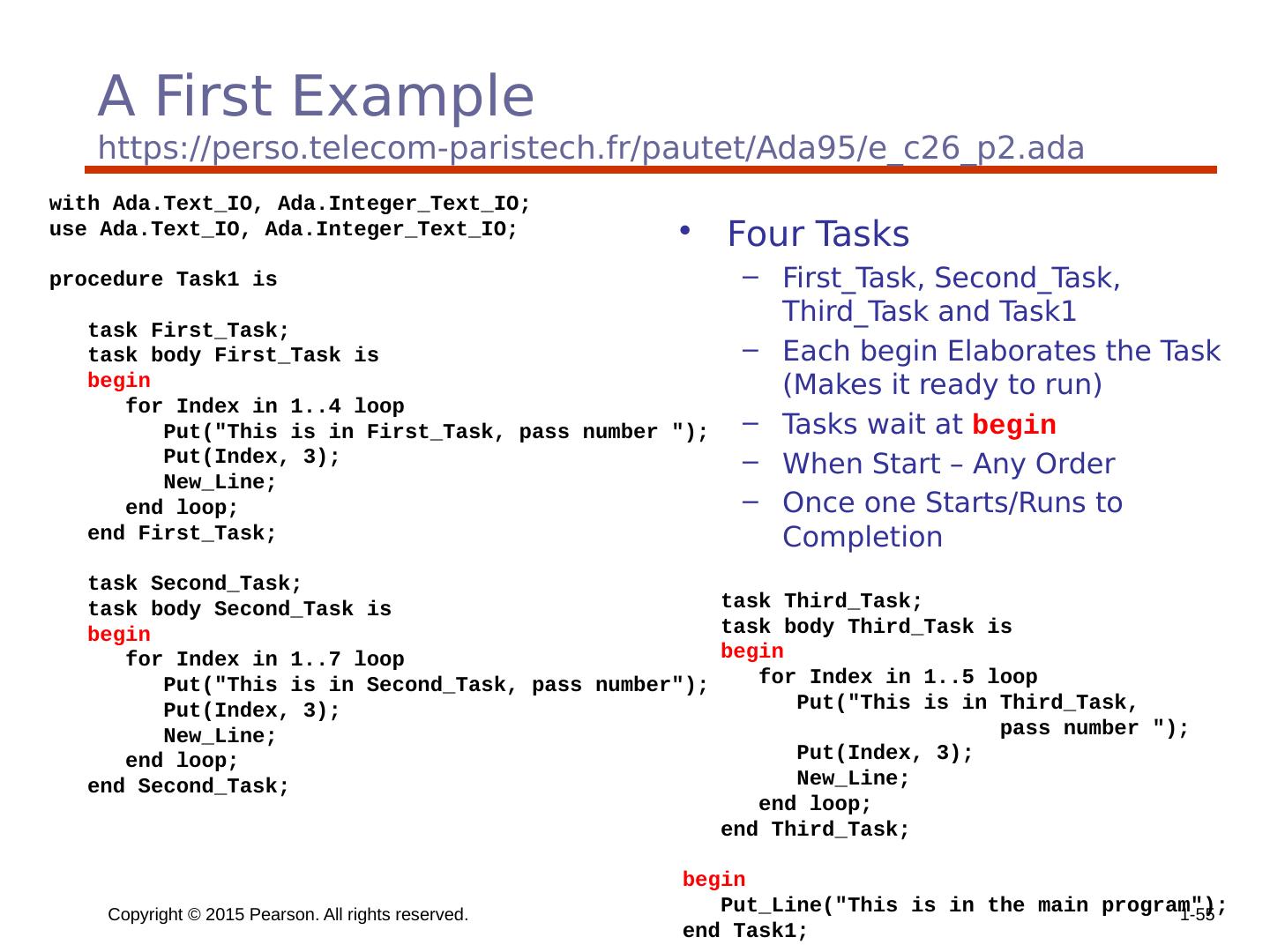

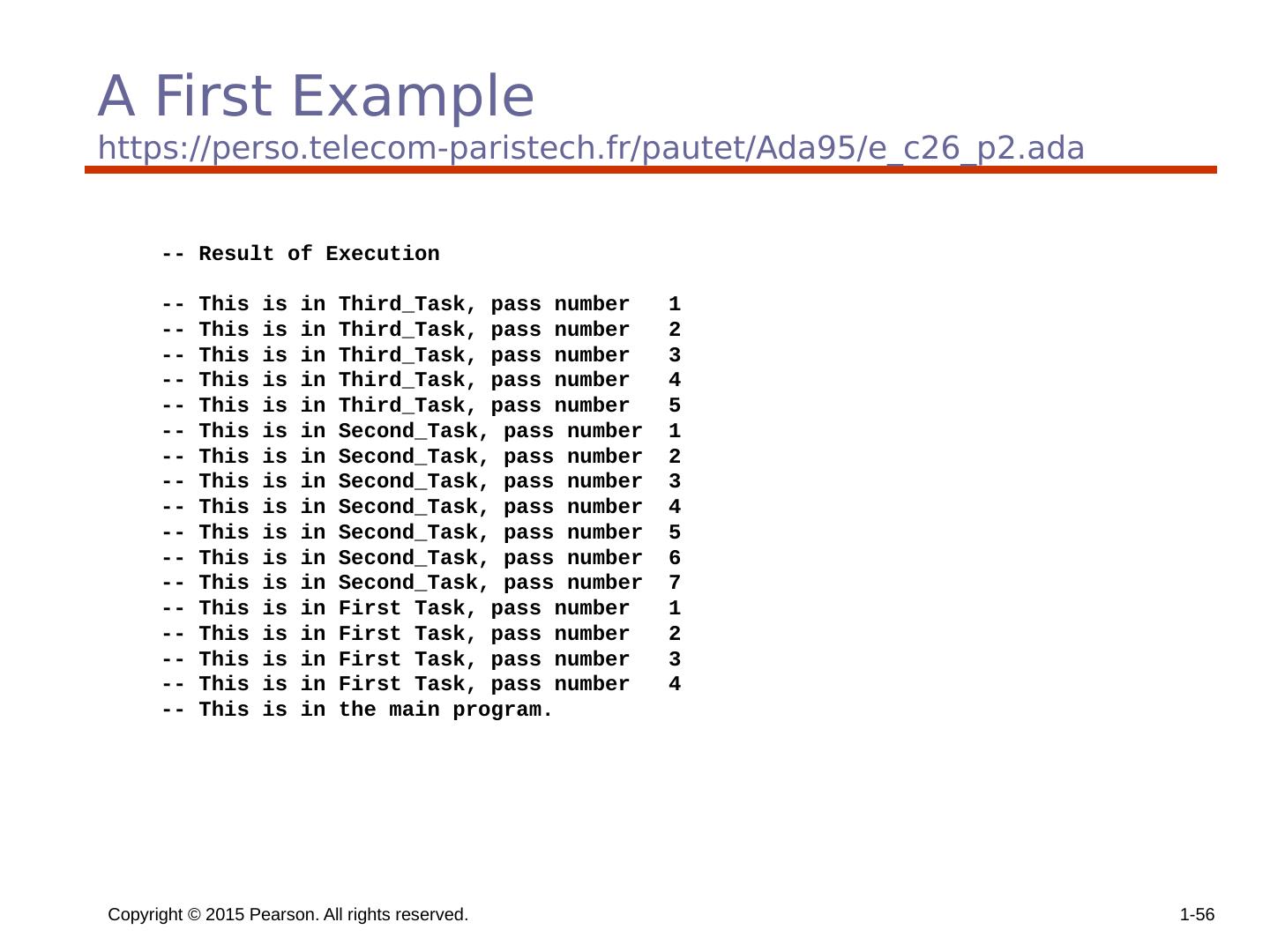

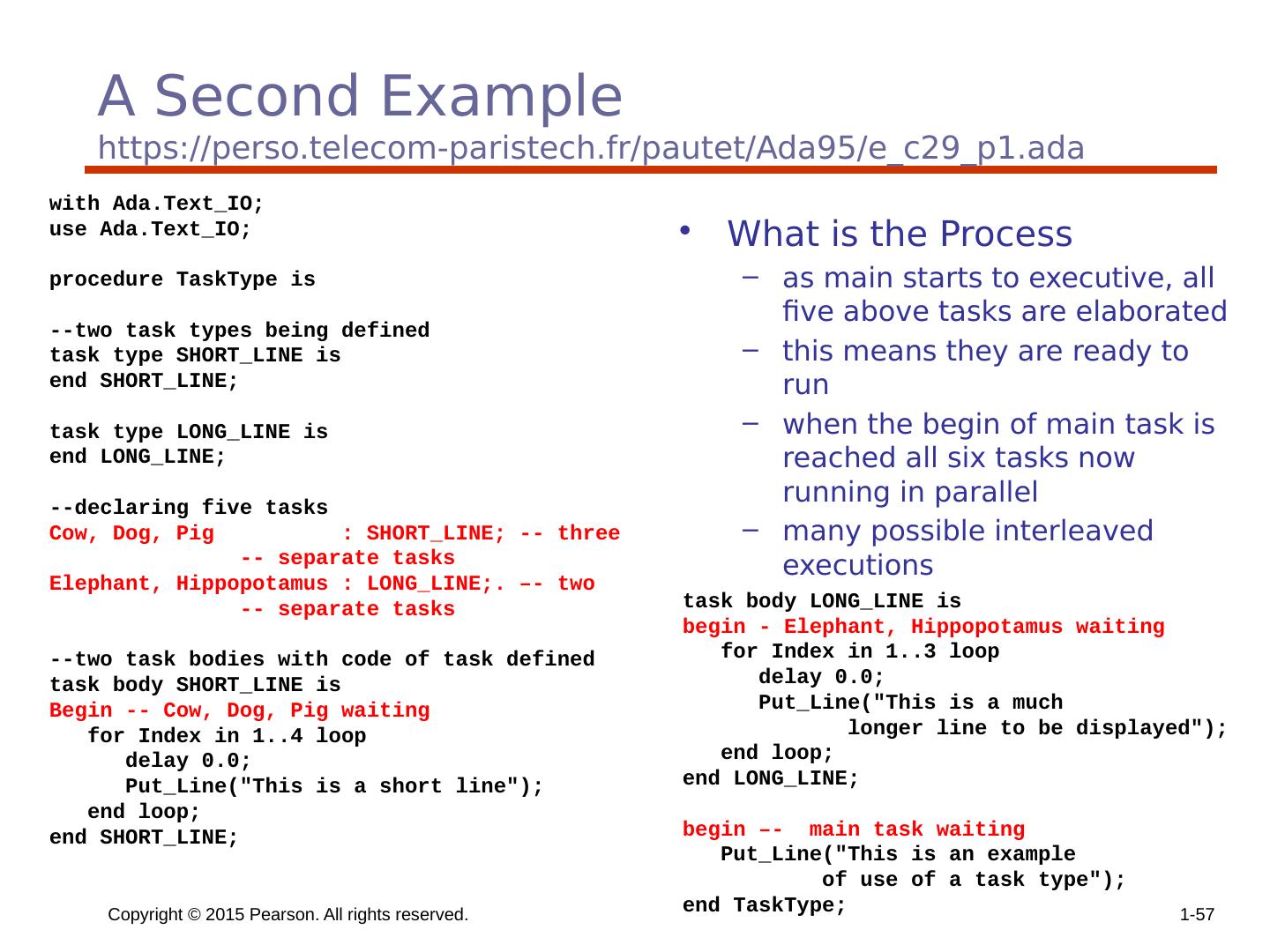

1 .Chapter 13 Concurrency

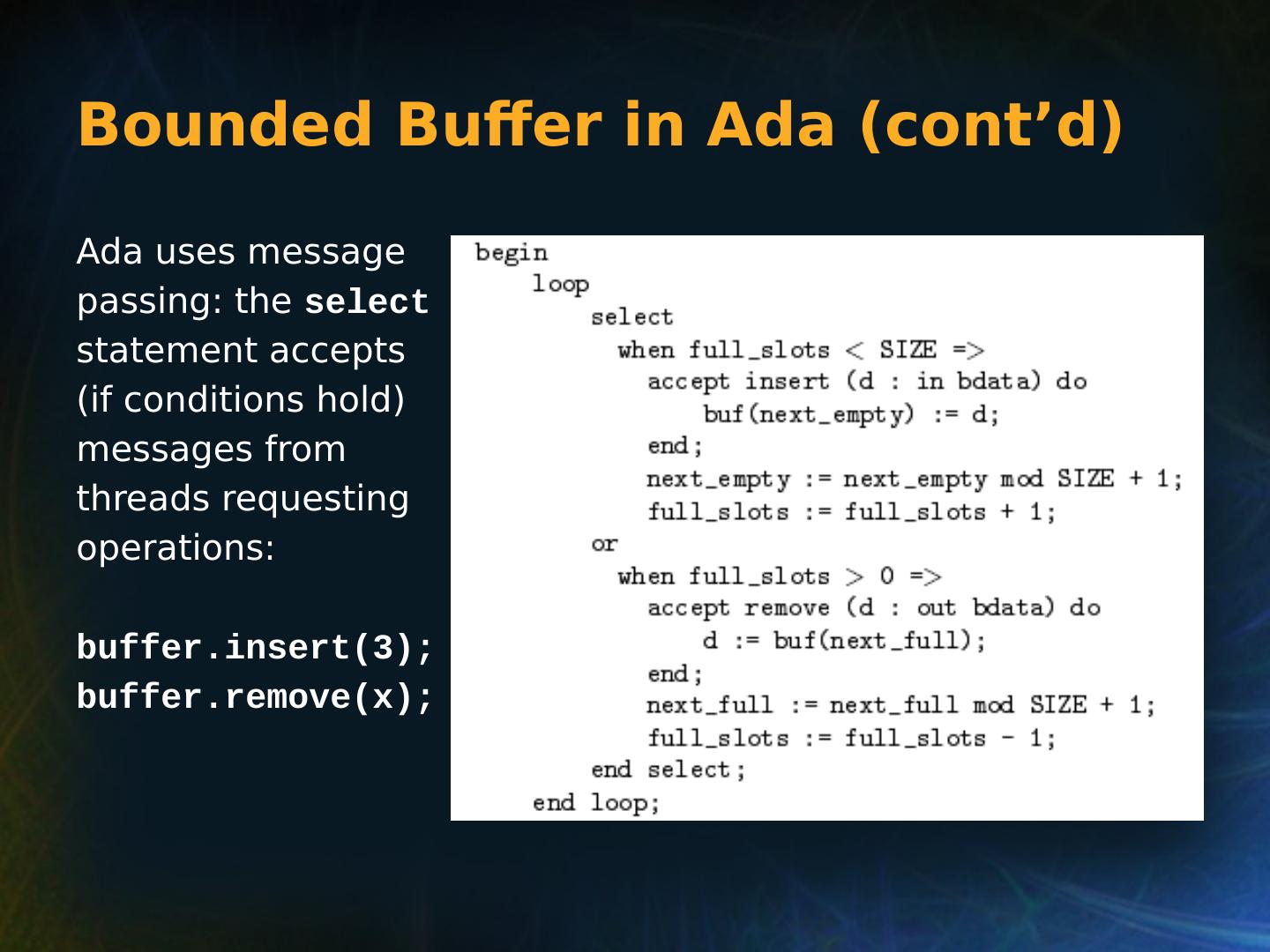

2 .Augment Sebesta Material Concurrent Programming https://www.cise.ufl.edu/class/cop5556sp16/Lecture_29.ppt Concurrency in Ada http://cs.nyu.edu/courses/fall04/G22.3110-001/tasking.ppt

3 .Copyright © 2015 Pearson. All rights reserved. 1- 3 Chapter 13 Topics History of Concurrent Programming Introduction Introduction to Subprogram-Level Concurrency Semaphores Monitors Message Passing Ada support for Concurrency Java Threads C# Threads Concurrency in Functional Languages Statement-Level Concurrency

4 .A Little History Since the beginning, differences in device speed caused lots of processor idle time. First concurrent systems introduced interrupt-driven I/O: system keeps track of which processes are waiting for I/O to complete. OS hands off I/O task, and assigns processor to a runnable process. When I/O task is complete, process sends interrupt signal to OS. OS schedules it for processor time.

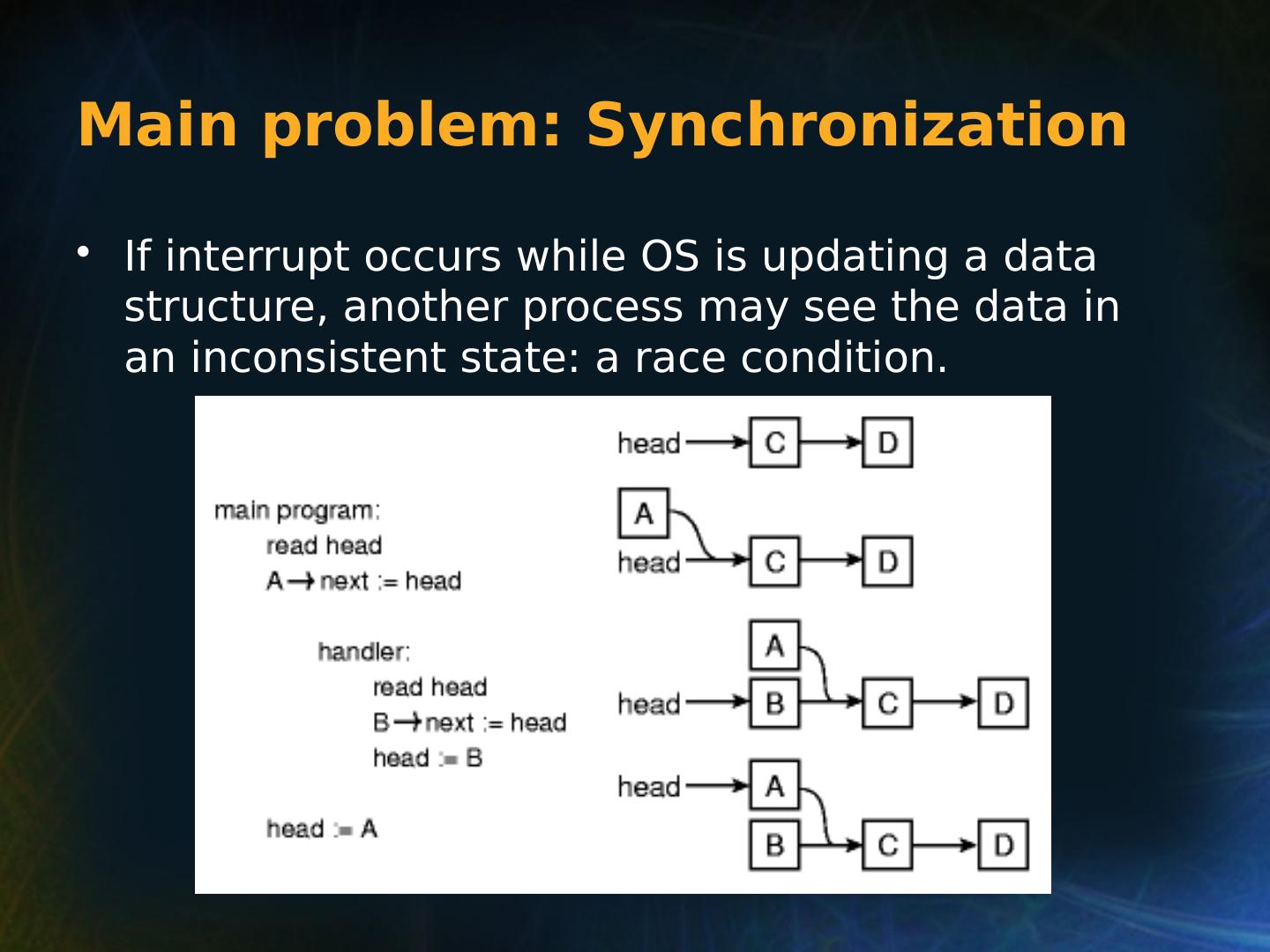

5 .Main problem: Synchronization If interrupt occurs while OS is updating a data structure, another process may see the data in an inconsistent state: a race condition.

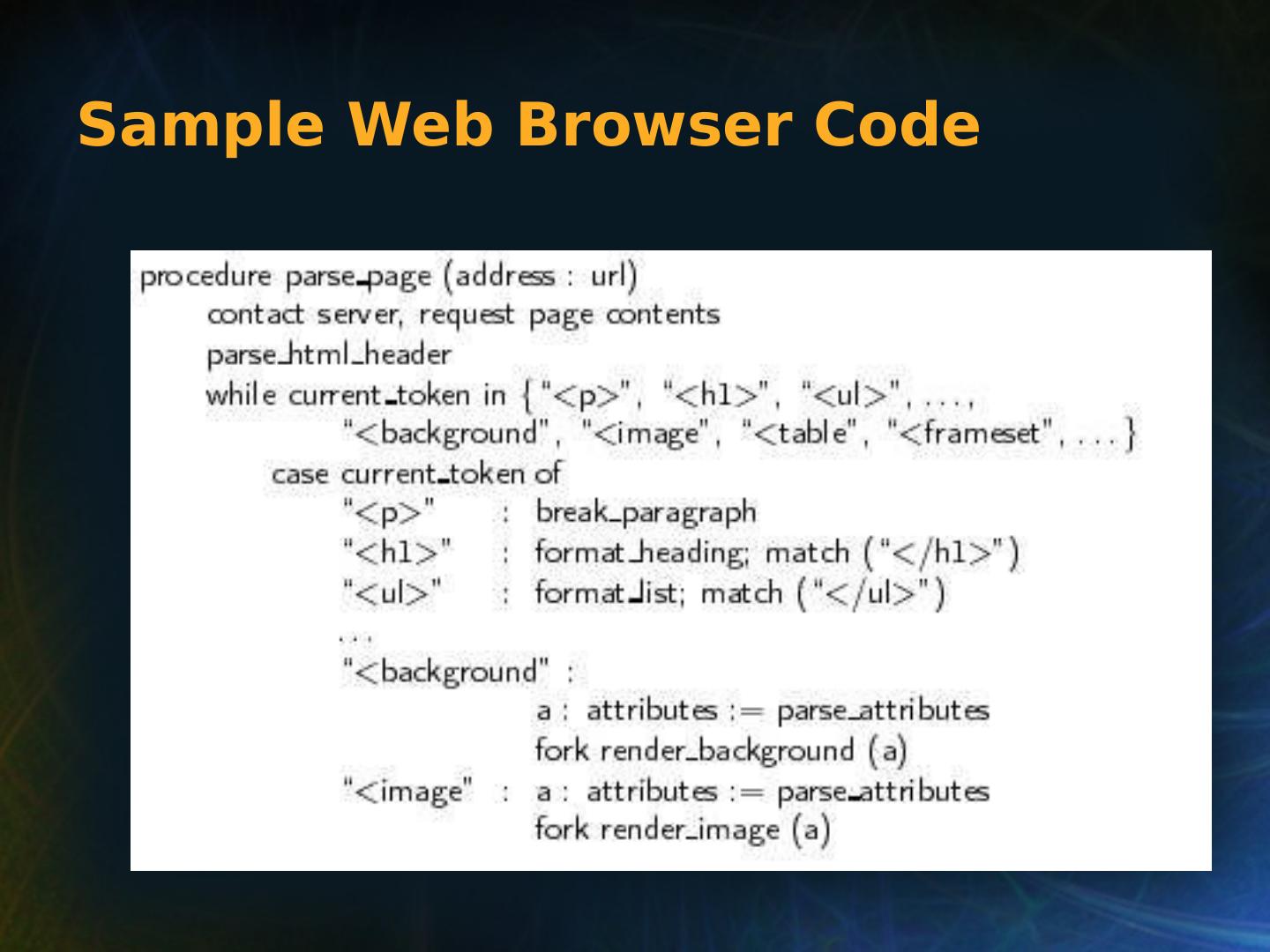

6 .A Concurrency Application (WWW) A modern-day web browser parses HTML commands, and creates many threads. User can access new items, edit bookmarks, etc. while browser renders portions of a page. Browser “forks” off processes to format and render frames.

7 .Sample Web Browser Code

8 .Communication and Synchronization Main issues in concurrency. Synchronization: control order of events. Communication: signaling information from one process to another. Two main communication models: Message-passing model: threads communicate by explicitly sending messages. Action taken upon receipt. Shared-memory model: threads communicate by reading/storing data in a common location.

9 .Communication and Synchronization In either communication model, synchronization performed by either: Busy-wait: run loop, keep checking until a certain condition holds. Blocking: relinquish processor, leave a note. Later, some other thread that changes the condition will request a “wakeup”.

10 .Copyright © 2015 Pearson. All rights reserved. 1- 10 Introduction Concurrency can occur at four levels: Machine instruction level High-level language statement level Unit level Program level Because there are no language issues in instruction- and program-level concurrency, they are not addressed here

11 .Copyright © 2015 Pearson. All rights reserved. 1- 11 Multiprocessor Architectures Late 1950s - one general-purpose processor and one or more special-purpose processors for input and output operations Early 1960s - multiple complete processors, used for program-level concurrency Mid-1960s - multiple partial processors, used for instruction-level concurrency Single-Instruction Multiple-Data (SIMD) machines Multiple-Instruction Multiple-Data (MIMD) machines A primary focus of this chapter is shared memory MIMD machines (multiprocessors)

12 .Copyright © 2015 Pearson. All rights reserved. 1- 12 Categories of Concurrency Categories of Concurrency: Physical concurrency - Multiple independent processors ( multiple threads of control) Logical concurrency - The appearance of physical concurrency is presented by time-sharing one processor (software can be designed as if there were multiple threads of control) Coroutines ( quasi-concurrency) have a single thread of control A thread of control in a program is the sequence of program points reached as control flows through the program

13 .Copyright © 2015 Pearson. All rights reserved. 1- 13 Motivations for the Use of Concurrency Multiprocessor computers capable of physical concurrency are now widely used Even if a machine has just one processor, a program written to use concurrent execution can be faster than the same program written for nonconcurrent execution Involves a different way of designing software that can be very useful—many real-world situations involve concurrency Many program applications are now spread over multiple machines, either locally or over a network

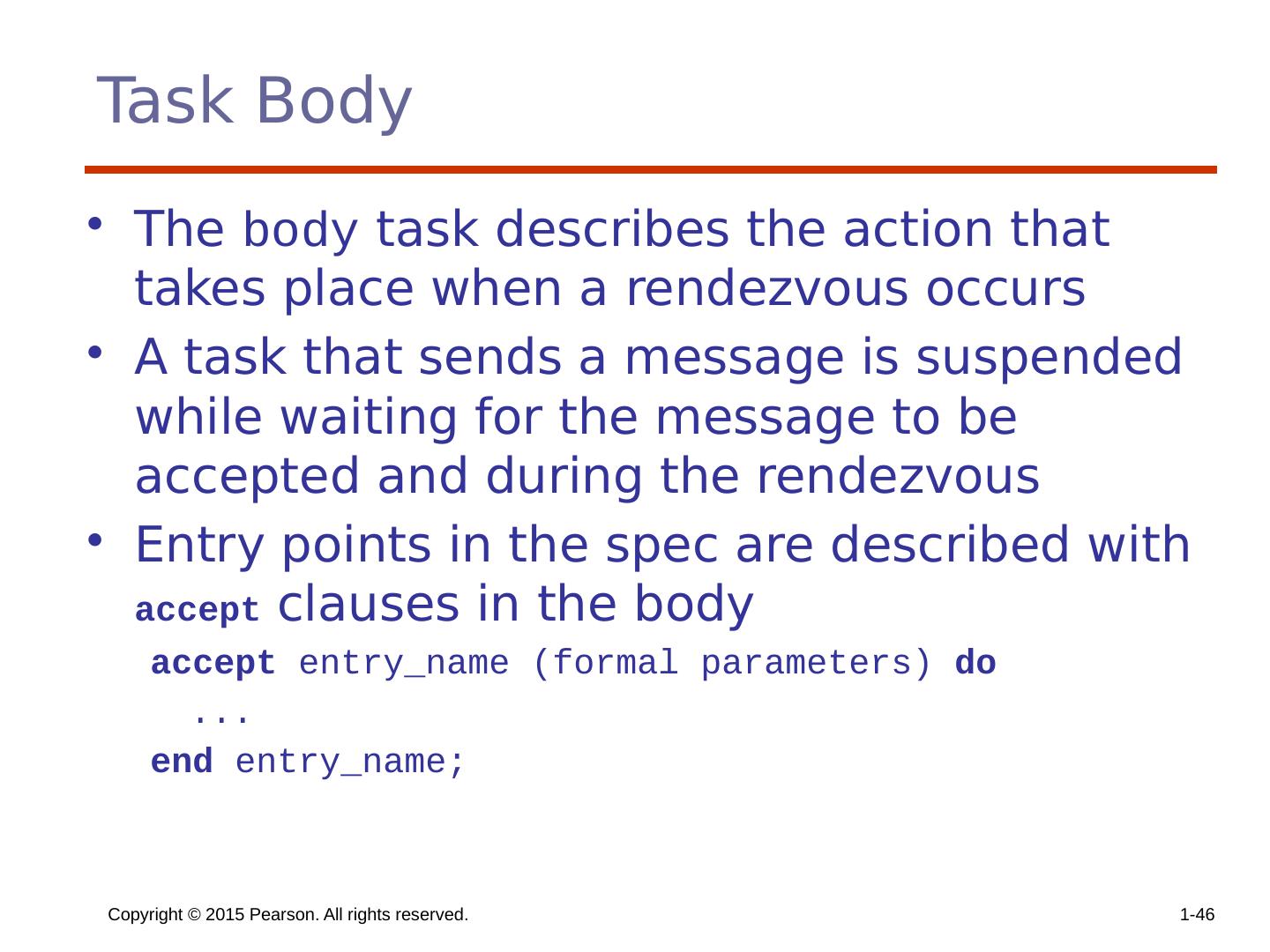

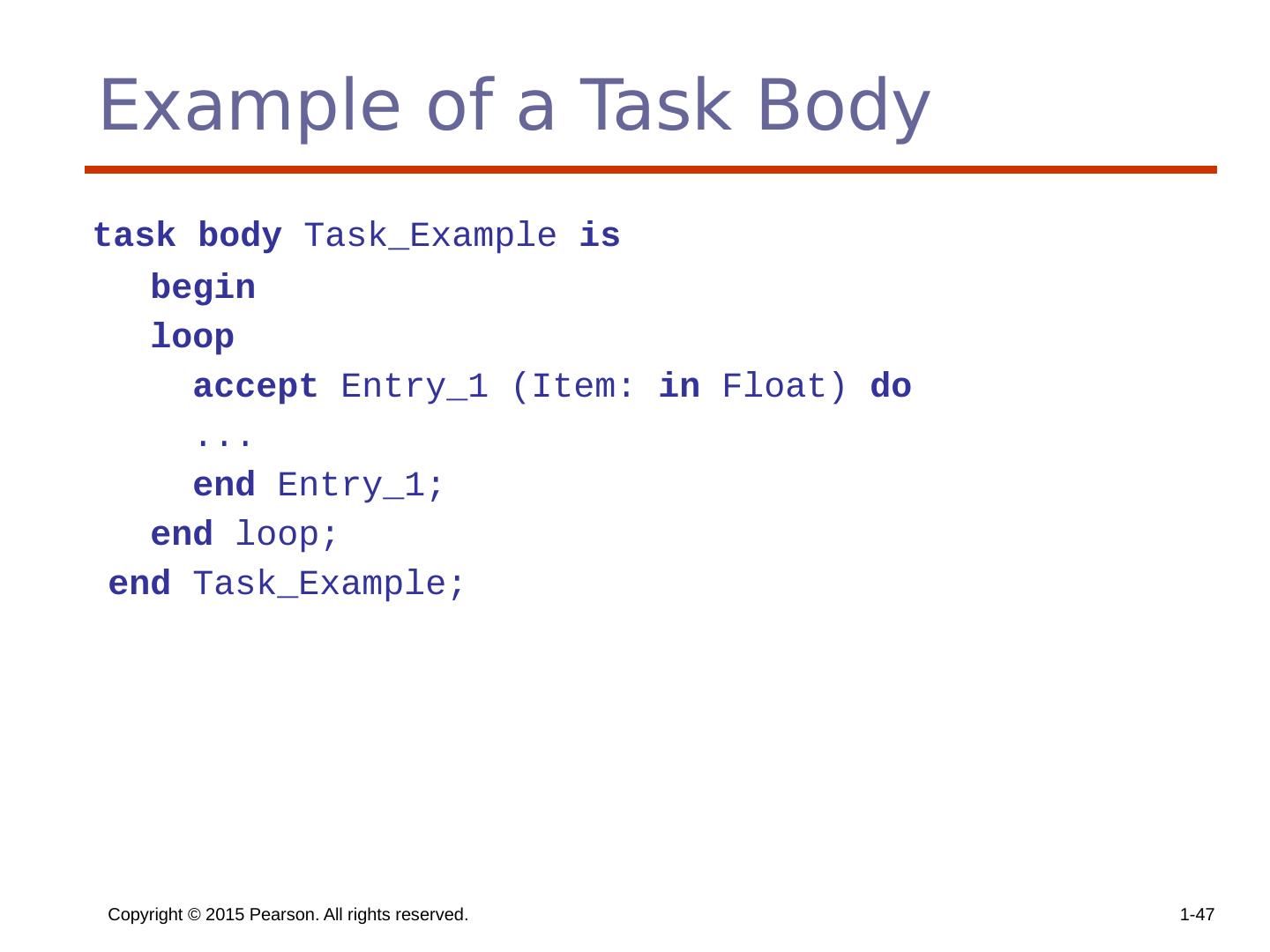

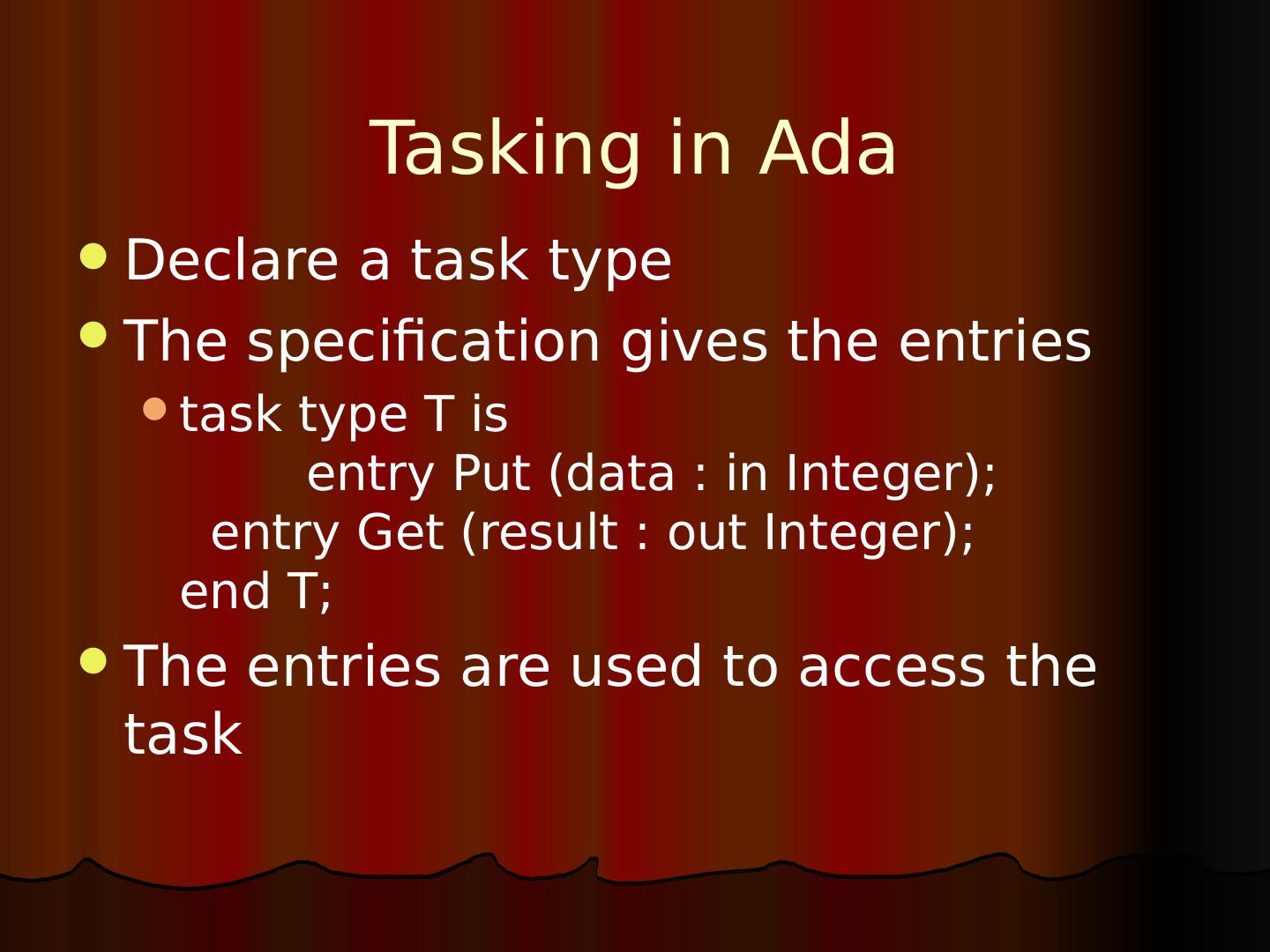

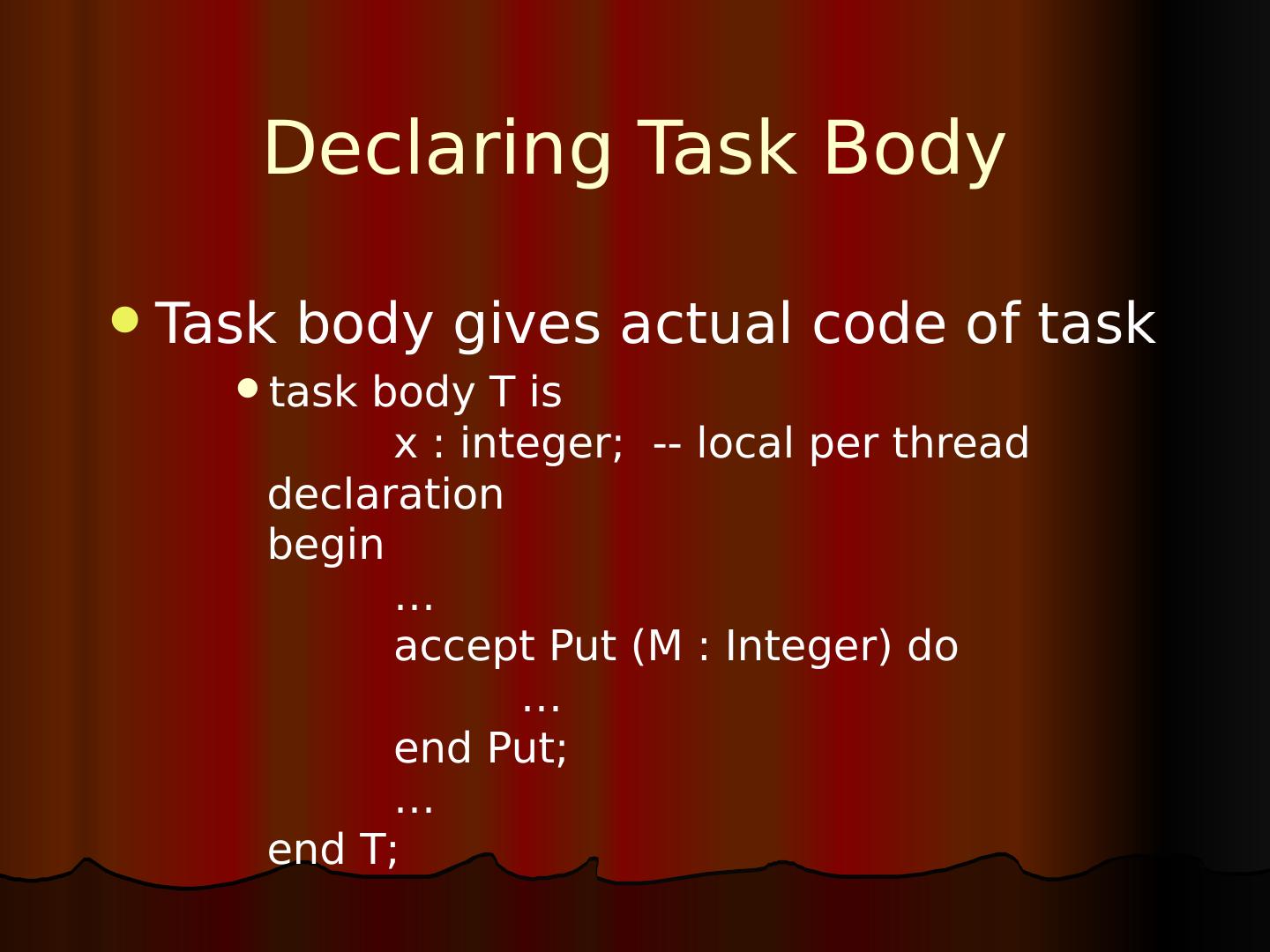

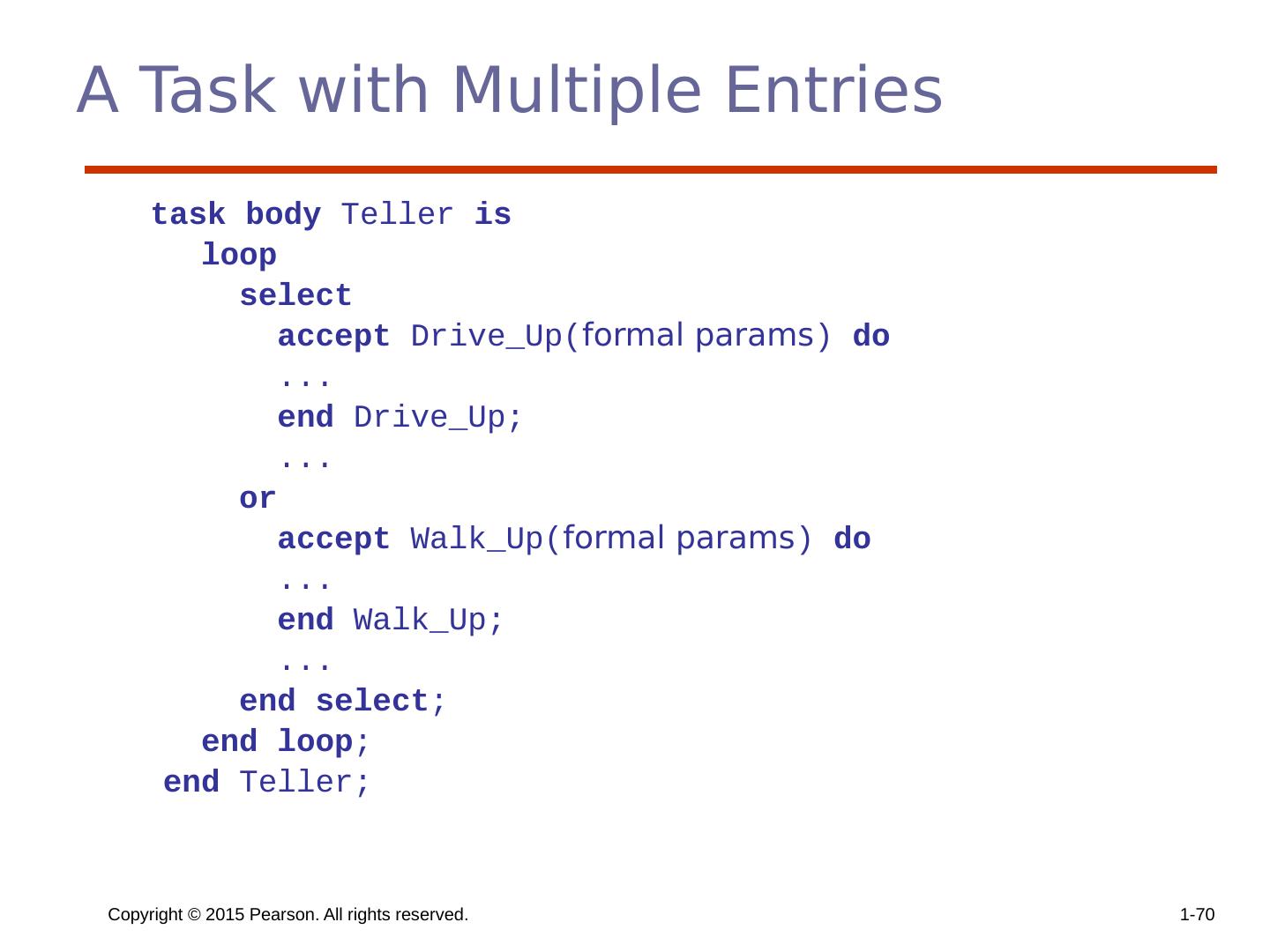

14 .Copyright © 2015 Pearson. All rights reserved. 1- 14 Introduction to Subprogram-Level Concurrency A task or process or thread is a program unit that can be in concurrent execution with other program units Tasks differ from ordinary subprograms in that: A task may be implicitly started When a program unit starts the execution of a task, it is not necessarily suspended When a task’s execution is completed, control may not return to the caller Tasks usually work together

15 .Copyright © 2015 Pearson. All rights reserved. 1- 15 Two General Categories of Tasks Heavyweight tasks execute in their own address space Lightweight tasks all run in the same address space – more efficient A task is disjoint if it does not communicate with or affect the execution of any other task in the program in any way

16 .Copyright © 2015 Pearson. All rights reserved. 1- 16 Task Synchronization A mechanism that controls the order in which tasks execute Two kinds of synchronization Cooperation synchronization Competition synchronization Task communication is necessary for synchronization, provided by: - Shared nonlocal variables - Parameters - Message passing

17 .Copyright © 2015 Pearson. All rights reserved. 1- 17 Kinds of synchronization Cooperation : Task A must wait for task B to complete some specific activity before task A can continue its execution, e.g., the producer-consumer problem Competition : Two or more tasks must use some resource that cannot be simultaneously used, e.g., a shared counter Competition is usually provided by mutually exclusive access (approaches are discussed later)

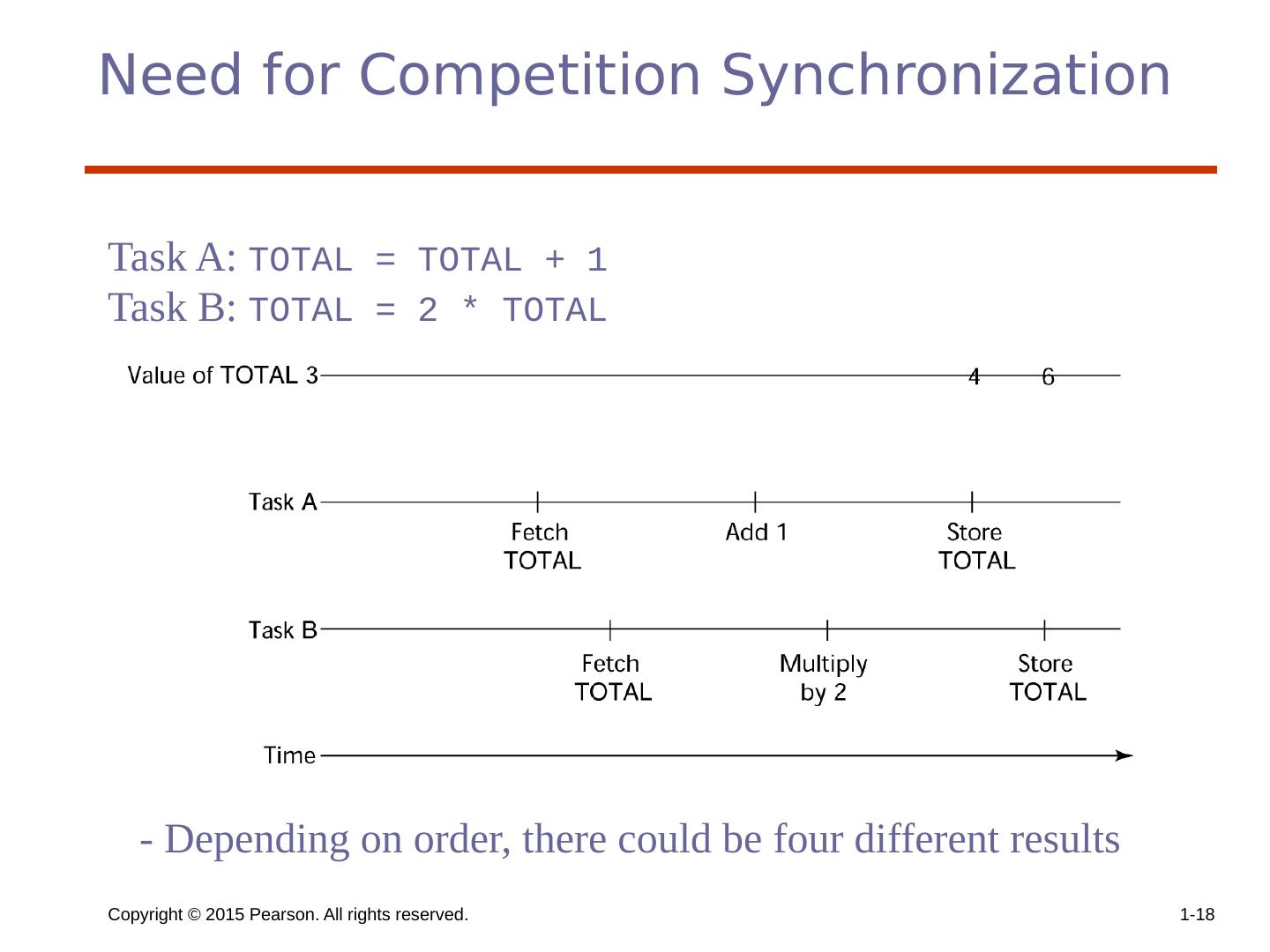

18 .Copyright © 2015 Pearson. All rights reserved. 1- 18 Need for Competition Synchronization Task A: TOTAL = TOTAL + 1 Task B: TOTAL = 2 * TOTAL - Depending on order, there could be four different results

19 .Copyright © 2015 Pearson. All rights reserved. 1- 19 Scheduler Providing synchronization requires a mechanism for delaying task execution Task execution control is maintained by a program called the scheduler , which maps task execution onto available processors

20 .Copyright © 2015 Pearson. All rights reserved. 1- 20 Task Execution States New - created but not yet started R eady - ready to run but not currently running (no available processor) Running Blocked - has been running, but cannot now continue (usually waiting for some event to occur) Dead - no longer active in any sense

21 .Copyright © 2015 Pearson. All rights reserved. 1- 21 Liveness and Deadlock Liveness is a characteristic that a program unit may or may not have - In sequential code, it means the unit will eventually complete its execution In a concurrent environment, a task can easily lose its liveness If all tasks in a concurrent environment lose their liveness, it is called deadlock

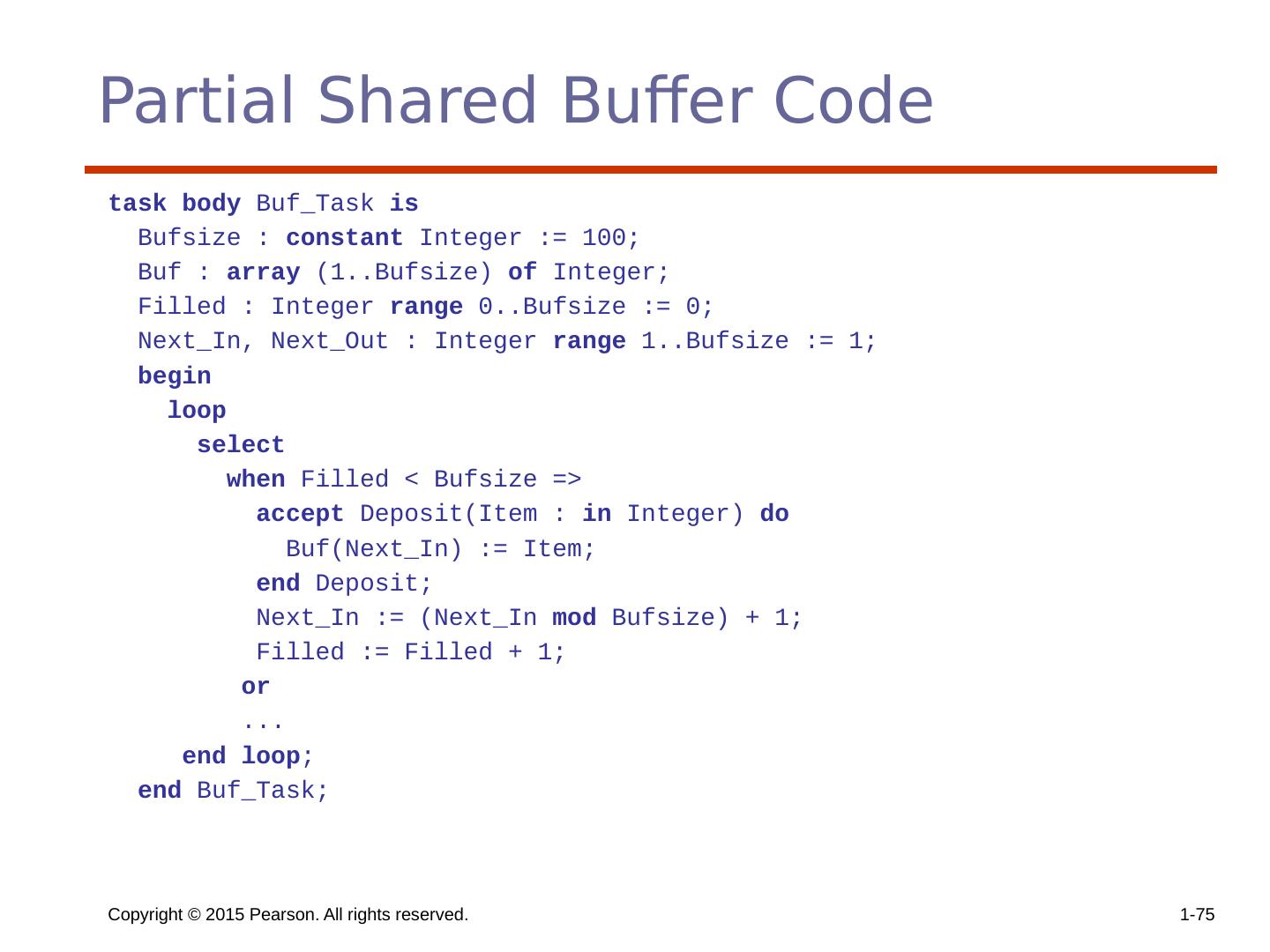

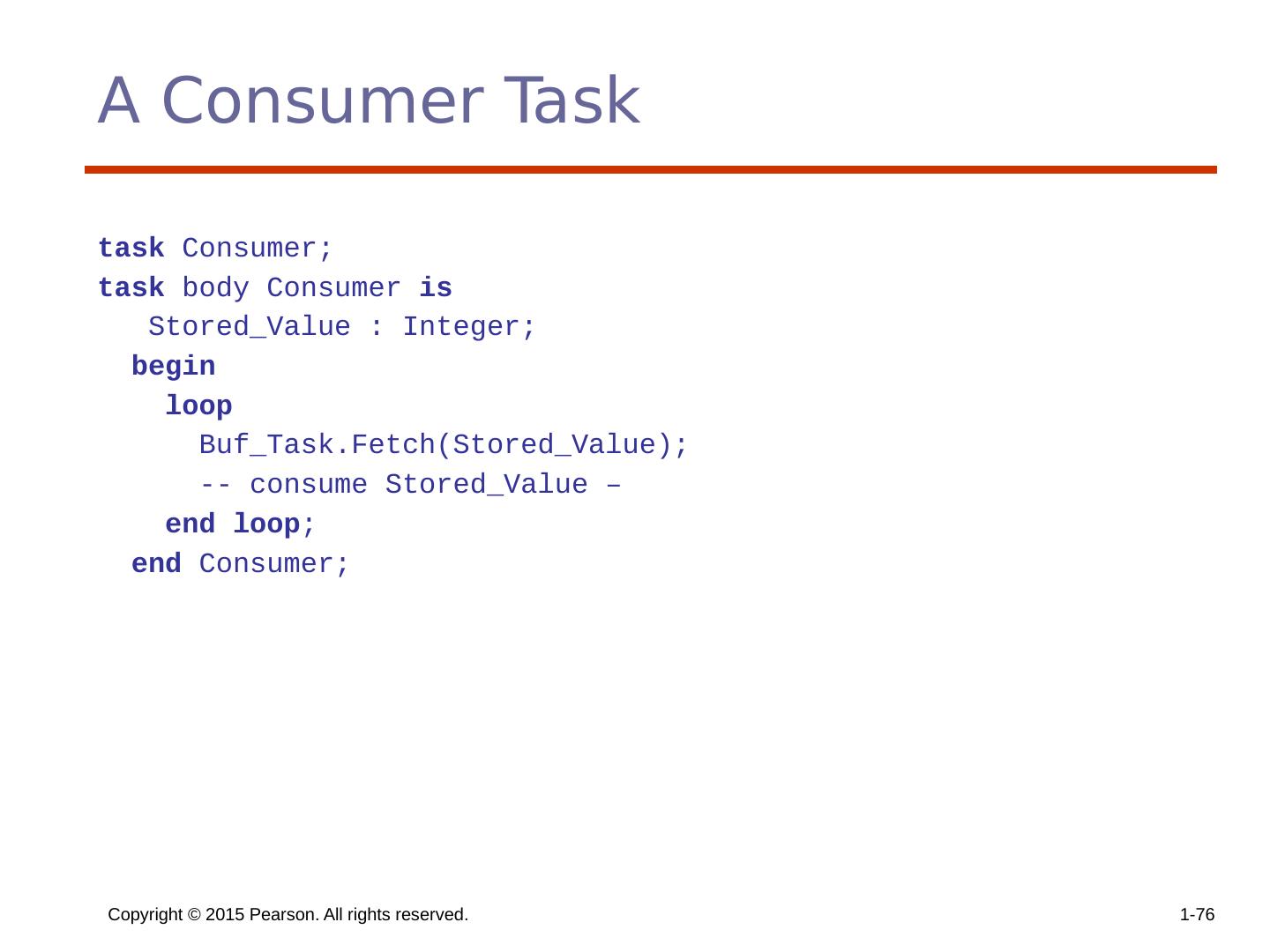

22 .Copyright © 2015 Pearson. All rights reserved. 1- 22 Design Issues for Concurrency Competition and cooperation synchronization* Controlling task scheduling How can an application influence task scheduling How and when tasks start and end execution How and when are tasks created * The most important issue

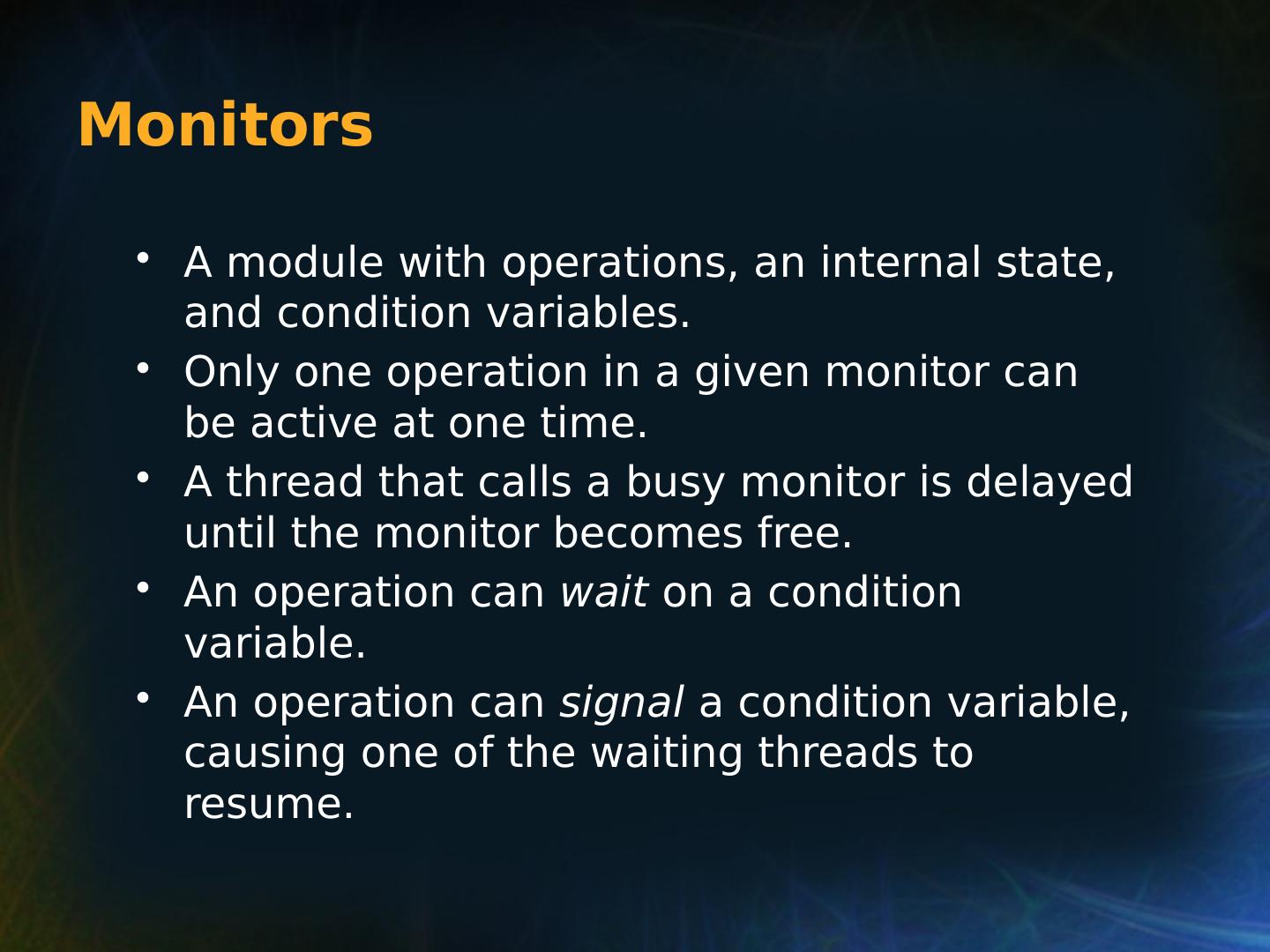

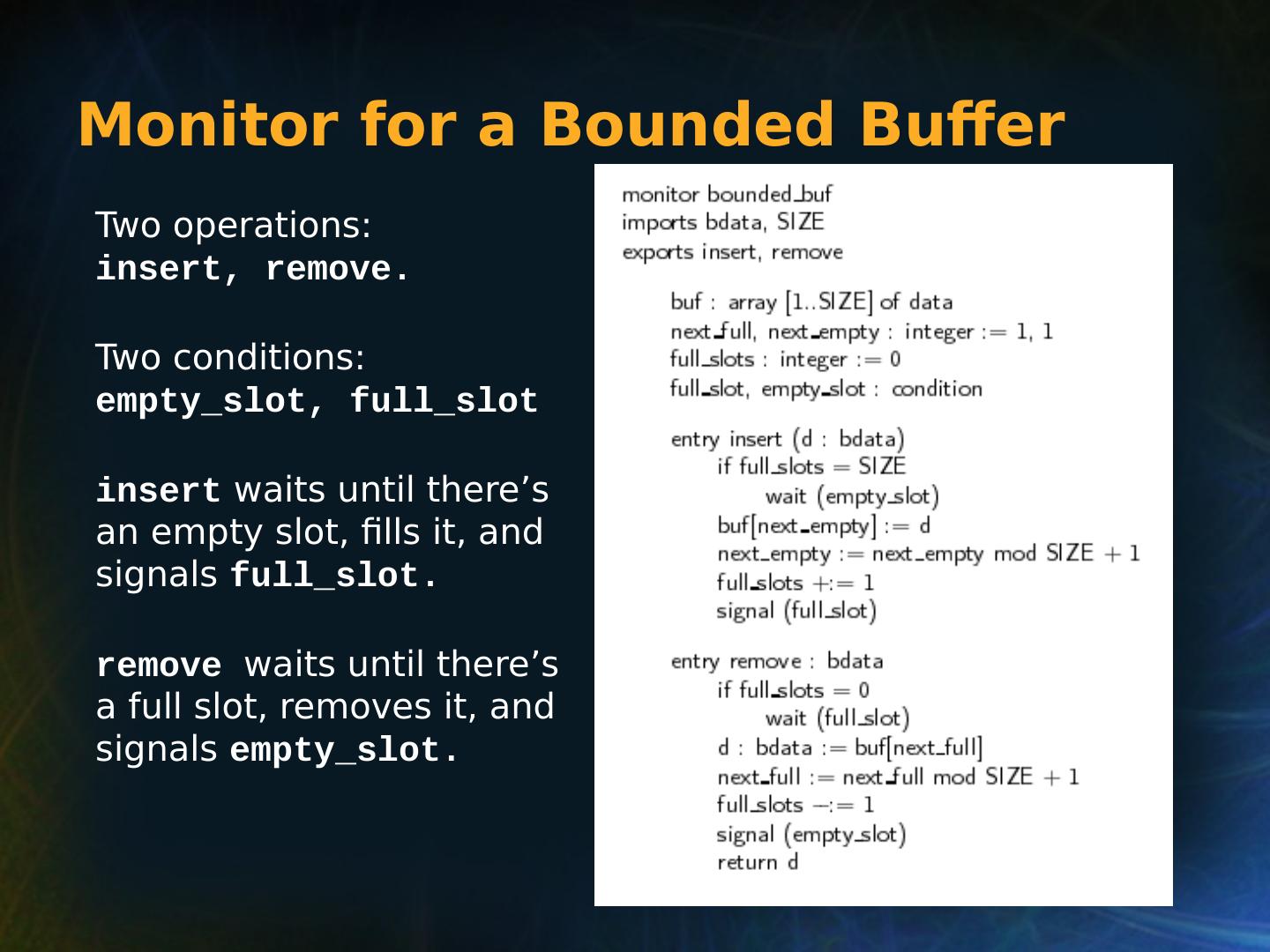

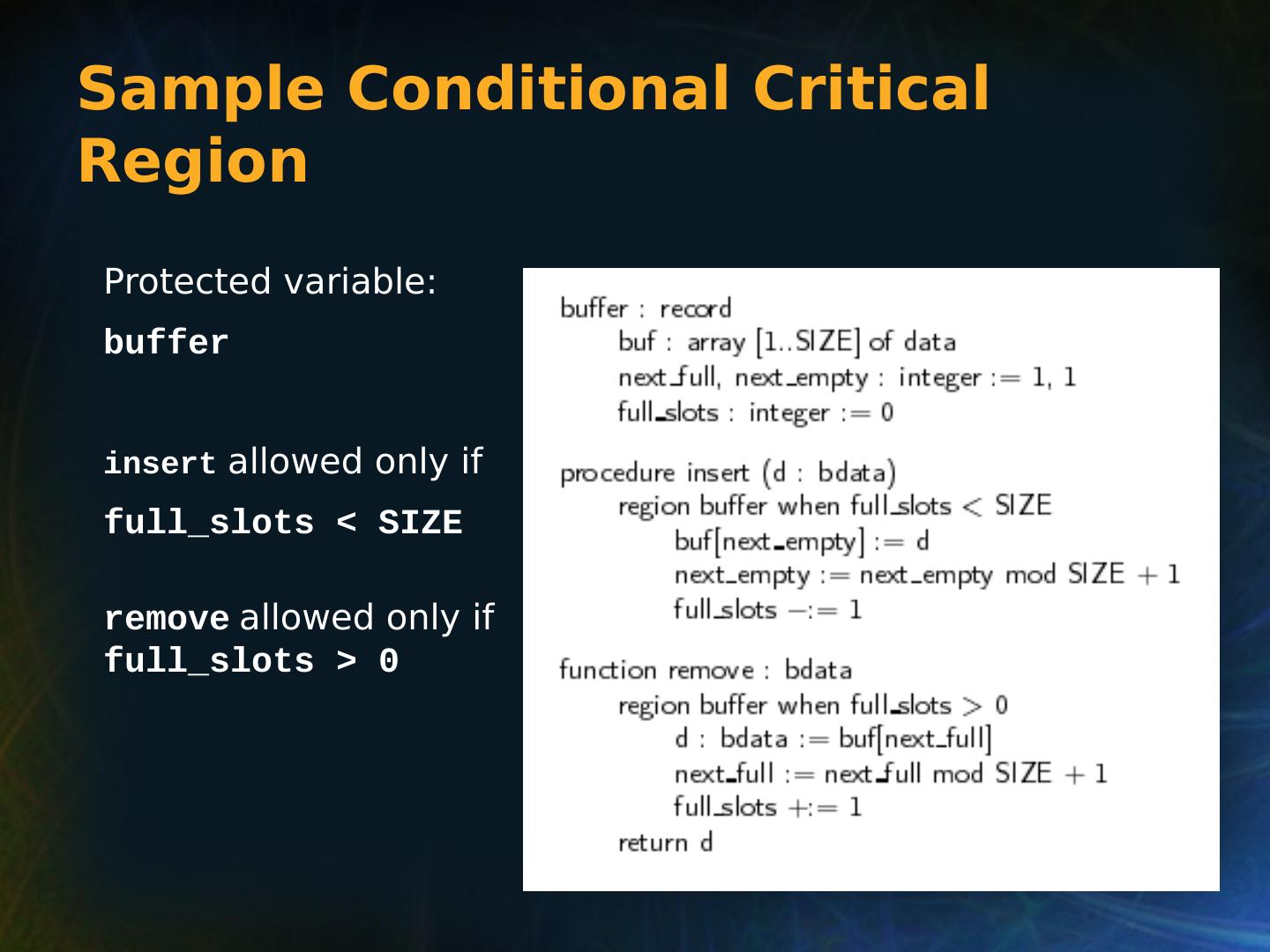

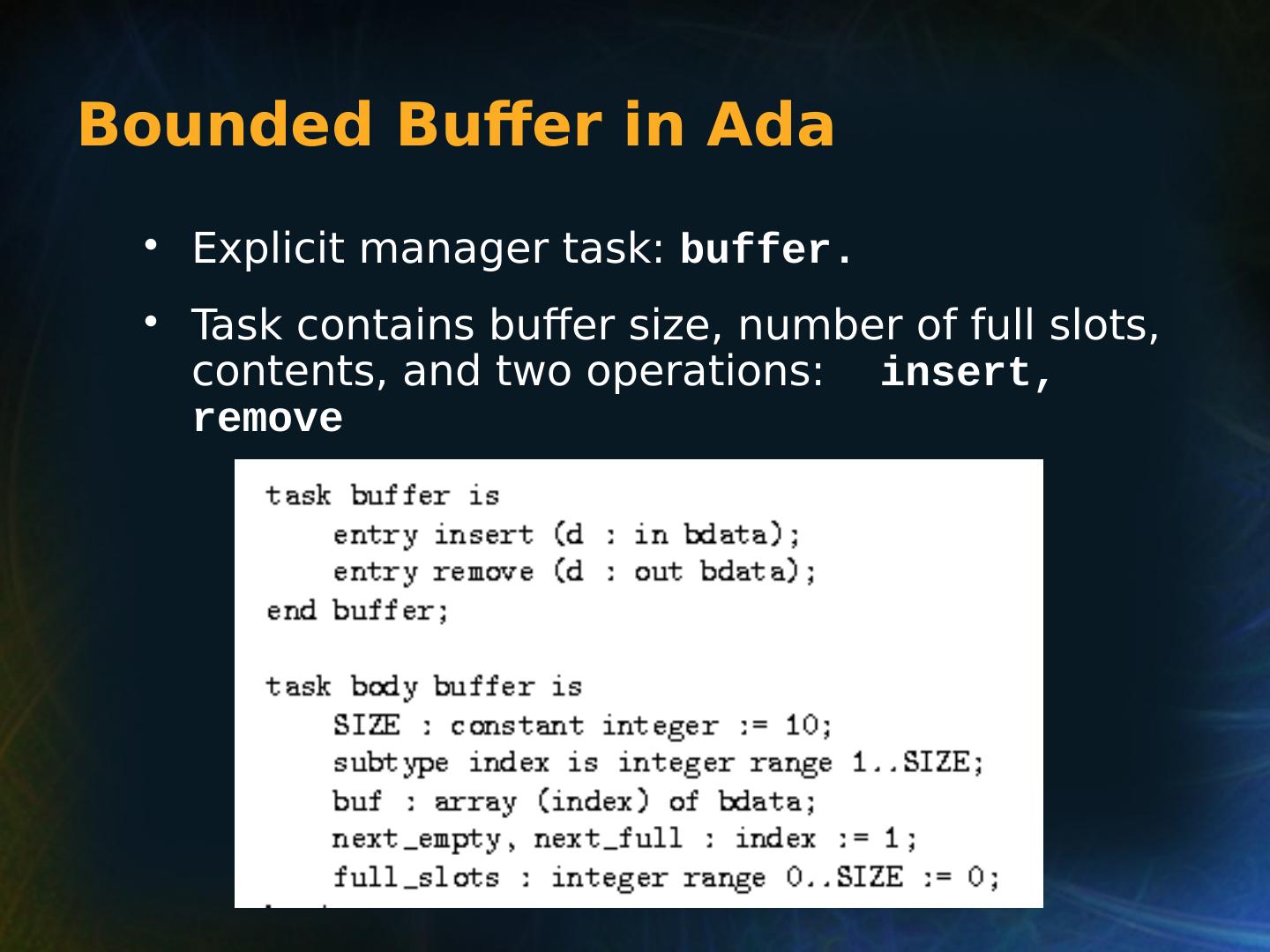

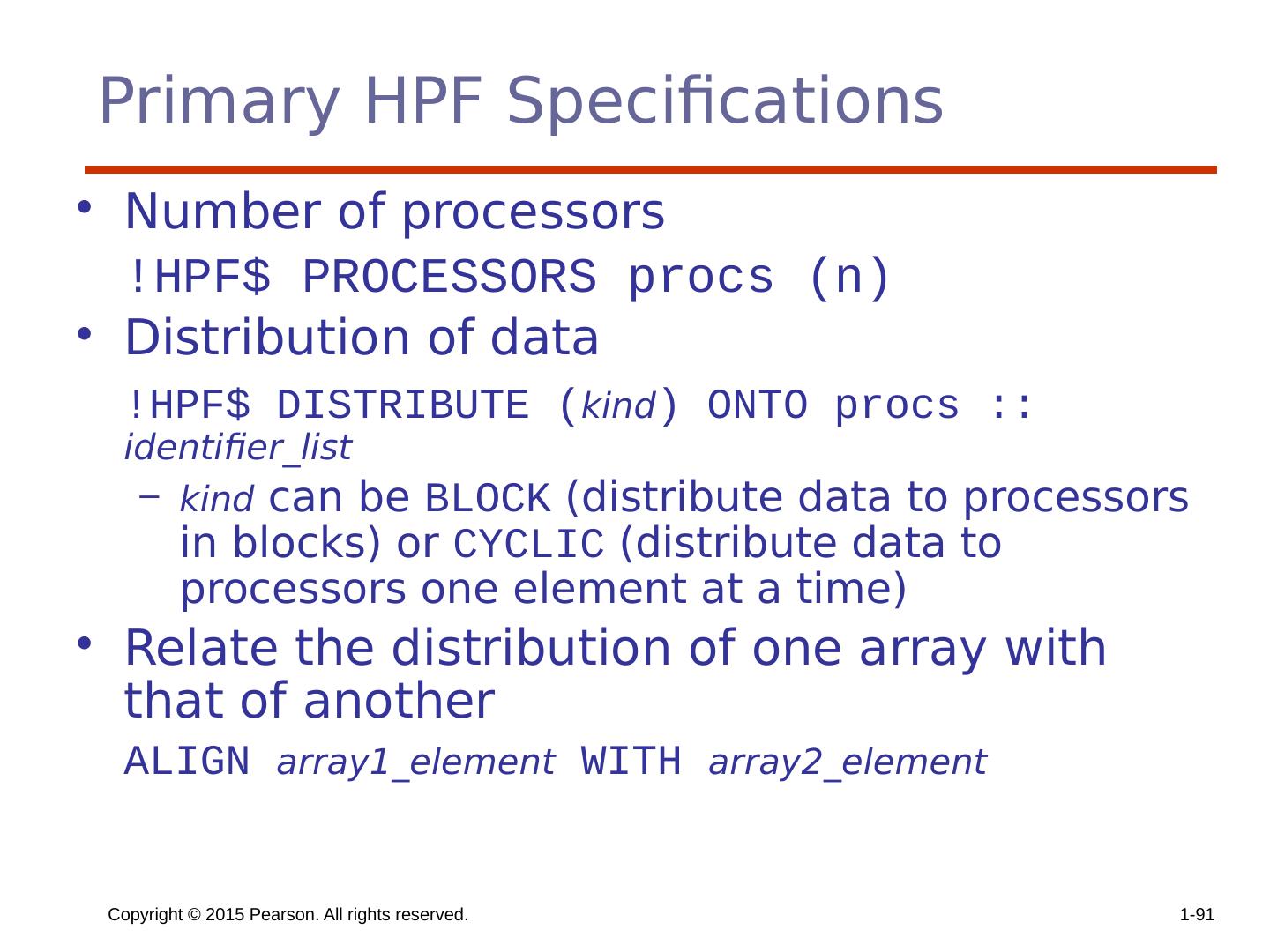

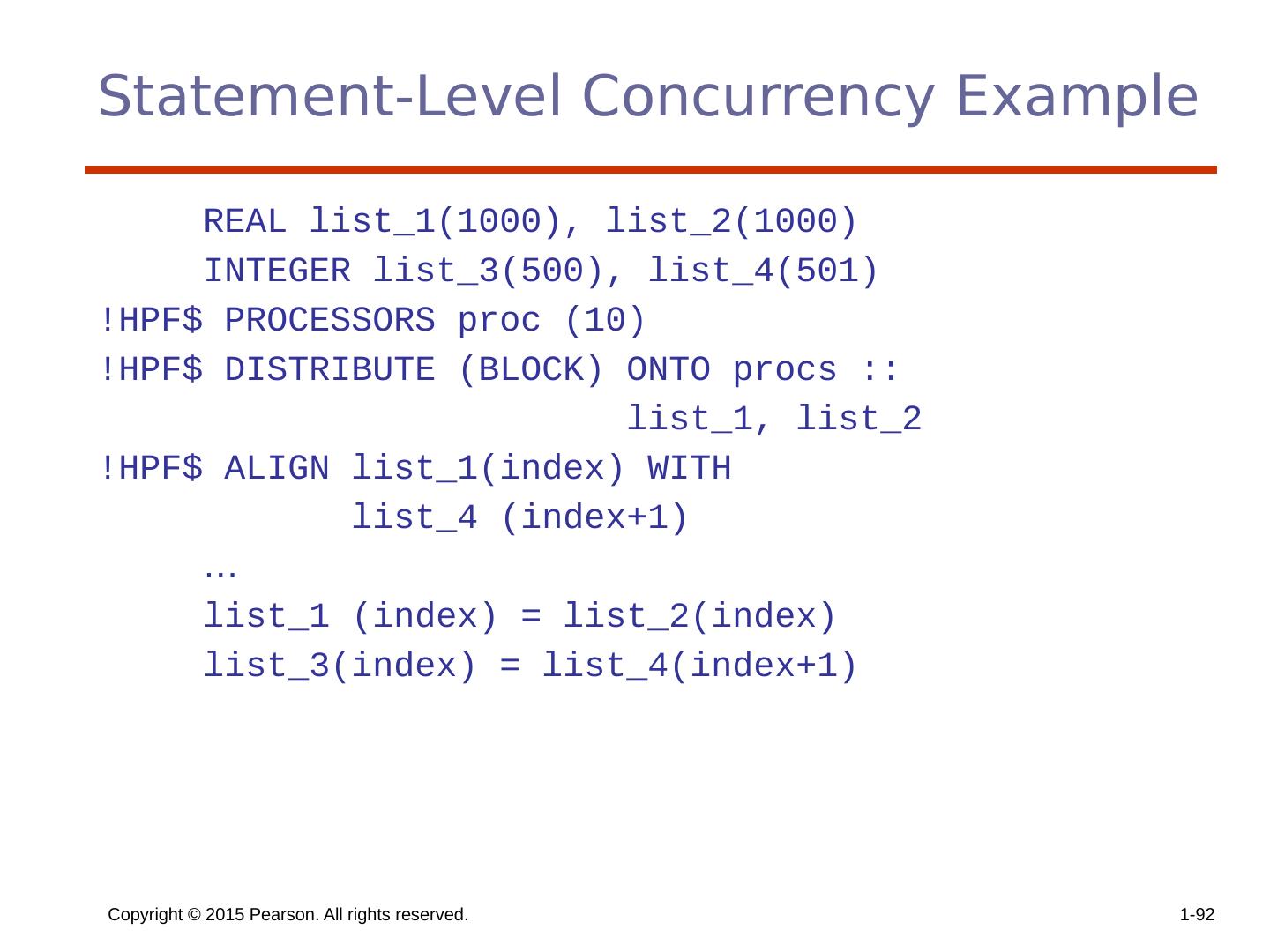

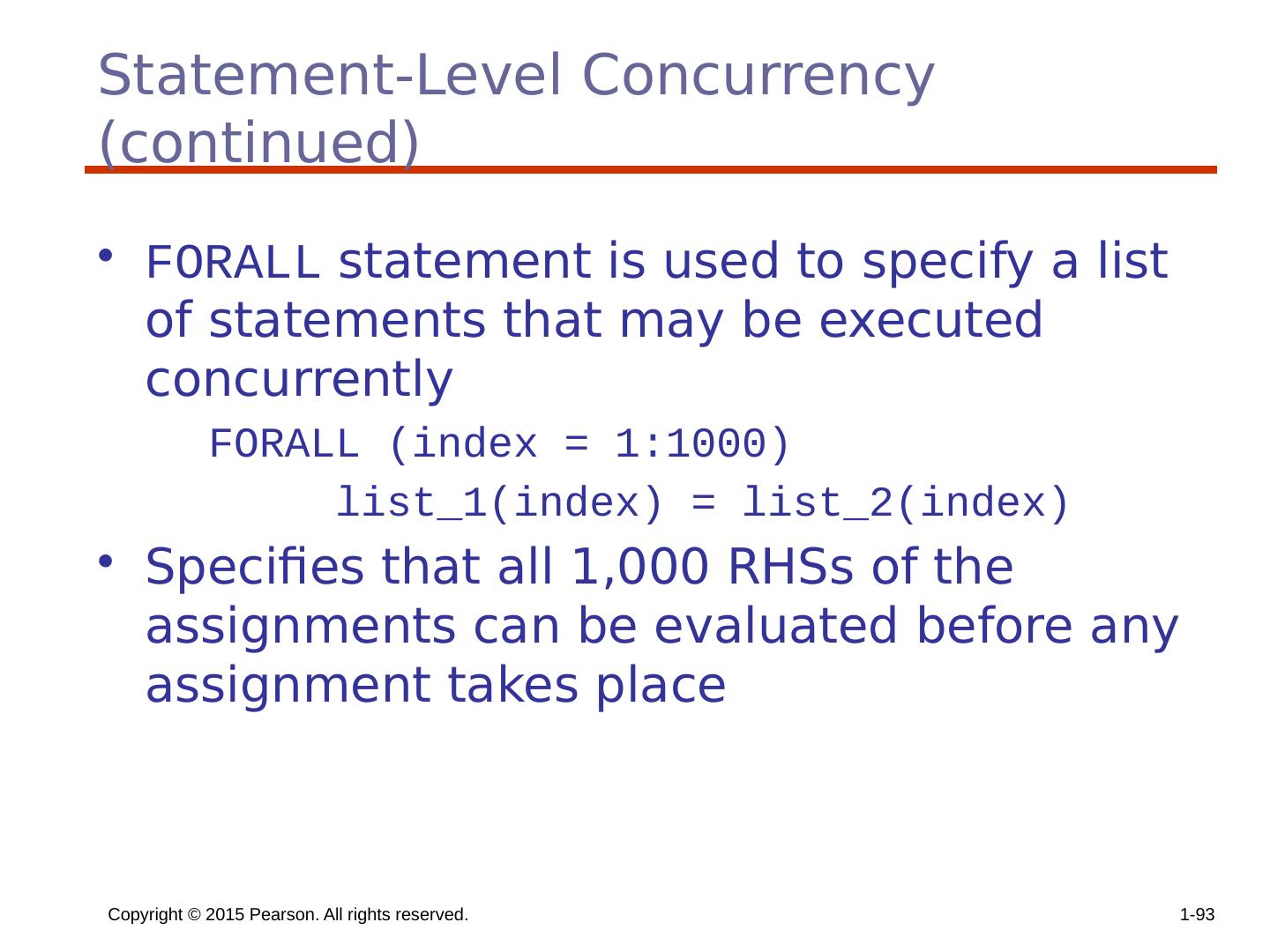

23 .Copyright © 2015 Pearson. All rights reserved. 1- 23 Methods of Providing Synchronization Semaphores Monitors Message Passing

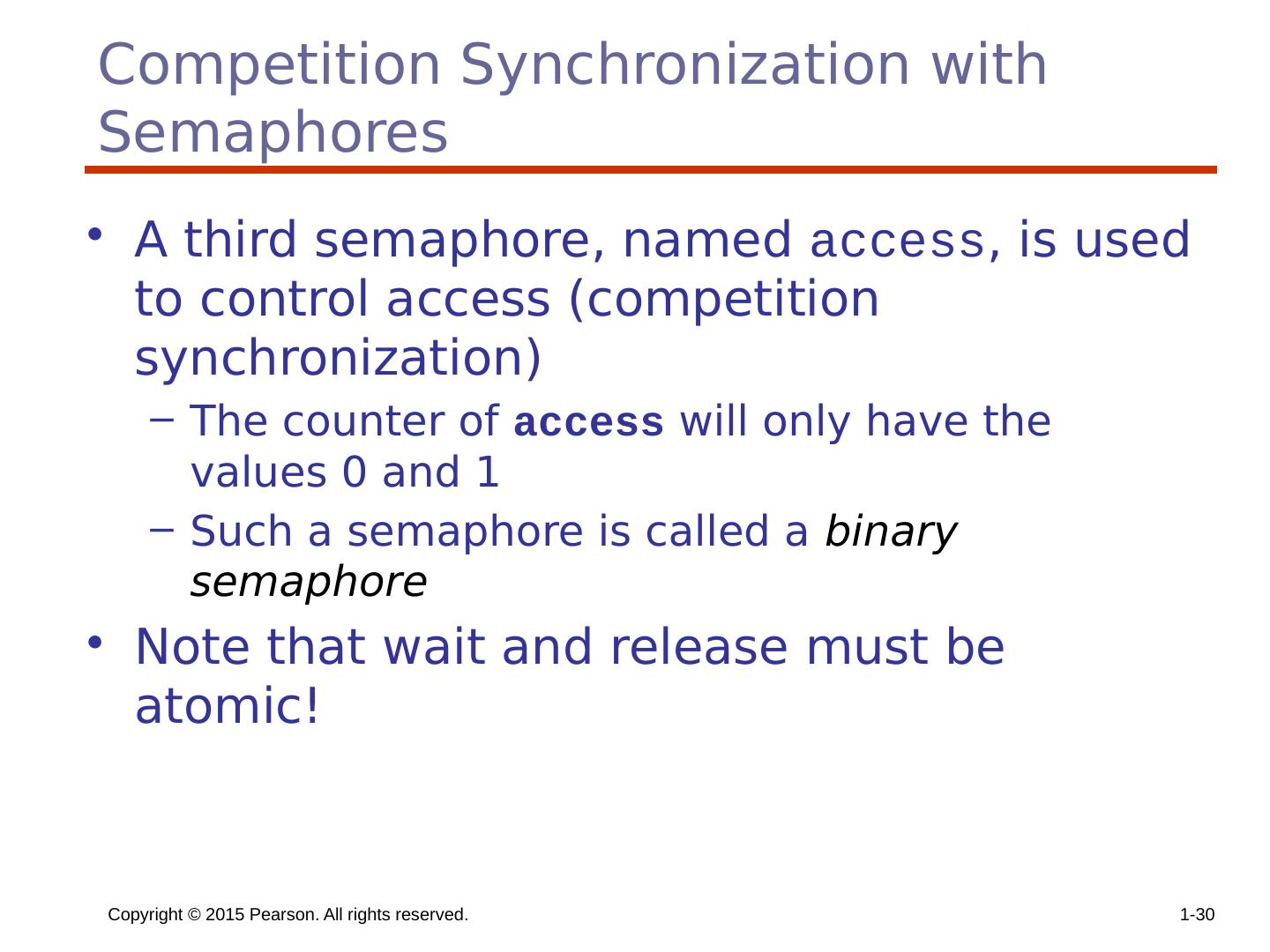

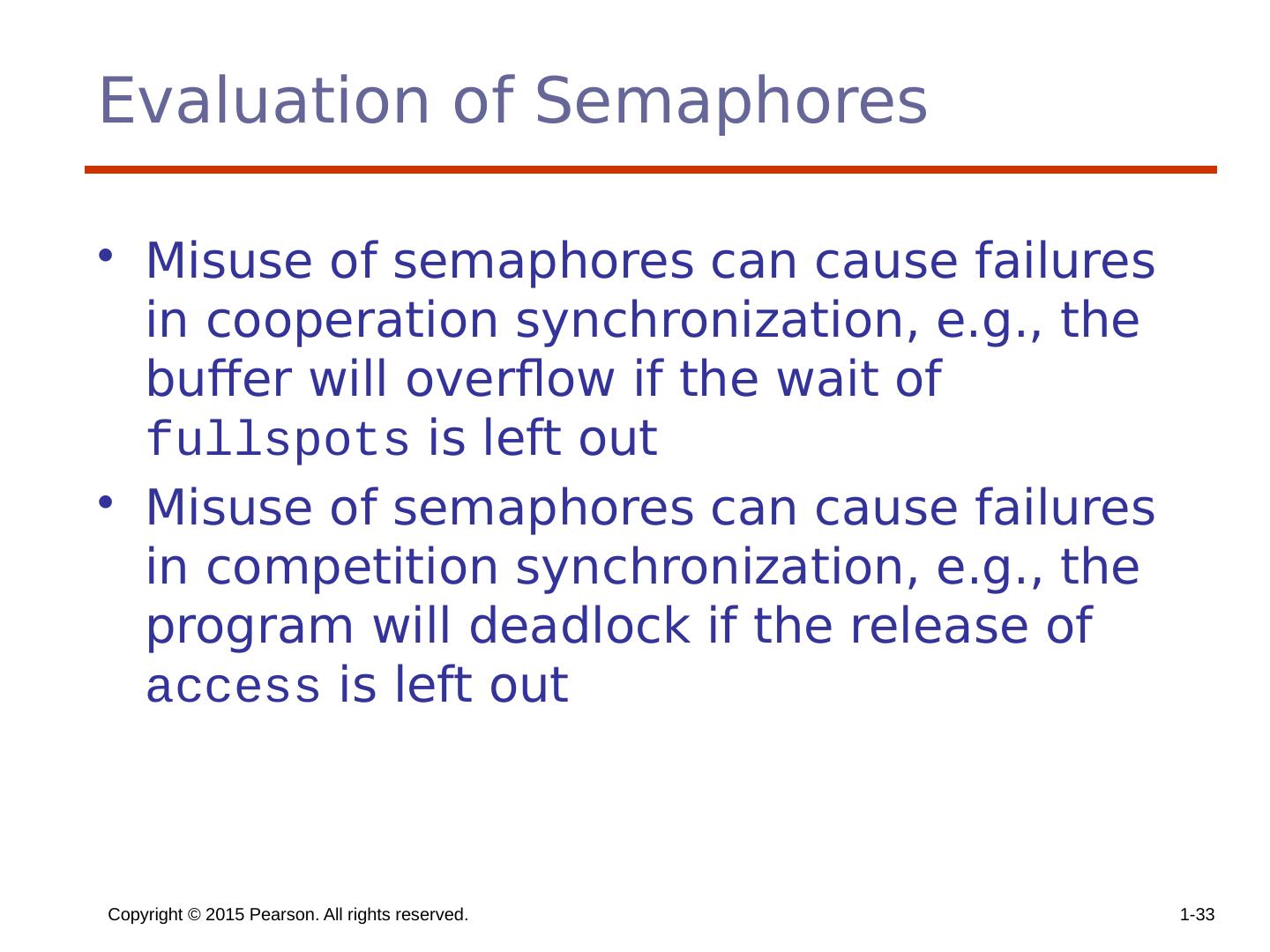

24 .Copyright © 2015 Pearson. All rights reserved. 1- 24 Semaphores Dijkstra - 1965 A semaphore is a data structure consisting of a counter and a queue for storing task descriptors A task descriptor is a data structure that stores all of the relevant information about the execution state of the task Semaphores can be used to implement guards on the code that accesses shared data structures Semaphores have only two operations, wait and release (originally called P and V by Dijkstra) Semaphores can be used to provide both competition and cooperation synchronization

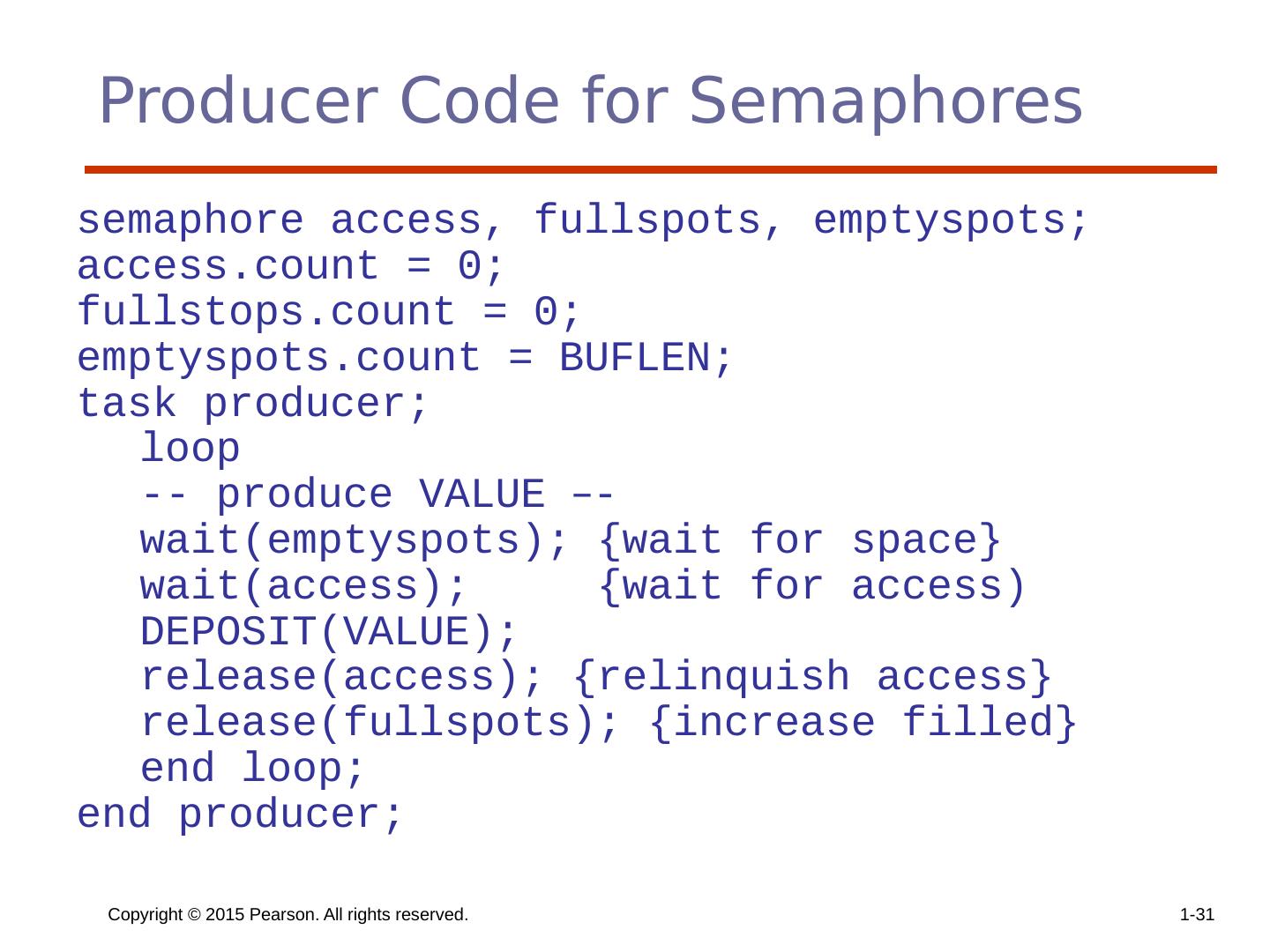

25 .Copyright © 2015 Pearson. All rights reserved. 1- 25 Cooperation Synchronization with Semaphores Example: A shared buffer The buffer is implemented as an ADT with the operations DEPOSIT and FETCH as the only ways to access the buffer Use two semaphores for cooperation: emptyspots and fullspots The semaphore counters are used to store the numbers of empty spots and full spots in the buffer

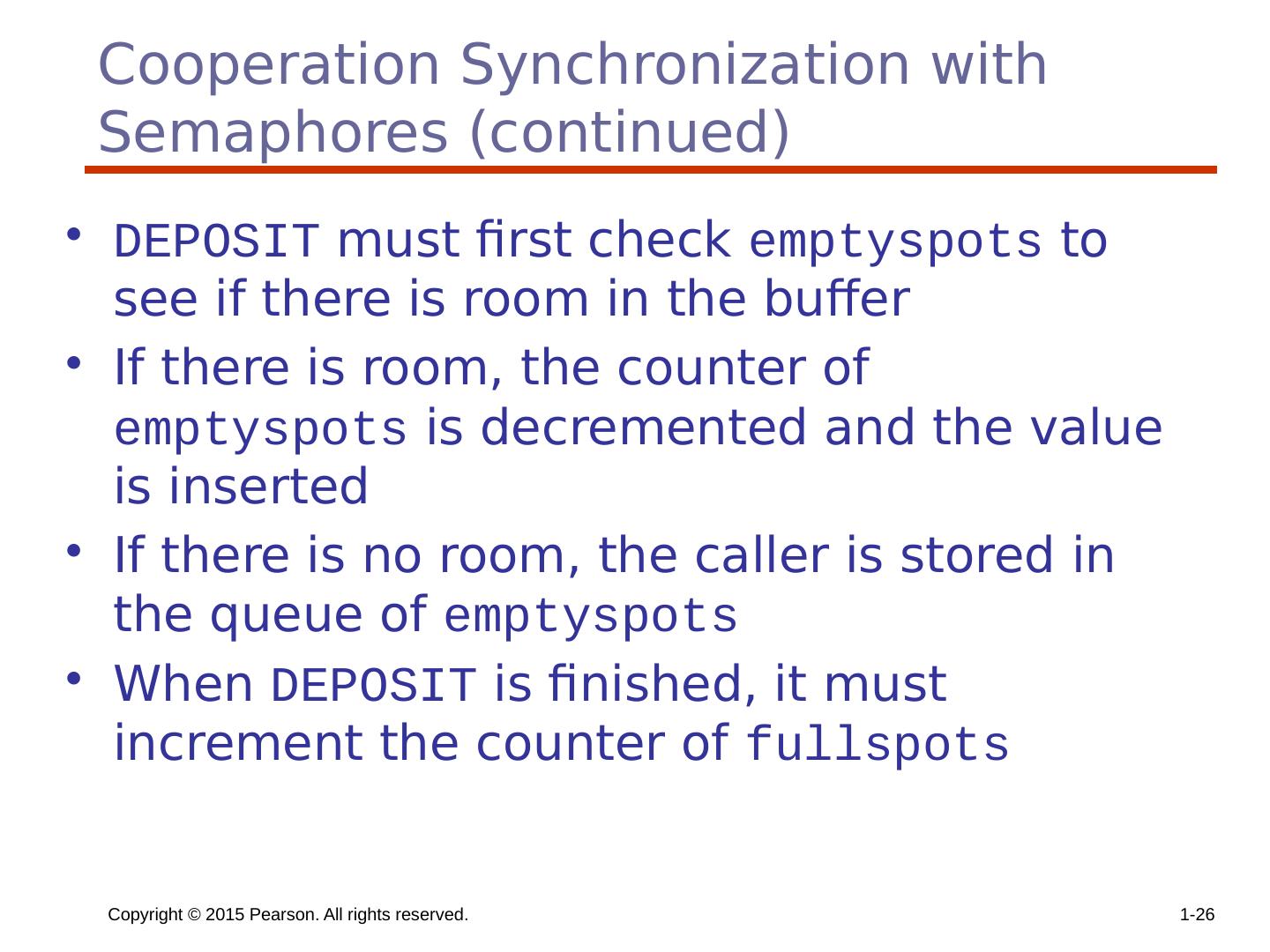

26 .Copyright © 2015 Pearson. All rights reserved. 1- 26 Cooperation Synchronization with Semaphores (continued) DEPOSIT must first check emptyspots to see if there is room in the buffer If there is room, the counter of emptyspots is decremented and the value is inserted If there is no room, the caller is stored in the queue of emptyspots When DEPOSIT is finished, it must increment the counter of fullspots

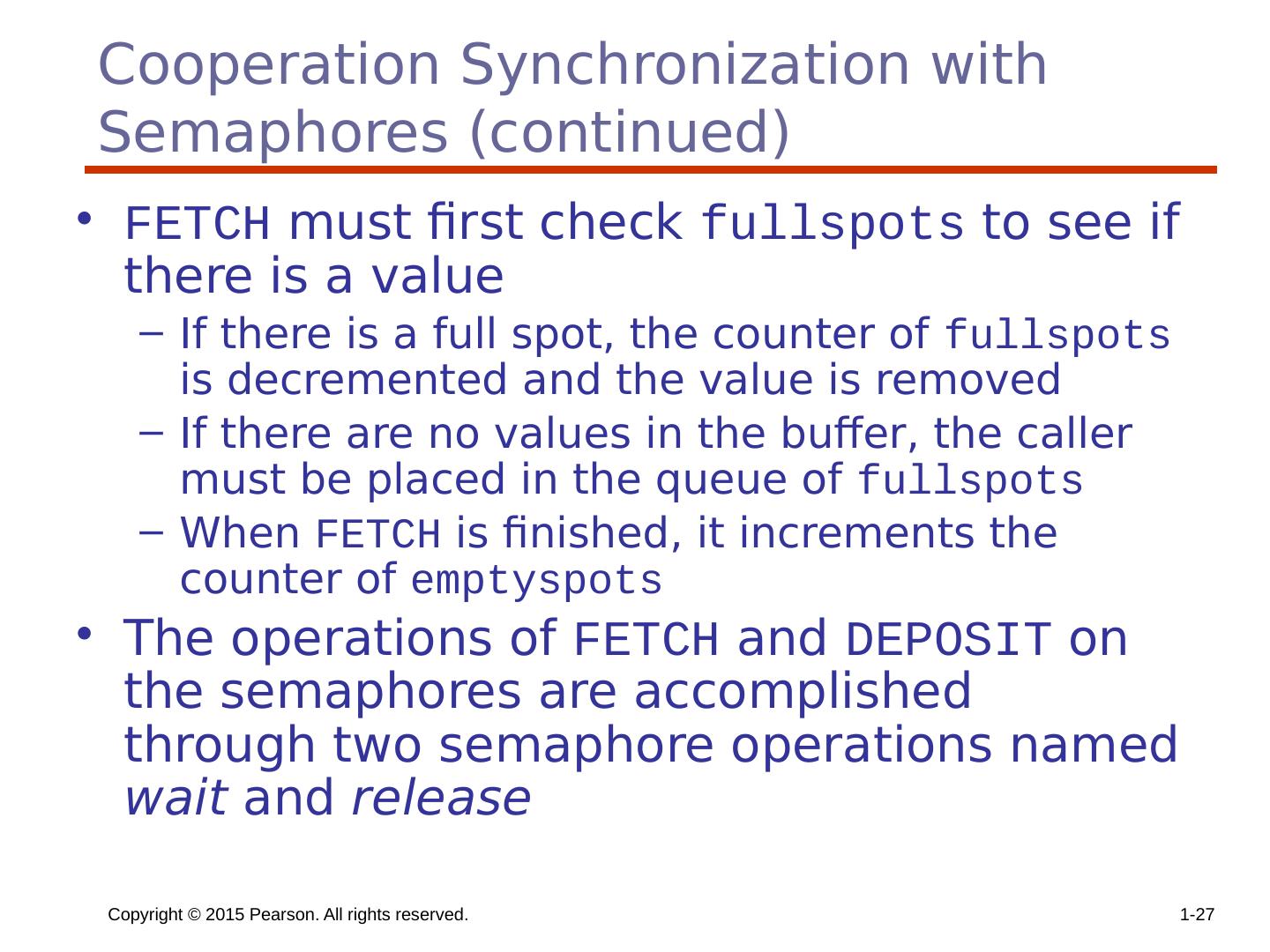

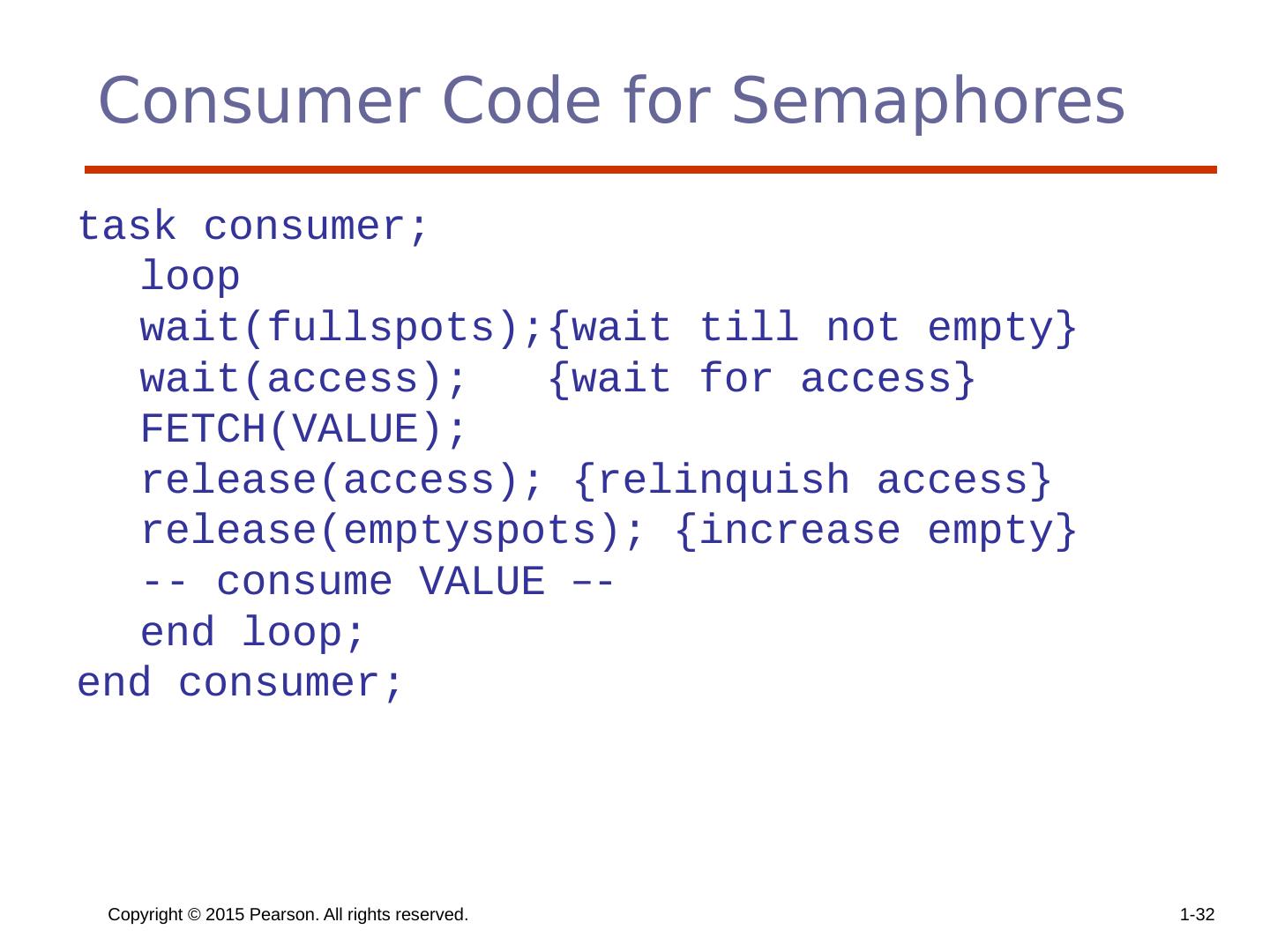

27 .Copyright © 2015 Pearson. All rights reserved. 1- 27 Cooperation Synchronization with Semaphores (continued) FETCH must first check fullspots to see if there is a value If there is a full spot, the counter of fullspots is decremented and the value is removed If there are no values in the buffer, the caller must be placed in the queue of fullspots When FETCH is finished, it increments the counter of emptyspots The operations of FETCH and DEPOSIT on the semaphores are accomplished through two semaphore operations named wait and release

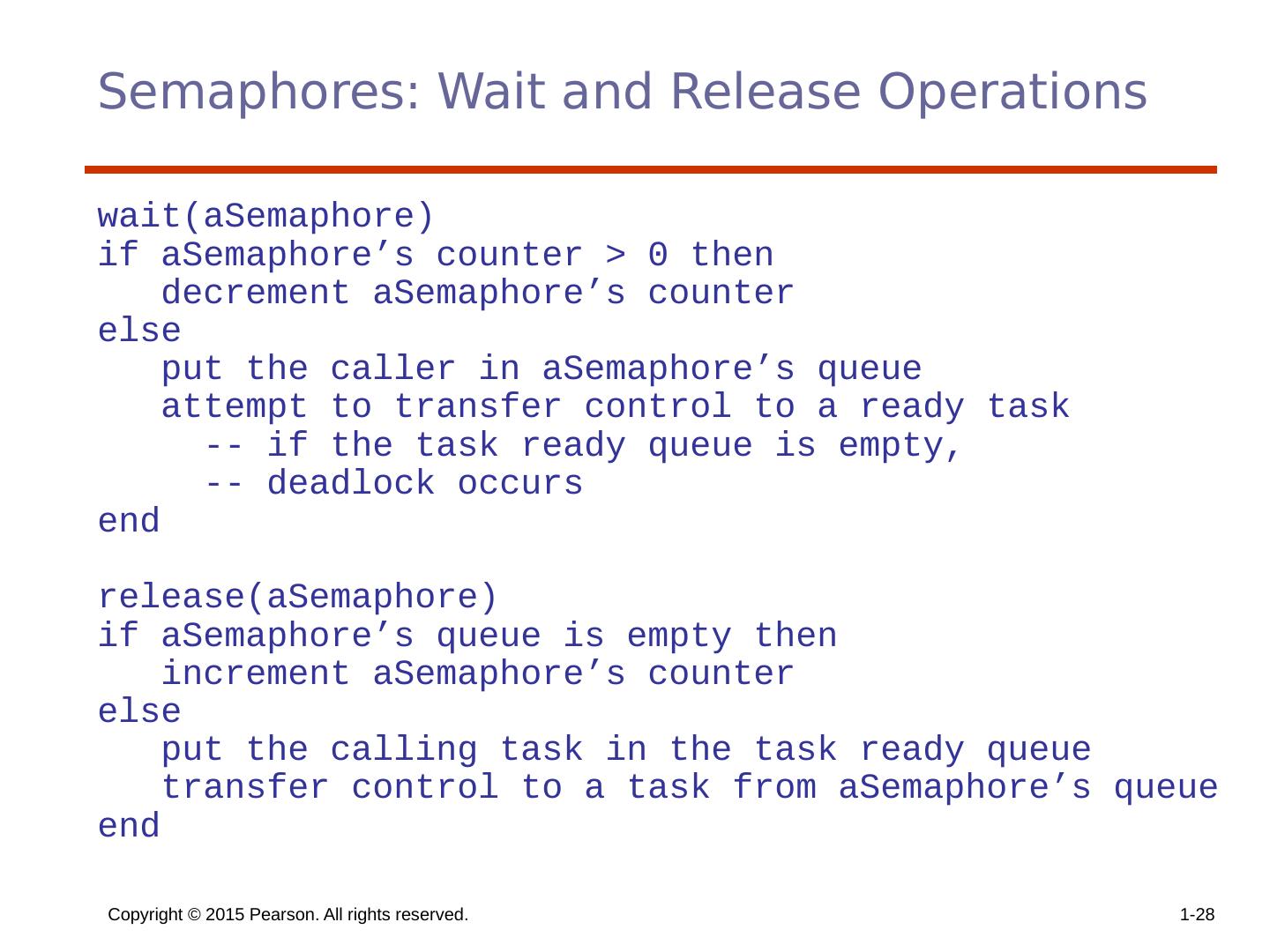

28 .Copyright © 2015 Pearson. All rights reserved. 1- 28 Semaphores: Wait and Release Operations wait(aSemaphore) if aSemaphore’s counter > 0 then decrement aSemaphore’s counter else put the caller in aSemaphore’s queue attempt to transfer control to a ready task -- if the task ready queue is empty, -- deadlock occurs end release(aSemaphore) if aSemaphore’s queue is empty then increment aSemaphore’s counter else put the calling task in the task ready queue transfer control to a task from aSemaphore’s queue end

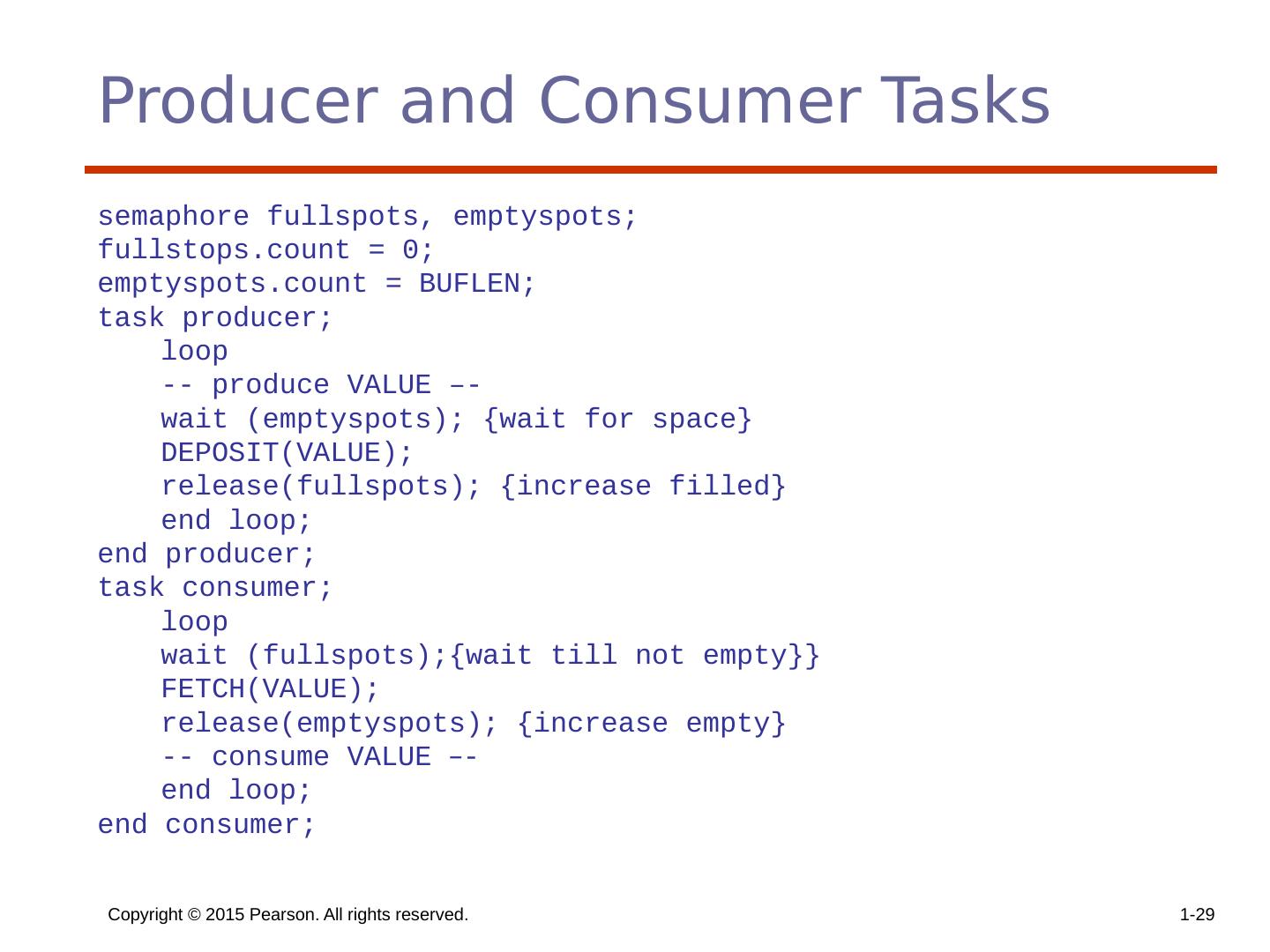

29 .Copyright © 2015 Pearson. All rights reserved. 1- 29 Producer and Consumer Tasks semaphore fullspots, emptyspots; fullstops.count = 0; emptyspots.count = BUFLEN; task producer; loop -- produce VALUE –- wait (emptyspots); {wait for space} DEPOSIT(VALUE); release(fullspots); {increase filled} end loop; end producer; task consumer; loop wait (fullspots);{wait till not empty}} FETCH(VALUE); release(emptyspots); {increase empty} -- consume VALUE – - end loop; end consumer;