TensorFlow-Clipper

1. TensorFlow and Clipper (Lecture 24, CS262A)

- Ali Ghodsi and Ion Stoica, UC Berkeley
- April 18, 2018

2. Today’s lecture

- Abadi et al., “TensorFlow: A System for Large-Scale Machine Learning”, OSDI 2016 (https://www.usenix.org/system/files/conference/osdi16/osdi16-abadi.pdf)
- Crankshaw et al., “Clipper: A Low-Latency Online Prediction Serving System”, NSDI 2017 (https://www.usenix.org/conference/nsdi17/technical-sessions/presentation/crankshaw)

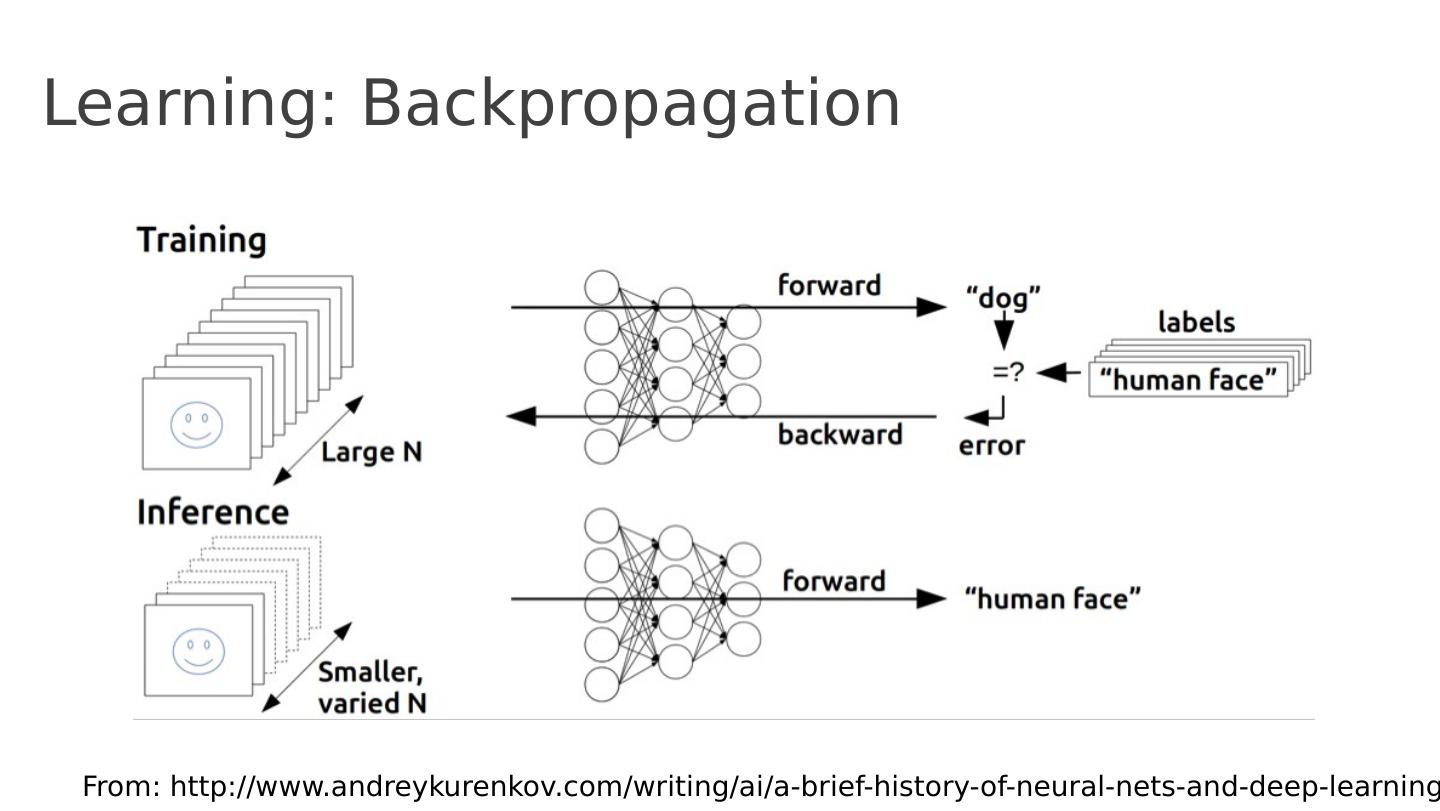

3. A short history of Neural Networks

- 1957: Perceptron (Frank Rosenblatt): a one-layer neural network
- 1959: first neural network to solve a real-world problem, i.e., eliminating echoes on phone lines (Widrow & Hoff)
- 1986: Backpropagation (Rumelhart, Hinton, Williams): learning a multi-layered network

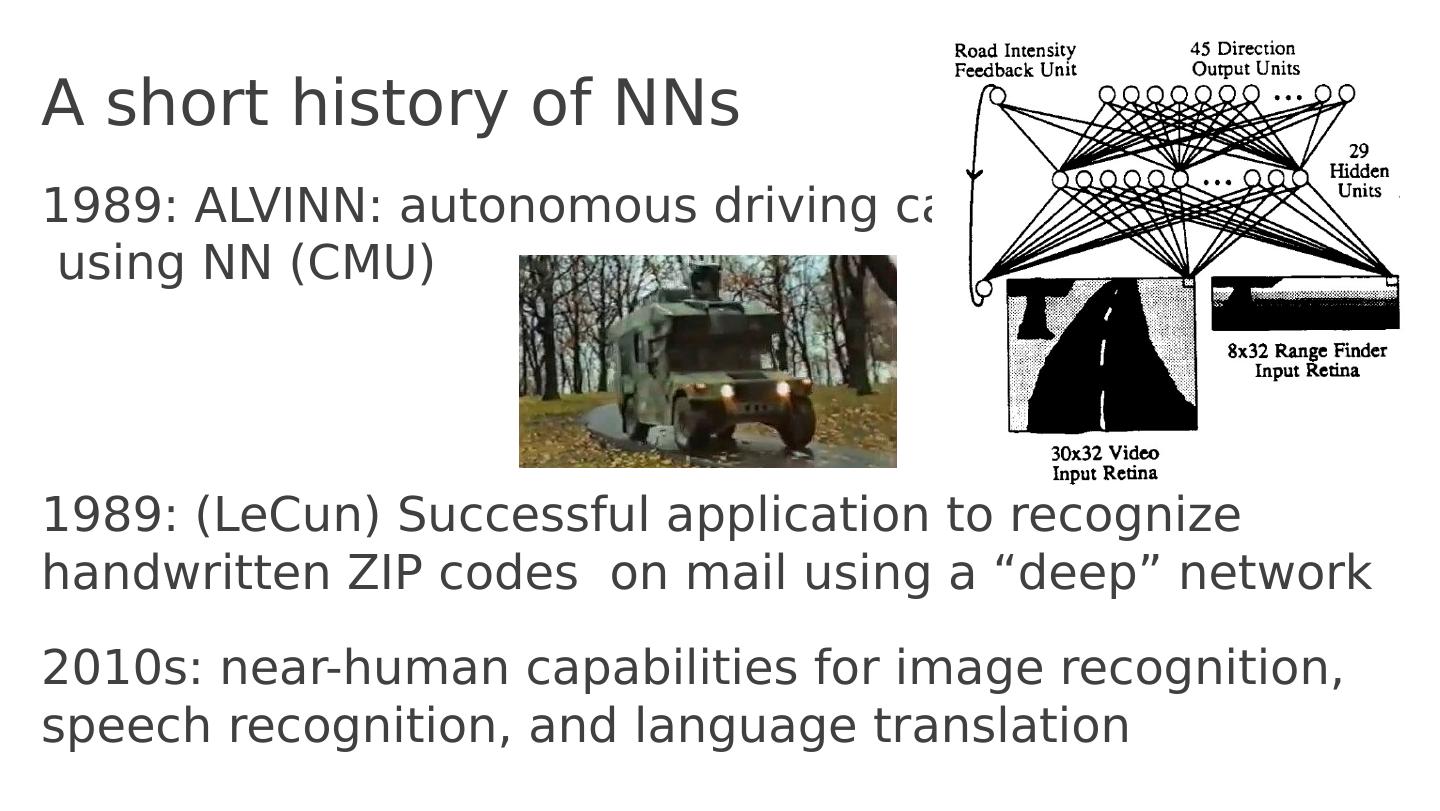

4. A short history of NNs

- 1989: ALVINN: autonomous driving car using a NN (CMU)
- 1989: (LeCun) successful application of a “deep” network to recognize handwritten ZIP codes on mail
- 2010s: near-human capabilities for image recognition, speech recognition, and language translation

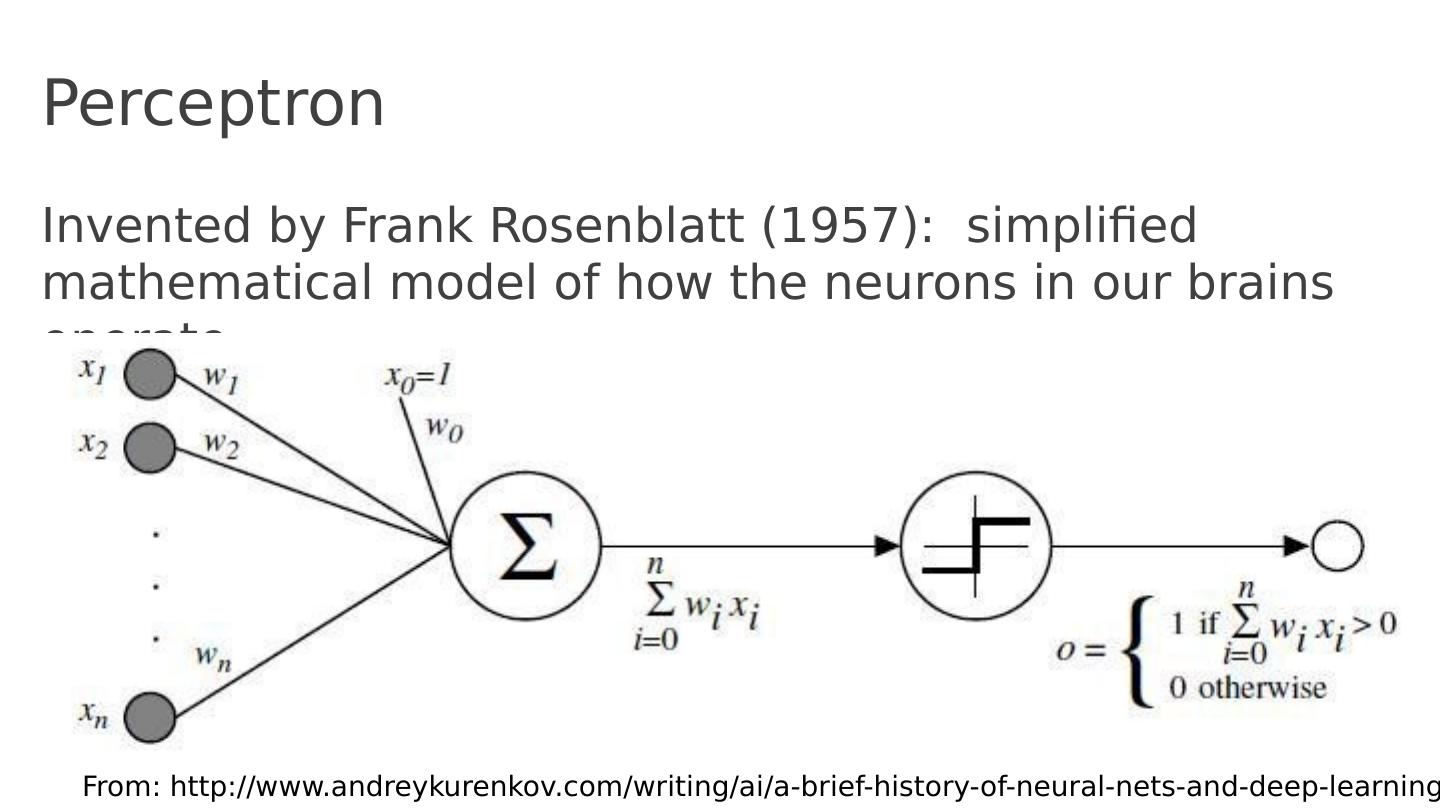

5. Perceptron

- Invented by Frank Rosenblatt (1957): a simplified mathematical model of how the neurons in our brains operate

From: http://www.andreykurenkov.com/writing/ai/a-brief-history-of-neural-nets-and-deep-learning/

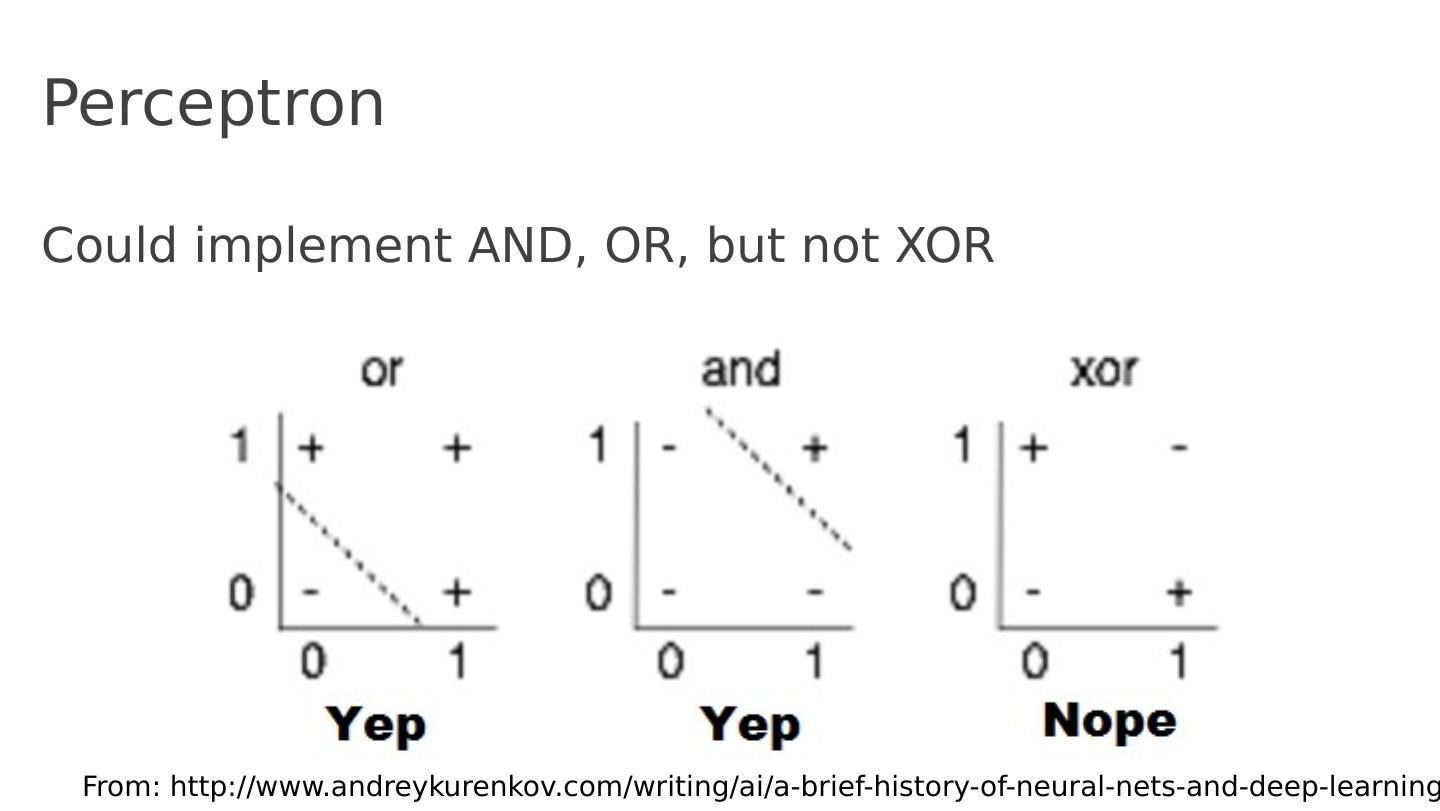

6. Perceptron

- Could implement AND and OR, but not XOR

From: http://www.andreykurenkov.com/writing/ai/a-brief-history-of-neural-nets-and-deep-learning/

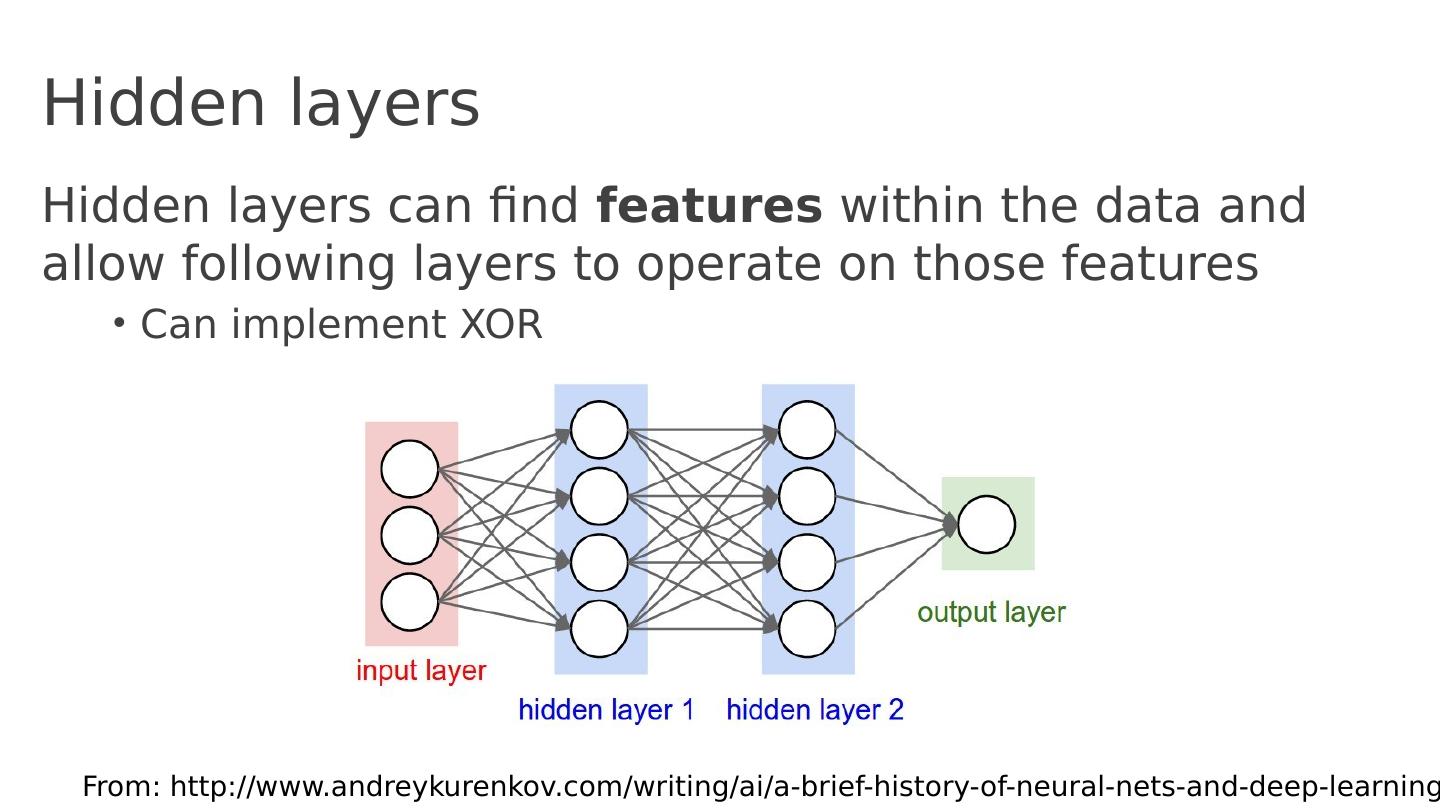
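The AND/OR/XOR claim can be checked with a few lines of plain Python. This is a minimal sketch, not the slide's own material: the step activation, the hand-picked weights, and the coarse search grid are all illustrative assumptions. The grid search only demonstrates that no setting on that grid reproduces XOR; the general result follows from XOR not being linearly separable.

```python
from itertools import product

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]

def perceptron(weights, bias, inputs):
    """Single-layer perceptron with a step activation: 1 if w.x + b > 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def truth_table(weights, bias):
    """Outputs of the perceptron on all four binary input pairs."""
    return [perceptron(weights, bias, x) for x in INPUTS]

# Hand-picked (illustrative) weights for AND and OR.
AND_TABLE = truth_table((1, 1), -1.5)
OR_TABLE = truth_table((1, 1), -0.5)

# Search a coarse grid of weights and biases for any setting that yields XOR.
GRID = [i / 2 for i in range(-8, 9)]  # -4.0, -3.5, ..., 4.0
XOR_FOUND = any(
    truth_table((w1, w2), b) == [0, 1, 1, 0]
    for w1, w2, b in product(GRID, repeat=3)
)

print(AND_TABLE, OR_TABLE, XOR_FOUND)
```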

7. Hidden layers

- Hidden layers can find features within the data and allow following layers to operate on those features
- Can implement XOR

From: http://www.andreykurenkov.com/writing/ai/a-brief-history-of-neural-nets-and-deep-learning/
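A hedged sketch of how one hidden layer makes XOR expressible: the two hidden units below compute OR and NAND of the inputs, and the output unit takes the AND of those two features. The weights are chosen by hand for illustration (an assumption, not learned and not from the slides).

```python
def step(z):
    """Step activation: fires when the weighted sum is positive."""
    return 1 if z > 0 else 0

def xor_net(x1, x2):
    # Hidden layer: two features extracted from the raw inputs.
    h_or = step(x1 + x2 - 0.5)      # 1 if at least one input is 1
    h_nand = step(-x1 - x2 + 1.5)   # 1 unless both inputs are 1
    # Output layer: AND of the two hidden features gives XOR.
    return step(h_or + h_nand - 1.5)

XOR_TABLE = [xor_net(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(XOR_TABLE)
```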

8. Learning: Backpropagation

From: http://www.andreykurenkov.com/writing/ai/a-brief-history-of-neural-nets-and-deep-learning/

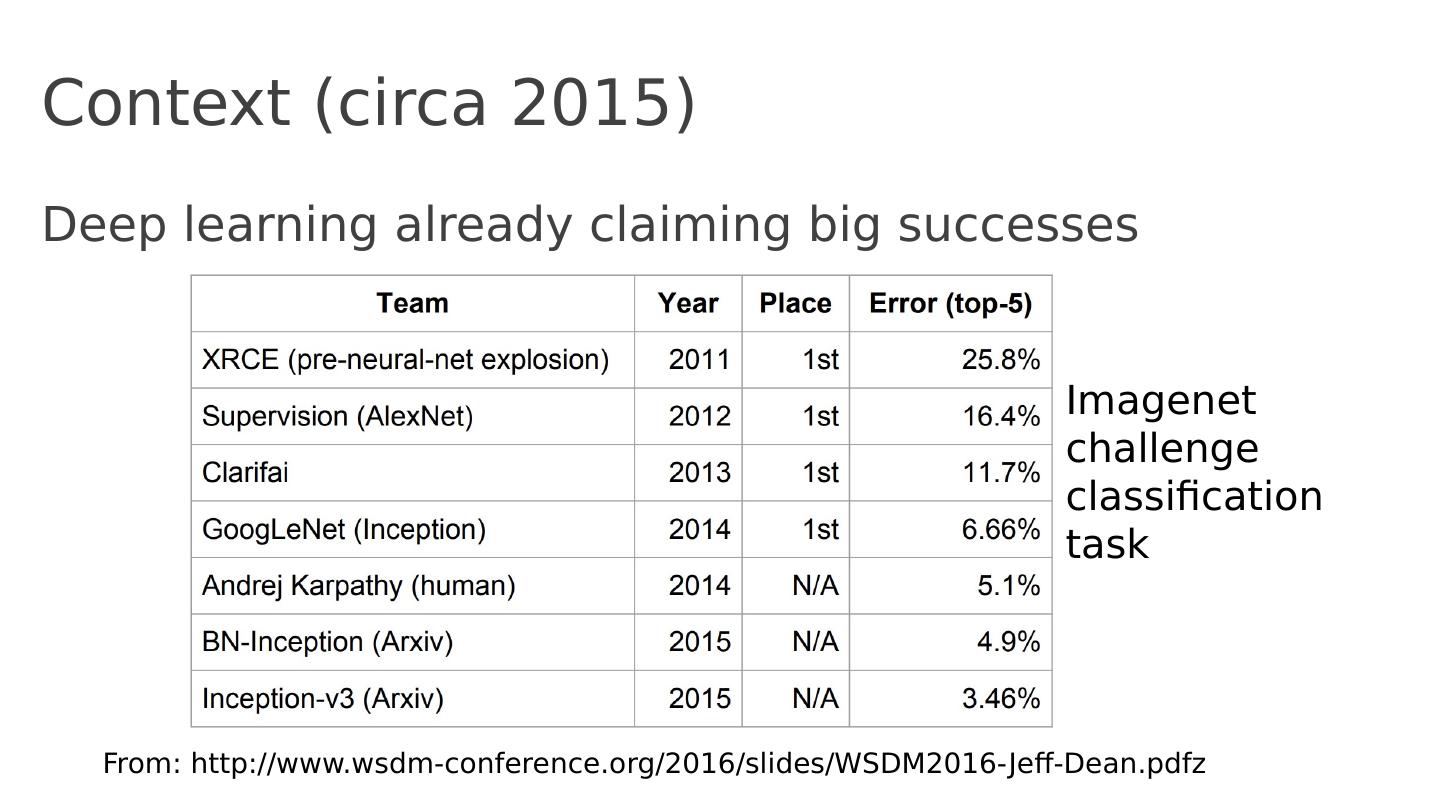

9. Context (circa 2015)

- Deep learning already claiming big successes
- ImageNet challenge classification task

From: http://www.wsdm-conference.org/2016/slides/WSDM2016-Jeff-Dean.pdf

10. Context (circa 2015)

- Deep learning already claiming big successes
- Number of developers/researchers exploding
- A “zoo” of tools and libraries, some of questionable quality …

11. What is TensorFlow?

- Open source library for numerical computation using data flow graphs
- Developed by the Google Brain Team to conduct machine learning research
- Based on DistBelief, used internally at Google since 2011
- “TensorFlow is an interface for expressing machine learning algorithms, and an implementation for executing such algorithms”

12. What is TensorFlow

- Key idea: express a numeric computation as a graph
- Graph nodes are operations with any number of inputs and outputs
- Graph edges are tensors which flow between nodes

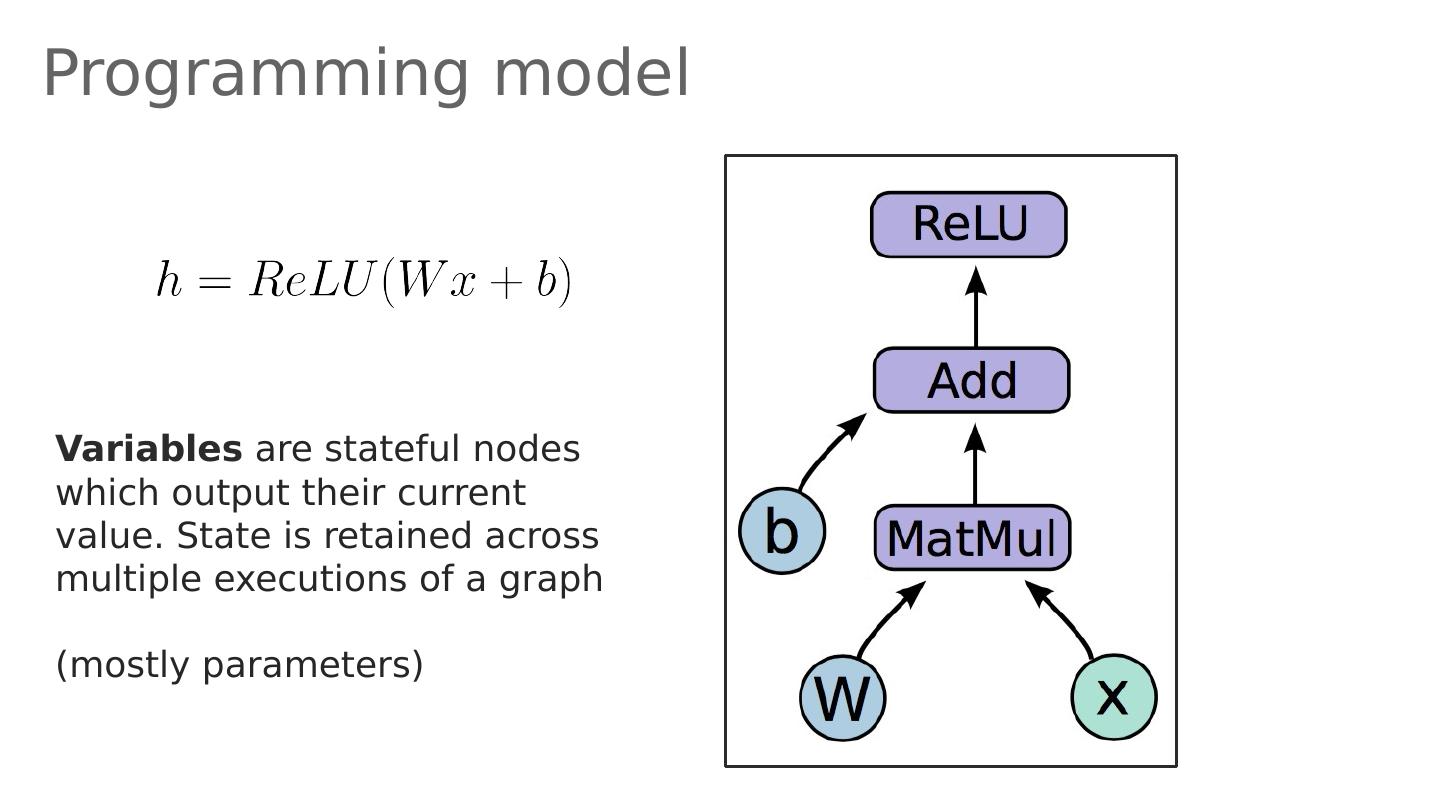

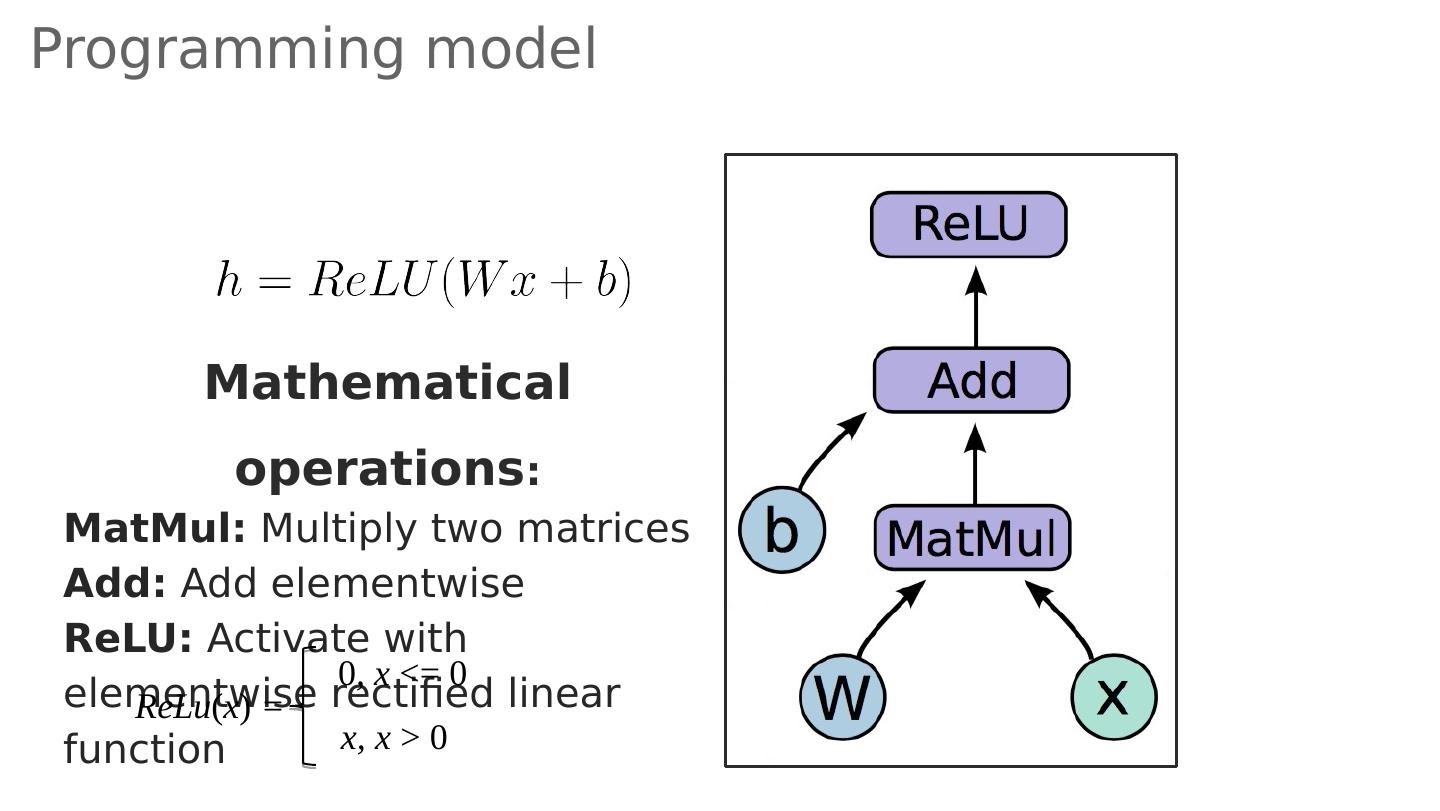
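The graph idea can be sketched in plain Python. This is a toy illustration under stated assumptions, not TensorFlow's implementation: the `Node` class and `constant` helper are hypothetical names, and scalars stand in for the tensors that flow along edges.

```python
class Node:
    """A graph node: an operation plus the nodes whose outputs feed it."""

    def __init__(self, op, inputs=()):
        self.op = op
        self.inputs = tuple(inputs)

    def evaluate(self):
        # Values flow along edges: evaluate the inputs, then apply this op.
        args = [node.evaluate() for node in self.inputs]
        return self.op(*args)

def constant(value):
    """A node with no inputs that always outputs the same value."""
    return Node(lambda: value)

# Build the graph for (a + b) * c. Nothing is computed at this point.
a, b, c = constant(2.0), constant(3.0), constant(4.0)
total = Node(lambda x, y: x + y, [a, b])
product = Node(lambda x, y: x * y, [total, c])

# Computation happens only when the graph is evaluated.
result = product.evaluate()
print(result)
```

Separating graph construction from evaluation is what lets a real system inspect and optimize the whole graph before running it.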

13. Programming model

14. Programming model

- Variables are stateful nodes which output their current value
- State is retained across multiple executions of a graph (mostly parameters)

15. Programming model

- Placeholders are nodes whose value is fed in at execution time (inputs, labels, …)
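The difference between the two node kinds can be shown with a toy model. This is a sketch under assumptions: the `Variable`, `Placeholder`, and `run` names below are hypothetical stand-ins written for illustration, not TensorFlow classes. The variable keeps its value between executions, while the placeholder has no value until one is fed in.

```python
class Placeholder:
    """A node with no value of its own; it must be fed at execution time."""

    def __init__(self, name):
        self.name = name

class Variable:
    """A stateful node; its value persists across executions."""

    def __init__(self, value):
        self.value = value

def run(update, variable, placeholder, feed_dict):
    """One execution: read the fed input, update the variable, return its value."""
    fed = feed_dict[placeholder]
    variable.value = update(variable.value, fed)
    return variable.value

x = Placeholder("x")
running_sum = Variable(0.0)

def accumulate(state, value):
    return state + value

first = run(accumulate, running_sum, x, {x: 1.5})   # state starts at 0.0
second = run(accumulate, running_sum, x, {x: 2.0})  # state carried over
print(first, second)
```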

16. Programming model

- Mathematical operations:
  - MatMul: multiply two matrices
  - Add: add elementwise
  - ReLU: activate with the elementwise rectified linear function, ReLU(x) = 0 if x <= 0, and x if x > 0

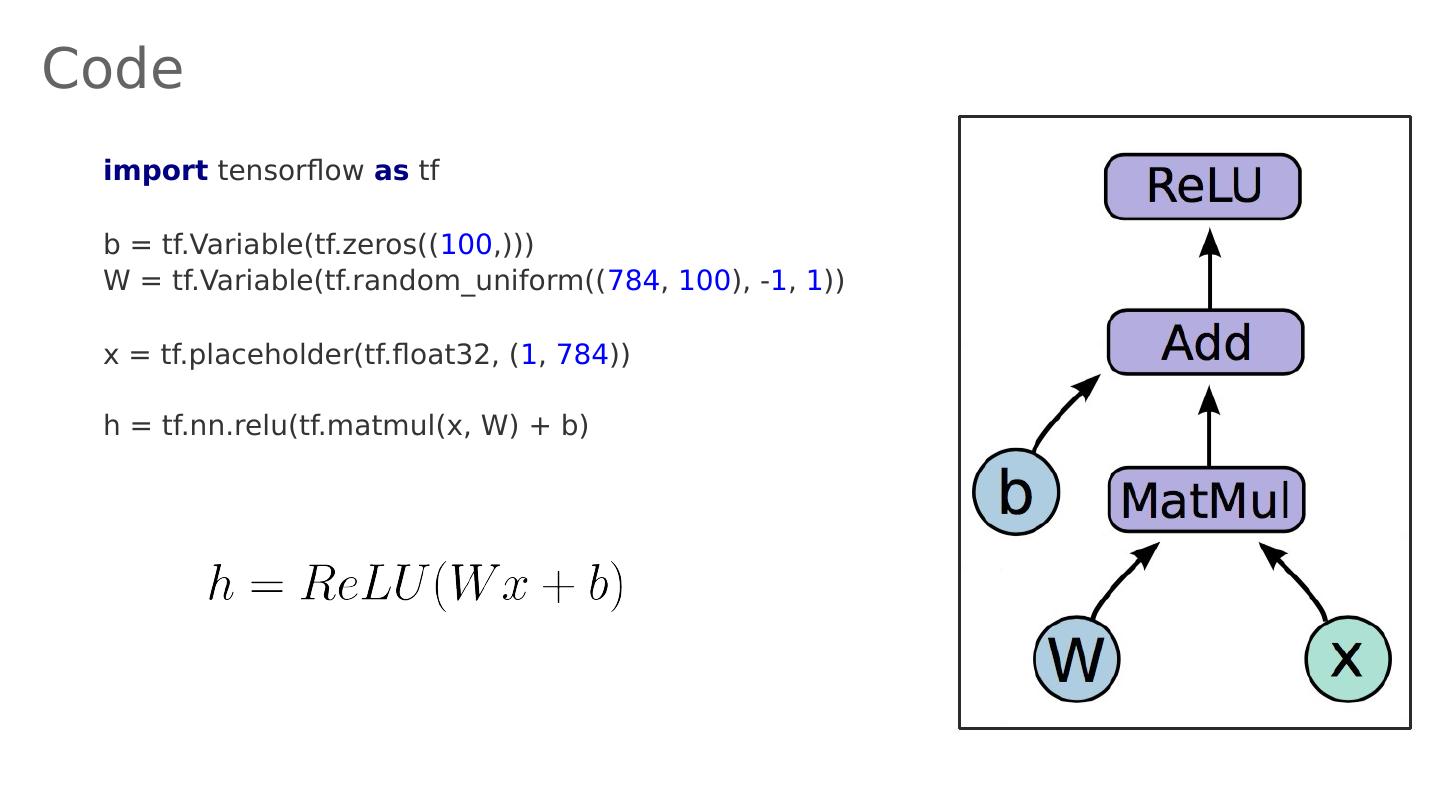
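To make the three operations concrete, here is a minimal pure-Python sketch that works on lists of rows. The function names mirror the slide's operation names, but the implementations and the small example matrices are illustrative assumptions, not TensorFlow kernels.

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def add(a, bias):
    """Add a bias vector to every row of a, element by element."""
    return [[v + bv for v, bv in zip(row, bias)] for row in a]

def relu(a):
    """Elementwise rectified linear function: max(0, v)."""
    return [[max(0.0, v) for v in row] for row in a]

x = [[1.0, 2.0]]               # 1 x 2 input
W = [[0.5, -1.0, 2.0],
     [1.0, 0.5, 1.0]]          # 2 x 3 weights
b = [0.0, -1.0, -0.5]          # bias, one entry per output column

pre_activation = add(matmul(x, W), b)
h = relu(pre_activation)       # negative entries are clipped to 0
print(pre_activation, h)
```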

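17. Code

        # TensorFlow 1.x graph-mode API, as on the original slide
        import tensorflow as tf

        b = tf.Variable(tf.zeros((100,)))                      # bias, one per hidden unit
        W = tf.Variable(tf.random_uniform((784, 100), -1, 1))  # weights, uniform in [-1, 1)
        x = tf.placeholder(tf.float32, (1, 784))               # input, fed at execution time
        h = tf.nn.relu(tf.matmul(x, W) + b)                    # hidden layer: ReLU(xW + b)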

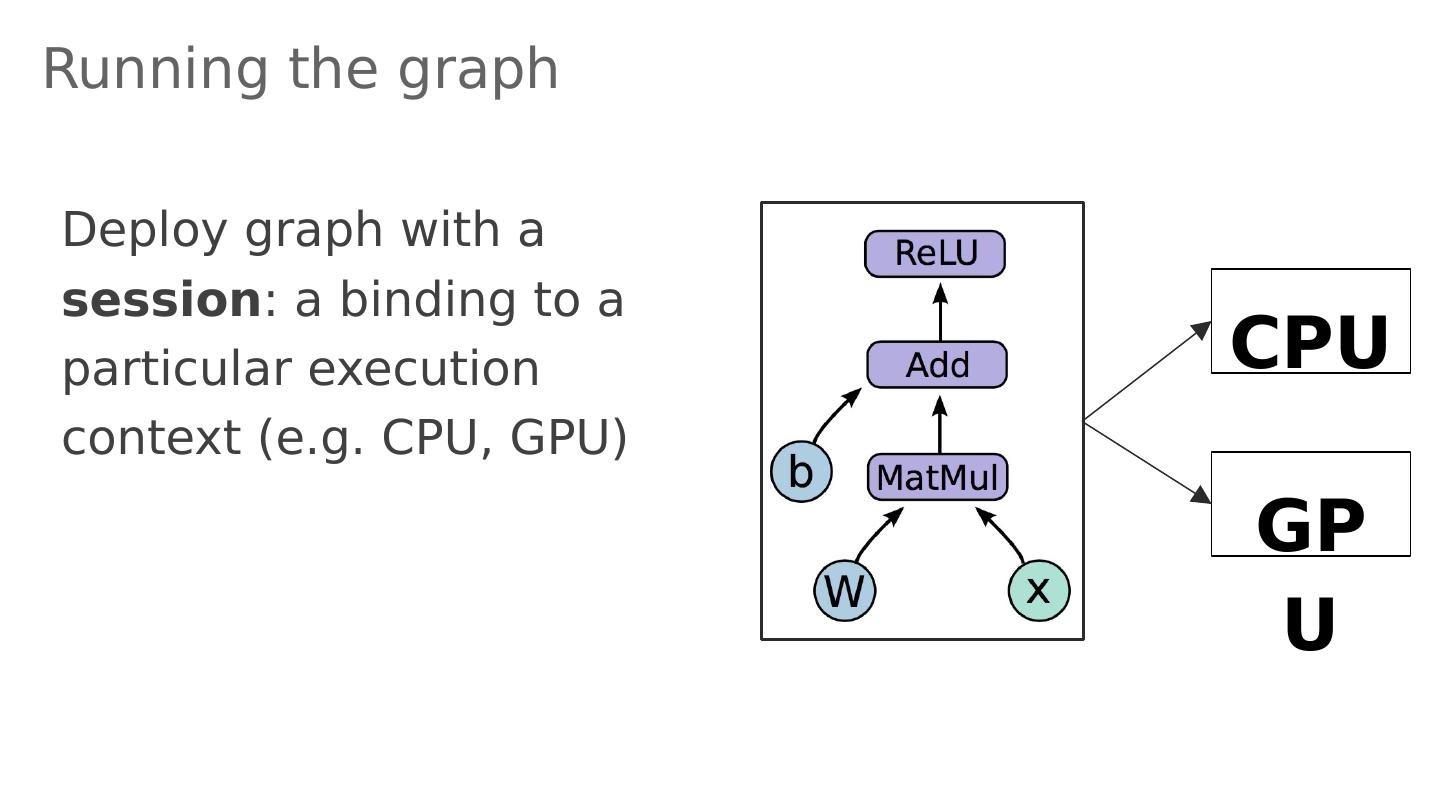

18. Running the graph

- Deploy the graph with a session: a binding to a particular execution context (e.g. CPU, GPU)

19. End-to-end

- So far:
  - Built a graph using variables and placeholders
  - Deployed the graph onto a session, i.e., an execution environment
- Next: train the model
  - Define a loss function
  - Compute gradients

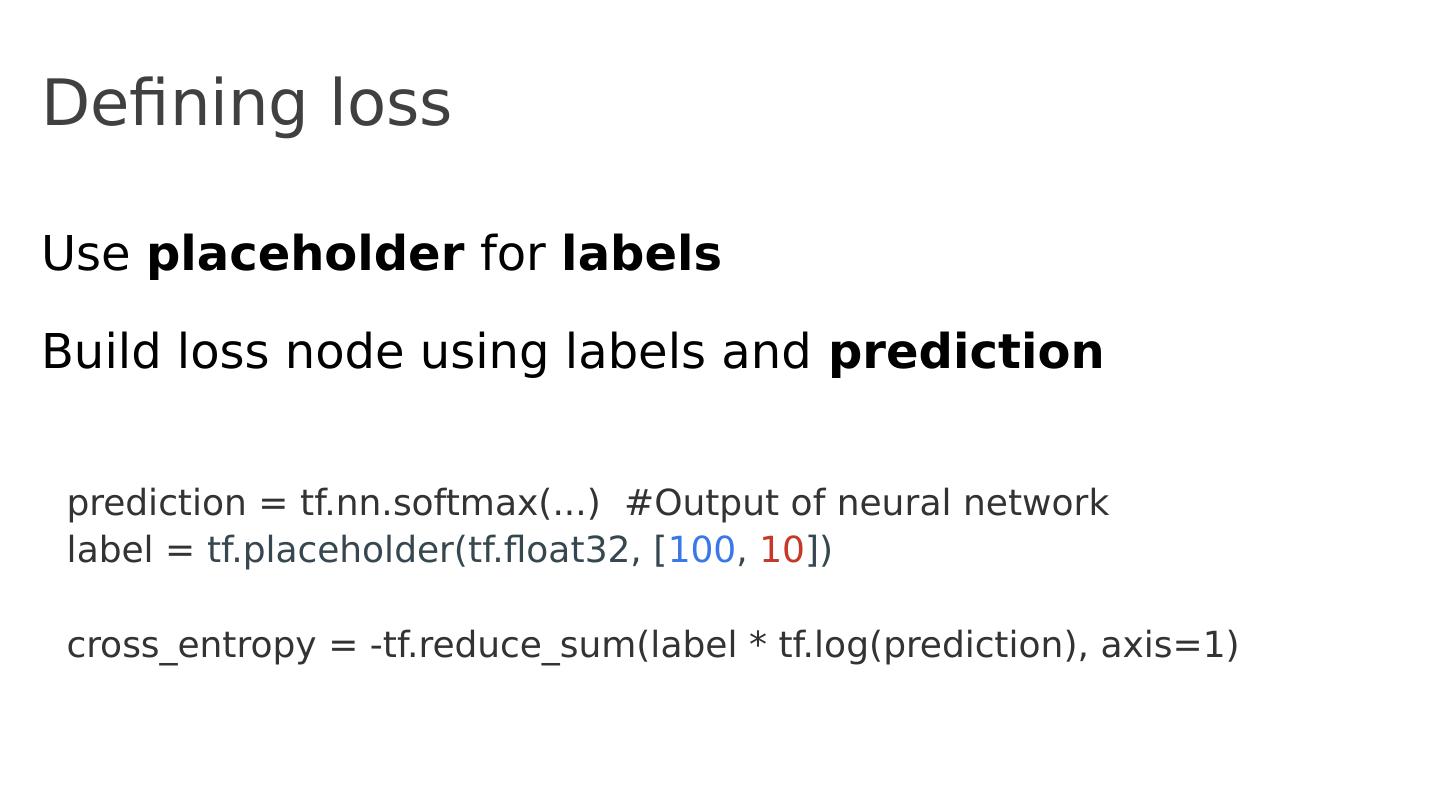

20. Defining loss

- Use a placeholder for labels
- Build a loss node using labels and prediction

        prediction = tf.nn.softmax(...)  # Output of neural network
        label = tf.placeholder(tf.float32, [100, 10])
        cross_entropy = -tf.reduce_sum(label * tf.log(prediction), axis=1)
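The arithmetic behind the loss node can be followed by hand for a single example. The sketch below is a plain-Python illustration, not the TensorFlow code path: `softmax` and `cross_entropy` are written here from the standard formulas, and the logits and one-hot label are made-up values.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(label, prediction):
    """-sum(label * log(prediction)) for one example, as in the slide's formula."""
    return -sum(l * math.log(p) for l, p in zip(label, prediction))

label = [1.0, 0.0, 0.0]                     # one-hot: the true class is class 0
prediction = softmax([2.0, 1.0, 0.1])       # hypothetical network output
loss = cross_entropy(label, prediction)

# With equal scores every class gets probability 1/3, so the loss is log(3).
uniform_loss = cross_entropy(label, softmax([0.0, 0.0, 0.0]))
print(loss, uniform_loss)
```

With a one-hot label, only the true class contributes, so the loss reduces to the negative log of the probability assigned to that class.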

21. Gradient computation: Backpropagation

        train_step = tf.train.GradientDescentOptimizer(0.5).minimize(cross_entropy)

- tf.train.GradientDescentOptimizer is an Optimizer object
- tf.train.GradientDescentOptimizer(lr).minimize(cross_entropy) adds an optimization operation to the computation graph
- TensorFlow graph nodes have attached gradient operations
- Gradients with respect to parameters are computed with backpropagation, automatically
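The idea that each graph node carries its own gradient operation can be sketched with a tiny reverse-mode autodiff class. This is an illustrative toy, not TensorFlow's mechanism: the `Value` class, the scalar-only operations, and the one-parameter fitting problem (find w such that w * 2 equals 6) are all assumptions made for the example.

```python
class Value:
    """A scalar graph node that knows how to pass gradients to its inputs."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self.parents = parents
        self.grad_fns = grad_fns  # one local-gradient function per parent

    def __add__(self, other):
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Order nodes so each is processed after everything that depends on it.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, grad_fn in zip(node.parents, node.grad_fns):
                parent.grad += grad_fn(node.grad)  # chain rule

def gradient_at(w):
    """Gradient of the loss (w * 2 - 6)^2 with respect to w."""
    w_node, x, neg_y = Value(w), Value(2.0), Value(-6.0)
    error = w_node * x + neg_y
    loss = error * error
    loss.backward()
    return w_node.grad

def train(steps=20, lr=0.1):
    """Plain gradient descent on the single parameter w."""
    w = 0.0
    for _ in range(steps):
        w -= lr * gradient_at(w)
    return w

print(gradient_at(0.0), train())
```

The optimizer's `minimize` call on the slide plays the role of `train` here: it adds the gradient and update steps so the user never writes derivatives by hand.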

22. Design Principles

- Dataflow graphs of primitive operators
- Deferred execution (two phases)
  - Define the program, i.e., a symbolic dataflow graph with placeholders
  - Execute an optimized version of the program on the set of available devices
- Common abstraction for heterogeneous accelerators
  - Issue a kernel for execution
  - Allocate memory for inputs and outputs
  - Transfer buffers to and from host memory

23. Dynamic Flow Control

- Problem: support ML algorithms that contain conditional and iterative control flow, e.g.
  - Recurrent Neural Networks (RNNs)
  - Long Short-Term Memory (LSTM)
- Solution: add conditional (if statement) and iterative (while loop) programming constructs

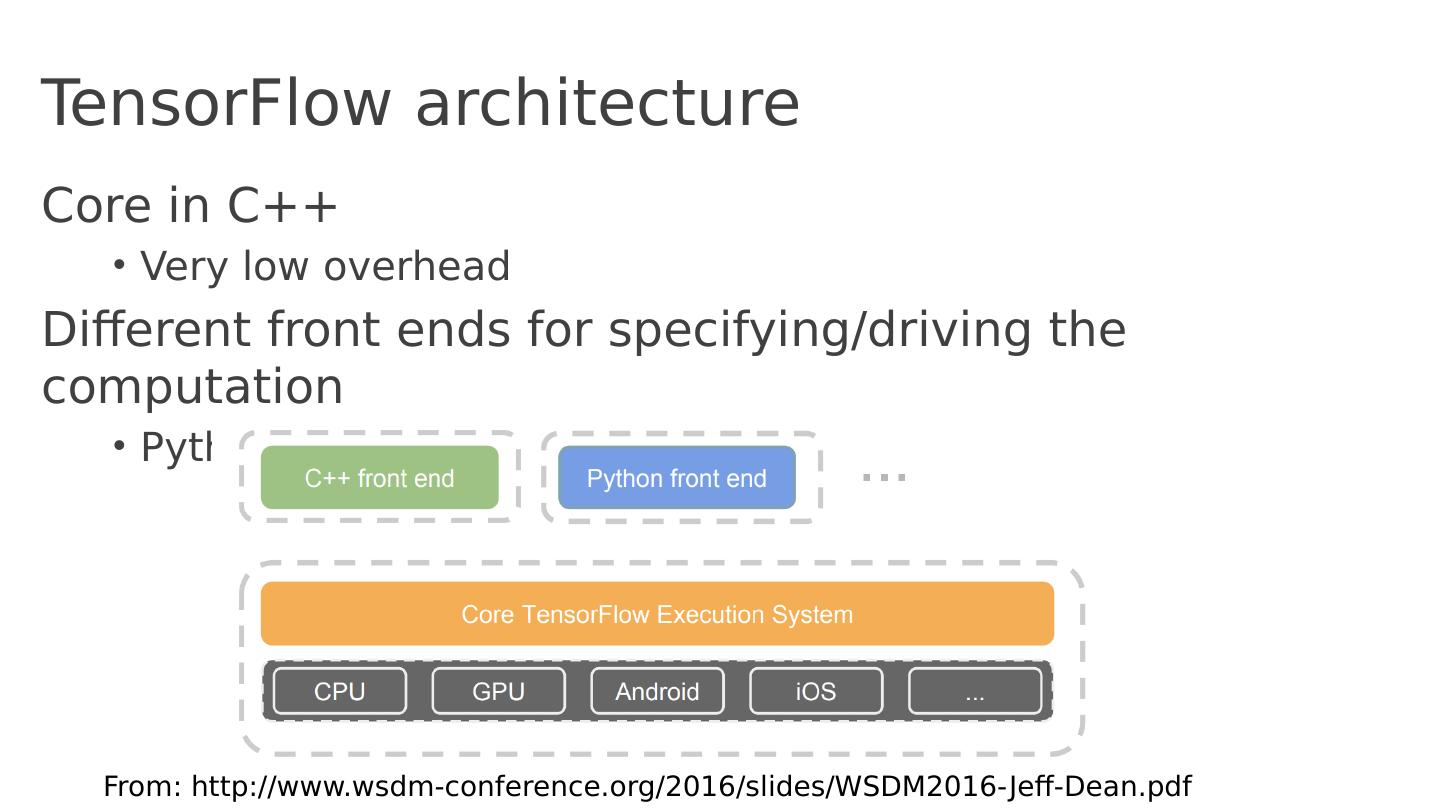
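A rough sketch of what the two constructs provide, written as ordinary Python functions. The names `cond` and `while_loop` are loosely modeled on TensorFlow's `tf.cond` and `tf.while_loop`, but the bodies below are plain-Python stand-ins with simplified signatures, and the toy recurrent cell is a hypothetical example.

```python
def cond(pred, true_fn, false_fn):
    """Run exactly one branch, chosen at execution time."""
    return true_fn() if pred else false_fn()

def while_loop(cond_fn, body_fn, state):
    """Apply body_fn to the loop state until cond_fn(state) is false."""
    while cond_fn(*state):
        state = body_fn(*state)
    return state

def run_rnn(inputs, weight=0.5):
    """A toy recurrent cell applied over an input sequence of any length."""

    def not_done(step, hidden):
        return step < len(inputs)

    def cell(step, hidden):
        # New hidden state mixes the old state with the current input.
        return step + 1, weight * hidden + inputs[step]

    _, hidden = while_loop(not_done, cell, (0, 0.0))
    return hidden

final_hidden = run_rnn([1.0, 2.0, 3.0])
branch = cond(final_hidden > 4.0, lambda: "high", lambda: "low")
print(final_hidden, branch)
```

The loop length depends on the input sequence, which is why a fixed, fully unrolled graph is not enough for recurrent models.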

24. TensorFlow high-level architecture

- Core in C++
  - Very low overhead
- Different front ends for specifying/driving the computation
  - Python and C++ today, easy to add more

From: http://www.wsdm-conference.org/2016/slides/WSDM2016-Jeff-Dean.pdf

25. TensorFlow architecture

- Core in C++
  - Very low overhead
- Different front ends for specifying/driving the computation
  - Python and C++ today, easy to add more

From: http://www.wsdm-conference.org/2016/slides/WSDM2016-Jeff-Dean.pdf

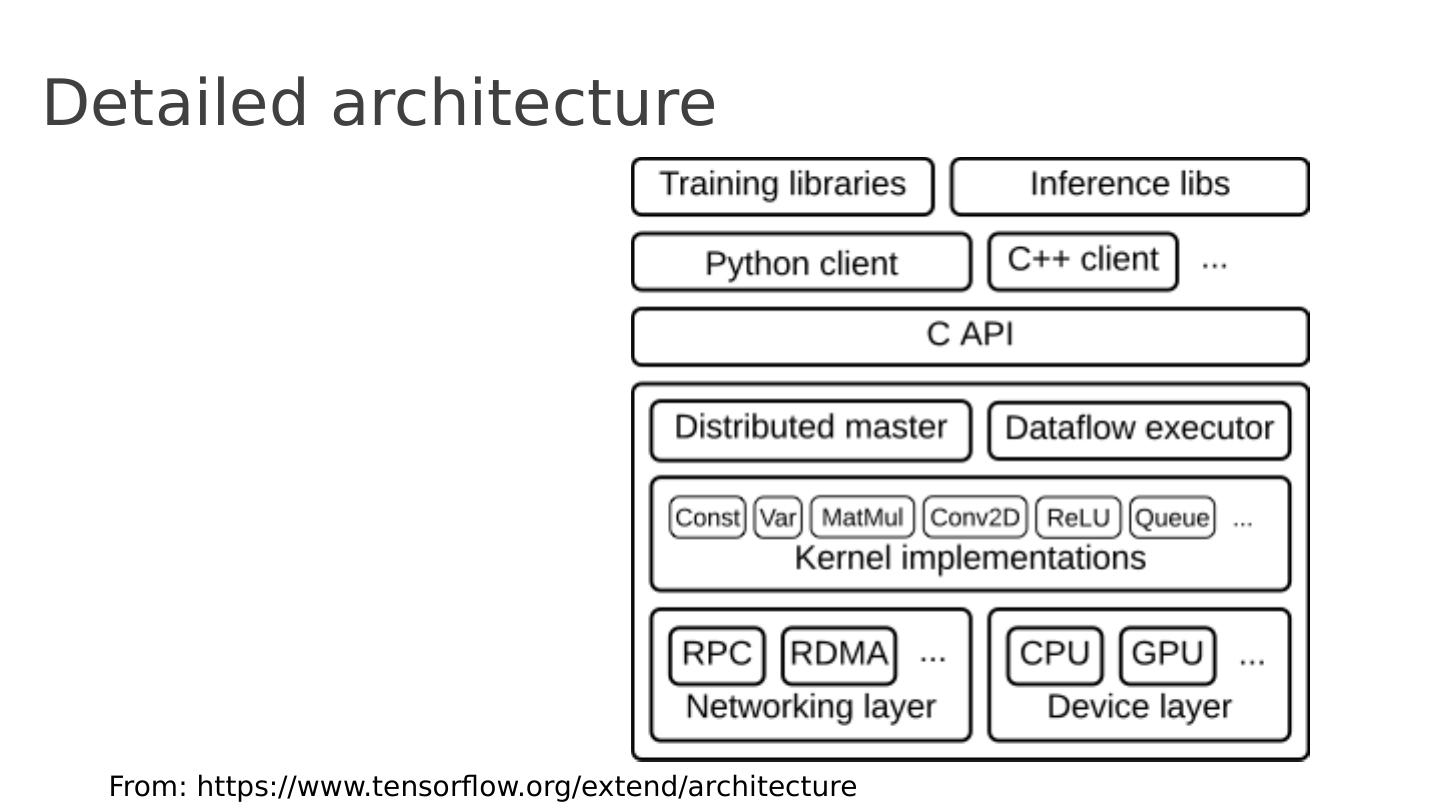

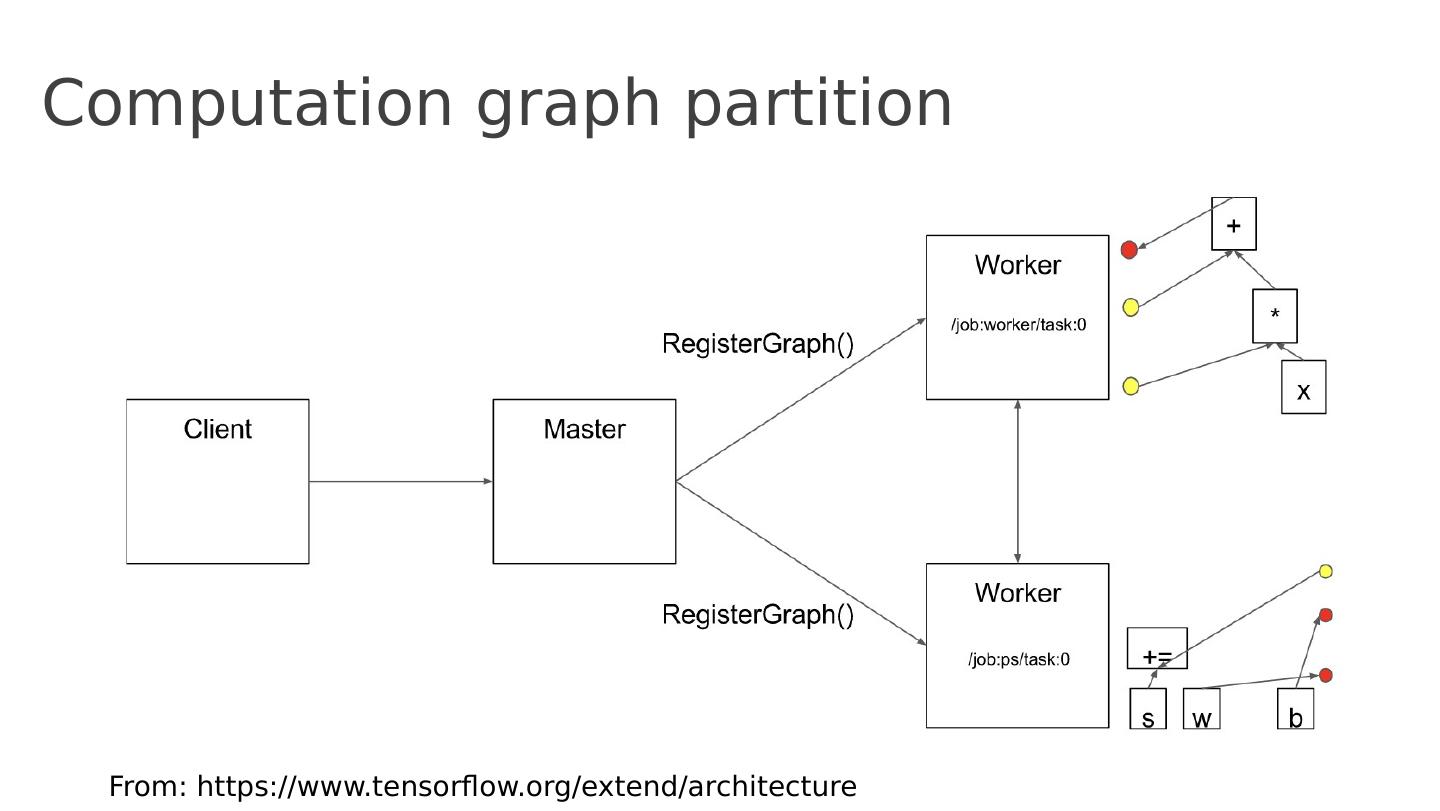

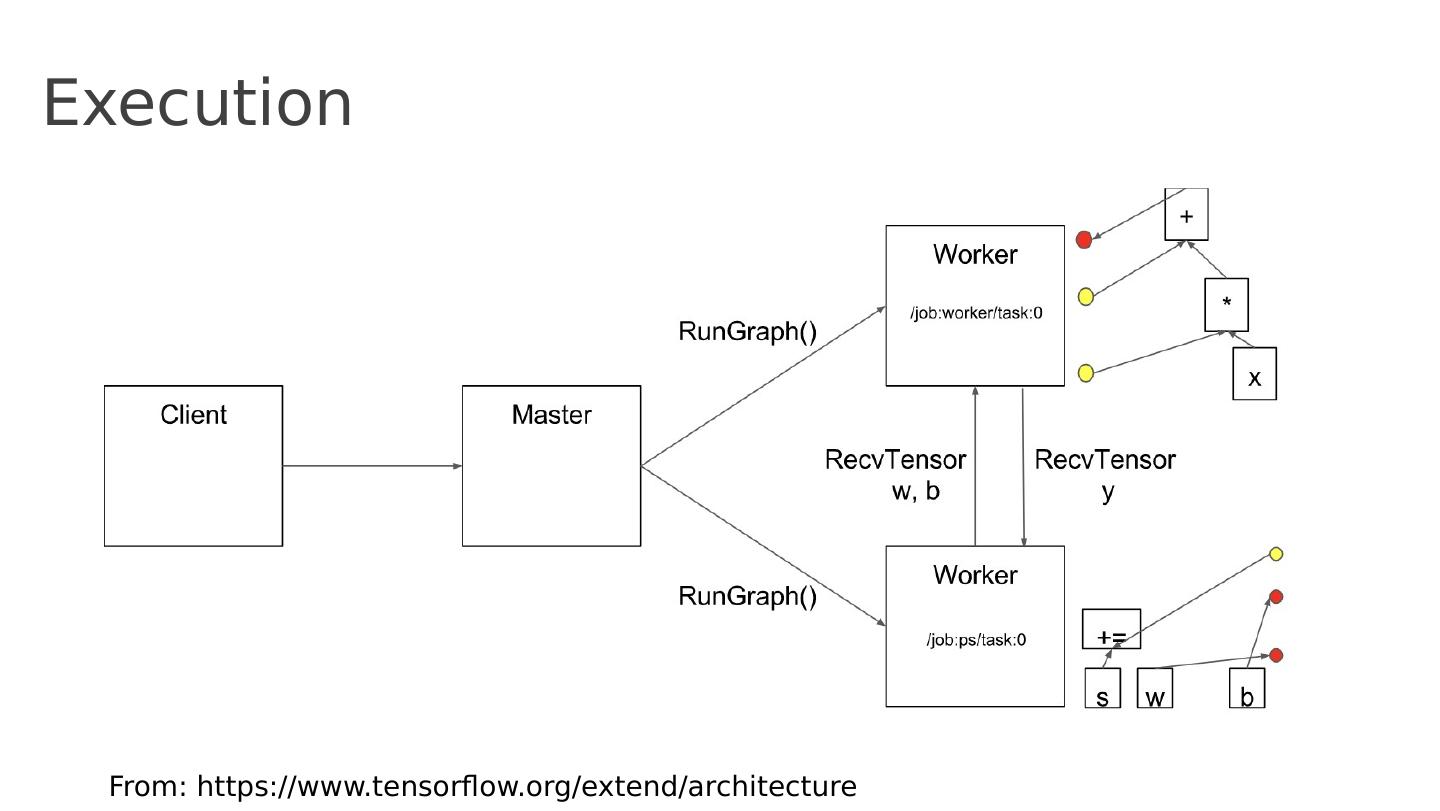

26. Detailed architecture

From: https://www.tensorflow.org/extend/architecture

27. Key components

- Similar to MapReduce, Apache Hadoop, Apache Spark, …

From: https://www.tensorflow.org/extend/architecture

28. Client

From: https://www.tensorflow.org/extend/architecture

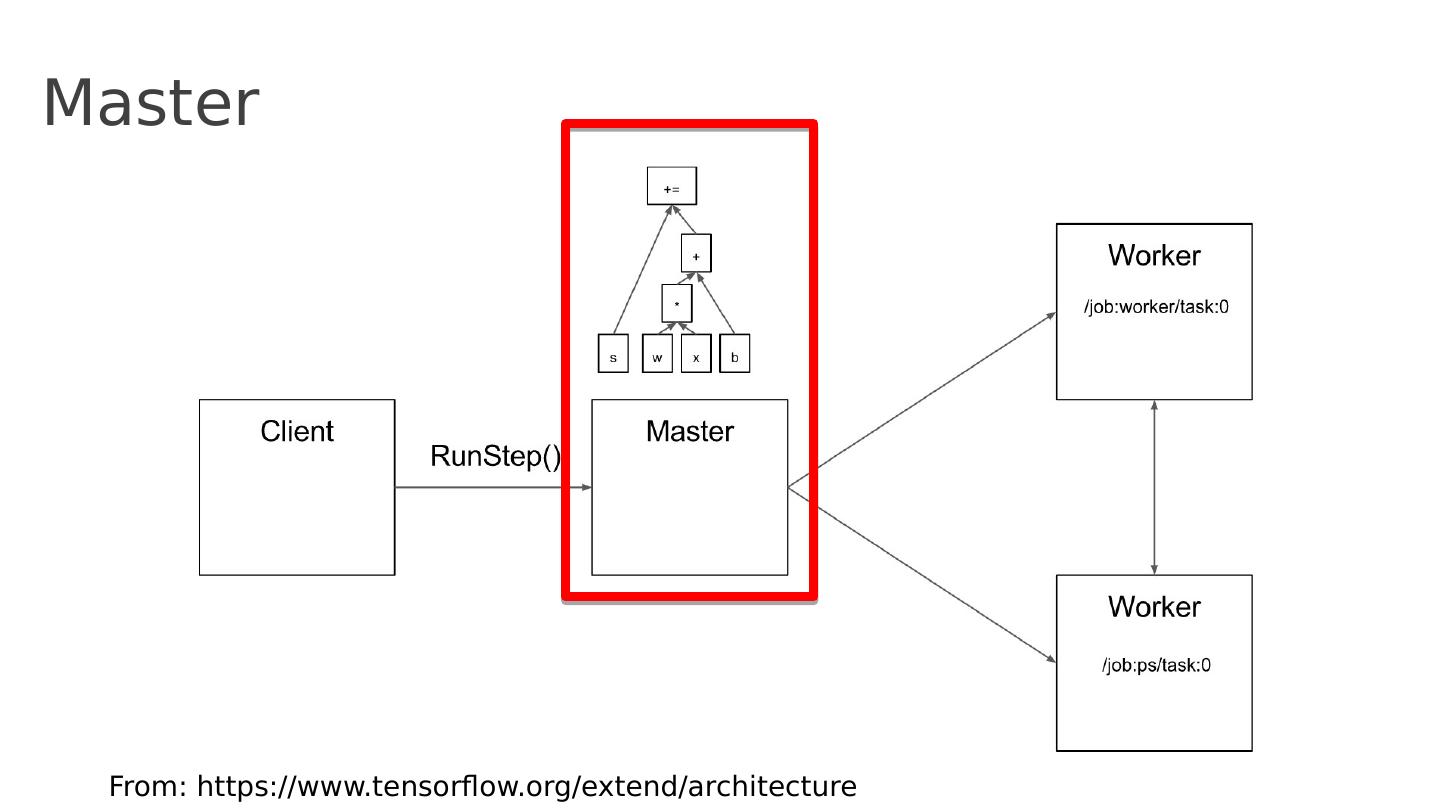

29. Master

From: https://www.tensorflow.org/extend/architecture