- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

贪婪算法

展开查看详情

1 . Greedy Algorithms Subhash Suri March 20, 2018 1 Introduction • Greedy algorithms are a commonly used paradigm for combinatorial algorithms. Com- binatorial problems intuitively are those for which feasible solutions are subsets of a finite set (typically from items of input). Therefore, in principle, these problems can always be solved optimally in exponential time (say, O(2n )) by examining each of those feasible solutions. The goal of a greedy algorithm is find the optimal by searching only a tiny fraction. • In the 1980’s iconic movie Wall Street, Michael Douglas shouts in front of a room full of stockholders: “Greed is good. Greed is right... Greed works.” In this lecture, we will explore how well and when greed can work for solving computational or optimization problems. • Defining precisely what a greedy algorithm is hard, if not impossible. In an informal way, an algorithm follows the Greedy Design Principle if it makes a series of choices, and each choice is locally optimized ; in other words, when viewed in isolation, that step is performed optimally. • The tricky question is when and why such myopic strategy (looking at each step in- dividually, and ignoring the global considerations) can still lead to globally optimal solutions. In fact, when a greedy strategy leads to an optimal solution, it says some- thing interesting about the structure (nature) of the problem itself! In other cases, even if the greedy does not give optimal, in many cases it leads to provably good (not too far from optimal) solution. • Let us start with a trivial problem, but it will serve to illustrate the basic idea: Coin Changing. • The US mint produces coins in the following four denominations: 25, 10, 5, 1. 1

2 . • Given an integer X between 0 and 99, making change for X involves finding coins that sum to X using the least number of coins. Mathematically, we can write X = 25a + 10b + 5c + 1d, so that a + b + c + d is minimum where a, b, c, d ≥ 0 are all integers. • Greedy Coin Changing. – Choose as many quarters as possible. That is, find largest a with 25a ≤ X. – Next, choose as many dimes as possible to change X − 25a, and so on. – An example. Consider X = 73. – Choose 2 quarters, so a = 2. Remainder: 73 − 2 × 25 = 23. – Next, choose 2 dimes, so b = 2. Remainder: 23 − 2 × 10 = 3. – Choose 0 nickels, so c = 0. Remainder: 3. – Finally, choose 3 pennies, so d = 3. Remainder: 3 − 3 = 0. – Solution is a = 2, b = 2, c = 0, d = 3. • Does Greedy Fails Always Work for Coin Changing? Prove that the greedy always produces optimal change for US coin denominations. • Does it also work for other denominations? In other words, does the correctness of Greedy Change Making depend on the choice of coins? • No, the greedy does not always return the optimal solution. Consider the case with coins types {12, 5, 1}. For X = 15, the greedy uses 4 coins: 1 × 12 + 0 × 5 + 3 × 1. The optimal uses 3 coins: 3 × 5. Moral: Greed, the quick path to success or to ruin! 2 Activity Selection, or Interval Scheduling • We now come to a simple but interesting optimization problem for which a greedy strategy works, but the strategy is not obvious. • The input to the problem is a list of N activities, each specified with a start and end time, which require the use of some resource. • Only one activity can be be scheduled on the resource at a time; once an activity is started, it must be run to completion; no pre-emption allowed. • What is the maximum possible number of activities we can schedule? • This is an abstraction that fits that many applications. For instance, activities can be computation tasks and resource the processor, or activities can be college classes and resource a lecture hall. 2

3 . • More formally, we denote the list of activities as S = {1, 2, . . . , n}. • Each activity has a specific start time and a specific finish time; the durations of different activities can be different. Specifically, activity i is given as tuple (s(i), f (i)), where s(i) ≤ f (i), namely, that the finish time must be after the start time. • For instance, suppose the input is {(3, 6), (1, 4), (1.2, 2.5), (6, 8), (0, 2)}. Activities (3, 6) and (6, 8) are compatible—they can both be scheduled—since they do not overlap in their duration (endpoints are ok). • Clearly a combinatorial problem: input is a list of n objects, and output is a subset of those objects. • Thus, each activity is pretty inflexible; if chosen, it must start at time s(i) and end at f (i). • A subset of activities is a feasible schedule if no two activities overlap (in time). • Objective: Design an algorithm to find a feasible schedule with as many activities as possible. 2.1 Potential Greedy Strategies • The first obvious one is to pick the one that starts first. Remove those activities that overlap with it, and repeat. ---- ---- ---- ---- ----------------------------------- It is easy to see how this is not always optimal. Greedy picks just one (the longest one), while optimal has 4. • A more sophisticated algorithm might repeatedly pick the activity with the smallest duration (and does not overlap with those already chosen). However, a simple example shows that this can also fail. ------------ ------------- ------------ • Yet another possibility is to count the number of other jobs that overlap with each activity, and then choose the one with the smallest (overlap) count. This seems a bit better, and does get optimal for both the earlier two cases. Still, this also fails to guarantee optimality some times. 3

4 . --------- ---------- --------- --------- --------- --------- --------- --------- --------- --------- --------- --------- --------- The greedy starts by picking the one in the middle, which right away ensures that it cannot have more than 3. The optimal chooses the 4 in the top row. 2.2 The Correct Greedy Strategy for Interval Scheduling • All these false starts and counterexamples should give you pause whether any greedy can ensure that it will find optimal in all cases. • Fortunately, it turns out that there is such a strategy, though it may not be the one that seems the most natural. • The correct strategy is to choose jobs in the Earliest Finish Time order! • More precisely, we sort the jobs in the increasing order of their finish time. By simple relabeling of jobs, let us just assume that f (j1 ) ≤ f (j2 ) ≤ f (j3 ) · · · ≤ f (jn ) • An example instance of the problem. Activity Start Finish 1 1 4 2 3 5 3 0 6 4 5 7 5 3 8 6 5 9 7 6 10 8 8 11 9 8 12 10 2 13 11 12 14 ------------- ---------- 4

5 . -------- ---------------- ----------------- -------------- ------------------- --------------------- ---------- ------------------------------------------------- -------------------------- 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 • We can visualize the scheduling problem as choosing non-overlapping intervals along the time-axis. (In my example, jobs are already labeled this way.) • In pseudo-code, we can write: A = {1}; j = 1; // accept job 1 for i = 2 to n do if s(i) >= f(j) then A = A + {i}; j = i; return A • In our example, the greedy algorithm first chooses 1; then skips 2 and 3; next it chooses 4, and skips 5, 6, 7; so on. 2.3 Analysis: Correctness • It is not obvious that this method will (should) always return the optimal solution. After all, the previous 3 methods failed. • But the method clearly finds a feasible schedule; no two activities accepted by this method can conflict; this is guaranteed by the if statement. • In order to show optimality, let us argue in the following manner. Suppose OPT is an optimal schedule. Ideally we would like to show that it is always the case that A ≡ OP T ; but this is too much to ask; in many cases there can be multiple different optima, and the best we can hope for is that their cardinalities are the same: that is, |A| = |OP T |; the contain the same number of activities. • The proof idea, which is a typical one for greedy algorithms, is to show that the greedy stays ahead of the optimal solution at all times. So, step by step, the greedy is doing at least as well as the optimal, so in the end, we can’t lose. 5

6 .• Some formalization and notation to express the proof. • Suppose a1 , a2 , . . . , ak are the (indices of the) set of jobs in the Greedy schedule, and b1 , b2 , . . . bm the set of jobs in an optimal schedule OPT. We would like to argue that k = m. • Mnemonically, ai ’s are jobs picked by our algorithm while bi ’s are best (optimal) sched- ule jobs. • In both schedules the jobs are listed in increasing order (either by start or finish time; both orders are the same). • Our intuition for greedy is that it chooses jobs in such a way as to make the resource free again as soon as possible. Thus, for instance, our choice of greedy ensures that f (a1 ) ≤ f (b1 ) (that is, a1 finishes no later than b1 .) • This is the sense in which greedy “stays ahead.” We now turn this intuition into a formal statement. • Lemma: For any i ≤ k, we have that f (ai ) ≤ f (bi ). • That is, the ith job chosen by greedy finishes no later than the ith job chosen by the optimal. • Note that the jobs have non-overlapping durations in both greedy and optimal, so they are uniquely ordered left to right. • Proof. – We already saw that the statement is true for i = 1, by the design of greedy. – We inductively assume this is true for all jobs up to i − 1, and prove it for i. – So, the induction hypothesis says that f (ai−1 ) ≤ f (bi−1 ). – Since clearly f (bi−1 ) ≤ s(bi ), we must also have f (ai−1 ) ≤ s(bi ). – That is, the ith job selected by optimal is also available to the greedy for choosing as its ith job. The greedy may pick some other job instead, but if it does, it must be because f (ai ) ≤ f (bi ). Thus, the induction step is complete. • With this technical insight about the greedy, it is now a simple matter to wrap up the greedy’s proof of optimality. 6

7 . • Theorem: The greedy algorithm returns an optimal solution for the activity selection problem. • Proof. By contradiction. If A were not optimal, then an optimal solution OPT must have more jobs than A. That is, m > k. • Consider what happens when i = k in our earlier lemma. We have that f (ak ) ≤ f (bk ). So, the greedy’s last job has finished by the time OPT’s kth job finishes. • If m > k, meaning there is at least one other job that optimal accepts, that job is also available to Greedy; it cannot conflict with anything greedy has scheduled. Because the greedy does not stop until it no longer has any acceptable jobs left, this is a contradiction. 2.4 Analysis: Running time • The greedy strategy can be implemented in worst-case time O(n log n). We begin by sorting the jobs in increasing order of their finish times, which takes O(n log n). After that, the algorithm simply makes one scan of the list, spending a constant time per job. • So total time complexity is O(n log n) + O(n) = O(n log n). 3 Interval Partitioning Problem • Let us now consider a different scheduling problem: given the set of activities, we must schedule them all using the minimum number of machines (rooms). • An example. • An obvious greedy algorithm to try is the following: Use the Interval Scheduling algorithm to find the max number of activities that can be scheduled in one room. Delete and repeat on the rest, until no activities left. • Surprisingly, this algorithm does not always produce the optimal answer. 7

8 . a b c ------ ------ ---------------------------- d e f --------------------- ------- --------- These activities can be scheduled in 2 rooms, but Greedy will need 3, because d and c cannot be scheduled in the same room. • Instead a different, and simpler, Greedy works. Sort activities by start time. Start Room 1 for activity 1. for i = 2 to n if activity i can fit in any existing room, schedule it in that room otherwise start a new room with activity i • Proof of Correctness. Define depth of activity set as the maximum number of activities that are concurrent at any time. • Let depth be D. Optimal must use at least D rooms. Greedy uses no more than D rooms. 4 Data Compression: Huffman Codes • Huffman coding is an example of a beautiful algorithm working behind the scenes, used in digital communication and storage. It is also a fundamental result in theory of data compression. • For instance, mp3 audio compression scheme basically works as follows: 1. The audio signal is digitized by sampling at, say, 44KHz. 2. This produces a sequence of real numbers s1 , s2 , . . . , sT . For instance, a 50 min symphony corresponds to T = 50 × 60 × 44000 = 130M numbers. 3. Each si is quantized, using a finite set G (e.g., 256 values for 8 bit quantization.) The quantization is fine enough that human ear doesn’t perceive the difference. 4. The quantized string of length T over alphabet G is encoded in binary. 5. This last step uses Huffman encoding. 8

9 .• To get a feel for compression, let’s consider a toy example: a data file with 100, 000 characters. • Assume that the cost of storage or transmission is proportional to the number of bits required. What is the best way to store or transmit this file? • In our example file, there are only 6 different characters (G), with their frequencies as shown below. Char a b c d e f Freq(K) 45 13 12 16 9 5 • We want to design binary codes to achieve maximum compression. Suppose we use fixed length codes. Clearly, we need 3 bits to represent six characters. One possible such set of codes is: Char a b c d e f Code 000 001 010 011 100 101 • Storing the 100K character requires 300K bits using this code. Is it possible to improve upon this? • Huffman Codes. We can improve on this using Variable Length Codes. • Motivation: use shorter codes for more frequent letters, and longer codes for infrequent letters. • (A similar idea underlies Morse code: e = dot; t = dash; a = dot-dash ; etc. But Morse code is a heuristics; not optimal in any formal sense.) • One such set of codes shown below. Char a b c d e f VLC 0 101 100 111 1101 1100 • Note that some codes are smaller (1 bit), while others are longer (4 bits) than the fixed length code. Still, using this code2, the file requires 1 × 45 + 3 × 13 + 3 × 12 + 3 × 16 + 4 × 9 + 4 × 5 Kbits, which is 224 Kbits. 9

10 . • Improvement is 25% over fixed length codes. In general, variable length codes can give 20 − 90% savings. • Problems with Variable Length Codes. We have a potential problem with variable length codes: while with fixed length coding, decoding is trivial, it is not the case for variable length codes. • Example: Suppose 0 and 000 are codes for letters x and y. What should decoder do upon receiving 00000? • We could put special marker codes but that reduce efficiency. • Instead we consider prefix codes: no codeword is a prefix of another codeword. • So, 0 and 000 will not be prefix codes, but (0, 101, 100, 111, 1101, 1100), the example shown earlier, do form a prefix code. • To encode, just concatenate the codes for each letter of the file; to decode, extract the first valid codeword, and repeat. • Example: Code for ‘abc’ is 0101100. And ‘001011101’ uniquely decodes to ’aabe’. 4.1 Representing Codes by a Tree • Instead of the table-based format, the coding and decoding is more intuitive to describe using a Binary Tree format, in which characters are associated with leaves. • Code for a letter is the sequence of bits between root and that leaf. 4.2 Measuring Optimality • Before we claim the optimality of a coding scheme, we must agree on a precise quanti- tative measure by which we evaluate the goodness (figure of merit). Let us formalize this. • Let C denote the alphabet. Let f (p) be the frequency of a letter p in C. Let T be the tree for a prefix code; let dT (p) be the depth of p in T . The number of bits needed to encode our file using this code is: B(T ) = f (p)dT (p) p∈C 10

11 .• Think of this as bit complexity. We want a code that achieves the minimum possible value of B(T ). • Optimal Tree Property. An optimal tree must be full: each internal node has two children. Otherwise we can improve the code. • Thus, by inspection, the fixed length code above is not optimal! • Greedy Strategies. Ideas for optimal coding??? Simple obvious heuristic ideas do not work; a useful exercise will be to try to “prove” the correctness of your suggested heuristic. • Huffman Story: Developed his coding procedure, in a term paper he wrote while a graduate student at MIT. Joined the faculty of MIT in 1953. In 1967, became the founding faculty member of the Computer Science Department at UCSC. Died in 1999. 11

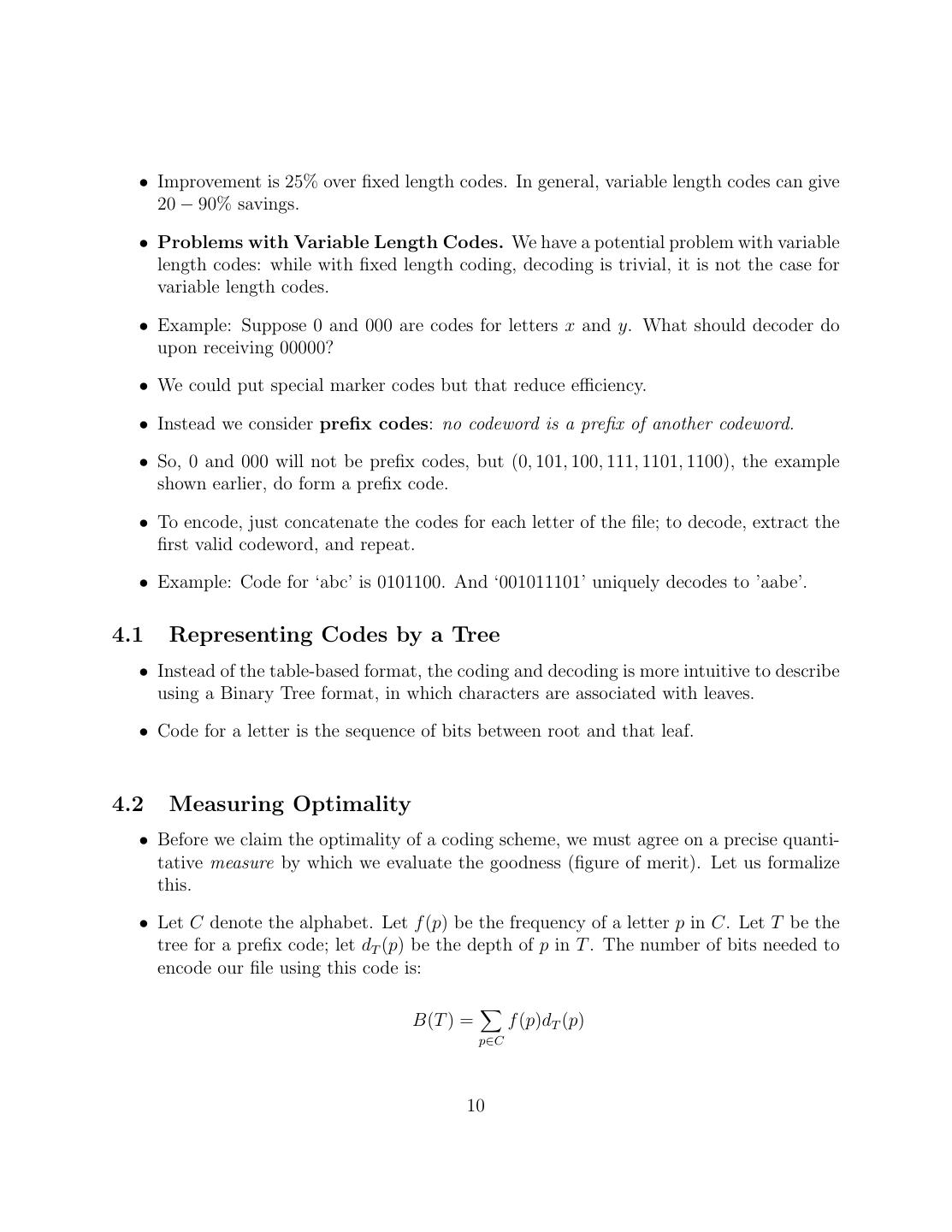

12 .• Excerpt from an Scientific American article about this: In 1951 David A. Huffman and his classmates in an electrical engineering graduate course on information theory were given the choice of a term paper or a final exam. For the term paper, Huffman’s professor, Robert M. Fano, had assigned what at first appeared to be a simple problem. Students were asked to find the most efficient method of representing numbers, letters or other symbols using a binary code. Besides being a nimble intellectual ex- ercise, finding such a code would enable information to be compressed for transmission over a computer network or for storage in a computer’s memory. Huffman worked on the problem for months, developing a number of ap- proaches, but none that he could prove to be the most efficient. Finally, he despaired of ever reaching a solution and decided to start studying for the final. Just as he was throwing his notes in the garbage, the solution came to him. “It was the most singular moment of my life,” Huffman says. “There was the absolute lightning of sudden realization.” Huffman says he might never have tried his hand at the problem—-much less solved it at the age of 25–if he had known that Fano, his professor, and Claude E. Shannon, the creator of information theory, had struggled with it. “It was my luck to be there at the right time and also not have my professor discourage me by telling me that other good people had struggled with this problem,” he says. Huffman Codes are used in nearly every application that involves the com- pression and transmission of digital data, such as fax machines, modems, computer networks, and high-definition television. • Huffman’s Algorithm. The algorithm constructs the binary tree T representing the optimal code. • Initially, each letter represented by a single-node tree. The weight of the tree is the letter’s frequency. • Huffman repeatedly chooses the two smallest trees (by weight), and merges them. The new tree’s weight is the sum of the two children’s weights. • If there are n letters in the alphabet, there are n − 1 merges. • In the pseudo-code, below Q is a priority Queue (say, heap). 1. Q ← C 2. for i = 1 to n − 1 do 12

13 . – z ← allocateN ode() – x ← lef t[z] ← DeleteM in(Q) – y ← right[z] ← DeleteM in(Q) – f [z] ← f [x] + f [y] – Insert(Q, z) 3. return F indM in(Q) 8. Illustration of Huffman Algorithm. Initial f:5 e:9 c:12 b:13 d:16 a:45 Merge/Reorder c:12 b:13 f+e:14 d:16 a:45 Next f+e:14 d:16 c+b:25 a:45 Next c+b:25 (f+e)+d:30 a:45 Next a:45 (c+b)+((f+e)+d):55 4.3 Analysis of Huffman • Time complexity is O(n log n). Initial sorting plus n heap operations. • We now prove that the prefix code generated is optimal. It is a greedy algorithm, and we use the swapping argument. • Lemma: Suppose x and y are the two letters of lowest frequency. Then, there exists an optimal prefix code in which codewords for x and y have the same (and maximum) length and they differ only in the last bit. • Proof. The idea of the proof is to take the tree T representing an optimal prefix code, and modify it to make a tree representing another optimal prefix code in which the characters x and y appear as sibling leaves of max depth. • In that case, x and y will have the same code length, with only the last bit different. • Assume an optimal tree that does not satisfy the claim. Assume, without loss of generality, that a and b are the two characters that are sibling leaves of max depth in T . Without loss of generality, assume that f (a) ≤ f (b) and f (x) ≤ f (y) • Because f (x) and f (y) are 2 lowest frequencies, we get: f (x) ≤ f (a) and f (y) ≤ f (b) 13

14 .• (Remark. Note that x, y, a, b need not all be distinct; for instance, may be y lies at the max depth and is therefore one of a or b.) • We first transform T into T by swapping the positions of x and a. • Intuitively, since dT (a) ≥ dT (x) and f (a) ≥ f (x), the swap does not increase the f requency × depth cost. Specifically, B(T ) − B(T ) = [f (p)dT (p)] − [f (p)dT (p)] p p = [f (x)dT (x) + f (a)dT (a)] − [f (x)dT (x) + f (a)dT (a)] = [f (x)dT (x) + f (a)dT (a)] − [f (x)dT (a) + f (a)dT (x)] 14

15 . = [f (a) − f (x)] × [dT (a) − dT (x)] ≥ 0 • Thus, this transformation does not increase the total bit cost. • Similarly, we then transform T into T by exchanging y and b, which again does not increase the cost. So, we get that B(T ) ≤ B(T ) ≤ B(T ). If T was optimal, so is T , but in T x and y are sibling leaves and they are at the max depth. • This completes the proof of the lemma. 15

16 .• We can now finish the proof of Huffman’s optimality. • But need to be very careful about the proper use of induction. For instance, here is a simple and bogus idea: Base case: two characters. Huffman’s algorithm is trivially optimal. Suppose it’s optimal for n − 1, and consider n characters. Delete the largest frequency character, and build the tree for the remaining n − 1 characters. Now, add the nth character as follows: create a new root; make the nth character left child, and hang the (n − 1) subtree as its right child. Even though this tree will have the properly of Lemma above (two smallest characters being deepest leaves, and the largest frequency having the shortest code), this proof is all wrong—it neither shows that the resulting tree is optimal, nor is that tree even the output of actual Huffman’s algorithm. • Instead, we will do induction by removing two smallest keys, replacing them with their “union” key, and looking at the difference in the tree when those leaves are added back in. • When x and y are merged; we pretend a new character z arises, with f (z) = f (x)+f (y). • We compute the optimal code/tree for these n − 1 letters: C + {z} − {x, y}. Call this tree T1 . • We then attach two new leaves to the node z, corresponding to x and y, obtaining the tree T . This is now the Huffman Code tree for character set C. • Proof of optimality. The cost B(T ) can be expressed in terms of cost B(T1 ), as follows. For each character p not equal to x and y, its depth is the same in both trees, so no difference. • Furthermore, dT (x) = dT (y) = dT1 (z) + 1, so we have f (x)dT (x) + f (y)dT (y) = [f (x) + f (y)] × [dT1 (z) + 1] = f (z)dT1 (z) + [f (x) + f (y)] • So, B(T ) = B(T1 ) + f (x) + f (y). • We now prove the optimality of Huffman algorithm by contradiction. Suppose T is not an optimal prefix code, and another tree T ∗ is claimed to be optimal, meaning B(T ∗ ) < B(T ). • By the earlier lemma, T ∗ has x and y as siblings. Let T1∗ be this tree with the common parent of x and y replaced by a leave z, whose frequency is f (z) = f (x) + f (y). 16

17 . • Then, B(T1∗ ) = B(T ∗ 2) − f (x) − f (y) < B(T ) − f (x) − f (y) < B(T1 ) which contradicts the assumption that T1 is an optimal prefix code for the character set C = C + {z} − {x, y}. End of proof. 4.4 Beyond Huffman codes • Is Huffman coding the end of the road, or are there other coding schemes that are even better than Huffman? • Depends on problem assumptions. • Huffman code does not adapt to variations in the text. For instance, if the first half is mostly a, b, and the second half c, d, one can do better by adaptively changing the encoding. • One can also get better-than-Huffman codes by coding longer words instead of indi- vidual characters. This is done in arithmetic coding. Huffman coding is still useful because it is easier to compute (or you can rely on a table, for instance using the frequency of characters in the English language). • There are also codes that serve a different purpose than Huffman coding: error detect- ing and error correcting codes, for example. Such codes are very important in some areas, in particular in industrial applications. • One can also do better by allowing lossy compression. • Even if the goal is lossless compression, depending on the data, Huffman code might not be suitable: music, images, movies, and so on. • One practical disadvantage of Huffman coding is that it requires 2 passes over the data: one to construct to code table, and second to encode, which means it can be slow and also not suitable for streaming data. Not being error-correcting is also a weakness. 5 Greedy Algorithms in Graphs • The shortest path algorithm of Dijkstra and minimum spanning algorithms of Prim and Kruskal are instances of greedy paradigm. 17

18 .• We briefly review the Dijkstra and Kruskal algorithms, and sketch their proofs of optimality, as examples of greedy alorithm proofs. • Dijkstra’s algorithm can be described as follows (somewhat different from the way it’s implemented, but equivalent.) • Dijkstra’s Algorithm. 1. Let S be the set of explored nodes. 2. Let d(u) be the shortest path distance from s to u, for each u ∈ S. 3. Initially S = {s}, and d(s) = 0. 4. While S = V do (a) Select v ∈ S with the minimum value of d (v) = min {d(u) + cost(u, v)} (u,v),u∈S (b) Add v to S, set d(v) = d (v). • Example. • Proof of Correctness. 1. We show that at any time d(u) is the shortest path distance to u for all u in S. 2. Consider the instant when node v is chosen by the algorithm. 3. Let (u, v) be the edge, with u ∈ S, that is incident to v. 4. Suppose, for the sake of contradiction, that d(u) + cost(u, v) is not the shortest path distance to v. 5. Instead a shorter path P exists to v. 6. Since that path starts at s, it has to leave S at some node. Let x be that node, and let y ∈ S be the edge that goes from S to S. 7. So our claim is that length(P ) = d(x) + cost(x, y) + length(y, v) is shorter than d(u) + cost(u, v). 18

19 . 8. But note that the algorithm chose v over y, so it must be that d(u) + cost(u, v) ≤ d(x) + cost(x, y). 9. In addition, since length(y, v) > 0, this contradicts our hypothesis that P is shorter than d(u) + cost(u, v). 10. Thus, the d(v) = d(u) + cost(u, v) is correct shortest path distance. • Next we consider the Kruskal’s MST algorithm. • Example. • Proof of Correctness. 1. For simplicity, assume that all edge costs are distinct so that the MST is unique. Otherwise, add a tie-breaking rule to consistency order the edges. 2. Proof by contradiction: let (v, w) be the first edge chosen by Kruskal that is not in the optimal MST. 3. Consider the state of the Kruskal just before (v, w) is considered. 4. Let S be the set of nodes connected to v by a path in this graph. Clearly, w ∈ S. 5. The optimal MST does not contain (v, w) but must contain a path connecting v to w, by virtue of being spanning. 6. Since v ∈ S and w ∈ S, this path must contain at least one edge (x, y) with x ∈ S and y ∈ S. 7. Note that (x, y) cannot be in Kruskal’s graph at the time (v, w) was considered because otherwise y will have been in S. 8. Thus, (x, y) is more expensive than (v, w) because it came after (v, w) in Kruskal’s scan order. 9. If we replace (x, y) with (v, w) in the optimal MST, it remains spanning and has lower cost, which contradicts its optimality. 10. So, the hypothesis that (v, w) is not in optimal must be false. 19

20 .6 An Application of Kruskal’s Algorithm to Clustering • Clustering is a mathematical abstraction of a frequently-faced task: Classifying a col- lection of objects (photographs, documents, microorganisms) into coherent (similar) groups. Other examples include – Routing in mobile ad hoc networks: cluster heads – Patterns in gene expression – Document categories for web search – Similarity search in medical image databases – Skycat: cluster billions of sky objects into stars, quasars, galaxies. • The first step is to decide how to “measure” similarity between objects. A common approach is to define a “distance function” with the interpretation that two objects at larger distances are more dissimilar from each other than two objects with smaller distance. • When objects are situated in a physical space, the distance function may simply be their physical distance. But in many applications, the distances only have an abstract meaning: e.g. – distance between two species may be the number of years since they diverged in the course of evolution – distance between two images in a video stream may be the number of correspond- ing pixels at which intensity values differ by some threshold. • Given a set of objects and a distance function between them, the clustering problem is to divide the objects into groups so that objects within each group are similar to each other and objects in different groups are far apart. • Starting from this admittedly vague but intuitively desirable objective, the field of clustering studies a vast number of technically different approaches. The minimum spanning trees (MSTs) play an important role in one of the most basic formulations. • Input is a U of n objects, labeled p1 , p2 , . . . , pn . For each pair, we have a distance d(pi , pj) where – d(pi , pi ) = 0 – d(pi , pj ) > 0 whenever i = j – d(pi , pj ) = d(pj , pi ). 20

21 .• Goal is to divide U into k groups, where k is given part of the input. This is called k- Clustering, and formally it is a partition of U into k non-empty subsets C1 , C2 , . . . , Ck . • Optimization Criterion: Spacing of a k-Clustering is the minimum distance between two objects lying in different clusters. Find a k-Clustering with the maximum spacing. (This matches our goal of putting dissimilar objects in different clusters.) Picture. • Algorithm: – Think of each pi as a node, and d(pi , pj ) as the weight of the edge (pi , pj ). – Computer a MST of this graph. – Delete the (k − 1) most expensive edges of the MST. – Output the resulting set of k components C1 , C2 , . . . , Ck clusters. • We will prove the following theorem: The MST algorithm produces Spacing Maximizing k-Clustering. • Why is this true? Clustering and Kruskal’s MST algorithm share a common feature: • In clustering, we want to repeatedly and greedily group objects that are closest, stop- ping when we have k-clusters. • In Kruskal’s algorithm, we add edges between disconnected components by adding shortest edges first. • Proof of the Theorem. 1. Let d∗ be the weight of the (k − 1)st most expensive edge in MST. (This is the edge that Kruskal would have added to merge two components in the next step.) 21

22 . 2. Then, the spacing of our output is d∗ . We show that all other k-clusterings have spacing ≤ d∗ . 3. Consider an optimal clustering OPT, with components D1 , D2 , . . . , Dk . 4. Since OPT differs from MST clustering, at least one of the greedy clusters, say, Cr , is not a subset of any of the clusters of OPT. 5. This means that we have 2 objects pi and pj that lie in the same MST cluster Cr but in different OPT cluster. Suppose pi lies in Di , and pj in Dj . 6. Consider the Kruskal clustering, and the component Cr . Since pi , pj both lie in the same cluster, Kruskal’s algorithm must have added all the edges in the path connecting pi and pj . 7. In particular, all of these edges have distance < d∗ , which is the Kruskal cutoff. 8. Since pi ∈ Di but pj ∈ Dj , let p be the first node on this path after pi that is not in Di , and let p be the node just before that. 9. Then d(p, p ) ≤ d∗ . 10. But then d(Di , Dj ) ≤ d∗ , which proves that spacing of OPT is ≤ d∗ . QED. 22