- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

SVDslides.ppt - NYU/CNS

展开查看详情

1 . Smallest font Please put away electronic devices Take out clickers Smallest font

2 .Math Tools

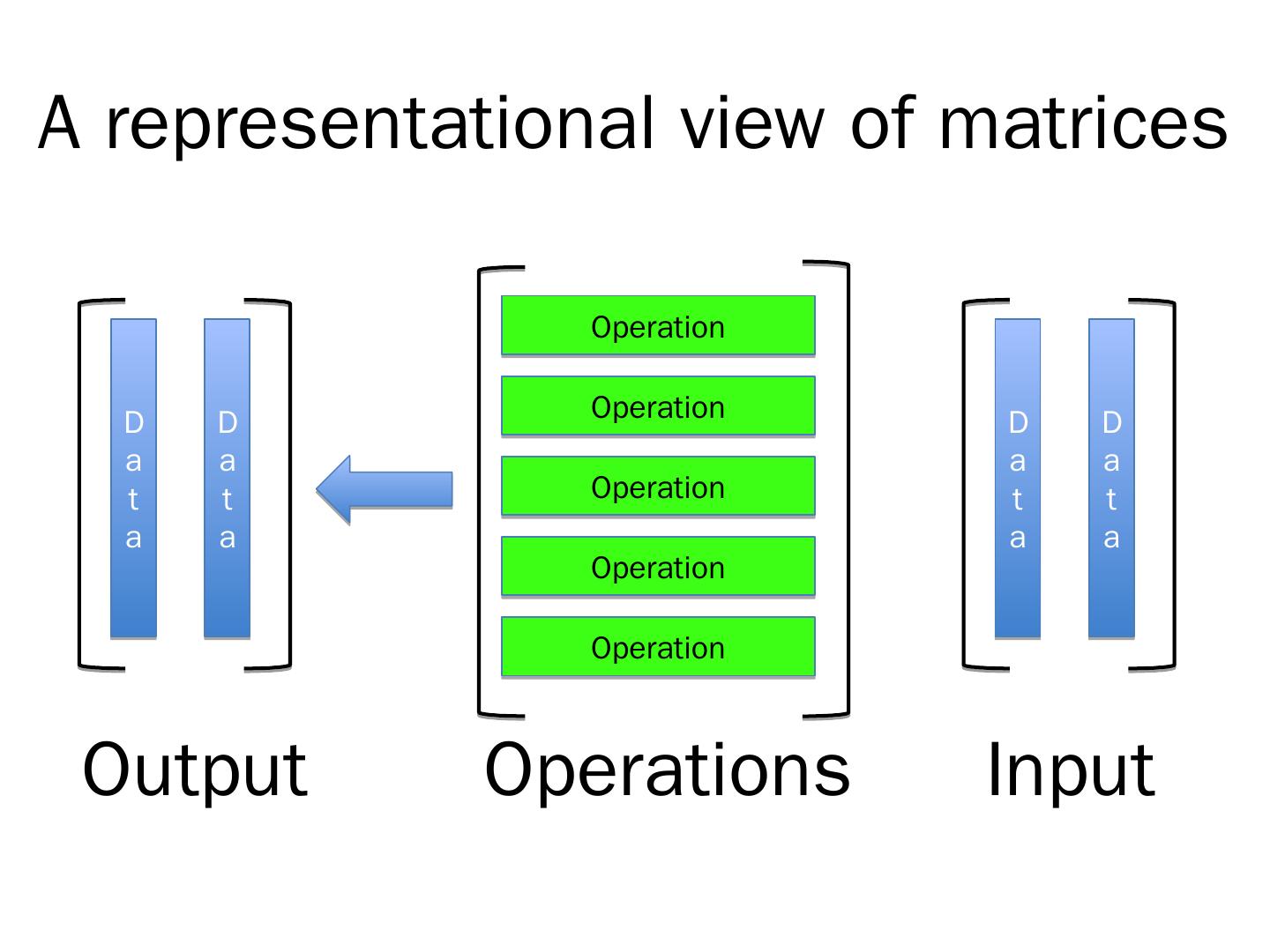

3 .A representational view of matrices Operation D D Operation D D a a a a t t Operation t t a a a a Operation Operation Output Operations Input

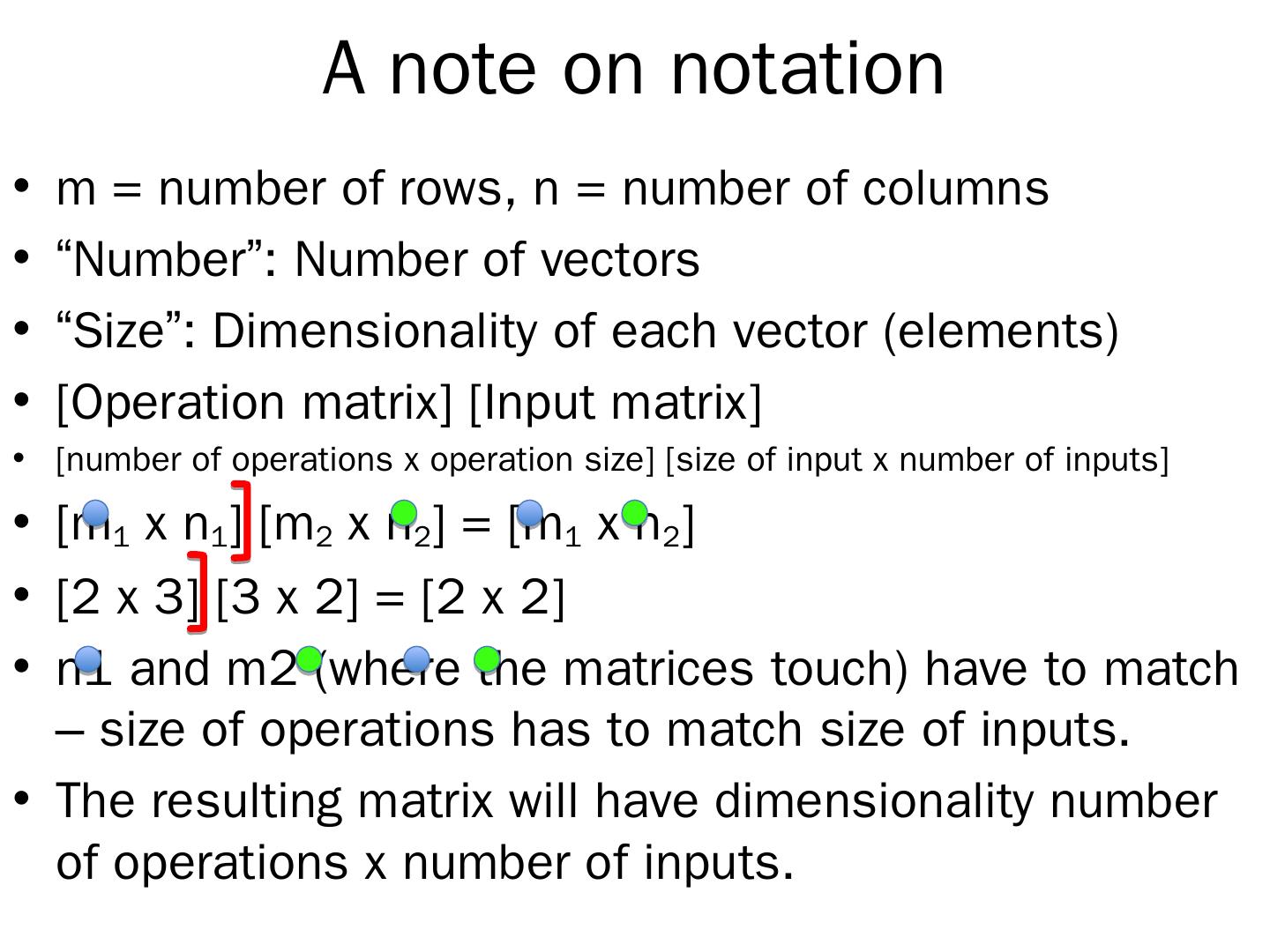

4 . A note on notation • m = number of rows, n = number of columns • “Number”: Number of vectors • “Size”: Dimensionality of each vector (elements) • [Operation matrix] [Input matrix] • [number of operations x operation size] [size of input x number of inputs] • [m1 x n1] [m2 x n2] = [m1 x n2] • [2 x 3] [3 x 2] = [2 x 2] • n1 and m2 (where the matrices touch) have to match – size of operations has to match size of inputs. • The resulting matrix will have dimensionality number of operations x number of inputs.

5 . Inner Product = Dot product Dot product = Row Vector * Column Vector = Scalar scalar DP = [1xn] [mx1 [1x1] (m=n) 5 ] 1234 1*5 + 2*6 + 3*7 + 4*8 70 6

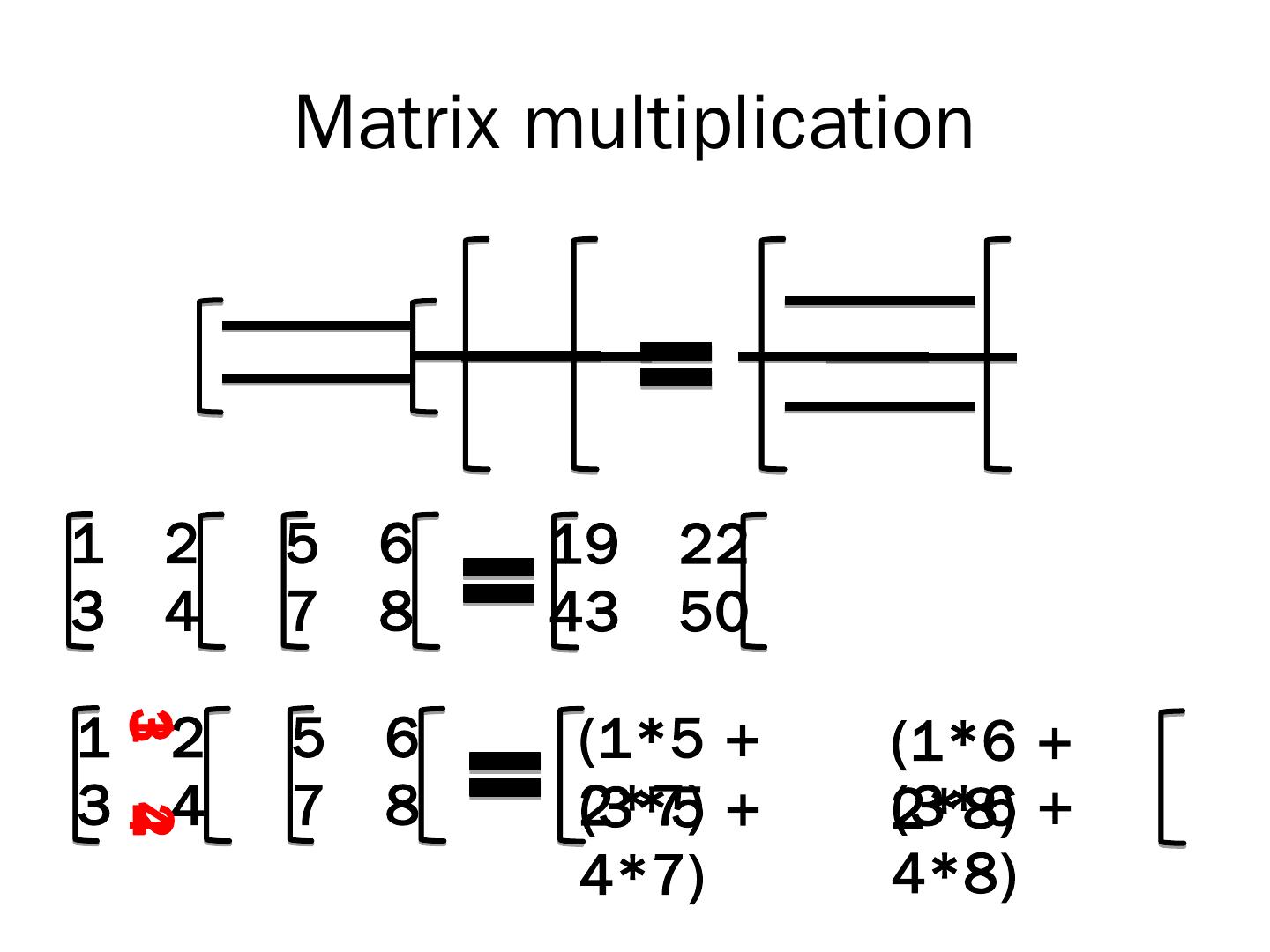

6 . Matrix multiplication 1 2 5 6 19 22 3 4 7 8 43 50 1 2 5 6 (1*5 + (1*6 + 3 1 3 4 7 8 2*7) (3*5 + (3*6 + 2*8) 4 2 4*7) 4*8)

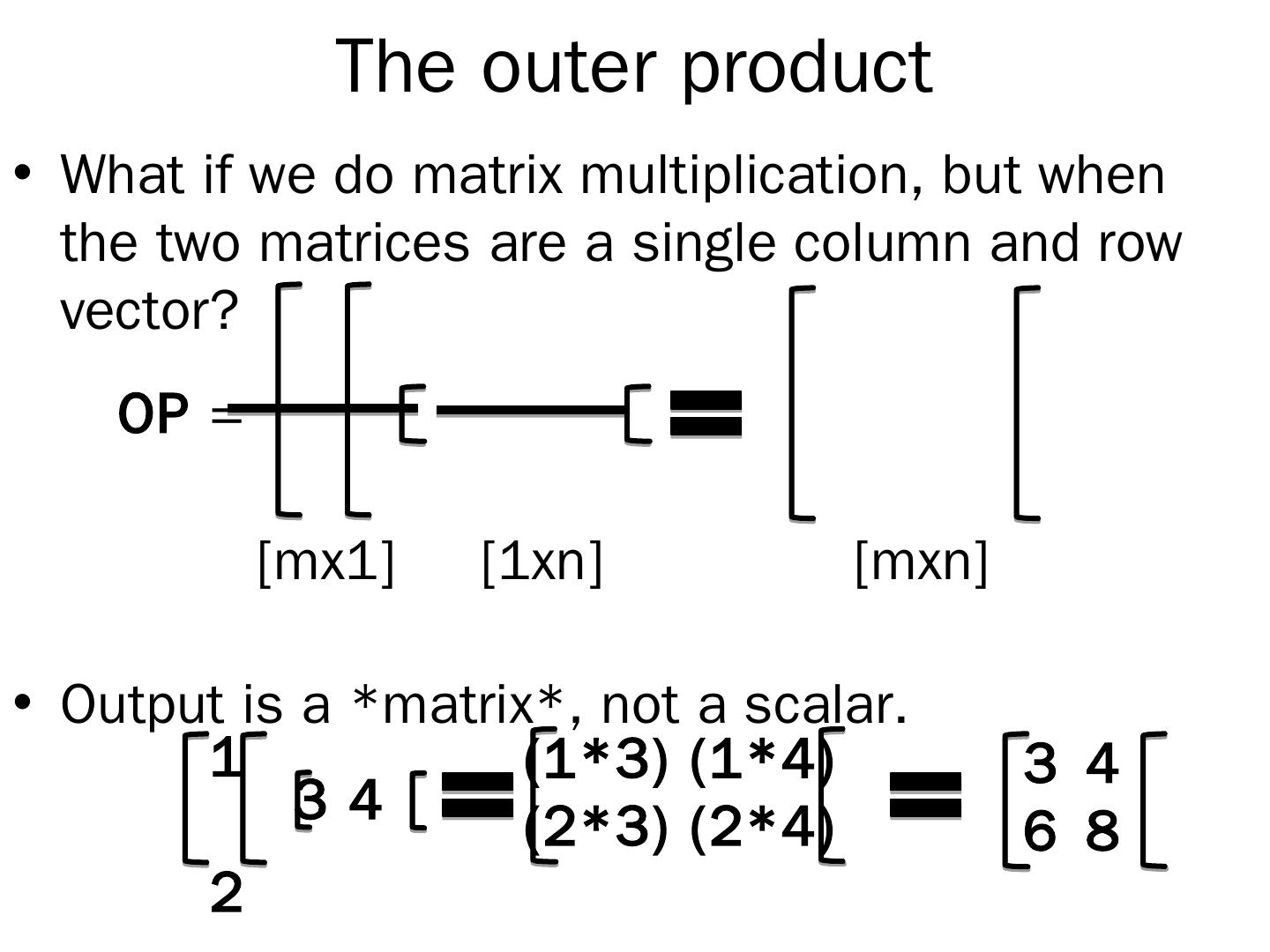

7 . The outer product • What if we do matrix multiplication, but when the two matrices are a single column and row vector? OP = [mx1] [1xn] [mxn] • Output is a *matrix*, not a scalar. 1 (1*3) (1*4) 3 4 34 (2*3) (2*4) 6 8 2

8 .The transpose = flipping the matrix 1 23 1 47 4 56 2 5 8 7 8 9 3 6 NOT just a rotation! 9

9 .Singular Value Decomposition (SVD) • If you really want to understand what Eigenvalues and Eigenvectors are… • The culminating end point of a linear algebra course. • What we will use for the class (and beyond) to use the power of linear algebra to do stuff we care about.

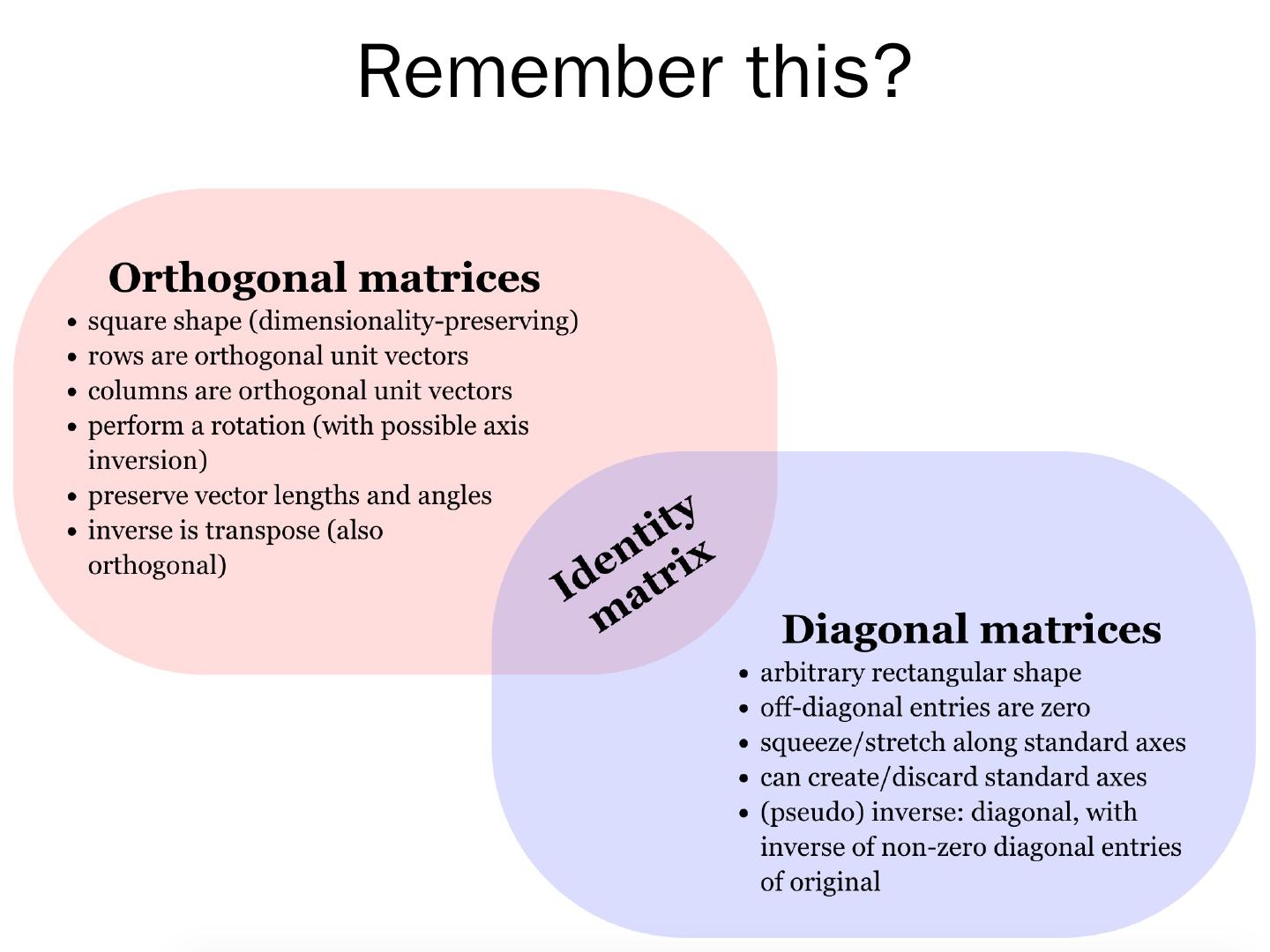

10 .Remember this?

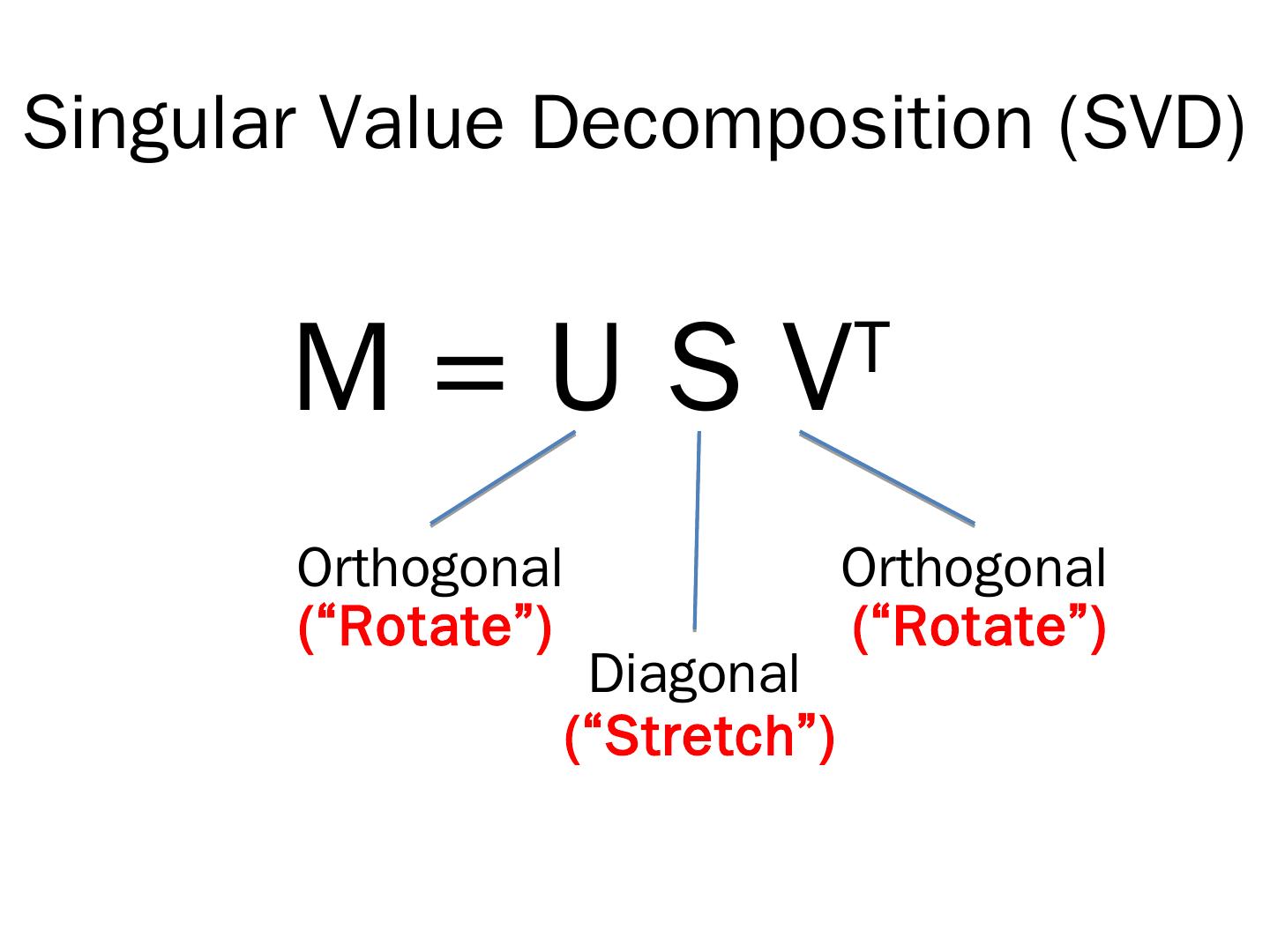

11 .Singular Value Decomposition (SVD) M=USV T Orthogonal Orthogonal (“Rotate”) (“Rotate”) Diagonal (“Stretch”)

12 . SVD U S V T Output Scaling Input

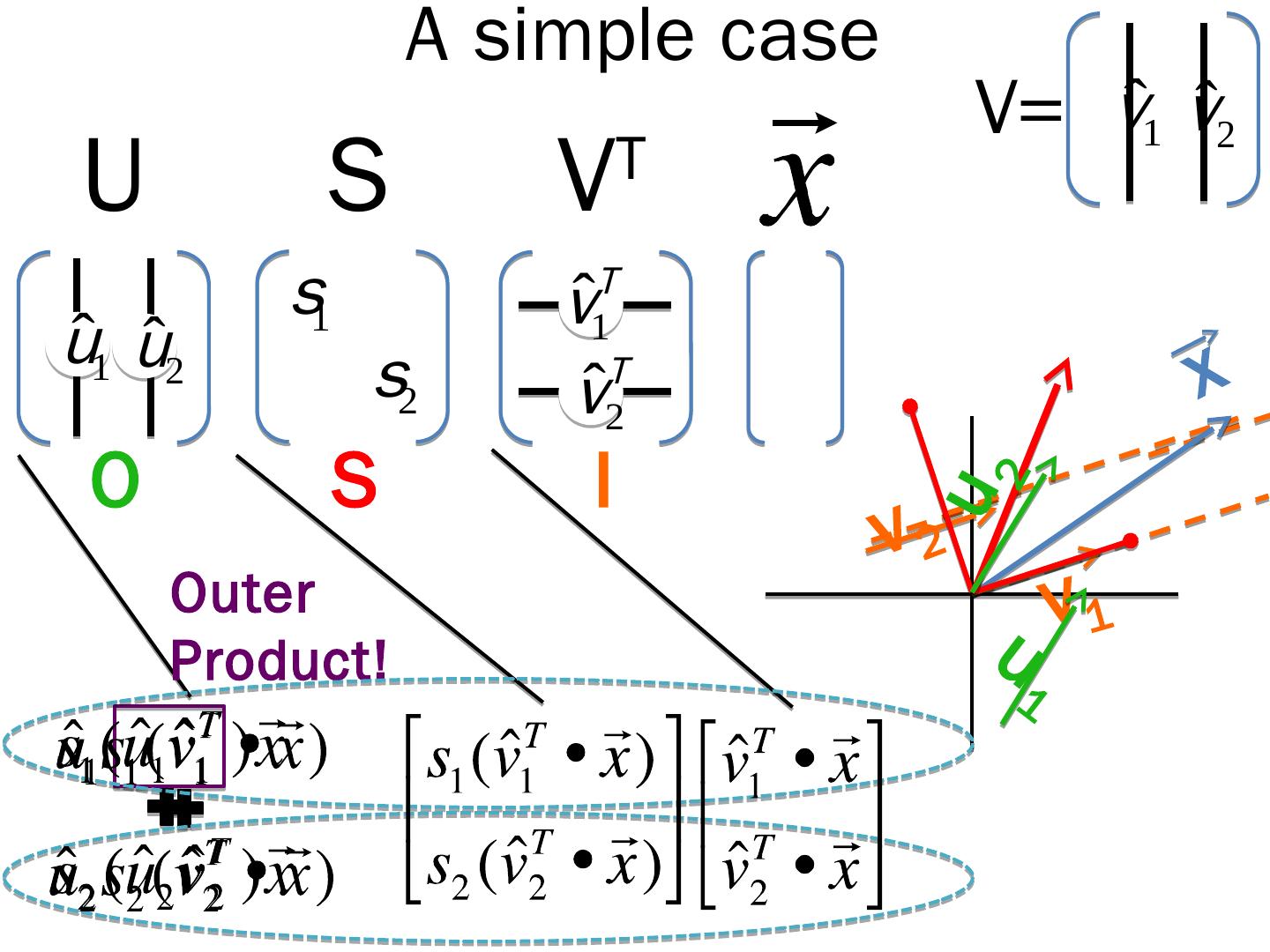

13 . A simple case V= vˆ1 vˆ2 U S V T s1 T vˆ uˆ1 uˆ2 s2 1 T x vˆ 2 O S I 2 v2 u Outer v1 Product! u 1

14 . SVD can be interpreted as • A sum of outer products! • Decomposing the matrix into a sum of scaled outer products. • Key insight: The operations on respective dimensions stay separate from each other, all the way – through v, s and u. • They are grouped, each operating on another piece of the input.

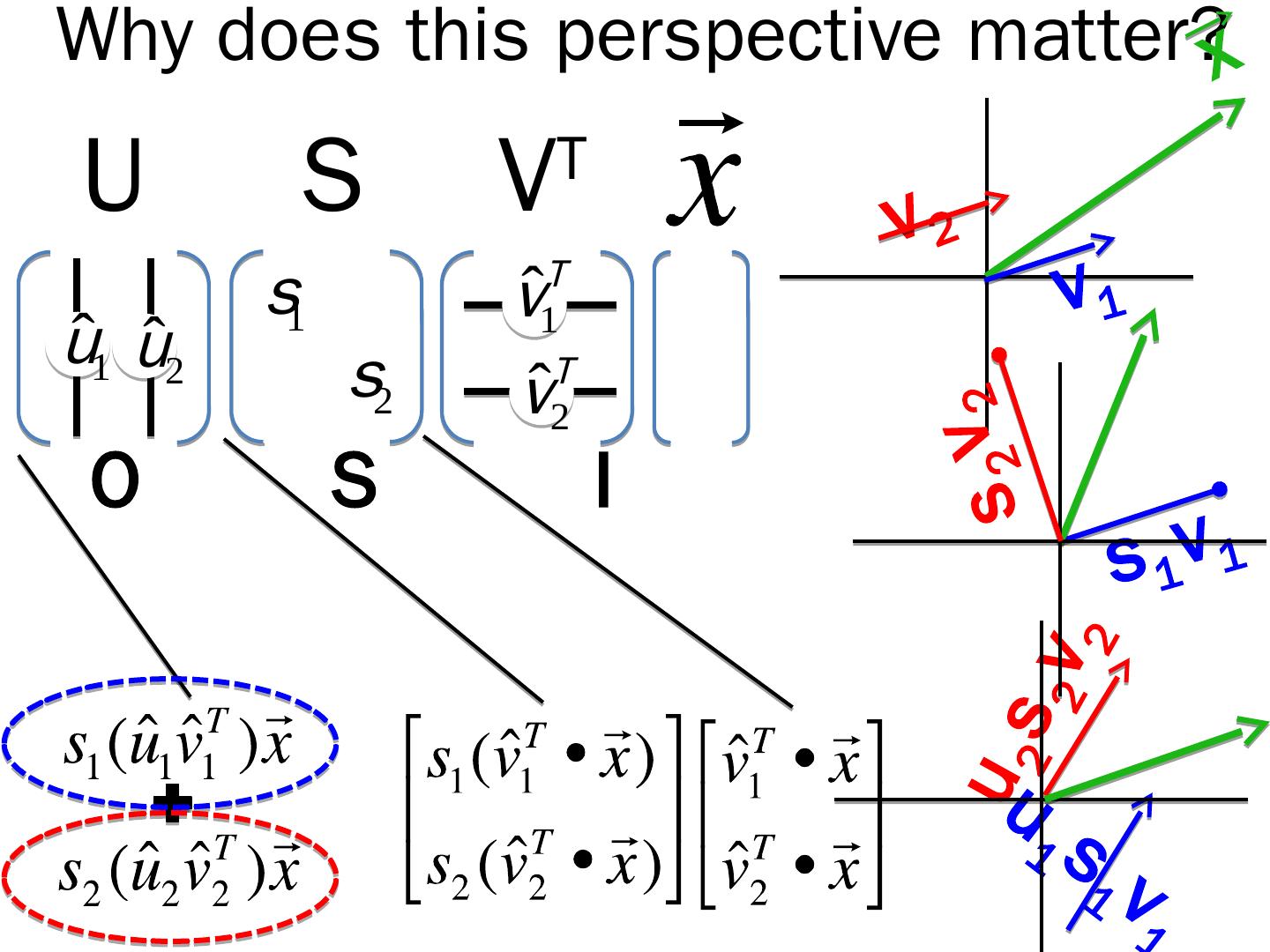

15 .Why does this perspective matter?x U S V T v2 s1 vˆT v1 uˆ1 uˆ2 s2 1 T vˆ s2 2 2 v O S I s 1v 1 v2 2 u2 s u 1 s 1 v1

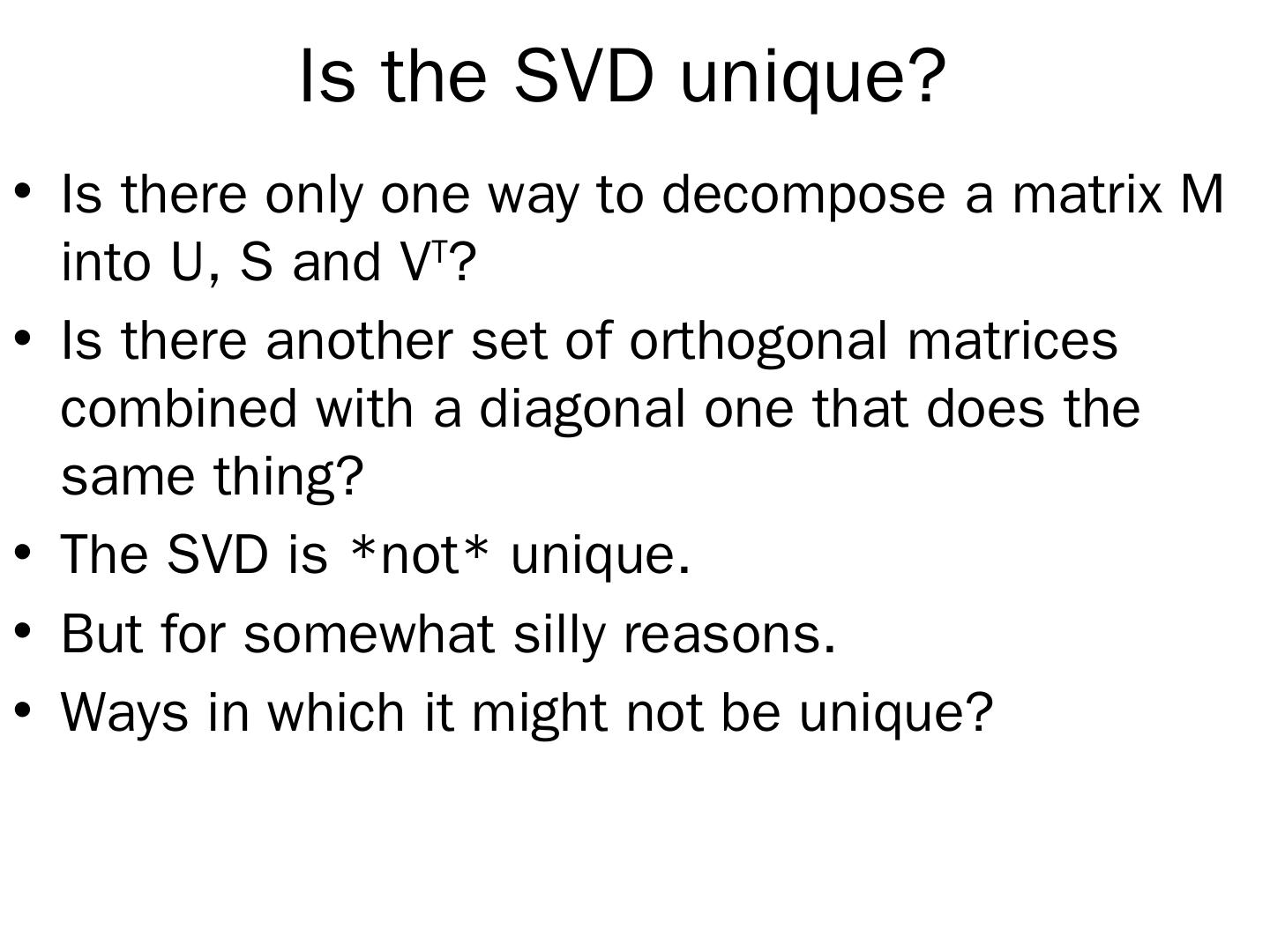

16 . Is the SVD unique? • Is there only one way to decompose a matrix M into U, S and VT? • Is there another set of orthogonal matrices combined with a diagonal one that does the same thing? • The SVD is *not* unique. • But for somewhat silly reasons. • Ways in which it might not be unique?

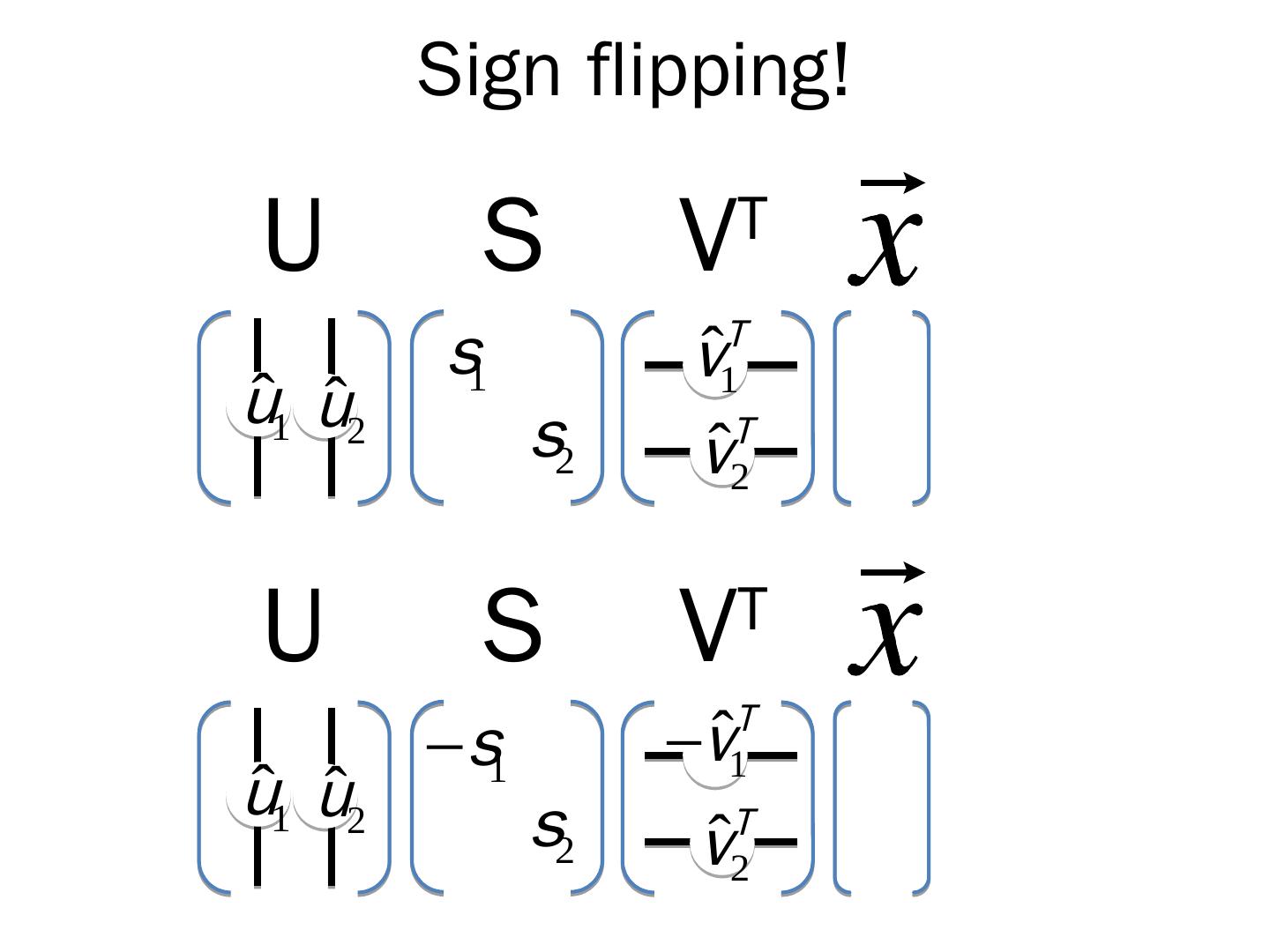

17 . Sign flipping! U S V T s1 ˆv1T uˆ1 uˆ2 s2 T vˆ2 U S V T T s1 vˆ1 uˆ1 uˆ2 s2 T vˆ2

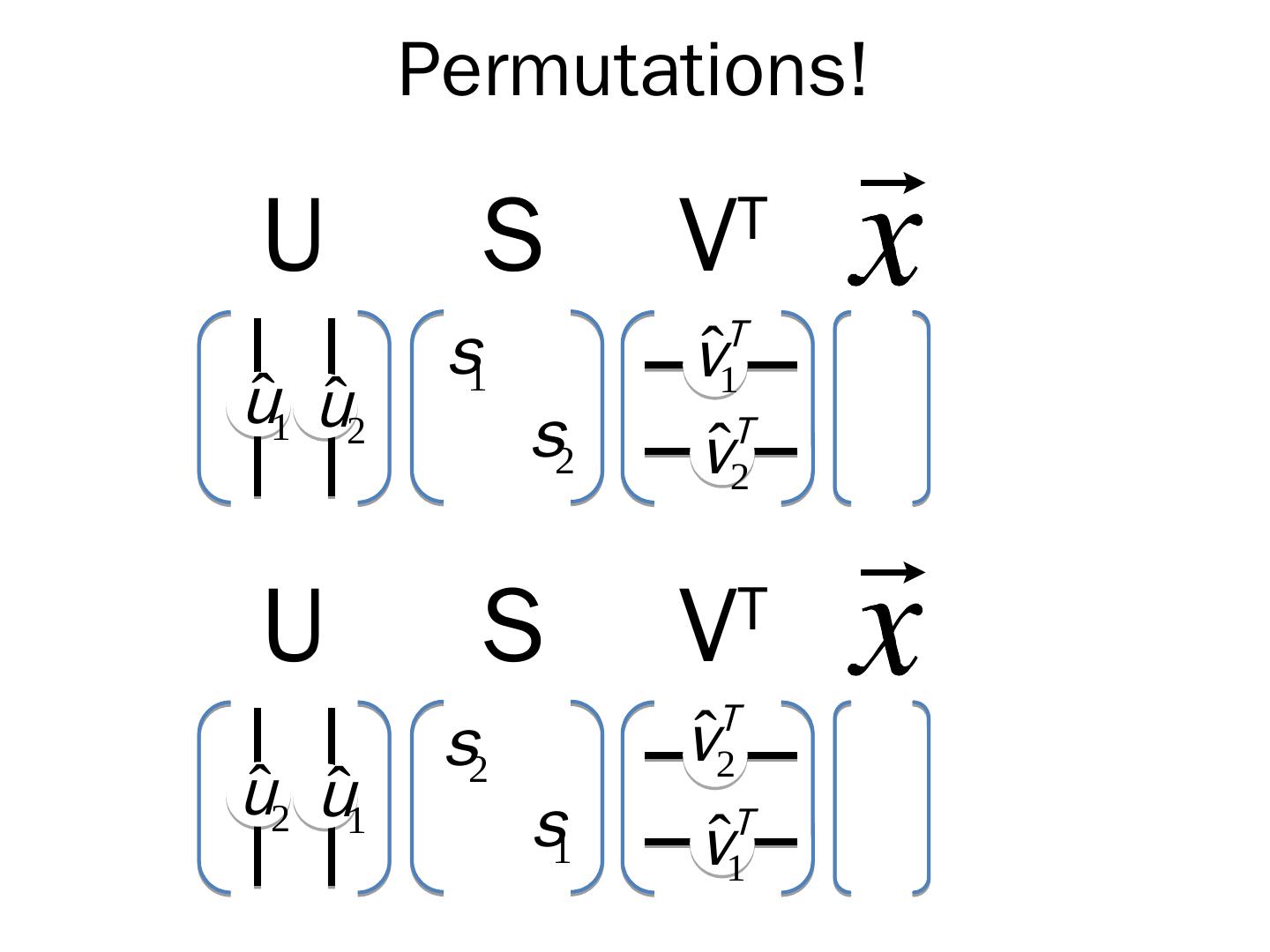

18 . Permutations! U S V T s1 ˆv1T uˆ1 uˆ2 s2 T vˆ2 U S V T T s2 vˆ 2 uˆ2 uˆ1 s1 T vˆ 1

19 .Given that the SVD is *not* unique, how can we make sure programs will all arrive at the exact *same* SVD? • Conventions! • MATLAB picks the values in the main diagonal of S to be positive – this also sets the signs in the other two matrices. • MATLAB orders them in decreasing magnitude, with the largest one in the upper left. • If it runs its algorithm and arrives at the wrong order, it can always sort them (and does this under the hood).

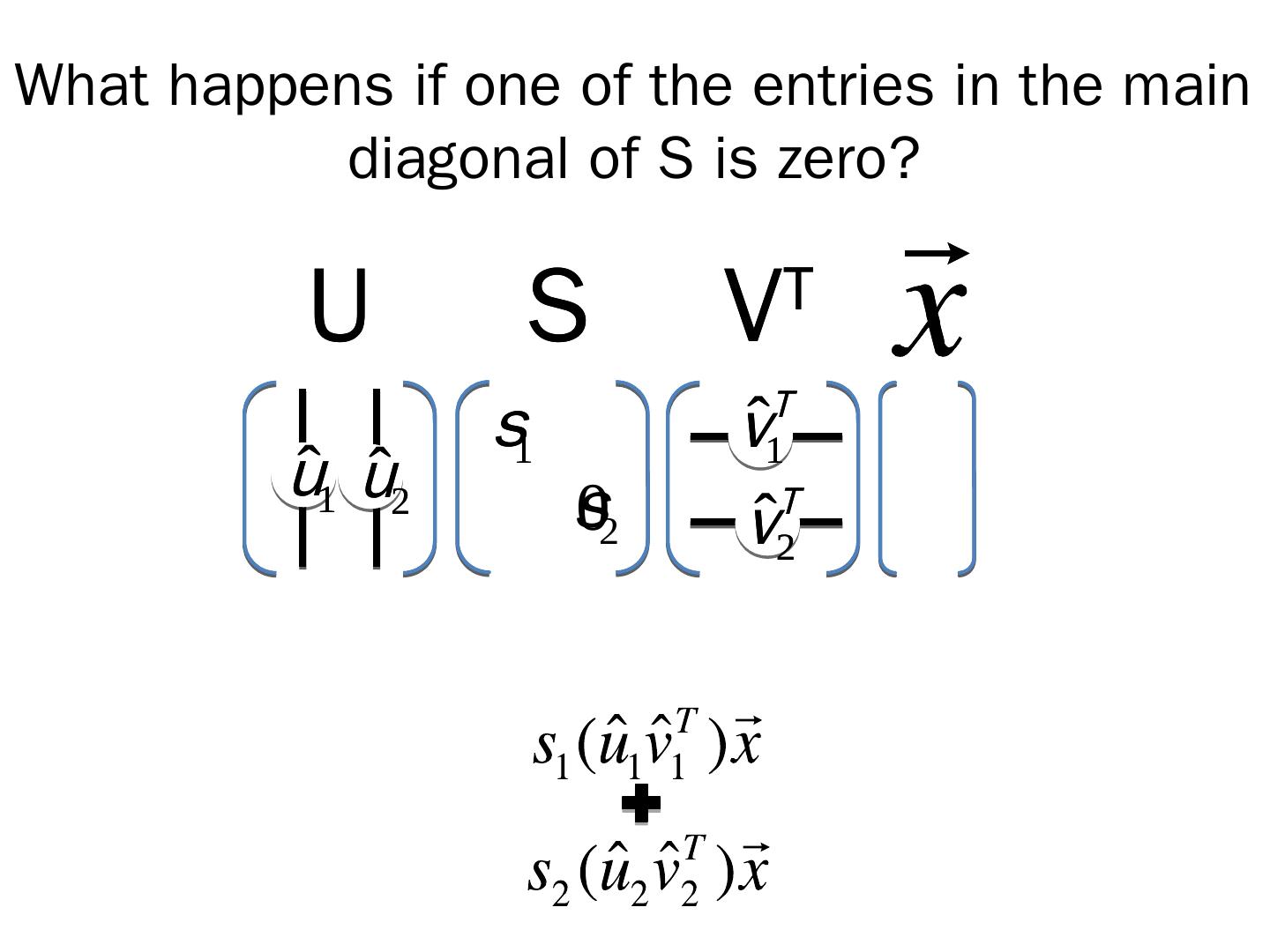

20 .What happens if one of the entries in the main diagonal of S is zero? U S V T s1 ˆv1T uˆ1 uˆ2 s02 T vˆ2

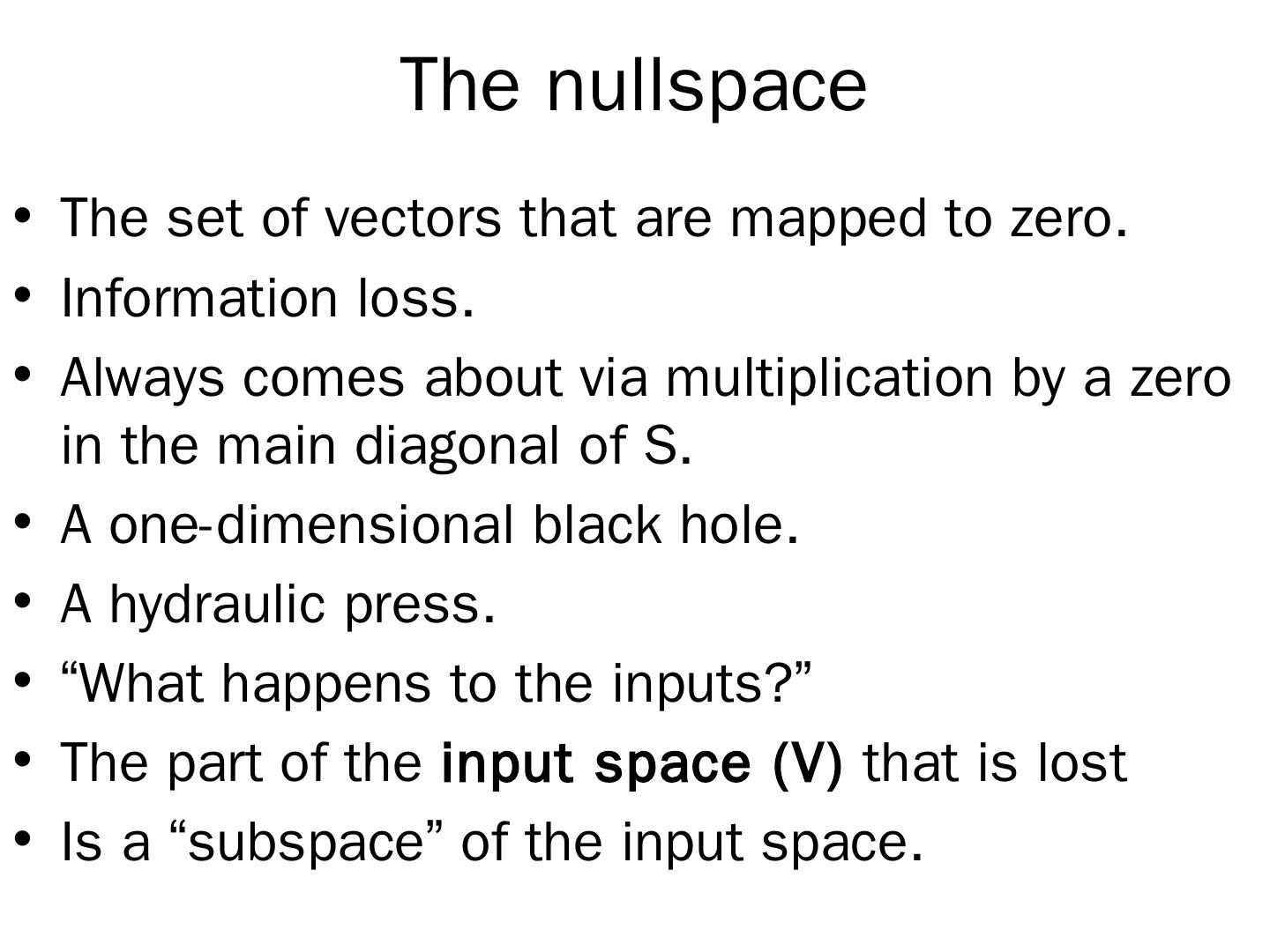

21 . The nullspace • The set of vectors that are mapped to zero. • Information loss. • Always comes about via multiplication by a zero in the main diagonal of S. • A one-dimensional black hole. • A hydraulic press. • “What happens to the inputs?” • The part of the input space (V) that is lost • Is a “subspace” of the input space.

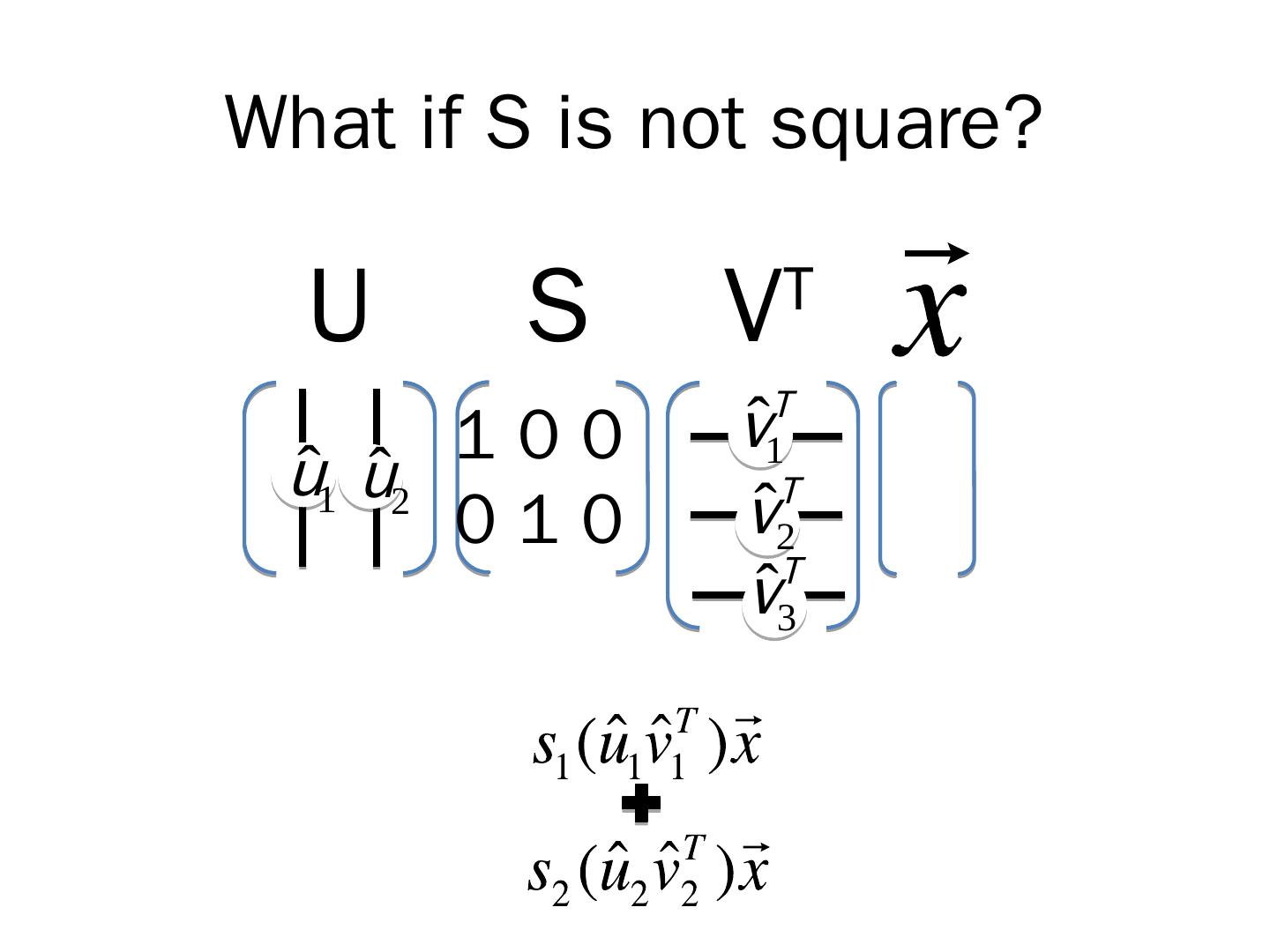

22 .What if S is not square? U S V T 1 0 0 ˆv1T uˆ1 uˆ2 T 010 vˆ2 T vˆ3

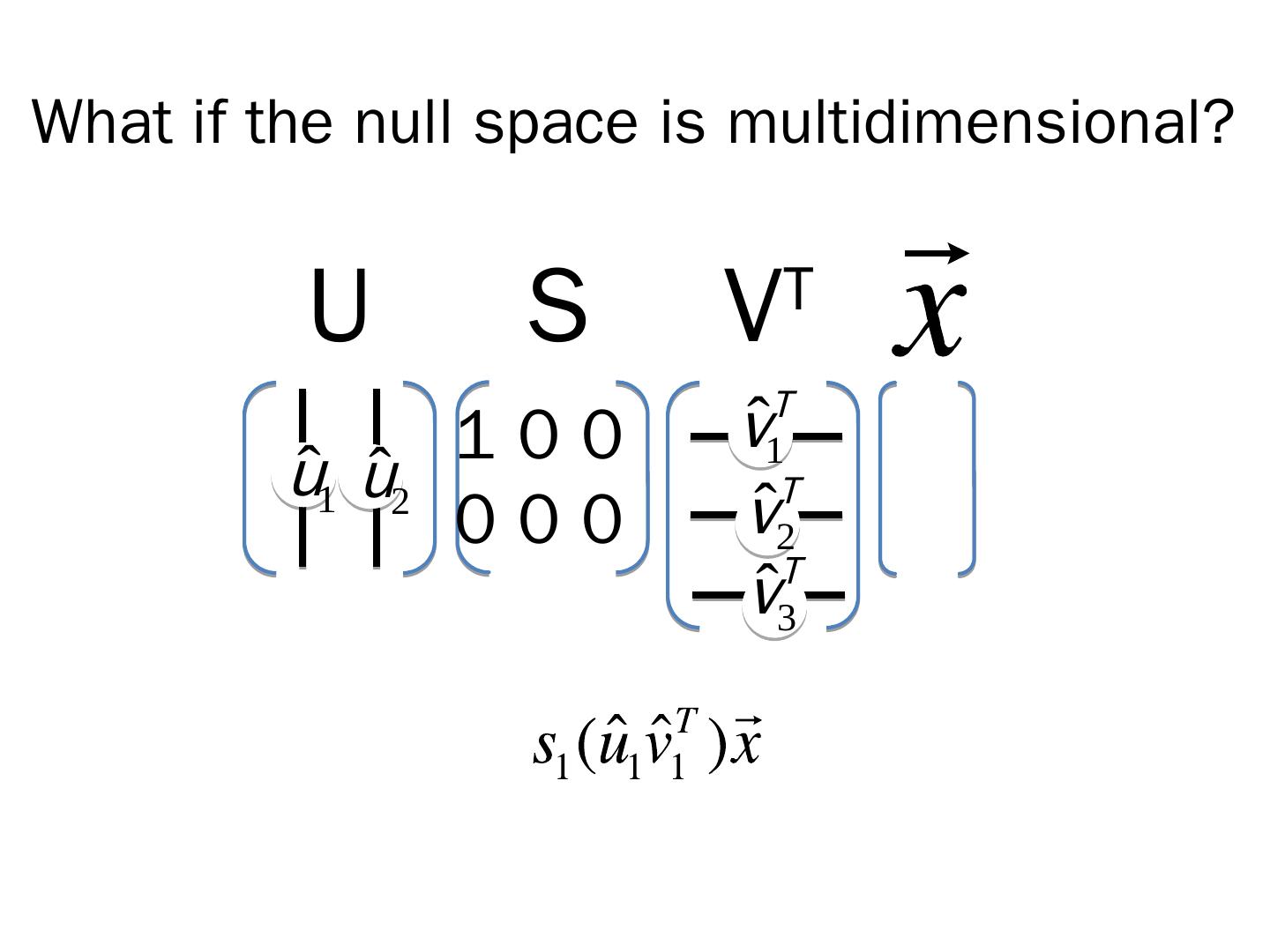

23 .What if the null space is multidimensional? U S V T 1 0 0 ˆv1T uˆ1 uˆ2 T 000 vˆ2 T vˆ3

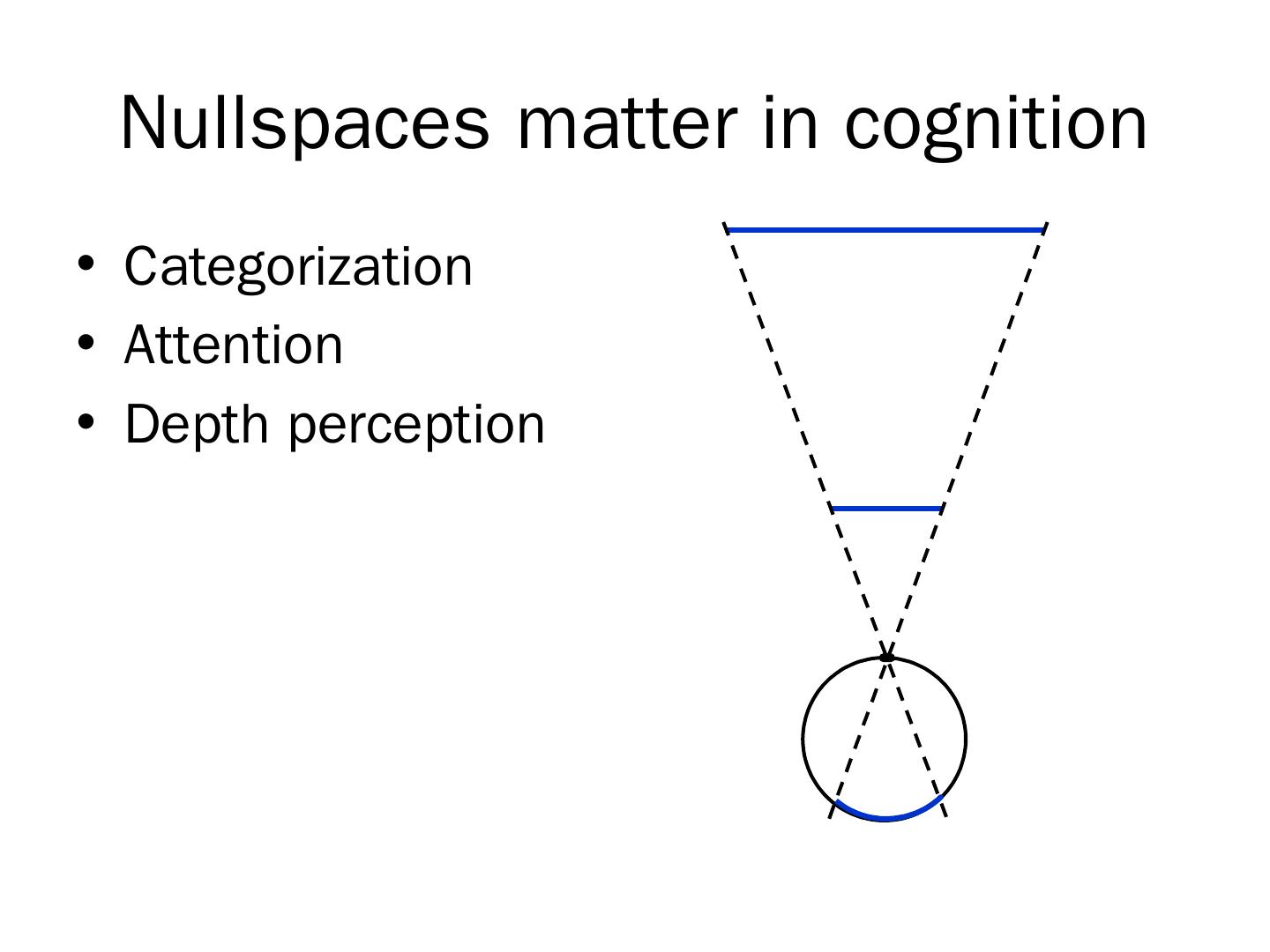

24 . Nullspaces matter in cognition • Categorization • Attention • Depth perception

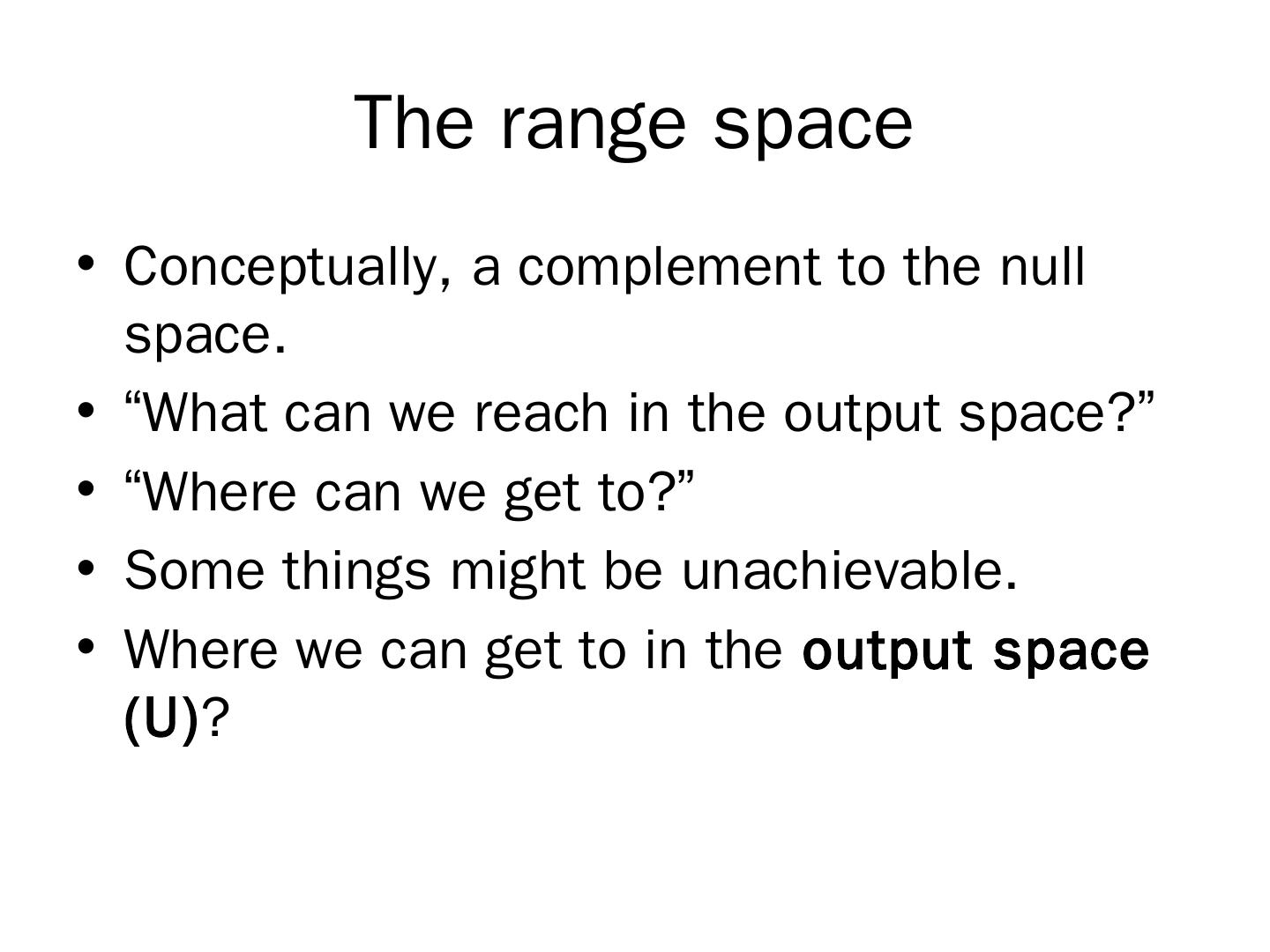

25 . The range space • Conceptually, a complement to the null space. • “What can we reach in the output space?” • “Where can we get to?” • Some things might be unachievable. • Where we can get to in the output space (U)?

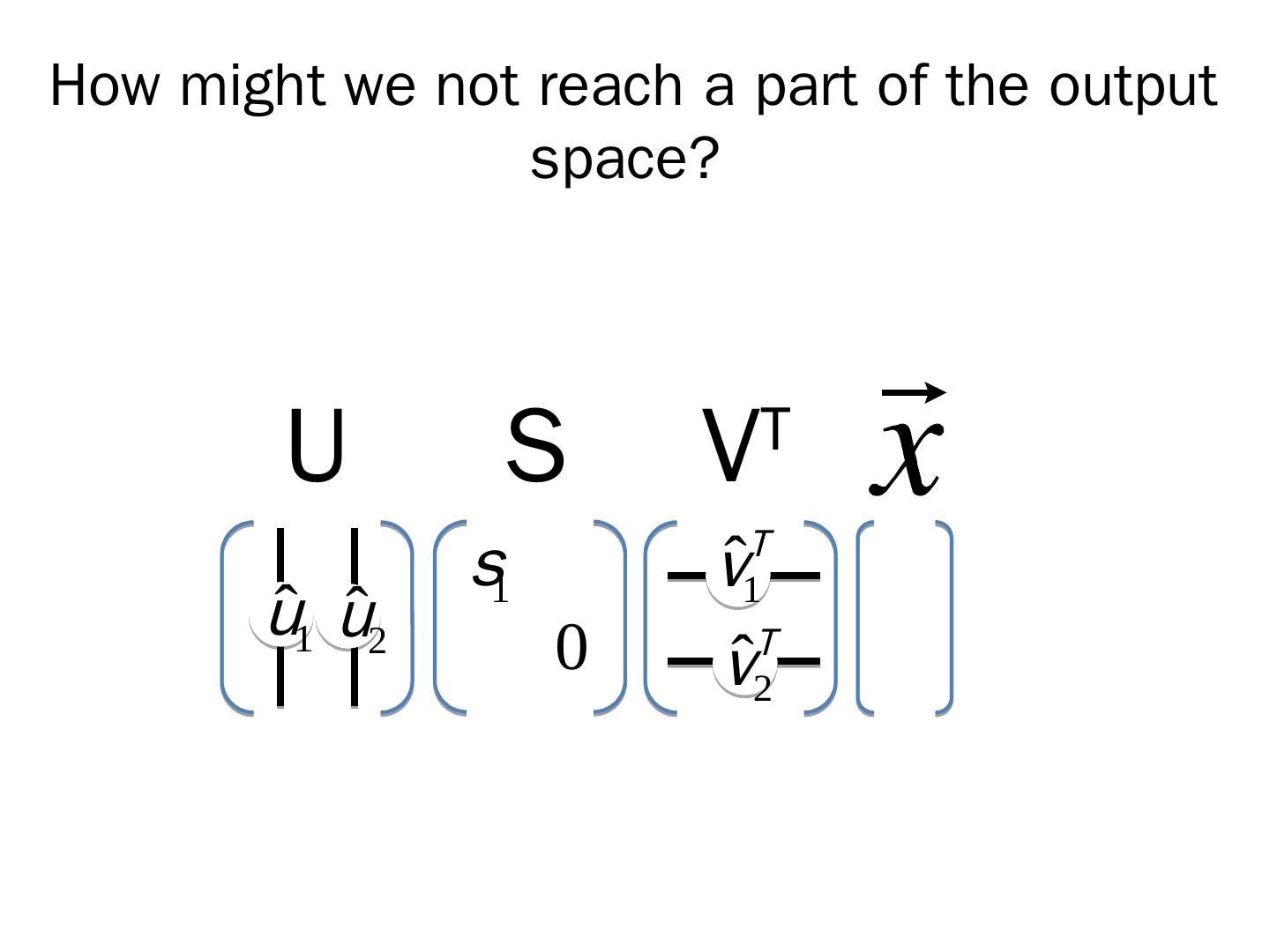

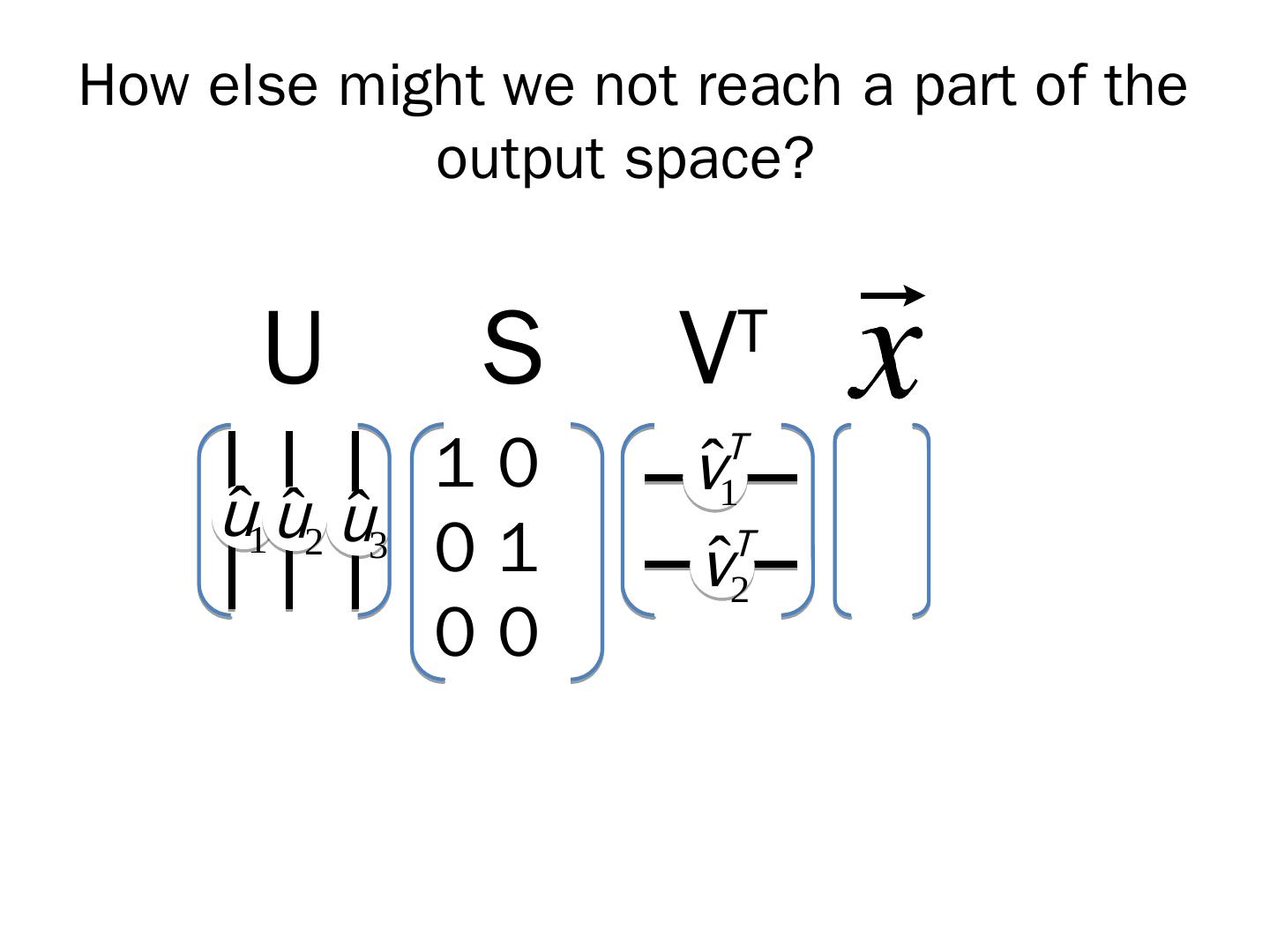

26 .How might we not reach a part of the output space? U S V T s1 vˆT uˆ1 uˆ2 0 1 T vˆ2

27 . The inverse question • If I observe the outputs of a linear system and watch what is coming, could I figure out what the inputs were? • Related problem: If you start with 2 things in the input space and run them through the system and compare the outputs, can we still distinguish them as different? • So when is the linear system invertible? • How might it not be?

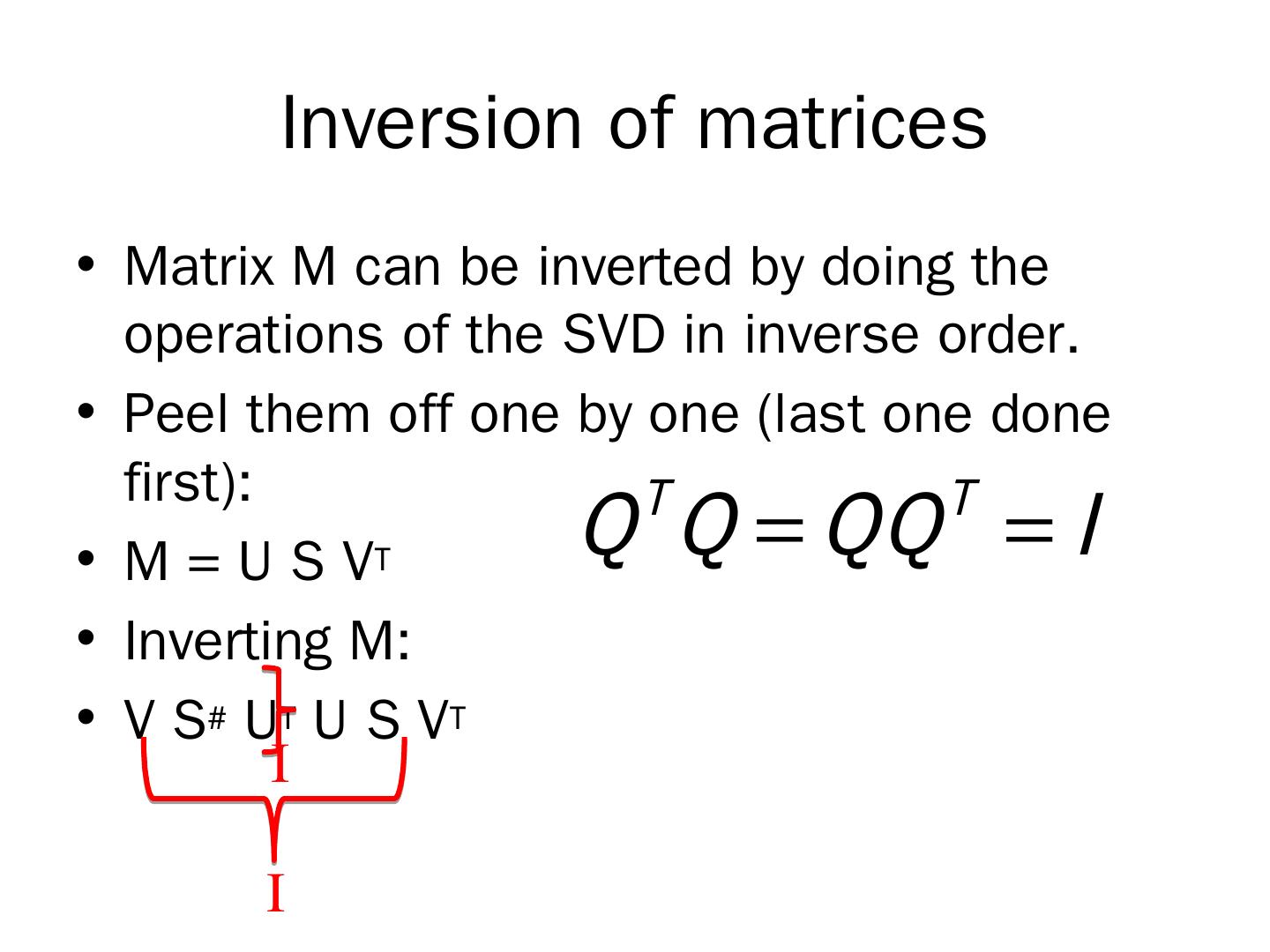

28 . Inversion of matrices • Matrix M can be inverted by doing the operations of the SVD in inverse order. • Peel them off one by one (last one done first): T T • M = U S VT Q Q QQ I • Inverting M: • V S# U T U S V T I I

29 . The pseudoinverse • “The best we can do”. • We recover the information we can. • The non-zero dimensions in the diagonal of S.