- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

database management systems

展开查看详情

1 . What’s Really New with NewSQL? Andrew Pavlo Matthew Aslett Carnegie Mellon University 451 Research pavlo@cs.cmu.edu matthew.aslett@451research.com ABSTRACT The late 1980s and early 1990s brought about a new class A new class of database management systems (DBMSs) called of DBMSs that were designed to overcome the much touted NewSQL tout their ability to scale modern on-line transac- impedance mismatch between the relational model and object- tion processing (OLTP) workloads in a way that is not possible oriented programming languages [65]. These object-oriented with legacy systems. The term NewSQL was first used by one DBMSs, however, never saw wide-spread market adoption be- of the authors of this article in a 2011 business analysis report cause they lacked a standard interface like SQL. But many discussing the rise of new database systems as challengers to of the ideas from them were eventually incorporated in rela- these established vendors (Oracle, IBM, Microsoft). The other tional DBMSs when the major vendors added object and XML author was working on what became one of the first examples support a decade later, and then again in document-oriented of a NewSQL DBMS. Since then several companies and re- NoSQL systems over two decades later. search projects have used this term (rightly and wrongly) to The other notable event during the 1990s was the start of describe their systems. today’s two major open-source DBMS projects. MySQL was Given that relational DBMSs have been around for over four started in Sweden in 1995 based on the earlier ISAM-based decades, it is justifiable to ask whether the claim of NewSQL’s mSQL system. PostgreSQL began in 1994 when two Berke- superiority is actually true or whether it is simply marketing. ley graduate students forked the original QUEL-based Post- If they are indeed able to get better performance, then the next gres code from the 1980s to add support for SQL. question is whether there is anything scientifically new about The 2000s brought the arrival of Internet applications that them that enables them to achieve these gains or is it just that had more challenging resource requirements than applications hardware has advanced so much that now the bottlenecks from from previous years. They needed to scale to support large earlier years are no longer a problem. number of concurrent users and had to be on-line all the time. To do this, we first discuss the history of databases to under- But the database for these new applications was consistently stand how NewSQL systems came about. We then provide a found to be a bottleneck because the resource demands were detailed explanation of what the term NewSQL means and the much greater than what DBMSs and hardware could support different categories of systems that fall under this definition. at the time. Many tried the most obvious option of scaling their DBMS vertically by moving the database to a machine with better hardware. This, however, only improves perfor- 1. A BRIEF HISTORY OF DBMSS mance so much and has diminishing returns. Furthermore, The first DBMSs came on-line in the mid 1960s. One of the moving the database from one machine to another is a com- first was IBM’s IMS that was built to keep track of the supplies plex process and often requires significant downtime, which is and parts inventory for the Saturn V and Apollo space explo- unacceptable for these Web-based applications. To overcome ration projects. It helped introduce the idea that an applica- this problem, some companies created custom middleware to tion’s code should be separate from the data that it operates on. shard single-node DBMSs over a cluster of less expensive ma- This allows developers to write applications that only focus on chines. Such middleware presents a single logical database to the access and manipulation of data, and not the complications the application that is stored across multiple physical nodes. and overhead associated with how to actually perform these When the application issues queries against this database, the operations. IMS was later followed by the pioneering work in middleware redirects and/or rewrites them to distribute their the early 1970s on the first relational DBMSs, IBM’s System execution on one or more nodes in the cluster. The nodes exe- R and the University of California’s INGRES. INGRES was cute these queries and send the results back to the middleware, soon adopted at other universities for their information sys- which then coalesces them into a single response to the ap- tems and was subsequently commercialized in the late 1970s. plication. Two notable examples of this middleware approach Around the same time, Oracle released the first version of their were eBay’s Oracle-based cluster [53] and Google’s MySQL- DBMS that was similar to System R’s design. Other compa- based cluster [54]. This approach was later adopted by Face- nies were founded in the early 1980s that sought to repeat the book for their own MySQL cluster that is still used today. success of the first commercial DBMSs, including Sybase and Sharding middleware works well for simple operations like Informix. Although IBM never made System R available to reading or updating a single record. It is more difficult, how- the public, it later released a new relational DBMS (DB2) in ever, to execute queries that update more than one record in 1983 that used parts of the System R code base. a transaction or join tables. As such, these early middleware SIGMOD Record, June 2016 (Vol. 45, No. 2) 45

2 .systems did not support these types of operations. eBay’s mid- 2. THE RISE OF NEWSQL dleware in 2002, for example, required their developers to im- Our definition of NewSQL is that they are a class of mod- plement all join operations in application-level code. ern relational DBMSs that seek to provide the same scalable Eventually some of these companies moved away from us- performance of NoSQL for OLTP read-write workloads while ing middleware and developed their own distributed DBMSs. still maintaining ACID guarantees for transactions. In other The motivation for this was three-fold. Foremost was that tra- words, these systems want to achieve the same scalability of ditional DBMSs at that time were focused on consistency and NoSQL DBMSs from the 2000s, but still keep the relational correctness at the expense of availability and performance. But model (with SQL) and transaction support of the legacy DBMSs this trade-off was deemed inappropriate for Web-based appli- from the 1970–80s. This enables applications to execute a cations that need to be on-line all the time and have to sup- large number of concurrent transactions to ingest new infor- port a large number of concurrent operations. Secondly, it mation and modify the state of the database using SQL (in- was thought that there was too much overhead in using a full- stead of a proprietary API). If an application uses a NewSQL featured DBMS like MySQL as a “dumb” data store. Like- DBMS, then developers do not have to write logic to deal with wise, it was also thought that the relational model was not the eventually consistent updates as they would in a NoSQL sys- best way to represent an application’s data and that using SQL tem. As we discuss below, this interpretation covers a number was an overkill for simple look-up queries. of both academic and commercial systems. These problems turned out to be the origin of the impe- We note that there are data warehouse DBMSs that came out tus for the NoSQL1 movement in the mid to late 2000s [22]. in the mid-2000s that some people think meet this criteria (e.g., The key aspect of these NoSQL systems is that they forgo Vertica, Greenplum, Aster Data). These DBMSs target on-line strong transactional guarantees and the relational model of tra- analytical processing (OLAP) workloads and should not be ditional DBMSs in favor of eventual consistency and alterna- considered NewSQL systems. OLAP DBMSs are focused on tive data models (e.g., key/value, graphs, documents). This is executing complex read-only queries (i.e., aggregations, multi- because it was believed that these aspects of existing DBMSs way joins) that take a long time to process large data sets (e.g., inhibit their ability to scale out and achieve the high avail- seconds or even minutes). Each of these queries can be signif- ability that is needed to support Web-based applications. The icantly different than the previous. The applications targeted two most well-known systems that first followed this creed are by NewSQL DBMSs, on the other hand, are characterized as Google’s BigTable [23] and Amazon’s Dynamo [26]. Nei- executing read-write transactions that (1) are short-lived (i.e., ther of these two systems were available outside of their re- no user stalls), (2) touch a small subset of data using index spective company at first (although they are now as cloud ser- lookups (i.e., no full table scans or large distributed joins), and vices), thus other organizations created their own open source (3) are repetitive (i.e., executing the same queries with differ- clones of them. These include Facebook’s Cassandra (based ent inputs). Others have argued for a more narrow definition on BigTable and Dynamo) and PowerSet’s Hbase (based on where a NewSQL system’s implementation has to use (1) a BigTable). Other start-ups created their own systems that were lock-free concurrency control scheme and (2) a shared-nothing not necessarily copies of Google’s or Amazon’s systems but distributed architecture [57]. All of the DBMSs that we clas- still followed the tenets of the NoSQL philosophy; the most sify as NewSQL in Section 3 indeed share these properties and well-known of these is MongoDB. thus we agree with this assessment. By the end of the 2000s, there was now a diverse set of scal- able and more affordable distributed DBMSs available. The advantage of using a NoSQL system (or so people thought) 3. CATEGORIZATION was that developers could focus on the aspects of their ap- Given the above definition, we now examine the landscape plication that were more beneficial to their business or orga- of today’s NewSQL DBMSs. To simplify this analysis, we nization, rather than having to worry about how to scale the will group systems based on the salient aspects of their imple- DBMS. Many applications, however, are unable to use these mentation. The three categories that we believe best represent NoSQL systems because they cannot give up strong transac- NewSQL systems are (1) novel systems that are built from tional and consistency requirements. This is common for en- the ground-up using a new architecture, (2) middleware that terprise systems that handle high-profile data (e.g., financial re-implement the same sharding infrastructure that was devel- and order processing systems). Some organizations, most no- oped in the 2000s by Google and others, and (3) database-as-a- tably Google [24], have found that NoSQL DBMSs cause their service offerings from cloud computing providers that are also developers to spend too much time writing code to handle in- based on new architectures. consistent data and that using transactions makes them more Both authors have previously included alternative storage productive because they provide a useful abstraction that is engines for existing single-node DBMSs in our categorization easier for humans to reason about. Thus, the only options of NewSQL systems. The most common examples of these available for these organizations were to either purchase a more are replacements for MySQL’s default InnoDB storage engine powerful single-node machine and to scale the DBMS ver- (e.g., TokuDB, ScaleDB, Akiban, deepSQL). The advantage tically, or to develop their own custom sharding middleware of using a new engine is that an organization can get better that supports transactions. Both approaches are prohibitively performance without having to change anything in their ap- expensive and are therefore not an option for many. It is in this plication and still leverage the DBMS’s existing ecosystem environment that brought about NewSQL systems. (e.g., tools, APIs). The most interesting of these was ScaleDB because it provided transparent sharding underneath the sys- 1 The NoSQL community argues that the sobriquet should now tem without using middleware by redistributing execution be- be interpreted as “Not Only SQL”, since some of these systems tween storage engines; the company, however, has since piv- have since support some dialect of SQL. oted to another problem domain. There has been other sim- 46 SIGMOD Record, June 2016 (Vol. 42, No. 2)

3 .ilar extensions for systems other than MySQL. Microsoft’s to the more popular DBMS vendors. It also means that an in-memory Hekaton OLTP engine for SQL Server integrates organization will potentially lose access to existing adminis- almost seamlessly with the traditional, disk-resident tables. tration and reporting tools. Some DBMSs, like Clustrix and Others use Postgres’ foreign data wrappers and API hooks to MemSQL, avoid this problem by maintaining compatibility achieve the same type of integration but target OLAP work- with the MySQL wire protocol. loads (e.g., Vitesse, CitusDB). We now assert that such storage engines and extensions for Examples: Clustrix [6], CockroachDB [7], Google Span- single-node DBMSs are not representative of NewSQL sys- ner [24], H-Store [8], HyPer [39], MemSQL [11], NuoDB [14], tems and omit them from our taxonomy. MySQL’s InnoDB SAP HANA [55], VoltDB [17]. has improved significantly in terms of reliability and perfor- mance, so the benefits of switching to another engine for OLTP 3.2 Transparent Sharding Middleware applications are not that pronounced. We acknowledge that the There are now products available that provide the same kind benefits from switching from the row-oriented InnoDB engine of sharding middleware that eBay, Google, Facebook, and other to a column-store engine for OLAP workloads are more signif- companies developed in the 2000s. These allow an organi- icant (e.g., Infobright, InfiniDB). But in general, the MySQL zation to split a database into multiple shards that are stored storage engine replacement business for OLTP workloads is across a cluster of single-node DBMS instances. Sharding is the graveyard of failed database projects. different than database federation technologies of the 1990s because each node (1) runs the same DBMS, (2) only has a 3.1 New Architectures portion of the overall database, and (3) is not meant to be ac- This category contains the most interesting NewSQL sys- cessed and updated independently by separate applications. tems for us because they are new DBMSs built from scratch. The centralized middleware component routes queries, co- That is, rather than extending an existing system (e.g., Mi- ordinates transactions, as well as manages data placement, repli- crosoft’s Hekaton for SQL Server), they are designed from cation, and partitioning across the nodes. There is typically a a new codebase without any of the architectural baggage of shim layer installed on each DBMS node that communicates legacy systems. All of the DBMSs in this category are based with the middleware. This component is responsible for exe- on distributed architectures that operate on shared-nothing re- cuting queries on behalf of the middleware at its local DBMS sources and contain components to support multi-node con- instance and returning results. All together, these allow mid- currency control, fault tolerance through replication, flow con- dleware products to present a single logical database to the trol, and distributed query processing. The advantage of us- application without needing to modify the underlying DBMS. ing a new DBMS that is built for distributed execution is that The key advantage of using a sharding middleware is that all parts of the system can be optimized for multi-node envi- they are often a drop-in replacement for an application that ronments. This includes things like the query optimizer and is already using an existing single-node DBMS. Developers communication protocol between nodes. For example, most do not need to make any changes to their application to use NewSQL DBMSs are able to send intra-query data directly the new sharded database. The most common target for mid- between nodes rather than having to route them to a central dleware systems is MySQL. This means that in order to be location like with some middleware systems. MySQL compatible, the middleware must support the MySQL Every one of the DBMSs in this category (with the excep- wire protocol. Oracle provides the MySQL Proxy [13] and tion of Google Spanner) also manages their own primary stor- Fabric [12] toolkits to do this, but others have written their age, either in-memory or on disk. This means that the DBMS owning protocol handler library to avoid GPL licensing issues. is responsible for distributing the database across its resources Although middleware makes it easy for an organization to with a custom engine instead of relying on an off-the-shelf dis- scale their database out across multiple nodes, such systems tributed filesystem (e.g., HDFS) or storage fabric (e.g., Apache still have to use a traditional DBMS on each node (e.g., MySQL, Ignite). This is an important aspect of them because it allows Postgres, Oracle). These DBMSs are based on the disk-oriented the DBMS to “send the query to the data” rather than “bring architecture that was developed in the 1970s, and thus they the data to the query,” which results in significantly less net- cannot use a storage manager or concurrency control scheme work traffic since transmitting the queries is typically less net- that is optimized for memory-oriented storage like in some work traffic than having to transmit data (not just tuples, but of the NewSQL systems that are built on new architectures. also indexes and materialized views) to the computation. Previous research has shown that the legacy components of Managing their own storage also enables a DBMS to em- disk-oriented architectures is a significant encumbrance that ploy more sophisticated replication schemes than what is pos- prevents these traditional DBMSs from scaling up to take ad- sible with the block-based replication scheme used in HDFS. vantage of higher CPU core counts and larger memory capac- In general, it allows these DBMSs to achieve better perfor- ities [38]. The middleware approach can also incur redundant mance than other systems that are layered on top of other query planning and optimization on sharded nodes for com- existing technologies; examples of this include the “SQL on plex queries (i.e., once at the middleware and once on the in- Hadoop” systems like Trafodion [4] and Splice Machine [16] dividual DBMS nodes), but this does allow each node to apply that provide transactions on top of Hbase. As such, we believe their own local optimizations on each query. that such systems should not be considered NewSQL. But there are downsides to using a DBMS based on a new Examples: AgilData Scalable Cluster 2 [1], MariaDB MaxS- architecture. Foremost is that many organizations are wary of cale [10], ScaleArc [15], ScaleBase3 . adopting technologies that are too new and un-vetted with a 2 large installation base. This means that the number of people Prior to 2015, AgilData Cluster was known as dbShards. 3 that are experienced in the system is much smaller compared ScaleBase was acquired by ScaleArc in 2015 and is no longer sold. SIGMOD Record, June 2016 (Vol. 45, No. 2) 47

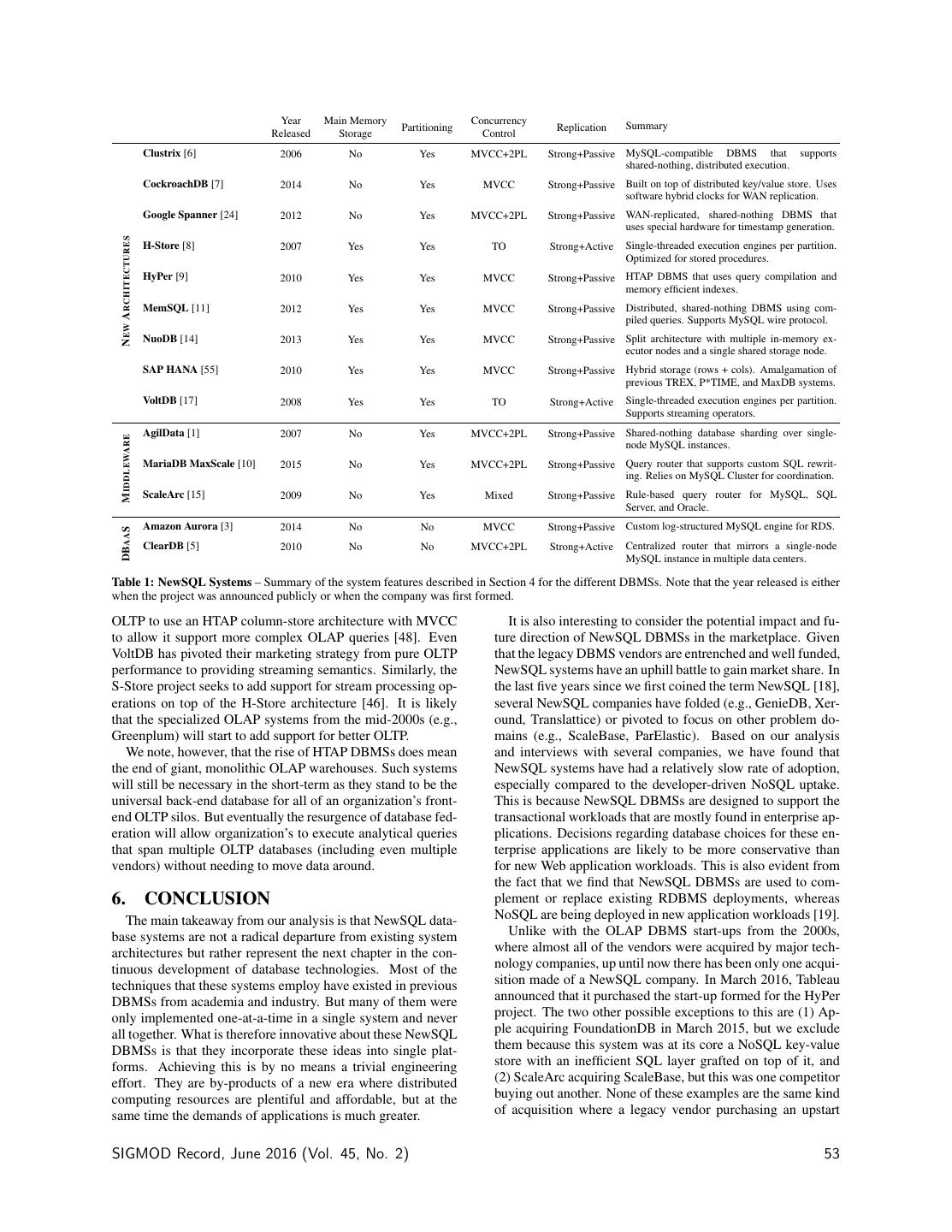

4 .3.3 Database-as-a-Service sumed to be on a block-addressable durable storage device, Lastly, there are cloud computing providers that offer NewSQL like an SSD or HDD. Since reading and writing to these de- database-as-a-service (DBaaS) products. With these services, vices is slow, DBMSs use memory to cache blocks read from organizations do not have to maintain the DBMS on either disk and to buffer updates from transactions. This was nec- their own private hardware or on a cloud-hosted virtual ma- essary because historically memory was much more expen- chine (VM). Instead, the DBaaS provider is responsible for sive and had a limited capacity compared to disks. We have maintaining the physical configuration of the database, includ- now reached the point, however, where capacities and prices ing system tuning (e.g., buffer pool size), replication, and back- are such that it is affordable to store all but the largest OLTP ups. The customer is provided with a connection URL to the databases entirely in memory. The benefit of this approach DBMS, along with a dashboard or API to control the system. is that it enables certain optimizations because the DBMS no DBaaS customers pay according to their expected applica- longer has to assume that a transaction could access data at any tion’s resource utilization. Since database queries vary widely time that is not in memory and will have to stall. Thus, these in how they use computing resources, DBaaS providers typ- systems can get better performance because many of the com- ically do not meter query invocations in the same way that ponents that are necessary to handle these cases, like a buffer they meter operations in block-oriented storage services (e.g., pool manager or heavy-weight concurrency control schemes, Amazon’s S3, Google’s Cloud Storage). Instead, customers are not needed [38]. subscribe to a pricing tier that specifies the maximum resource There are several NewSQL DBMSs that are based on a main utilization threshold (e.g., storage size, computation power, memory storage architecture, including both academic (e.g., memory allocation) that the provider will guarantee. H-Store, HyPer) and commercial (e.g., MemSQL, SAP HANA, As in most aspects of cloud computing, the largest com- VoltDB) systems. These systems perform significantly better panies are the major players in the DBaaS field due to the than disk-based DBMSs for OLTP workloads because of this economies of scale. But almost all of the DBaaSs just pro- main memory orientation. vide a managed instance of a traditional, single-node DBMS The idea of storing a database entirely in main memory is (e.g., MySQL): notable examples include Google Cloud SQL, not a new one [28, 33]. The seminal research at the University Microsoft Azure SQL, Rackspace Cloud Database, and Sales- of Wisconsin-Madison in the early 1980s established the foun- force Heroku. We do not consider these to be NewSQL sys- dation for many aspects of main memory DBMSs [43], includ- tems as they use the same underlying disk-oriented DBMSs ing indexes, query processing, and recovery algorithms. In based on the 1970s architectures. Some vendors, like Mi- that same decade, the first distributed main-memory DBMSs, crosoft, retro-fitted their DBMS to provide better support for PRISMA/DB, was also developed [40]. The first commercial multi-tenant deployments [21]. main memory DBMSs appeared in 1990s; Altibase [2], Ora- We instead regard only those DBaaS products that are based cle’s TimesTen [60], and AT&T’s DataBlitz [20] were early on a new architecture as NewSQL. The most notable examples proponents of this approach. is Amazon’s Aurora for their MySQL RDS. Its distinguish- One thing that is new with main memory NewSQL systems ing feature over InnoDB is that it uses a log-structured storage is the ability to evict a subset of the database out to persistent manager to improve I/O parallelism. storage to reduce its memory footprint. This allows the DBMS There are also companies that do not maintain their own to support databases that are larger than the amount of mem- data centers but rather sell DBaaS software that run on top of ory available without having to switch back to a disk-oriented these public cloud platforms. ClearDB provides their own cus- architecture. The general approach is to use an internal track- tom DBaaS that can be deployed on all of the major cloud plat- ing mechanism inside of the system to identify which tuples forms. This has the advantage that it can distribute a database are not being accessed anymore and then chose them for evic- across different providers in the same geographical region to tion. H-Store’s anti-caching component moves cold tuples to avoid downtimes due to service outages. a disk-resident store and then installs a “tombstone” record in Aurora and ClearDB are the only two products available the database with the location of the original data [25]. When in this NewSQL category as of 2016. We note that several a transaction tries to access a tuple through one of these tomb- companies in this space have failed (e.g., GenieDB, Xeround), stones, it is aborted and then a separate thread asynchronously forcing their customers to scramble to find a new provider and retrieves that record and moves it back into memory. An- migrate their data out of those DBaaS before they were shut other variant for supporting larger-than-memory databases is down. We attribute their failure due to being ahead of market an academic project from EPFL that uses OS virtual mem- demand and from being out-priced from the major vendors. ory paging in VoltDB [56]. To avoid false negatives, all of these DBMSs retain the keys for evicted tuples in databases’ Examples: Amazon Aurora [3], ClearDB [5]. indexes, which inhibits the potential memory savings for those applications with many secondary indexes. Although not a NewSQL DBMS, Microsoft’s Project Siberia [29] for Heka- 4. THE STATE OF THE ART ton maintains a Bloom filter per index to reduce the in-memory We next discuss the features of NewSQL DBMSs to under- storage overhead of tracking evicted tuples. stand what (if anything) is novel in these systems. A summary Another DBMS that takes a different approach for larger- of our analysis is shown in Table 1. than-memory databases is MemSQL where an administrator can manually instruct the DBMS to store a table in a columnar 4.1 Main Memory Storage format. MemSQL does not maintain any in-memory tracking All of the major DBMSs use a disk-oriented storage archi- meta-data for these disk-resident tuples. It organizes this data tecture based on the original DBMSs from the 1970s. In these in log-structured storage to reduce the overhead of updates, systems, the primary storage location of the database is as- 48 SIGMOD Record, June 2016 (Vol. 42, No. 2)

5 .which are traditionally slow in OLAP data warehouses. tions finish correctly at different nodes. The NewSQL DBMSs that deviate from the homegenous 4.2 Partitioning / Sharding cluster node architecture are NuoDB and MemSQL. For NuoDB, The way that almost all of the distributed NewSQL DBMSs it designates one or more nodes as storage managers (SM) scale out is to split a database up into disjoint subsets, called that each store a partition of the database. The SMs splits either partitions or shards. a database into blocks (called “atoms” in NuoDB parlance). Distributed transaction processing on partitioned databases All other nodes in the cluster are designated as transaction en- is not a new idea. Many of the fundamentals of these sys- gines (TEs) that act as an in-memory cache of atoms. To pro- tems came from the seminal work by the great Phil Bernstein cess a query, a TE node retrieves all of the atoms that it needs (and others) in the SDD-1 project in the late 1970s [51]. In for that query (either from the appropriate SMs or from other the early 1980s, the teams behind the two pioneering, single- TEs). TEs acquire write-locks on tuples and then broadcasts node DBMSs, System R and INGRES, both also created dis- any changes to atoms to the other TEs and the SM. To avoid tributed versions of their respective systems. IBM’s R* was atoms from moving back and forth between nodes, NuoDB ex- a shared-nothing, disk-oriented distributed DBMS like SDD- poses load-balancing schemes to ensure that data that is used 1 [63]. The distributed version of INGRES is mostly remem- together often reside at the same TE. This means that NuoDB bered for its dynamic query optimization algorithm that re- ends up with the same partitioning scheme as the other dis- cursively breaks a distributed query into smaller pieces [31]. tributed DBMSs but without having to pre-partition the database Later, the GAMMA project [27] from the University of Wis- or identify the relationships between tables. consin-Madison explored different partitioning strategies. MemSQL also uses a similar heterogeneous architecture com- But these earlier distributed DBMSs never caught on for two prised of execution-only aggregator nodes and leaf nodes that reasons. The first of these was that computing hardware in the store the actual data. The difference between these two sys- 20th century was so expensive that most organizations could tems is in how they reduce the amount of data that is pulled not afford to deploy their database on a cluster of machines. from the storage nodes to the execution nodes. With NuoDB, The second issue was that the application demand for a high- the TEs cache atoms to reduce the amount data that they read performance distributed DBMS was simply not there. Back from the SMs. MemSQL’s aggregator nodes do not cache any then the expected peak throughput of a DBMS was typically data, but the leaf nodes execute parts of queries to reduce the measured at tens to hundreds of transactions per second. We amount of data that is sent to the aggregator nodes; this is not now live in an era where both of these assumptions are no possible in NuoDB because the SMs are only a data store. longer true. Creating a large-scale, data-intensive application These two systems are able to add additional execution re- is easier now than it ever has been, in part due to the prolifera- sources to the DBMS’s cluster (NuoDB’s TE nodes, Mem- tion of open-source distributed system tools, cloud computing SQL’s aggregator nodes) without needing to re-partition the platforms, and affordable mobile devices. database. A research prototype of SAP HANA also explored The database’s tables are horizontally divided into multiple using this approach [36]. It remains to be seen, however, whether fragments whose boundaries are based on the values of one (or such a heterogeneous architecture is superior to a homegenous more) of the table’s columns (i.e., the partitioning attributes). one (i.e., were each node both stores data and executes queries) The DBMS assigns each tuple to a fragment based on the val- in terms of either performance or operational complexity. ues of these attributes using either range or hash partitioning. Another aspect of partitioning in NewSQL systems that is Related fragments from multiple tables are combined together new is that some of them support live migration. This al- to form a partition that is managed by a single node. That node lows the DBMS to move data between physical resources to is responsible for executing any query that needs to access data re-balance and alleviate hotspots, or to increase/decrease the stored in its partition. Only the DBaaS systems (Amazon Au- DBMS’s capacity without any interruption to service. This rora, ClearDB) do not support this type of partitioning. is similar to re-balancing in NoSQL systems, but it is more Ideally, the DBMS should be able to also distribute the ex- difficult because a NewSQL DBMS has to maintain ACID ecution of a query to multiple partitions and then combine guarantees for transactions during the migration [30]. There their results together into a single result. All of the NewSQL two approaches that DBMSs use to achieve this. The first systems except for ScaleArc that support native partitionining is to organize the database in many coarse-grained “virtual” provide this functionality. (i.e., logical) partitions that are spread amongst the physical The databases for many OLTP applications have a key prop- nodes [52]. Then when the DBMS needs to re-balance, it erty that makes them amenable to partitioning. Their database moves these virtual partitions between nodes. This is the ap- schemas can be transposed into a tree-like structure where de- proach used in Clustrix and AgilData, as well as in NoSQL scendants in the tree have a foreign key relationship to the systems like Cassandra and DynamoDB. The other approach root [58]. The tables are then partitioned on the attributes is for the DBMS to perform more fine-grained re-balancing involved in these relationships such that all of the data for by redistributing individual tuples or groups of tuples through a single entity are co-located together in the same partition. range partitioning. This is akin to the auto-sharding feature For example, the root of the tree could be the customer table, in the MongoDB NoSQL DBMS. It is used in systems like and the database is partitioned such that each customer, along ScaleBase and H-Store [30]. with their order records and account information, are stored together. The benefit of this is that it allows most (if not all) 4.3 Concurrency Control transactions to only need to access data at a single partition. Concurrency control scheme is the most salient and impor- This in turn reduces the communication overhead of the sys- tant implementation detail of a transaction processing DBMS tem because it does not have to use an atomic commitment as it affects almost all aspects of the system. Concurrency con- protocol (e.g., two-phase commit) to make sure that transac- trol permits end-users to access a database in a multi-program- SIGMOD Record, June 2016 (Vol. 45, No. 2) 49

6 .med fashion while preserving the illusion that each of them is is also used in both Google’s Spanner, NuoDB, and Clustrix. executing their transaction alone on a dedicated system. It es- NuoDB improves on the original MVCC by employing a gos- sentially provides the atomicity and isolation guarantees in the sip protocol to broadcast versioning information between nodes. system, and as such it influences the entire system’s behavior. All of the middleware and DBaaS services inherit the con- Beyond which scheme a system uses, another important as- currency control scheme of their underlying DBMS architec- pect of the design of a distributed DBMS is whether the sys- ture; since most of them use MySQL, this makes them 2PL tem uses a centralized or decentralized transaction coordina- with MVCC systems. tion protocol. In a system with a centralized coordinator, all We regard the concurrency control implementation in Span- transactions’ operations have to go through the coordinator, ner (along with its descendants F1 [54] and SpannerSQL) as which then makes decisions about whether transactions are al- one of the most novel of the NewSQL systems. The actual lowed to proceed or not. This is the same approach used by scheme itself is based on the 2PL and MVCC combination de- the TP monitors of the 1970–1980s (e.g., IBM CICS, Oracle veloped in previous decades. But what makes Spanner differ- Tuxedo). In a decentralized system, each node maintains the ent is that it uses hardware devices (e.g., GPS, atomic clocks) state of transactions that access the data that it manages. The for high-precision clock synchronization. The DBMS uses nodes then have to coordinate with each other to determine these clocks to assign timestamps to transactions to enforce whether concurrent transactions conflict. A decentralized co- consistent views of its multi-version database over wide-area ordinator is better for scalability but requires that the clocks in networks. CockroachDB also purports to provide the same the DBMS nodes are highly synchronized in order to generate kind of consistency for transactions across data centers as Span- a global ordering of transactions [24]. ner but without the use of atomic clocks. They instead rely on The first distributed DBMSs from the 1970–80s used two- a hybrid clock protocol that combines loosely synchronized phase locking (2PL) schemes. SDD-1 was the first DBMS hardware clocks and logical counters [41]. specifically designed for distributed transaction processing ac- Spanner is also noteworthy because it heralds Google’s re- ross a cluster of shared-nothing nodes managed by a central- turn to using transactions for its most critical services. The ized coordinator. IBM’s R* was similar to SDD-1, but the authors of Spanner even remark that it is better to have their main difference was that the coordination of transactions in application programmers deal with performance problems due R* was completely decentralized; it used distributed 2PL pro- to overuse of transactions, rather than writing code to deal with tocol where transactions locked data items that they access di- the lack of transactions as one does with a NoSQL DBMS [24]. rectly at nodes. The distributed version of INGRES also used Lastly, the only commercial NewSQL DBMS that is not us- decentralized 2PL with centralized deadlock detection. ing some MVCC variant is VoltDB. This system still uses TO Almost all of the NewSQL systems based on new archi- concurrency control, but instead of interleaving transactions tectures eschew 2PL because the complexity of dealing with like in MVCC, it schedules transactions to execute one-at-a- deadlocks. Instead, the current trend is to use variants of times- time at each partition. It also uses a hybrid architecture where tamp ordering (TO) concurrency control where the DBMS as- single-partition transactions are scheduled in a decentralized sumes that transactions will not execute interleaved operations manner but multi-partition transactions are scheduled with a that will violate serializable ordering. The most widely used centralized coordinator. VoltDB orders transactions based on protocol in NewSQL systems is decentralized multi-version logical timestamps and then schedules them for execution at concurrency control (MVCC) where the DBMS creates a new a partition when it is their turn. When a transaction executes version of a tuple in the database when it is updated by a trans- at a partition, it has exclusive access to all of the data at that action. Maintaining multiple versions potentially allows trans- partition and thus the system does not have to set fine-grained actions to still complete even if another transaction updates the locks and latches on its data structures. This allows transac- same tuples. It also allows for long-running, read-only trans- tions that only have to access a single partition to execute effi- actions to not block on writers. This protocol is used in al- ciently because there is no contention from other transactions. most all of the NewSQL systems based on new architectures, The downside of partition-based concurrency control is that like MemSQL, HyPer, HANA, and CockroachDB. Although it does not work well if transactions span multiple partitions there are engineering optimizations and tweaks that these sys- because the network communication delays cause nodes to sit tems use in their MVCC implementations to improve perfor- idle while they wait for messages. This partition-based con- mance, the basic concepts of the scheme are not new. The currency is not a new idea. An early variant of it was first first known work describing MVCC is a MIT PhD dissertation proposed in a 1992 paper by Hector Garcia-Molina [34] and from 1979 [49], while the first commercial DBMSs to use it implemented in the kdb system in late 1990s [62] and in H- were Digital’s VAX Rdb and InterBase in the early 1980s. We Store (which is the academic predecessor of VoltDB). note that the architecture of InterBase was designed by Jim In general, we find that there is nothing significantly new Starkey, who is also the original designer of NuoDB and the about the core concurrency control schemes in NewSQL sys- failed Falcon MySQL storage engine project. tems other than laudable engineering to make these algorithms Other systems use a combination of 2PL and MVCC to- work well in the context of modern hardware and distributed gether. With this approach, transactions still have to acquire operating environments. locks under the 2PL scheme to modify the database. When a transaction modifies a record, the DBMS creates a new ver- 4.4 Secondary Indexes sion of that record just as it would with MVCC. This scheme A secondary index contains a subset of attributes from a ta- allows read-only queries to avoid having to acquire locks and ble that are different than its primary key(s). This allows the therefore not block on writing transactions. The most famous DBMS to support fast queries beyond primary key or parti- implementation of this approach is MySQL’s InnoDB, but it tioning key look-ups. They are trivial to support in a non- partitioned DBMS because the entire database is located on a 50 SIGMOD Record, June 2016 (Vol. 42, No. 2)

7 .single node. The challenge with secondary indexes in a dis- before that transaction is considered committed (i.e., durable). tributed DBMS is that they cannot always be partitioned in The advantage of this approach is that replicas can serve read- the same manner as with the rest of the database. For exam- only queries and still be consistent. That is, if the application ple, suppose that the tables of a database are partitioned based receives an acknowledgement that a transaction has commit- on the customer’s table primary key. But then there are some ted, then any modifications made by that transaction are visible queries that want to do a reverse look-up from the customer’s to any subsequent transaction in the future regardless of what email address to the account. Since the tables are partitioned DBMS node they access. It also means that when a replica on the primary key, the DBMS will have to broadcast these fails, there are no lost updates because all the other nodes queries to every node, which is obviously inefficient. are synchronized. But maintaining this synchronization re- The two design decisions for supporting secondary indexes quires the DBMS to use an atomic commitment protocol (e..g, in a distributed DBMS are (1) where the system will store them two-phase commit) to ensure that all replicas agree with the and (2) how it will maintain them in the context of transac- outcome of a transaction, which has additional overhead and tions. In a system with a centralized coordinator, like with can lead to stalls if a node fails or if there is a network parti- sharding middleware, secondary indexes can reside on both tion/delay. This is why NoSQL systems opt for a weakly con- the coordinator node and the shard nodes. The advantage of sistent model (also called eventual consistency) where not all this approach is that there is only a single version of the index replicas have to acknowledge a modification before the DBMS in the entire system, and thus it is easier to maintain. notifies the application that the write succeeded. All of the NewSQL systems based on new architectures All of the NewSQL systems that we are aware of support are decentralized and use partitioned secondary indexes. This strongly consistent replication. But there is nothing novel about means that each node stores a portion of the index, rather than how these systems ensure this consistency. The fundamentals each node having a complete copy of it. The trade-off be- of state machine replication for DBMSs were studied back in tween partitioned and replicated indexes is that with the for- the 1970s [37, 42]. NonStop SQL was one of the first dis- mer queries may need to span multiple nodes to find what they tributed DBMSs built in the 1980s using strongly consistency are looking for but if a transaction updates an index it will only replication to provide fault tolerance in this same manner [59]. have to modify one node. In a replicated index, the roles are In addition to the policy of when a DBMS propagates up- reversed: a look-up query can be satisfied by just one node in dates to replicas, there are also two different execution mod- the cluster, but any time a transaction modifies the attributes els for how the DBMS performs this propagation. The first, referenced in secondary index’s underlying table (i.e., the key known as active-active replication, is where each replica node or the value), the DBMS has to execute a distributed transac- processes the same request simultaneously. For example, when tion that updates all copies of the index. a transaction executes a query, the DBMS executes that query An example of a decentralized secondary index that mixes in parallel at all of the replicas. This is different from active- both of these concepts is in Clustrix. The DBMS first main- passive replication where a request is first processed at a sin- tains a replicated, coarse-grained (i.e., range-based) index at gle node and then the DBMS transfers the resultant state to the each node that maps values to partitions. This mapping al- other replicas. Most NewSQL DBMSs implement this second lows the DBMS to route queries to the appropriate node using approach because they use a non-deterministic concurrency an attribute that is not the table’s partitioning attribute. These control scheme. This means that they cannot send queries to queries will then access a second partitioned index at that node replicas as they arrive on the master because they may get ex- that maps exact values to tuples. Such a two-tier approach re- ecuted in a different order on the replicas and the state of the duces the amount of coordination that is needed to keep the databases will diverge at each replica. This is because their replicated index in sync across the cluster since it only maps execution order depends on several factors, including network ranges instead of individual values. delays, cache stalls, and clock skew. The most common way that developers create secondary in- Deterministic DBMSs (e.g., H-Store, VoltDB, ClearDB) on dexes when using a NewSQL DBMS that does not support the other hand do not perform these additional coordination them is to deploy an index using an in-memory, distributed steps. This is because the DBMS guarantees that transactions’ cache, such as Memcached [32]. But using an external sys- operations execute in the same order on each replica and thus tem requires the application to maintain the cache since the the state of the database is guaranteed to be the same [44]. DBMSs will not automatically invalidate the external cache. Both VoltDB and ClearDB also ensure that the application does not execute queries that utilize sources of information 4.5 Replication that are external to the DBMS that may be different on each The best way that an organization can ensure high availabil- replica (e.g., setting a timestamp field to the local system clock). ity and data durability for their OLTP application is to replicate One aspect of the NewSQL systems that is different than their database. All modern DBMSs, including NewSQL sys- previous work outside of academia is the consideration of repli- tems, support some kind of replication mechanism. DBaaS cation over the wide-area network (WAN). This is a byproduct have a distinct advantage in this area because they hide all of modern operating environments where it is now trivial to of the gritty details of setting of replication from their cus- deploy systems across multiple data centers that are separated tomers. They make it easy to deploy a replicated DBMS with- by large geographical differences. Any NewSQL DBMS can out the administrator having to worry about transmitting logs be configured to provide synchronous updates of data over the and making sure that nodes are in sync. WAN, but this would cause significant slowdown for normal There are two design decisions when it comes to database operations. Thus, they instead provide asynchronous repli- replication. The first is how the DBMS enforces data consis- cation methods. To the best of our knowledge, Spanner and tency across nodes. In a strongly consistent DBMS, a transac- CockroachDB are the only NewSQL systems to provide a repli- tion’s writes must be acknowledged and installed at all replicas SIGMOD Record, June 2016 (Vol. 45, No. 2) 51

8 .cation scheme that is optimized for strongly consistent replicas ical data sets with new data [35]. This differs from traditional over the WAN. They again achieve this through a combination business intelligence operations from the previous decade that of atomic and GPS hardware clocks (in case of Spanner [24]), could only perform this analysis on historical data. Having a or hybrid clocks (in the case of CockroachDB [41]). shorter turnaround time is important in modern applications because data has immense value as soon as it is created, but 4.6 Crash Recovery that value diminishes over time. Another important feature of a NewSQL DBMS for provid- There are three approaches to supporting HTAP pipelines in ing fault tolerance is its crash recovery mechanism. But unlike a database application. The most common is to deploy sepa- traditional DBMSs where the main concern of fault tolerance rate DBMSs: one for transactions and another for analytical is to ensure that no updates are lost [47], newer DBMSs must queries. With this architecture, the front-end OLTP DBMS also minimize downtime. Modern web applications are ex- stores all of the new information generated from transactions. pected to be on-line all the time and site outages are costly. Then in the background, the system uses an extract-transform- The traditional approach to recovery in a single-node sys- load utility to migrate data from this OLTP DBMS to a second tem without replicas is that when the DBMS comes back on- back-end data warehouse DBMS. The application executes all line after a crash, it loads in the last checkpoint that it took complex OLAP queries in the back-end DBMS to avoid slow- from disk and then replays its write-ahead log (WAL) to re- ing down the OLTP system. Any new information generated turn the state of the database to where it was at the moment of from the OLAP system is pushed forward to front-end DBMS. the crash. The canonical method of this approach, known as Another prevailing system design, known as the lambda ar- ARIES [47], was invented by IBM researchers in the 1990s. chitecture [45], is to use a separate batch processing system All major DBMSs implement some variant of ARIES. (e.g., Hadoop, Spark) to compute a comprehensive view on In a distributed DBMS with replicas, however, the tradi- historical data, while simultaneously using a stream process- tional single-node approach is not directly applicable. This ing system (e.g., Storm [61], Spark Streaming [64]) to provide is because when the master node crashes, the system will pro- views of incoming data. In this split architecture, the batch mote one of the slave nodes to be the new master. When the processing system periodically rescans the data set and per- previous master comes back on-line, it cannot just load in its forms a bulk upload of the result to the stream processing sys- last checkpoint and rerun its WAL because the DBMS has con- tem, which then makes modifications based on new updates. tinued to process transactions and therefore the state of the There are several problems inherent with the bifurcated en- database has moved forward. The recovering node needs to get vironment of these two approaches. Foremost is that the time the updates from the new master (and potentially other repli- it takes to propagate changes between the separate systems cas) that it missed while it was down. There are two potential is often measured in minutes or even hours. This data trans- ways to do this. The first is for the recovering node to load fer inhibits an application’s ability to act on data immediately in its last checkpoint and WAL from its local storage and then when it is entered in the database. Second, the administrative pull log entries that it missed from the other nodes. As long overhead of deploying and maintaining two different DBMSs as the node can process the log faster than new updates are is non-trivial as personnel is estimated to be almost 50% of appended to it, the node will eventually converge to the same the total ownership cost of a large-scale database system [50]. state as the other replica nodes. This is possible if the DBMS It also requires the application developer to write a query for uses physical or physiological logging, since the time to apply multiple systems if they want to combine data from different the log updates directly to tuples is much less than the time databases. Some systems that try to achieve a single platform it takes to execute the original SQL statement. To reduce the by hiding this split system architecture; an example of this time it takes to recover, the other option is for the recovering is Splice Machine [16], but this approach has other technical node to discard its checkpoint and have system take a new one issues due to copying data from the OLTP system (Hbase) be- that the node will recover from. One additional benefit of this fore it can be used in the OLAP system (Spark). approach is that this same mechanism can also be used in the The third (and in our opinion better) approach is to use a DBMS to add a new replica node. single HTAP DBMS that supports the high throughput and low The middleware and DBaaS systems rely on the built-in latency demands of OLTP workloads, while also allowing for mechanisms of their underlying single-node DBMSs, but add complex, longer running OLAP queries to operate on both hot additional infrastructure for leader election and other manage- (transactional) and cold (historical) data. What makes these ment capabilities. The NewSQL systems that are based on new newer HTAP systems different from legacy general-purpose architectures use a combination of off-the-shelf components DBMSs is that they incorporate the advancements from the (e.g., ZooKeeper, Raft) and their own custom implementations last decade in the specialized OLTP (e.g., in-memory storage, of existing algorithms (e.g., Paxos). All of these are standard lock-free execution) and OLAP (e.g., columnar storage, vec- procedures and technologies that have been available in com- torized execution) systems, but within a single DBMS. mercial distributed systems since the 1990s. SAP HANA and MemSQL were the first NewSQL DBMSs to market themselves as HTAP systems. HANA achieves this by using multiple execution engines internally: one engine for 5. FUTURE TRENDS row-oriented data that is better for transactions and a different We foresee the next trend for database applications in the engine for column-oriented data that is better for analytical near future is the ability to execute analytical queries and ma- queries. MemSQL uses two different storage managers (one chine learning algorithms on freshly obtained data. Such work- for rows, one for columns) but mixes them together in a single loads, colloquially known as “real-time analytics” or hybrid execution engine. HyPer switched from a row-oriented system transaction-analytical processing (HTAP), seek to extrapolate with H-Store-style concurrency control that was focused on insights and knowledge by analyzing a combination of histor- 52 SIGMOD Record, June 2016 (Vol. 42, No. 2)

9 . Year Main Memory Concurrency Summary Partitioning Replication Released Storage Control Clustrix [6] 2006 No Yes MVCC+2PL Strong+Passive MySQL-compatible DBMS that supports shared-nothing, distributed execution. CockroachDB [7] 2014 No Yes MVCC Strong+Passive Built on top of distributed key/value store. Uses software hybrid clocks for WAN replication. Google Spanner [24] 2012 No Yes MVCC+2PL Strong+Passive WAN-replicated, shared-nothing DBMS that uses special hardware for timestamp generation. N EW A RCHITECTURES H-Store [8] 2007 Yes Yes TO Strong+Active Single-threaded execution engines per partition. Optimized for stored procedures. HyPer [9] 2010 Yes Yes MVCC Strong+Passive HTAP DBMS that uses query compilation and memory efficient indexes. MemSQL [11] 2012 Yes Yes MVCC Strong+Passive Distributed, shared-nothing DBMS using com- piled queries. Supports MySQL wire protocol. NuoDB [14] 2013 Yes Yes MVCC Strong+Passive Split architecture with multiple in-memory ex- ecutor nodes and a single shared storage node. SAP HANA [55] 2010 Yes Yes MVCC Strong+Passive Hybrid storage (rows + cols). Amalgamation of previous TREX, P*TIME, and MaxDB systems. VoltDB [17] 2008 Yes Yes TO Strong+Active Single-threaded execution engines per partition. Supports streaming operators. AgilData [1] 2007 No Yes MVCC+2PL Strong+Passive Shared-nothing database sharding over single- M IDDLEWARE node MySQL instances. MariaDB MaxScale [10] 2015 No Yes MVCC+2PL Strong+Passive Query router that supports custom SQL rewrit- ing. Relies on MySQL Cluster for coordination. ScaleArc [15] 2009 No Yes Mixed Strong+Passive Rule-based query router for MySQL, SQL Server, and Oracle. Amazon Aurora [3] 2014 No No MVCC Strong+Passive Custom log-structured MySQL engine for RDS. DBAA S ClearDB [5] 2010 No No MVCC+2PL Strong+Active Centralized router that mirrors a single-node MySQL instance in multiple data centers. Table 1: NewSQL Systems – Summary of the system features described in Section 4 for the different DBMSs. Note that the year released is either when the project was announced publicly or when the company was first formed. OLTP to use an HTAP column-store architecture with MVCC It is also interesting to consider the potential impact and fu- to allow it support more complex OLAP queries [48]. Even ture direction of NewSQL DBMSs in the marketplace. Given VoltDB has pivoted their marketing strategy from pure OLTP that the legacy DBMS vendors are entrenched and well funded, performance to providing streaming semantics. Similarly, the NewSQL systems have an uphill battle to gain market share. In S-Store project seeks to add support for stream processing op- the last five years since we first coined the term NewSQL [18], erations on top of the H-Store architecture [46]. It is likely several NewSQL companies have folded (e.g., GenieDB, Xer- that the specialized OLAP systems from the mid-2000s (e.g., ound, Translattice) or pivoted to focus on other problem do- Greenplum) will start to add support for better OLTP. mains (e.g., ScaleBase, ParElastic). Based on our analysis We note, however, that the rise of HTAP DBMSs does mean and interviews with several companies, we have found that the end of giant, monolithic OLAP warehouses. Such systems NewSQL systems have had a relatively slow rate of adoption, will still be necessary in the short-term as they stand to be the especially compared to the developer-driven NoSQL uptake. universal back-end database for all of an organization’s front- This is because NewSQL DBMSs are designed to support the end OLTP silos. But eventually the resurgence of database fed- transactional workloads that are mostly found in enterprise ap- eration will allow organization’s to execute analytical queries plications. Decisions regarding database choices for these en- that span multiple OLTP databases (including even multiple terprise applications are likely to be more conservative than vendors) without needing to move data around. for new Web application workloads. This is also evident from the fact that we find that NewSQL DBMSs are used to com- 6. CONCLUSION plement or replace existing RDBMS deployments, whereas The main takeaway from our analysis is that NewSQL data- NoSQL are being deployed in new application workloads [19]. base systems are not a radical departure from existing system Unlike with the OLAP DBMS start-ups from the 2000s, architectures but rather represent the next chapter in the con- where almost all of the vendors were acquired by major tech- tinuous development of database technologies. Most of the nology companies, up until now there has been only one acqui- techniques that these systems employ have existed in previous sition made of a NewSQL company. In March 2016, Tableau DBMSs from academia and industry. But many of them were announced that it purchased the start-up formed for the HyPer only implemented one-at-a-time in a single system and never project. The two other possible exceptions to this are (1) Ap- all together. What is therefore innovative about these NewSQL ple acquiring FoundationDB in March 2015, but we exclude DBMSs is that they incorporate these ideas into single plat- them because this system was at its core a NoSQL key-value forms. Achieving this is by no means a trivial engineering store with an inefficient SQL layer grafted on top of it, and effort. They are by-products of a new era where distributed (2) ScaleArc acquiring ScaleBase, but this was one competitor computing resources are plentiful and affordable, but at the buying out another. None of these examples are the same kind same time the demands of applications is much greater. of acquisition where a legacy vendor purchasing an upstart SIGMOD Record, June 2016 (Vol. 45, No. 2) 53

10 .system (e.g., Teradata buying Aster Data Systems in 2011). [20] J. Baulier, P. Bohannon, S. Gogate, S. Joshi, C. Gupta, We instead see that the large vendors are choosing to innovate A. Khivesera, H. F. Korth, P. McIlroy, J. Miller, P. P. S. and improve their own systems rather than acquire NewSQL Narayan, M. Nemeth, R. Rastogi, A. Silberschatz, and start-ups. Microsoft added the in-memory Hekaton engine S. Sudarshan. DataBlitz: A high performance to SQL Server in 2014 to improve OLTP workloads. Oracle main-memory storage manager. VLDB, pages 701–, and IBM have been slightly slower to innovate; they recently 1998. added column-oriented storage extensions to their systems to [21] P. A. Bernstein, I. Cseri, N. Dani, N. Ellis, A. Kalhan, compete with the rising popularity of OLAP DBMSs like HP G. Kakivaya, D. B. Lomet, R. Manne, L. Novik, and Vertica and Amazon Redshift. It is possible that they will add T. Talius. Adapting microsoft SQL server for cloud an in-memory option for OLTP workloads in the future. computing. In ICDE, pages 1255–1263, 2011. More long term, we believe that there will be a conver- [22] R. Cattell. Scalable sql and nosql data stores. SIGMOD gence of features in the four classes of systems that we dis- Rec., 39:12–27, 2011. cussed here: (1) the older DBMSs from the 1980-1990s, (2) [23] F. Chang, J. Dean, S. Ghemawat, W. C. Hsieh, D. A. the OLAP data warehouses from the 2000s, (3) the NoSQL Wallach, M. Burrows, T. Chandra, A. Fikes, and R. E. DBMSs from the 2000s, and (4) the NewSQL DBMSs from Gruber. Bigtable: A distributed storage system for the 2010s. We expect that all of the key systems in these structured data. ACM Trans. Comput. Syst., 26:4:1–4:26, groups will support some form of the relational model and June 2008. SQL (if they do not already), as well as both OLTP opera- [24] J. C. Corbett, J. Dean, M. Epstein, A. Fikes, C. Frost, tions and OLAP queries together like HTAP DBMSs. When J. Furman, S. Ghemawat, A. Gubarev, C. Heiser, this occurs, such labels will be meaningless. P. Hochschild, W. Hsieh, S. Kanthak, E. Kogan, H. Li, A. Lloyd, S. Melnik, D. Mwaura, D. Nagle, S. Quinlan, ACKNOWLEDGMENTS R. Rao, L. Rolig, Y. Saito, M. Szymaniak, C. Taylor, The authors would like to thank the following people for their R. Wang, and D. Woodford. Spanner: Google’s feedback: Andy Grove (AgilData), Prakhar Verma (Amazon), Globally-Distributed Database. In OSDI, 2012. Cashton Coleman (ClearDB), Dave Anselmi (Clustrix), Spencer [25] J. DeBrabant, A. Pavlo, S. Tu, M. Stonebraker, and S. B. Kimball (CockroachDB), Peter Mattis (CockroachDB), Ankur Zdonik. Anti-caching: A new approach to database Goyal (MemSQL), Seth Proctor (NuoDB), Anil Goel (SAP management system architecture. PVLDB, HANA), Ryan Betts (VoltDB). This work was supported (in 6(14):1942–1953, 2013. part) by the National Science Foundation (Award CCF-1438955). [26] G. DeCandia, D. Hastorun, M. Jampani, G. Kakulapati, For questions or comments about this paper, please call A. Lakshman, A. Pilchin, S. Sivasubramanian, the CMU Database Hotline at +1-844-88-CMUDB. P. Vosshall, and W. Vogels. Dynamo: amazon’s highly available key-value store. SIGOPS Oper. Syst. Rev., 7. REFERENCES 41:205–220, October 2007. [1] AgilData Scalable Cluster for MySQL. [27] D. J. DeWitt, R. H. Gerber, G. Graefe, M. L. Heytens, http://www.agildata.com/. K. B. Kumar, and M. Muralikrishna. GAMMA - a high [2] Altibase. http://altibase.com. performance dataflow database machine. In VLDB, [3] Amazon Aurora. pages 228–237, 1986. https://aws.amazon.com/rds/aurora. [28] D. J. DeWitt, R. H. Katz, F. Olken, L. D. Shapiro, M. R. [4] Apache Trafodion. http://trafodion.apache.org. Stonebraker, and D. Wood. Implementation techniques for main memory database systems. SIGMOD Rec., [5] ClearDB. https://www.cleardb.com. 14(2):1–8, 1984. [6] Clustrix. http://www.clustrix.com. [29] A. Eldawy, J. Levandoski, and P.-Å. Larson. Trekking [7] CockroachDB. https://www.cockroachlabs.com/. through siberia: Managing cold data in a [8] H-Store. http://hstore.cs.brown.edu. memory-optimized database. Proceedings of the VLDB [9] HyPer. http://hyper-db.de. Endowment, 7(11):931–942, 2014. [10] MariaDB MaxScale. https: [30] A. J. Elmore, V. Arora, R. Taft, A. Pavlo, D. Agrawal, //mariadb.com/products/mariadb-maxscale. and A. E. Abbadi. Squall: Fine-grained live [11] MemSQL. http://www.memsql.com. reconfiguration for partitioned main memory databases. [12] MySQL Fabric. https://www.mysql.com/products/ SIGMOD, pages 299–313, 2015. enterprise/fabric.html. [31] R. Epstein, M. Stonebraker, and E. Wong. Distributed [13] MySQL Proxy. query processing in a relational data base system. http://dev.mysql.com/doc/mysql-proxy/en/. SIGMOD, pages 169–180, 1978. [14] NuoDB. http://www.nuodb.com. [32] B. Fitzpatrick. Distributed Caching with Memcached. [15] ScaleArc. http://scalearc.com. Linux J., 2004(124):5–, Aug. 2004. [16] Splice Machine. http://www.splicemachine.com. [33] H. Garcia-Molina, R. J. Lipton, and J. Valdes. A [17] VoltDB. http://www.voltdb.com. massive memory machine. IEEE Trans. Comput., [18] M. Aslett. How will the database incumbents respond to 33(5):391–399, May 1984. NoSQL and NewSQL? The 451 Group, April 2011. [34] H. Garcia-Molina and K. Salem. Main memory database [19] M. Aslett. MySQL vs. NoSQL and NewSQL: systems: An overview. IEEE Trans. on Knowl. and Data 2011-2015. The 451 Group, May 2012. Eng., 4(6):509–516, Dec. 1992. 54 SIGMOD Record, June 2016 (Vol. 42, No. 2)

11 .[35] Gartner. Hybrid Transaction/Analytical Processing Will [50] A. Rosenberg. Improving query performance in data Foster Opportunities for Dramatic Business Innovation. warehouses. Business Intelligence Journal, 11, Jan. https://www.gartner.com/doc/2657815/, 2014. 2006. [36] A. K. Goel, J. Pound, N. Auch, P. Bumbulis, [51] J. B. Rothnie, Jr., P. A. Bernstein, S. Fox, N. Goodman, S. MacLean, F. Färber, F. Gropengiesser, C. Mathis, M. Hammer, T. A. Landers, C. Reeve, D. W. Shipman, T. Bodner, and W. Lehner. Towards scalable real-time and E. Wong. Introduction to a system for distributed analytics: An architecture for scale-out of olxp databases (SDD-1). ACM Trans. Database Syst., workloads. Proc. VLDB Endow., 8(12):1716–1727, 5(1):1–17, Mar. 1980. Aug. 2015. [52] M. Serafini, E. Mansour, A. Aboulnaga, K. Salem, [37] J. Gray. Concurrency Control and Recovery in Database T. Rafiq, and U. F. Minhas. Accordion: Elastic Systems, chapter Notes on data base operating systems, scalability for database systems supporting distributed pages 393–481. Springer-Verlag, 1978. transactions. Proc. VLDB Endow., 7(12):1035–1046, [38] S. Harizopoulos, D. J. Abadi, S. Madden, and Aug. 2014. M. Stonebraker. OLTP through the looking glass, and [53] R. Shoup and D. Pritchett. The ebay architecture. SD what we found there. In SIGMOD, pages 981–992, Forum, November 2006. 2008. [54] J. Shute, R. Vingralek, B. Samwel, B. Handy, [39] A. Kemper and T. Neumann. HyPer: A hybrid C. Whipkey, E. Rollins, M. Oancea, K. Littlefield, OLTP&OLAP main memory database system based on D. Menestrina, S. Ellner, J. Cieslewicz, I. Rae, virtual memory snapshots. ICDE, pages 195–206, 2011. T. Stancescu, and H. Apte. F1: A distributed sql [40] M. L. Kersten, P. M. Apers, M. A. Houtsma, E. J. Kuyk, database that scales. Proc. VLDB Endow., and R. L. Weg. A distributed, main-memory database 6(11):1068–1079, Aug. 2013. machine. In Database Machines and Knowledge Base [55] V. Sikka, F. Färber, W. Lehner, S. K. Cha, T. Peh, and Machines, volume 43 of The Kluwer International C. Bornhövd. Efficient transaction processing in sap Series in Engineering and Computer Science, pages hana database: The end of a column store myth. 353–369. 1988. SIGMOD, pages 731–742, 2012. [41] S. Kimball. Living without atomic clocks. [56] R. Stoica and A. Ailamaki. Enabling efficient os paging https://www.cockroachlabs.com/blog/ for main-memory OLTP databases. In DaMon, 2013. living-without-atomic-clocks/, February 2016. [57] M. Stonebraker. New sql: An alternative to nosql and [42] L. Lamport. The implementation of reliable distributed old sql for new oltp apps. BLOG@CACM, June 2011. multiprocess systems. Computer Networks, 2:95–114, [58] M. Stonebraker, S. Madden, D. J. Abadi, 1978. S. Harizopoulos, N. Hachem, and P. Helland. The end of [43] T. J. Lehman. Design and performance evaluation of a an architectural era: (it’s time for a complete rewrite). In main memory relational database system. PhD thesis, VLDB, pages 1150–1160, 2007. University of Wisconsin–Madison, 1986. [59] Tandem Database Group. NonStop SQL, a distributed, [44] N. Malviya, A. Weisberg, S. Madden, and high-performance, high-availability implementation of M. Stonebraker. Rethinking main memory oltp recovery. sql. Technical report, Tandem, Apr. 1987. In ICDE, pages 604–615, 2014. [60] T. Team. In-memory data management for consumer [45] N. Marz and J. Warren. Big Data: Principles and best transactions the timesten approach. SIGMOD ’99, pages practices of scalable realtime data systems. Manning 528–529, 1999. Publications, 2013. [61] A. Toshniwal, S. Taneja, A. Shukla, K. Ramasamy, J. M. [46] J. Meehan, N. Tatbul, S. Zdonik, C. Aslantas, Patel, S. Kulkarni, J. Jackson, K. Gade, M. Fu, U. Çetintemel, J. Du, T. Kraska, S. Madden, D. Maier, J. Donham, N. Bhagat, S. Mittal, and D. Ryaboy. A. Pavlo, M. Stonebraker, K. Tufte, and H. Wang. Storm@twitter. In SIGMOD, pages 147–156, 2014. S-store: Streaming meets transaction processing. [62] A. Whitney, D. Shasha, and S. Apter. High Volume PVLDB, 8(13):2134–2145, 2015. Transaction Processing Without Concurrency Control, [47] C. Mohan, D. Haderle, B. Lindsay, H. Pirahesh, and Two Phase Commit, SQL or C++. In HPTS, 1997. P. Schwarz. ARIES: a transaction recovery method [63] R. Williams, D. Daniels, L. Haas, G. Lapis, B. Lindsay, supporting fine-granularity locking and partial rollbacks P. Ng, R. Obermarck, P. Selinger, A. Walker, P. Wilms, using write-ahead logging. ACM Trans. Database Syst., and R. Yost. Distributed systems, vol. ii: distributed data 17(1):94–162, 1992. base systems. chapter R*: an overview of the [48] T. Neumann, T. Mühlbauer, and A. Kemper. Fast architecture, pages 435–461. 1986. serializable multi-version concurrency control for [64] M. Zaharia, T. Das, H. Li, T. Hunter, S. Shenker, and main-memory database systems. SIGMOD, pages I. Stoica. Discretized streams: Fault-tolerant streaming 677–689, 2015. computation at scale. In SOSP, 2013. [49] D. P. Reed. Naming and synchronization in a [65] S. B. Zdonik and D. Maier, editors. Readings in decentralized computer system. PhD thesis, MIT, 1979. Object-Oriented Database Systems. Morgan Kaufmann, 1990. SIGMOD Record, June 2016 (Vol. 45, No. 2) 55