- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Compacting Transactional Data in Hybrid OLTP&OLAP Databases

展开查看详情

1 . Compacting Transactional Data in Hybrid OLTP&OLAP Databases Florian Funke Alfons Kemper Thomas Neumann TU Munchen ¨ TU Munchen ¨ TU Munchen ¨ Munich, Germany Munich, Germany Munich, Germany florian.funke@in.tum.de alfons.kemper@in.tum.de thomas.neumann@in.tum.de ABSTRACT head from buffer management or locking. This model allows Growing main memory sizes have facilitated database man- for record-breaking transaction throughput, but necessitates agement systems that keep the entire database in main mem- that all transactions execute quickly to prevent congestion ory. The drastic performance improvements that came along in the serial execution pipeline. with these in-memory systems have made it possible to re- As a result of this dilemma, OLTP engines often refrain unite the two areas of online transaction processing (OLTP) from compressing their data and thus waste memory space. and online analytical processing (OLAP): An emerging class The lack of a compact data representation becomes even of hybrid OLTP and OLAP database systems allows to pro- more impeding, when the database system is capable of run- cess analytical queries directly on the transactional data. ning OLAP-style queries on the transactional data, like the By offering arbitrarily current snapshots of the transactional HyPer system [19] or SAP HANA [11]. In this scenario, com- data for OLAP, these systems enable real-time business in- pression does not only reduce memory consumption, but also telligence. promises faster query execution [32, 1, 4, 14]. To facilitate Despite memory sizes of several Terabytes in a single com- efficient query processing directly on the transactional data, modity server, RAM is still a precious resource: Since free these hybrid OLTP & OLAP systems create snapshots of the memory can be used for intermediate results in query pro- transactional data, that should not be ignored in the con- cessing, the amount of memory determines query perfor- text of space efficiency. Therefore we introduce the notion of mance to a large extent. Consequently, we propose the com- compaction, a concept that embraces two mechanisms that paction of memory-resident databases. Compaction con- serve a similar purpose: sists of two tasks: First, separating the mutable working • Compression of the data set to save storage space and set from the immutable “frozen” data. Second, compressing speed-up query execution. the immutable data and optimizing it for efficient, memory- • Reorganization of the data set for efficient and memory- consumption-friendly snapshotting. Our approach reorga- consumption friendly snapshotting. nizes and compresses transactional data online and yet hard- ly affects the mission-critical OLTP throughput. This is Values Hot achieved by unburdening the OLTP threads from all addi- - Working Set (hot data) tional processing and performing these tasks asynchronously. - Uncompressed Cooling - Small memory pages - Hot/re-heated 1. INTRODUCTION & cold items mixed - Uncompressed Small memory page Modern in-memory database systems with high-perfor- - Small memory pages Cold mance transaction processing capabilities face a dilemma: - Cold data items only On the one hand, memory is a scarce resource and these sys- - Not compressed yet Frozen tems would therefore benefit from compressing their data. On the other hand, their fast and lean transaction models - Cold & compressed penalize additional processing severely which often prevents data them from compressing data in favor of transaction through- - Huge memory pages put. A good example is the lock-free transaction processing - Rarely accessed by OLTP model pioneered by H-Store/VoltDB [18, 30] that executes - Immutable: Deleted/ transactions serially on private partitions without any over- modified items are marked "invalid" and Huge memory page copied to Hot Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies Figure 1: Hot/cold clustering for compaction. bear this notice and the full citation on the first page. To copy otherwise, to = hot (volatile) data item, = cold data item, republish, to post on servers or to redistribute to lists, requires prior specific = cold & compressed data item. permission and/or a fee. Articles from this volume were invited to present their results at The 38th International Conference on Very Large Data Bases, We demonstrate that even though it is more difficult to August 27th - 31st 2012, Istanbul, Turkey. Proceedings of the VLDB Endowment, Vol. 5, No. 11 compress transactional data due to its volatile nature, it Copyright 2012 VLDB Endowment 2150-8097/12/07... $ 10.00. is feasible to do it efficiently. Our approach is based on 1424

2 .the observation that while OLTP workloads frequently mod- systems, for query processing can be substituted efficiently ify the dataset, they often follow the working set assump- in frequently changing datasets. tion [10]: Only a small subset of the data is accessed and Oracle 11g [27] has an OLTP Table Compression feature. an even smaller subset of this working set is being modified Newly inserted data is left uncompressed at first. When (cf. Figure 1). In business applications, this working set is insertions reach a threshold, the uncompressed tuples are mostly comprised of tuples that were added to the database being compressed. Algorithm details or performance num- in the recent past, as it can be observed in the TPC-C work- bers are not published, but the focus appears to be on disc- load [29]. based systems with traditional transaction processing mod- Our system uses a lightweight, hardware-assisted moni- els, not high-performance in-memory systems. Also, the fea- toring component to observe accesses to the dataset and ture seems to be applicable only in pure OLTP workloads identify opportunities to reorganize data such that it is clus- without analytical queries. tered into hot and cold parts. After clustering the data, the Approaches that maintain two separate data stores, an database system compresses cold chunks to reduce memory uncompressed “delta” store for freshly inserted data and a consumption and streamline query processing. Cold chunks compressed “main”-store for older data, require costly merge are stored on huge virtual memory pages and protected from phases that periodically insert new data into the main store any modifications to allow for compact and fast OLAP snap- in a bulk operation [22]. This involves the exclusive locking shots. These physical reorganizations are performed at run- of tables and also slows down transaction processing. Kr¨ uger time with virtually no overhead for transaction processing as et al. [21] state that their approach deliberately compromises all time consuming tasks execute asynchronously to trans- OLTP performance to facilitate compression. action processing. The remainder of this paper is organized as follows: In 3. DESIGN Section 2, we give an overview of related work on compres- sion for transaction processing systems and analytical sys- 3.1 The HyPer System tems. Section 3 presents our transaction model and physical We integrated our compaction approach into the HyPer [19] data representation, how we cluster tuples into hot and cold system, an in-memory, high-performance hybrid OLTP and parts and which compression schemes we propose to use. OLAP DBMS. We briefly present the system here, but the Furthermore, we describe the impact of our approach on approach is generally applicable to OLTP&OLAP in-memory query processing and present a space-efficient secondary in- database systems. dex implementation. In Section 4, we describe techniques HyPer belongs to the emerging class of database systems to observe data access patterns of transactions, including that have – in addition to an OLTP engine – capabilities hardware-assisted, low-overhead mechanisms. Section 5 con- to run OLAP queries directly on the transactional data and tains the experimental evaluation of our approach substan- thus enable real-time business intelligence. HyPer allows tiating the claim that compaction hardly affects transaction to run queries in parallel to transactions with extremely low processing while it can speed up query execution times. Sec- overhead. This is achieved by executing the queries in a sep- tion 6 concludes our findings. arate process that was forked from the OLTP process (cf. Figure 2) and constitutes a transaction-consistent snapshot 2. RELATED WORK of the OLTP data. The snapshot is kept consistent by the Compression techniques for database systems is a topic operating system with the assistance of the memory man- extensively studied, primarily in the context of analytical agement unit (MMU). Before the OLTP process modifies a systems [14, 32, 1, 16, 33, 28]. However, the proposed tech- page, the original page is replicated for the OLAP process niques are not directly applicable to OLTP systems which to maintain the memory state from the time of snapshot are optimized for high-frequency write accesses. We nei- creation. This mechanism is a cornerstone of HyPer’s per- ther propose new compression algorithms, nor focus on the formance, allowing it to compete with the fastest dedicated integration of compression with query processing in this OLTP systems and the fasted dedicated analytical system work. Rather, we describe how existing compression tech- – even when both workloads are executed in parallel on the niques can be adapted for and integrated in transactional same data in HyPer. M¨ uhe et al. [25] found this hardware- systems. The challenge in doing so is that compression based snapshotting approach superior to software-based so- must be performed in a non-disturbing manner with regard lutions. to the mission-critical transaction processing. In addition HyPer’s transaction model is similar to the model pio- to OLTP, next-generation database systems like HyPer [19] neered by H-Store [18] and VoltDB [30]: The database is and SAP HANA [11] offer OLAP capabilities operating di- split into p partitions, such that most transactions only need rectly on the transactional data. Thus, OLAP-style data to access one of these partitions. Thus one OLTP-thread accesses patterns must be taken into account when design- can be assigned to each partition and can operate within ing a compression features. the partition without having to acquire any locks or latches. For efficient update handling in compressed OLAP data- Only for the rare case of partition-crossing transactions, the bases, H´eman et al. proposed Positional Delta Trees [15]. p threads must be synchronized. Moreover, transactions in They allow for updates in ordered, compressed relations and HyPer are written as stored procedures and are compiled to yet maintain good scan performance. Binnig et al. propose native code by leveraging the LLVM compiler back-end, as ordered-dictionary compression that can be bulk-updated described in [26]. This model allows for very fast transac- efficiently [3]. Both techniques are not designed for OLTP- tion processing in the order of 100 000s of transactions per style updates, but rather for updates in data warehouses. second [19]. We will show how the benefits of order-preserving dictionary HyPer’s query engine is built upon a novel query compila- compression, which is infeasible in hybrid OLTP&OLAP tion technique described in [26]. It avoids the performance 1425

3 . forked OLAP-Snapshot Partition Shadow Partition1 Partition2 OLTP Data copy id country addr 1 C1 A1 Vector Chunk OLAP Queries 1 C1 A1 4 C4 A3 2 C2 A2 2 C2 A2 5 C3 A4 3 C2 A2 3 C2 A2 6 C3 A4 4 C4 A3 Transactions C 5 C3 A4 8 C1 A5 7 C3 A4 D 6 C3 A4 9 C1 A7 10 C4 A6 7 C3 A4 15 C2 A5 11 C3 A4 e Replicated page A C' rit 8 C1 A5 16 C1 A5 12 C4 A3 B D n -w (due to update of 9 C1 A7 o 17 C1 A5 13 C3 A4 E G py- C to C' by OLTP) 10 C4 A6 F H co 11 C3 A4 18 C2 A2 14 C3 A4 12 C4 A3 13 C3 A4 14 C3 A4 15 C2 A5 Figure 2: HyPer’s VM snapshot mechanism. Only 16 C1 A5 those memory pages get replicated that are modified 17 C1 A5 18 C2 A2 during the lifetime of a snapshot. (a) (b) overhead of classic, iterator-style processing techniques that suffer from a lack of code locality and frequent instruc- Figure 3: (a) Example relation. (b) Physical rep- tion mispredictions by translating queries into LLVM code. resentation of example relation (without compres- This portable assembler code can be executed directly using sion). LLVM’s optimizing just-in-time compiler. It produces com- pact and efficient machine code that makes column scans completely data driven. Together with its sophisticated Hot Entries in a hot vector are frequently read/updated or query optimizer, this enables HyPer to achieve sub-second tuples are deleted and/or inserted into this chunk. query response times on typical business intelligence queries Cooling Most entries remain untouched, very few are be- (top customers, top products, etc.) that can compete with ing accessed or tuples are being deleted in this chunk. the fastest dedicated OLAP systems. If the Access Observer determines that the same pages were accessed between subsequent scans and they make 3.2 Data Representation up only a small fraction of the chunk’s total number We added a storage back-end to HyPer that combines hor- of pages, it marks the chunk cooling. Accessing an izontal partitioning and columnar storage: A relation is rep- attribute in a cooling chunk triggers the relocation of resented as a hierarchy of partitions, chunks and vectors (see the tuple to a hot chunk. Figure 3). Partitions split relations into p disjoint subsets Cold Entries in a cold vector are not accessed, i.e. the Ac- and are the basis of the transaction model described above. cess Observer has repeatedly found no reads or writes Within one partition, tuples are stored using a decomposed in this vector. storage model [9]. Unlike designs where each attribute is stored in one continuous block of memory, we store a col- Frozen Entries are neither read nor written and have been umn in multiple blocks (“vectors”), as proposed in Monet- compressed physically and optimized for OLAP as de- DB/X100 [4]. In contrast to X100, our main rationale for scribed below. doing so is that each vector that stores a certain attribute can represent it differently, e.g. compressed lightly, heavily “Access” refers only to reads and writes performed by OLTP or uncompressed. In addition, they can reside on different threads – OLAP queries potentially executing in parallel types of memory pages, i.e. regular or huge pages as dis- do not affect the temperature of a vector, as described in cussed in Section 3.3. Each chunk constitutes a horizontal Section 4.1. partition of the relation, i.e. it holds one vector for each Cold chunks of the data can be “frozen”, i.e. converted of the relation’s attributes and thus stores a subset of the into a compact, OLAP-friendly representation as they are partition’s tuples, as depicted in Figure 3. likely to be almost exclusively accessed by analytical queries in the future. They are compressed, stored on huge virtual 3.3 Hot/Cold Clustering memory pages and made immutable. The use of huge pages (2MB per page on x86) for frozen data has multiple advan- Hot/cold clustering aims at partitioning the data into fre- tages over the use of regular pages (4kB on x86): quently accessed data items and those that are accessed rarely (or not at all). This allows for physical optimizations 1. Scanning huge pages is faster than scanning regular depending on the access characteristics of data. pages. Manegold et al. [24] analyze the impact of We measure the “temperature” of data on virtual mem- translation lookaside buffer (TLB) misses on query ory page granularity. Since we store attributes column-wise, performance and conclude with regard to the page size this allows us to maintain a separate temperature value for that the use of huge pages reduces the probability of each attribute of a chunk, i.e. for each vector. Both read and TLB misses. write accesses to the vectors are monitored by the Access Ob- server component using a lightweight, hardware-assisted ap- 2. The TLB of the processor’s memory management unit proach described in Section 4.1. It distinguishes four states has separate sections for huge and normal pages on a vector can have: most platforms as depicted in Figure 4. Since the bulk 1426

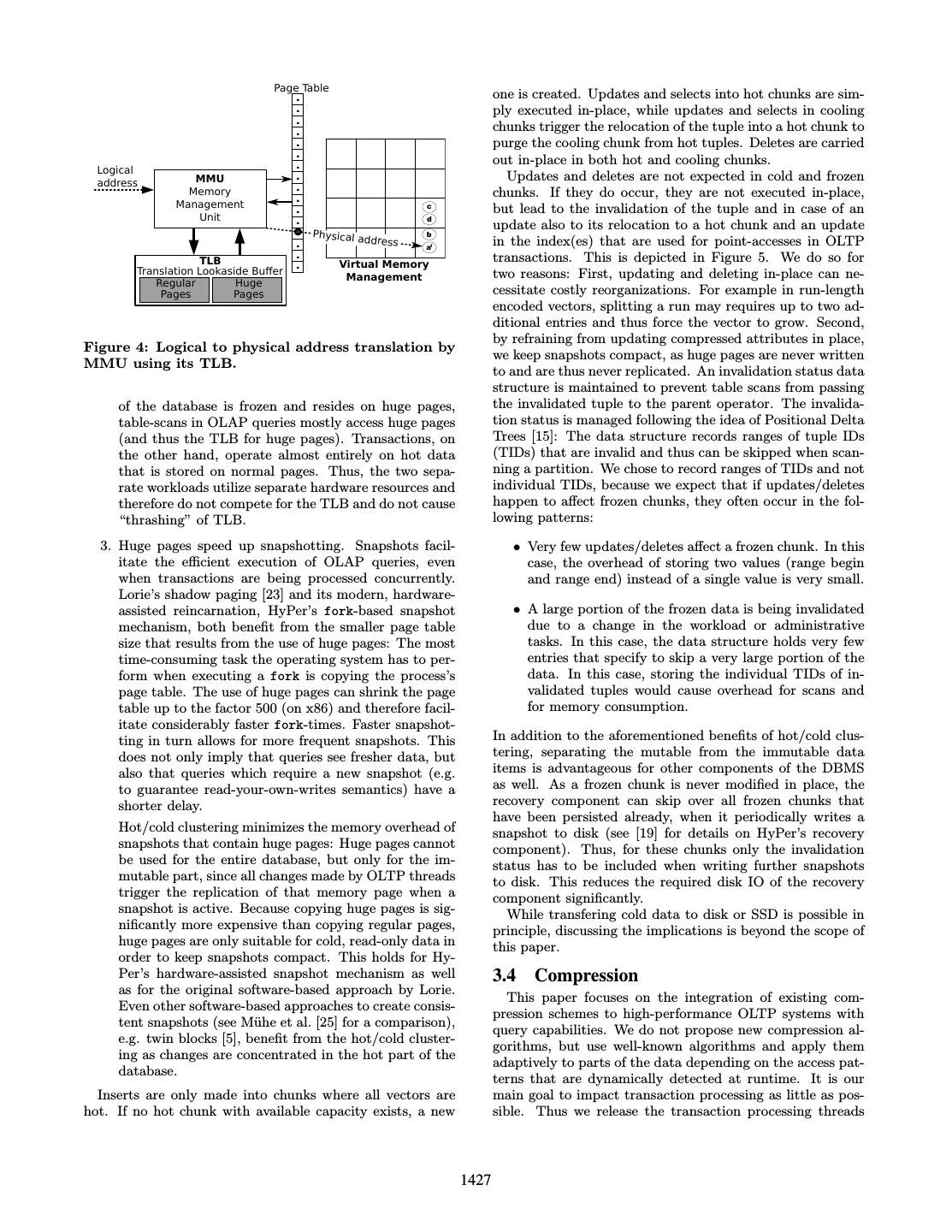

4 . Page Table . one is created. Updates and selects into hot chunks are sim- . ply executed in-place, while updates and selects in cooling . . chunks trigger the relocation of the tuple into a hot chunk to . purge the cooling chunk from hot tuples. Deletes are carried . . out in-place in both hot and cooling chunks. Logical MMU . Updates and deletes are not expected in cold and frozen address . Memory chunks. If they do occur, they are not executed in-place, . Management c but lead to the invalidation of the tuple and in case of an . Unit . d update also to its relocation to a hot chunk and an update Physical ad b . dress in the index(es) that are used for point-accesses in OLTP a’ . transactions. This is depicted in Figure 5. We do so for TLB . Virtual Memory Translation Lookaside Buffer two reasons: First, updating and deleting in-place can ne- Management Regular Huge Pages Pages cessitate costly reorganizations. For example in run-length encoded vectors, splitting a run may requires up to two ad- ditional entries and thus force the vector to grow. Second, by refraining from updating compressed attributes in place, Figure 4: Logical to physical address translation by we keep snapshots compact, as huge pages are never written MMU using its TLB. to and are thus never replicated. An invalidation status data structure is maintained to prevent table scans from passing of the database is frozen and resides on huge pages, the invalidated tuple to the parent operator. The invalida- table-scans in OLAP queries mostly access huge pages tion status is managed following the idea of Positional Delta (and thus the TLB for huge pages). Transactions, on Trees [15]: The data structure records ranges of tuple IDs the other hand, operate almost entirely on hot data (TIDs) that are invalid and thus can be skipped when scan- that is stored on normal pages. Thus, the two sepa- ning a partition. We chose to record ranges of TIDs and not rate workloads utilize separate hardware resources and individual TIDs, because we expect that if updates/deletes therefore do not compete for the TLB and do not cause happen to affect frozen chunks, they often occur in the fol- “thrashing” of TLB. lowing patterns: 3. Huge pages speed up snapshotting. Snapshots facil- • Very few updates/deletes affect a frozen chunk. In this itate the efficient execution of OLAP queries, even case, the overhead of storing two values (range begin when transactions are being processed concurrently. and range end) instead of a single value is very small. Lorie’s shadow paging [23] and its modern, hardware- assisted reincarnation, HyPer’s fork-based snapshot • A large portion of the frozen data is being invalidated mechanism, both benefit from the smaller page table due to a change in the workload or administrative size that results from the use of huge pages: The most tasks. In this case, the data structure holds very few time-consuming task the operating system has to per- entries that specify to skip a very large portion of the form when executing a fork is copying the process’s data. In this case, storing the individual TIDs of in- page table. The use of huge pages can shrink the page validated tuples would cause overhead for scans and table up to the factor 500 (on x86) and therefore facil- for memory consumption. itate considerably faster fork-times. Faster snapshot- ting in turn allows for more frequent snapshots. This In addition to the aforementioned benefits of hot/cold clus- does not only imply that queries see fresher data, but tering, separating the mutable from the immutable data also that queries which require a new snapshot (e.g. items is advantageous for other components of the DBMS to guarantee read-your-own-writes semantics) have a as well. As a frozen chunk is never modified in place, the shorter delay. recovery component can skip over all frozen chunks that have been persisted already, when it periodically writes a Hot/cold clustering minimizes the memory overhead of snapshot to disk (see [19] for details on HyPer’s recovery snapshots that contain huge pages: Huge pages cannot component). Thus, for these chunks only the invalidation be used for the entire database, but only for the im- status has to be included when writing further snapshots mutable part, since all changes made by OLTP threads to disk. This reduces the required disk IO of the recovery trigger the replication of that memory page when a component significantly. snapshot is active. Because copying huge pages is sig- While transfering cold data to disk or SSD is possible in nificantly more expensive than copying regular pages, principle, discussing the implications is beyond the scope of huge pages are only suitable for cold, read-only data in this paper. order to keep snapshots compact. This holds for Hy- Per’s hardware-assisted snapshot mechanism as well 3.4 Compression as for the original software-based approach by Lorie. This paper focuses on the integration of existing com- Even other software-based approaches to create consis- pression schemes to high-performance OLTP systems with tent snapshots (see M¨ uhe et al. [25] for a comparison), query capabilities. We do not propose new compression al- e.g. twin blocks [5], benefit from the hot/cold cluster- gorithms, but use well-known algorithms and apply them ing as changes are concentrated in the hot part of the adaptively to parts of the data depending on the access pat- database. terns that are dynamically detected at runtime. It is our Inserts are only made into chunks where all vectors are main goal to impact transaction processing as little as pos- hot. If no hot chunk with available capacity exists, a new sible. Thus we release the transaction processing threads 1427

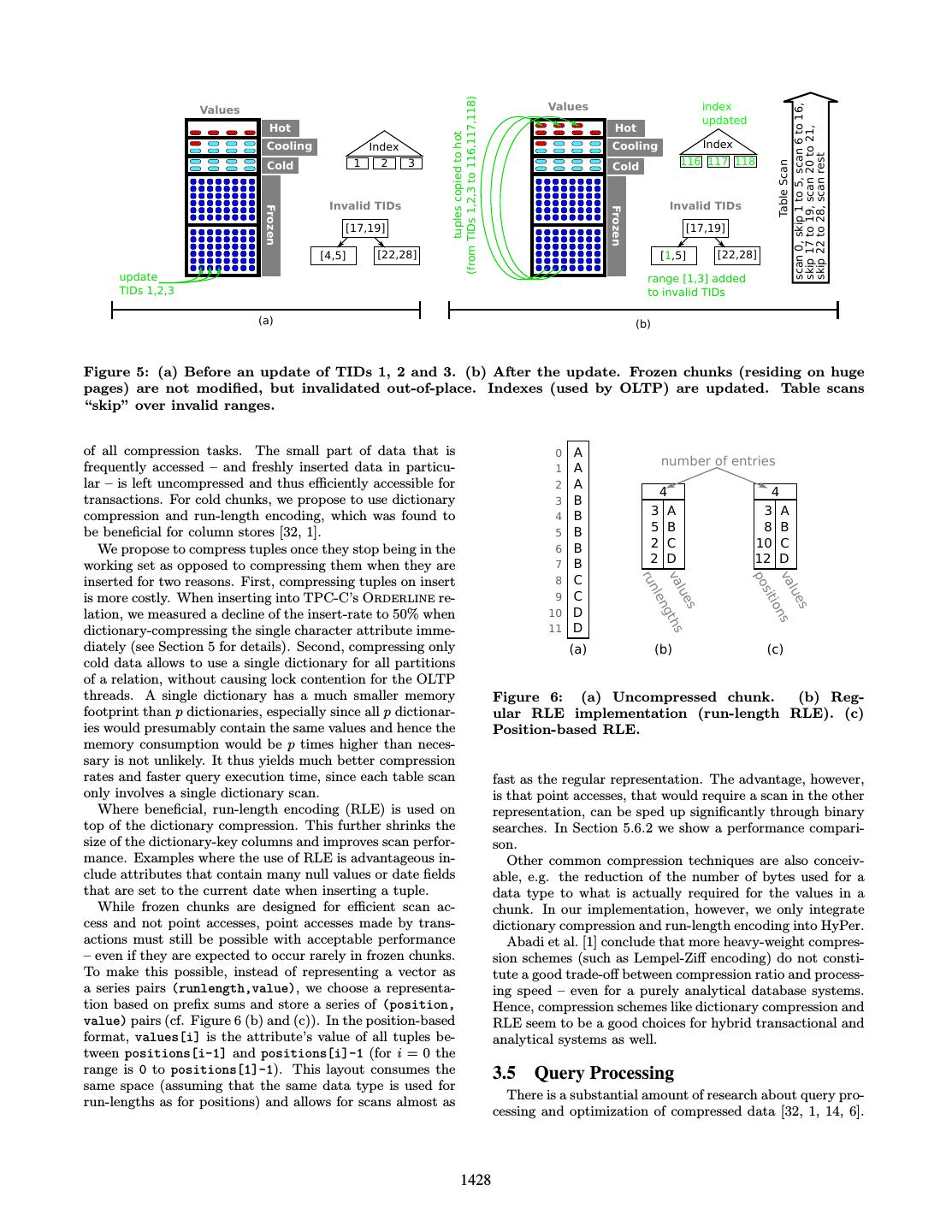

5 . (from TIDs 1,2,3 to 116,117,118) Values Values index scan 0, skip 1 to 5, scan 6 to 16, updated Hot Hot skip 17 to 19, scan 20 to 21, tuples copied to hot Cooling Index Cooling Index 116 117 118 skip 22 to 28, scan rest Cold 1 2 3 Cold Table Scan Invalid TIDs Invalid TIDs Frozen Frozen [17,19] [17,19] [4,5] [22,28] [1,5] [22,28] update range [1,3] added TIDs 1,2,3 to invalid TIDs (a) (b) Figure 5: (a) Before an update of TIDs 1, 2 and 3. (b) After the update. Frozen chunks (residing on huge pages) are not modified, but invalidated out-of-place. Indexes (used by OLTP) are updated. Table scans “skip” over invalid ranges. of all compression tasks. The small part of data that is 0 A number of entries frequently accessed – and freshly inserted data in particu- 1 A lar – is left uncompressed and thus efficiently accessible for 2 A 4 4 transactions. For cold chunks, we propose to use dictionary 3 B compression and run-length encoding, which was found to 4 B 3 A 3 A be beneficial for column stores [32, 1]. 5 B 5 B 8 B We propose to compress tuples once they stop being in the 6 B 2 C 10 C working set as opposed to compressing them when they are 7 B 2 D 12 D 8 C ru va po va inserted for two reasons. First, compressing tuples on insert nle C lue lue sit is more costly. When inserting into TPC-C’s Orderline re- 9 ion D ng 10 s s lation, we measured a decline of the insert-rate to 50% when th D s dictionary-compressing the single character attribute imme- 11 s diately (see Section 5 for details). Second, compressing only (a) (b) (c) cold data allows to use a single dictionary for all partitions of a relation, without causing lock contention for the OLTP threads. A single dictionary has a much smaller memory Figure 6: (a) Uncompressed chunk. (b) Reg- footprint than p dictionaries, especially since all p dictionar- ular RLE implementation (run-length RLE). (c) ies would presumably contain the same values and hence the Position-based RLE. memory consumption would be p times higher than neces- sary is not unlikely. It thus yields much better compression rates and faster query execution time, since each table scan fast as the regular representation. The advantage, however, only involves a single dictionary scan. is that point accesses, that would require a scan in the other Where beneficial, run-length encoding (RLE) is used on representation, can be sped up significantly through binary top of the dictionary compression. This further shrinks the searches. In Section 5.6.2 we show a performance compari- size of the dictionary-key columns and improves scan perfor- son. mance. Examples where the use of RLE is advantageous in- Other common compression techniques are also conceiv- clude attributes that contain many null values or date fields able, e.g. the reduction of the number of bytes used for a that are set to the current date when inserting a tuple. data type to what is actually required for the values in a While frozen chunks are designed for efficient scan ac- chunk. In our implementation, however, we only integrate cess and not point accesses, point accesses made by trans- dictionary compression and run-length encoding into HyPer. actions must still be possible with acceptable performance Abadi et al. [1] conclude that more heavy-weight compres- – even if they are expected to occur rarely in frozen chunks. sion schemes (such as Lempel-Ziff encoding) do not consti- To make this possible, instead of representing a vector as tute a good trade-off between compression ratio and process- a series pairs (runlength,value), we choose a representa- ing speed – even for a purely analytical database systems. tion based on prefix sums and store a series of (position, Hence, compression schemes like dictionary compression and value) pairs (cf. Figure 6 (b) and (c)). In the position-based RLE seem to be a good choices for hybrid transactional and format, values[i] is the attribute’s value of all tuples be- analytical systems as well. tween positions[i-1] and positions[i]-1 (for i = 0 the range is 0 to positions[1]-1). This layout consumes the 3.5 Query Processing same space (assuming that the same data type is used for There is a substantial amount of research about query pro- run-lengths as for positions) and allows for scans almost as cessing and optimization of compressed data [32, 1, 14, 6]. 1428

6 . Secondary Index (RB-Tree) sorting or partitioning/cracking [17] the compressed vector Relation Dictionary is not possible in a hybrid OLTP and OLAP database sys- TID Key key=0 Key Value tem as it would require massive updates in the indexes that val='BBB' 0 2 1 0 BBB are required for the mission-critical transaction processing. key=2 1 0 key=1 3 0 val='CCC' 1 AAA Navigating the tree index performs many random accesses. 2 3 val='AAA' 2 CCC While this access pattern causes a performance problem for 3 1 key=0 4 key=2 5 3 DDD disk-based systems, in-memory systems can efficiently use val='CCC' 4 0 val='BBB' this compact secondary index as an accelerator where pre- key=3 2 5 2 val='DDD' fix queries would benefit from order-preserving dictionary compression. We demonstrate the efficiency of this index in Section 5.6.3. For equality filter conditions (e.g. attribute = ’value’), the scan operator first performs a lookup of the value in Figure 7: Secondary tree index (gray information is the dictionary (regardless of whether it is ordered or not) not materialized). E.g. entry 1 compares less then to determine the associated key and a subsequent scan of entry 0, because the key at TID 1 (0key ) refers to the compressed column passing only those tuples to the up- a smaller value (BBB) as the key at TID 0 (2key ) stream operator that match the key. If a secondary index on which refers to CCC. the compressed attribute exists, the scan operator directly uses this index to determine the qualifying tuples and thus obviates a full table scan. Filter conditions other than prefixes or equality compar- Many of the insights from this work are also applicable to our isons cannot be efficiently processed with any of the tech- approach. Order-preserving dictionary compression, how- niques presented here. HyPer evaluates them by first scan- ever, can often be found in analytical database systems [2, ning the dictionary and selecting the qualifying keys into a 3] but is not feasible in write-intensive scenarios. In ordered hash table. This hash table is then used for a hash join with dictionaries, keyi < keyj implies that valuei < valuej . the relation, i.e. it is probed with the keys of the compressed This property can be utilized to do query processing di- chunks. Since the hash table is likely to be small for many rectly on the compressed representation of the data. While queries, it often fits into cache which makes this hash join this property can speed up execution, it makes ordered dic- very efficient. tionaries very difficult to maintain even in data warehouses, All algorithms presented in this section are performed by where data is inserted in bulk operations. Hybrid OLTP and the scan operator. The scan operator thus decompresses en- OLAP systems have to handle high-frequency updates and coded chunks and unites tuples from compressed and from inserts. Therefore, maintaining an ordered dictionary in this uncompressed chunks. Thereby, compression is transparent scenario is virtually impossible. However, we will present a for all upstream operators and does not require their adap- novel variant of a secondary index. Using this index on the tion. compressed attribute is an alternative that is feasible, out- performs an ordered dictionary in many cases and consumes significantly less memory than traditional indexes on string 4. IMPLEMENTATION DETAILS attributes. Ordered dictionaries demonstrate their strength when ex- 4.1 Access Observer ecuting queries with range and prefix filter conditions (e.g. The Access Observer component monitors reads and writes attribute LIKE ’prefix%’). The currently proposed algo- performed by OLTP threads in order to determine which rithm [3] first determines the qualifying keys through binary parts of the database are cold and which ones are hot. We search in the dictionary. Then, the relation’s attribute col- present different approaches for its implementation. umn is scanned and each key is tested for inclusion in the The first approach is purely software-based: Each OLTP range. This algorithm is very efficient for unselective range thread records reads and writes itself. The advantage is that queries. For more selective queries, however, a secondary the granularity used to record accesses can be freely chosen. tree index is dominant (as we show in Section 5.6.3) because The obvious drawback is the significant overhead this im- it does not require a full table scan, but directly accesses poses on the OLTP thread: Even when leaving out reads the selected tuples. Figure 8 contrasts the two different pro- that are required to locate the requested values, a single in- cessing techniques. The secondary index (tree index) can be vocation of TPC-C’s NewOrder transaction performs over constructed with very moderate memory consumption over- 50 read and over 100 write accesses on average. Since the head: Instead of storing the values (e.g. strings) in the index purpose of the Access Observer and hot/cold clustering is to and thus reverting the benefit of compression, we propose to unburden the OLTP threads as much as possible, we dismiss only store the 8 byte TID of each tuple. The sort order of this approach. the TIDs is then determined by the order of the values they The second approach uses a technique often employed point to, as depicted in Figure 7. I.e. the index entry (TID) for live migration in virtual machine monitors like Linux’ tid1 compares less then entry tid2 , if the two keys k1 and k2 Kernel-based Virtual Machine (KVM) [20] and the Xen Vir- these TIDs point to refer to values in the dictionary v1 and tual Machine Monitor [7]: The mprotect system call is used v2 such that v1 < v2 . If two values are equal, the TID serves to prevent accesses to a range of virtual memory pages. as a tie-breaker in order to maintain a strict order. A strict When a read or write to this region occurs, a SIGSEGV sig- order allows for efficient deletes and updates in the index as nal is sent to the calling process, which has installed a signal index entries of a given TID are quickly found. It is impor- handler that records the access and removes the protection tant to point out that, in contrast to pure OLAP systems, from the page. This technique uses hardware-support, but 1429

7 . Secondary Index Compressed Compressed Vector Vector Range of qualifying TIDs 4 Scan [2,4] 1 [ ] 2 4 Unordered 1 Ordered 2 Dictionary 6 Dictionary 5 qualifying keys 1 BBB 3 1 AAA 6 Range of [ 2 DDD 5 2 BBB 3 3 FFF 4 3 CCC 1 4 AAA 4 DDD 2 4 ] 5 CCC 5 EEE 6 EEE 6 FFF (a) (b) Figure 8: Execution of the range query ... BETWEEN ’BBB’ AND ’DDD’ using a (a) secondary index and (b) order-preserving dictionary. OLTP Process still has a downside: For each page, the first write in an OLTP Thread Address Space Access Observer observation cycle causes a trap into the operating system. While this is no problem for transactions that mostly insert D Dirty pages Y Y Young pages data, others that perform updates to distributed data items write 'SMITH' 00 0 0 0 read and reset are impeded. 0 00 00 00 AA AB AC AD to 0xAB010 dirty & young The third approach also uses hardware-assistance, but has 0x 0x 0x 0x bits in Page Table, no overhead when monitoring the database. Here, the Ac- update cess Observer component runs asynchronously to the OLTP bookkeeping (and OLAP) threads and uses information collected by the hardware. Userspace Kernelspace Virtual memory management is a task modern operat- Logical Physical Dirty/Young ... ... Access ing systems master efficiently thanks to the support of the Obeserver 0xAA Page 2 Y CPU’s memory management unit (MMU). In particular, 0xAB Page 4 Kernel Page Table: Module page frame reclamation relies on hardware assistance: The 0xAC Page 1 MMU sets flags for each physical memory page indicating 0xAD Page 7 D+Y ... ... if addresses within this page have been accessed (young) or modified (dirty). The Linux Virtual Memory Manager uses Kernelspace this information during page frame reclamation to assess if a Translate D+Y Hardware 0xAB010 Page 4, Page 0 Page 1 Page 2 page is in frequent use and whether it needs to be written to and set offset 0x010 the swap area before a different virtual page can be mapped flags on it [13]. In HyPer, we prevent memory pages from getting 0xAB010 Page 3 Page 4 Page 5 Page 4, paged out to the swap area by using the mlock system call. CPU MMU i.e. page 0xAB offset 0x010 Thus we can read and reset the young and dirty flags in offset 0x010 Page 6 Page 7 Page 8 each observation cycle to monitor accesses to the database with virtually no overhead. Figure 9 shows the interaction of different components of Physical Memory HyPer. We have implemented this type of Access Observer as a kernel module for an (unmodified) Linux kernel for the Figure 9: Access Observer architecture of the x86 architecture. On other architectures, Linux even pro- third approach (simplified illustration without TLB, vides a dirty bit that can be used exclusively by user space caches, etc.). programs [12]. While the component is certainly platform- dependent, Linux is not the only system where this ap- proach is conceivable. To broaden the platform support for database systems using the Access Observer, the mprotect- The hardware-supported approaches operate on page gran- based approach can be used as a fall-back option. ularity. Thus by themselves they cannot distinguish between Since the kernel module can distinguish between read-only a single change and many changes in one page. For those and write accesses, we can refine our hot/cold model from (small) parts of the data, where this distinction is important, Section 3.3. Compression techniques, like dictionary com- the hardware-supported techniques could be combined with pression, that incur little overhead on read-only point ac- the software-based approach. In our test-cases, however, we cesses can be applied to cold chunks that are still read, but did observe a situation where this was necessary. not written. Compression schemes where point-accesses are For hot/cold clustering, solely accesses from OLTP threads more expensive can be applied once the chunk is neither should be take into account. While OLAP queries never per- read nor written to. form writes, they frequently scan over entire relations. In both hardware-based approaches, this causes the page to be considered young. Thus, read flags of a given relation are 1430

8 . key ref (implicit) value count 350000 0 string0 4 dictionary lock 300000 Throughput [tps] 1 string1 3 2 string2 9 250000 3 string3 2 200000 4 string4 0 150000 5 string5 5 6 string6 0 100000 Hash-Index compression 50000 ... value-to-key interval n stringn 7 0 4 free list 0 2e+06 4e+06 6e+06 8e+06 Transactions Executed 6 Figure 11: Transactional performance with acti- Figure 10: Dictionary data structure for strings. vated compression ( ) and without compression ( ). only considered, if no OLAP query has accessed this relation a combination of the TPC-C with TPC-H-like queries op- in the last observation cycle. erating on the same data concurrently. The schema is an entirely unmodified TPC-C schema extended by three fixed- 4.2 Dictionary Compression size relations from TPC-H: Supplier, Nation and Region. Dictionaries consist of two columns: A reference counter The transactional part of the workload consists of the five indicating the number of tuples that reference the value and original TPC-C transactions (New-Order, Payment, Order- the actual value. The key is not stored explicitly, but is the Status, Delivery, Stock-Level), with the following changes: offset into the dictionary, as shown in Figure 10. A reference count of zero indicates that this slot in the dictionary can • Since the TPC-C generates strings with high entropy be reused. In order to avoid duplicate entries, a value-to- that exhibits little potential for compression and is key hash-index is used to lookup existing values when a new unrealistic in business applications, we replaced the tuple is inserted or a compressed value is being updated. strings with US Census 2010 data. E.g. for the at- We maintain one dictionary per relation. Following the tribute ol dist info we do not use a random string, transaction model described in Section 3.2, this means that but a last name (e.g. the last name of the person re- multiple OLTP threads access the dictionary concurrently. sponsible for this orderline). The last name is selected Since only cold data is dictionary-compressed, we expect from the list of the most common 88.800 family names very little updates in the dictionary by OLTP threads. Yet, with a probability according to the frequency of the an OLTP thread may make modifications in a vector that is name. currently being compressed. Therefore, during the compres- sion of a chunk, the reorganization thread uses the Access • The CH-BenCHmark follows the proposal made by Observer to track write accesses, while a new (and smaller) VoltDB [31] to deviate from the underlying TPC-C vector is filled with the dictionary keys equivalent to the benchmark by not simulating the terminals and by original values. If a memory page was modified during com- generating client requests without any think-time. pression and processing a value within this page has already We conduct our experiments on a server with two quad- caused changes in the dictionary, it is re-worked: The modi- core Intel Xeon CPUs clocked at 2.93GHz and with 64GB fied page is scanned and every value is compared to the value of main memory running Redhat Enterprise Linux 5.4. The the new key-vector points to: If the values do not match, benchmark is scaled to 12 warehouses. the dictionary’s reference counter for the value pointed to by the current key is decremented (i.e. the insert into the dic- tionary is undone) and the new value is being compressed. 5.1 Transactional Performance This optimistic concurrency control is facilitated by the Ac- We quantify the impact of our compression technique on cess Observer’s ability to detect writes retrospectively. transaction throughput using the transactional part of the As OLTP and reorganization threads access the dictio- benchmark. In the following experiment, we compare bench- nary concurrently, they need to synchronize dictionary ac- mark runs with two different HyPer setups: One without any cess with a lock. However, since the hot part of the data is compression techniques and one including the compression uncompressed, transactions inserting new tuples or updat- techniques. In both runs, we configured HyPer to use five ing/deleting hot tuples never have to acquire the dictionary OLTP threads. lock. Therefore lock contention is not a problem here. The CH-BenCHmark schema has 32 attributes of type char or varchar that have length 20 or more and thus ex- 5. EVALUATION hibit compression-potential. The most interesting relations, In this section, we substantiate our claims that transac- however, are the three constantly growing relations out of tional data can be compressed with very little overhead and which two, Orderline and History, have a varchar at- that the performed compression is beneficial for query pro- tribute of length 24 and thus require recurring compression cessing. Basis for the experiments is the CH-BenCHmark [8], of freshly inserted tuples. 1431

9 . Figure 11 shows the result of the CH-BenCHmark runs Scale Instant Compr. No Compr. in which we simulate one week of transaction processing of 10M orderlines 4,249ms 2,790ms the world’s larges online retailer: Amazon generates a yearly 215 unique values revenue of around $30 billion, i.e. assuming an average item 10M orderlines 5,589ms 2,791ms price of $30, Amazon adds nearly 20 million orderlines to 218 unique values its database each week. We configured HyPer to compress 50M orderlines 19,664ms 12,555ms of the Orderline and History relations’ cold chunks con- 215 unique values taining the same amount of transactional data Amazon gen- 50M orderlines 26,254ms 12,614ms erates in one week (according to our back-of-the-envelope 218 unique values calculation) all at once, to show the impact of the com- pression as clearly as possible. In this compression interval, Table 1: The costs of instant compression: Time HyPer compressed 18.3 million Orderline and 0.9 million required to insert 10M and 50M orderlines. History tuples in 3.8 seconds. The transaction through- put in these 3.8s was 12.9% slower in the setup that per- formed compression than in the setup without compression. takes 18ms in hot chunks. As updates in frozen chunks are Since there are no synchronization points for the compres- rare, this slowdown of factor 2.55 is unproblematic. sion thread and the OLTP threads while a chunk is being compressed, this seems to result from competing accesses to 5.3 Access Observer the memory bus. Note that fluctuations before the compres- In this section, we compare the impact of the Access sion interval do not result from the techniques described in Observer implementation on HyPer’s performance. While this paper, but originate from the benchmark driver. the implementation based on the young and dirty flags imposes absolutely no overhead on the OLTP threads, we 5.2 Update of Cooling and Frozen Data measured the impact of the alternative implementation (us- The Access Observer tries to ensure that the number of ing mprotect) by protecting vectors of numeric attributes accesses to cool and frozen chunks is minimal and our ex- and then performing random writes to them. This situation periments with the CH-BenCHmark/TPC-C show that this arises multiple times in TPC-C, e.g. in the stock or customer goal is often achievable. However, individual accesses to relation. When performing 100,000 updates to 10 million these tuples cannot be ruled out in general, so we quantify entries, the mprotected version requires 94ms, while a run their costs here. without takes only 4ms. Consequently, the no-overhead im- Cooling chunks are diagnosed by the Access Observer to plementation based on the young and dirty flags is our first only contain a few tuples that change. Thus HyPer chooses choice for HyPer. to relocate these tuples to a hot chunk in order to be able to compress the cooling chunk. We mark hot chunks “cooling” 5.4 Compression Performance in order to quantify the costs of these relocations. In a re- We conduct experiments to substantiate the claim that lation with 50 million tuples, we forcefully mark all chunks our approach of lazy-compression is superior to eager com- “cooling” and then update them, to trigger their relocation press. For run-length encoding, the disadvantages of in- to a newly created hot chunk. With 3,595ms, the run time stantly compressing tuples are obvious: Updates of RLE is over twice as long as the updates take when all chunks are values can split a run and generate up to two additional en- correctly marked “hot” (1,605ms) and thus require no relo- tries that do not fit in the vector (and cause the relocation cation of tuples. This result indicates that while it requires of all following entries if the do). In addition, locating an extra processing to access a cooling tuple, the amortized attribute’s value associated with a TID is possible due to cost over all accesses is negligible, given the fact that ac- our design, but still compromises performance. Therefore cesses to cooling chunks are rare compared to accesses to we limit the experiment to dictionary compression. Table 1 hot chunks. If massive accesses to cooling chunks should shows the time required to insert TPC-C orderlines when occur, the Access Observer detects the warming up of the compressing instantly and when performing no compression chunk and switches its temperature back to hot, which pre- of the char(24) attribute ol dist info. Thus, the addi- vents further relocations. tional lookup/insert in the dictionary can slow down the Frozen chunks are compressed and reside on huge memory insert rate to 50%. This results from the fact that without pages and their tuples are therefore not modified in place, compression, inserting an orderline only requires an insert but invalidated. In addition to relocating the tuple as for into the primary key index in addition to actually inserting cooling chunks, it also requires an update in the partition’s the 10 attributes and is therefore extremely fast. invalidation status. Unlike cooling chunks, frozen chunks must perform this procedure. We first perform a run where 5.5 Compression Effectiveness we update all 50 million tuples in sequence which requires While the focus of this work is on the integration of com- about the same time (3,436ms) as the updates in the cool- pression techniques rather than on compression itself and ing chunks. I.e. the costs of invalidating the tuples are thus has only limited influence on the compression factor, dominated by the costs of relocating them, as only one in- we measure how much our approach can reduce the memory validation entry per chunk is being created. When updating consumption in the CH-BenCHmark. We execute trans- random tuples, inserts into the validation status are more actions until our database size is 50GB and then activate costly: We update 10,000 random orders in the Orderline our compression feature to compress the cold parts of the relation. Each order consists of 10 consecutively located database. We configure HyPer to use dictionary compres- orderline tuples, i.e. 100,000 orderlines are updated. Per- sion for all cold vectors of string attributes. We populated forming these updates in frozen chunks takes 46ms, while it these attributes with values according to a Zipf distribution 1432

10 . 1000 20000 800 Snapshot delay [ms] Throughput [tps] 15000 600 10000 400 200 5000 All on Regular Pages All on Regular Pages Cold on Huge, Hot on Small Cold on Huge, Hot on Small 0 0 0 2e+06 4e+06 6e+06 8e+06 1e+07 0 2e+06 4e+06 6e+06 8e+06 1e+07 Transactions Executed Transactions Executed (a) (b) Figure 12: Impact of page size on snapshot performance (a) and transactional performance (b). with parameter s = 1.2. This yields a compression ratio of 334 334 1.12. This low number does not result from a possible in- 300 effectiveness in our design, but from the fact that only two attributes in the three continuously growing relations have 250 Execution Time [ms] character types (h data and ol dist info) and their length is only 24 characters. When increasing the length to 240 200 characters, the compression factor rises to 2.42. Activating run-length encoding in addition to dictionary 150 compression shrinks Orderline’s ol o id attribute by a 100 factor of 3.3 as each order has an average of 10 orderlines 73 61 that are stored consecutively and thus produce runs of av- 50 erage length 10. The timestamp attributes o entry d and 1512 h date are naturally sorted in the database, as they are set to the current date and time on insert. Thus they contain 10 50 Run-Length runs of several thousand entries, resulting in extreme com- pression factors for these attributes. Figure 13: Comparison of RLE schemes when sum- For all three growing relations, History, Orderline and ming up 500M values. uncompressed, position- Order, the Access Observer indicates that all chunks but based RLE (prefix sum), regular RLE. the one or two last ones could be frozen at any time during the benchmark run. 5.6.2 Run-Length Encoding In Section 3.4, we described the layout of a RLE vec- 5.6 Query Performance tor that uses positions instead of run-lengths. We did so Query performance in hybrid OLTP and OLAP systems to bridge the gap between scan-based OLAP accesses and depends on two factors: Snapshot performance and query occasional point-wise OLTP accesses. Figure 13 shows the execution time. Compression and the usage of huge pages execution time of queries summing up 500 million integers for frozen chunks improve snapshot performance. Query ex- for two different run-lengths. The position-based encoding ecution time benefits from huge pages and compression, too. is hardly slower than the regular run-length encoding, when Scanning invalidated tuples can have a negative effect on comparing both runs on compressed data with the run on query performance, but we demonstrate that this drawback uncompressed data. is negligible. The benefits of this trade-off in scan performance are rel- atively efficient point-accesses: For the test-case with run- 5.6.1 Snapshot Performance length 10, lookups using our position-based RLE were only Snapshot performance primarily depends on the size of 7 times slower than lookups in uncompressed data, for the the page table that has to be copied when a snapshot is cre- test case with run-length 50, the factor is 5 times. Point ac- ated. It impacts transaction throughput as well as average cesses in regular run-length encoded data on the other hand query response times: During the creation of a snapshot, require a linear scan of all runs until the TID is found, re- OLTP workers must be quiesced and queries waiting for the sulting in up to six orders of magnitude longer lookup times snapshot are stalled. We compare a setup were the whole in our benchmark. Thus the use of regular RLE is out of database resides on regular-sized pages to a configuration in the question, even though point accesses to compressed data which huge pages are used for frozen chunks. are infrequent. Position-based RLE however seems to be an Figure 12 compares both setups when a new snapshot excellent compromise. is created every 40,000 transactions while a single OLTP thread processes transactions. Plot (a) shows the benefits 5.6.3 Prefix Scan Performance of using huge pages for frozen chunks for snapshot creation CH-BenCHmark’s query Q1 performs a scan of the Or- performance. Plot (b) demonstrates that the use of huge derline relation, groups and sorts by the orderline number pages improves transaction throughput. and computes different aggregations. Since our approach 1433

11 . 91 in the dictionary is dominated by the probe phase in both algorithms, as it accounts for less then 1% of the run-time. 80 78 We compare these two algorithms with a lookup in a sec- 75 ondary index. The red-black-tree index we used in our ex- Execution Time [ms] 66 64 periment does not store the values of the indexed attribute, 62 63 60 but only the TIDs referencing the dictionary-keys of these values (cf. Figure 8). Thus it is very memory consumption friendly. Our red-black-tree requires 24 bytes per entry, but 40 a B-tree could be used instead and would consume only a 26 26 little bit more than the 8 byte payload per entry (mostly 21 depending on the B-tree’s load-factor). 20 17 Figure 14 shows that for selectivities of 3.3% or higher, 8 the ordered dictionary is faster than the direct lookup in 0 the secondary index. For selectivities of less than 3.3%, 10% 3.3% 1% the secondary index outperforms the order-preserving dic- Prefix Selectivity tionary. As analytical queries often have single-digit selec- Figure 14: Comparison of range scan algorithms ex- tivities, we believe that secondary indexes are a valid alter- ecuting Q1 on 3.6M orderlines. uncompressed, native for ordered-dictionaries, especially in high-frequency unordered dictionary (i.e. hash-join with dictio- update and insert scenarios where ordered-dictionaries are nary), ordered dictionary, secondary index. impossible to maintain efficiently. To demonstrate that our invalidation mechanism for frozen chunks hardly affects scan performance, we invalidate 10,000 SELECT ol_number , random orders, i.e. about 100,000 orderlines in the dataset SUM ( ol_quantity ) AS sum_qty , (as every order has an average of 10 orderlines) and com- SUM ( ol_amount ) AS sum_amount , pare the scan results with a run in which the same amount AVG ( ol_quantity ) AS avg_qty , of orderlines where simply deleted. This means that in both AVG ( ol_amount ) AS avg_amount , setups, 3.5M orderlines are aggregated by query Q1 . Even in COUNT (*) AS count_order 1 this extreme scenario where as much as 36 of the dataset is FROM orderline invalid and the invalidations are scattered, the performance WHERE ol_delivery_d > ’ 2007 -01 -02 00:00:00 ’ decline of the three prefix queries from Figure 14 was only AND ol_dist_info LIKE ’ < prefix >% ’ between 4.5% and 7.9%. GROUP BY ol_number ORDER BY ol_number Figure 15: CH-BenCHmark Query Q1 with ad- 6. CONCLUSION ditional (prefix) filter condition ol dist info LIKE We have shown that compression can – against common ’<prefix>%’. belief – indeed be integrated into high-performance OLTP systems without impacting transaction throughput. This only changes the scan operator, we conduct experiments can be achieved by relieving the OLTP threads from all with variations of this scan-oriented query to carve out the compression-related tasks and performing compression asyn- performance impact of dictionary compression. chronously as soon as tuples are stopped being used by Figure 14 shows the performance of different prefix scan transactions. We have presented hardware-assisted, low- algorithms on the query listed in Figure 15. The attribute overhead monitoring techniques to assess when tuples should ol dist info of the Orderline relation is populated with be compressed. This, again, unburdens transaction process- the aforementioned US family name dataset to obtain a re- ing from the overhead of keeping track of all data accesses. alistic distribution of values. Experiments with TPC-C have shown that our hot/cold Since HyPer pushes simple selection conditions into the clustering technique has very little overhead and can iden- scan operator, the following algorithms are possible: The tify the bulk of the database as cold. Cold parts of the baseline for the experiment is an algorithm that is not only database indentified through the aforementioned observa- applicable to prefix queries, but to arbitrary filter condi- tion method can be easily compressed using various com- tions: It scans the dictionary and selects all matches into pression schemes and can be physically optimized for query a hash table which is then used to probe the attribute’s processing and compact snapshots by freezing them and re- dictionary keys into. The algorithm’s performance mostly locating them to huge memory pages. Future work includes depends on the size of the relation, but also on whether or other optimizations such as small materialized aggregates not the hash table fits into cache. for each frozen chunk. We have also shown the impact To evaluate the performance of order-preserving dictio- of compression on query performance and have presented naries, we use the algorithm described in Section 3.4 that is a memory-consumption friendly secondary index for the ef- based on the determination of the qualifying key-range and ficient evaluation of unselective prefix and range queries. a subsequent scan of the relation. This algorithm’s perfor- mance is again mostly determined by the size of the relation, but the probing phase is far less costly compared to the first Acknowledgements algorithm which requires the computation of a hash value This work is partially funded by the German Research Foun- and a lookup in the hash table. The selection of the key- dation (DFG). range in the dictionary is also faster then the creation of the We would like to thank IBM for supporting Florian Funke hash table in the first algorithm. However, the time spent with an IBM Ph.D. Fellowship Award. 1434

12 .7. REFERENCES [17] S. Idreos, M. L. Kersten, and S. Manegold. Database [1] D. J. Abadi, S. Madden, and M. Ferreira. Integrating Cracking. In CIDR, pages 68–78, 2007. Compression and Execution in Column-Oriented [18] R. Kallman, H. Kimura, J. Natkins, A. Pavlo, Database Systems. In SIGMOD, pages 671–682, 2006. A. Rasin, S. B. Zdonik, E. P. C. Jones, S. Madden, [2] G. Antoshenkov, D. B. Lomet, and J. Murray. Order M. Stonebraker, Y. Zhang, J. Hugg, and D. J. Abadi. Preserving Compression. In ICDE, pages 655–663, H-Store: A High-Performance, Distributed Main 1996. Memory Transaction Processing System. PVLDB, [3] C. Binnig, S. Hildenbrand, and F. F¨ arber. 1(2):1496–1499, 2008. Dictionary-based Order-preserving String [19] A. Kemper and T. Neumann. HyPer: A Hybrid Compression for Main Memory Column Stores. In OLTP&OLAP Main Memory Database System Based SIGMOD, pages 283–296, 2009. on Virtual Memory Snapshots. In ICDE, pages [4] P. A. Boncz, M. Zukowski, and N. Nes. 195–206, 2011. MonetDB/X100: Hyper-Pipelining Query Execution. [20] A. Kivity, Y. Kamay, D. Laor, U. Lublin, and In CIDR, pages 225–237, 2005. A. Liguori. kvm : the Linux Virtual Machine Monitor. [5] T. Cao, M. A. V. Salles, B. Sowell, Y. Yue, A. J. kernel.org, 2007. Demers, J. Gehrke, and W. M. White. Fast [21] J. Kr¨uger, M. Grund, C. Tinnefeld, H. Plattner, Checkpoint Recovery Algorithms for Frequently A. Zeier, and F. Faerber. Optimizing Write Consistent Applications. In SIGMOD, pages 265–276, Performance for Read Optimized Databases. In 2011. Database Systems for Advanced Applications, pages [6] Z. Chen, J. Gehrke, and F. Korn. Query Optimization 291–305. Springer, 2010. In Compressed Database Systems. In SIGMOD, pages [22] J. Kr¨uger, C. Kim, M. Grund, N. Satish, D. Schwalb, 271–282, 2001. J. Chhugani, H. Plattner, P. Dubey, and A. Zeier. [7] C. Clark, K. Fraser, S. Hand, J. G. Hansen, E. Jul, Fast Updates on Read-Optimized Databases Using C. Limpach, I. Pratt, and A. Warfield. Live Migration Multi-Core CPUs. PVLDB, 5(1):61–72, 2012. of Virtual Machines. In NSDI, pages 273–286, 2005. [23] R. A. Lorie. Physical Integrity in a Large Segmented [8] R. Cole, F. Funke, L. Giakoumakis, W. Guy, Database. TODS, 2(1):91–104, 1977. A. Kemper, S. Krompass, H. A. Kuno, R. O. [24] S. Manegold, P. A. Boncz, and M. L. Kersten. What Nambiar, T. Neumann, M. Poess, K.-U. Sattler, Happens During a Join? Dissecting CPU and Memory M. Seibold, E. Simon, and F. Waas. The mixed Optimization Effects. In VLDB, pages 339–350, 2000. workload CH-benCHmark. In DBTest, page 8, 2011. [25] H. M¨ uhe, A. Kemper, and T. Neumann. How to [9] G. P. Copeland and S. Khoshafian. A Decomposition Efficiently Snapshot Transactional Data: Hardware or Storage Model. In SIGMOD, pages 268–279, 1985. Software Controlled? In DaMoN, pages 17–26, 2011. [10] P. J. Denning. The Working Set Model for Program [26] T. Neumann. Efficiently Compiling Efficient Query Behaviour. Communications of the ACM, Plans. In VLDB, pages 539–550, 2011. 11(5):323–333, 1968. [27] Oracle. Advanced Compression with Oracle Database [11] F. F¨arber, S. K. Cha, J. Primsch, C. Bornh¨ovd, 11g. Whitepaper, 2011. S. Sigg, and W. Lehner. SAP HANA Database: Data [28] V. Raman, G. Swart, L. Qiao, F. Reiss, V. Dialani, Management for Modern Business Applications. D. Kossmann, I. Narang, and R. Sidle. Constant-Time SIGMOD Record, 40(4):45–51, 2011. Query Processing. In ICDE, pages 60–69, 2008. [12] F. Funke. [s390] introduce dirty bit for kvm live [29] Transaction Processing Performance Council. TPC-C migration. Patch integrated in Linux kernel 2.6.28, specification. Oct 2008. http://git.kernel.org/?p=linux/ www.tpc.org/tpcc/spec/TPC-C\_v5-11.pdf, 2010. kernel/git/stable/linux-stable.git;a=commit;h= [30] VoltDB. Technical Overview. 15e86b0c752d50e910b2cca6e83ce74c4440d06c. http://www.voltdb.com, March 2010. [13] M. Gorman. Understanding the Linux Virtual Memory [31] VoltDB Community. VoltDB TPC-C-like Benchmark Manager. Prentice Hall PTR, 2004. Comparison-Benchmark Description. [14] G. Graefe and L. D. Shapiro. Data Compression and http://community.voltdb.com/node/134, 2010. Database Performance. In In ACM/IEEE-CS [32] T. Westmann, D. Kossmann, S. Helmer, and Symposium On Applied Computing, pages 22–27, 1991. G. Moerkotte. The Implementation and Performance [15] S. H´eman, M. Zukowski, N. J. Nes, L. Sidirourgos, of Compressed Databases. SIGMOD Record, and P. A. Boncz. Positional Update Handling in 29(3):55–67, 2000. Column Stores. In SIGMOD, pages 543–554, 2010. [33] M. Zukowski, S. H´eman, N. Nes, and P. A. Boncz. [16] A. L. Holloway, V. Raman, G. Swart, and D. J. Super-Scalar RAM-CPU Cache Compression. In DeWitt. How to Barter Bits for Chronons: ICDE, page 59, 2006. Compression and Bandwidth Trade Offs for Database Scans. In SIGMOD, pages 389–400, 2007. 1435