
Deploying and Monitoring Heterogeneous Machine Learning Applications with Clipper


1 .Clipper: A Low-Latency Online Prediction Serving System. Dan Crankshaw (crankshaw@cs.berkeley.edu), http://clipper.ai, https://github.com/ucbrise/clipper, June 6, 2018

2 .Clipper Team: Dan Crankshaw, Eric Sheng, Corey Zumar, Joseph Gonzalez, Simon Mo, Ion Stoica, Alexey Tumanov, Eyal Sela, Rehan Durrani, and many other contributors.

3 .What is the Machine Learning Lifecycle? [Diagram: three stages. Model Development: data collection; cleaning and visualization; feature engineering and model design; training and validation on offline training data. Training: training pipelines that consume live data and validation data to produce trained models. Inference: a prediction service whose prediction logic answers queries from an end-user application and collects feedback.]

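4 .Machine Learning in Production [Diagram: the Training and Inference stages of the lifecycle. Training pipelines consume live data and validation data to produce trained models; the prediction service uses those models to answer queries from the end-user application and collects feedback.]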


6 .Inference [Diagram: the prediction service answers queries from the end-user application and collects feedback.] Goal: make predictions in tens of milliseconds under heavy load, which requires new systems.

7 .In this talk: requirements for prediction-serving systems; Clipper overview and architecture; current project status; future directions.

8 .Requirements for Prediction-Serving Systems

9 .Prediction-Serving Requirements: Manageable; Fast and Scalable; Affordable.

10 .Manageable: simple to deploy a wide range of ML applications and frameworks (e.g., Caffe, VW); debuggable; easy to monitor system and model performance.

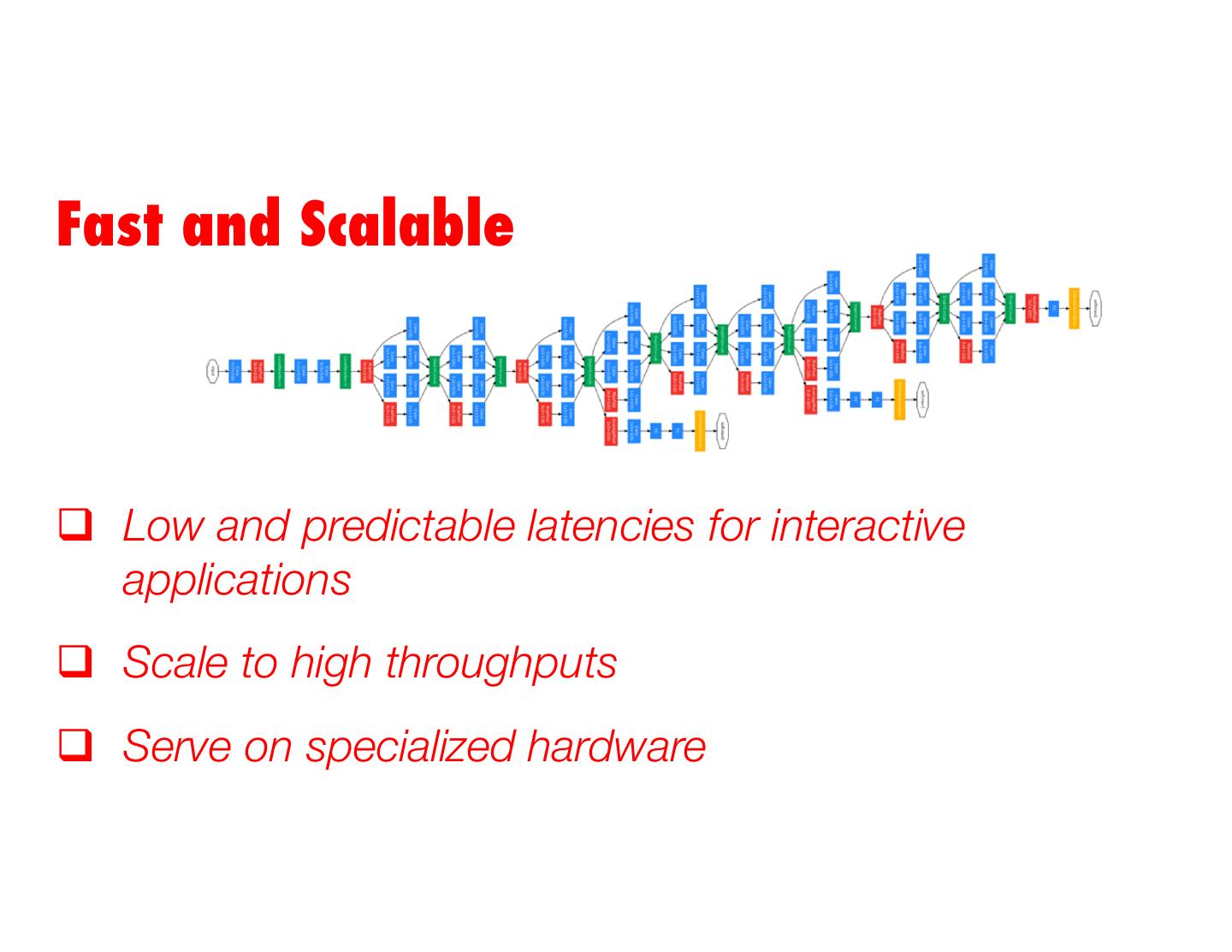

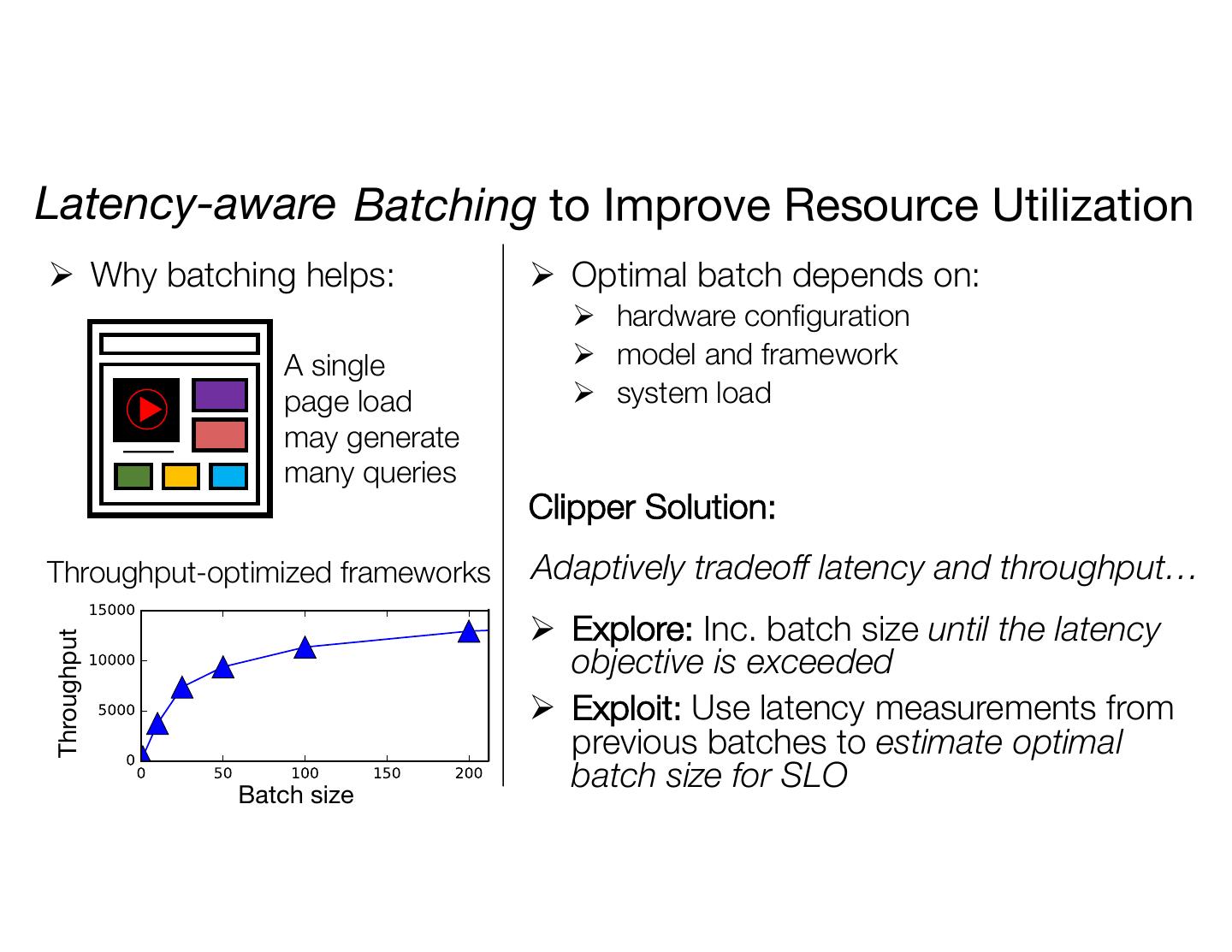

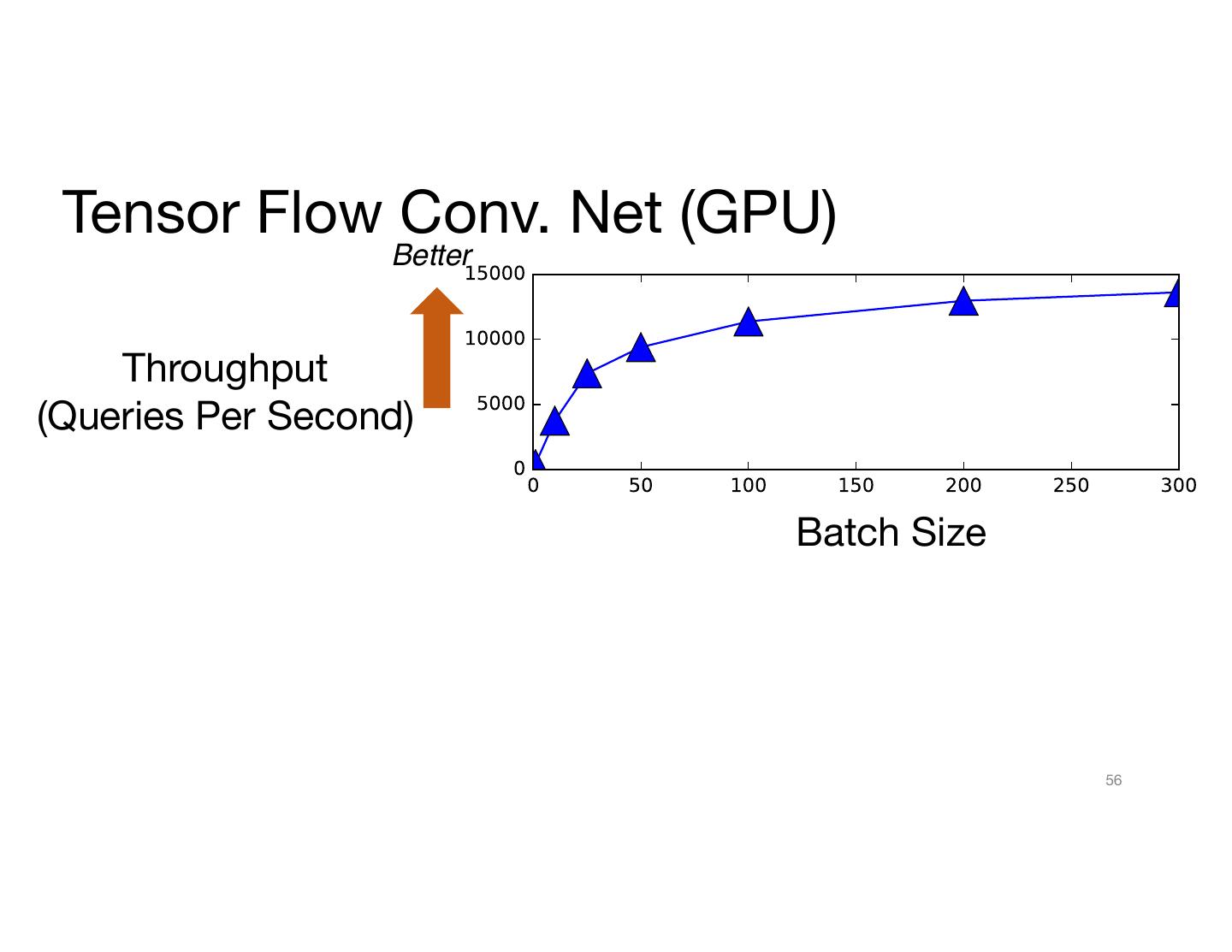

11 .Fast and Scalable: low and predictable latencies for interactive applications; scale to high throughputs; serve on specialized hardware.
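Slides 6 and 11 frame latency as the central requirement. An average hides the slow requests that users actually notice, so serving systems are usually judged on a tail percentile against a latency target. This is an illustrative sketch only: the nearest-rank percentile, the synthetic workload, and the 20 ms p99 target are assumptions, not figures from the talk.

```python
import math
import random


def percentile(samples, q):
    """Nearest-rank percentile: the smallest sample with at least q% of samples <= it."""
    if not samples:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples)
    rank = math.ceil(q * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def meets_slo(latencies_ms, slo_ms, q=99.0):
    """True if the q-th percentile latency is within the (assumed) target."""
    return percentile(latencies_ms, q) <= slo_ms


# Synthetic workload (assumed): 98% of requests take 5-15 ms, 2% hit a slow path.
random.seed(0)
latencies = [random.uniform(5, 15) for _ in range(980)]
latencies += [random.uniform(80, 120) for _ in range(20)]

print(f"mean = {sum(latencies) / len(latencies):.1f} ms")
print(f"p50  = {percentile(latencies, 50):.1f} ms")
print(f"p99  = {percentile(latencies, 99):.1f} ms")
print("meets 20 ms p99 target:", meets_slo(latencies, 20.0))  # False
```

Here the mean stays near 12 ms, well within "tens of milliseconds", while the p99 falls in the slow tail above 80 ms. That gap is why predictable latency is listed alongside low latency.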

12 .Affordable: minimize storage cost; minimize compute cost when using expensive compute resources.

13 .Prediction-Serving Today. Two approaches: pre-materialize predictions; put the model in a container.

14 .Pre-materialized Predictions [Diagram: the production ML pipeline (training pipelines, trained models, prediction service, end-user application), annotated with the Data Engineer role.]

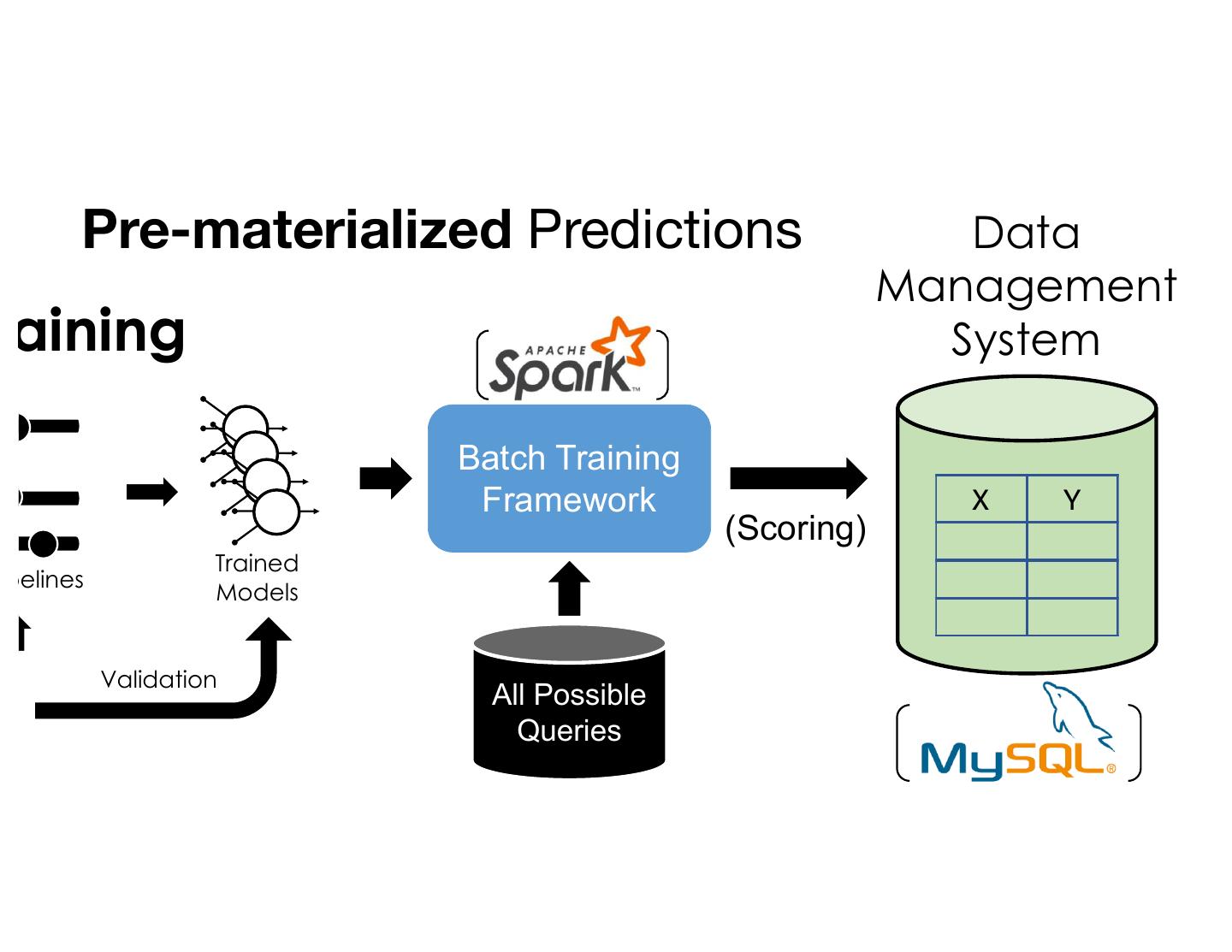

15 .Pre-materialized Predictions [Diagram: training pipelines use live and validation data to produce trained models; a batch training framework then applies them to all possible queries.]

16 .Pre-materialized Predictions [Diagram: the batch training framework scores all possible queries (inputs X to predictions Y) and writes the results to a data management system.]

17 .Pre-materialized Predictions [Diagram: the batch scoring pipeline and data management system, both built with standard data engineering tools.]

18 .Serving Pre-materialized Predictions [Diagram: at query time, the application looks up the precomputed prediction in the data management system and makes a decision, giving low-latency serving.]

19 .Serving Pre-materialized Predictions. Advantages: simple to deploy with standard tools; low latency at serving time. Problems: requires the full set of queries ahead of time; works only for a small and bounded input domain; requires substantial computation and space (example: scoring all content for all customers!); updates are costly, because any change means rescoring everything!
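The trade-offs on slide 19 can be made concrete with a toy version of the pre-materialization pattern: an offline job scores every (customer, item) pair into a lookup table, and serving becomes a dictionary lookup. The scoring function, the population sizes, and the function names are all hypothetical.

```python
from itertools import product


def score(customer: int, item: int) -> float:
    """Hypothetical stand-in for an expensive model: a deterministic toy score."""
    return (customer * 31 + item * 17) % 100 / 100


def materialize(customers, items, model):
    """Offline batch job: score every possible (customer, item) query ahead of time."""
    return {(c, i): model(c, i) for c, i in product(customers, items)}


def serve(table, customer, item):
    """Online path: a constant-time lookup, or None for a query that was never scored."""
    return table.get((customer, item))


customers = range(1_000)
items = range(500)
table = materialize(customers, items, score)

print(len(table))              # 500000 stored predictions
print(serve(table, 3, 7))      # 0.12
print(serve(table, 5_000, 7))  # None: a new customer has no precomputed answer
```

Storage grows with the product of the input domains: with, say, 10 million customers and 100,000 items (hypothetical figures), the table would need 10^12 entries, and any change to `score` means running `materialize` again over every pair.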

20 .Model in a Container [Diagram: the production ML pipeline (training pipelines, trained models, prediction service, end-user application), annotated with the Data Engineer role.]

21 .Model in a Container [Diagram: training pipelines produce trained models, which are packaged in a container behind a REST API.]

22 .Model in a Container [Diagram: the application sends a query to the container's REST API and uses the returned prediction to make a decision.]

23 .Model in a Container. Advantages: general-purpose; renders predictions at serving time. Problems: requires the data scientist to write performance-sensitive serving code; uses compute resources inefficiently, since throughput-optimized frameworks are used to render a single prediction; no support for monitoring or debugging models.
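Slides 21 to 23 describe wrapping a model in a REST service. A minimal version built only on Python's standard library makes the listed problems visible: the data scientist owns all of the request-handling code, each HTTP request runs exactly one prediction, and nothing records latency or accuracy. The toy linear model, the `/predict` route, the `{"input": [...]}` payload shape, and port 8080 are assumptions for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

WEIGHTS = [0.5, -0.25, 1.0]  # hypothetical trained weights


def model_predict(features):
    """Toy stand-in for a trained model: a fixed linear score."""
    return sum(w * x for w, x in zip(WEIGHTS, features))


def handle_predict(body: bytes) -> bytes:
    """Decode one JSON query, run exactly one prediction, encode the reply."""
    request = json.loads(body)
    return json.dumps({"output": model_predict(request["input"])}).encode()


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = handle_predict(self.rfile.read(length))
        except (ValueError, KeyError, TypeError):
            self.send_error(400, 'expected JSON such as {"input": [1.0, 2.0, 3.0]}')
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def run_server(port: int = 8080) -> None:
    """Blocking, one-request-at-a-time server: no batching, metrics, or model versioning."""
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()
```

After calling `run_server()`, POSTing `{"input": [2, 4, 1]}` to `http://localhost:8080/predict` would return `{"output": 1.0}`.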

24 .Prediction-Serving Today: pre-materialized predictions (batch scoring of all possible queries) and models in a container (behind a REST API). Current approaches do not meet the requirements for prediction-serving systems. Can we design a system that does?

25 .Clipper Overview and Architecture

26 .A wide range of applications and frameworks (e.g., VW, Caffe)

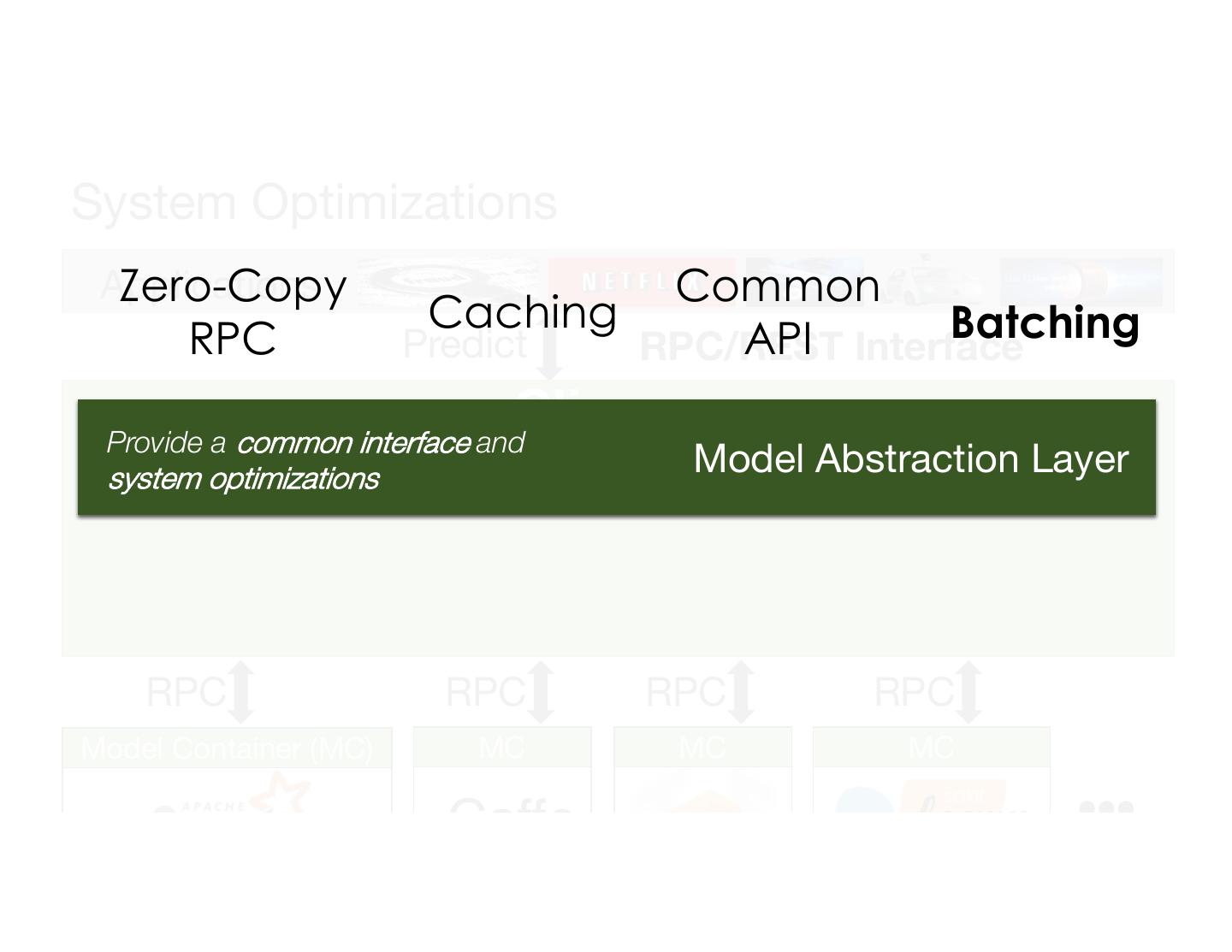

27 .A middle layer for prediction serving: a common system abstraction and shared system optimizations across frameworks such as VW and Caffe.
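One way to picture the common system abstraction on slide 27 is an interface that every model adapter implements, so the serving layer never touches framework-specific calls. This is a hypothetical sketch: `ModelContainer`, `predict_batch`, and the two toy adapters are illustrative names, not Clipper's actual API.

```python
from abc import ABC, abstractmethod
from typing import List, Sequence


class ModelContainer(ABC):
    """Hypothetical common interface that the serving layer programs against."""

    @abstractmethod
    def predict_batch(self, inputs: Sequence[Sequence[float]]) -> List[str]:
        """Return one string output per input in the batch."""


class SumModel(ModelContainer):
    """Adapter for a toy 'framework' that handles one example at a time."""

    def predict_batch(self, inputs):
        return [str(sum(x)) for x in inputs]


class ThresholdModel(ModelContainer):
    """Adapter for a toy 'framework' whose native call takes a whole matrix."""

    def __init__(self, threshold: float):
        self.threshold = threshold

    def _native_classify(self, matrix):
        # Stand-in for a framework-specific batch call with its own output type.
        return [max(row) > self.threshold for row in matrix]

    def predict_batch(self, inputs):
        flags = self._native_classify(list(inputs))
        return ["positive" if flag else "negative" for flag in flags]


def serve_batch(container: ModelContainer, inputs):
    """The serving layer sees only the common interface, never the framework."""
    return container.predict_batch(inputs)


batch = [[1.0, 2.0], [0.25, 0.5]]
for container in (SumModel(), ThresholdModel(threshold=0.5)):
    print(type(container).__name__, serve_batch(container, batch))
```

Because a system optimization written against `serve_batch` does not depend on any adapter's internals, it would apply to every framework at once, which is the point of putting the abstraction in a middle layer.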

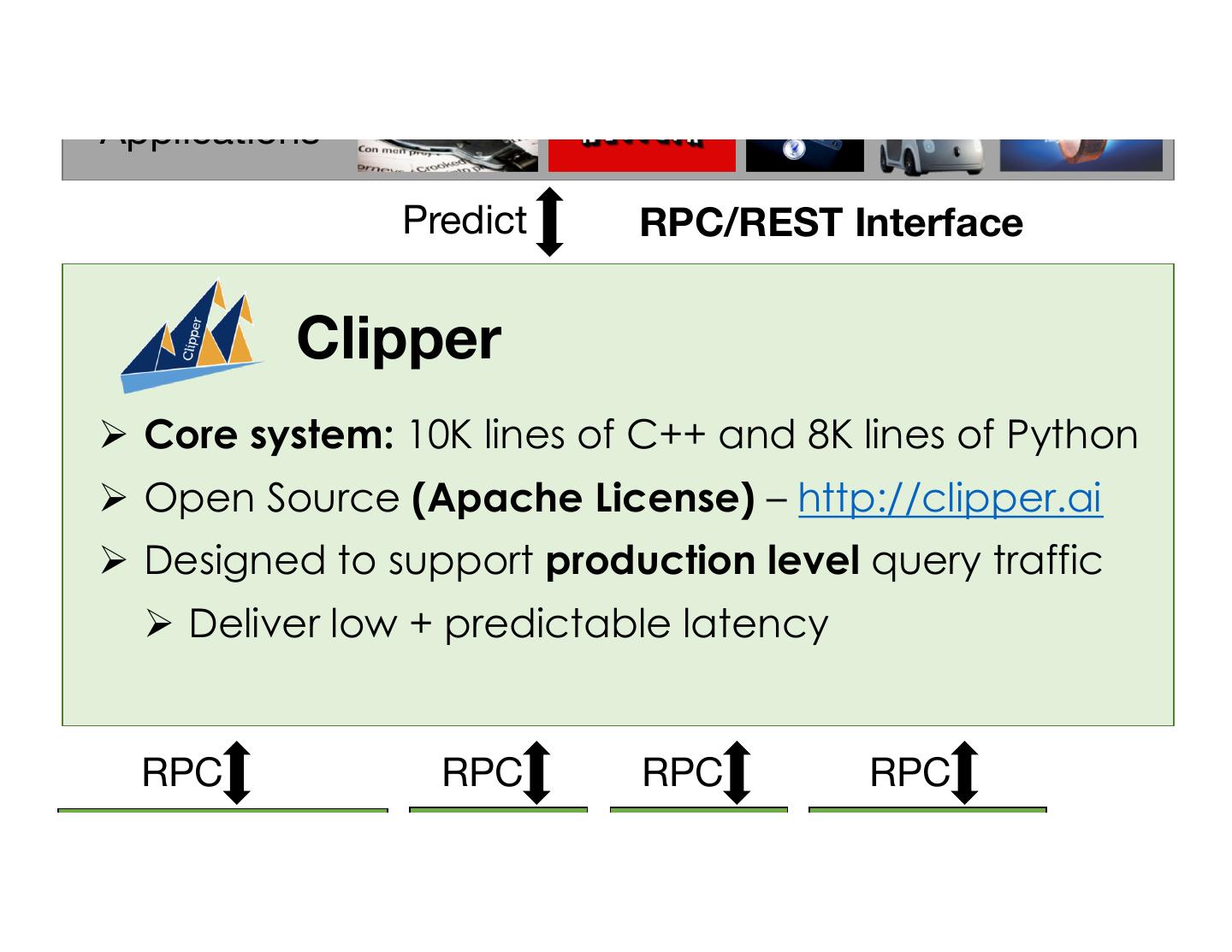

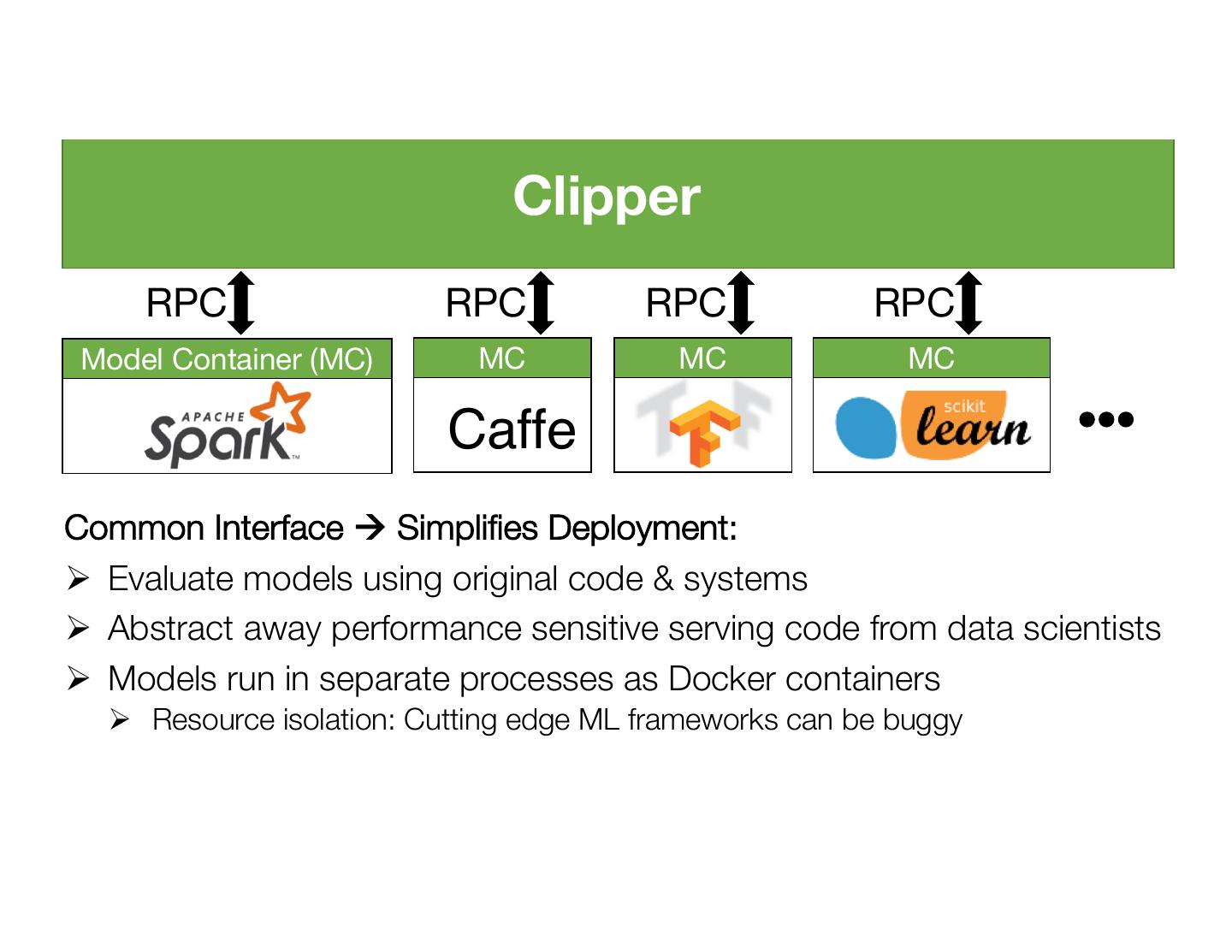

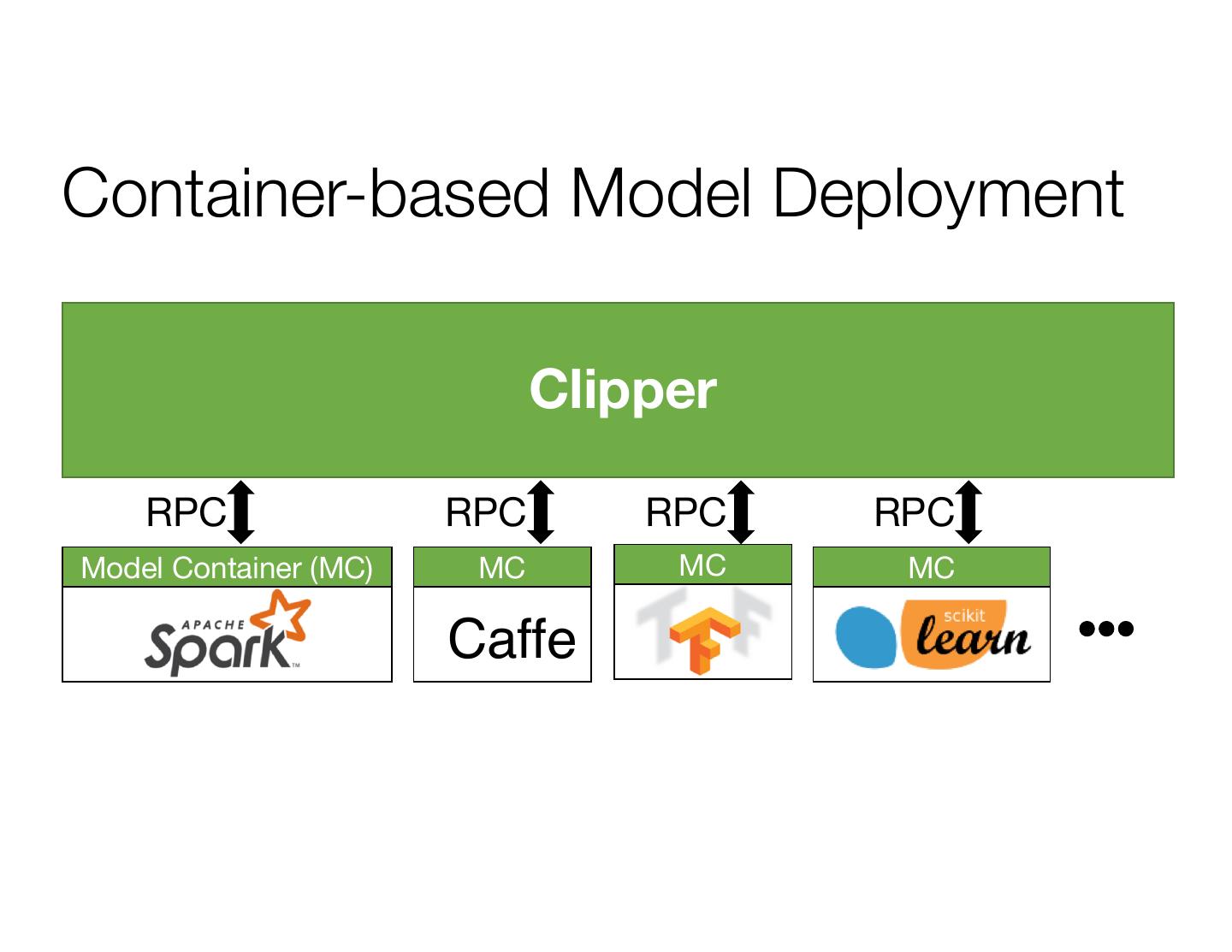

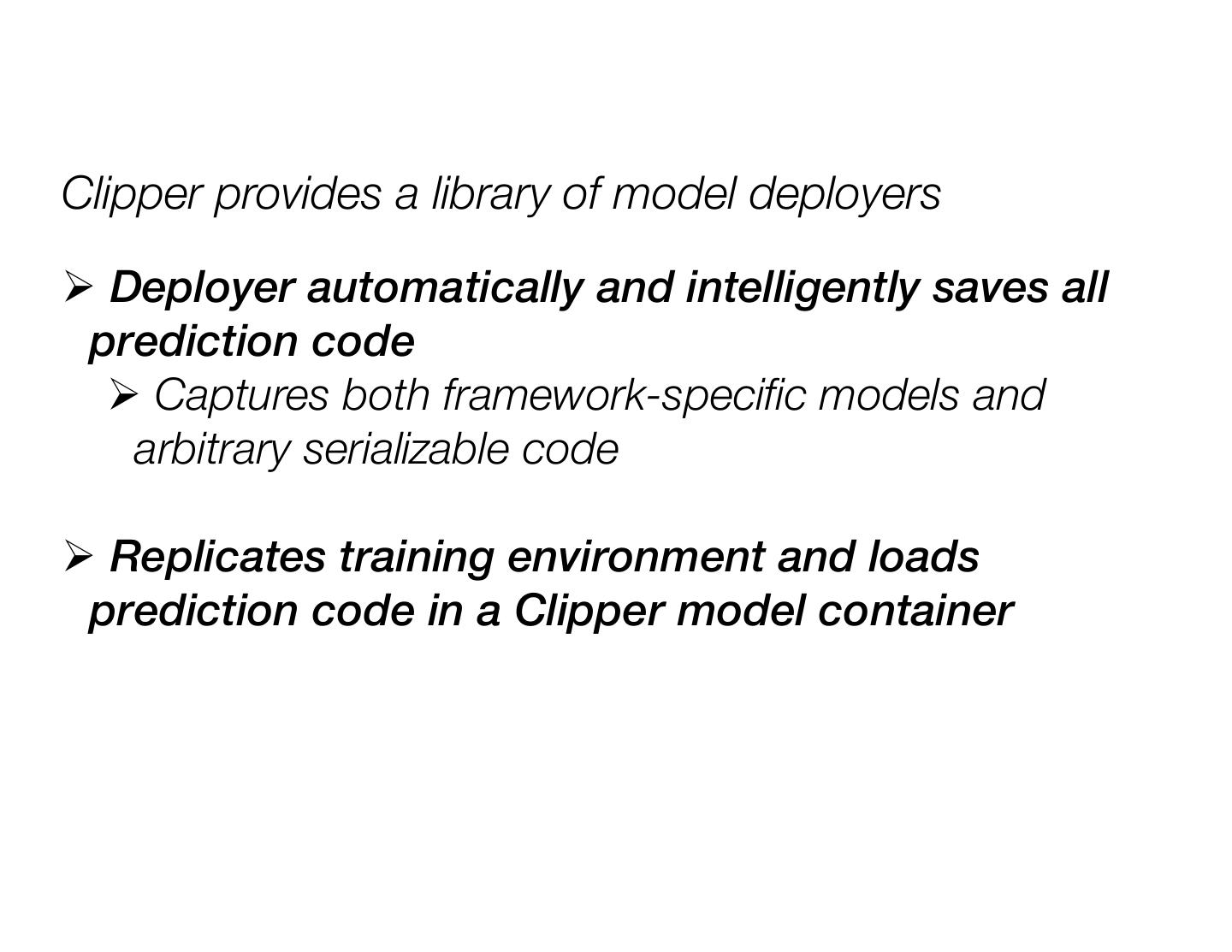

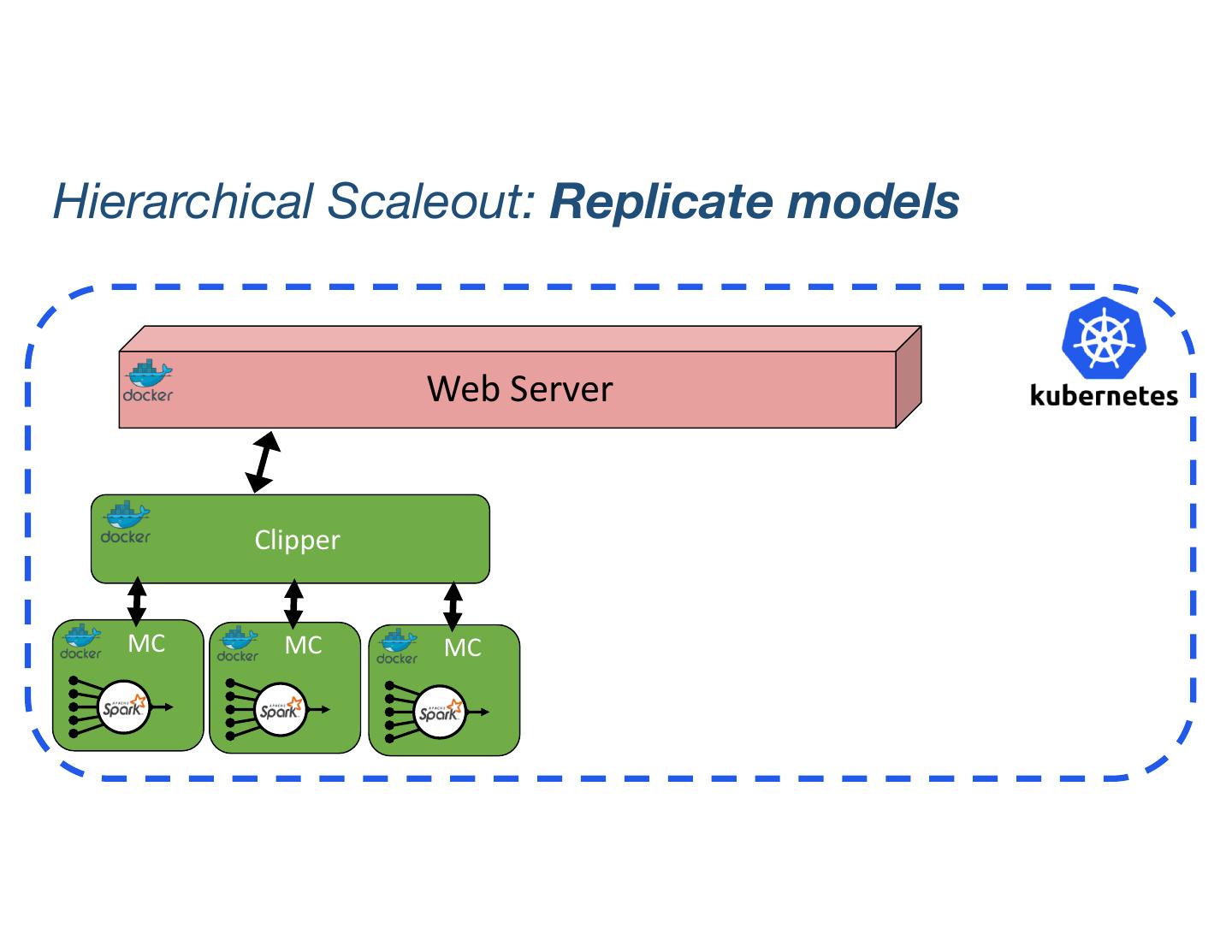

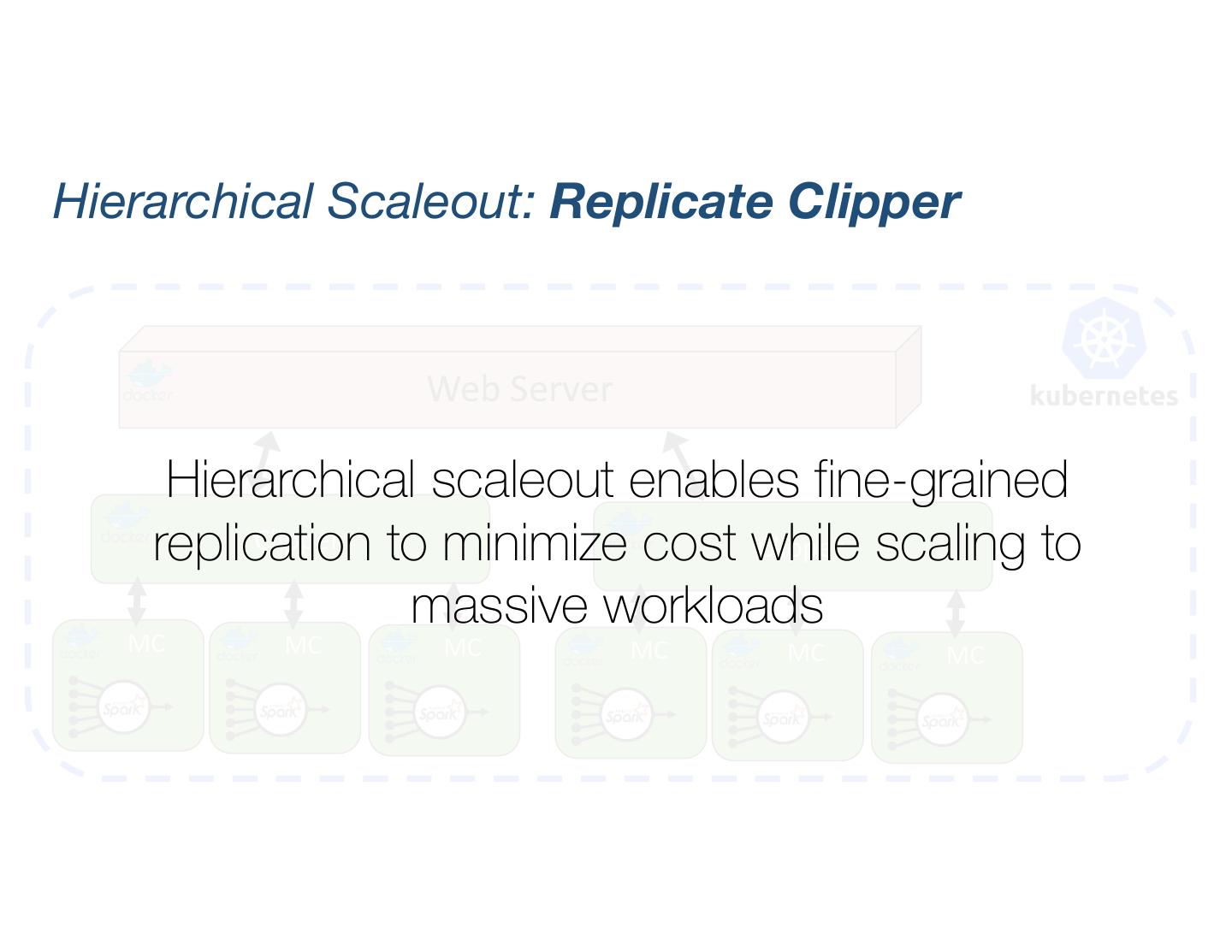

28 .Clipper Decouples Applications and Models [Diagram: applications call Predict through an RPC/REST interface to Clipper, which forwards queries over RPC to model containers (MC), e.g., one running Caffe.]
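The decoupling on slide 28 can be sketched as a frontend that owns the mapping from applications to models. Applications only ever call `predict(app, x)`; which model answers is a link that can change without touching application code. In Clipper the model containers are reached over RPC; here plain Python callables stand in for those RPC stubs, and every name (`PredictionFrontend`, `link`, `default_output`) is hypothetical.

```python
from typing import Callable, Dict, List

Model = Callable[[List[float]], str]  # stand-in for an RPC stub to a model container


class PredictionFrontend:
    """Hypothetical frontend: applications name an app, never a specific model."""

    def __init__(self) -> None:
        self._models: Dict[str, Model] = {}
        self._links: Dict[str, str] = {}     # app name -> model name
        self._defaults: Dict[str, str] = {}  # app name -> fallback output

    def register_app(self, app: str, default_output: str) -> None:
        self._defaults[app] = default_output

    def add_model(self, name: str, model: Model) -> None:
        self._models[name] = model

    def link(self, app: str, model_name: str) -> None:
        if app not in self._defaults:
            raise KeyError(f"unknown app {app!r}")
        if model_name not in self._models:
            raise KeyError(f"unknown model {model_name!r}")
        self._links[app] = model_name

    def predict(self, app: str, x: List[float]) -> str:
        model_name = self._links.get(app)
        if model_name is None:
            return self._defaults[app]  # no model linked yet: answer with the fallback
        return self._models[model_name](x)


frontend = PredictionFrontend()
frontend.register_app("demo-app", default_output="-1")
print(frontend.predict("demo-app", [1.0, 2.0]))  # -1 (nothing linked yet)

frontend.add_model("sum-v1", lambda x: str(sum(x)))
frontend.link("demo-app", "sum-v1")
print(frontend.predict("demo-app", [1.0, 2.0]))  # 3.0

# Swap in a different model; the application's call site does not change.
frontend.add_model("max-v2", lambda x: str(max(x)))
frontend.link("demo-app", "max-v2")
print(frontend.predict("demo-app", [1.0, 2.0]))  # 2.0
```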

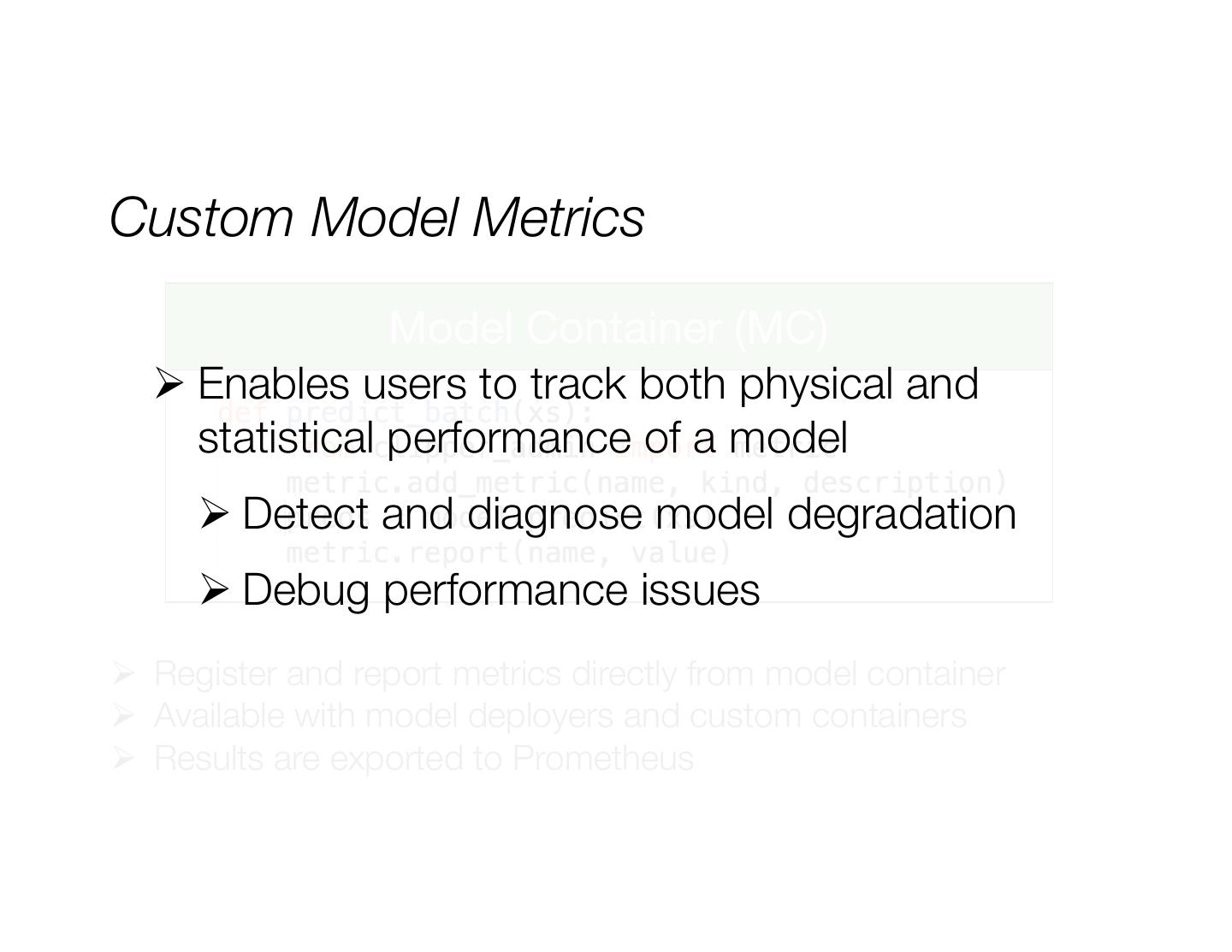

29 .Clipper Implementation [Diagram: applications send Predict requests to the Clipper Query Processor, which forwards them to model containers; the clipper admin tool talks to Clipper Management; Prometheus provides monitoring and Redis serves as the ConfigDB.]
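Slide 29 attaches Prometheus to the query processor for monitoring. Prometheus collects metrics by scraping a plain-text exposition format over HTTP; this sketch only produces that text, as a per-model latency summary with `_sum` and `_count` series. The metric name, the `model` label, and the `timed_predict` wrapper are assumptions rather than the metrics Clipper actually exports, model names are assumed to contain no quotes (label values are not escaped), and the HTTP endpoint Prometheus would scrape is omitted.

```python
import time
from collections import defaultdict


class PredictionMetrics:
    """Hypothetical per-model latency summary in Prometheus' text exposition format."""

    def __init__(self) -> None:
        self.count = defaultdict(int)            # model -> predictions served
        self.total_seconds = defaultdict(float)  # model -> summed latency in seconds

    def observe(self, model: str, seconds: float) -> None:
        self.count[model] += 1
        self.total_seconds[model] += seconds

    def render(self) -> str:
        lines = [
            "# HELP prediction_latency_seconds Time spent serving one prediction.",
            "# TYPE prediction_latency_seconds summary",
        ]
        for model in sorted(self.count):
            label = f'{{model="{model}"}}'  # assumes no quotes in model names
            lines.append(f"prediction_latency_seconds_sum{label} {self.total_seconds[model]}")
            lines.append(f"prediction_latency_seconds_count{label} {self.count[model]}")
        return "\n".join(lines) + "\n"


def timed_predict(metrics: PredictionMetrics, model_name: str, model, x):
    """Run one prediction and record how long it took."""
    start = time.perf_counter()
    y = model(x)
    metrics.observe(model_name, time.perf_counter() - start)
    return y


metrics = PredictionMetrics()
for x in ([1.0, 2.0], [3.0]):
    timed_predict(metrics, "sum-v1", lambda v: str(sum(v)), x)
print(metrics.render())
```

Dividing `_sum` by `_count` gives mean latency per model; a tail percentile would need a histogram or summary quantiles, which this sketch leaves out.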