
Deep Learning for Recommender Systems


1. Deep Learning for Recommender Systems

Nick Pentreath, Principal Engineer, @MLnick

DBG / June 6, 2018 / © 2018 IBM Corporation

2. About

- @MLnick on Twitter & GitHub
- Principal Engineer, IBM CODAIT (Center for Open-Source Data & AI Technologies)
- Machine Learning & AI
- Apache Spark committer & PMC member
- Author of Machine Learning with Spark
- Various conferences & meetups

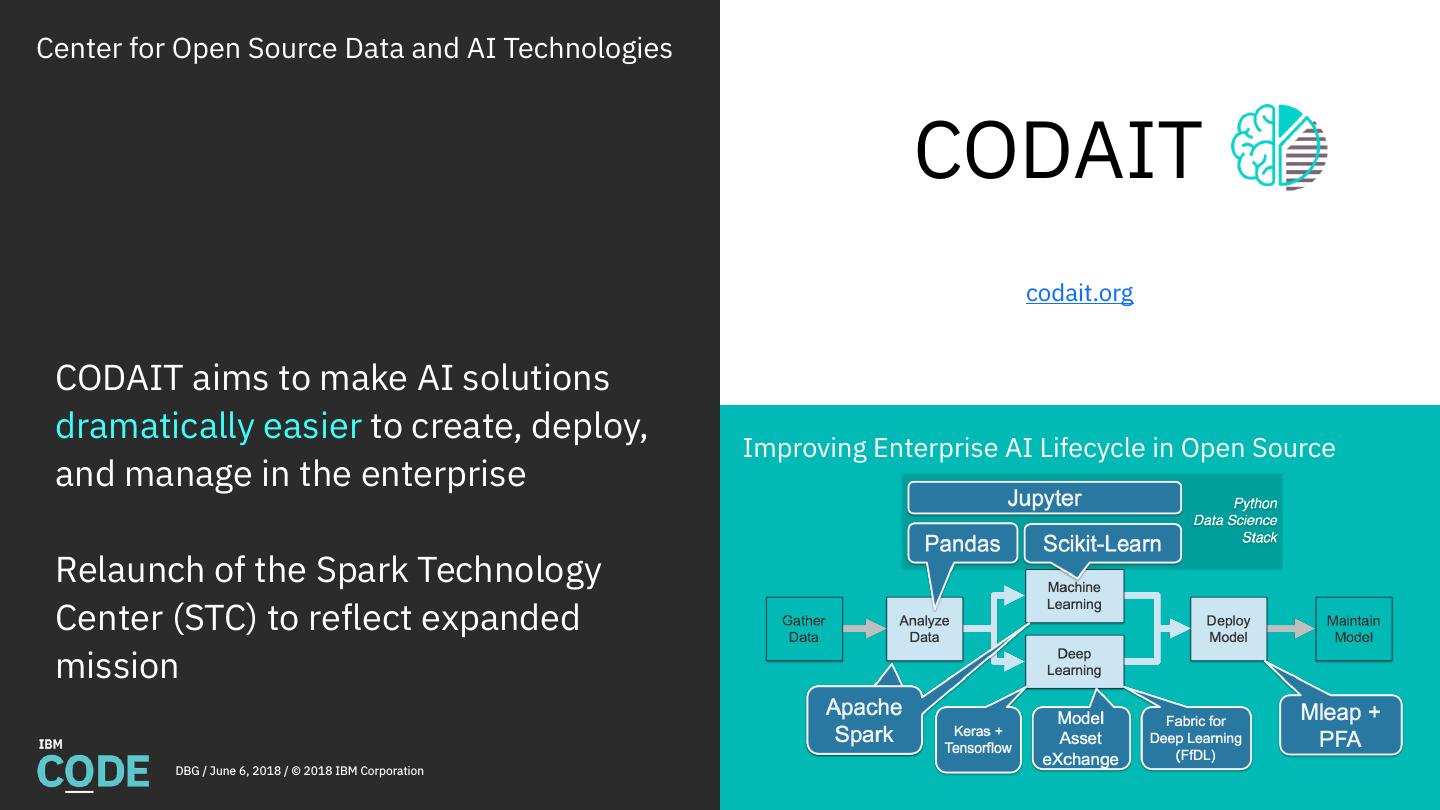

3. Center for Open Source Data and AI Technologies (CODAIT)

codait.org

Improving Enterprise AI Lifecycle in Open Source

- CODAIT aims to make AI solutions dramatically easier to create, deploy, and manage in the enterprise
- Relaunch of the Spark Technology Center (STC) to reflect expanded mission

4. Agenda

- Recommender systems overview
- Deep learning overview
- Deep learning for recommendations
- Challenges and future directions

5. Recommender Systems

6. Recommender Systems: Users and Items

7. Recommender Systems: Events

- Implicit preference data
  - Online: page view, click, app interaction
  - Commerce: cart, purchase, return
  - Media: preview, watch, listen
- Explicit preference data
  - Ratings, reviews
- Intent
  - Search query
- Social
  - Like, share, follow, unfollow, block

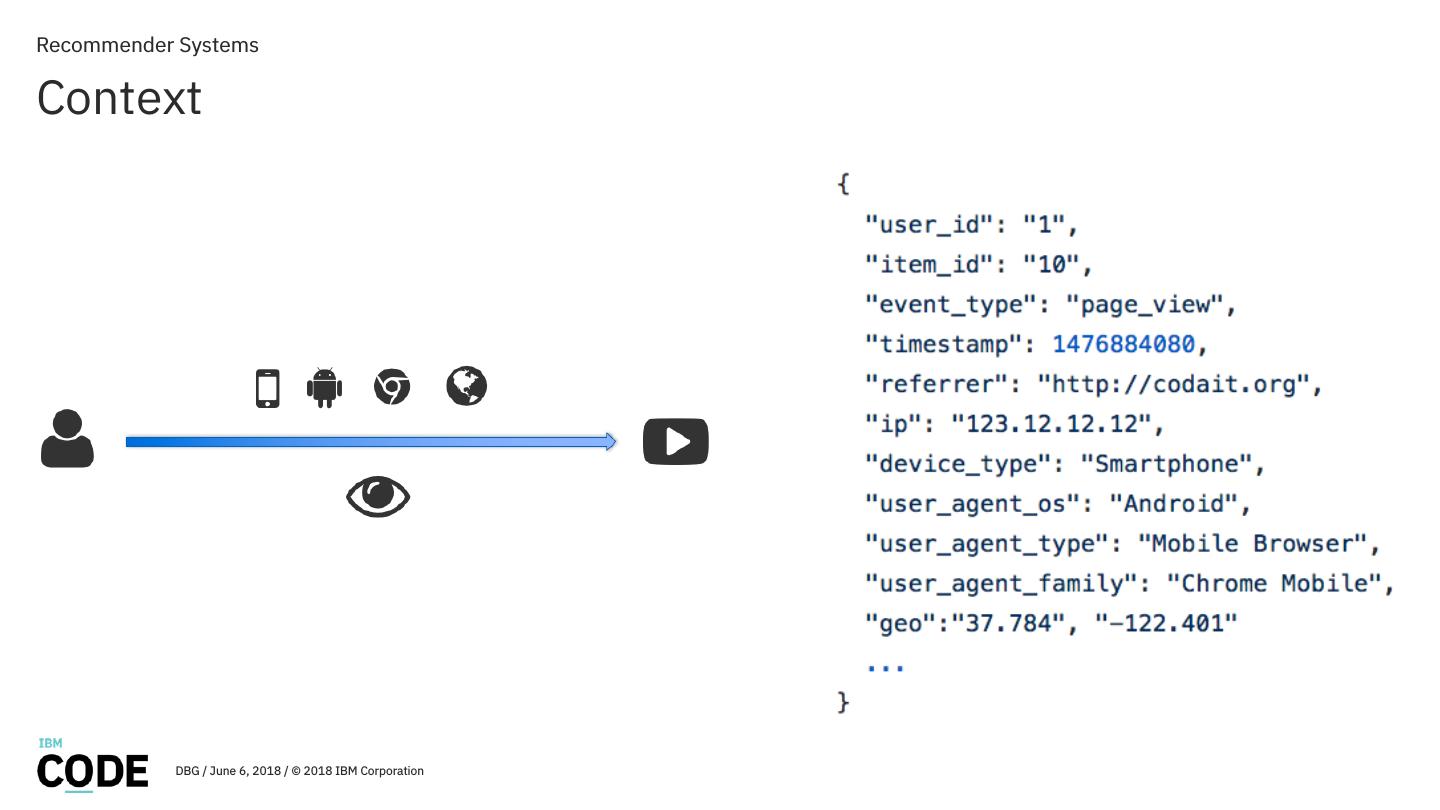

8. Recommender Systems: Context

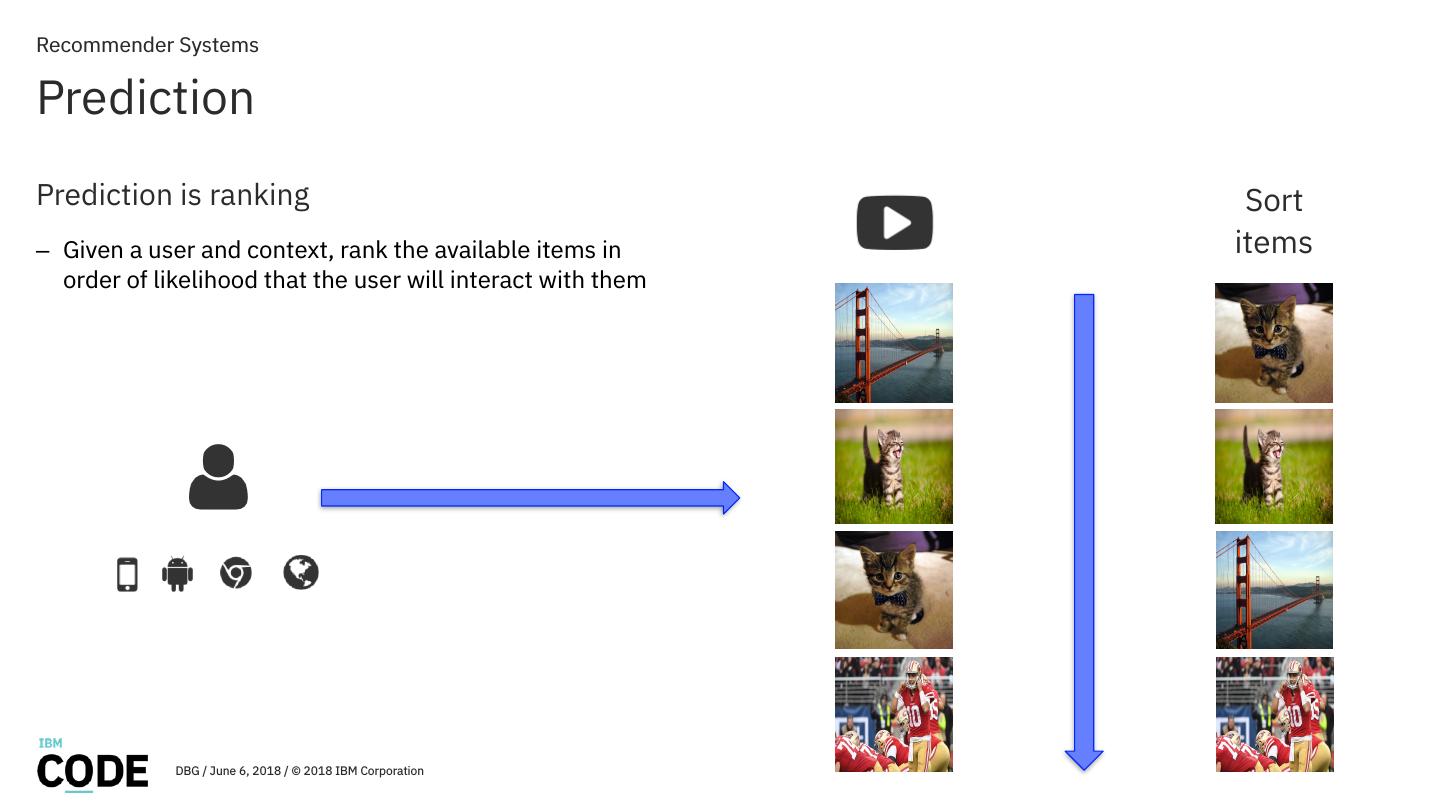

9. Recommender Systems: Prediction

Prediction is ranking:

- Given a user and context, rank (sort) the available items in order of the likelihood that the user will interact with them
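A minimal sketch of "prediction is ranking", assuming each user and item is already represented by a learned vector and that the score is their dot product. The slide does not fix a scoring model, and context is ignored here. `rank_items` is a hypothetical helper: it scores every candidate, drops items the user has already seen, and sorts by score.

```python
from typing import Dict, Iterable, List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def rank_items(
    user_vec: Sequence[float],
    item_vecs: Dict[str, Sequence[float]],
    exclude: Iterable[str] = (),
    k: int = 10,
) -> List[str]:
    """Return up to k item ids, highest score first, skipping excluded items."""
    seen = set(exclude)
    scores = {item: dot(user_vec, vec) for item, vec in item_vecs.items() if item not in seen}
    # Ranking is just a sort of the candidate items by predicted score.
    return sorted(scores, key=scores.get, reverse=True)[:k]


items = {"a": [1.0, 0.0], "b": [0.5, 0.5], "c": [0.0, 1.0], "d": [0.9, 0.1]}
user = [1.0, 0.2]
print(rank_items(user, items, k=4))                 # ['a', 'd', 'b', 'c']
print(rank_items(user, items, exclude={"a"}, k=2))  # ['d', 'b']
```

In practice the candidate set is usually far too large to score exhaustively at serving time, which is one reason the deck later lists model serving as a challenge.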

10. Recommender Systems: Cold Start

- New items
  - No historical interaction data
  - Typically use baselines or item content
- New (or unknown) users
  - Previously unseen or anonymous users have no user profile or historical interactions
  - Have context data (but possibly very limited)
  - Cannot directly use collaborative filtering models
    - Item-similarity for current item
    - Represent user as aggregation of items
    - Contextual models can incorporate short-term history

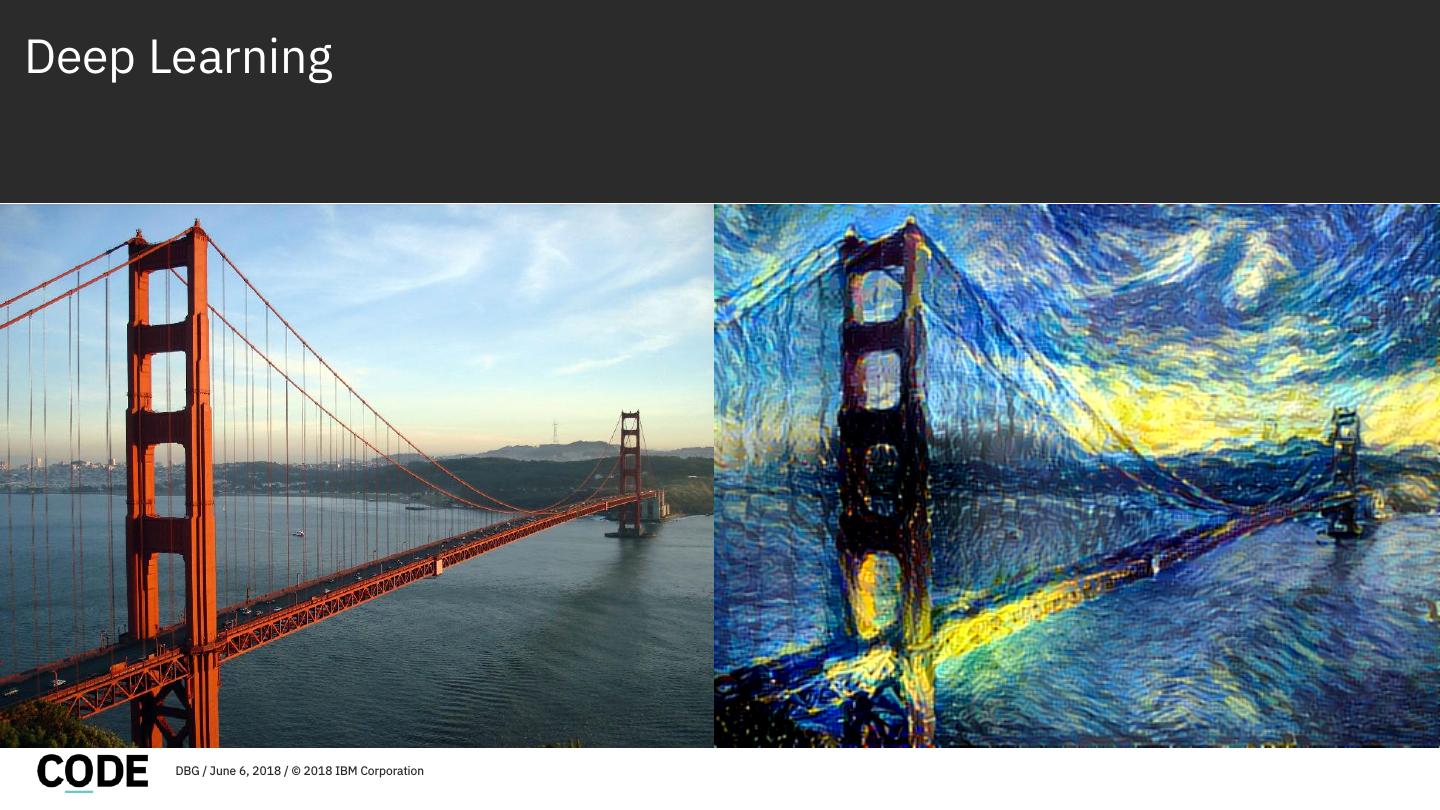
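The item-similarity and "user as aggregation of items" ideas can be sketched under a few assumptions. Each item already has a vector, for example item factors from a collaborative filtering model or a content embedding. An anonymous user is represented by the mean of the vectors of the items in the current session, and candidates are ranked by cosine similarity to that profile. With a single-item session this reduces to item-to-item similarity for the current item. All names here are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def mean_vector(vectors: List[Sequence[float]]) -> List[float]:
    """Element-wise mean of a non-empty list of equal-length vectors."""
    if not vectors:
        raise ValueError("need at least one vector")
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector has zero length."""
    num = sum(a * b for a, b in zip(u, v))
    den = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return num / den if den else 0.0


def recommend_for_session(
    session_items: List[str], item_vecs: Dict[str, Sequence[float]], k: int = 5
) -> List[str]:
    """Rank unseen items by similarity to the averaged session profile."""
    profile = mean_vector([item_vecs[i] for i in session_items])
    scores = {
        item: cosine(profile, vec)
        for item, vec in item_vecs.items()
        if item not in session_items
    }
    return sorted(scores, key=scores.get, reverse=True)[:k]


item_vecs = {"shoe": [1.0, 0.0], "sock": [0.9, 0.1], "boot": [0.8, 0.3], "hat": [0.0, 1.0]}
print(recommend_for_session(["shoe"], item_vecs, k=2))         # ['sock', 'boot']
print(recommend_for_session(["shoe", "hat"], item_vecs, k=2))  # ['boot', 'sock']
```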

11. Deep Learning

12. Deep Learning: Overview

Source: Wikipedia

- Original theory from the 1940s; computer models originated around the 1960s; fell out of favor in the 1980s/90s
- Recent resurgence due to:
  - Bigger (and better) data; standard datasets (e.g. ImageNet)
  - Better hardware (GPUs)
  - Improvements to algorithms, architectures and optimization
- Leading to new state-of-the-art results in computer vision (images and video), speech/text, language translation and more

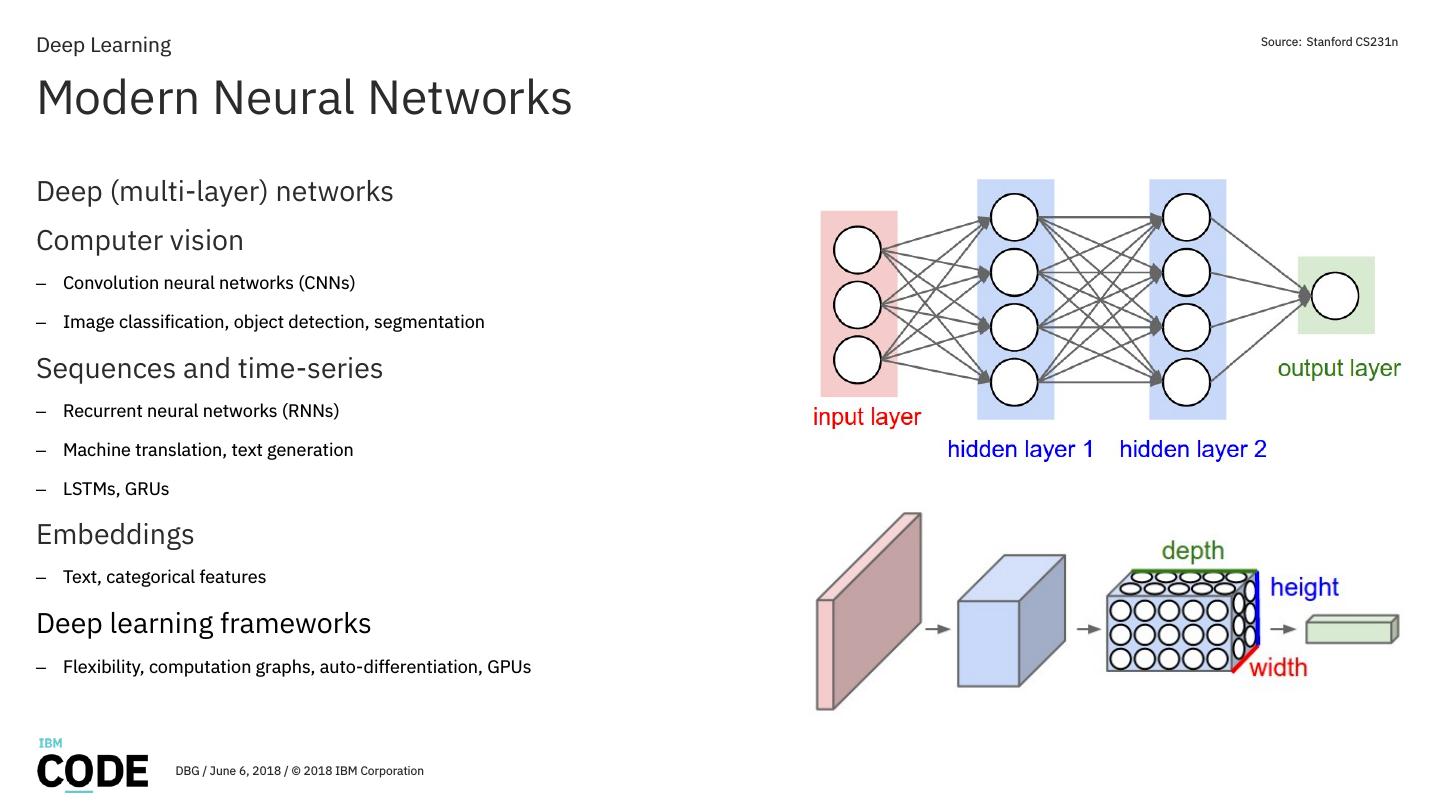

13. Deep Learning: Modern Neural Networks

Source: Stanford CS231n

- Deep (multi-layer) networks
- Computer vision
  - Convolutional neural networks (CNNs)
  - Image classification, object detection, segmentation
- Sequences and time-series
  - Recurrent neural networks (RNNs)
  - Machine translation, text generation
  - LSTMs, GRUs
- Embeddings
  - Text, categorical features
- Deep learning frameworks
  - Flexibility, computation graphs, auto-differentiation, GPUs

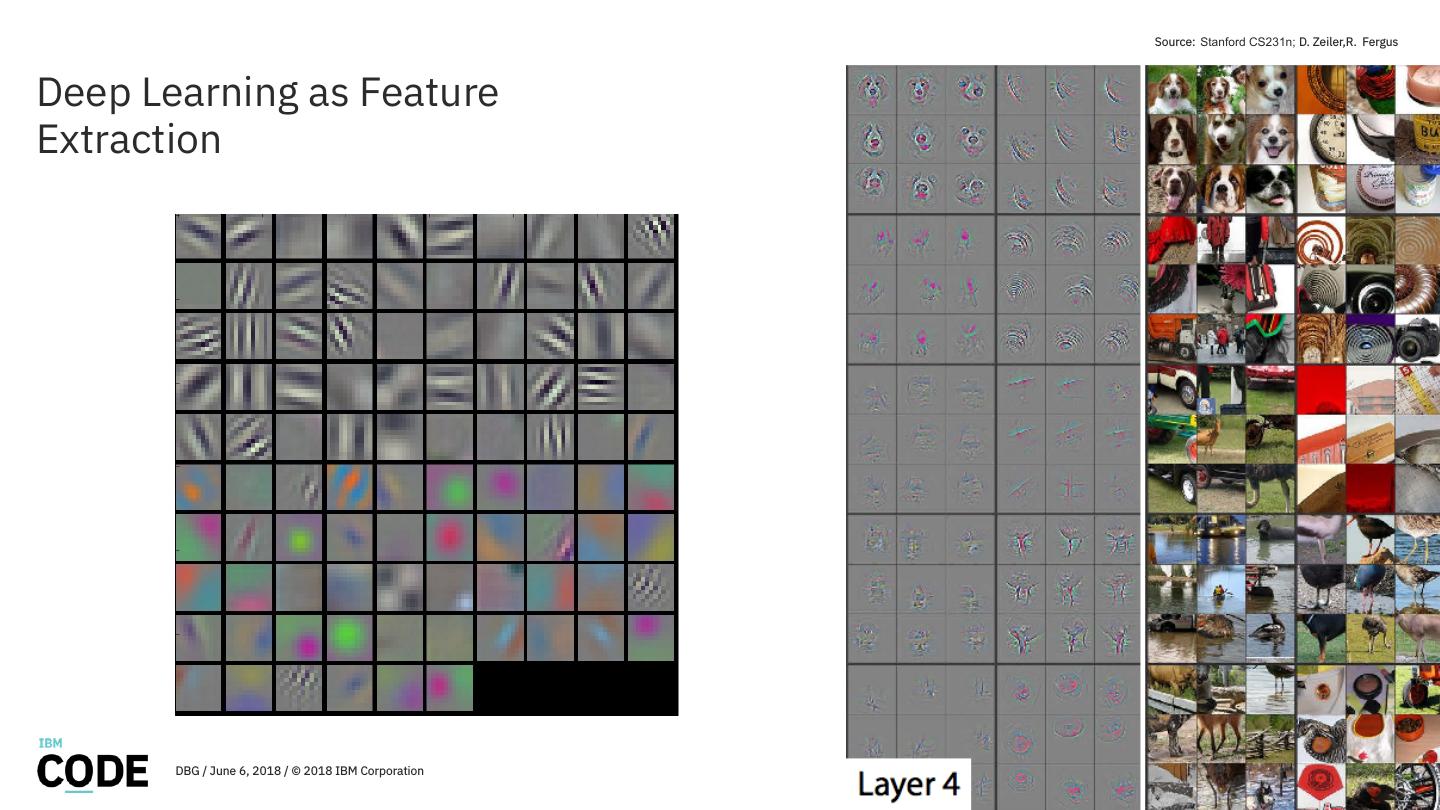

14. Deep Learning as Feature Extraction

Source: Stanford CS231n; D. Zeiler, R. Fergus

15. Deep Learning for Recommendations

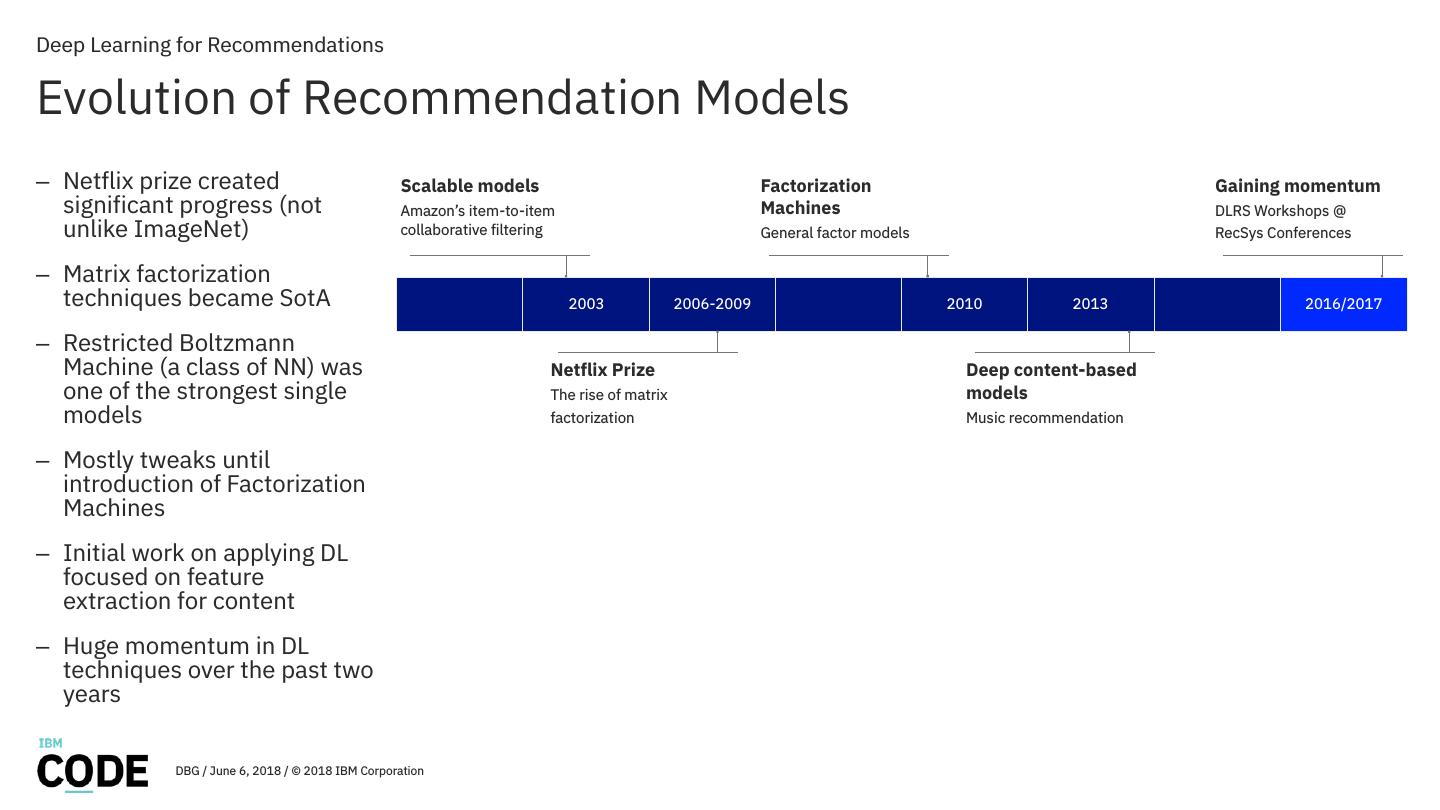

16. Deep Learning for Recommendations: Evolution of Recommendation Models

- 2003: Amazon's item-to-item collaborative filtering (scalable models)
- 2006-2009: Netflix Prize (the rise of matrix factorization)
  - The Netflix Prize created significant progress (not unlike ImageNet)
  - Matrix factorization techniques became SotA
  - Restricted Boltzmann Machine (a class of NN) was one of the strongest single models
- 2010: Factorization Machines (general factor models)
  - Mostly tweaks until the introduction of Factorization Machines
- 2013: Deep content-based music recommendation
  - Initial work on applying DL focused on feature extraction for content
- 2016/2017: DLRS Workshops @ RecSys Conferences (gaining momentum)
  - Huge momentum in DL techniques over the past two years

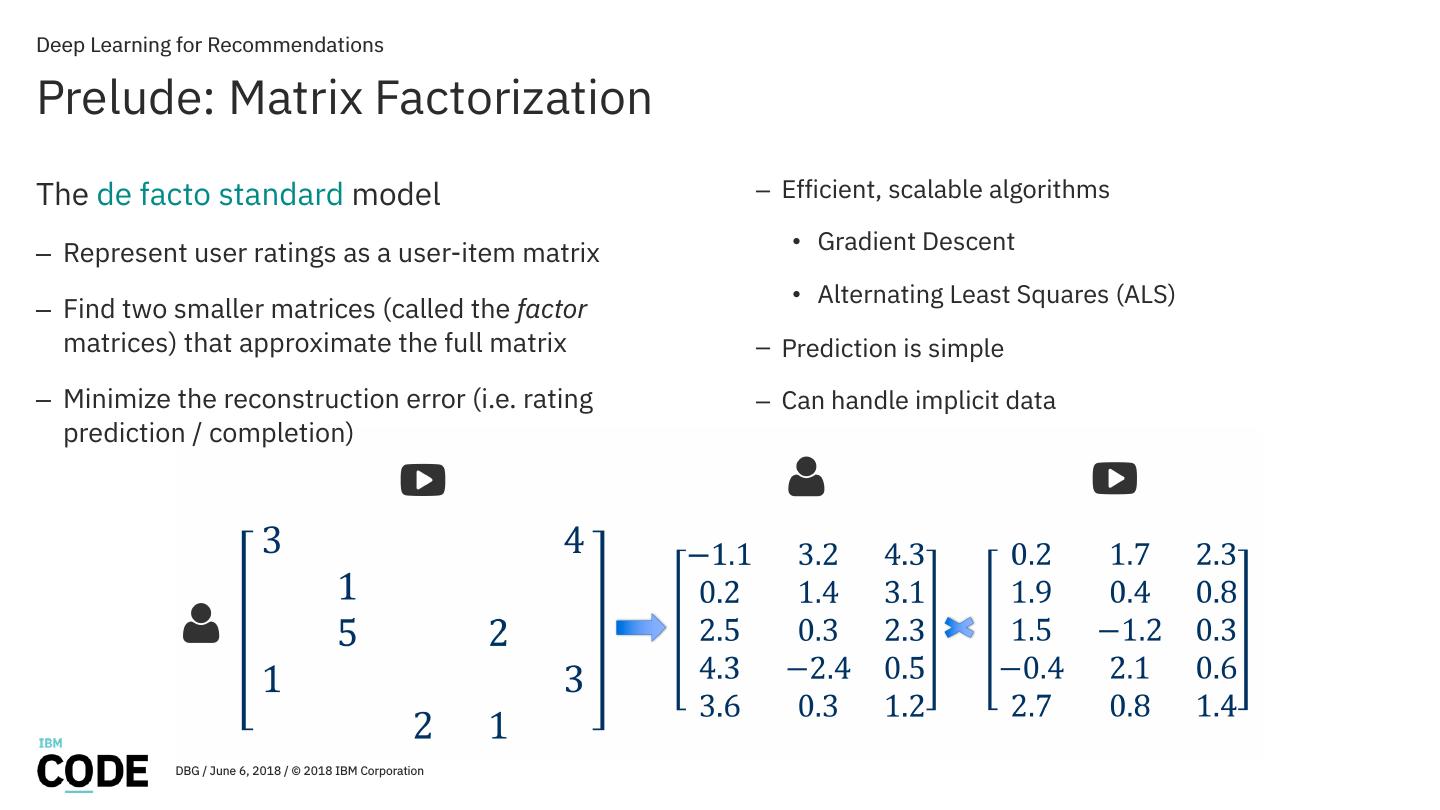

17. Deep Learning for Recommendations: Matrix Factorization (Prelude)

The de facto standard model:

- Represent user ratings as a user-item matrix
- Find two smaller matrices (called the factor matrices) that approximate the full matrix
- Minimize the reconstruction error (i.e. rating prediction / completion)
- Efficient, scalable algorithms
  - Gradient Descent
  - Alternating Least Squares (ALS)
- Prediction is simple
- Can handle implicit data

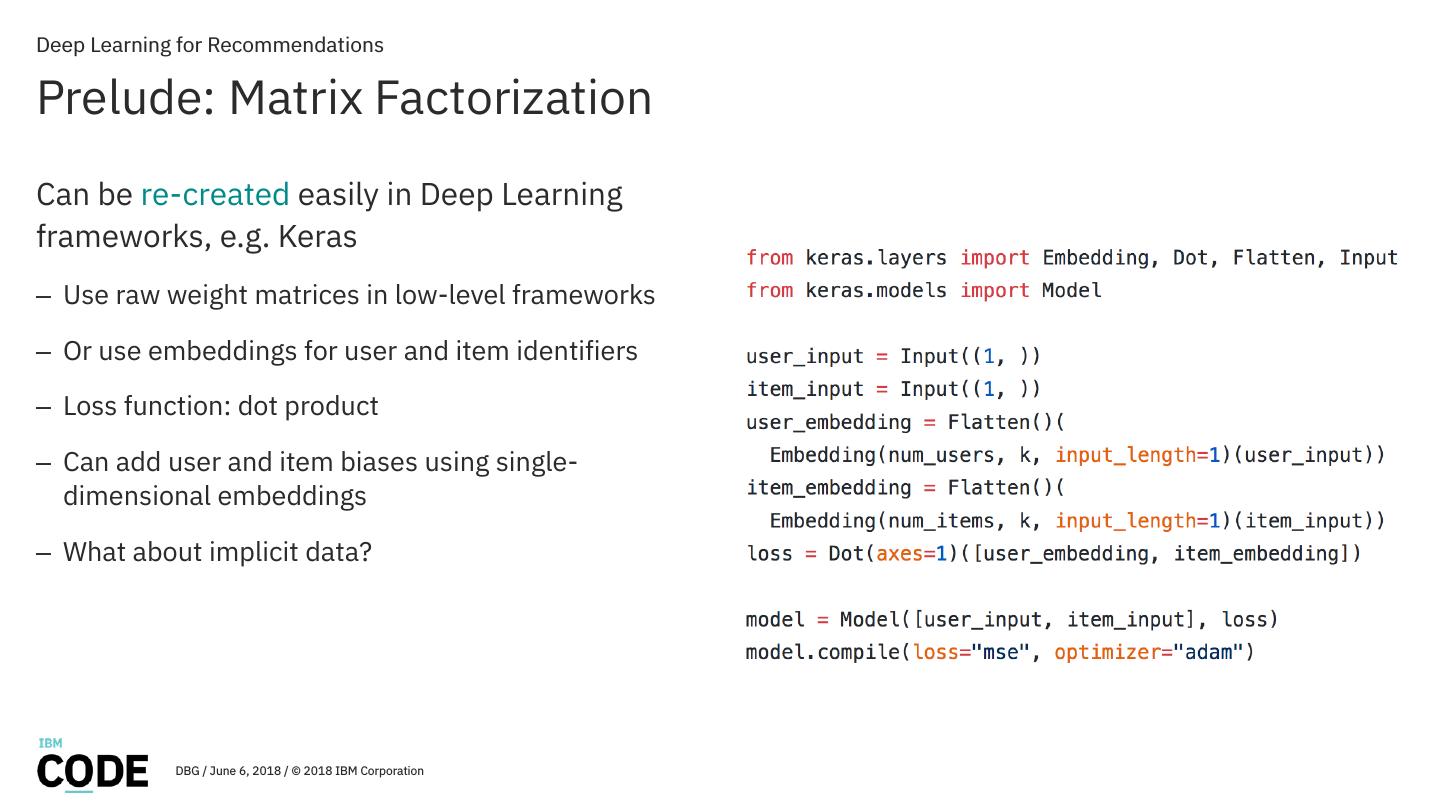
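Here is a minimal sketch of matrix factorization trained with stochastic gradient descent, one of the two algorithms listed (ALS is not shown). It minimizes the squared reconstruction error over observed ratings plus L2 regularization, $\sum_{(u,i)} (r_{ui} - p_u^\top q_i)^2 + \lambda(\lVert p_u\rVert^2 + \lVert q_i\rVert^2)$, and predicts with the dot product $p_u^\top q_i$. The toy ratings and hyperparameters (k, learning rate, λ, epochs) are arbitrary choices for illustration.

```python
import math
import random
from typing import Sequence, Tuple

Rating = Tuple[int, int, float]  # (user index, item index, rating)


def train_mf(ratings: Sequence[Rating], n_users: int, n_items: int, k: int = 2,
             lr: float = 0.02, reg: float = 0.01, epochs: int = 2000, seed: int = 0):
    """Learn user factors P (n_users x k) and item factors Q (n_items x k) by SGD."""
    rng = random.Random(seed)
    P = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_items)]
    data = list(ratings)  # copy so shuffling does not touch the caller's list
    for _ in range(epochs):
        rng.shuffle(data)
        for u, i, r in data:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on the squared error plus the L2 penalty.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


def predict(P, Q, u: int, i: int) -> float:
    """Predicted rating: dot product of the user and item factor vectors."""
    return sum(a * b for a, b in zip(P[u], Q[i]))


def rmse(P, Q, ratings: Sequence[Rating]) -> float:
    """Root mean squared error over the given ratings."""
    return math.sqrt(sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings) / len(ratings))


ratings = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0), (1, 2, 1.0), (2, 1, 1.0), (2, 2, 5.0)]
P, Q = train_mf(ratings, n_users=3, n_items=3)
print(round(rmse(P, Q, ratings), 3))  # training error after fitting
print(round(predict(P, Q, 0, 2), 2))  # completes an entry that was never observed
```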

18. Deep Learning for Recommendations: Matrix Factorization (Prelude, continued)

Matrix factorization can be re-created easily in deep learning frameworks, e.g. Keras:

- Use raw weight matrices in low-level frameworks
- Or use embeddings for user and item identifiers
- Scoring function: dot product of the user and item embeddings (trained by minimizing a loss such as squared error)
- Can add user and item biases using single-dimensional embeddings
- What about implicit data?

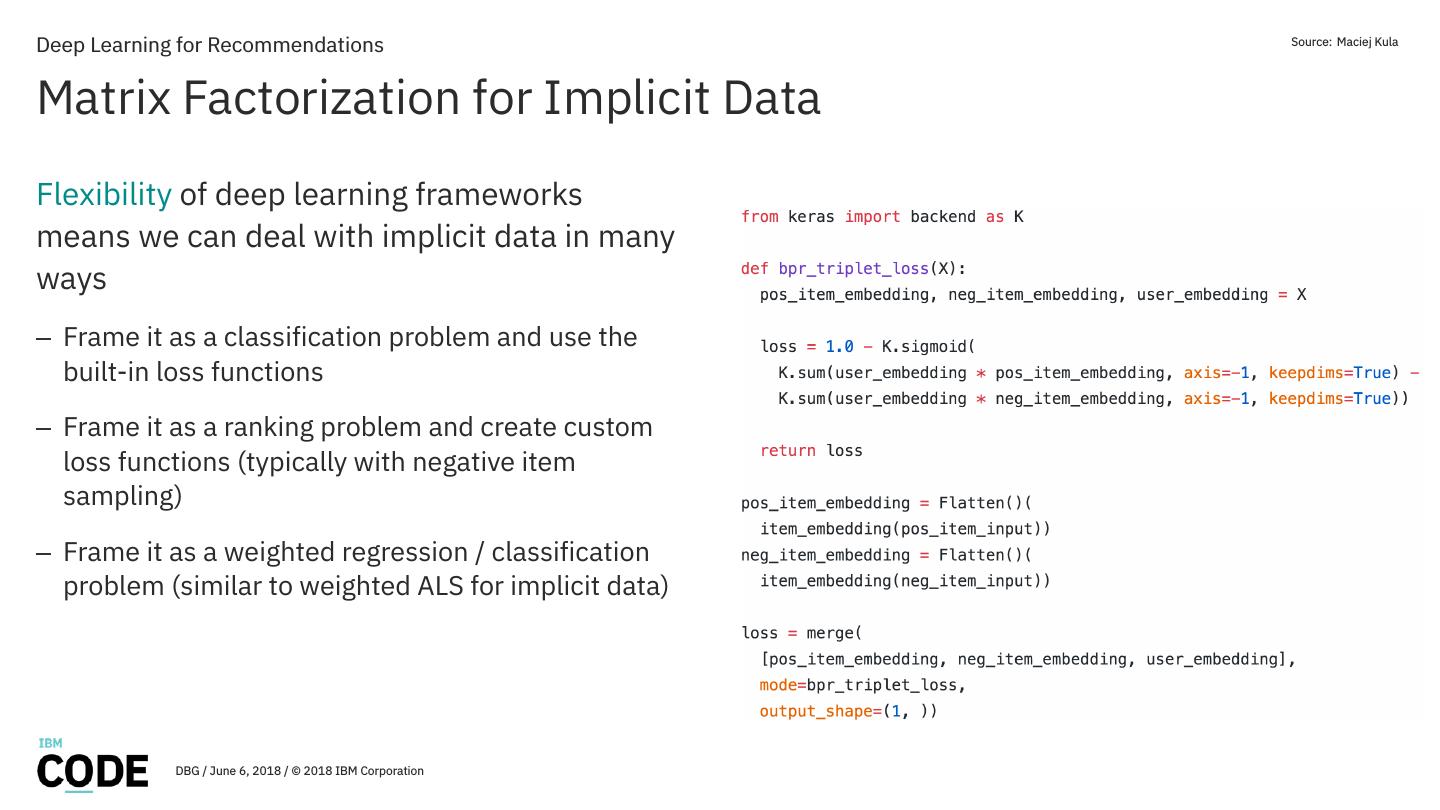
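The following pure-Python sketch mirrors how such a model is usually assembled from embedding layers in a framework such as Keras, without depending on one. It uses one embedding table per identifier type, plus one-dimensional embedding tables for the user and item biases. It assumes explicit ratings and a global mean offset μ, so the prediction is μ + b_u + b_i + p_u·q_i. The dot product is the scoring function, while training minimizes a separate loss (mean squared error here). `Embedding` and `BiasedMF` are hypothetical stand-ins, not a framework API, and the gradient updates are omitted.

```python
import random
from typing import List, Optional, Sequence, Tuple


class Embedding:
    """Lookup table mapping an integer id to a trainable vector (stand-in for an embedding layer)."""

    def __init__(self, num_ids: int, dim: int, rng: Optional[random.Random] = None, scale: float = 0.1):
        # Random normal init when an RNG is given, zeros otherwise (used for the biases).
        self.weights = [
            [rng.gauss(0.0, scale) if rng is not None else 0.0 for _ in range(dim)]
            for _ in range(num_ids)
        ]

    def __call__(self, idx: int) -> List[float]:
        return self.weights[idx]


class BiasedMF:
    def __init__(self, n_users: int, n_items: int, k: int, global_mean: float, seed: int = 0):
        rng = random.Random(seed)
        self.user_emb = Embedding(n_users, k, rng)
        self.item_emb = Embedding(n_items, k, rng)
        self.user_bias = Embedding(n_users, 1)  # single-dimensional embedding
        self.item_bias = Embedding(n_items, 1)
        self.mu = global_mean

    def predict(self, u: int, i: int) -> float:
        """Global mean + user bias + item bias + dot product of the embeddings."""
        interaction = sum(a * b for a, b in zip(self.user_emb(u), self.item_emb(i)))
        return self.mu + self.user_bias(u)[0] + self.item_bias(i)[0] + interaction


def mse_loss(model: BiasedMF, batch: Sequence[Tuple[int, int, float]]) -> float:
    """Mean squared error of the model's predictions on (user, item, rating) triples."""
    return sum((r - model.predict(u, i)) ** 2 for u, i, r in batch) / len(batch)


model = BiasedMF(n_users=2, n_items=2, k=3, global_mean=3.5)
model.user_emb.weights[0] = [1.0, 0.0, 0.0]
model.item_emb.weights[1] = [2.0, 0.0, 0.0]
model.user_bias.weights[0][0] = 0.5
model.item_bias.weights[1][0] = -1.0
print(model.predict(0, 1))             # 3.5 + 0.5 - 1.0 + 2.0 = 5.0
print(mse_loss(model, [(0, 1, 4.0)]))  # (4.0 - 5.0) ** 2 = 1.0
```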

19. Deep Learning for Recommendations: Matrix Factorization for Implicit Data

Source: Maciej Kula

The flexibility of deep learning frameworks means we can deal with implicit data in many ways:

- Frame it as a classification problem and use the built-in loss functions
- Frame it as a ranking problem and create custom loss functions (typically with negative item sampling)
- Frame it as a weighted regression / classification problem (similar to weighted ALS for implicit data)

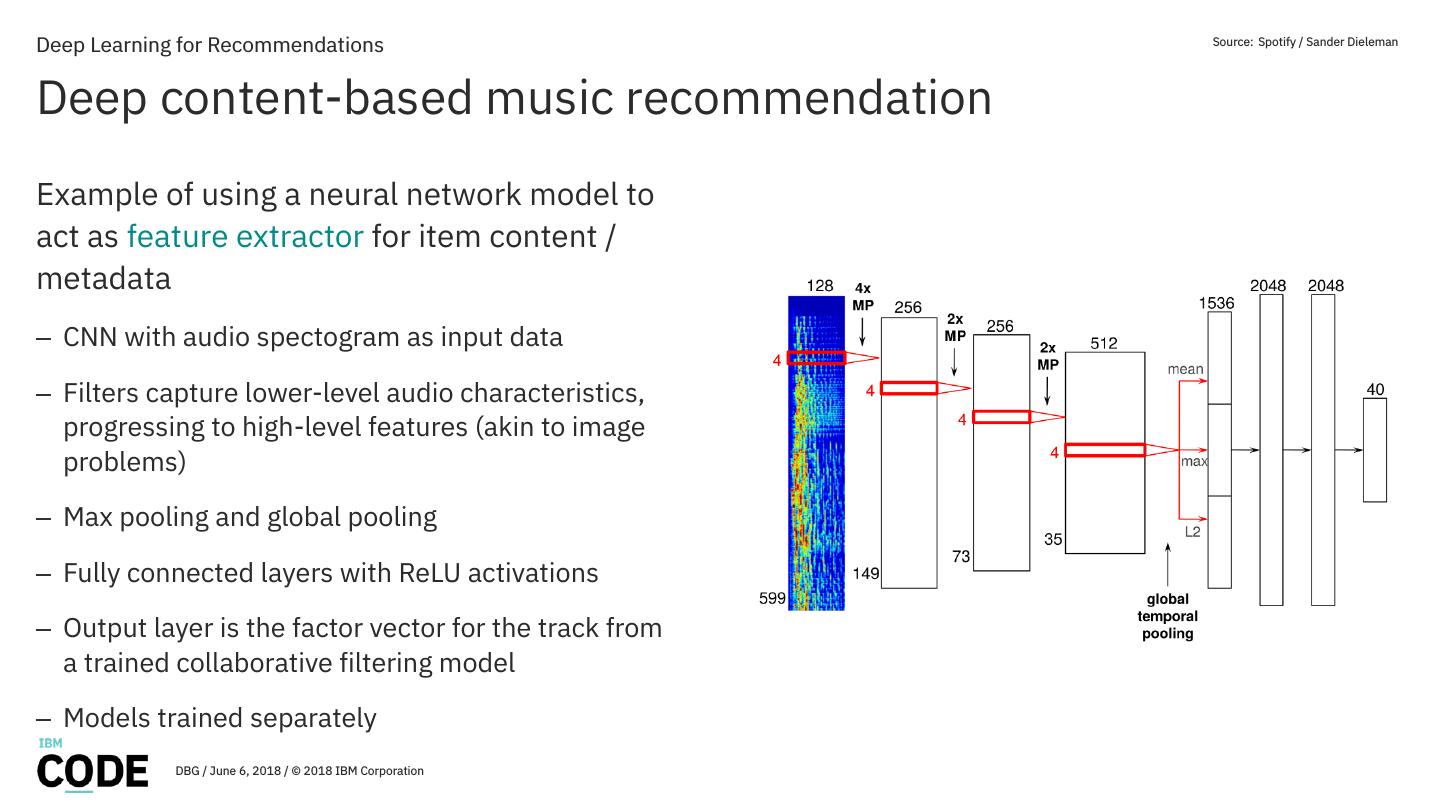
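The ranking framing can be sketched with Bayesian Personalized Ranking (BPR) loss and uniform negative item sampling, trained by SGD on plain user and item factors. For each observed (user, item) pair it samples an item the user has not interacted with. It then pushes the observed item's score above the sampled one by maximizing log σ(x_ui - x_uj). The choice of BPR as the ranking loss, the uniform sampler, and all hyperparameters are assumptions for illustration.

```python
import math
import random
from typing import Dict, Sequence, Set


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def train_bpr(interactions: Dict[int, Set[int]], n_items: int, k: int = 4,
              lr: float = 0.1, reg: float = 0.01, epochs: int = 500, seed: int = 0):
    """Learn user factors P and item factors Q from positive-only interactions."""
    for u, items in interactions.items():
        if len(items) >= n_items:
            raise ValueError(f"user {u} has no unobserved items to sample as negatives")
    rng = random.Random(seed)
    P = {u: [rng.gauss(0.0, 0.1) for _ in range(k)] for u in sorted(interactions)}
    Q = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_items)]
    pairs = [(u, i) for u in sorted(interactions) for i in sorted(interactions[u])]
    for _ in range(epochs):
        rng.shuffle(pairs)
        for u, i in pairs:
            j = rng.randrange(n_items)  # negative sampling: draw an unobserved item
            while j in interactions[u]:
                j = rng.randrange(n_items)
            x_uij = dot(P[u], Q[i]) - dot(P[u], Q[j])
            g = sigmoid(-x_uij)  # derivative of log(sigmoid(x)) at x_uij
            for f in range(k):
                pu, qi, qj = P[u][f], Q[i][f], Q[j][f]
                # Gradient ascent on log(sigmoid(x_uij)) with L2 regularization.
                P[u][f] += lr * (g * (qi - qj) - reg * pu)
                Q[i][f] += lr * (g * pu - reg * qi)
                Q[j][f] += lr * (-g * pu - reg * qj)
    return P, Q


interactions = {0: {0, 1}, 1: {0, 1}, 2: {2, 3}, 3: {2, 3}}
P, Q = train_bpr(interactions, n_items=4)
print([round(dot(P[0], q), 2) for q in Q])  # items 0 and 1 should score highest for user 0
```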

20. Deep Learning for Recommendations: Deep Content-Based Music Recommendation

Source: Spotify / Sander Dieleman

An example of using a neural network model as a feature extractor for item content / metadata:

- CNN with the audio spectrogram as input data
- Filters capture lower-level audio characteristics, progressing to high-level features (akin to image problems)
- Max pooling and global pooling
- Fully connected layers with ReLU activations
- The output layer is the factor vector for the track from a trained collaborative filtering model
- Models trained separately

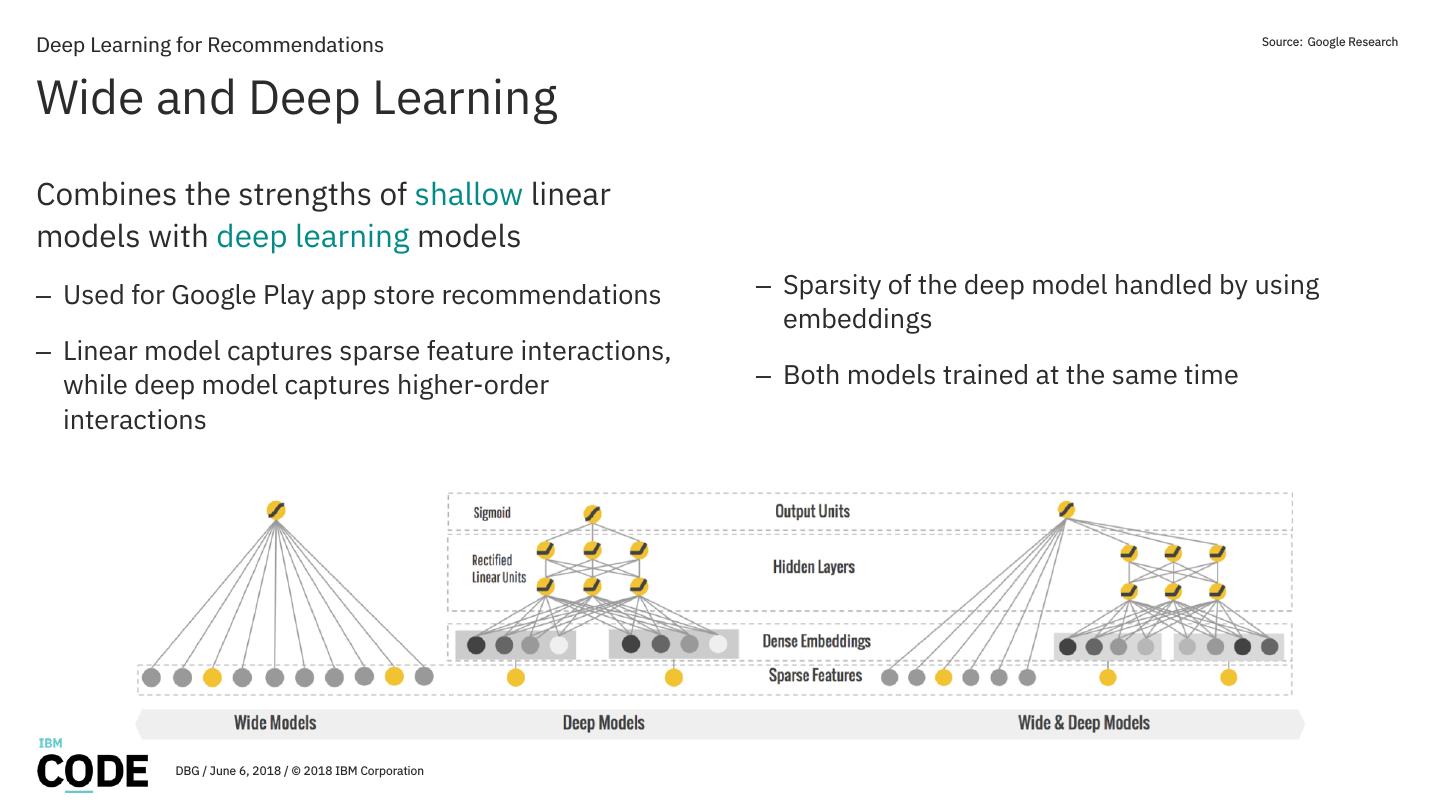

21. Deep Learning for Recommendations: Wide and Deep Learning

Source: Google Research

Combines the strengths of shallow linear models with deep learning models:

- Used for Google Play app store recommendations
- The linear (wide) model captures sparse feature interactions, while the deep model captures higher-order interactions
- Sparsity in the deep model's inputs is handled by using embeddings
- Both models are trained at the same time (jointly)

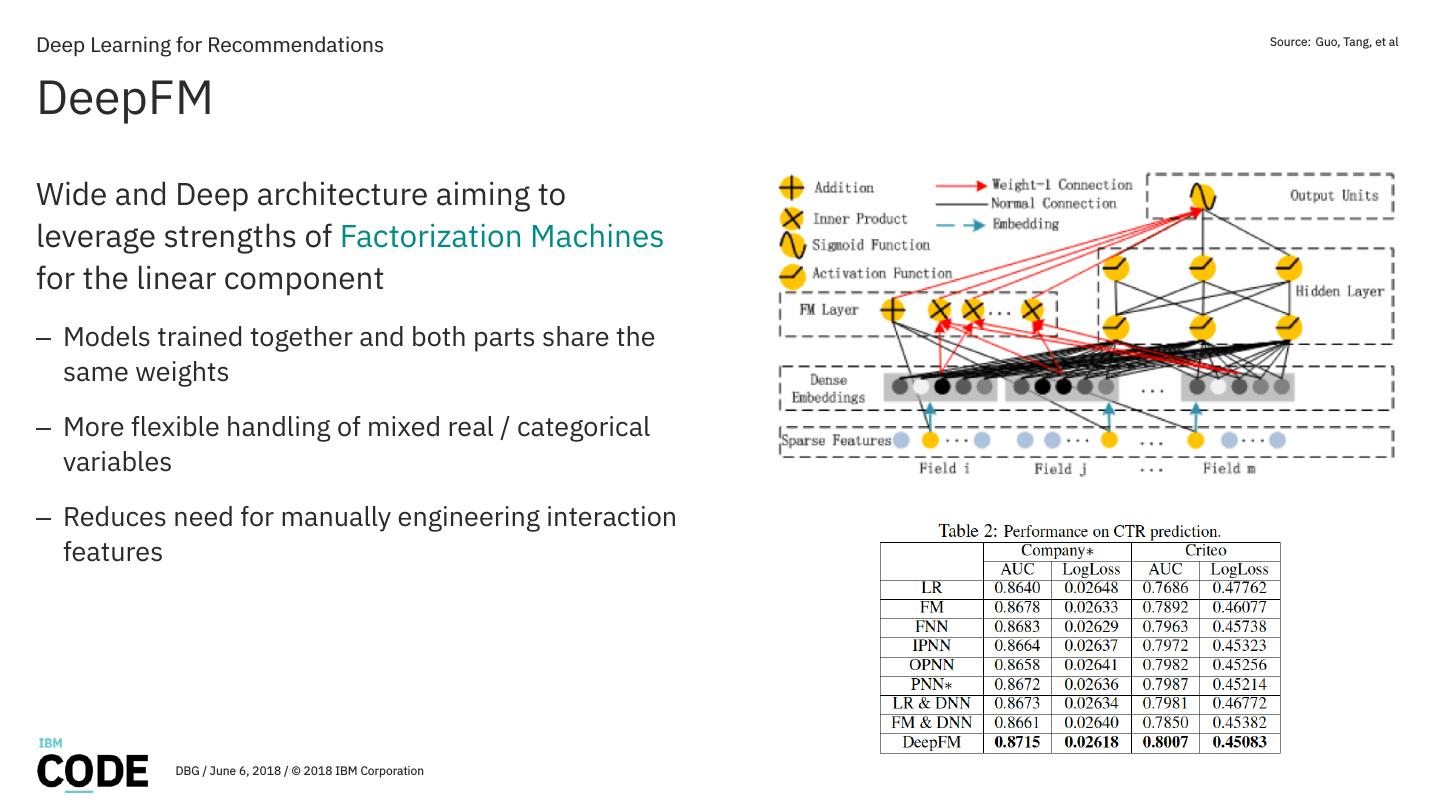
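A forward-pass sketch of a Wide and Deep model with hand-set weights. The feature names, crosses and all weights are hypothetical, and training is omitted. The wide part is a linear model over sparse one-hot features plus crossed (cross-product) features. The deep part looks up a dense embedding per categorical feature, concatenates them and applies a ReLU hidden layer. Both logits are summed into a single output unit, which is what lets one loss train the two parts jointly.

```python
import math
from typing import Dict, List, Sequence, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def wide_feature_ids(features: Dict[str, str], crosses: Sequence[Tuple[str, str]]) -> List[str]:
    """Sparse wide-part inputs: raw categorical values plus cross-product features."""
    ids = [f"{name}={value}" for name, value in sorted(features.items())]
    ids += [f"{a}={features[a]}&{b}={features[b]}" for a, b in crosses]
    return ids


def wide_and_deep(features, crosses, wide_w, emb_tables, W1, b1, w2, bias) -> float:
    """Predicted probability: sigmoid(wide logit + deep logit + bias)."""
    # Wide part: linear weights over sparse features; unseen ids contribute 0.
    wide_logit = sum(wide_w.get(fid, 0.0) for fid in wide_feature_ids(features, crosses))
    # Deep part: concatenate one dense embedding per categorical feature.
    x = [v for name in sorted(emb_tables) for v in emb_tables[name][features[name]]]
    # One fully connected hidden layer with ReLU activation.
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    deep_logit = sum(w * h for w, h in zip(w2, hidden))
    # Both parts feed a single output unit.
    return sigmoid(wide_logit + deep_logit + bias)


features = {"app": "maps", "country": "uk"}
p = wide_and_deep(
    features,
    crosses=[("app", "country")],
    wide_w={"app=maps": 0.5, "app=maps&country=uk": 1.0},
    emb_tables={"app": {"maps": [1.0, -1.0]}, "country": {"uk": [0.5, 0.5]}},
    W1=[[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]],
    b1=[0.0, 0.0],
    w2=[0.5, 2.0],
    bias=-2.0,
)
print(p)  # wide 1.5 + deep 0.5 - 2.0 = logit 0.0, so p = 0.5
```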

22. Deep Learning for Recommendations: DeepFM

Source: Guo, Tang, et al.

A Wide and Deep architecture that leverages the strengths of Factorization Machines for the wide (linear) component:

- Models trained together, and both parts share the same input (feature embedding) weights
- More flexible handling of mixed real / categorical variables
- Reduces the need for manually engineering interaction features

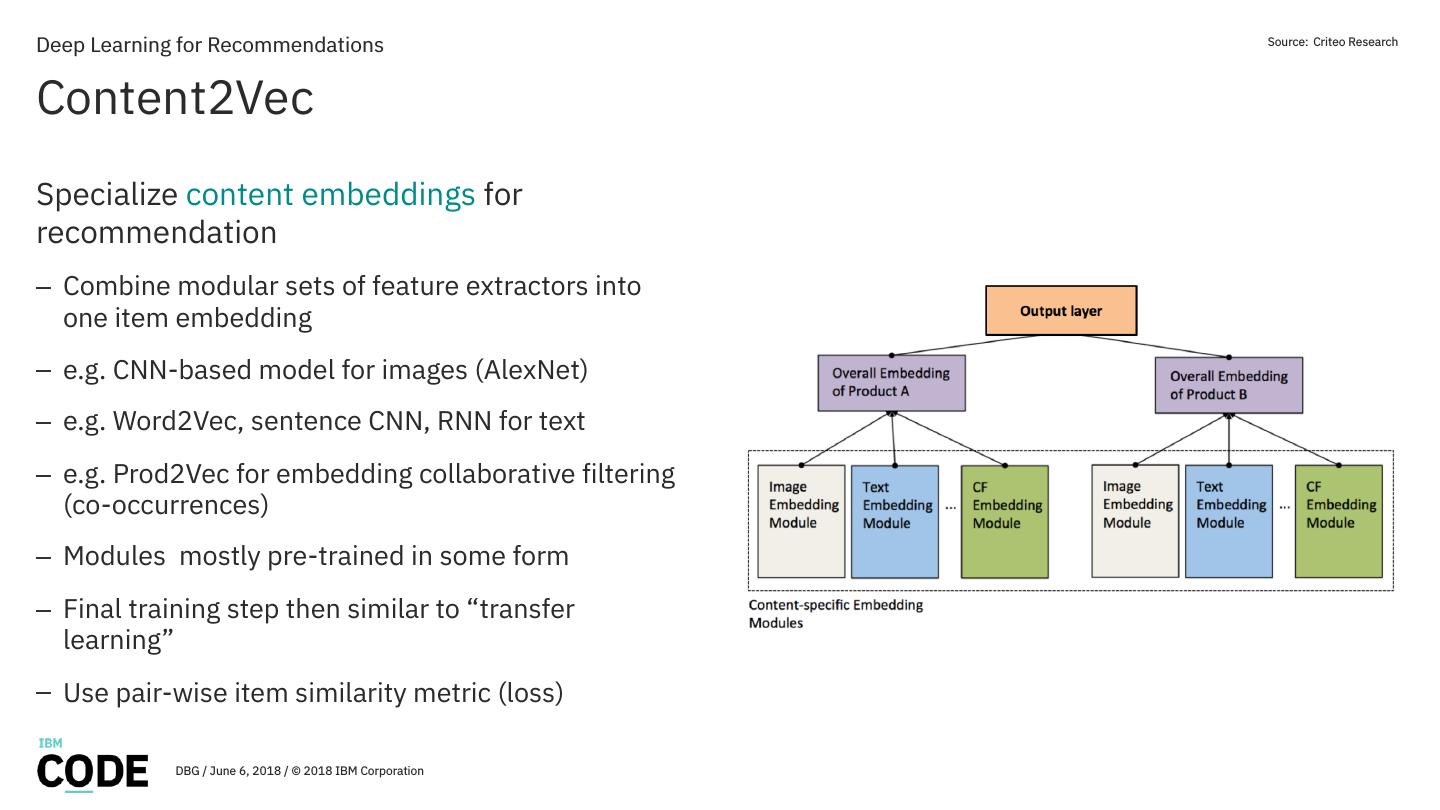
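The Factorization Machine component scores a feature vector $x$ with a global bias, linear weights, and pairwise interactions whose weights are dot products of per-feature latent vectors $v_i$:

$$\hat{y} = w_0 + \sum_i w_i x_i + \sum_{i<j} \langle v_i, v_j \rangle x_i x_j$$

The pairwise sum can be computed in $O(kn)$ rather than $O(kn^2)$ using

$$\sum_{i<j} \langle v_i, v_j \rangle x_i x_j = \frac{1}{2} \sum_{f=1}^{k} \Big[ \big(\textstyle\sum_i v_{if} x_i\big)^2 - \sum_i v_{if}^2 x_i^2 \Big]$$

The sketch below checks that identity against the naive double loop. In DeepFM the same latent vectors also serve as the embeddings fed to the deep component, which is not shown; the values are illustrative.

```python
from itertools import combinations
from typing import Sequence


def pairwise_naive(x: Sequence[float], V: Sequence[Sequence[float]]) -> float:
    """Sum over i < j of <v_i, v_j> * x_i * x_j, computed directly (O(k n^2))."""
    return sum(
        sum(a * b for a, b in zip(V[i], V[j])) * x[i] * x[j]
        for i, j in combinations(range(len(x)), 2)
    )


def pairwise_fast(x: Sequence[float], V: Sequence[Sequence[float]]) -> float:
    """Same quantity via 0.5 * sum_f [(sum_i v_if x_i)^2 - sum_i (v_if x_i)^2] (O(k n))."""
    total = 0.0
    for f in range(len(V[0])):
        terms = [V[i][f] * x[i] for i in range(len(x))]
        total += sum(terms) ** 2 - sum(t * t for t in terms)
    return 0.5 * total


def fm_score(x: Sequence[float], w0: float, w: Sequence[float], V: Sequence[Sequence[float]]) -> float:
    """Factorization Machine output: bias + linear terms + pairwise interactions."""
    return w0 + sum(wi * xi for wi, xi in zip(w, x)) + pairwise_fast(x, V)


V2 = [[1.0, 2.0], [3.0, 4.0]]
print(pairwise_naive([1.0, 1.0], V2))             # 1*3 + 2*4 = 11.0
print(pairwise_fast([1.0, 1.0], V2))              # 0.5 * ((16 - 10) + (36 - 20)) = 11.0
print(fm_score([1.0, 1.0], 0.5, [1.0, -1.0], V2))  # 0.5 + 0.0 + 11.0 = 11.5
```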

23. Deep Learning for Recommendations: Content2Vec

Source: Criteo Research

Specialize content embeddings for recommendation:

- Combine modular sets of feature extractors into one item embedding
  - e.g. CNN-based model for images (AlexNet)
  - e.g. Word2Vec, sentence CNN, RNN for text
  - e.g. Prod2Vec for embedding collaborative filtering (co-occurrences)
- Modules mostly pre-trained in some form
  - Final training step then similar to "transfer learning"
- Use pair-wise item similarity metric (loss)

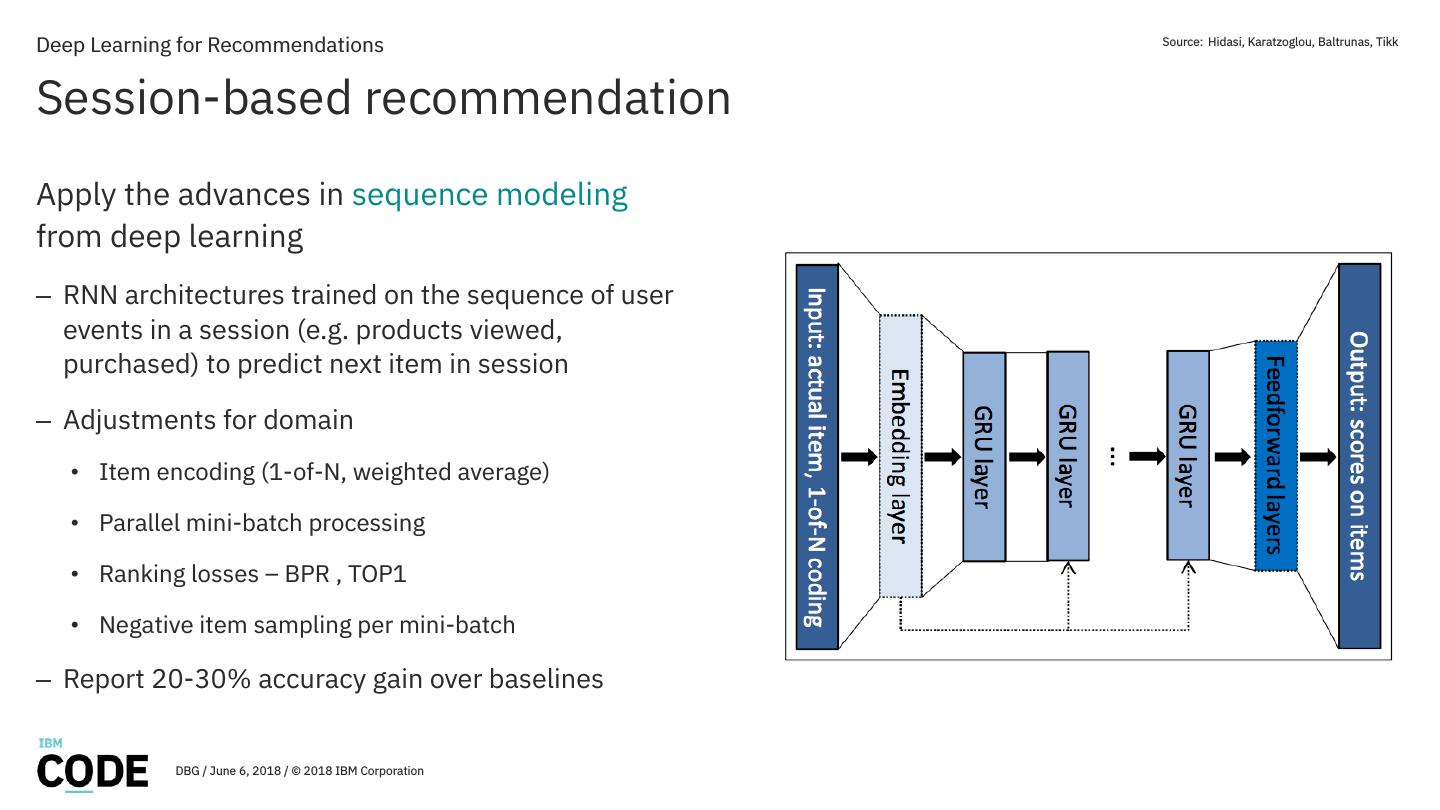
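A rough sketch of the combination step, under assumptions not taken from the slide. Each pre-trained module (e.g. an image CNN, a text model, a Prod2Vec-style co-occurrence embedding) has already produced a vector for an item. The vectors are concatenated and linearly projected into one item embedding. A pair of items is then scored with a dot product and trained with a logistic loss against a label saying whether the pair is related (for example, co-purchased). The projection, the dot-product similarity and the label definition are illustrative choices, not necessarily what Content2Vec uses.

```python
import math
from typing import List, Sequence


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def item_embedding(module_outputs: Sequence[Sequence[float]], W: Sequence[Sequence[float]]) -> List[float]:
    """Concatenate per-module vectors and project them with matrix W into one embedding."""
    x = [v for vec in module_outputs for v in vec]
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def pair_loss(emb_a: Sequence[float], emb_b: Sequence[float], related: bool) -> float:
    """Logistic loss on the dot-product similarity of two item embeddings."""
    score = sum(a * b for a, b in zip(emb_a, emb_b))
    # -log(sigmoid(score)) for related pairs, -log(1 - sigmoid(score)) otherwise.
    return softplus(-score) if related else softplus(score)


W = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]]  # projects a 4-d concatenation to 2-d
a = item_embedding([[1.0, 0.0], [0.0, 1.0]], W)   # image vector + text vector
print(a)                                          # [1.0, 1.0]
print(pair_loss(a, [0.0, 0.0], related=True))     # score 0 gives log(2)
print(pair_loss(a, [3.0, 3.0], related=True) < pair_loss(a, [3.0, 3.0], related=False))  # True
```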

24. Deep Learning for Recommendations: Session-Based Recommendation

Source: Hidasi, Karatzoglou, Baltrunas, Tikk

Apply the advances in sequence modeling from deep learning:

- RNN architectures trained on the sequence of user events in a session (e.g. products viewed, purchased) to predict the next item in the session
- Adjustments for the domain
  - Item encoding (1-of-N, weighted average)
  - Parallel mini-batch processing
  - Ranking losses: BPR, TOP1
  - Negative item sampling per mini-batch
- Report 20-30% accuracy gain over baselines

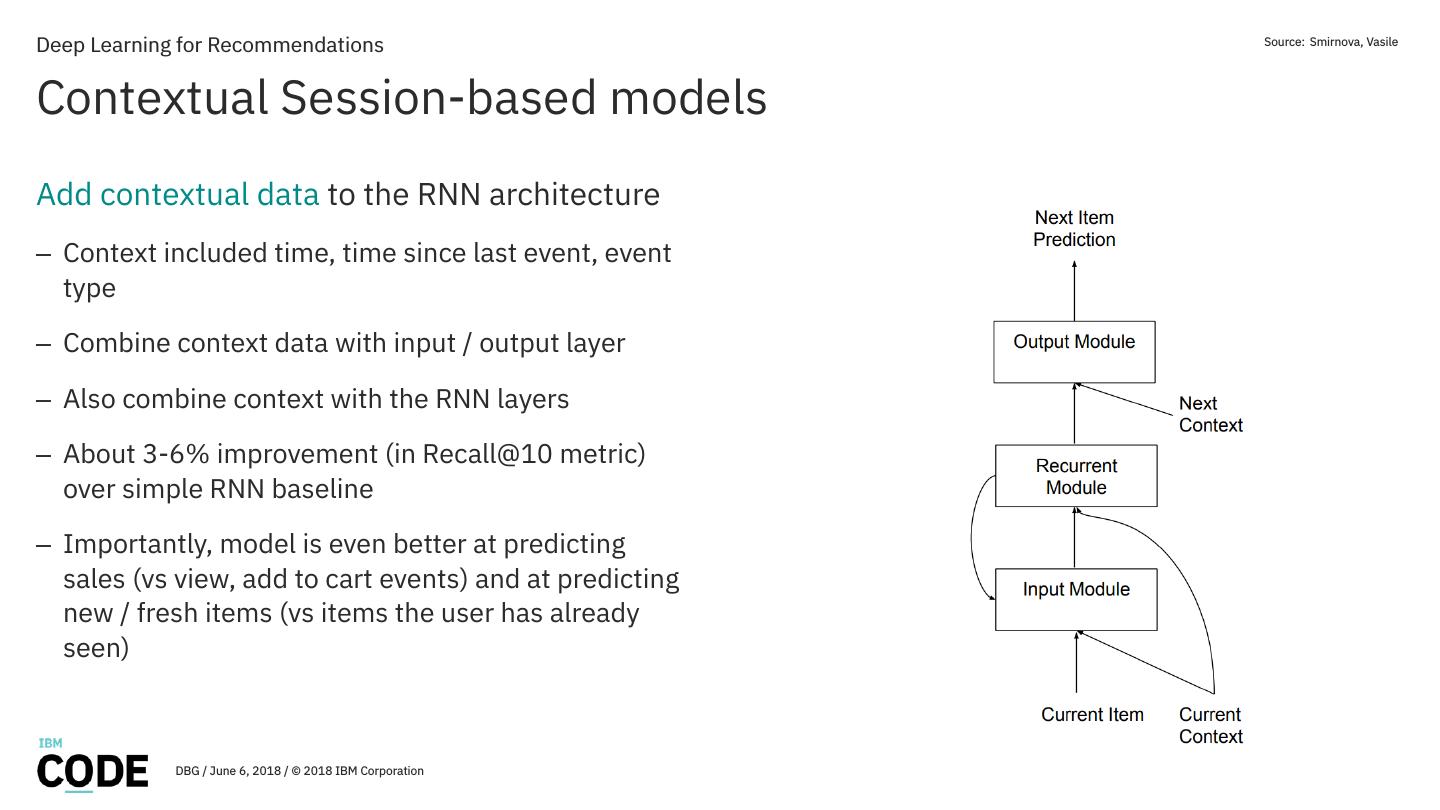
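The two ranking losses and the mini-batch negative sampling can be sketched independently of the RNN itself, which is not shown. This follows the GRU4Rec paper's formulation. For a session with positive (next) item $i$ and $N_S$ sampled negatives $j$, BPR is $-\frac{1}{N_S}\sum_j \log\sigma(\hat r_i - \hat r_j)$ and TOP1 is $\frac{1}{N_S}\sum_j \big[\sigma(\hat r_j - \hat r_i) + \sigma(\hat r_j^2)\big]$. With mini-batch negative sampling, the target items of the other sessions in the same mini-batch act as the negatives. A B x B score matrix therefore has the positives on its diagonal. Excluding the diagonal from the negatives is a choice of this sketch.

```python
import math
from typing import Sequence, Tuple


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x)); note -log(sigmoid(x)) == softplus(-x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def minibatch_ranking_losses(scores: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Average BPR and TOP1 losses over a mini-batch.

    scores[s][j] is the model's score, for session s, of the target item of session j.
    The diagonal holds each session's positive item; off-diagonal entries are the
    negatives borrowed from the other sessions in the mini-batch.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two sessions to sample negatives from the batch")
    bpr_total, top1_total = 0.0, 0.0
    for s in range(n):
        pos = scores[s][s]
        negs = [scores[s][j] for j in range(n) if j != s]
        bpr_total += sum(softplus(r - pos) for r in negs) / len(negs)
        top1_total += sum(sigmoid(r - pos) + sigmoid(r * r) for r in negs) / len(negs)
    return bpr_total / n, top1_total / n


good = [[2.0, 0.0], [0.0, 2.0]]  # positives scored above negatives
bad = [[0.0, 2.0], [2.0, 0.0]]   # negatives scored above positives
print(minibatch_ranking_losses(good))
print(minibatch_ranking_losses(bad))
```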

25. Deep Learning for Recommendations: Contextual Session-Based Models

Source: Smirnova, Vasile

Add contextual data to the RNN architecture:

- Context included time, time since last event, event type
- Combine context data with the input / output layer
- Also combine context with the RNN layers
- About 3-6% improvement (in the Recall@10 metric) over a simple RNN baseline
- Importantly, the model is even better at predicting sales (vs. view and add-to-cart events) and at predicting new / fresh items (vs. items the user has already seen)
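Recall@k, the metric quoted above, can be sketched for next-item prediction by assuming one held-out target (the actual next item) per evaluated event. It is the fraction of events whose target appears in the model's top-k list; with a single target per event this is the same as the hit rate at k. The function name and toy data are hypothetical.

```python
from typing import Sequence


def recall_at_k(ranked_lists: Sequence[Sequence[str]], targets: Sequence[str], k: int = 10) -> float:
    """Fraction of events whose true next item is in the top-k of the ranked list."""
    if len(ranked_lists) != len(targets) or not targets:
        raise ValueError("need one ranked list per target, and at least one target")
    hits = sum(1 for ranked, target in zip(ranked_lists, targets) if target in ranked[:k])
    return hits / len(targets)


ranked = [["a", "b", "c"], ["c", "a", "b"], ["b", "c", "a"]]
targets = ["a", "b", "x"]
print(recall_at_k(ranked, targets, k=2))  # only the first event is a hit: 1/3
print(recall_at_k(ranked, targets, k=3))  # the first two events are hits: 2/3
```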

26. Deep Learning for Recommendations: Challenges

Challenges particular to recommendation models:

- Data size and dimensionality (input & output)
- Extreme sparsity
  - Embeddings & compressed representations
- Wide variety of specialized settings
- Combining session, content, context and preference data
- Model serving is difficult: ranking, large number of items, computationally expensive
- Metrics: model accuracy and its relation to real-world outcomes and behaviors
- Need for standard, open, large-scale datasets that have time / session data and are content- and context-rich
- Evaluation: watch your baselines!
  - When Recurrent Neural Networks meet the Neighborhood for Session-Based Recommendation

27. Deep Learning for Recommendations: Future Directions

Most recent and future directions in research & industry:

- Improved RNNs
  - Cross-session models
  - Further research on contextual models, as well as content and metadata
- Combine sequence and historical models (long- and short-term user behavior)
- Improving and understanding user and item embeddings
- Applications at scale
  - Dimensionality reduction techniques (e.g. feature hashing, Bloom embeddings for large input/output spaces)
  - Compression / quantization techniques
  - Distributed training
  - Efficient model serving for complex architectures
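Two of the dimensionality-reduction ideas named above can be sketched under stated assumptions. The hashing trick maps an unbounded set of ids (users, items, feature values) into a fixed number of buckets, so the embedding table has a fixed size regardless of vocabulary. The Bloom-embedding-style variant hashes each id with several seeded hash functions, so an id becomes a sparse multi-hot vector. Its input embedding is then the sum of the rows for its active buckets, the same result as multiplying the multi-hot vector by the embedding matrix. BLAKE2b from `hashlib` is used because Python's built-in `hash` is randomized per process for strings. The bucket count and number of hashes are illustrative, and decoding Bloom-encoded outputs is out of scope.

```python
import hashlib
from typing import List, Sequence


def hashed_index(token: str, n_buckets: int, seed: int = 0) -> int:
    """Deterministically map a token to one of n_buckets buckets (hashing trick)."""
    digest = hashlib.blake2b(f"{seed}:{token}".encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "little") % n_buckets


def bloom_encode(token: str, n_buckets: int, n_hashes: int = 3) -> List[int]:
    """Active buckets for a token under n_hashes seeded hash functions (duplicates collapse)."""
    return sorted({hashed_index(token, n_buckets, seed) for seed in range(n_hashes)})


def bloom_embed(token: str, table: Sequence[Sequence[float]], n_hashes: int = 3) -> List[float]:
    """Sum the embedding rows of the active buckets, i.e. multi-hot vector times table."""
    active = bloom_encode(token, len(table), n_hashes)
    return [sum(table[b][d] for b in active) for d in range(len(table[0]))]


table = [[float(b), 1.0] for b in range(16)]  # 16 buckets, 2-dimensional embeddings
print(bloom_encode("item_42", 16))            # up to 3 bucket ids in [0, 16)
print(bloom_embed("item_42", table))          # [sum of active bucket ids, number of active buckets]
```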

28. Deep Learning for Recommendations: Summary

DL for recommendation is just getting started (again):

- Huge increase in interest and research papers; already many new models and approaches
- Benefits from advances in DL for images, video, NLP
- Open-source libraries appearing (e.g. Spotlight)
- Check out the DLRS workshops & tutorials @ RecSys 2016 / 2017, and the upcoming one in October 2018
- DL approaches have generally yielded incremental % gains
  - But that can translate to significant $$$
  - More pronounced in e.g. session-based settings
- Cold start scenarios benefit from the multi-modal nature of DL models
- Flexibility of DL frameworks helps a lot

29. Thank you

- codait.org
- twitter.com/MLnick
- github.com/MLnick
- developer.ibm.com/code
- MAX, FfDL
- Sign up for IBM Cloud and try Watson Studio! https://datascience.ibm.com/