Write Anywhere File System

1 . CS 202 Advanced OS WAFL: Write Anywhere File System

2 . Presentation credit: Mark Claypool from WPI. WAFL: WRITE ANYWHERE FILE SYSTEMS

3 . Introduction
- In general, an appliance is a device designed to perform a specific function
- The trend in distributed systems has been to use appliances instead of general-purpose computers. Examples:
  - routers from Cisco and Avici
  - network terminals
  - network printers
- A new type of network appliance is the Network File System (NFS) file server

4 . Introduction: NFS Appliance
- NFS file server appliances have different file system requirements than a general-purpose file system
  - NFS access patterns differ from local file access patterns
  - Large client-side caches mean the server sees fewer reads than writes
- Network Appliance Corporation uses a Write Anywhere File Layout (WAFL) file system

5 . Introduction: WAFL
- WAFL has 4 requirements:
  - Fast NFS service
  - Support large file systems (10s of GB) that can grow (disks can be added later)
  - Provide high-performance writes and support Redundant Arrays of Inexpensive Disks (RAID)
  - Restart quickly, even after an unclean shutdown
- NFS and RAID both strain write performance:
  - An NFS server must not reply until the data is safely written
  - RAID must also write parity bits
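To make the RAID cost concrete, here is a minimal sketch of XOR parity as used by RAID-4-style arrays, assuming one parity block per stripe and short byte strings standing in for disk blocks (the helper names are hypothetical). Overwriting a single block in place forces a read of the old data and old parity before the new parity can be written.

```python
from functools import reduce


def xor_blocks(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length blocks."""
    return bytes(x ^ y for x, y in zip(a, b))


def stripe_parity(data_blocks: list[bytes]) -> bytes:
    """Parity block for a full stripe: XOR of all data blocks."""
    return reduce(xor_blocks, data_blocks)


def small_write_parity(old_parity: bytes, old_data: bytes, new_data: bytes) -> bytes:
    """Parity after overwriting one block in place.

    Needs two reads (old data, old parity) before two writes (new data, new parity).
    """
    return xor_blocks(xor_blocks(old_parity, old_data), new_data)


stripe = [b"\x01\x02", b"\x10\x20", b"\x0f\x0f"]  # three toy data blocks
parity = stripe_parity(stripe)

new_block = b"\xff\x00"
updated = small_write_parity(parity, stripe[1], new_block)
stripe[1] = new_block
print(updated == stripe_parity(stripe))  # incremental update matches a full recompute
```

Writing a whole stripe at once lets the parity be computed from data already in memory, which is one reason batching writes pays off on RAID.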

6 . Outline
- Introduction (done)
- Snapshots: User Level (next)
- WAFL Implementation
- Snapshots: System Level
- Performance
- Conclusions

7 . Introduction to Snapshots
- A snapshot is a copy of the file system at a given point in time
  - WAFL's “claim to fame”
- WAFL creates and deletes snapshots automatically at preset times
  - Up to 255 snapshots stored at once
- Uses copy-on-write to avoid duplicating blocks in the active file system
- Snapshot uses:
  - Users can recover accidentally deleted files
  - Sys admins can create backups from a running system
  - System can restart quickly after an unclean shutdown
    - Roll back to the previous snapshot

8 . User Access to Snapshots
- Example: suppose you accidentally removed a file named “todo”:

```
spike% ls -lut .snapshot/*/todo
-rw-r--r-- 1 hitz 52880 Oct 15 00:00 .snapshot/nightly.0/todo
-rw-r--r-- 1 hitz 52880 Oct 14 19:00 .snapshot/hourly.0/todo
-rw-r--r-- 1 hitz 52829 Oct 14 15:00 .snapshot/hourly.1/todo
-rw-r--r-- 1 hitz 55059 Oct 10 00:00 .snapshot/nightly.4/todo
-rw-r--r-- 1 hitz 55059 Oct  9 00:00 .snapshot/nightly.5/todo
```

- You can then recover the most recent version:

```
spike% cp .snapshot/hourly.0/todo todo
```

- Note: snapshot directories (.snapshot) are hidden, in that they don't show up with ls

9 . Snapshot Administration
- The WAFL server provides commands for sys admins to create and delete snapshots, but this is typically done automatically
- At WPI, snapshots of /home:
  - 7:00 AM, 10:00, 1:00 PM, 4:00, 7:00, 10:00, 1:00 AM (the “hourly” snapshots)
  - Nightly snapshot at midnight every day
  - Weekly snapshot on Sunday at midnight every week
- Thus, there are always 7 hourly, 7 daily (nightly), and 2 weekly snapshots:

```
claypool 32 ccc3=>>pwd
/home/claypool/.snapshot
claypool 33 ccc3=>>ls
hourly.0/   hourly.3/   hourly.6/   nightly.2/  nightly.5/  weekly.1/
hourly.1/   hourly.4/   nightly.0/  nightly.3/  nightly.6/
hourly.2/   hourly.5/   nightly.1/  nightly.4/  weekly.0/
```
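The names hourly.0 through hourly.6 suggest a rotation: each new snapshot becomes `.0`, older ones shift up by one, and the oldest is deleted. The slides do not say how WAFL renames snapshots, so this is a minimal sketch under that assumption; `take_snapshot` and `directory_names` are hypothetical helpers, not WAFL commands.

```python
def take_snapshot(history: list[str], taken_at: str, keep: int) -> list[str]:
    """Newest-first history; anything past `keep` entries is deleted."""
    return ([taken_at] + history)[:keep]


def directory_names(prefix: str, history: list[str]) -> dict[str, str]:
    """Map e.g. 'hourly.0' to the time of the newest hourly snapshot."""
    return {f"{prefix}.{i}": t for i, t in enumerate(history)}


# The WPI "hourly" schedule from the slide, plus the first run of the next day.
hourly: list[str] = []
for t in ["07:00", "10:00", "13:00", "16:00", "19:00", "22:00", "01:00", "07:00 next day"]:
    hourly = take_snapshot(hourly, t, keep=7)

names = directory_names("hourly", hourly)
print(names["hourly.0"], "/", names["hourly.6"])  # newest and oldest retained
```

With `keep=7` the eighth snapshot pushes the first 07:00 snapshot out, which is why exactly seven hourly directories are always present.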

10 . Outline
- Introduction (done)
- Snapshots: User Level (done)
- WAFL Implementation (next)
- Snapshots: System Level
- Performance
- Conclusions

11 . WAFL File Descriptors
- Inode-based system with 4 KB blocks
- Each inode has 16 block pointers
- For files of up to 64 KB (16 × 4 KB):
  - Each pointer points to a data block
- For files larger than 64 KB:
  - Each pointer points to an indirect block
- For really large files:
  - Each pointer points to a doubly indirect block
- For very small files (less than 64 bytes), the data is kept in the inode itself instead of pointers

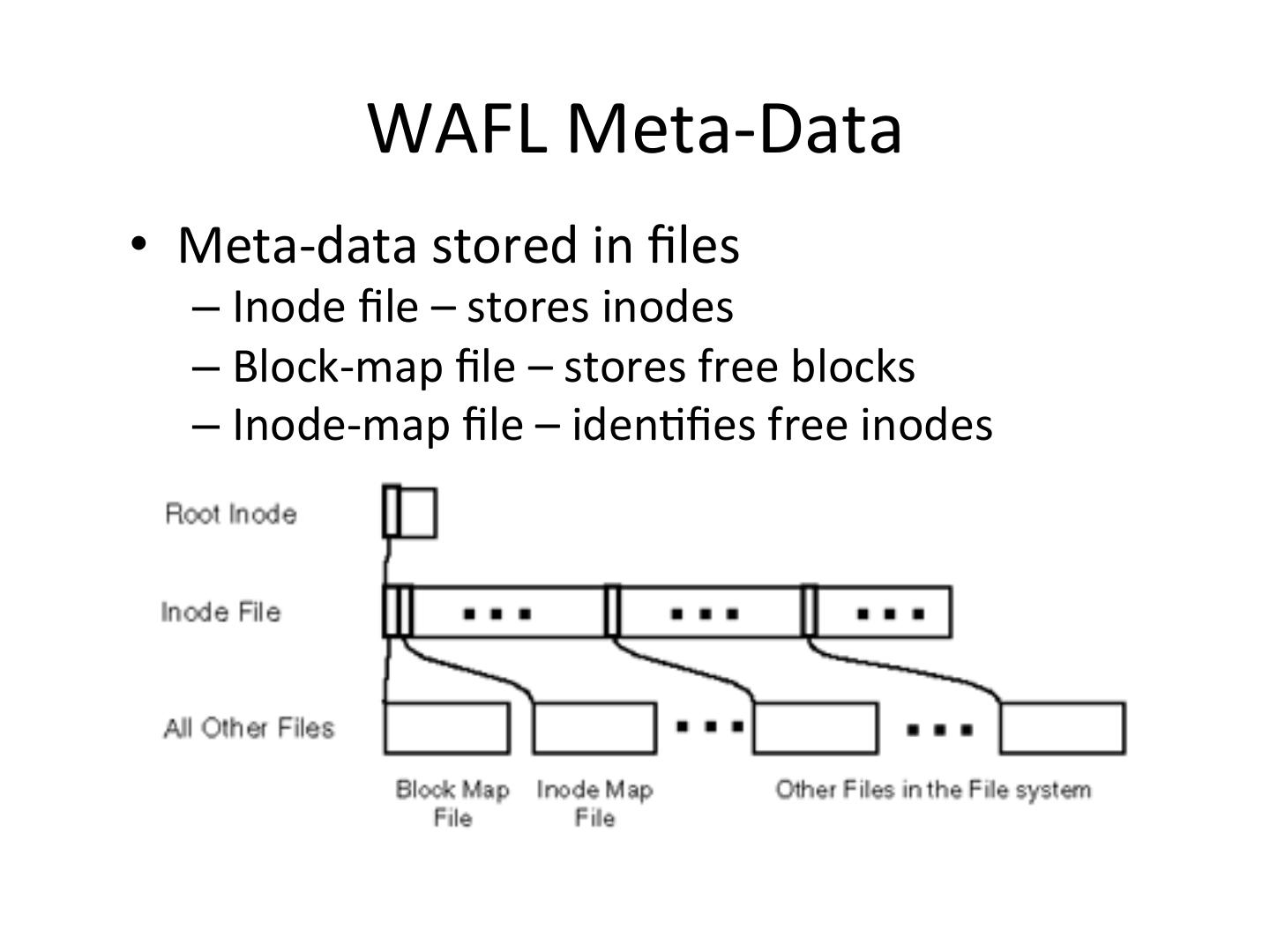
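The size thresholds follow from the numbers on the slide. A minimal sketch of the arithmetic, assuming 4-byte block pointers (so a 4 KB indirect block holds 1024 pointers and the 16 pointers occupy 64 bytes); `max_file_size` and `layout_for` are hypothetical helpers.

```python
BLOCK_SIZE = 4096                             # 4 KB blocks (from the slide)
POINTERS_IN_INODE = 16                        # 16 pointers per inode (from the slide)
POINTER_SIZE = 4                              # assumption: 32-bit block numbers
PTRS_PER_BLOCK = BLOCK_SIZE // POINTER_SIZE   # 1024 pointers per indirect block
INLINE_BYTES = POINTERS_IN_INODE * POINTER_SIZE  # 64 bytes of pointer space in the inode


def max_file_size(levels: int) -> int:
    """Largest file reachable when inode pointers sit `levels` hops above the data.

    levels=0: pointers are data blocks; 1: indirect blocks; 2: doubly indirect.
    """
    return POINTERS_IN_INODE * PTRS_PER_BLOCK ** levels * BLOCK_SIZE


def layout_for(size: int) -> str:
    """Which inode layout a file of `size` bytes would use in this sketch."""
    if size < INLINE_BYTES:  # slide: "less than 64 bytes" is kept in the inode
        return "inline"
    for levels, name in enumerate(["direct", "indirect", "double-indirect"]):
        if size <= max_file_size(levels):
            return name
    raise ValueError("too large for this sketch")


for levels in range(3):
    print(levels, max_file_size(levels))  # 64 KiB, 64 MiB, 64 GiB
```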

12 . WAFL Meta-Data
- Meta-data is stored in files:
  - Inode file: stores the inodes
  - Block-map file: identifies free blocks
  - Inode-map file: identifies free inodes

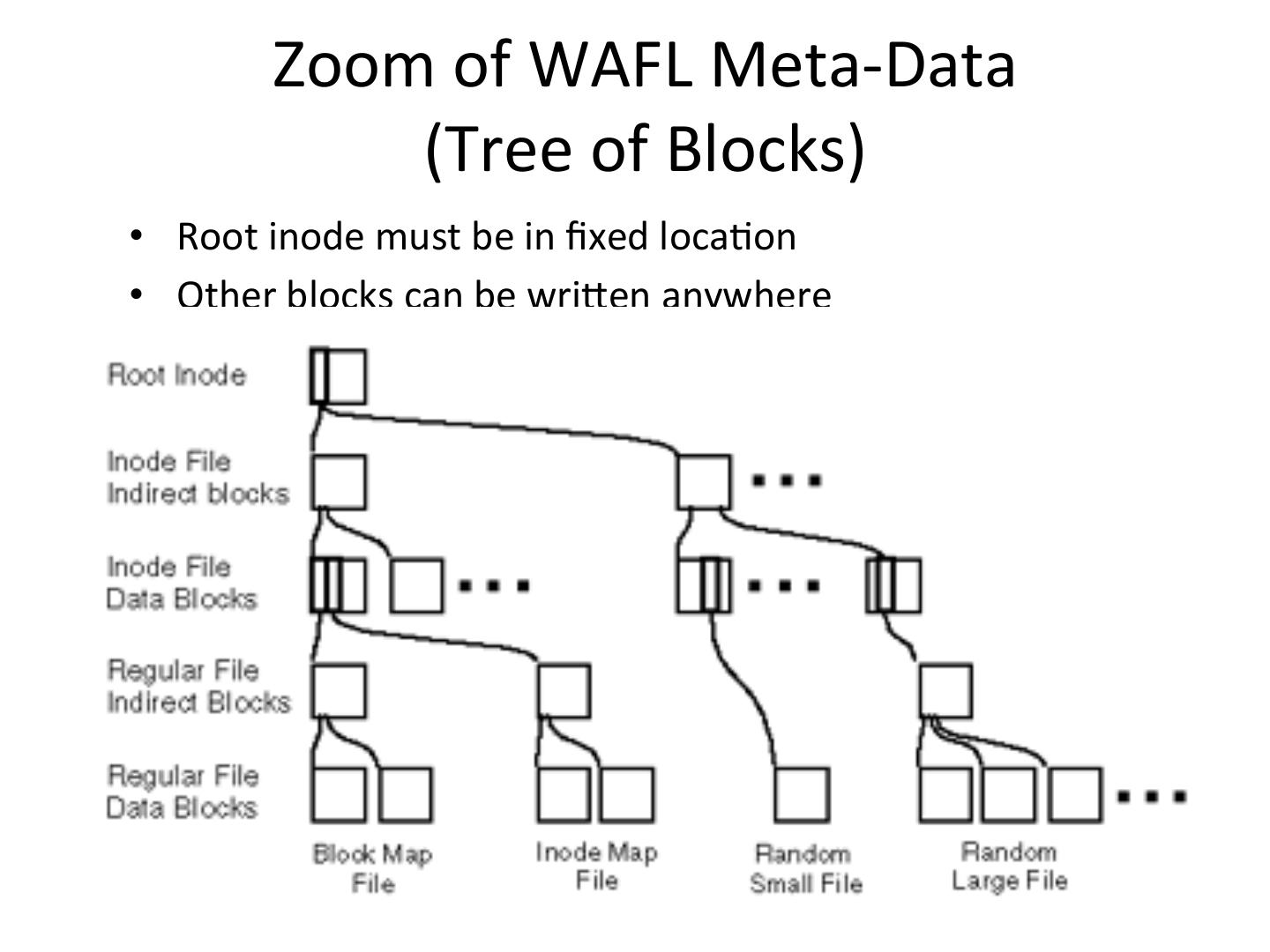

13 . Zoom of WAFL Meta-Data (Tree of Blocks)
- Root inode must be in a fixed location
- All other blocks can be written anywhere

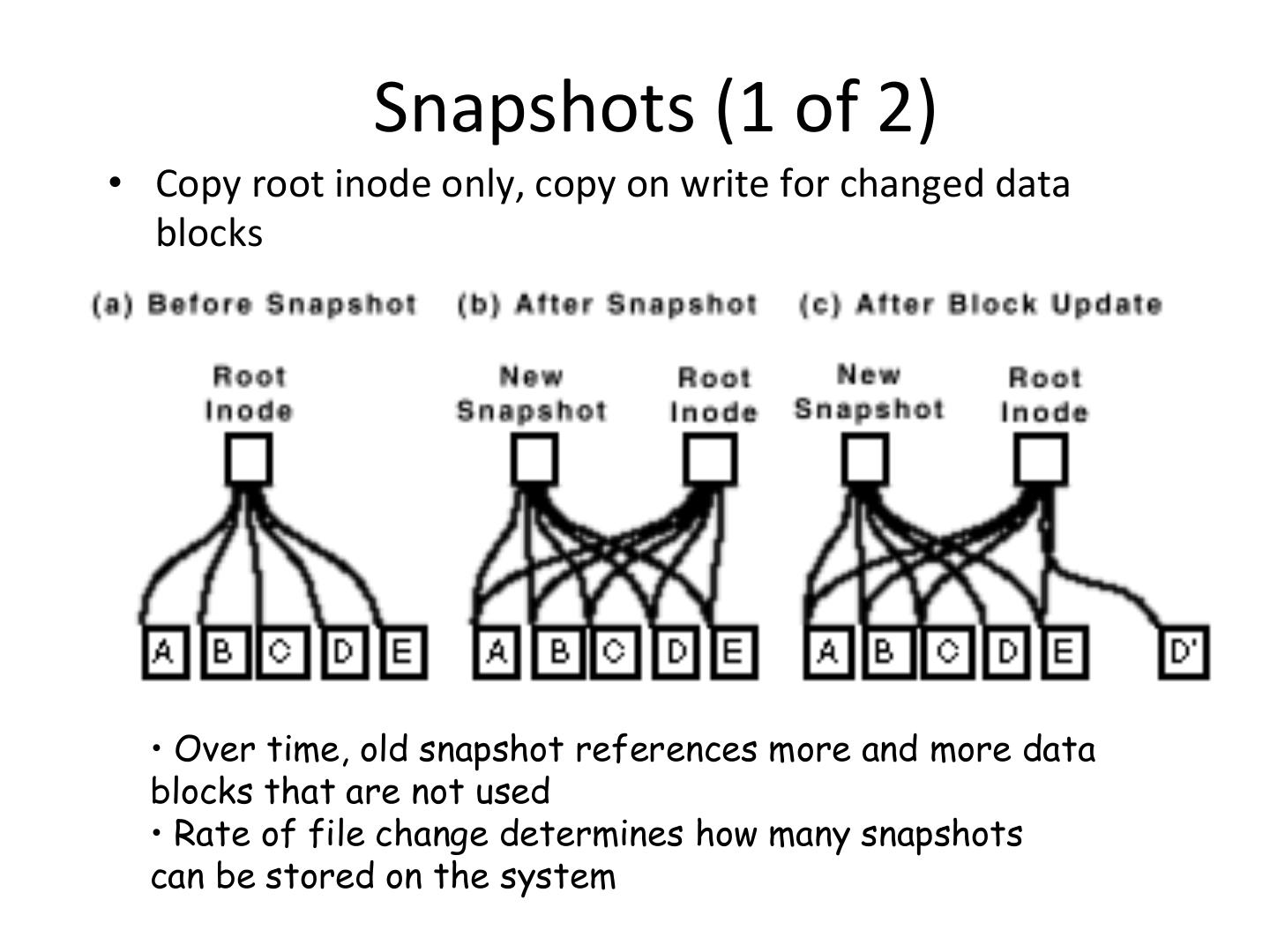
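A toy model of the tree of blocks: only the root lives at a fixed address, and every other block is written to a fresh location, so changing a data block means writing new copies of the blocks above it and finally updating the root. This is a minimal sketch assuming a two-level tree (root, indirect blocks, data blocks) and a trivial allocator; `Disk`, `read`, and `update` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Any

ROOT_LOCATION = 0  # the root inode lives at one fixed, well-known address


@dataclass
class Disk:
    blocks: dict[int, Any] = field(default_factory=dict)
    next_free: int = 1  # toy allocator: every write goes to a fresh address

    def write_anywhere(self, payload: Any) -> int:
        addr = self.next_free
        self.next_free += 1
        self.blocks[addr] = payload
        return addr


def read(disk: Disk, i: int, j: int) -> Any:
    """Follow root -> indirect block i -> data block j."""
    root = disk.blocks[ROOT_LOCATION]
    indirect = disk.blocks[root[i]]
    return disk.blocks[indirect[j]]


def update(disk: Disk, i: int, j: int, data: Any) -> None:
    """Replace data block j under indirect block i without overwriting old blocks."""
    root = disk.blocks[ROOT_LOCATION]
    new_indirect = list(disk.blocks[root[i]])
    new_indirect[j] = disk.write_anywhere(data)      # new data block, anywhere
    new_root = list(root)
    new_root[i] = disk.write_anywhere(new_indirect)  # new indirect block, anywhere
    disk.blocks[ROOT_LOCATION] = new_root            # only the root is rewritten in place


disk = Disk()
d0, d1 = disk.write_anywhere("a"), disk.write_anywhere("b")
disk.blocks[ROOT_LOCATION] = [disk.write_anywhere([d0, d1])]

update(disk, 0, 1, "B")
print(read(disk, 0, 1), disk.blocks[d1])  # new data is visible; old block is untouched
```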

14 . Snapshots (1 of 2)
- Copy the root inode only; use copy-on-write for changed data blocks
- Over time, an old snapshot references more and more data blocks that are no longer used by the active file system
- The rate of file change determines how many snapshots can be stored on the system

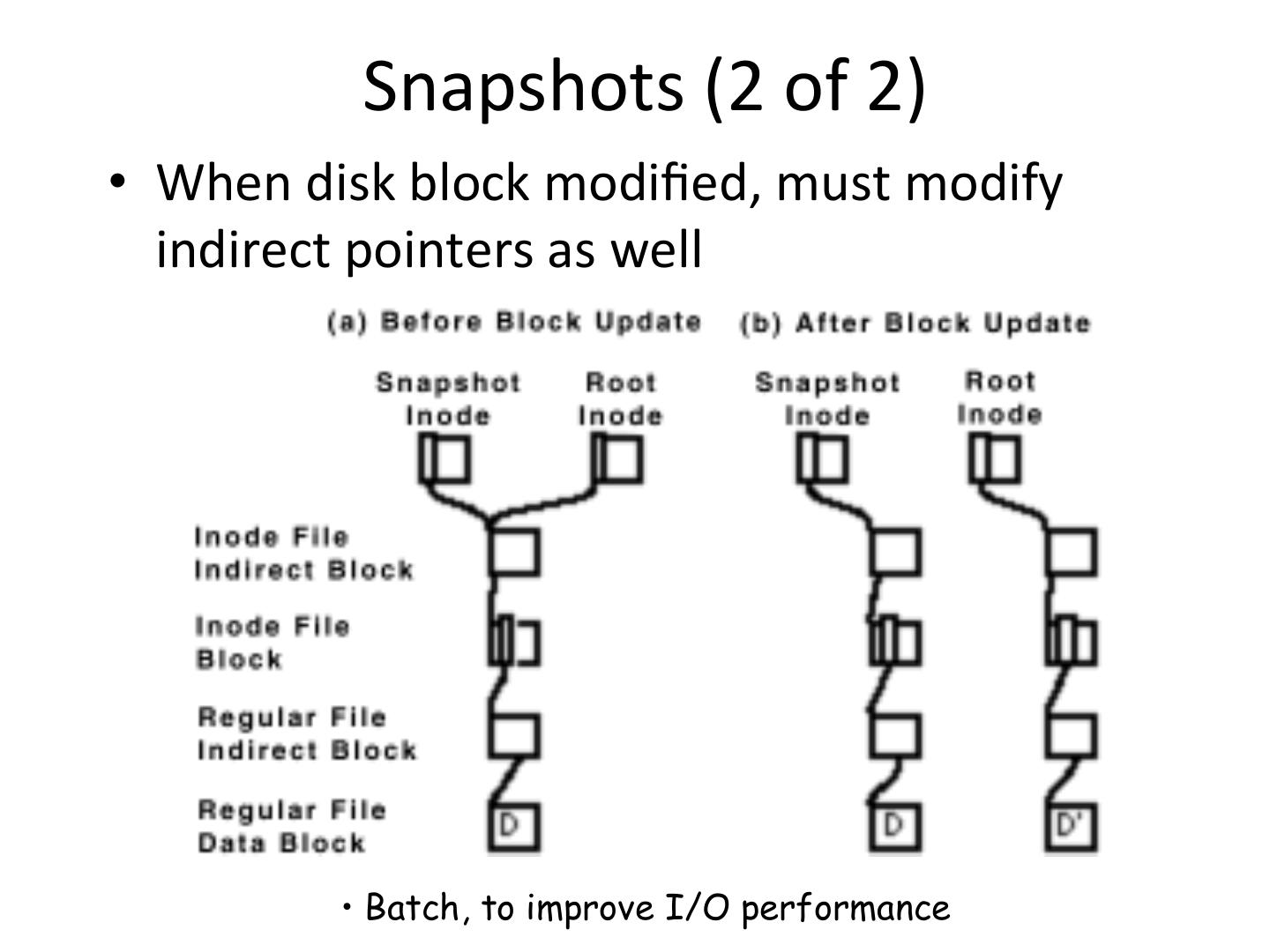
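The snapshot mechanism above can be sketched in a few lines. This toy flattens WAFL's tree into a single root table (file name to block address), which is an assumption for brevity: taking a snapshot copies only that table, and every write allocates a new block, so the snapshot keeps seeing the old data. `ToyFS` and its methods are hypothetical.

```python
import itertools
from typing import Optional


class ToyFS:
    """Toy model: a root table maps file names to block addresses."""

    def __init__(self) -> None:
        self.blocks: dict[int, str] = {}
        self._next_addr = itertools.count()
        self.active: dict[str, int] = {}
        self.snapshots: dict[str, dict[str, int]] = {}

    def write(self, name: str, data: str) -> None:
        # Copy-on-write: always allocate a fresh block, never overwrite in place.
        addr = next(self._next_addr)
        self.blocks[addr] = data
        self.active[name] = addr

    def snapshot(self, label: str) -> None:
        # Only the root (name -> address table) is copied, not the data blocks.
        self.snapshots[label] = dict(self.active)

    def read(self, name: str, snapshot: Optional[str] = None) -> str:
        root = self.active if snapshot is None else self.snapshots[snapshot]
        return self.blocks[root[name]]

    def snapshot_only_blocks(self) -> set[int]:
        """Blocks kept alive only by snapshots, no longer used by the active FS."""
        held: set[int] = set()
        for root in self.snapshots.values():
            held |= set(root.values())
        return held - set(self.active.values())


fs = ToyFS()
fs.write("todo", "v1")
fs.write("notes", "n1")
fs.snapshot("hourly.0")
fs.write("todo", "v2")
print(fs.read("todo"), fs.read("todo", "hourly.0"), fs.snapshot_only_blocks())
```

The set returned by `snapshot_only_blocks` is the space cost the slide describes: it grows as the active file system changes.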

15 . Snapshots (2 of 2)
- When a disk block is modified, the indirect blocks that point to it must be modified as well
- Batch these updates to improve I/O performance

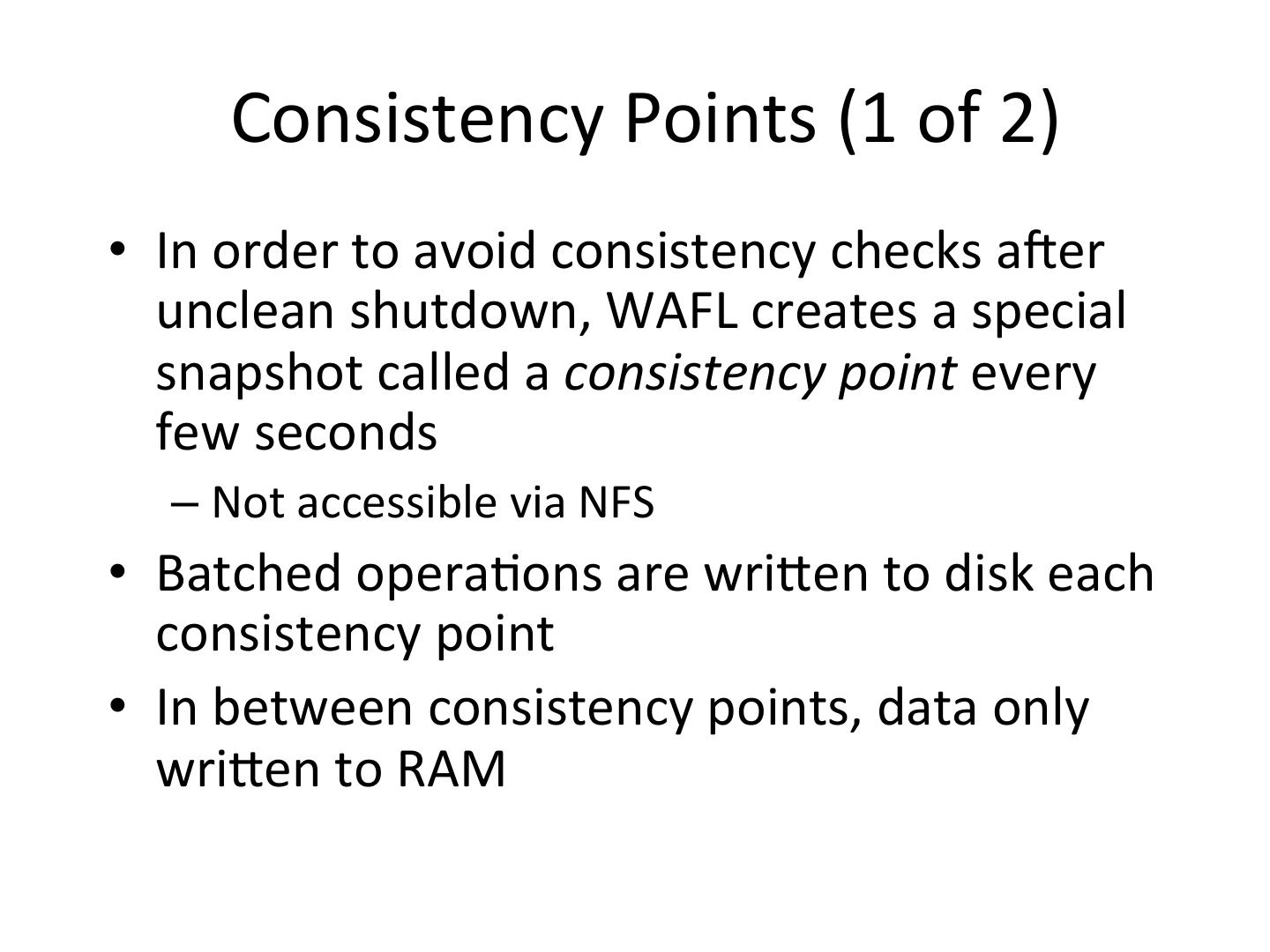
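Why batching helps can be shown with simple counting. This sketch assumes a toy path of inode, then indirect block, then data block, with every changed block written to a new location; `blocks_written` is a hypothetical helper and the numbers are illustrative, not WAFL measurements.

```python
def blocks_written(parents: list[int], batched: bool) -> int:
    """Blocks written to disk for a set of modified data blocks.

    parents[k] is the (hypothetical) indirect block that points at the k-th
    modified data block. Every changed block moves, so its parents are rewritten too.
    """
    if not parents:
        return 0
    if not batched:
        # Each change flushed alone: data block + its indirect block + the inode.
        return 3 * len(parents)
    # One flush: each data block once, each distinct indirect block once, the inode once.
    return len(parents) + len(set(parents)) + 1


updates = [7] * 50 + [8] * 50  # 100 changed data blocks under two indirect blocks
print(blocks_written(updates, batched=False), blocks_written(updates, batched=True))
```

Shared parents are written once per batch instead of once per change, which is where the savings come from.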

16 . Consistency Points (1 of 2)
- To avoid consistency checks after an unclean shutdown, WAFL creates a special snapshot called a consistency point every few seconds
  - Not accessible via NFS
- Batched operations are written to disk at each consistency point
- Between consistency points, data is only written to RAM

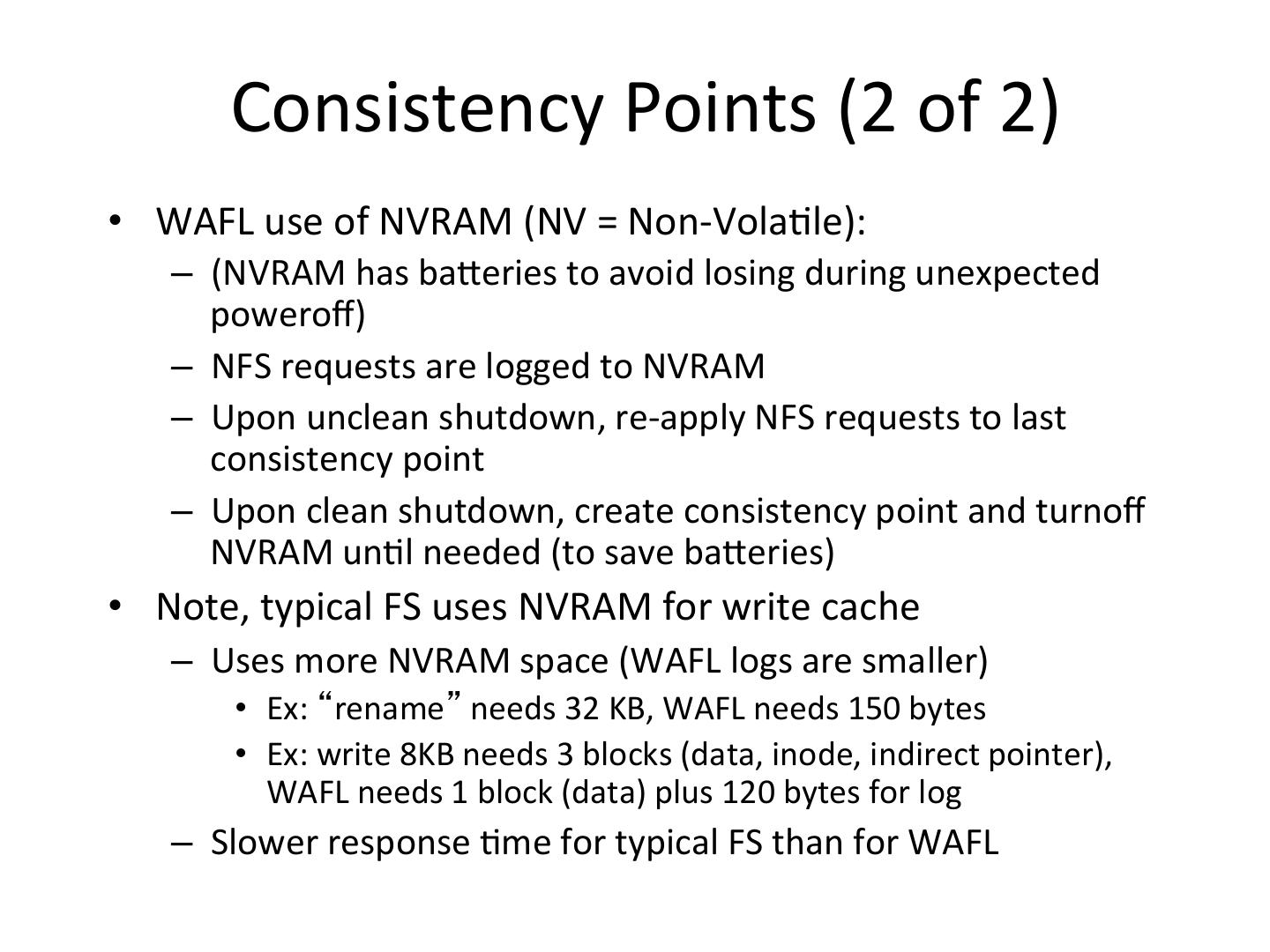
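A minimal sketch of the consistency-point idea, assuming a dictionary stands in for the on-disk tree: changes accumulate in RAM and are committed together by switching to a new root in one step, so after a crash the disk always holds the last consistency point and needs no check. `CPFileSystem` is a hypothetical name.

```python
from typing import Optional


class CPFileSystem:
    """Toy model: changes live in RAM until the next consistency point."""

    def __init__(self) -> None:
        self.on_disk: dict[str, str] = {}  # state as of the last consistency point
        self.in_ram: dict[str, str] = {}   # changes made since then

    def write(self, name: str, data: str) -> None:
        self.in_ram[name] = data

    def read(self, name: str) -> Optional[str]:
        return self.in_ram.get(name, self.on_disk.get(name))

    def consistency_point(self) -> None:
        # Build the new state from the batched changes, then switch to it in one
        # step, so the disk holds either the old or the new consistent version.
        self.on_disk = {**self.on_disk, **self.in_ram}
        self.in_ram.clear()

    def crash(self) -> None:
        # RAM is lost; the disk still holds the last consistency point.
        self.in_ram.clear()


fs = CPFileSystem()
fs.write("a", "1")
fs.consistency_point()
fs.write("b", "2")
fs.crash()
print(fs.read("a"), fs.read("b"))  # the write to "b" was never committed
```

Losing the write to "b" is exactly the gap that the NVRAM log on the next slide closes.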

17 . Consistency Points (2 of 2)
- WAFL's use of NVRAM (NV = non-volatile):
  - NVRAM has batteries so its contents are not lost on an unexpected power-off
  - NFS requests are logged to NVRAM
  - Upon unclean shutdown, re-apply the logged NFS requests to the last consistency point
  - Upon clean shutdown, create a consistency point and turn off NVRAM until needed (to save batteries)
- Note: a typical FS uses NVRAM as a write cache
  - Uses more NVRAM space (WAFL's log entries are smaller)
    - Ex: a “rename” needs 32 KB; WAFL needs 150 bytes
    - Ex: an 8 KB write needs 3 blocks (data, inode, indirect pointer); WAFL needs 1 block (data) plus 120 bytes of log
  - Slower response time for a typical FS than for WAFL
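The recovery rule (start from the last consistency point, then re-apply the logged NFS requests) can be sketched as follows. This assumes only two request types and a dictionary for the file system; `apply` and `Server` are hypothetical, and the log entries are small tuples rather than cached disk blocks, which mirrors why WAFL's log is compact.

```python
Op = tuple[str, str, str]  # ("write", name, data) or ("rename", old_name, new_name)


def apply(state: dict[str, str], op: Op) -> None:
    kind, a, b = op
    if kind == "write":
        state[a] = b
    elif kind == "rename":
        state[b] = state.pop(a)
    else:
        raise ValueError(f"unknown operation {kind!r}")


class Server:
    def __init__(self) -> None:
        self.cp_state: dict[str, str] = {}   # on disk as of the last consistency point
        self.ram_state: dict[str, str] = {}  # active file system, lost on a crash
        self.nvram_log: list[Op] = []        # requests since the last CP, survives a crash

    def handle(self, op: Op) -> None:
        apply(self.ram_state, op)
        self.nvram_log.append(op)  # logged before the NFS reply would be sent

    def consistency_point(self) -> None:
        self.cp_state = dict(self.ram_state)
        self.nvram_log.clear()     # these requests are now reflected on disk

    def crash_and_recover(self) -> None:
        self.ram_state = dict(self.cp_state)  # start from the last consistency point
        for op in self.nvram_log:             # re-apply the logged NFS requests
            apply(self.ram_state, op)


srv = Server()
srv.handle(("write", "todo", "v1"))
srv.consistency_point()
srv.handle(("rename", "todo", "done"))
srv.handle(("write", "notes", "n1"))
srv.crash_and_recover()
print(srv.ram_state)
```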

18 . Write Allocation
- Write times dominate NFS performance
  - Read caches at clients are large
  - 5x as many write operations as read operations at the server
- WAFL batches write requests
- WAFL allows writes anywhere, so it can place an inode next to its data for better performance
  - A typical FS keeps inode information and free-block information at fixed locations
- WAFL allows writes in any order, since it uses consistency points
  - A typical FS writes in a fixed order so that fsck can work
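Because no block has a fixed home, a batch of dirty blocks (data plus the inode that describes it) can be placed together. The sketch below uses a hypothetical first-fit policy over a free-block list purely for illustration; the slides do not describe WAFL's actual allocation policy, and `allocate_run` is not a WAFL function.

```python
def allocate_run(free: list[bool], n: int) -> list[int]:
    """Return n consecutive free block addresses (first fit), marking them used."""
    if n == 0:
        return []
    run_start, run_len = 0, 0
    for addr, is_free in enumerate(free):
        if not is_free:
            run_len = 0
            continue
        if run_len == 0:
            run_start = addr
        run_len += 1
        if run_len == n:
            for a in range(run_start, run_start + n):
                free[a] = False
            return list(range(run_start, run_start + n))
    raise RuntimeError("no run of free blocks long enough")


free = [False, True, False, True, True, True, True]  # True = free
dirty = ["todo data 0", "todo data 1", "todo inode"]  # one batch from a consistency point
placement = dict(zip(dirty, allocate_run(free, len(dirty))))
print(placement)  # the inode lands right next to its data
```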

19 . Outline
- Introduction (done)
- Snapshots: User Level (done)
- WAFL Implementation (done)
- Snapshots: System Level (next)
- Performance
- Conclusions

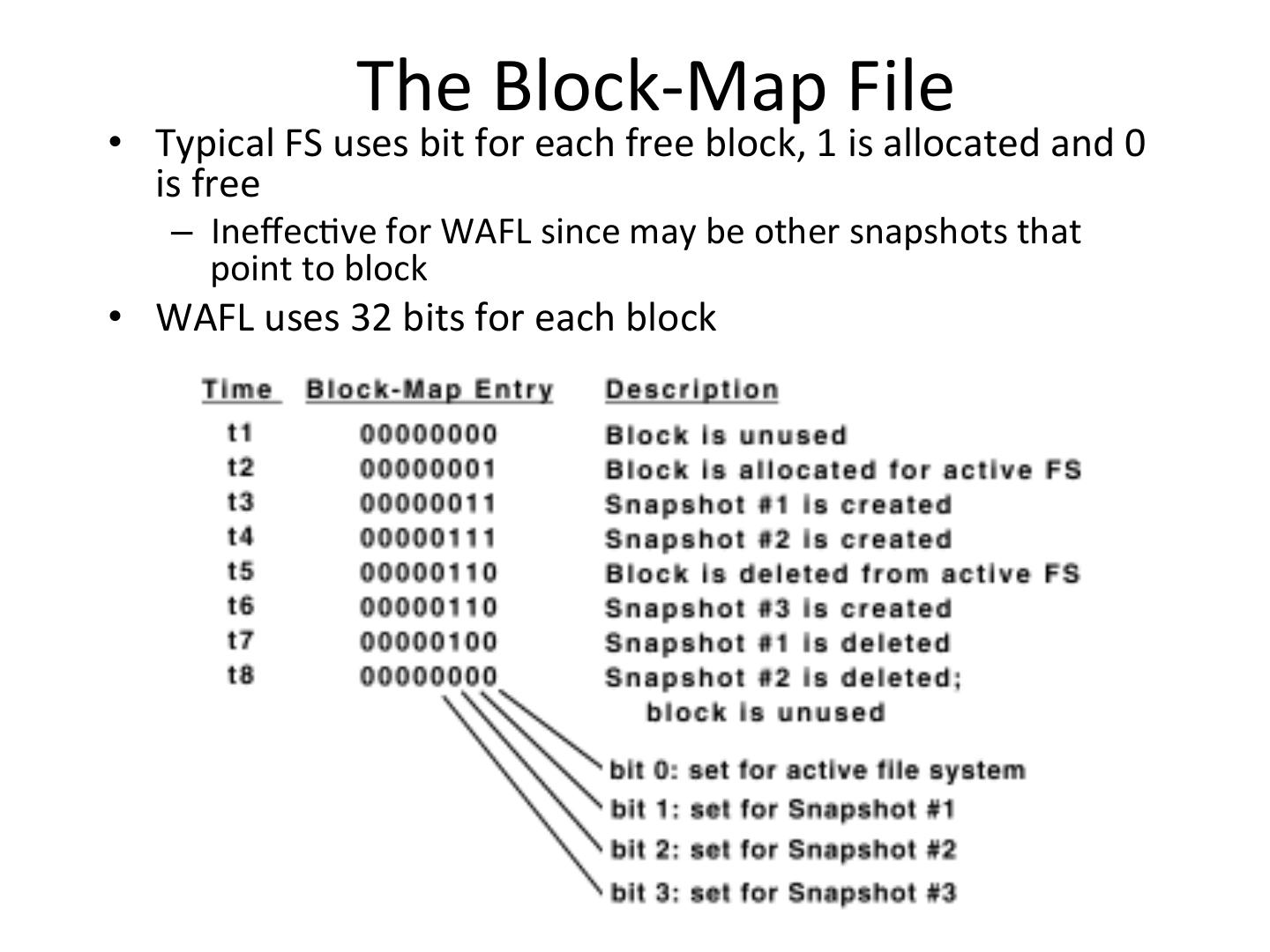

20 . The Block-Map File
- A typical FS uses one bit per block: 1 means allocated, 0 means free
  - Ineffective for WAFL, since other snapshots may still point to a block
- WAFL uses 32 bits for each block

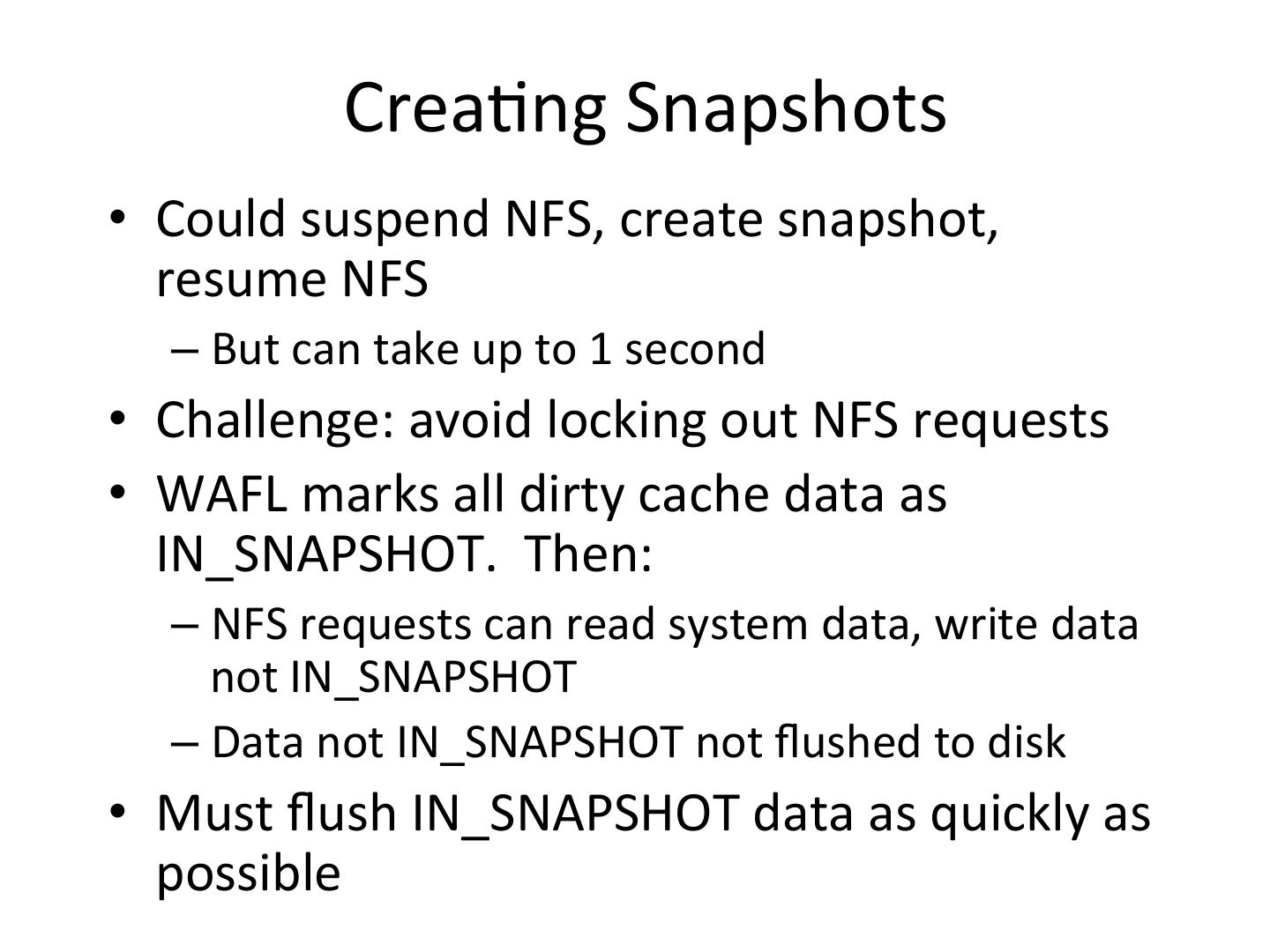
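A minimal sketch of a 32-bit-per-block map, assuming bit 0 records the active file system and bit i records snapshot i (consistent with the "copy active bit to snapshot bit" step on the slide about flushing). A block is free only when no bit is set. `BlockMap` and its methods are hypothetical names.

```python
ACTIVE_BIT = 0  # assumption: bit 0 = active file system, bit i = snapshot i


class BlockMap:
    def __init__(self, n_blocks: int) -> None:
        self.entries = [0] * n_blocks  # one 32-bit entry per block

    def allocate(self, block: int) -> None:
        self.entries[block] |= 1 << ACTIVE_BIT

    def release(self, block: int) -> None:
        """The active FS stops using the block; snapshots may still hold it."""
        self.entries[block] &= ~(1 << ACTIVE_BIT)

    def create_snapshot(self, snap: int) -> None:
        """Copy the active bit into snapshot bit `snap` for every block."""
        for b, entry in enumerate(self.entries):
            if (entry >> ACTIVE_BIT) & 1:
                self.entries[b] = entry | (1 << snap)

    def delete_snapshot(self, snap: int) -> None:
        for b in range(len(self.entries)):
            self.entries[b] &= ~(1 << snap)

    def is_free(self, block: int) -> bool:
        return self.entries[block] == 0


bm = BlockMap(4)
bm.allocate(0)
bm.allocate(1)
bm.create_snapshot(1)
bm.release(1)
print(bm.is_free(1))  # still held by snapshot 1
bm.delete_snapshot(1)
print(bm.is_free(1))  # now actually free
```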

21 . Creating Snapshots
- Could suspend NFS, create the snapshot, then resume NFS
  - But this can take up to 1 second
- Challenge: avoid locking out NFS requests
- WAFL marks all dirty cache data as IN_SNAPSHOT. Then:
  - NFS requests can read all system data, and can write data that is not IN_SNAPSHOT
  - Data not marked IN_SNAPSHOT is not flushed to disk
- Must flush IN_SNAPSHOT data as quickly as possible
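The IN_SNAPSHOT rule can be sketched as a small cache: all dirty blocks are frozen when a snapshot starts, reads always proceed, writes to frozen blocks wait, and writes to other blocks go into the cache without being flushed. This is a toy under those assumptions, not WAFL's code; `Cache` and its methods are hypothetical.

```python
from typing import Optional


class Cache:
    """Toy buffer cache illustrating the IN_SNAPSHOT flag."""

    def __init__(self) -> None:
        self.dirty: dict[int, str] = {}           # block number -> unflushed data
        self.in_snapshot: set[int] = set()        # dirty blocks frozen for the snapshot
        self.blocked: list[tuple[int, str]] = []  # writes waiting on IN_SNAPSHOT blocks

    def begin_snapshot(self) -> None:
        self.in_snapshot = set(self.dirty)        # mark all dirty data IN_SNAPSHOT

    def nfs_read(self, block: int) -> Optional[str]:
        return self.dirty.get(block)              # never blocked (toy: dirty data only)

    def nfs_write(self, block: int, data: str) -> bool:
        if block in self.in_snapshot:
            self.blocked.append((block, data))    # must wait for this block's flush
            return False
        self.dirty[block] = data                  # not IN_SNAPSHOT, so not flushed yet
        return True

    def flush_in_snapshot(self) -> dict[int, str]:
        flushed = {b: self.dirty.pop(b) for b in self.in_snapshot}
        self.in_snapshot.clear()
        waiting, self.blocked = self.blocked, []
        for block, data in waiting:               # restart the blocked requests
            self.nfs_write(block, data)
        return flushed


cache = Cache()
cache.dirty.update({1: "a", 2: "b"})
cache.begin_snapshot()
print(cache.nfs_write(1, "a2"), cache.nfs_write(3, "c"), cache.nfs_read(1))
flushed = cache.flush_in_snapshot()
print(flushed, cache.dirty)
```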

22 . Flushing IN_SNAPSHOT Data
- Flush inode data first
  - WAFL keeps two caches for inode data, so it can copy the system cache into the inode file's cache, unblocking most NFS requests (this requires no I/O, since the inode file is flushed later)
- Update the block-map file
  - Copy the active bit to the snapshot bit
- Write all IN_SNAPSHOT data
  - Restart any blocked requests
- Duplicate the root inode and turn off the IN_SNAPSHOT bit
- All done in less than 1 second; the first step is done in 100s of ms

23 . Outline
- Introduction (done)
- Snapshots: User Level (done)
- WAFL Implementation (done)
- Snapshots: System Level (done)
- Performance (next)
- Conclusions

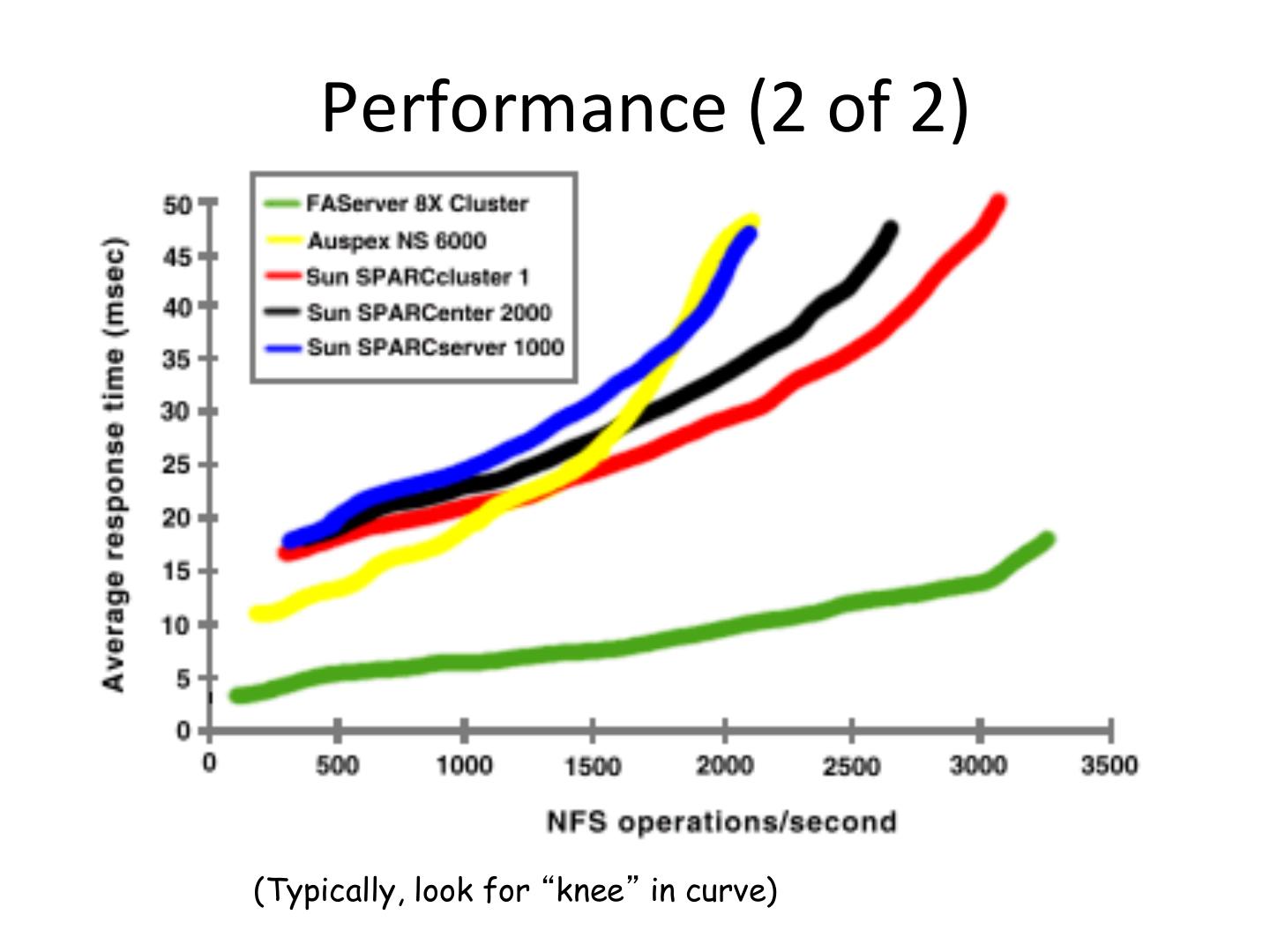

24 . Performance (1 of 2)
- Compare against other NFS systems
- The best benchmark is SPEC NFS
  - LADDIS, named for Legato, Auspex, Digital, Data General, Interphase and Sun
- Measure response time versus throughput
- (Me: system specifications?!)

25 . Performance (2 of 2)
- Typically, look for the “knee” in the curve

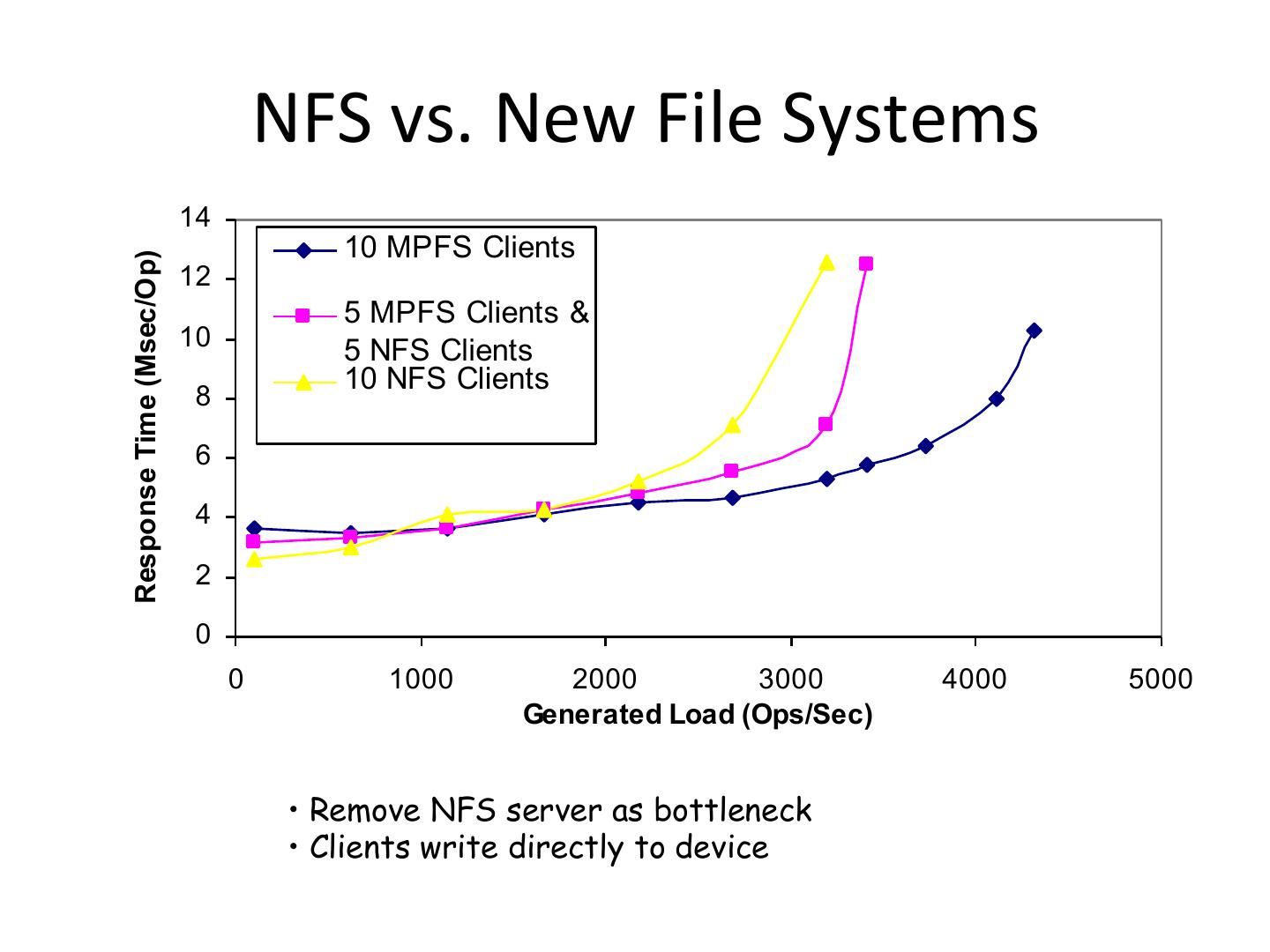

26 . NFS vs. New File Systems

[Figure: response time (msec/op, 0 to 14) vs. generated load (ops/sec, 0 to 5000) for 10 MPFS clients, 5 MPFS clients & 5 NFS clients, and 10 NFS clients]

- Remove NFS server as bottleneck
- Clients write directly to device

27 . Conclusion
- NetApp (with WAFL) works and is stable
  - Consistency points are simple, reducing bugs in the code
  - It is easier to develop stable code for a network appliance than for a general-purpose system
    - Few NFS client implementations and a limited set of operations, so it can be tested thoroughly