- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

系统可靠性,事务和分布式系统

展开查看详情

1 . Important “ilities” CS162 • Availability: the probability that the system can accept and process Operating Systems and requests – Often measured in “nines” of probability. So, a 99.9% probability is Systems Programming considered “3-nines of availability” Lecture 20 – Key idea here is independence of failures • Durability: the ability of a system to recover data despite faults Reliability, Transactions – This idea is fault tolerance applied to data Distributed Systems – Doesn’t necessarily imply availability: information on pyramids was very durable, but could not be accessed until discovery of Rosetta Stone November 6th, 2017 • Reliability: the ability of a system or component to perform its Prof. Ion Stoica required functions under stated conditions for a specified period of time (IEEE definition) http://cs162.eecs.Berkeley.edu – Usually stronger than simply availability: means that the system is not only “up”, but also working correctly – Includes availability, security, fault tolerance/durability – Must make sure data survives system crashes, disk crashes, etc 11/6/17 CS162 © UCB Fall 2017 Lec 20.2 How to Make File System Durable? RAID: Redundant Arrays of Inexpensive Disks • Disk blocks contain Reed-Solomon error correcting codes (ECC) to deal with small defects in disk drive • Invented by David Patterson, Garth A. Gibson, and – Can allow recovery of data from small media defects Randy Katz here at UCB in 1987 • Make sure writes survive in short term – Either abandon delayed writes or • Data stored on multiple disks (redundancy) – Use special, battery-backed RAM (called non-volatile RAM or NVRAM) for dirty blocks in buffer cache • Either in software or hardware – In hardware case, done by disk controller; file system may • Make sure that data survives in long term not even know that there is more than one disk in use – Need to replicate! More than one copy of data! – Important element: independence of failure » Could put copies on one disk, but if disk head fails… • Initially, five levels of RAID (more now) » Could put copies on different disks, but if server fails… » Could put copies on different servers, but if building is struck by lightning…. » Could put copies on servers in different continents… 11/6/17 CS162 © UCB Fall 2017 Lec 20.3 11/6/17 CS162 © UCB Fall 2017 Lec 20.4 Page 1

2 . RAID 1: Disk Mirroring/Shadowing RAID 5+: High I/O Rate Parity • Data stripped across Stripe Unit multiple disks D0 D1 D2 D3 P0 – Successive blocks recovery stored on successive Increasing group (non-parity) disks D4 D5 D6 P1 D7 Logical – Increased bandwidth Disk • Each disk is fully duplicated onto its “shadow” over single disk Addresses D8 D9 P2 D10 D11 – For high I/O rate, high availability environments • Parity block (in green) – Most expensive solution: 100% capacity overhead constructed by XORing D12 P3 D13 D14 D15 • Bandwidth sacrificed on write: data bocks in stripe – Logical write = two physical writes – P0=D0ÅD1ÅD2ÅD3 P4 D16 D17 D18 D19 – Highest bandwidth when disk heads and rotation fully – Can destroy any one synchronized (hard to do exactly) disk and still D20 D21 D22 D23 P5 • Reads may be optimized reconstruct data – Can have two independent reads to same data – Suppose Disk 3 fails, then can reconstruct: Disk 1 Disk 2 Disk 3 Disk 4 Disk 5 • Recovery: D2=D0ÅD1ÅD3ÅP0 – Disk failure Þ replace disk and copy data to new disk • Can spread information widely across internet for durability – Hot Spare: idle disk already attached to system to be used for – Overview now, more later in semester immediate replacement 11/6/17 CS162 © UCB Fall 2017 Lec 20.5 11/6/17 CS162 © UCB Fall 2017 Lec 20.6 Higher Durability/Reliability through Geographic Replication File System Reliability • Highly durable – hard to destroy all copies • What can happen if disk loses power or software crashes? • Highly available for reads – read any copy – Some operations in progress may complete • Low availability for writes – Some operations in progress may be lost – Can’t write if any one replica is not up – Overwrite of a block may only partially complete – Or – need relaxed consistency model • Reliability? – availability, security, durability, fault-tolerance • Having RAID doesn’t necessarily protect against all such failures Replica #1 – No protection against writing bad state – What if one disk of RAID group not written? Replica #2 • File system needs durability (as a minimum!) – Data previously stored can be retrieved (maybe after some recovery step), regardless of failure Replica #n 11/6/17 CS162 © UCB Fall 2017 Lec 20.7 11/6/17 CS162 © UCB Fall 2017 Lec 20.8 Page 2

3 . Storage Reliability Problem Threats to Reliability • Single logical file operation can involve updates to • Interrupted Operation multiple physical disk blocks – Crash or power failure in the middle of a series of related – inode, indirect block, data block, bitmap, … updates may leave stored data in an inconsistent state – With sector remapping, single update to physical disk block – Example: Transfer funds from one bank account to another can require multiple (even lower level) updates to sectors – What if transfer is interrupted after withdrawal and before deposit? • At a physical level, operations complete one at a time – Want concurrent operations for performance • Loss of stored data • How do we guarantee consistency regardless of when – Failure of non-volatile storage media may cause previously stored data to disappear or be corrupted crash occurs? 11/6/17 CS162 © UCB Fall 2017 Lec 20.9 11/6/17 CS162 © UCB Fall 2017 Lec 20.10 Reliability Approach #1: Careful Ordering FFS: Create a File • Sequence operations in a specific order Normal operation: Recovery: – Careful design to allow sequence to be interrupted safely • Allocate data block • Scan inode table • Write data block • If any unlinked files (not in • Post-crash recovery • Allocate inode any directory), delete or – Read data structures to see if there were any operations in put in lost & found dir • Write inode block progress • Compare free block – Clean up/finish as needed • Update bitmap of free bitmap against inode trees blocks and inodes • Scan directories for missing • Update directory with update/access times • Approach taken by file name ® inode – FAT and FFS (fsck) to protect filesystem structure/metadata number – Many app-level recovery schemes (e.g., Word, emacs autosaves) • Update modify time for Time proportional to disk size directory 11/6/17 CS162 © UCB Fall 2017 Lec 20.11 11/6/17 CS162 © UCB Fall 2017 Lec 20.12 Page 3

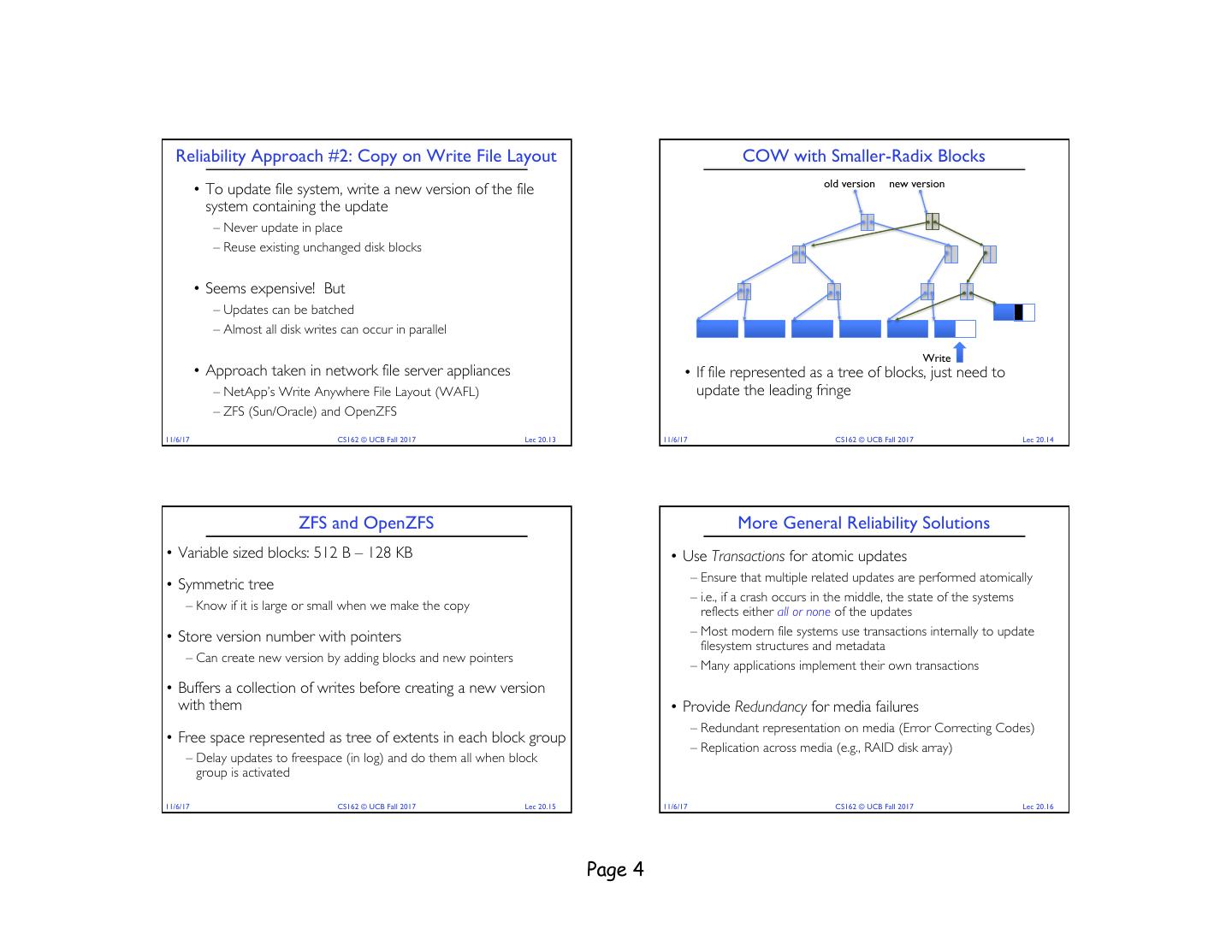

4 . Reliability Approach #2: Copy on Write File Layout COW with Smaller-Radix Blocks old version new version • To update file system, write a new version of the file system containing the update – Never update in place – Reuse existing unchanged disk blocks • Seems expensive! But – Updates can be batched – Almost all disk writes can occur in parallel Write • Approach taken in network file server appliances • If file represented as a tree of blocks, just need to – NetApp’s Write Anywhere File Layout (WAFL) update the leading fringe – ZFS (Sun/Oracle) and OpenZFS 11/6/17 CS162 © UCB Fall 2017 Lec 20.13 11/6/17 CS162 © UCB Fall 2017 Lec 20.14 ZFS and OpenZFS More General Reliability Solutions • Variable sized blocks: 512 B – 128 KB • Use Transactions for atomic updates – Ensure that multiple related updates are performed atomically • Symmetric tree – i.e., if a crash occurs in the middle, the state of the systems – Know if it is large or small when we make the copy reflects either all or none of the updates • Store version number with pointers – Most modern file systems use transactions internally to update filesystem structures and metadata – Can create new version by adding blocks and new pointers – Many applications implement their own transactions • Buffers a collection of writes before creating a new version with them • Provide Redundancy for media failures – Redundant representation on media (Error Correcting Codes) • Free space represented as tree of extents in each block group – Replication across media (e.g., RAID disk array) – Delay updates to freespace (in log) and do them all when block group is activated 11/6/17 CS162 © UCB Fall 2017 Lec 20.15 11/6/17 CS162 © UCB Fall 2017 Lec 20.16 Page 4

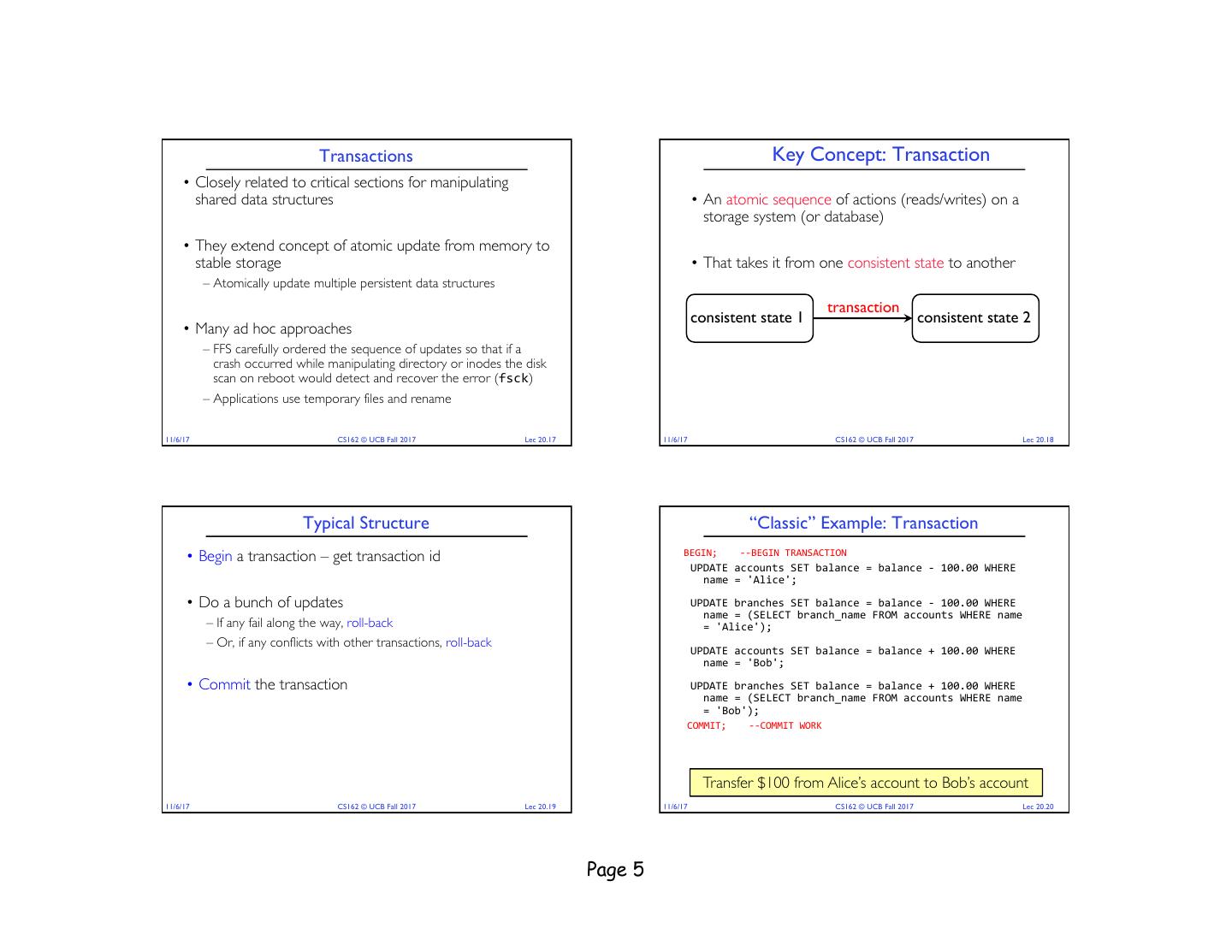

5 . Transactions Key Concept: Transaction • Closely related to critical sections for manipulating shared data structures • An atomic sequence of actions (reads/writes) on a storage system (or database) • They extend concept of atomic update from memory to stable storage • That takes it from one consistent state to another – Atomically update multiple persistent data structures transaction consistent state 1 consistent state 2 • Many ad hoc approaches – FFS carefully ordered the sequence of updates so that if a crash occurred while manipulating directory or inodes the disk scan on reboot would detect and recover the error (fsck) – Applications use temporary files and rename 11/6/17 CS162 © UCB Fall 2017 Lec 20.17 11/6/17 CS162 © UCB Fall 2017 Lec 20.18 Typical Structure “Classic” Example: Transaction • Begin a transaction – get transaction id BEGIN; --BEGIN TRANSACTION UPDATE accounts SET balance = balance - 100.00 WHERE name = 'Alice'; • Do a bunch of updates UPDATE branches SET balance = balance - 100.00 WHERE name = (SELECT branch_name FROM accounts WHERE name – If any fail along the way, roll-back = 'Alice'); – Or, if any conflicts with other transactions, roll-back UPDATE accounts SET balance = balance + 100.00 WHERE name = 'Bob'; • Commit the transaction UPDATE branches SET balance = balance + 100.00 WHERE name = (SELECT branch_name FROM accounts WHERE name = 'Bob'); COMMIT; --COMMIT WORK Transfer $100 from Alice’s account to Bob’s account 11/6/17 CS162 © UCB Fall 2017 Lec 20.19 11/6/17 CS162 © UCB Fall 2017 Lec 20.20 Page 5

6 . The ACID properties of Transactions Administrivia • Ion out on Wednesday • Atomicity: all actions in the transaction happen, or none – Washington, DC, for an important NSF presentation happen – (I expect this will the last time I’m out by the end of semester) • Consistency: transactions maintain data integrity, e.g., • Wednesday lecture will be given by Aurojit Panda – Balance cannot be negative – PhD (Networking), 2017 – Cannot reschedule meeting on February 30 – Assistant Professor, NYU • Isolation: execution of one transaction is isolated from that of all others; no problems from concurrency • Durability: if a transaction commits, its effects persist despite crashes 11/6/17 CS162 © UCB Fall 2017 Lec 20.21 11/6/17 CS162 © UCB Fall 2017 Lec 20.22 Transactional File Systems (1/2) • Better reliability through use of log – All changes are treated as transactions – A transaction is committed once it is written to the log » Data forced to disk for reliability (improve perf. w/ NVRAM) – File system may not be updated immediately, data preserved in the log • Difference between “Log Structured” and “Journaling” – In a Log Structured filesystem, data stays in log form Break – In a Journaling filesystem, Log used for recovery 11/6/17 CS162 © UCB Fall 2017 Lec 20.23 11/6/17 CS162 © UCB Fall 2017 Lec 20.24 Page 6

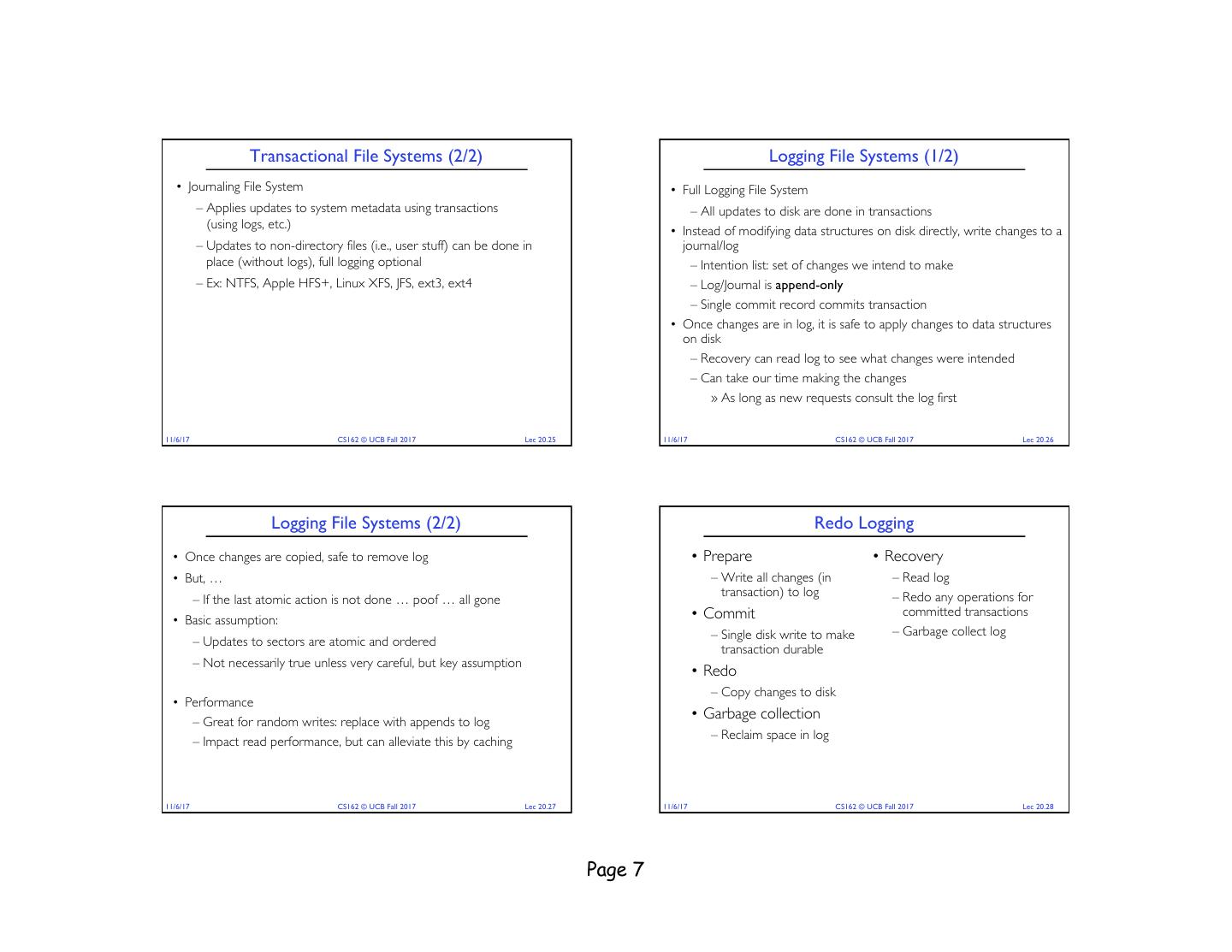

7 . Transactional File Systems (2/2) Logging File Systems (1/2) • Journaling File System • Full Logging File System – Applies updates to system metadata using transactions – All updates to disk are done in transactions (using logs, etc.) • Instead of modifying data structures on disk directly, write changes to a – Updates to non-directory files (i.e., user stuff) can be done in journal/log place (without logs), full logging optional – Intention list: set of changes we intend to make – Ex: NTFS, Apple HFS+, Linux XFS, JFS, ext3, ext4 – Log/Journal is append-only – Single commit record commits transaction • Once changes are in log, it is safe to apply changes to data structures on disk – Recovery can read log to see what changes were intended – Can take our time making the changes » As long as new requests consult the log first 11/6/17 CS162 © UCB Fall 2017 Lec 20.25 11/6/17 CS162 © UCB Fall 2017 Lec 20.26 Logging File Systems (2/2) Redo Logging • Once changes are copied, safe to remove log • Prepare • Recovery • But, … – Write all changes (in – Read log transaction) to log – Redo any operations for – If the last atomic action is not done … poof … all gone • Commit committed transactions • Basic assumption: – Single disk write to make – Garbage collect log – Updates to sectors are atomic and ordered transaction durable – Not necessarily true unless very careful, but key assumption • Redo – Copy changes to disk • Performance – Great for random writes: replace with appends to log • Garbage collection – Reclaim space in log – Impact read performance, but can alleviate this by caching 11/6/17 CS162 © UCB Fall 2017 Lec 20.27 11/6/17 CS162 © UCB Fall 2017 Lec 20.28 Page 7

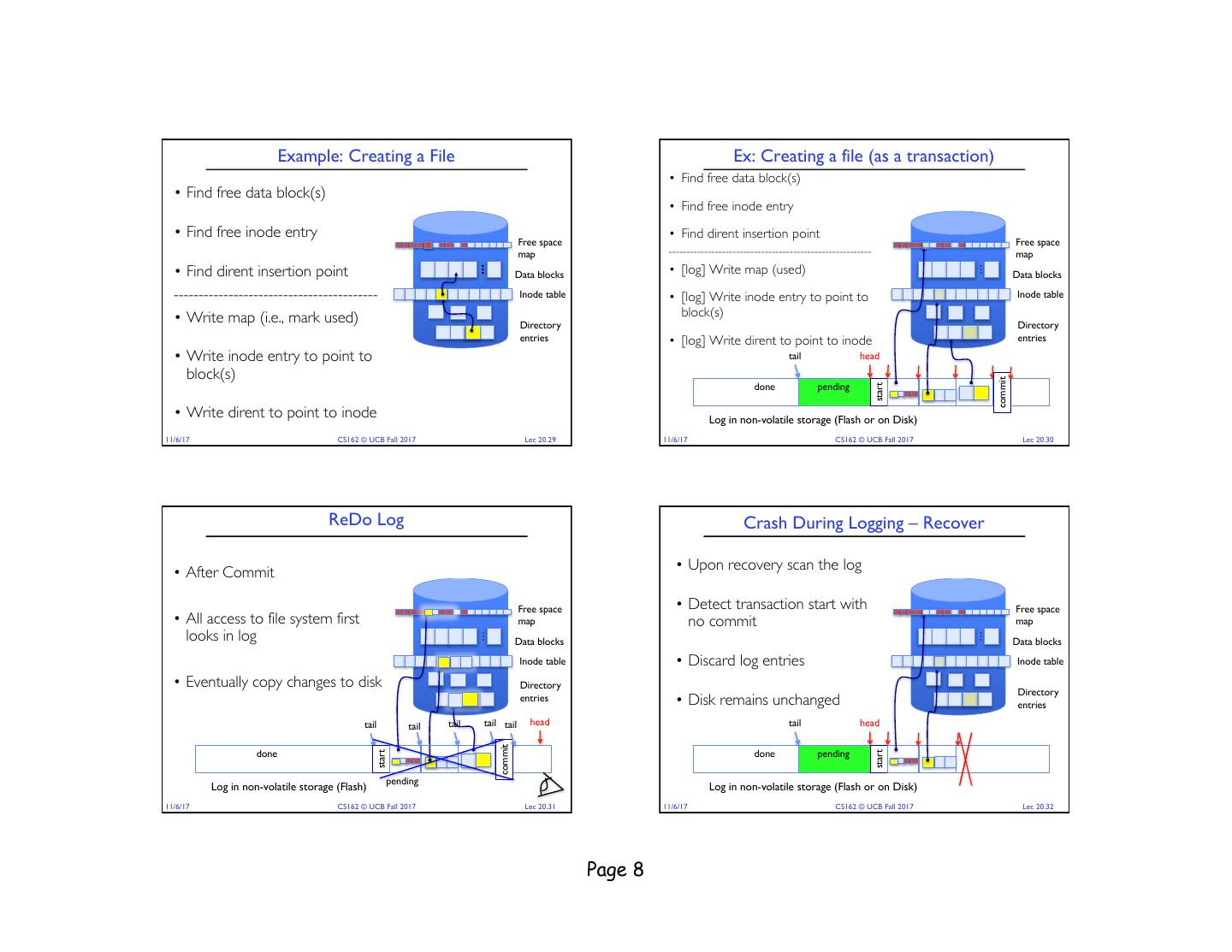

8 . Example: Creating a File Ex: Creating a file (as a transaction) • Find free data block(s) • Find free data block(s) • Find free inode entry • Find free inode entry • Find dirent insertion point Free space Free space map --------------------------------------------------------- map • Find dirent insertion point • [log] Write map (used) … … Data blocks Data blocks ----------------------------------------- Inode table • [log] Write inode entry to point to Inode table • Write map (i.e., mark used) block(s) Directory Directory entries • [log] Write dirent to point to inode entries • Write inode entry to point to tail head block(s) commit done pending start • Write dirent to point to inode Log in non-volatile storage (Flash or on Disk) 11/6/17 CS162 © UCB Fall 2017 Lec 20.29 11/6/17 CS162 © UCB Fall 2017 Lec 20.30 ReDo Log Crash During Logging – Recover • After Commit • Upon recovery scan the log Free space • Detect transaction start with Free space • All access to file system first map no commit map looks in log … … Data blocks Data blocks Inode table • Discard log entries Inode table • Eventually copy changes to disk Directory Directory entries • Disk remains unchanged entries tail tail tail tail tail head tail head commit done done pending start start pending Log in non-volatile storage (Flash) Log in non-volatile storage (Flash or on Disk) 11/6/17 CS162 © UCB Fall 2017 Lec 20.31 11/6/17 CS162 © UCB Fall 2017 Lec 20.32 Page 8

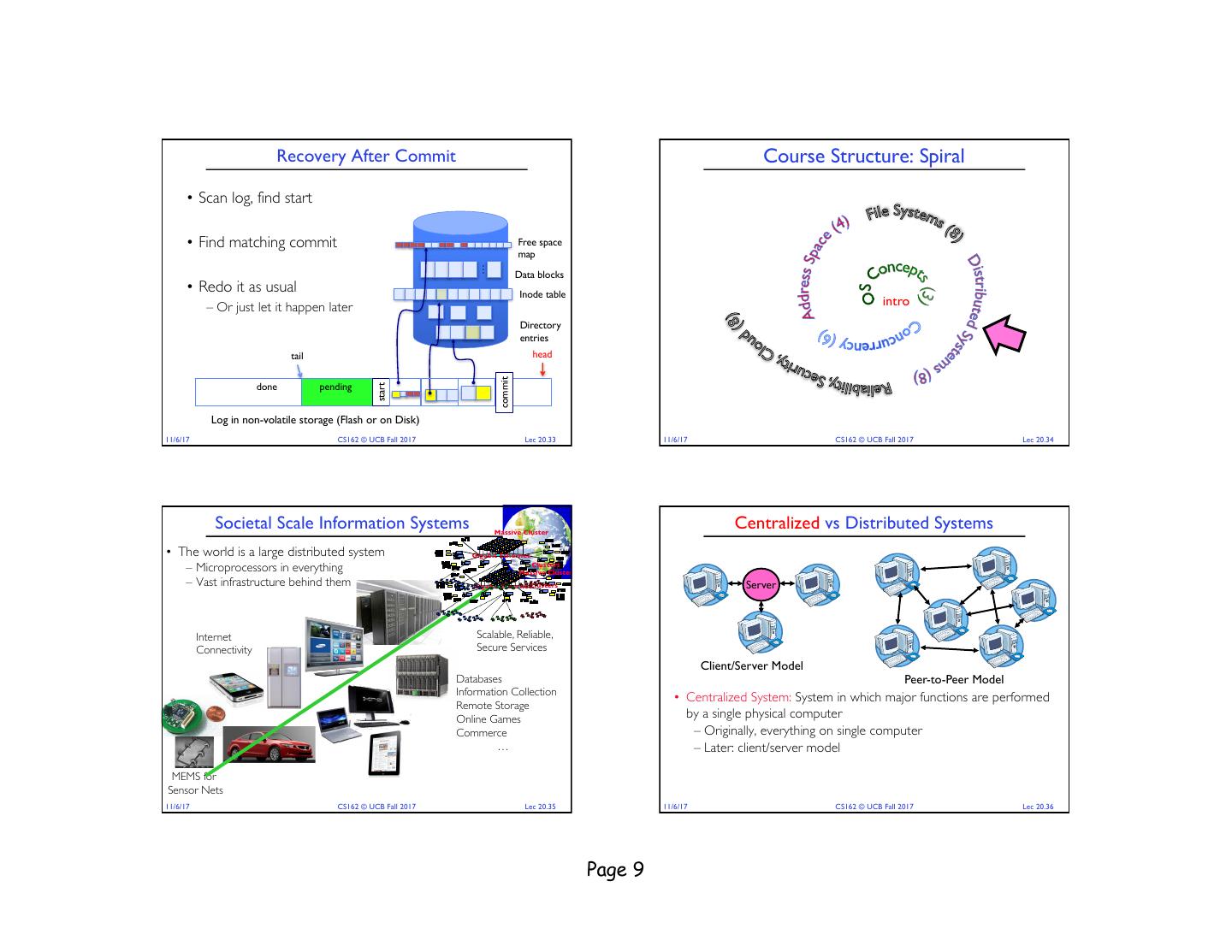

9 . Recovery After Commit Course Structure: Spiral • Scan log, find start • Find matching commit Free space map … Data blocks • Redo it as usual Inode table – Or just let it happen later intro Directory entries tail head commit done pending start Log in non-volatile storage (Flash or on Disk) 11/6/17 CS162 © UCB Fall 2017 Lec 20.33 11/6/17 CS162 © UCB Fall 2017 Lec 20.34 Societal Scale Information Systems Massive Cluster Centralized vs Distributed Systems • The world is a large distributed system Gigabit Ethernet – Microprocessors in everything Clusters Massive Cluster – Vast infrastructure behind them Gigabit EthernetClusters Server Internet Scalable, Reliable, Connectivity Secure Services Client/Server Model Databases Peer-to-Peer Model Information Collection • Centralized System: System in which major functions are performed Remote Storage Online Games by a single physical computer Commerce – Originally, everything on single computer … – Later: client/server model MEMS for Sensor Nets 11/6/17 CS162 © UCB Fall 2017 Lec 20.35 11/6/17 CS162 © UCB Fall 2017 Lec 20.36 Page 9

10 . Centralized vs Distributed Systems Distributed Systems: Motivation/Issues/Promise • Why do we want distributed systems? – Cheaper and easier to build lots of simple computers Server – Easier to add power incrementally – Users can have complete control over some components – Collaboration: much easier for users to collaborate through network resources (such as network file systems) Client/Server Model Peer-to-Peer Model • The promise of distributed systems: • Distributed System: physically separate computers working together – Higher availability: one machine goes down, use another on some task – Better durability: store data in multiple locations – Early model: multiple servers working together – More security: each piece easier to make secure » Probably in the same room or building » Often called a “cluster” – Later models: peer-to-peer/wide-spread collaboration 11/6/17 CS162 © UCB Fall 2017 Lec 20.37 11/6/17 CS162 © UCB Fall 2017 Lec 20.38 Distributed Systems: Reality Distributed Systems: Goals/Requirements • Transparency: the ability of the system to mask its • Reality has been disappointing complexity behind a simple interface – Worse availability: depend on every machine being up • Possible transparencies: » Lamport: “a distributed system is one where I can’t do work – Location: Can’t tell where resources are located because some machine I’ve never heard of isn’t working!” – Migration: Resources may move without the user knowing – Worse reliability: can lose data if any machine crashes – Replication: Can’t tell how many copies of resource exist – Worse security: anyone in world can break into system – Concurrency: Can’t tell how many users there are – Parallelism: System may speed up large jobs by splitting them into • Coordination is more difficult smaller pieces – Must coordinate multiple copies of shared state information (using – Fault Tolerance: System may hide various things that go wrong only a network) • Transparency and collaboration require some way for – What would be easy in a centralized system becomes a lot more different processors to communicate with one another difficult 11/6/17 CS162 © UCB Fall 2017 Lec 20.39 11/6/17 CS162 © UCB Fall 2017 Lec 20.40 Page 10

11 . Summary • RAID: Redundant Arrays of Inexpensive Disks – RAID1: mirroring, RAID5: Parity block • Use of Log to improve Reliability – Journaling file systems such as ext3, NTFS • Transactions: ACID semantics – Atomicity – Consistency – Isolation – Durability 11/6/17 CS162 © UCB Fall 2017 Lec 20.41 Page 11