- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

并发(线程和进程)

展开查看详情

1 . Recall: Use of Threads • Version of program with Threads (loose syntax): CS162 Operating Systems and main() { Systems Programming ThreadFork(ComputePI, ̎pi.txt̎)); Lecture 6 ThreadFork(PrintClassList, ̎classlist.txt̎)); } Concurrency (Continued), Thread and Processes • What does ThreadFork() do? – Start independent thread running given procedure • What is the behavior here? September 13, 2017 – Now, you would actually see the class list Prof. Ion Stoica – This should behave as if there are two separate CPUs http://cs162.eecs.Berkeley.edu CPU1 CPU2 CPU1 CPU2 CPU1 CPU2 Time 9/13/17 CS162 ©UCB Fall 2017 Lec 6.2 Recall: Memory Footprint: Two-Threads Actual Thread Operations • thread_fork(func, args) – Create a new thread to run func(args) • If we stopped this program and examined it with a debugger, we would see – Pintos: thread_create – Two sets of CPU registers Stack 1 • thread_yield() – Two sets of Stacks – Relinquish processor voluntarily – Pintos: thread_yield • Questions: Stack 2 • thread_join(thread) Address Space – How do we position stacks relative to – In parent, wait for forked thread to exit, then return each other? – Pintos: thread_join – What maximum size should we choose • thread_exit() for the stacks? Heap – Quit thread and clean up, wake up joiner if any – What happens if threads violate this? Global Data – Pintos: thread_exit – How might you catch violations? Code • pThreads: POSIX standard for thread programming [POSIX.1c, Threads extensions (IEEE Std 1003.1c-1995)] 9/13/17 CS162 ©UCB Fall 2017 Lec 6.3 9/13/17 CS162 ©UCB Fall 2017 Lec 6.4 Page 1

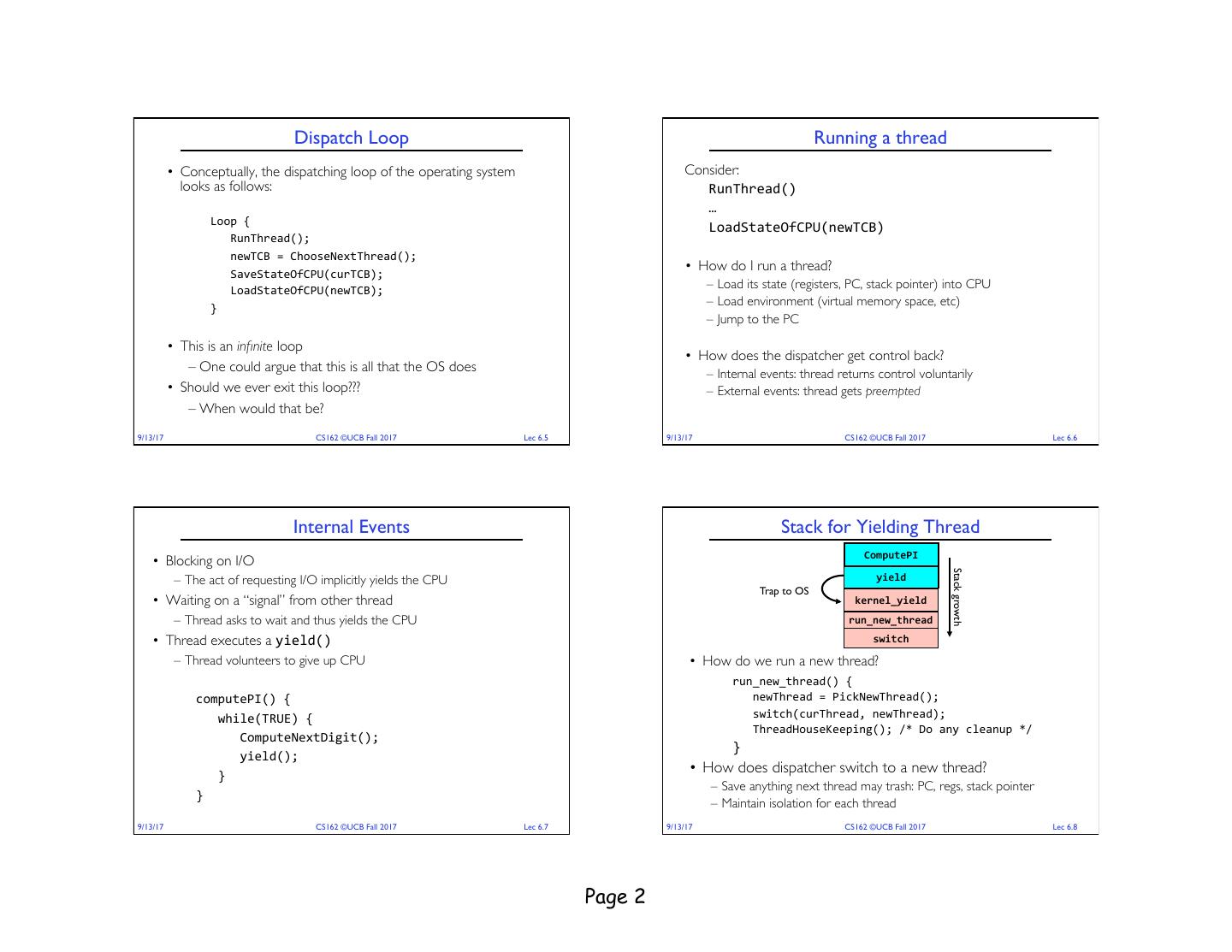

2 . Dispatch Loop Running a thread • Conceptually, the dispatching loop of the operating system Consider: looks as follows: RunThread() … Loop { LoadStateOfCPU(newTCB) RunThread(); newTCB = ChooseNextThread(); • How do I run a thread? SaveStateOfCPU(curTCB); LoadStateOfCPU(newTCB); – Load its state (registers, PC, stack pointer) into CPU } – Load environment (virtual memory space, etc) – Jump to the PC • This is an infinite loop • How does the dispatcher get control back? – One could argue that this is all that the OS does – Internal events: thread returns control voluntarily • Should we ever exit this loop??? – External events: thread gets preempted – When would that be? 9/13/17 CS162 ©UCB Fall 2017 Lec 6.5 9/13/17 CS162 ©UCB Fall 2017 Lec 6.6 Internal Events Stack for Yielding Thread ComputePI • Blocking on I/O Stack growth – The act of requesting I/O implicitly yields the CPU yield Trap to OS • Waiting on a “signal” from other thread kernel_yield – Thread asks to wait and thus yields the CPU run_new_thread • Thread executes a yield() switch – Thread volunteers to give up CPU • How do we run a new thread? run_new_thread() { computePI() { newThread = PickNewThread(); while(TRUE) { switch(curThread, newThread); ThreadHouseKeeping(); /* Do any cleanup */ ComputeNextDigit(); } yield(); } • How does dispatcher switch to a new thread? – Save anything next thread may trash: PC, regs, stack pointer } – Maintain isolation for each thread 9/13/17 CS162 ©UCB Fall 2017 Lec 6.7 9/13/17 CS162 ©UCB Fall 2017 Lec 6.8 Page 2

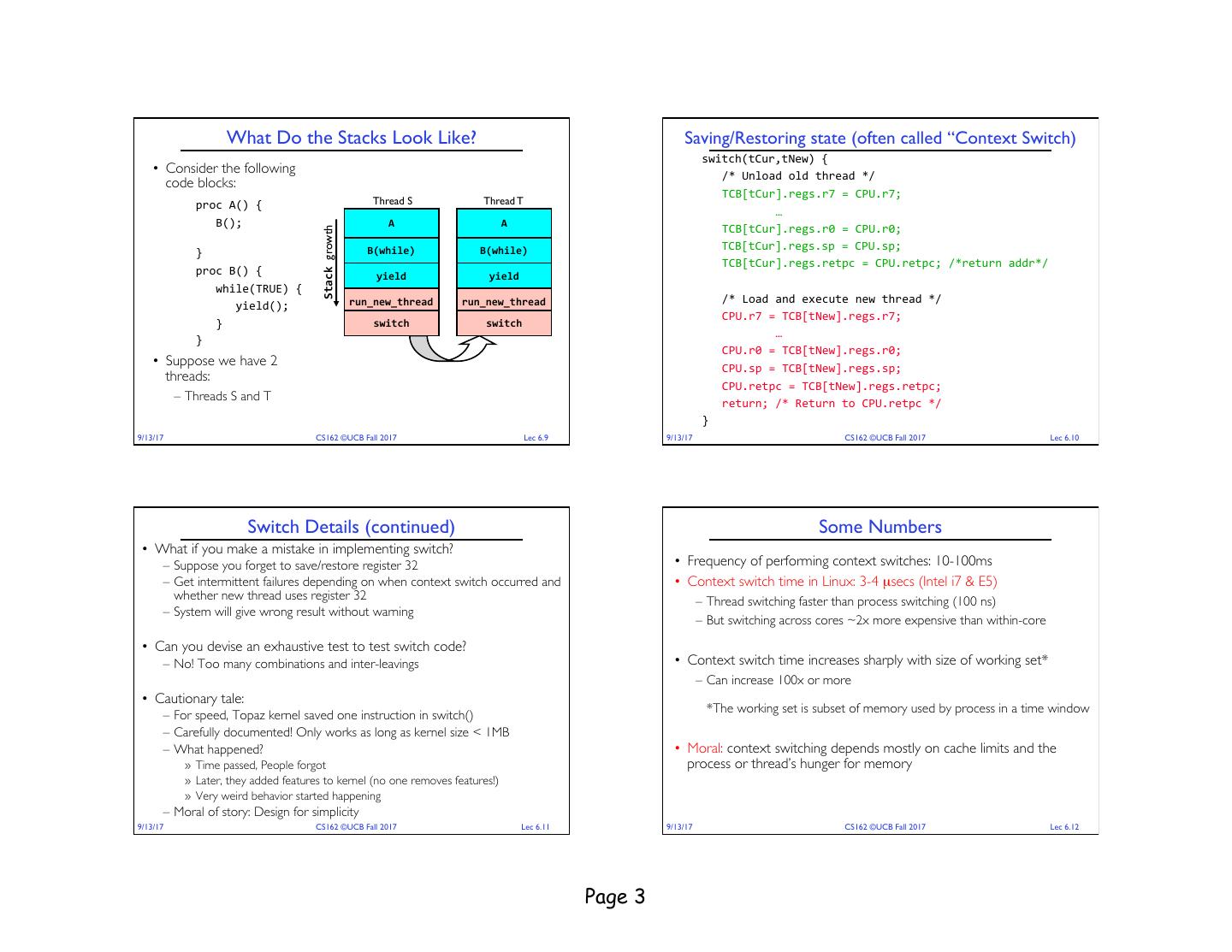

3 . What Do the Stacks Look Like? Saving/Restoring state (often called “Context Switch) switch(tCur,tNew) { • Consider the following /* Unload old thread */ code blocks: TCB[tCur].regs.r7 = CPU.r7; proc A() { Thread S Thread T … B(); A A TCB[tCur].regs.r0 = CPU.r0; Stack growth B(while) B(while) TCB[tCur].regs.sp = CPU.sp; } TCB[tCur].regs.retpc = CPU.retpc; /*return addr*/ proc B() { yield yield while(TRUE) { run_new_thread run_new_thread /* Load and execute new thread */ yield(); CPU.r7 = TCB[tNew].regs.r7; } switch switch … } CPU.r0 = TCB[tNew].regs.r0; • Suppose we have 2 CPU.sp = TCB[tNew].regs.sp; threads: CPU.retpc = TCB[tNew].regs.retpc; – Threads S and T return; /* Return to CPU.retpc */ } 9/13/17 CS162 ©UCB Fall 2017 Lec 6.9 9/13/17 CS162 ©UCB Fall 2017 Lec 6.10 Switch Details (continued) Some Numbers • What if you make a mistake in implementing switch? – Suppose you forget to save/restore register 32 • Frequency of performing context switches: 10-100ms – Get intermittent failures depending on when context switch occurred and • Context switch time in Linux: 3-4 µsecs (Intel i7 & E5) whether new thread uses register 32 – Thread switching faster than process switching (100 ns) – System will give wrong result without warning – But switching across cores ~2x more expensive than within-core • Can you devise an exhaustive test to test switch code? – No! Too many combinations and inter-leavings • Context switch time increases sharply with size of working set* – Can increase 100x or more • Cautionary tale: *The working set is subset of memory used by process in a time window – For speed, Topaz kernel saved one instruction in switch() – Carefully documented! Only works as long as kernel size < 1MB – What happened? • Moral: context switching depends mostly on cache limits and the » Time passed, People forgot process or thread’s hunger for memory » Later, they added features to kernel (no one removes features!) » Very weird behavior started happening – Moral of story: Design for simplicity 9/13/17 CS162 ©UCB Fall 2017 Lec 6.11 9/13/17 CS162 ©UCB Fall 2017 Lec 6.12 Page 3

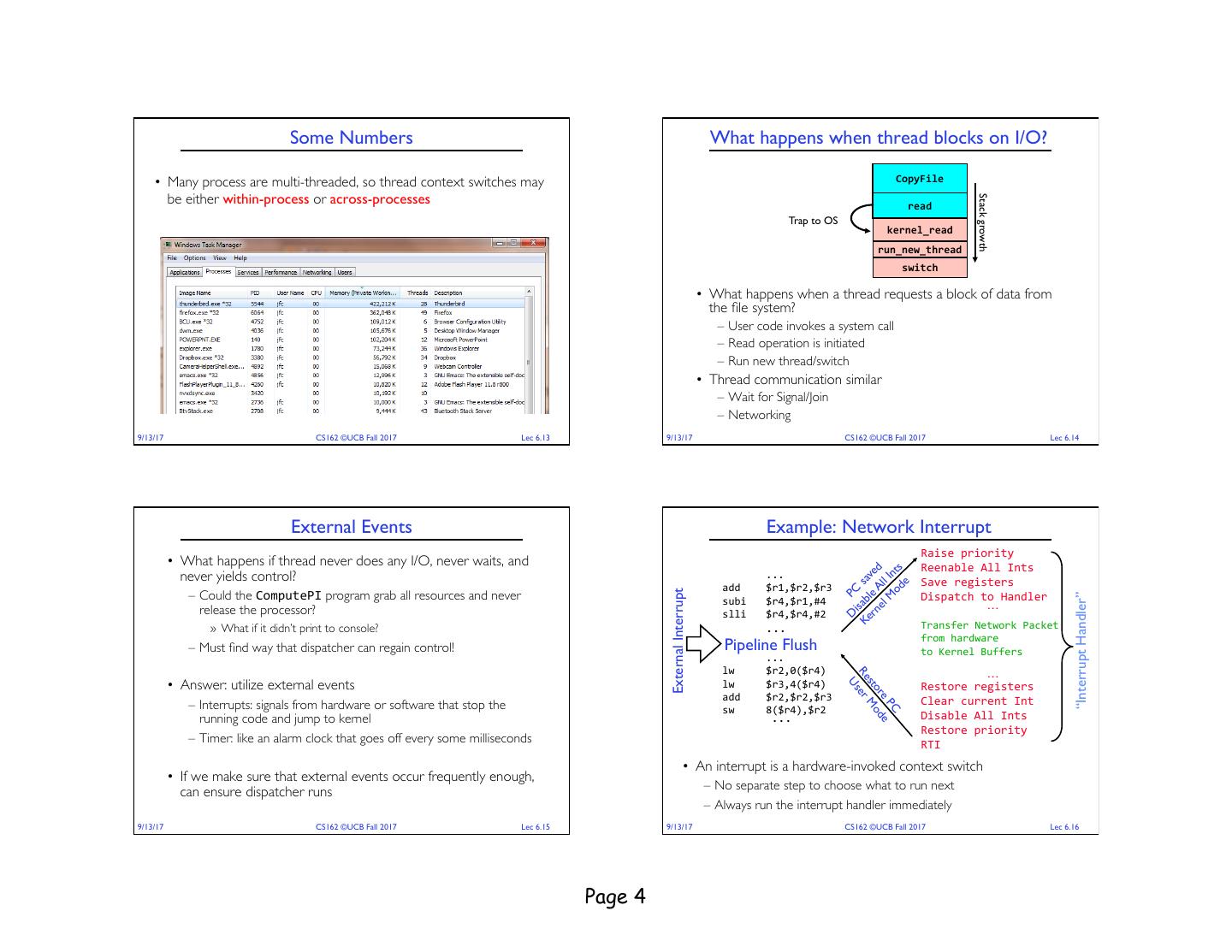

4 . Some Numbers What happens when thread blocks on I/O? • Many process are multi-threaded, so thread context switches may CopyFile be either within-process or across-processes Stack growth read Trap to OS kernel_read run_new_thread switch • What happens when a thread requests a block of data from the file system? – User code invokes a system call – Read operation is initiated – Run new thread/switch • Thread communication similar – Wait for Signal/Join – Networking 9/13/17 CS162 ©UCB Fall 2017 Lec 6.13 9/13/17 CS162 ©UCB Fall 2017 Lec 6.14 External Events Example: Network Interrupt Raise priority • What happens if thread never does any I/O, never waits, and d s ve nt Reenable All Ints never yields control? ... sa All I de Save registers PC ble Mo add $r1,$r2,$r3 External Interrupt – Could the ComputePI program grab all resources and never Dispatch to Handler “Interrupt Handler” l isa e subi $r4,$r1,#4 … release the processor? slli $r4,$r4,#2 D ern K Transfer Network Packet » What if it didn’t print to console? ... from hardware – Must find way that dispatcher can regain control! Pipeline Flush to Kernel Buffers ... lw $r2,0($r4) … Re ser • Answer: utilize external events lw $r3,4($r4) sto Mo U Restore registers re de add $r2,$r2,$r3 Clear current Int – Interrupts: signals from hardware or software that stop the PC sw 8($r4),$r2 running code and jump to kernel ... Disable All Ints Restore priority – Timer: like an alarm clock that goes off every some milliseconds RTI • An interrupt is a hardware-invoked context switch • If we make sure that external events occur frequently enough, can ensure dispatcher runs – No separate step to choose what to run next – Always run the interrupt handler immediately 9/13/17 CS162 ©UCB Fall 2017 Lec 6.15 9/13/17 CS162 ©UCB Fall 2017 Lec 6.16 Page 4

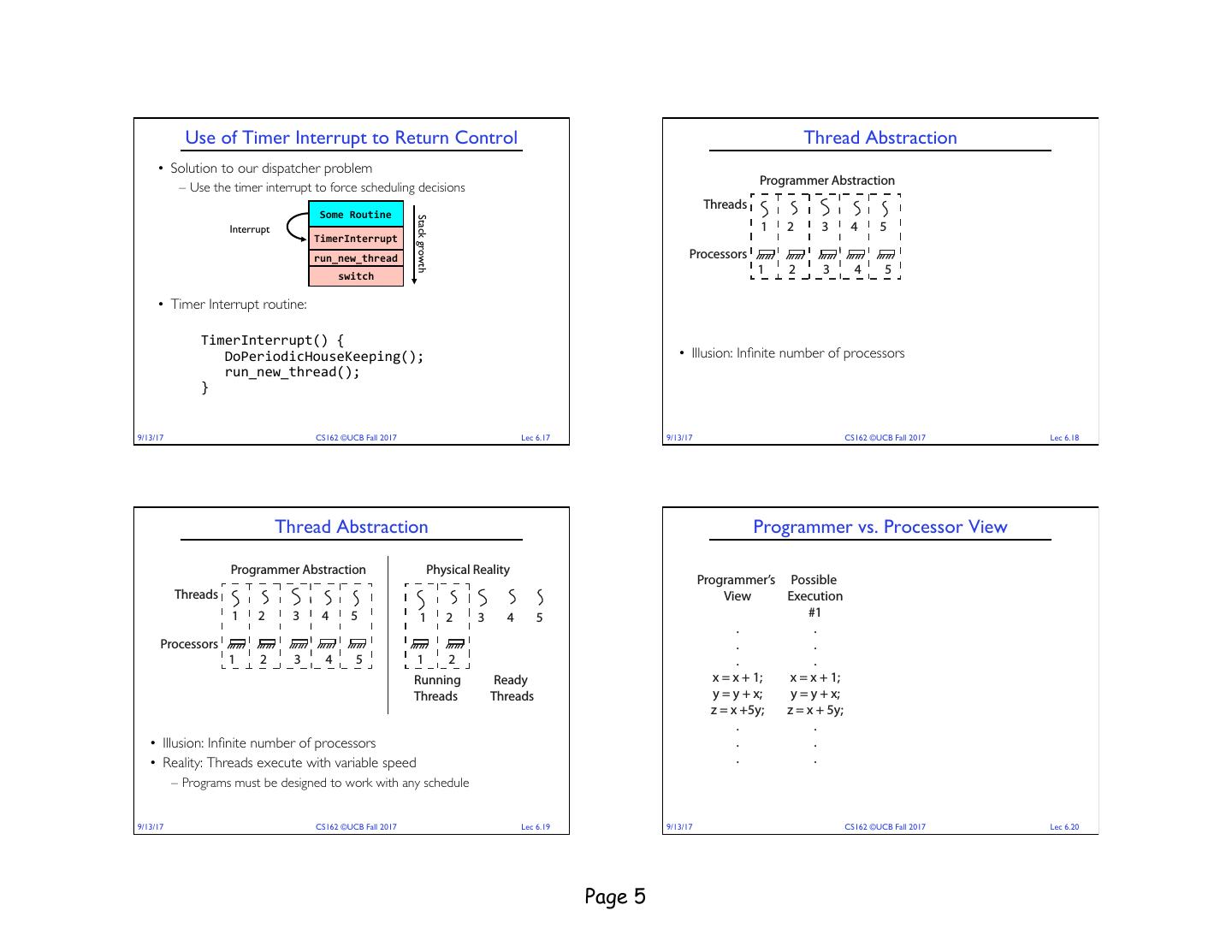

5 . Use of Timer Interrupt to Return Control Thread Abstraction • Solution to our dispatcher problem – Use the timer interrupt to force scheduling decisions Programmer Abstraction Physical Reality Threads Some Routine Stack growth Interrupt 1 2 3 4 5 1 2 3 4 5 TimerInterrupt run_new_thread Processors switch 1 2 3 4 5 1 2 Running Ready • Timer Interrupt routine: Threads Threads TimerInterrupt() { DoPeriodicHouseKeeping(); • Illusion: Infinite number of processors run_new_thread(); } 9/13/17 CS162 ©UCB Fall 2017 Lec 6.17 9/13/17 CS162 ©UCB Fall 2017 Lec 6.18 Thread Abstraction Programmer vs. Processor View Programmer Abstraction Physical Reality Programmer’s Possible Possible Possible Threads View Execution Execution Execution 1 2 3 4 5 1 2 3 4 5 #1 #2 #3 . . . . Processors . . . . 1 2 3 4 5 1 2 . . . . Running Ready x = x + 1; x = x + 1; x=x+1 x=x+1 Threads Threads y = y + x; y = y + x; .............. y=y+x z = x +5y; z = x + 5y; thread is suspended ............... . . other thread(s) run thread is suspended • Illusion: Infinite number of processors . . thread is resumed other thread(s) run • Reality: Threads execute with variable speed . . ............... thread is resumed y=y+x ................ – Programs must be designed to work with any schedule z = x + 5y z = x + 5y 9/13/17 CS162 ©UCB Fall 2017 Lec 6.19 9/13/17 CS162 ©UCB Fall 2017 Lec 6.20 Page 5

6 . Programmer vs. Processor View Programmer vs. Processor View Programmer’s Possible Possible Possible Programmer’s Possible Possible Possible View Execution Execution Execution View Execution Execution Execution #1 #2 #3 #1 #2 #3 . . . . . . . . . . . . . . . . . . . . . . . . x = x + 1; x = x + 1; x=x+1 x=x+1 x = x + 1; x = x + 1; x=x+1 x=x+1 y = y + x; y = y + x; .............. y=y+x y = y + x; y = y + x; .............. y=y+x z = x +5y; z = x + 5y; thread is suspended ............... z = x +5y; z = x + 5y; thread is suspended ............... . . other thread(s) run thread is suspended . . other thread(s) run thread is suspended . . thread is resumed other thread(s) run . . thread is resumed other thread(s) run . . ............... thread is resumed . . ............... thread is resumed y=y+x ................ y=y+x ................ z = x + 5y z = x + 5y z = x + 5y z = x + 5y 9/13/17 CS162 ©UCB Fall 2017 Lec 6.21 9/13/17 CS162 ©UCB Fall 2017 Lec 6.22 Possible Executions Thread Lifecycle Thread 1 Thread 1 Thread 2 Thread 2 Thread 3 Thread 3 a) One execution b) Another execution Scheduler Thread Creation Resumes Thread Thread Exit Init e.g., Ready Running e.g., Finished sthread_create() sthread_exit() Thread Yields/ Scheduler Thread 1 Event Occurs Suspends Thread e.g., sthread_yield() Thread Waits for Event Thread 2 e.g., other thread calls e.g., sthread_join() Thread 3 sthread_join() Waiting c) Another execution 9/13/17 CS162 ©UCB Fall 2017 Lec 6.23 9/13/17 CS162 ©UCB Fall 2017 Lec 6.24 Page 6

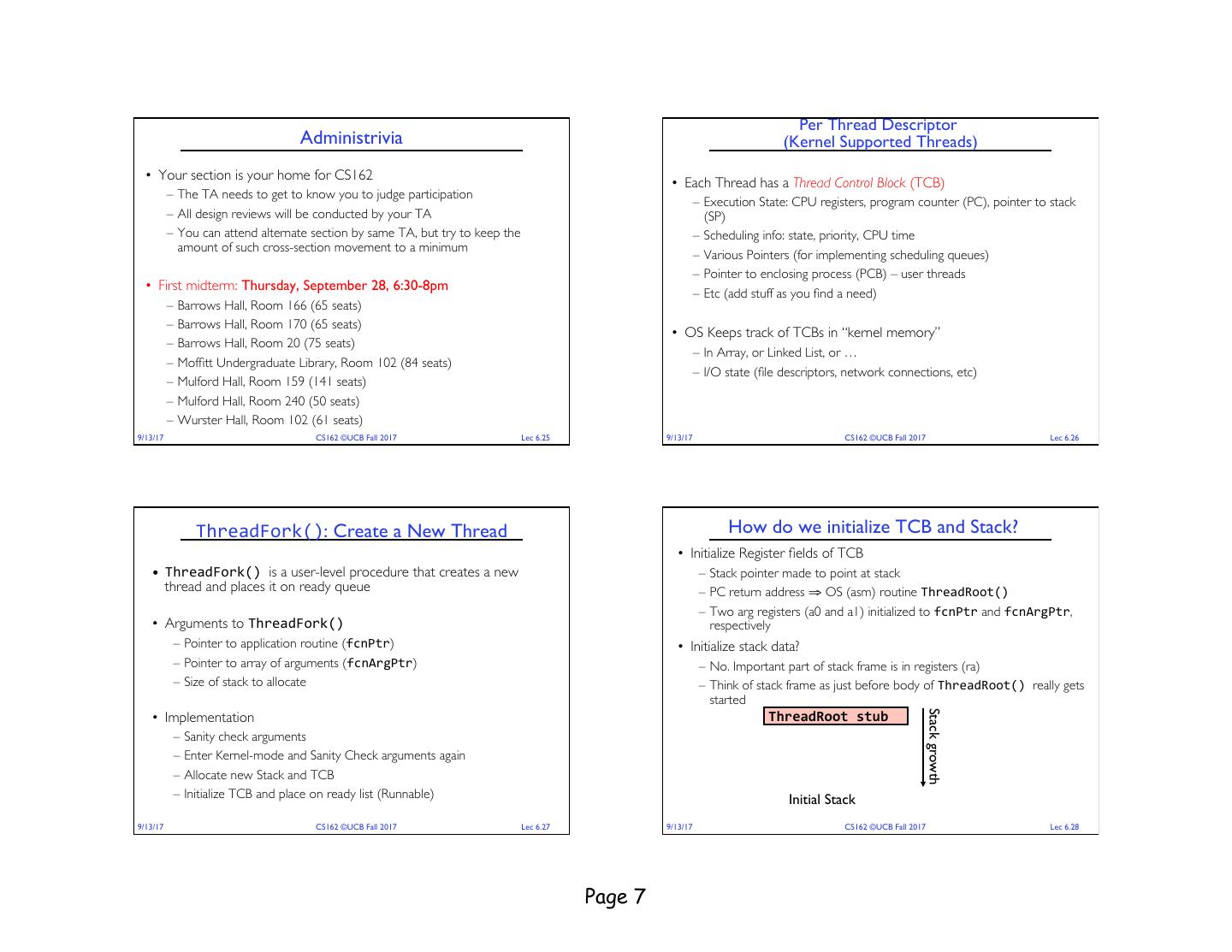

7 . Per Thread Descriptor Administrivia (Kernel Supported Threads) • Your section is your home for CS162 • Each Thread has a Thread Control Block (TCB) – The TA needs to get to know you to judge participation – Execution State: CPU registers, program counter (PC), pointer to stack – All design reviews will be conducted by your TA (SP) – You can attend alternate section by same TA, but try to keep the – Scheduling info: state, priority, CPU time amount of such cross-section movement to a minimum – Various Pointers (for implementing scheduling queues) – Pointer to enclosing process (PCB) – user threads • First midterm: Thursday, September 28, 6:30-8pm – Etc (add stuff as you find a need) – Barrows Hall, Room 166 (65 seats) – Barrows Hall, Room 170 (65 seats) • OS Keeps track of TCBs in “kernel memory” – Barrows Hall, Room 20 (75 seats) – In Array, or Linked List, or … – Moffitt Undergraduate Library, Room 102 (84 seats) – I/O state (file descriptors, network connections, etc) – Mulford Hall, Room 159 (141 seats) – Mulford Hall, Room 240 (50 seats) – Wurster Hall, Room 102 (61 seats) 9/13/17 CS162 ©UCB Fall 2017 Lec 6.25 9/13/17 CS162 ©UCB Fall 2017 Lec 6.26 ThreadFork(): Create a New Thread How do we initialize TCB and Stack? • Initialize Register fields of TCB • ThreadFork() is a user-level procedure that creates a new – Stack pointer made to point at stack thread and places it on ready queue – PC return address ⇒ OS (asm) routine ThreadRoot() – Two arg registers (a0 and a1) initialized to fcnPtr and fcnArgPtr, • Arguments to ThreadFork() respectively – Pointer to application routine (fcnPtr) • Initialize stack data? – Pointer to array of arguments (fcnArgPtr) – No. Important part of stack frame is in registers (ra) – Size of stack to allocate – Think of stack frame as just before body of ThreadRoot() really gets started Stack growth • Implementation ThreadRoot stub – Sanity check arguments – Enter Kernel-mode and Sanity Check arguments again – Allocate new Stack and TCB – Initialize TCB and place on ready list (Runnable) Initial Stack 9/13/17 CS162 ©UCB Fall 2017 Lec 6.27 9/13/17 CS162 ©UCB Fall 2017 Lec 6.28 Page 7

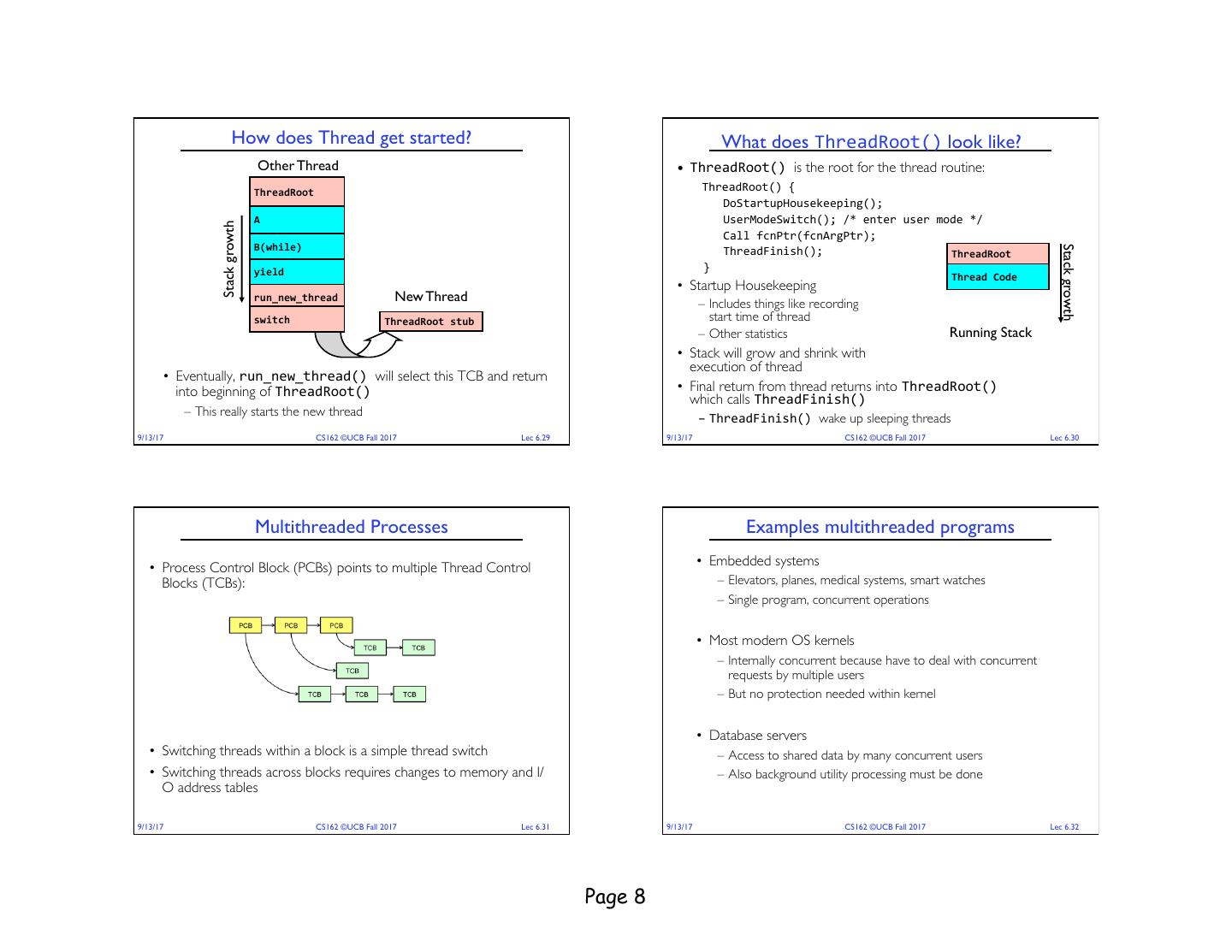

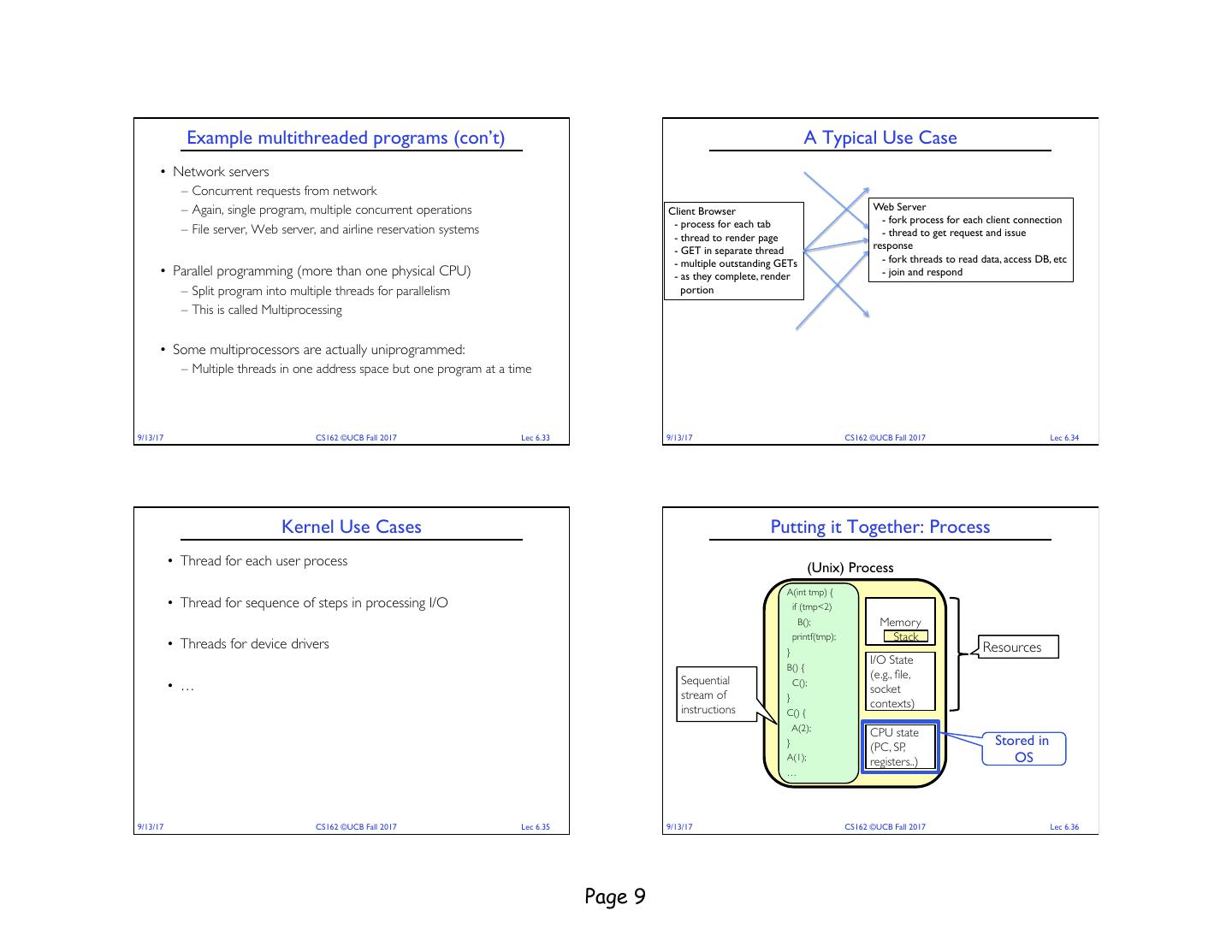

8 . How does Thread get started? What does ThreadRoot() look like? Other Thread • ThreadRoot() is the root for the thread routine: ThreadRoot ThreadRoot() { DoStartupHousekeeping(); A UserModeSwitch(); /* enter user mode */ Stack growth Call fcnPtr(fcnArgPtr); B(while) Stack growth ThreadFinish(); ThreadRoot yield } Thread Code • Startup Housekeeping run_new_thread New Thread – Includes things like recording switch ThreadRoot stub start time of thread – Other statistics Running Stack • Stack will grow and shrink with execution of thread • Eventually, run_new_thread() will select this TCB and return into beginning of ThreadRoot() • Final return from thread returns into ThreadRoot() which calls ThreadFinish() – This really starts the new thread – ThreadFinish() wake up sleeping threads 9/13/17 CS162 ©UCB Fall 2017 Lec 6.29 9/13/17 CS162 ©UCB Fall 2017 Lec 6.30 Multithreaded Processes Examples multithreaded programs • Embedded systems • Process Control Block (PCBs) points to multiple Thread Control Blocks (TCBs): – Elevators, planes, medical systems, smart watches – Single program, concurrent operations • Most modern OS kernels – Internally concurrent because have to deal with concurrent requests by multiple users – But no protection needed within kernel • Database servers • Switching threads within a block is a simple thread switch – Access to shared data by many concurrent users • Switching threads across blocks requires changes to memory and I/ – Also background utility processing must be done O address tables 9/13/17 CS162 ©UCB Fall 2017 Lec 6.31 9/13/17 CS162 ©UCB Fall 2017 Lec 6.32 Page 8

9 . Example multithreaded programs (con’t) A Typical Use Case • Network servers – Concurrent requests from network – Again, single program, multiple concurrent operations Client Browser Web Server - process for each tab - fork process for each client connection – File server, Web server, and airline reservation systems - thread to get request and issue - thread to render page - GET in separate thread response - multiple outstanding GETs - fork threads to read data, access DB, etc • Parallel programming (more than one physical CPU) - as they complete, render - join and respond – Split program into multiple threads for parallelism portion – This is called Multiprocessing • Some multiprocessors are actually uniprogrammed: – Multiple threads in one address space but one program at a time 9/13/17 CS162 ©UCB Fall 2017 Lec 6.33 9/13/17 CS162 ©UCB Fall 2017 Lec 6.34 Kernel Use Cases Putting it Together: Process • Thread for each user process (Unix) Process A(int tmp) { • Thread for sequence of steps in processing I/O if (tmp<2) B(); Memory printf(tmp); Stack • Threads for device drivers } Resources I/O State B() { Sequential (e.g., file, C(); • … socket stream of } instructions contexts) C() { A(2); CPU state } (PC, SP, Stored in A(1); registers..) OS … 9/13/17 CS162 ©UCB Fall 2017 Lec 6.35 9/13/17 CS162 ©UCB Fall 2017 Lec 6.36 Page 9

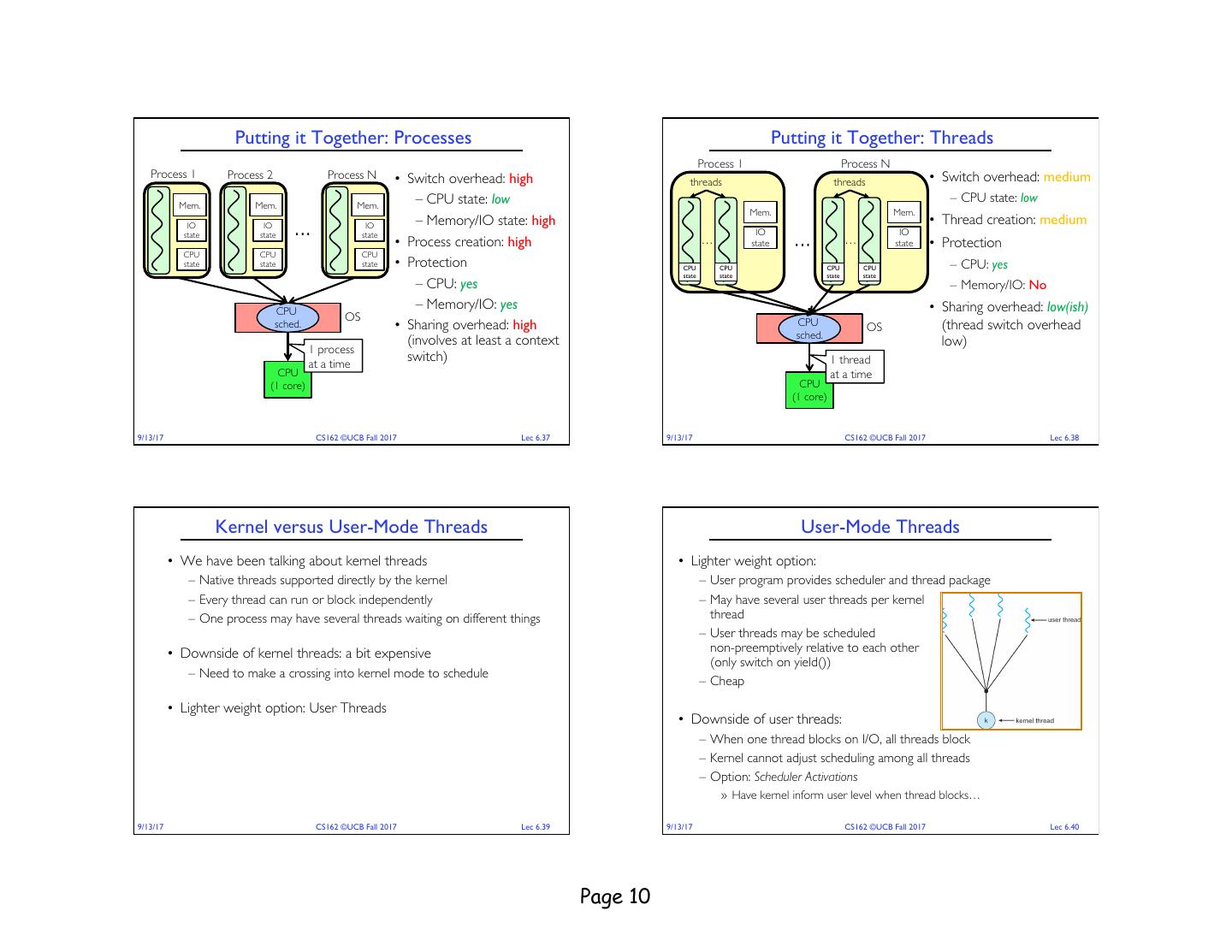

10 . Putting it Together: Processes Putting it Together: Threads Process 1 Process N Process 1 Process 2 Process N • Switch overhead: high • Switch overhead: medium threads threads Mem. Mem. Mem. – CPU state: low – CPU state: low Mem. Mem. – Memory/IO state: high • Thread creation: medium IO IO … IO IO IO state state state • Process creation: high … state … … state • Protection CPU CPU CPU state state state • Protection CPU CPU CPU CPU – CPU: yes state state state state – CPU: yes – Memory/IO: No CPU – Memory/IO: yes • Sharing overhead: low(ish) OS sched. • Sharing overhead: high CPU OS (thread switch overhead sched. (involves at least a context low) 1 process at a time switch) 1 thread CPU at a time (1 core) CPU (1 core) 9/13/17 CS162 ©UCB Fall 2017 Lec 6.37 9/13/17 CS162 ©UCB Fall 2017 Lec 6.38 Kernel versus User-Mode Threads User-Mode Threads • We have been talking about kernel threads • Lighter weight option: – Native threads supported directly by the kernel – User program provides scheduler and thread package – Every thread can run or block independently – May have several user threads per kernel – One process may have several threads waiting on different things thread – User threads may be scheduled • Downside of kernel threads: a bit expensive non-preemptively relative to each other (only switch on yield()) – Need to make a crossing into kernel mode to schedule – Cheap • Lighter weight option: User Threads • Downside of user threads: – When one thread blocks on I/O, all threads block – Kernel cannot adjust scheduling among all threads – Option: Scheduler Activations » Have kernel inform user level when thread blocks… 9/13/17 CS162 ©UCB Fall 2017 Lec 6.39 9/13/17 CS162 ©UCB Fall 2017 Lec 6.40 Page 10

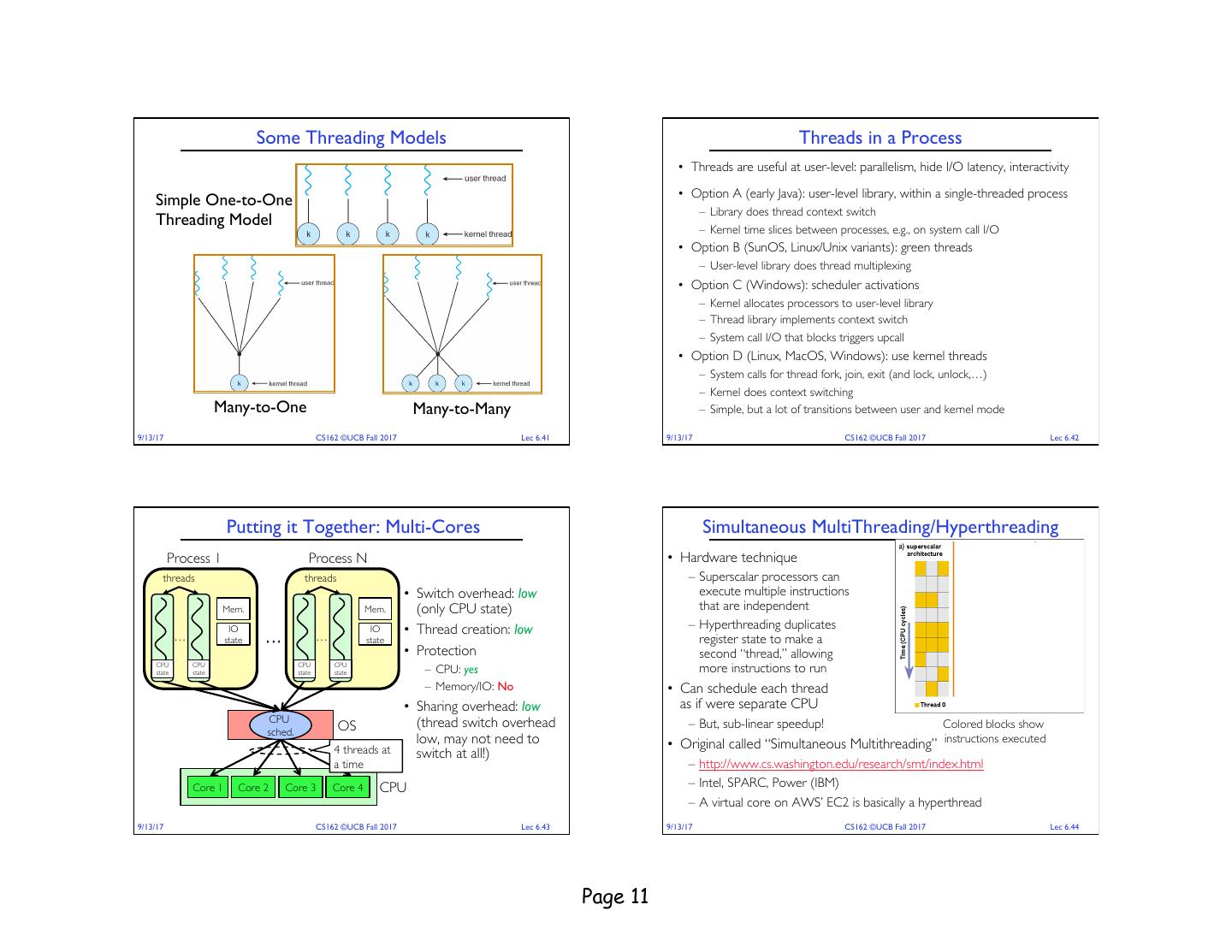

11 . Some Threading Models Threads in a Process • Threads are useful at user-level: parallelism, hide I/O latency, interactivity • Option A (early Java): user-level library, within a single-threaded process Simple One-to-One – Library does thread context switch Threading Model – Kernel time slices between processes, e.g., on system call I/O • Option B (SunOS, Linux/Unix variants): green threads – User-level library does thread multiplexing • Option C (Windows): scheduler activations – Kernel allocates processors to user-level library – Thread library implements context switch – System call I/O that blocks triggers upcall • Option D (Linux, MacOS, Windows): use kernel threads – System calls for thread fork, join, exit (and lock, unlock,…) – Kernel does context switching Many-to-One Many-to-Many – Simple, but a lot of transitions between user and kernel mode 9/13/17 CS162 ©UCB Fall 2017 Lec 6.41 9/13/17 CS162 ©UCB Fall 2017 Lec 6.42 Putting it Together: Multi-Cores Simultaneous MultiThreading/Hyperthreading Process 1 Process N • Hardware technique threads threads – Superscalar processors can • Switch overhead: low execute multiple instructions Mem. Mem. (only CPU state) that are independent IO IO • Thread creation: low – Hyperthreading duplicates … state … … state register state to make a • Protection second “thread,” allowing CPU state CPU state CPU state CPU state – CPU: yes more instructions to run – Memory/IO: No • Can schedule each thread • Sharing overhead: low as if were separate CPU CPU (thread switch overhead – But, sub-linear speedup! OS Colored blocks show sched. low, may not need to • Original called “Simultaneous Multithreading” instructions executed 4 threads at switch at all!) a time – http://www.cs.washington.edu/research/smt/index.html Core 1 Core 2 Core 3 Core 4 CPU – Intel, SPARC, Power (IBM) – A virtual core on AWS’ EC2 is basically a hyperthread 9/13/17 CS162 ©UCB Fall 2017 Lec 6.43 9/13/17 CS162 ©UCB Fall 2017 Lec 6.44 Page 11

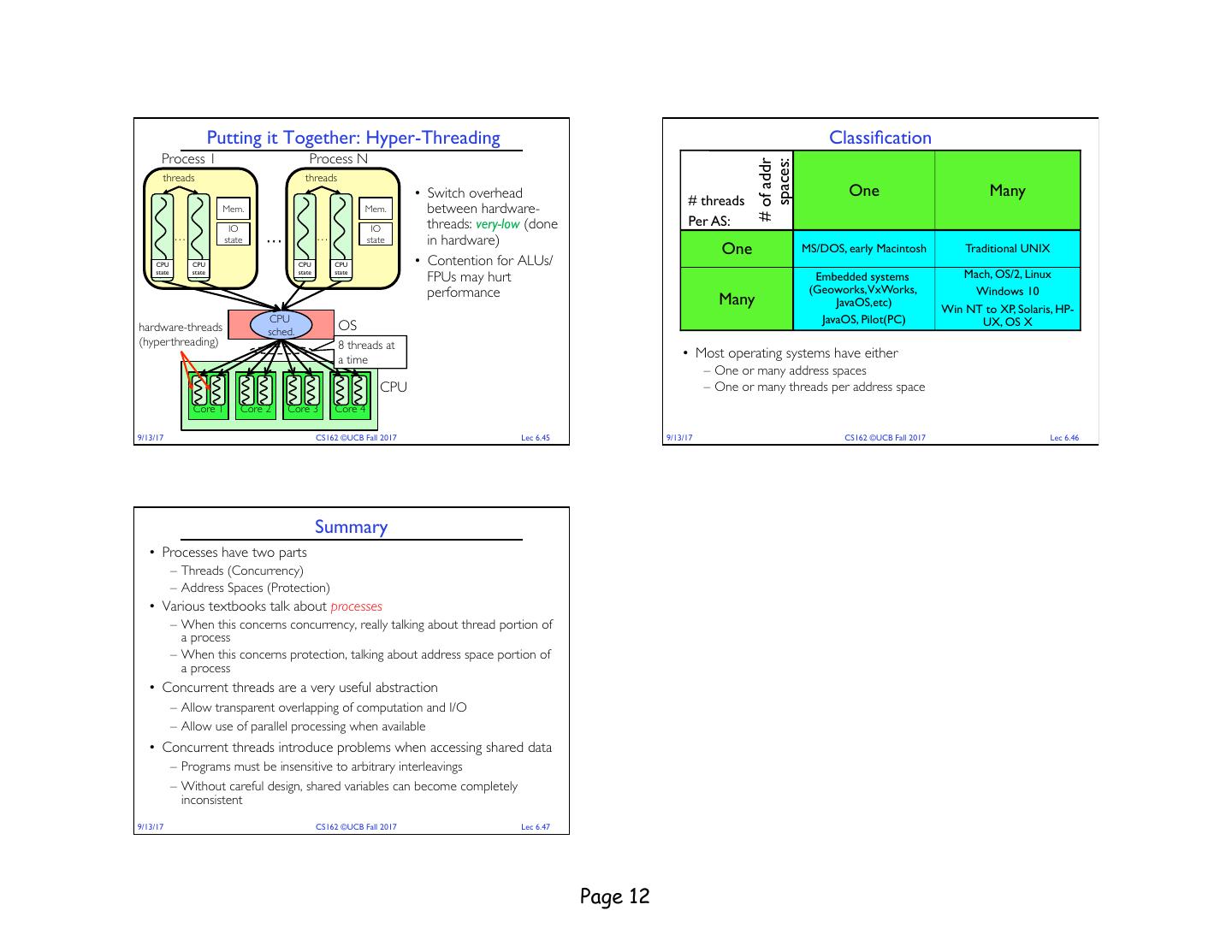

12 . Putting it Together: Hyper-Threading Classification Process 1 Process N # of addr spaces: threads threads • Switch overhead One Many # threads Mem. Mem. between hardware- IO IO threads: very-low (done Per AS: … state … … state in hardware) One MS/DOS, early Macintosh Traditional UNIX CPU CPU CPU CPU • Contention for ALUs/ state state state state FPUs may hurt Embedded systems Mach, OS/2, Linux performance (Geoworks,VxWorks, Windows 10 Many JavaOS,etc) Win NT to XP, Solaris, HP- CPU JavaOS, Pilot(PC) UX, OS X hardware-threads sched. OS (hyperthreading) 8 threads at a time • Most operating systems have either – One or many address spaces CPU – One or many threads per address space Core 1 Core 2 Core 3 Core 4 9/13/17 CS162 ©UCB Fall 2017 Lec 6.45 9/13/17 CS162 ©UCB Fall 2017 Lec 6.46 Summary • Processes have two parts – Threads (Concurrency) – Address Spaces (Protection) • Various textbooks talk about processes – When this concerns concurrency, really talking about thread portion of a process – When this concerns protection, talking about address space portion of a process • Concurrent threads are a very useful abstraction – Allow transparent overlapping of computation and I/O – Allow use of parallel processing when available • Concurrent threads introduce problems when accessing shared data – Programs must be insensitive to arbitrary interleavings – Without careful design, shared variables can become completely inconsistent 9/13/17 CS162 ©UCB Fall 2017 Lec 6.47 Page 12