Introduction to Spark

1. Introduction to Spark

Shannon Quinn (with thanks to Paco Nathan and Databricks)

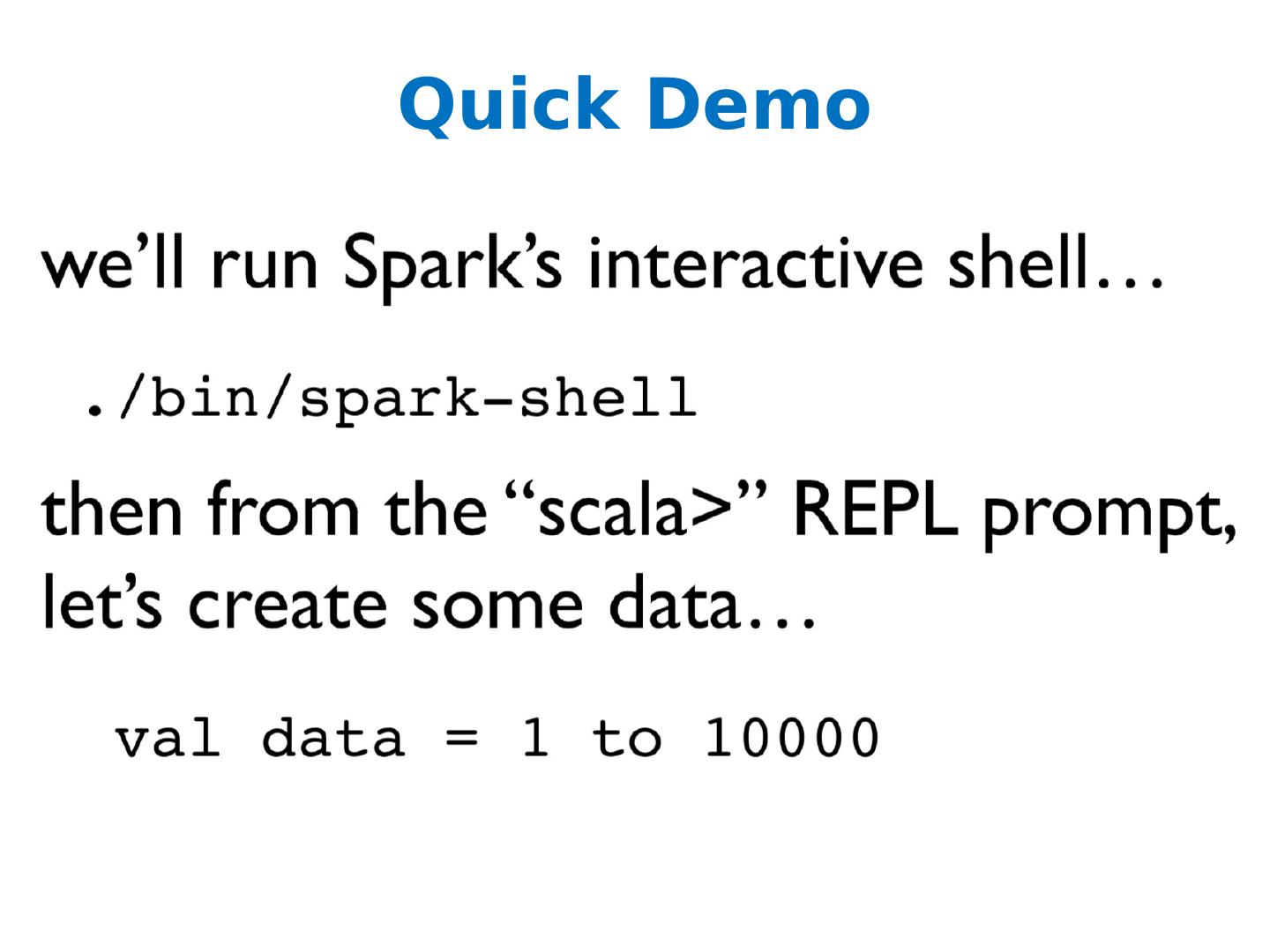

2. Quick Demo

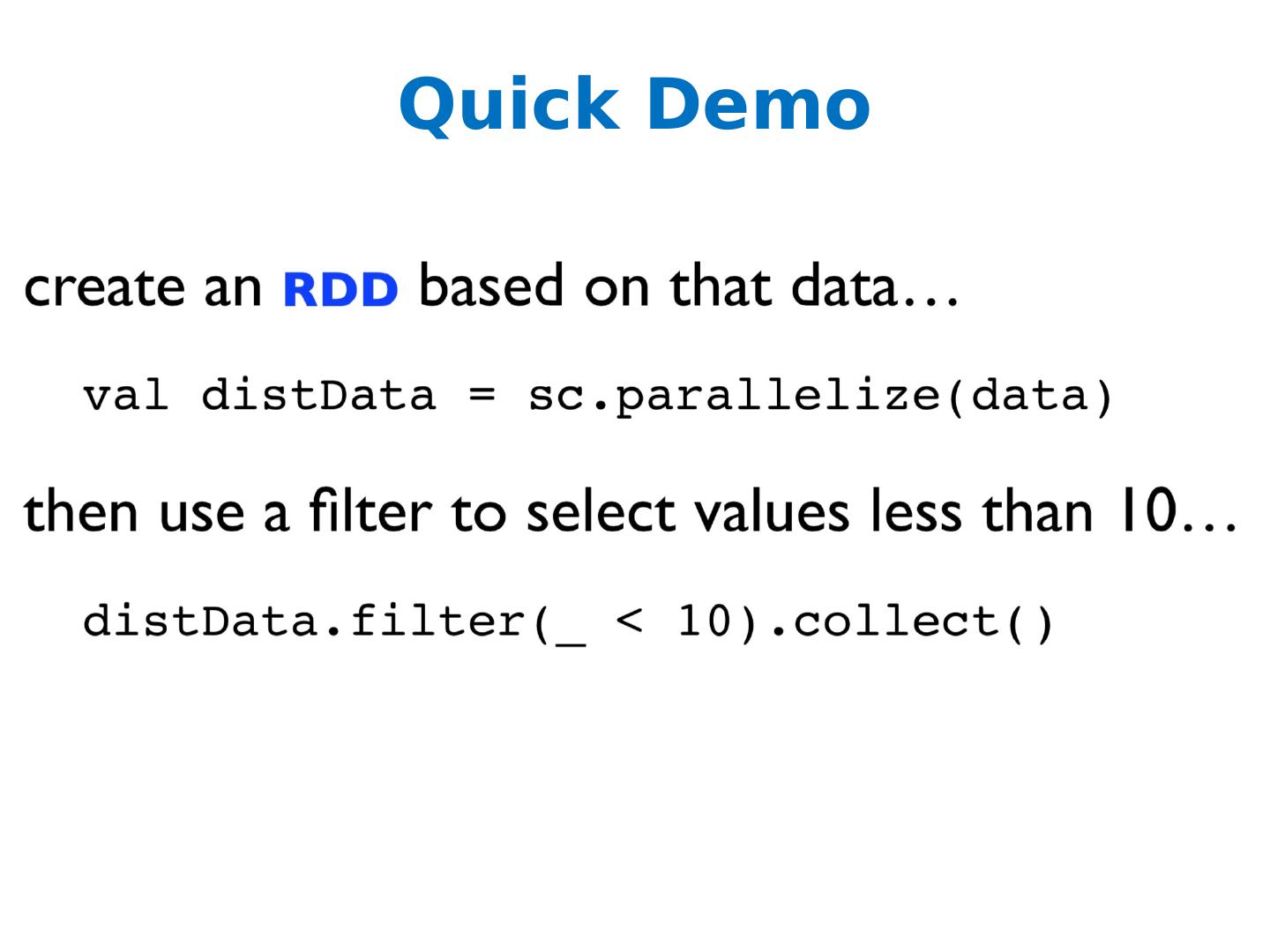

3. Quick Demo

4. API Hooks

- Scala / Java
  - All Java libraries (*.jar)
  - http://www.scala-lang.org
- Python
  - Anaconda: https://store.continuum.io/cshop/anaconda/

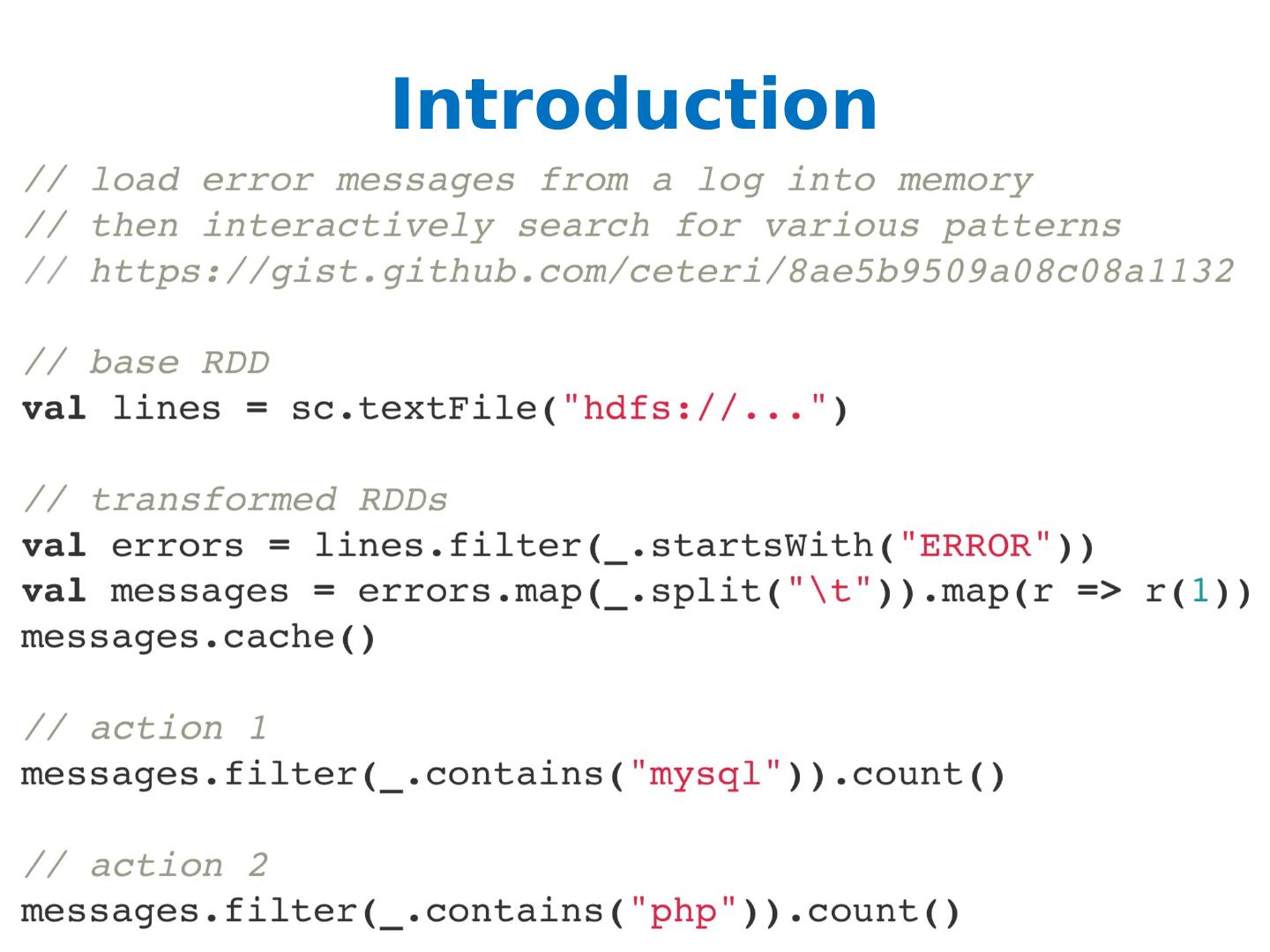

5. Introduction

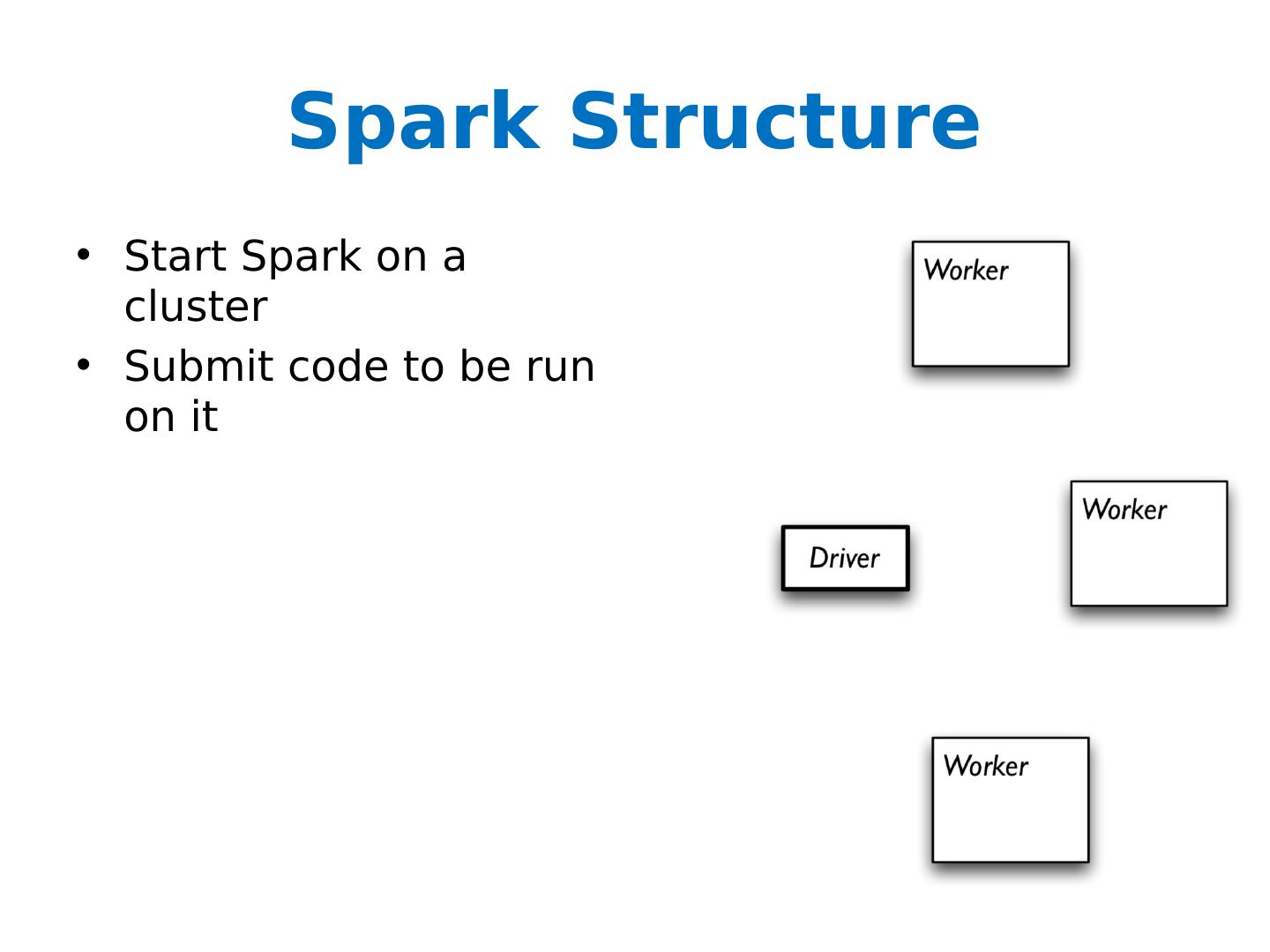

6. Spark Structure

- Start Spark on a cluster
- Submit code to be run on it

16. Another Perspective

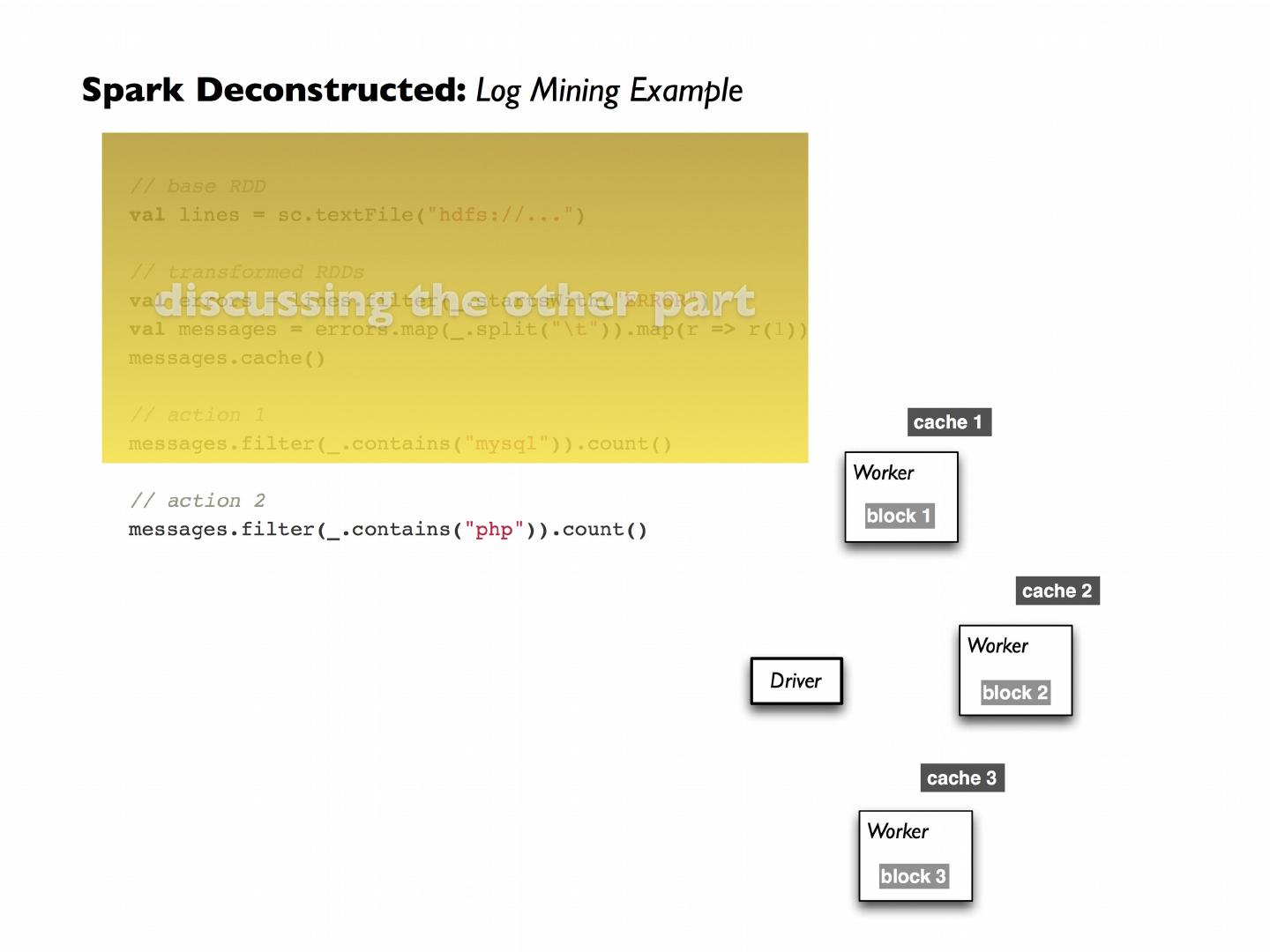

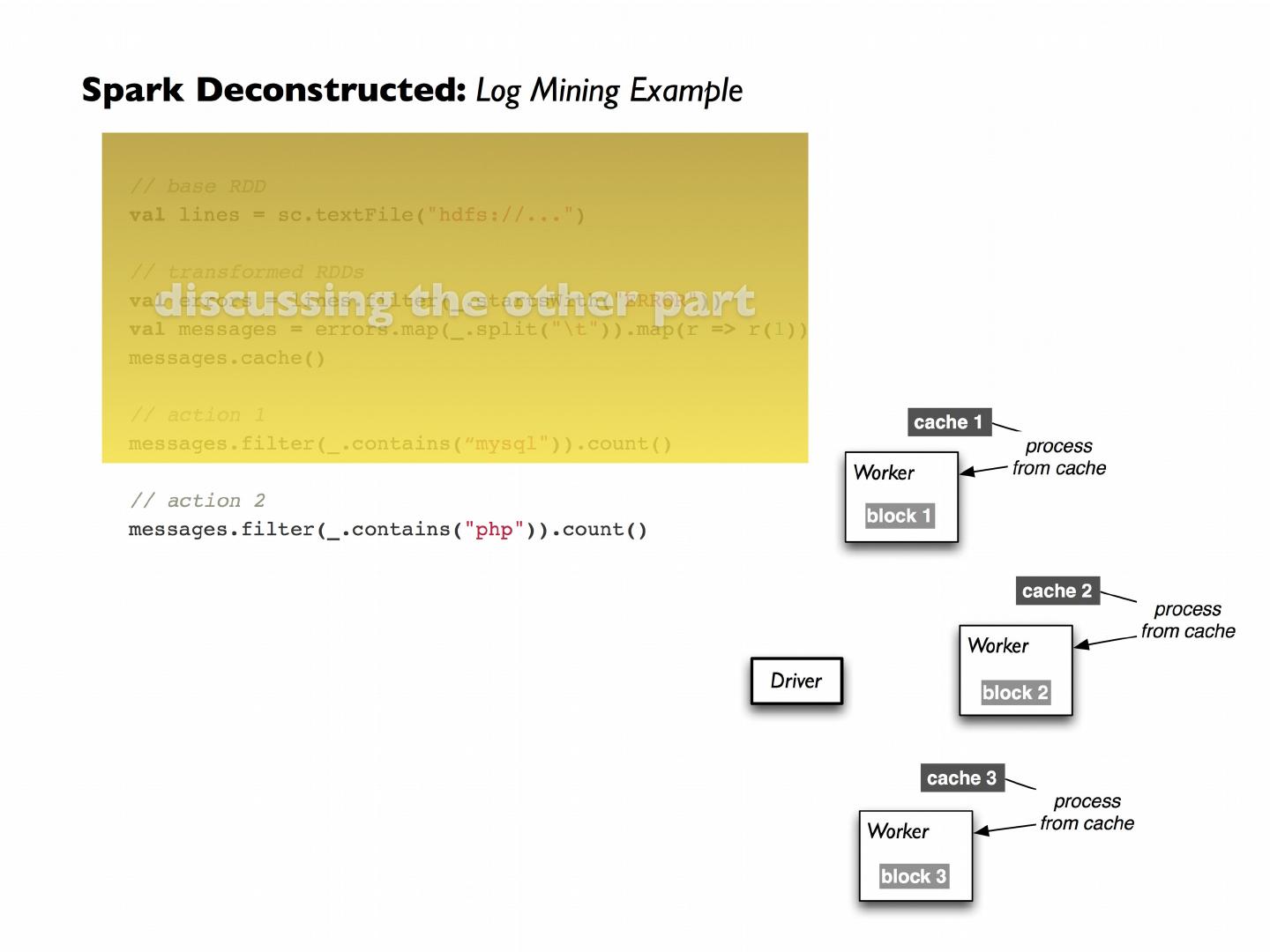

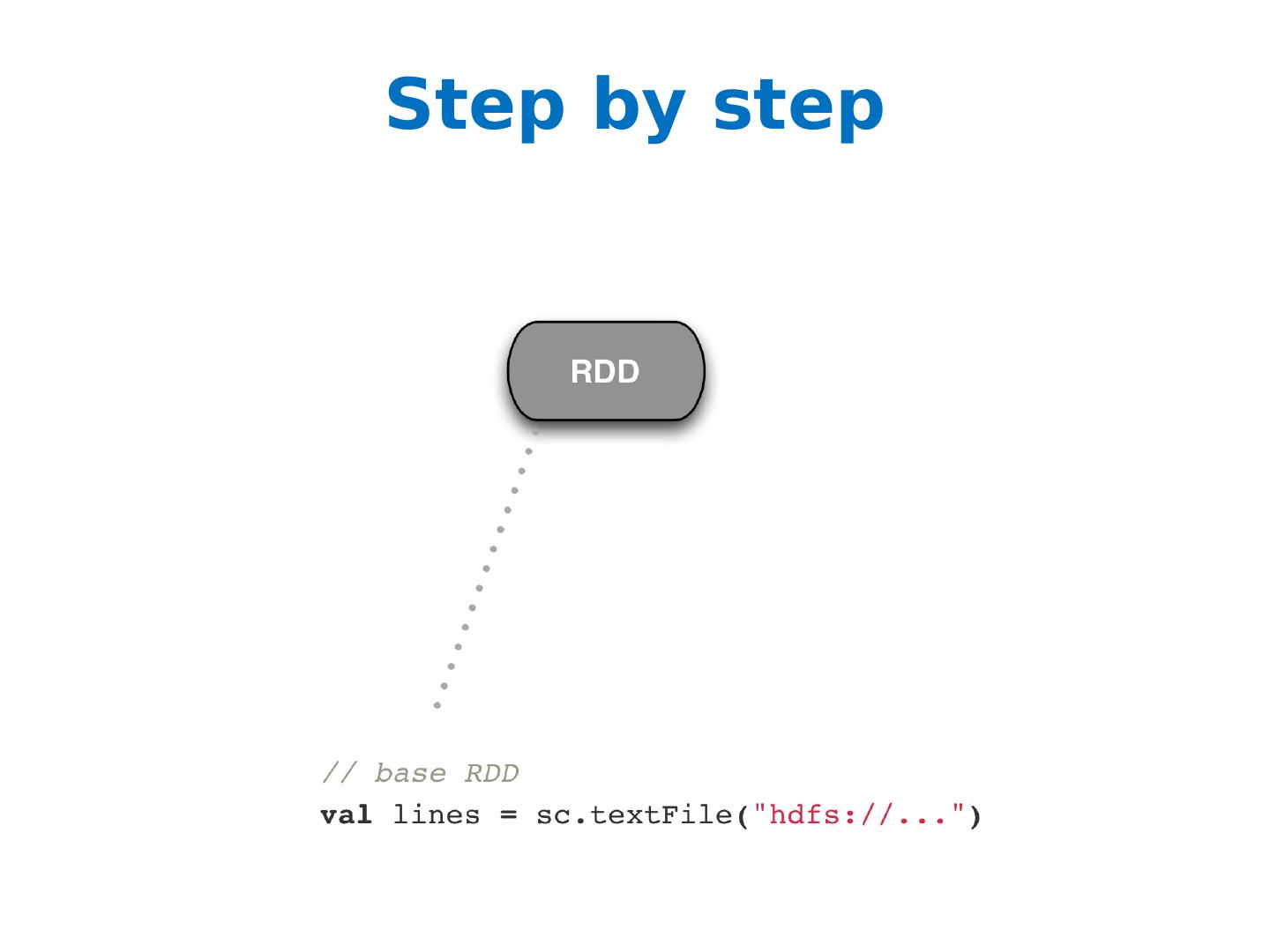

17. Step by step

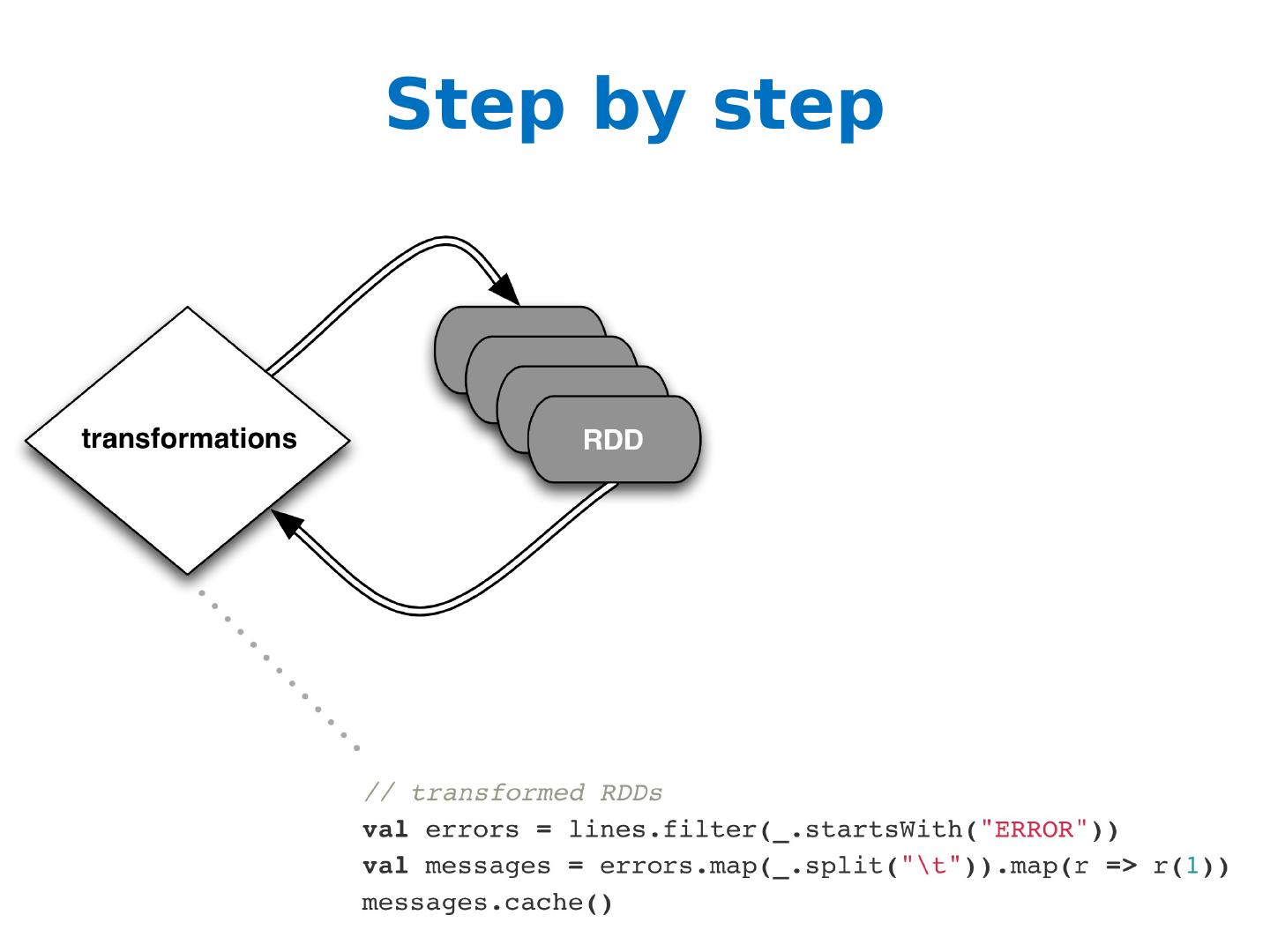

18. Step by step

19. Step by step

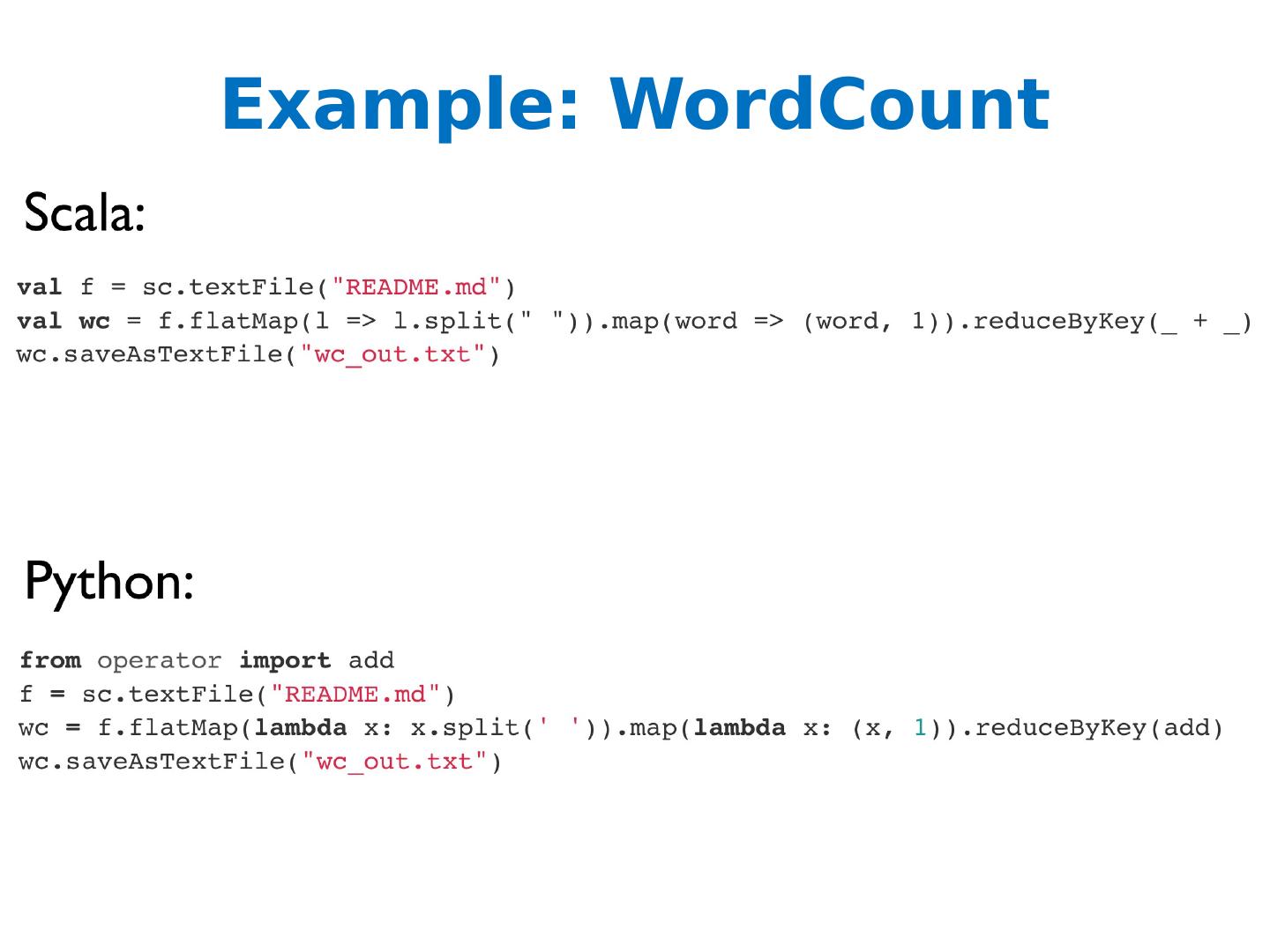

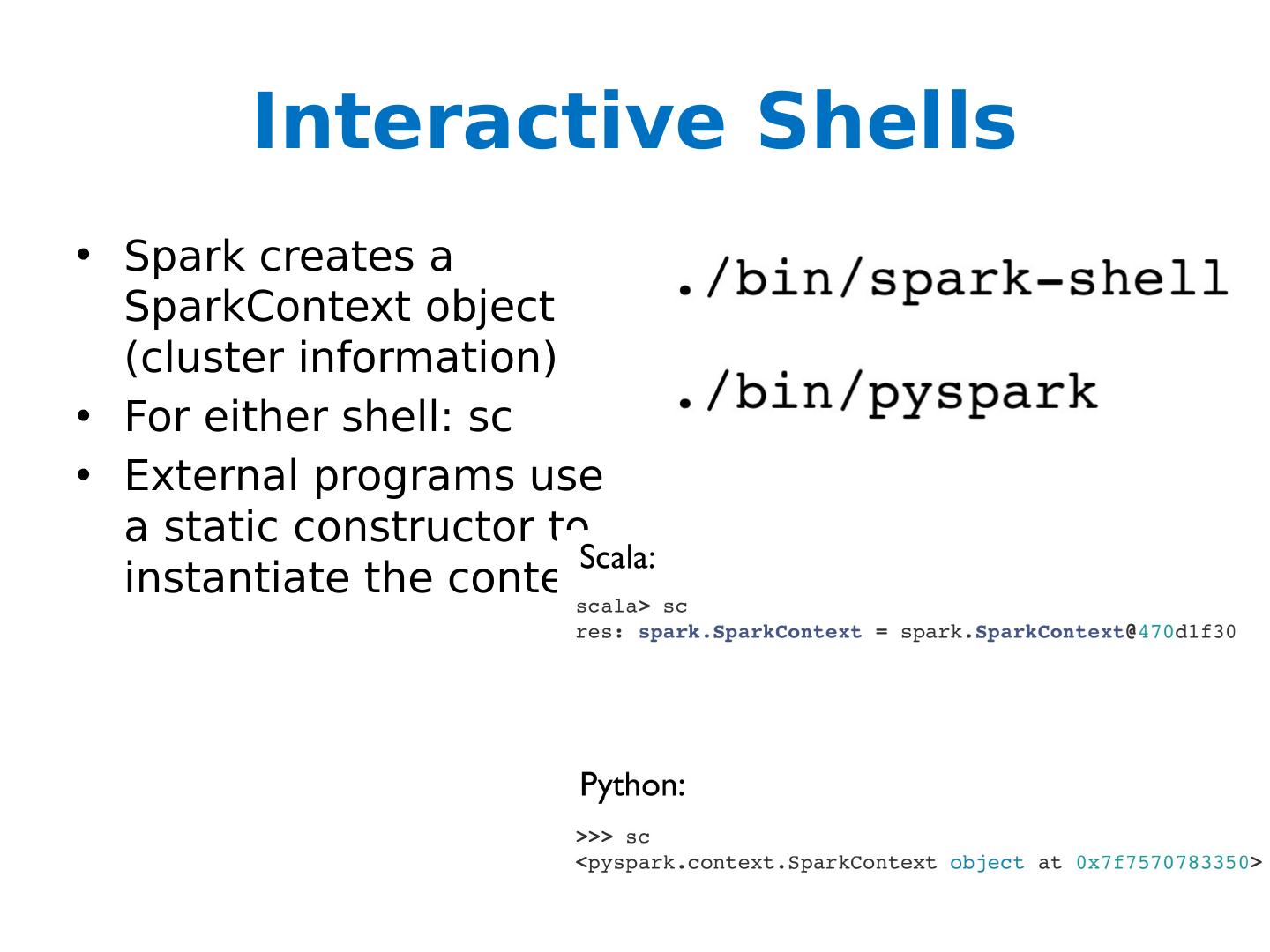

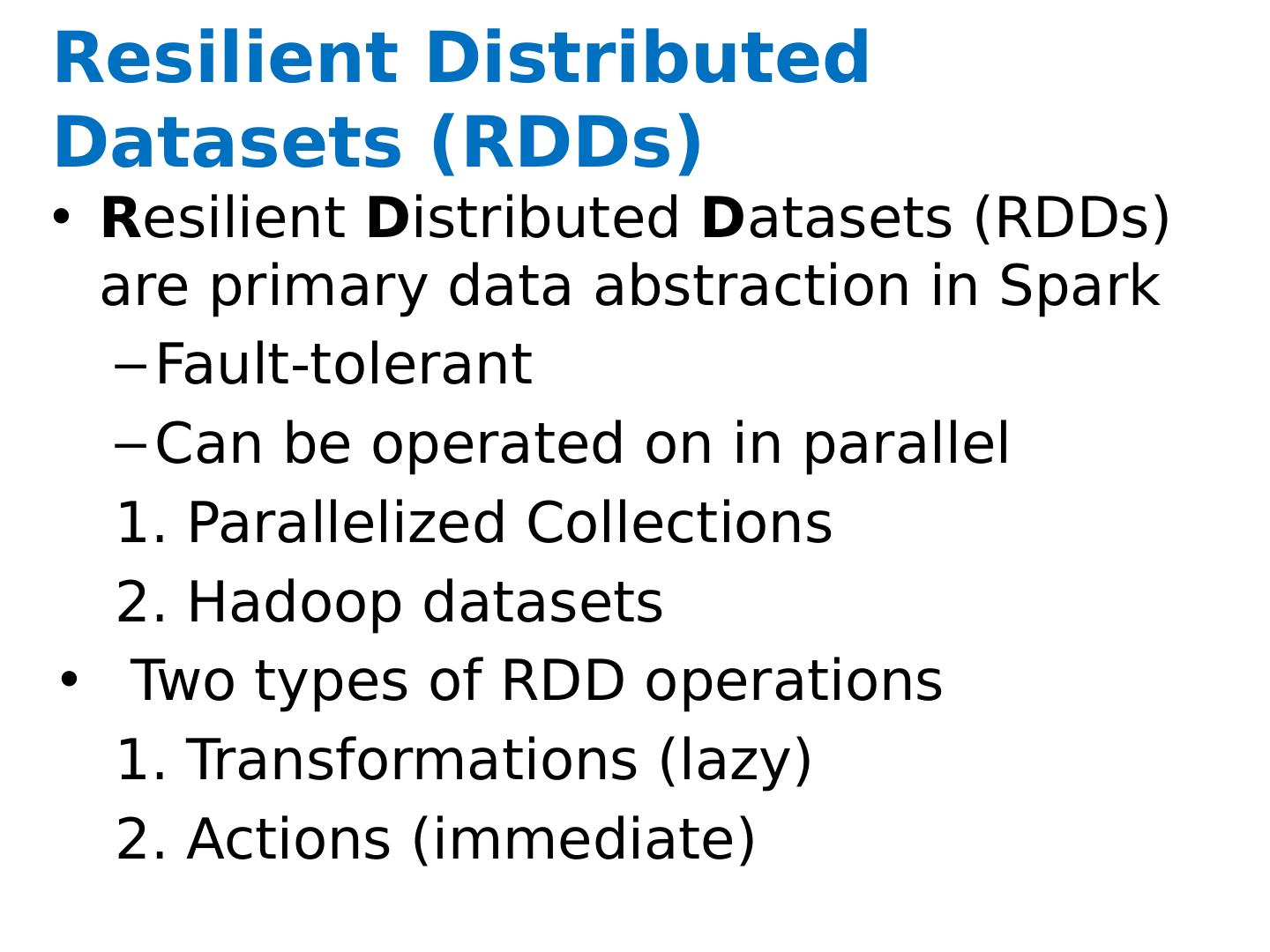

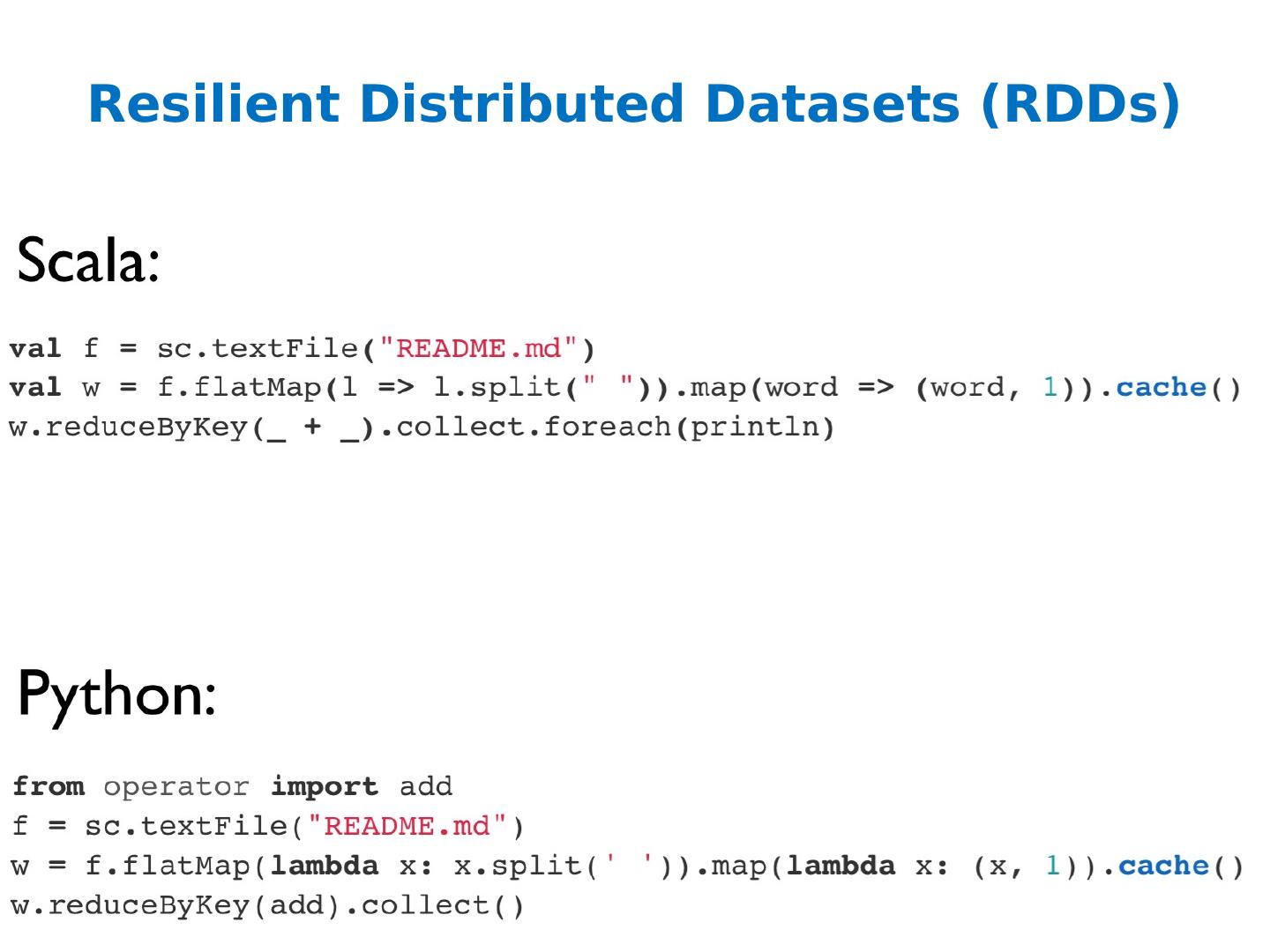

20. Example: WordCount

21. Example: WordCount

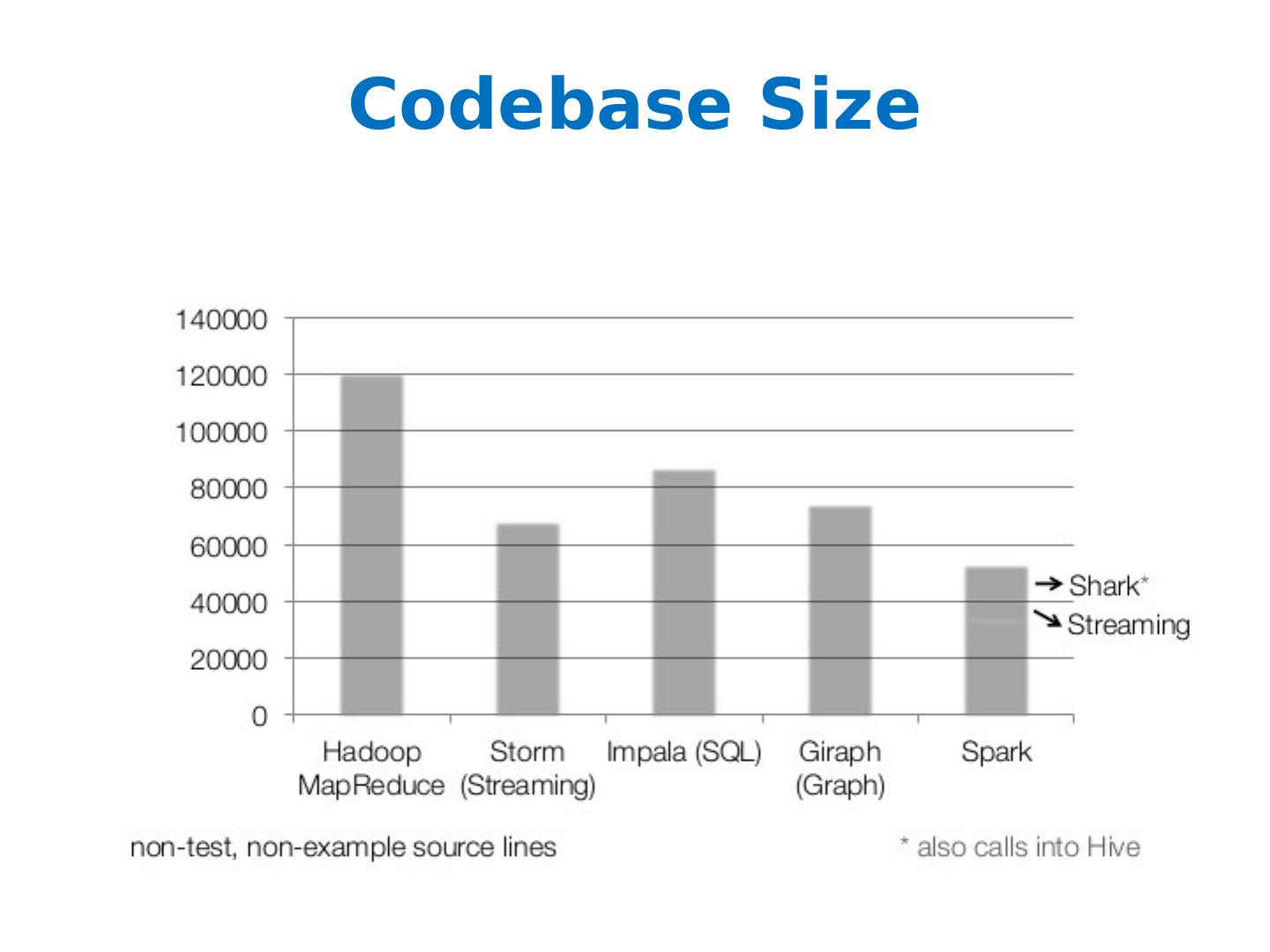

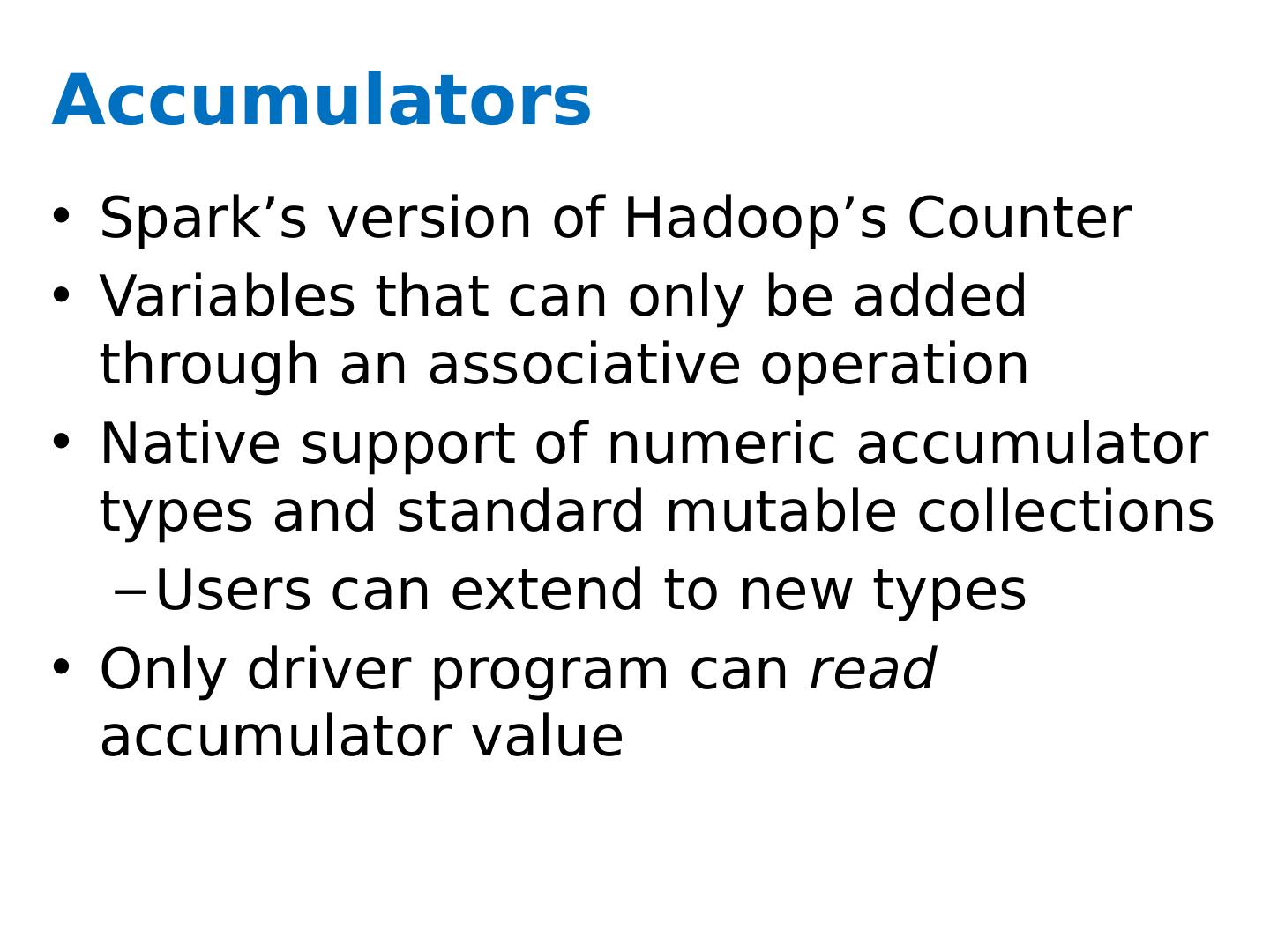

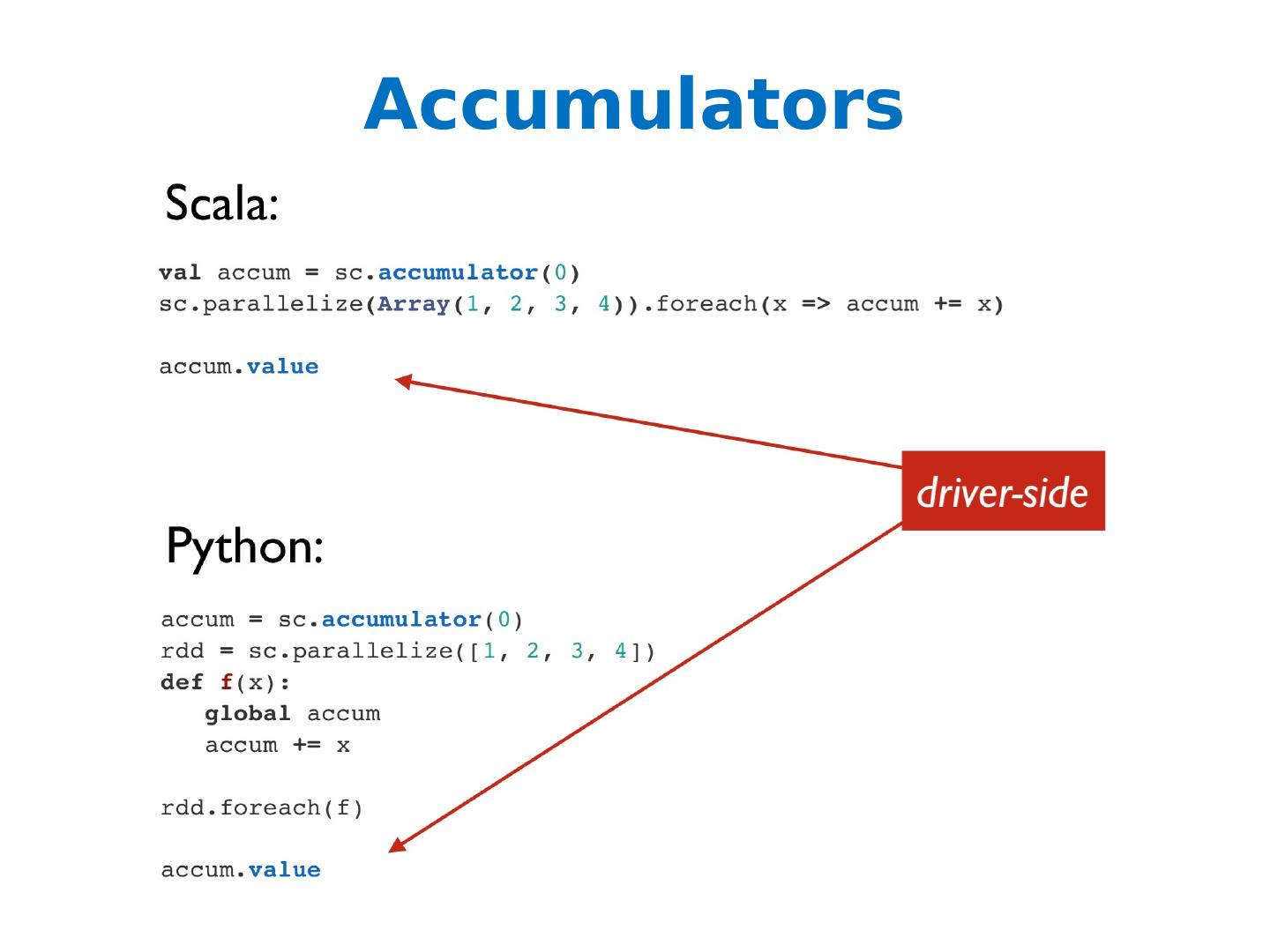
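The WordCount example can be sketched in plain Python as three phases: map, shuffle, and reduce. This is a toy, single-process illustration rather than Hadoop or Spark code; the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) and the sample input are assumptions made up for this sketch.

```python
from collections import defaultdict


def map_phase(lines):
    """Emit a (word, 1) pair for every whitespace-separated token."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Group emitted values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Sum the grouped counts for each word."""
    return {word: sum(counts) for word, counts in groups.items()}


def word_count(lines):
    """Chain the three phases over an in-memory list of lines."""
    return reduce_phase(shuffle_phase(map_phase(lines)))


# Hypothetical sample input, chosen only for illustration.
counts = word_count(["to be or not to be", "that is the question"])
```

In a real cluster the map and reduce functions run on different machines and the shuffle moves data over the network; here all three run in one process so the data flow is easy to follow.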

22. Limitations of MapReduce

- Performance bottlenecks: not all jobs can be cast as batch processes
  - Graphs?
- Programming in Hadoop is hard
  - Boilerplate, boilerplate everywhere

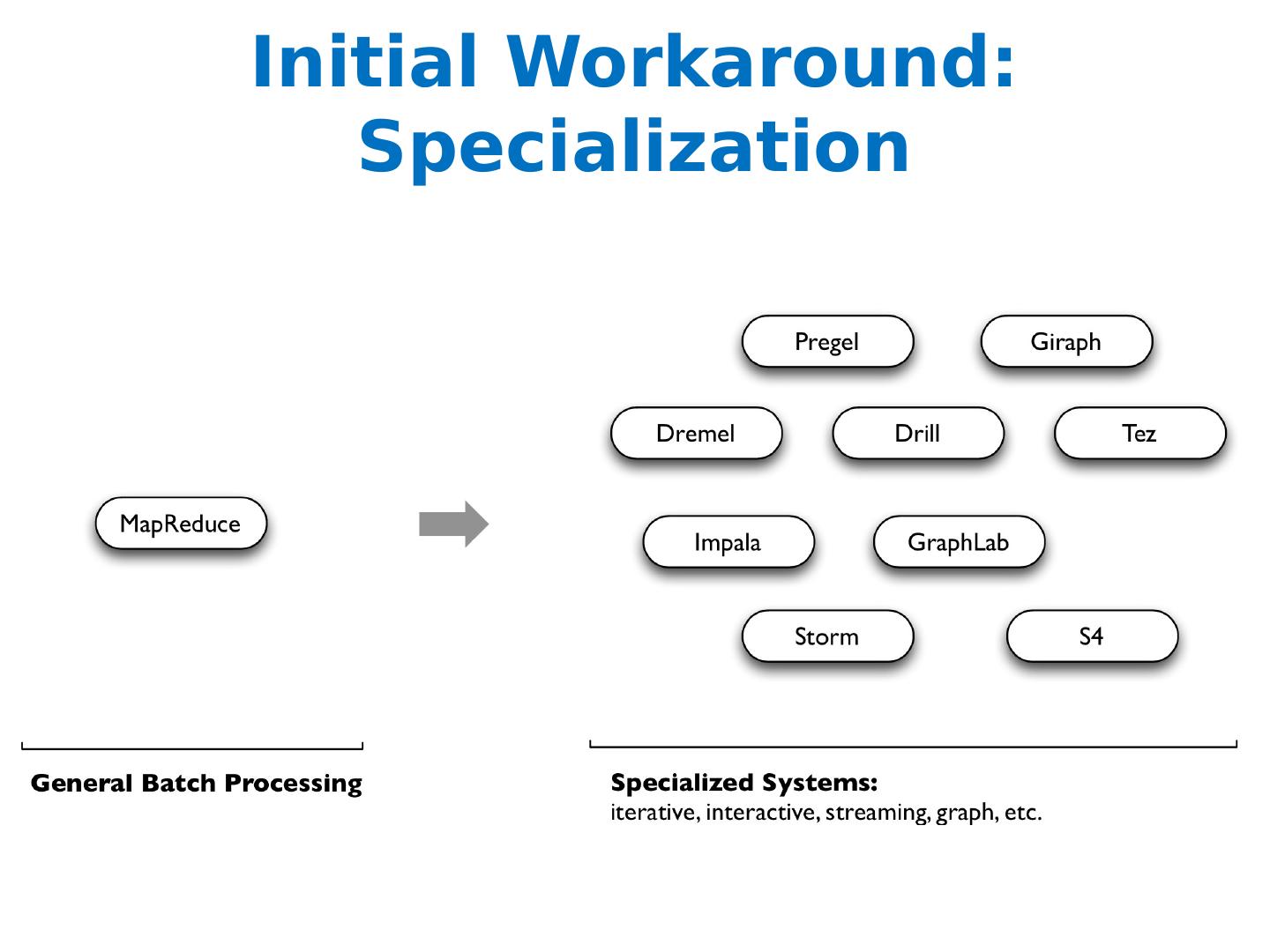

23. Initial Workaround: Specialization

24. Along Came Spark

- Spark’s goal was to generalize MapReduce to support new applications within the same engine
- Two additions:
  - Fast data sharing
  - General DAGs (directed acyclic graphs)
- Best of both worlds: easy to program and a more efficient engine in general
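The two additions named on this slide can be illustrated with a small plain-Python sketch: a computation expressed as a DAG of steps, where an intermediate result is kept in memory and shared by two downstream steps. This is not Spark code. The `Node` class and the node names are hypothetical, and every node here memoizes its result for simplicity, whereas in Spark keeping an intermediate dataset in memory is something the program opts into.

```python
class Node:
    """One step in a computation DAG: a function plus the nodes it depends on."""

    def __init__(self, name, func, *parents):
        self.name = name
        self.func = func
        self.parents = parents
        self.runs = 0          # how many times func was actually executed
        self._cached = False
        self._value = None

    def compute(self):
        """Evaluate parents first, then this node; reuse the stored value if present."""
        if not self._cached:
            inputs = [parent.compute() for parent in self.parents]
            self._value = self.func(*inputs)
            self._cached = True
            self.runs += 1
        return self._value


# A DAG with one shared intermediate step ("squares") and two consumers.
source = Node("source", lambda: list(range(1, 6)))
squares = Node("squares", lambda xs: [x * x for x in xs], source)
total = Node("total", lambda xs: sum(xs), squares)
evens = Node("evens", lambda xs: [x for x in xs if x % 2 == 0], squares)

result_total = total.compute()
result_evens = evens.compute()
```

Nothing runs until `compute()` is called on a leaf, and `squares` is evaluated once even though both `total` and `evens` depend on it, which is the kind of data sharing a chain of separate MapReduce jobs would route through disk.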

25. Codebase Size

26. More on Spark

- More general
  - Supports map/reduce paradigm
  - Supports vertex-based paradigm
  - General compute engine (DAG)
- More API hooks
  - Scala, Java, and Python
- More interfaces
  - Batch (Hadoop), real-time (Storm), and interactive (???)

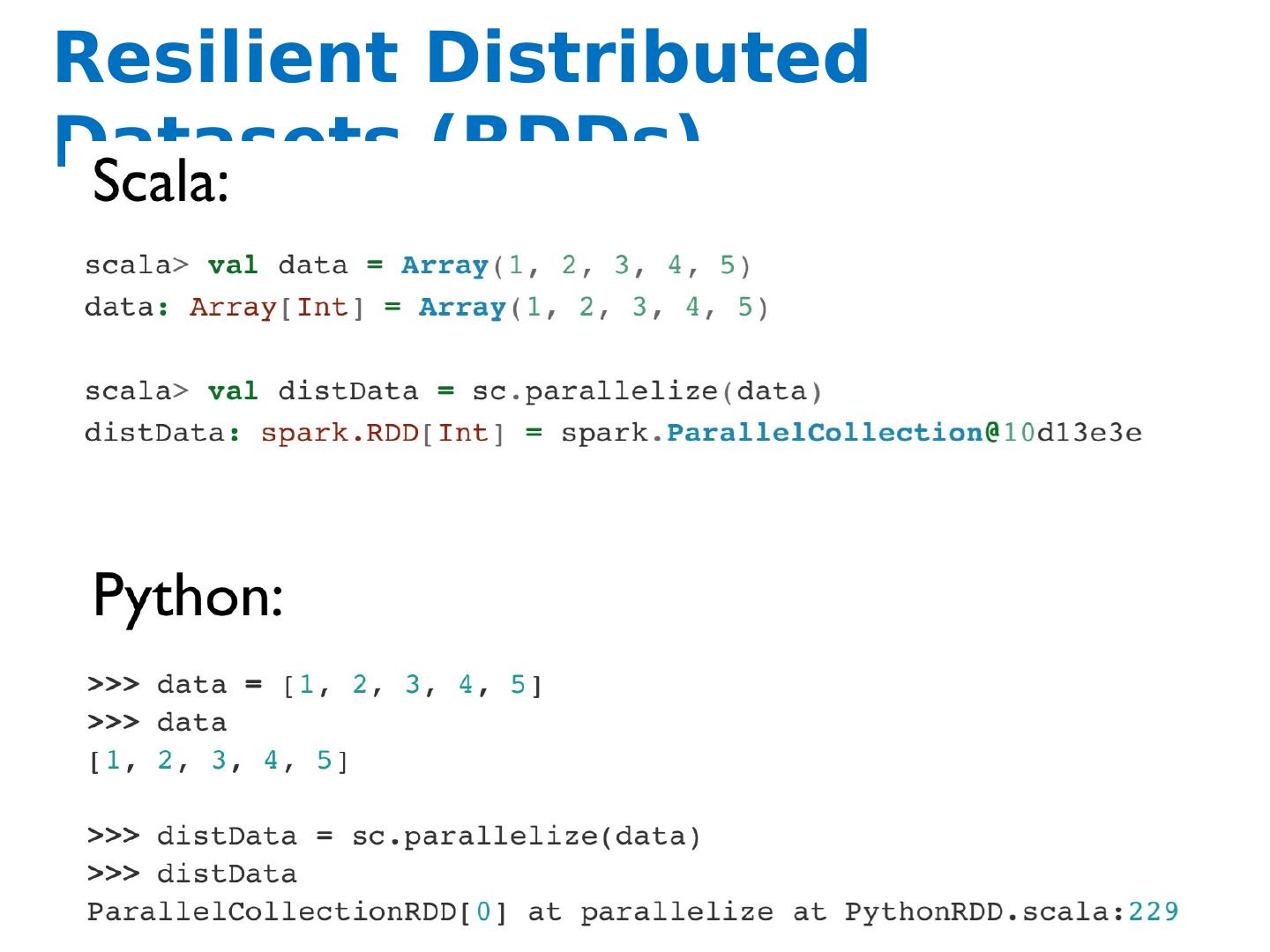

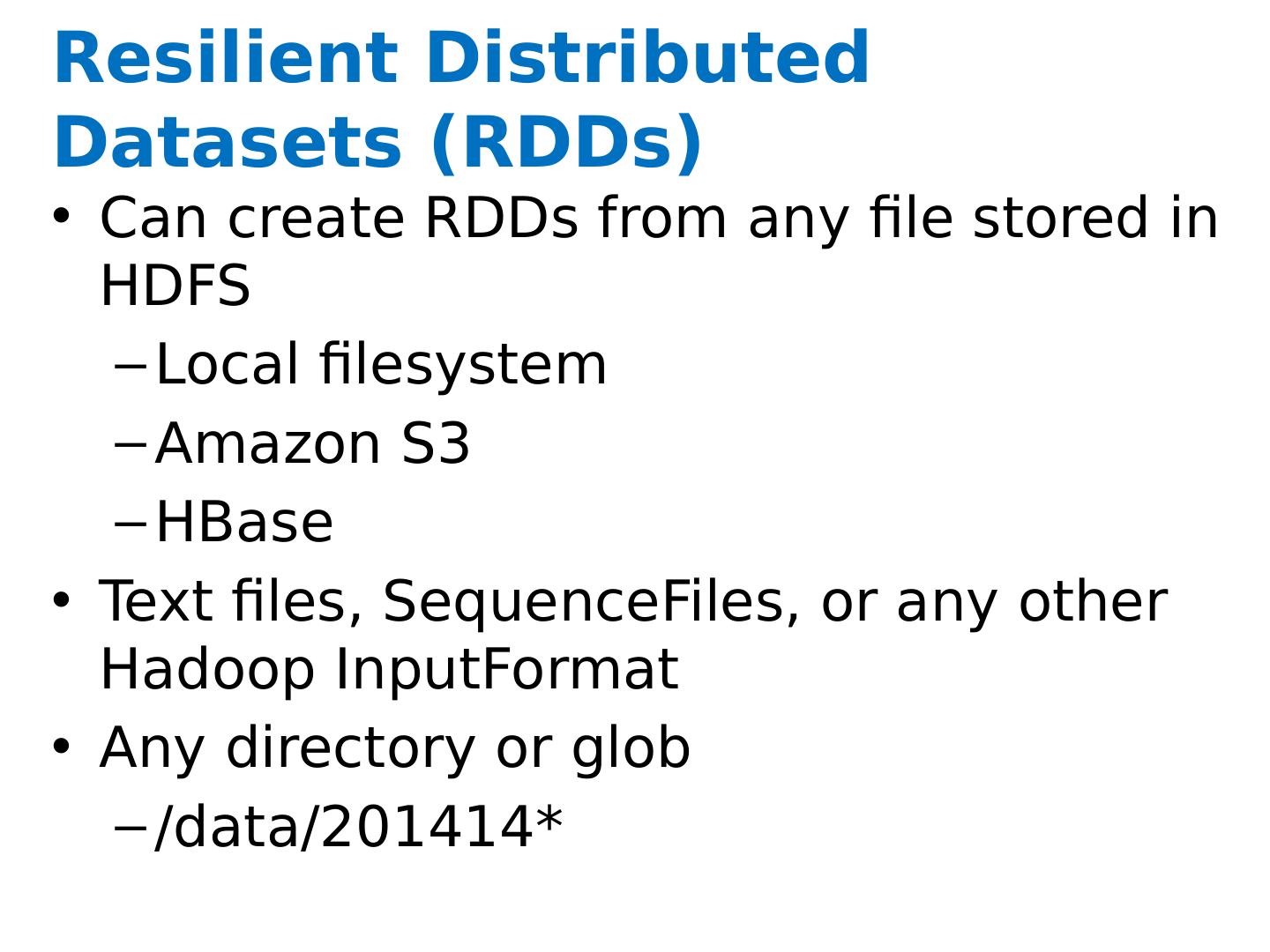

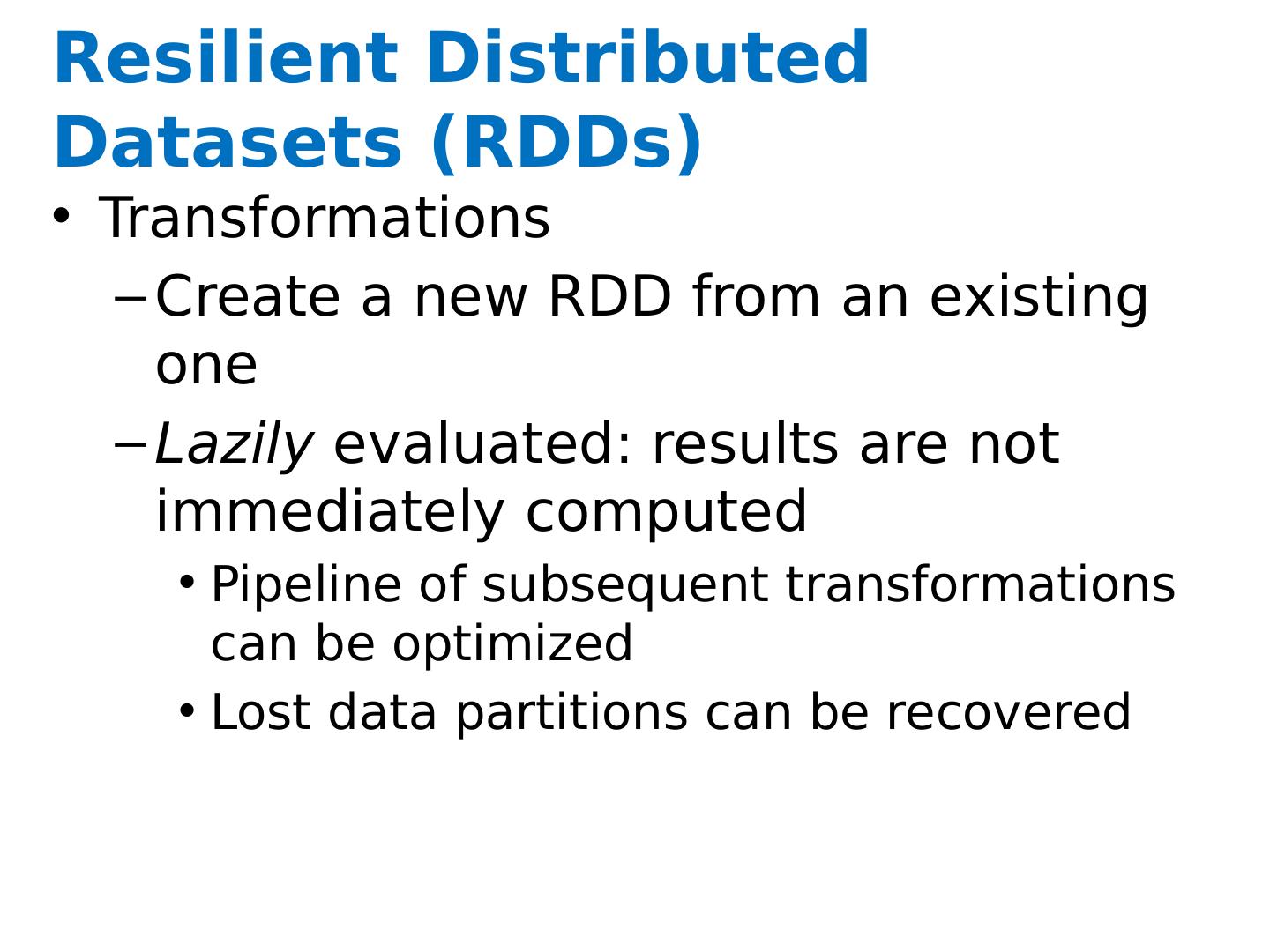

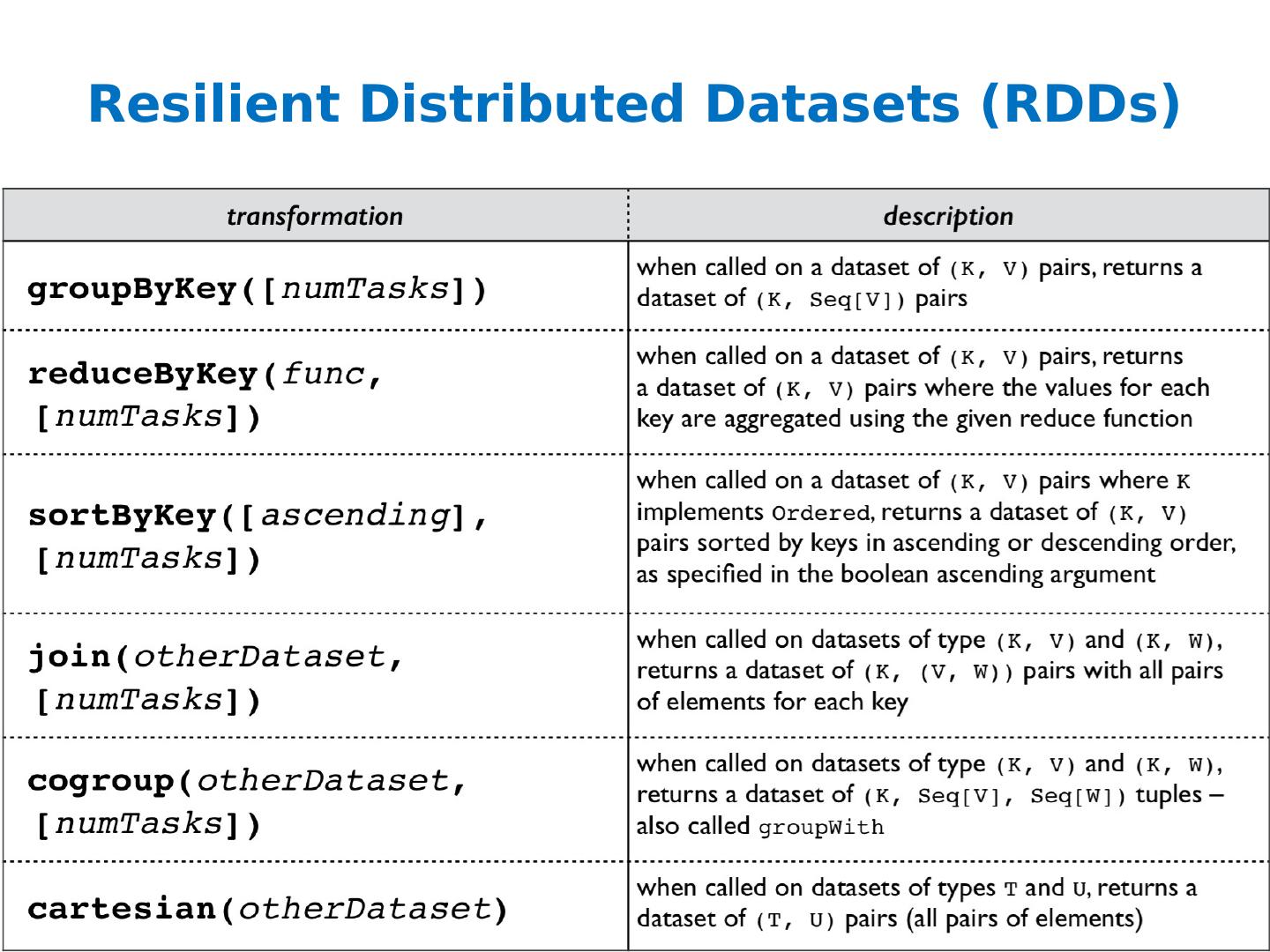

27. Interactive Shells

- Spark creates a SparkContext object (cluster information)
- For either shell: sc
- External programs use a static constructor to instantiate the context

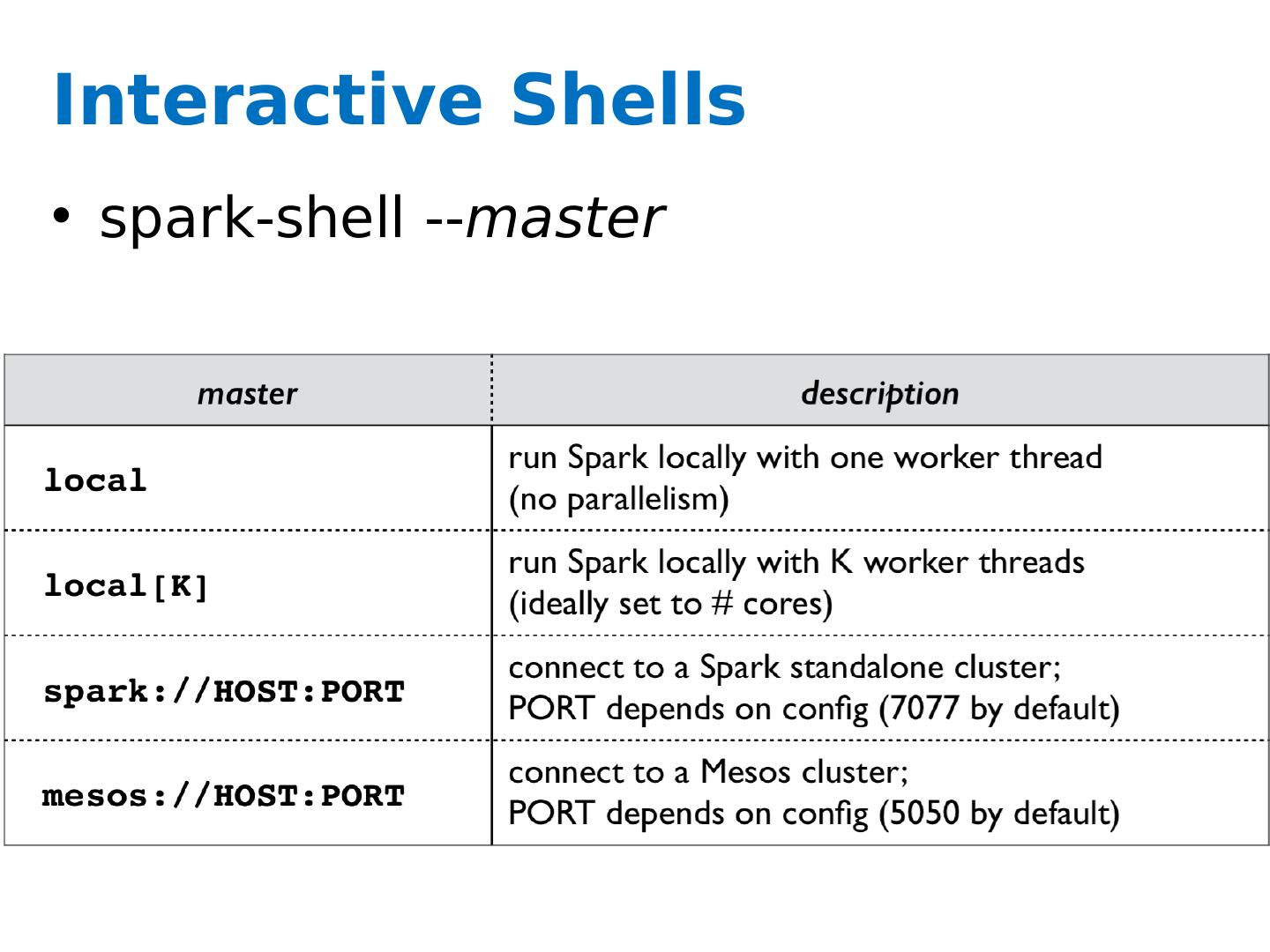
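For a standalone Python program, creating the context might look like the sketch below, assuming PySpark is installed. The function name `run_local_sum`, the application name, and the `local[2]` master (two local threads, no cluster) are choices made for this illustration, not values from the slides.

```python
def run_local_sum(app_name="intro-demo", master="local[2]"):
    """Create a SparkContext, run one small job, and shut the context down."""
    # Imported inside the function so this module loads even without PySpark.
    from pyspark import SparkConf, SparkContext

    conf = SparkConf().setAppName(app_name).setMaster(master)
    sc = SparkContext(conf=conf)  # plays the role of the shell's ready-made `sc`
    try:
        # Distribute the numbers 1..100 and add them up on the executors.
        return sc.parallelize(range(1, 101)).sum()
    finally:
        sc.stop()  # release the resources held by the context
```

Inside the interactive shells this setup is already done and the context is available as `sc`, so only the `parallelize(...).sum()` line would be typed there.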

28. Interactive Shells

- spark-shell --master

29. Interactive Shells

- Master connects to the cluster manager, which allocates resources across applications
- Acquires executors on cluster nodes: worker processes to run computations and store data
- Sends app code to executors
- Sends tasks for executors to run
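The flow above (acquire workers, ship the code, hand out tasks, collect results) can be mimicked on one machine with Python's standard library. This is only an analogy under stated assumptions: a thread pool stands in for the executors, and `split_into_tasks`, `task`, and `run_job` are hypothetical names, not part of Spark.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_tasks(data, num_tasks):
    """Partition the input so each task covers one contiguous slice."""
    size = max(1, -(-len(data) // num_tasks))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def task(partition):
    """The 'app code' handed to each worker: sum of squares of one partition."""
    return sum(x * x for x in partition)


def run_job(data, num_executors=3):
    """Act as the driver: split the work, dispatch tasks, combine partial results."""
    partitions = split_into_tasks(data, num_executors)
    with ThreadPoolExecutor(max_workers=num_executors) as executors:
        partial_results = list(executors.map(task, partitions))
    return sum(partial_results)


job_result = run_job(list(range(10)))
```

In Spark the workers are separate processes on cluster nodes that also hold data between tasks, and a cluster manager decides how many of them an application gets; none of that is modeled here.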