Tencent Cloud - Ozone

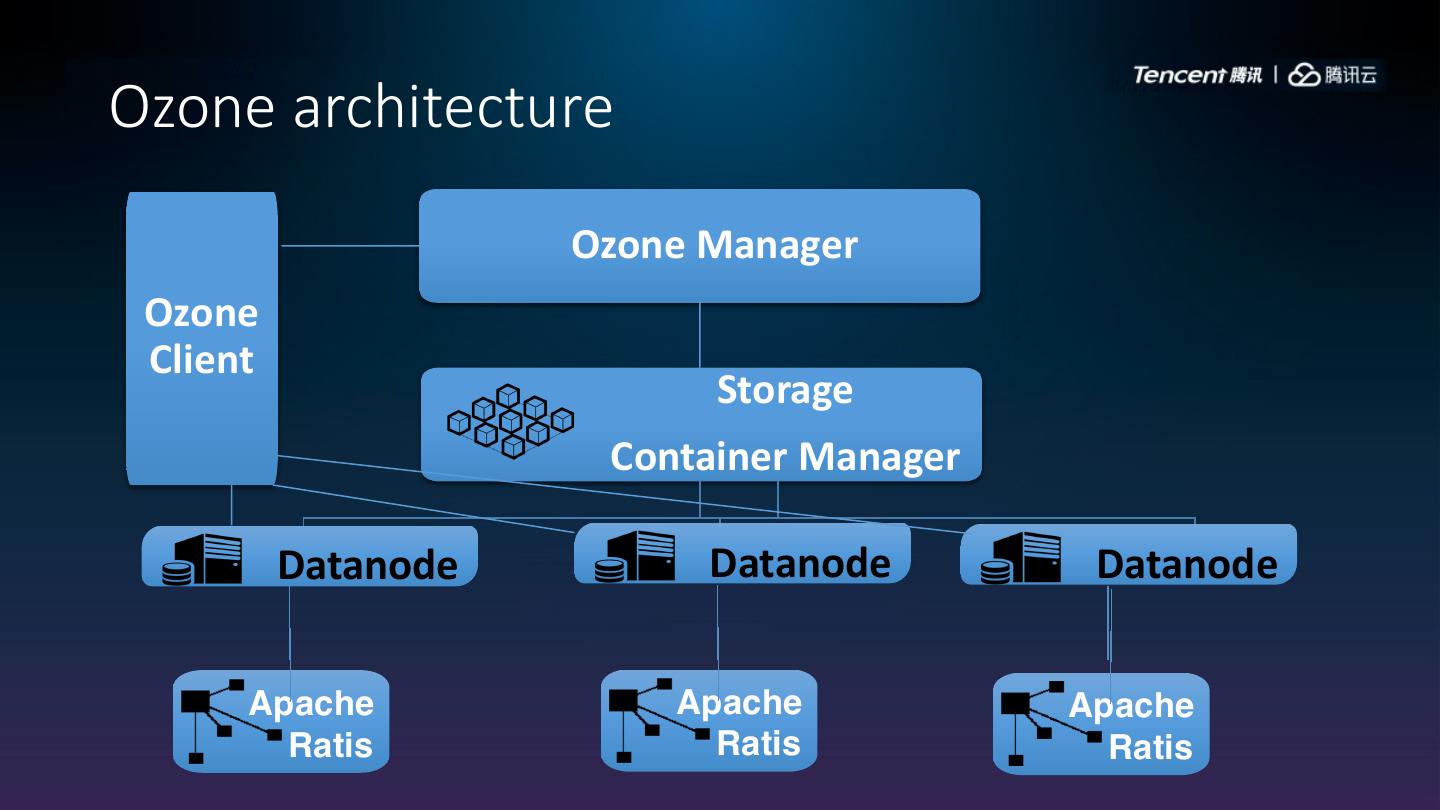

1. Ozone: Native Object Store for Hadoop
    Sammi Chen, Tencent Cloud, Hadoop Committer & PMC member

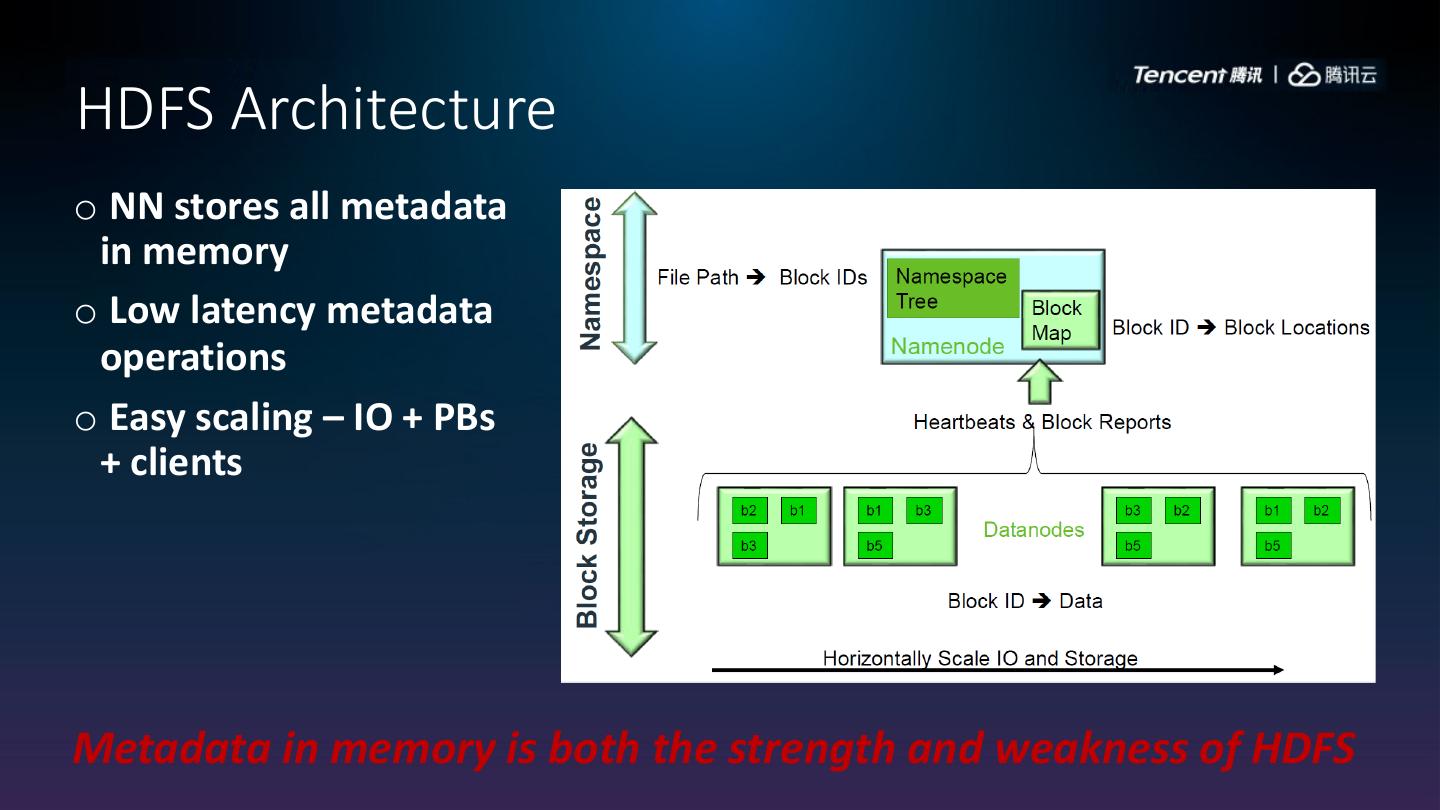

2. HDFS Architecture
    - NN stores all metadata in memory
    - Low latency metadata operations
    - Easy scaling: IO + PBs + clients

    Metadata in memory is both the strength and weakness of HDFS

3. Scaling Challenges
    When scaling a cluster up to 4K+ nodes with about 500M files:
    - Namespace metadata in NN
    - Block management in NN
    - File operation concurrency
    - Block report handling
    - Client/RPC 150K++
    - Slow NN startup

    Small files in HDFS make things worse

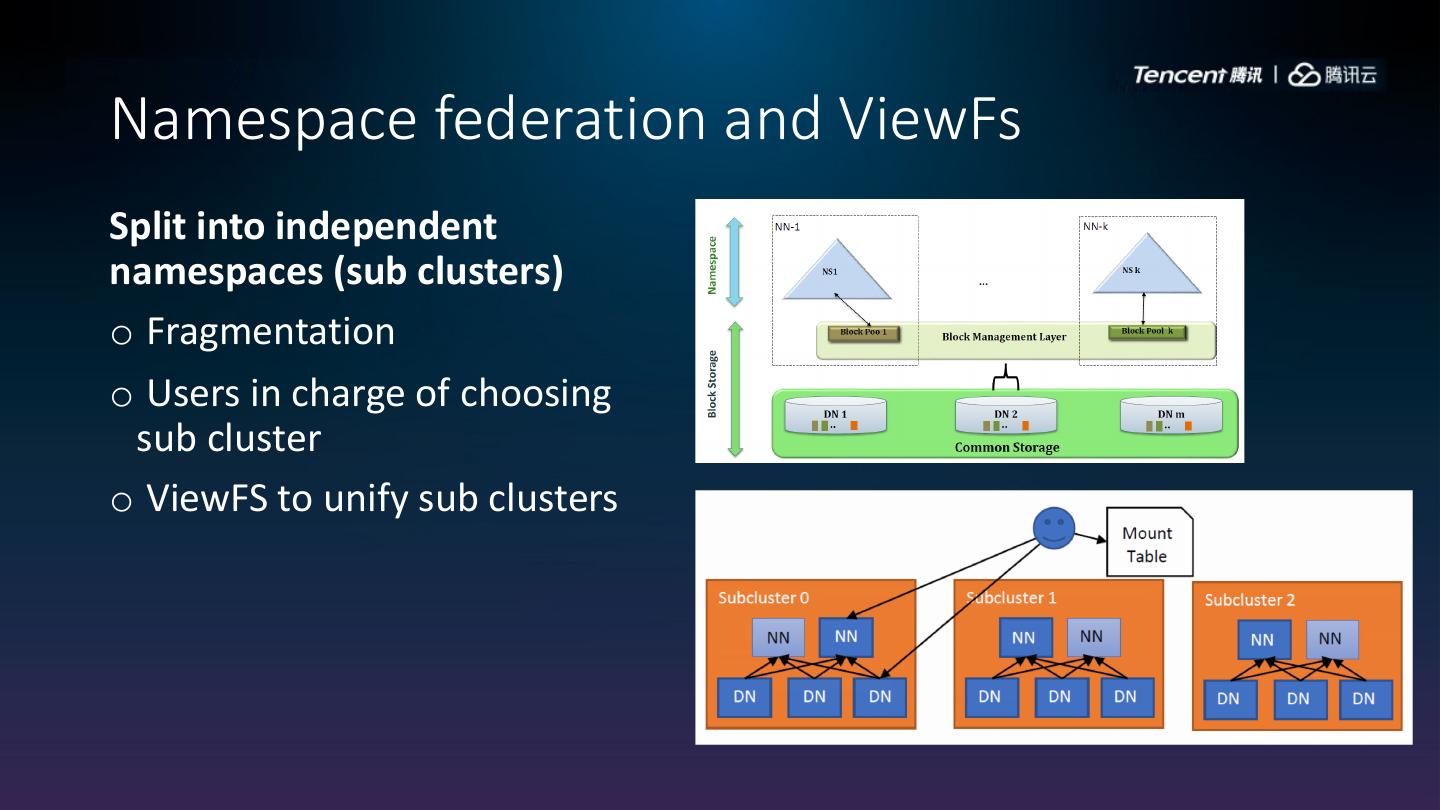

4. Namespace federation and ViewFs
    Split into independent namespaces (sub clusters)
    - Fragmentation
    - Users in charge of choosing sub cluster
    - ViewFs to unify sub clusters

5. RBF (Router-based federation)
    Unified server-side namespaces
    - Centralize the mount table
    - New Federation layer

6. Ozone - Motivation
    - Scale both Namespace and Block Layer
    - Scaling to trillions of objects
    - Naturally good for small files
    - Object store has simpler semantics than a file system and is easier to scale

7. Ozone architecture
    [Diagram: Ozone Client, Ozone Manager, Storage Container Manager, and three Datanodes, each labeled with Apache Ratis]

8. Ozone components
    - Datanode (Containers)
        - Datanode hosts containers. A container is a collection of blocks on a datanode
    - Storage Container Manager (SCM)
        - Manages the container and datanode life cycle
    - Ozone Manager (OM)
        - Maps Ozone objects to Containers
    - Ozone Client
        - Talks to OM to discover the location of a container and sends IO requests to the appropriate container

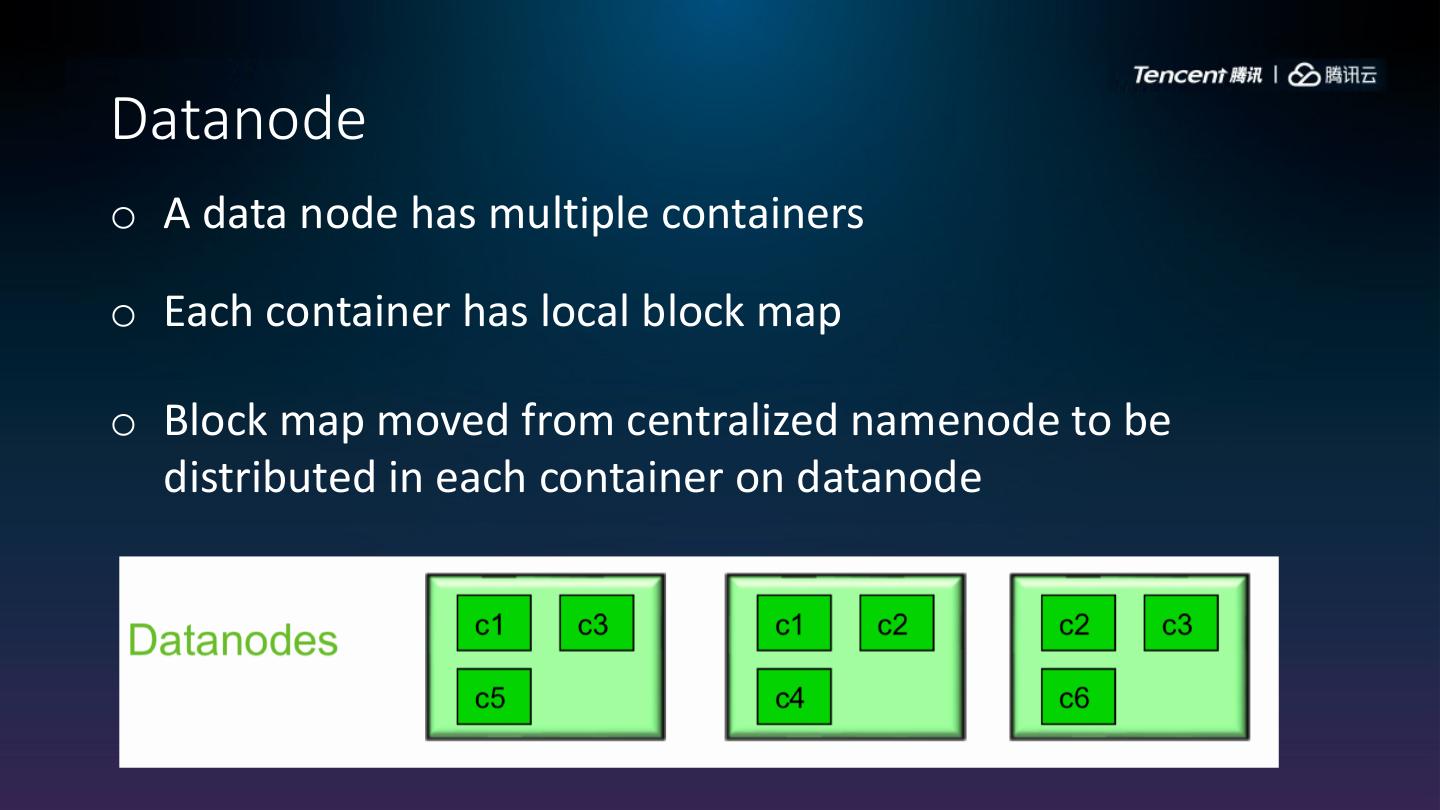

9. Datanode
    - A datanode has multiple containers
    - Each container has a local block map
    - The block map moves from the centralized namenode to being distributed across the containers on each datanode

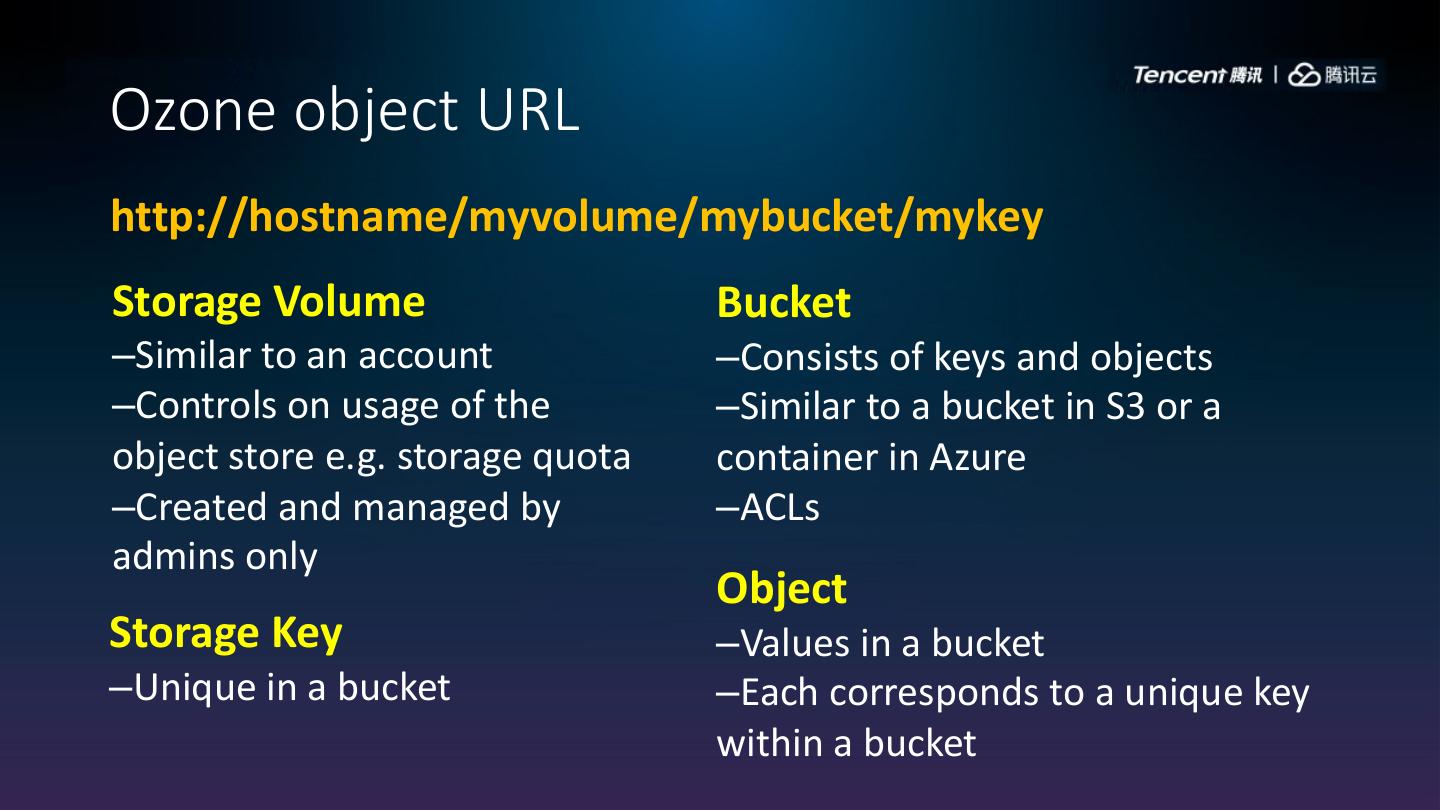

10. Containers

11. Containers
    - Container is the basic unit of replication (GB size, configurable)
    - Keys are unique only within a container
    - No requirements on the structure or format of keys/values. A key/value can be, for example:
        - An Ozone Key-Value pair
        - An HDFS block ID and block contents, or part of a block, when a block spans containers
    - Metadata in LSM-based K-V store replicated via the Raft protocol
    - A chunk is a piece of user data of arbitrary size, e.g. a few KB to GBs
    - Containers may garbage collect unreferenced chunks

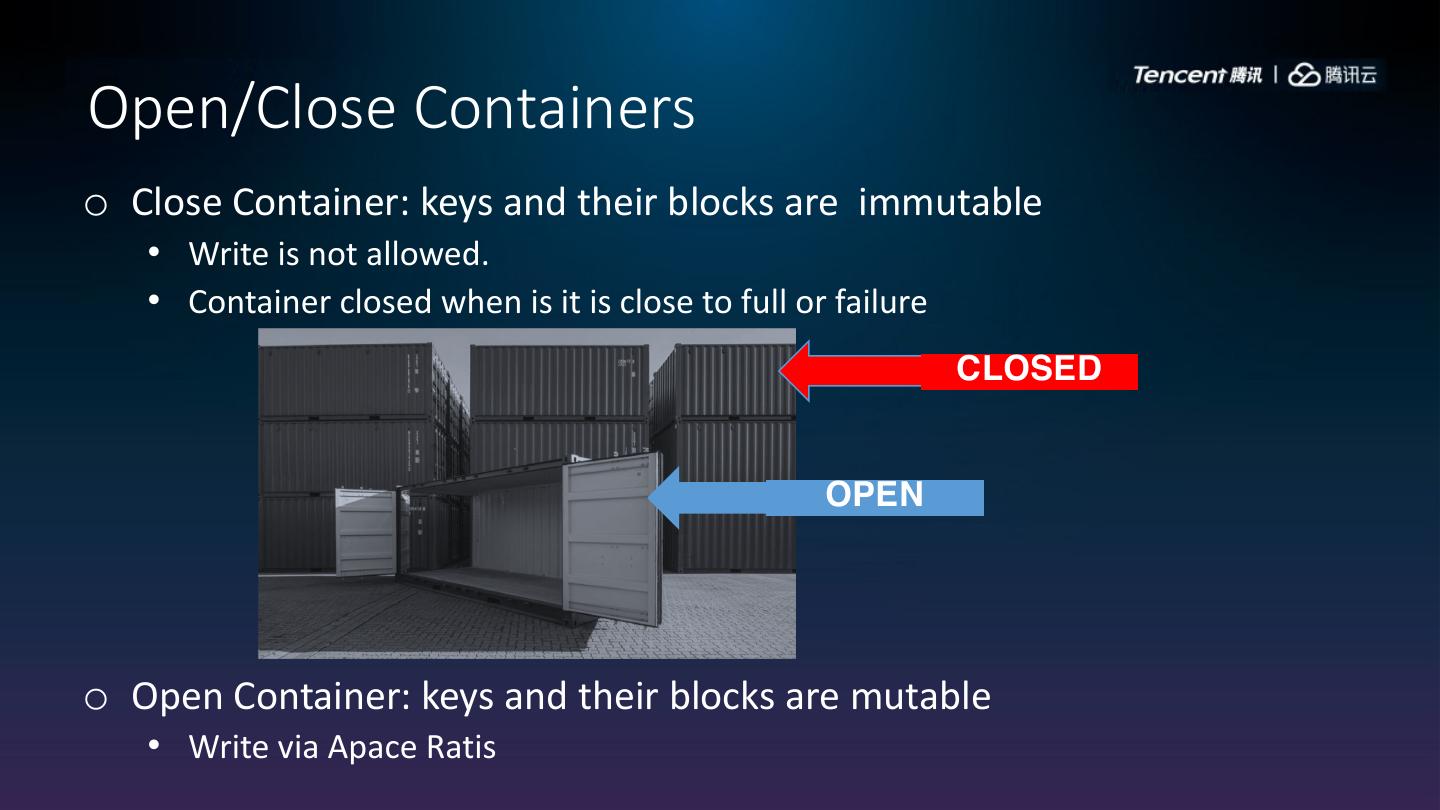

12. Open/Closed Containers
    - Closed Container: keys and their blocks are immutable
        - Writes are not allowed
        - A container is closed when it is close to full or on failure
    - Open Container: keys and their blocks are mutable
        - Writes go through Apache Ratis

    [Diagram: container states OPEN and CLOSED]
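The open/closed rule on this slide can be sketched as a tiny state machine. This is an illustrative toy, not Ozone code: the `Container` class, the `close_threshold` ratio used for "close to full", and the in-memory `blocks` dict are all assumptions, and the Ratis write path is left out entirely.

```python
from enum import Enum


class ContainerState(Enum):
    OPEN = "OPEN"
    CLOSED = "CLOSED"


class Container:
    """Toy container: writable while OPEN, immutable once CLOSED."""

    def __init__(self, capacity_bytes, close_threshold=0.9):
        self.capacity_bytes = capacity_bytes
        # Assumed meaning of "close to full": used / capacity >= threshold.
        self.close_threshold = close_threshold
        self.used_bytes = 0
        self.state = ContainerState.OPEN
        self.blocks = {}  # key -> bytes (stand-in for the real block data)

    def write(self, key, data):
        if self.state is ContainerState.CLOSED:
            raise PermissionError("container is closed; writes are not allowed")
        # Account for overwrites so used_bytes tracks live data only.
        self.used_bytes += len(data) - len(self.blocks.get(key, b""))
        self.blocks[key] = data
        if self.used_bytes >= self.capacity_bytes * self.close_threshold:
            self.close()

    def close(self):
        """Called when the container is nearly full or after a failure."""
        self.state = ContainerState.CLOSED
```

Once `close()` has run, every later `write` raises, which is the property that lets closed containers be replicated as immutable units.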

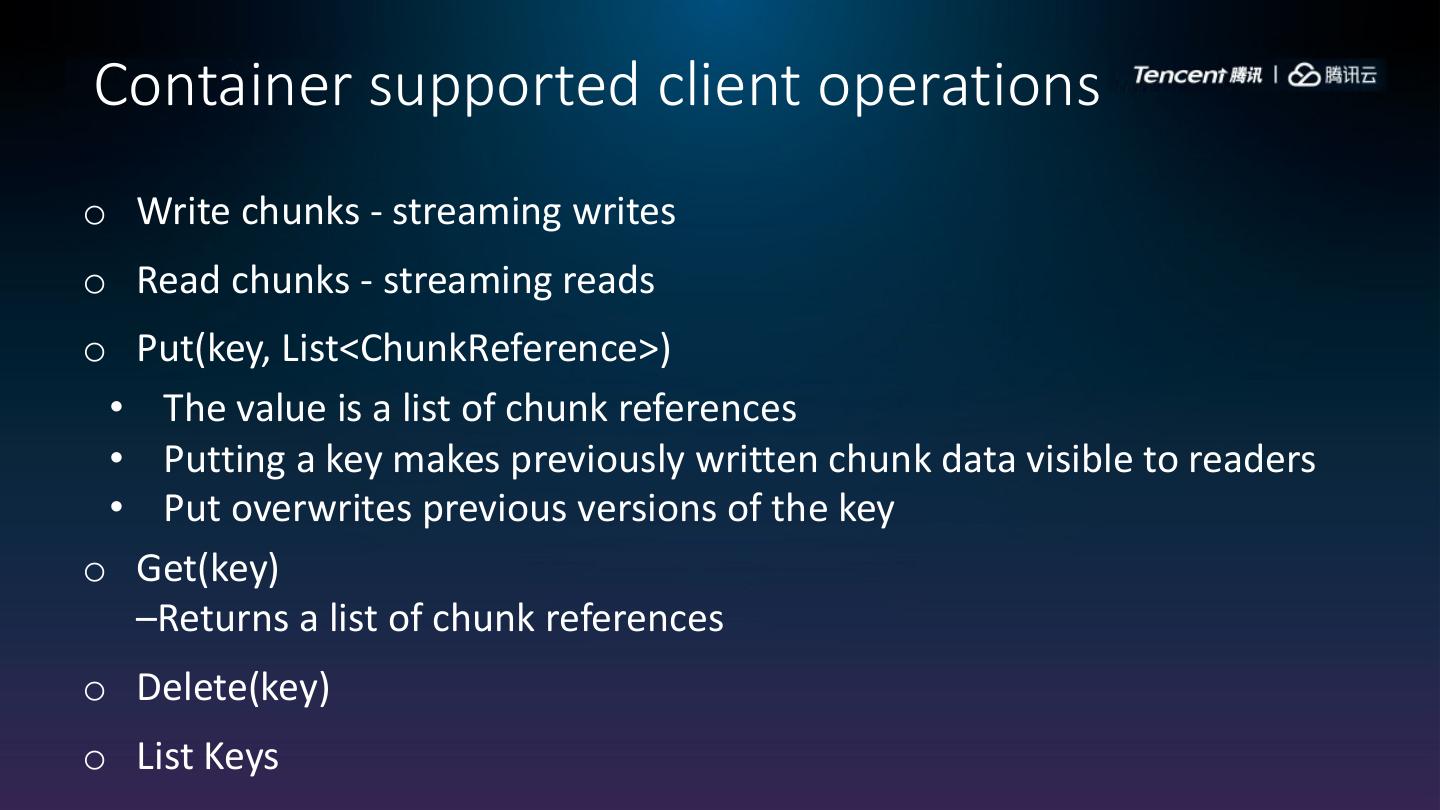

13. Container supported client operations
    - Write chunks - streaming writes
    - Read chunks - streaming reads
    - Put(key, List<ChunkReference>)
        - The value is a list of chunk references
        - Putting a key makes previously written chunk data visible to readers
        - Put overwrites previous versions of the key
    - Get(key)
        - Returns a list of chunk references
    - Delete(key)
    - List Keys
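A minimal in-memory sketch of these operations may help show how chunk writes and `Put` interact. Everything here is hypothetical (the `ToyContainer` class, string chunk ids, the method names); it only mirrors the semantics listed on the slide, plus the garbage collection of unreferenced chunks mentioned on the Containers slide.

```python
class ToyContainer:
    """In-memory stand-in for a container's chunk store and key map."""

    def __init__(self):
        self._chunks = {}  # chunk_id -> bytes, written but not yet visible
        self._keys = {}    # key -> list of chunk ids (the visible view)

    def write_chunk(self, chunk_id, data):
        self._chunks[chunk_id] = bytes(data)

    def read_chunk(self, chunk_id):
        return self._chunks[chunk_id]

    def put(self, key, chunk_refs):
        """Publish a key; overwrites any previous version of the key."""
        missing = [c for c in chunk_refs if c not in self._chunks]
        if missing:
            raise KeyError(f"chunks not written yet: {missing}")
        self._keys[key] = list(chunk_refs)

    def get(self, key):
        return list(self._keys[key])

    def delete(self, key):
        del self._keys[key]

    def list_keys(self):
        return sorted(self._keys)

    def collect_garbage(self):
        """Drop chunks no key references; returns how many were removed.

        Simplification: a real store must not collect chunks that belong
        to a write still in flight.
        """
        referenced = {c for refs in self._keys.values() for c in refs}
        unreferenced = [c for c in self._chunks if c not in referenced]
        for chunk_id in unreferenced:
            del self._chunks[chunk_id]
        return len(unreferenced)
```

A reader that only calls `get` never sees chunk data until `put` has published it, and an overwrite leaves the old chunks behind for `collect_garbage` to reclaim.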

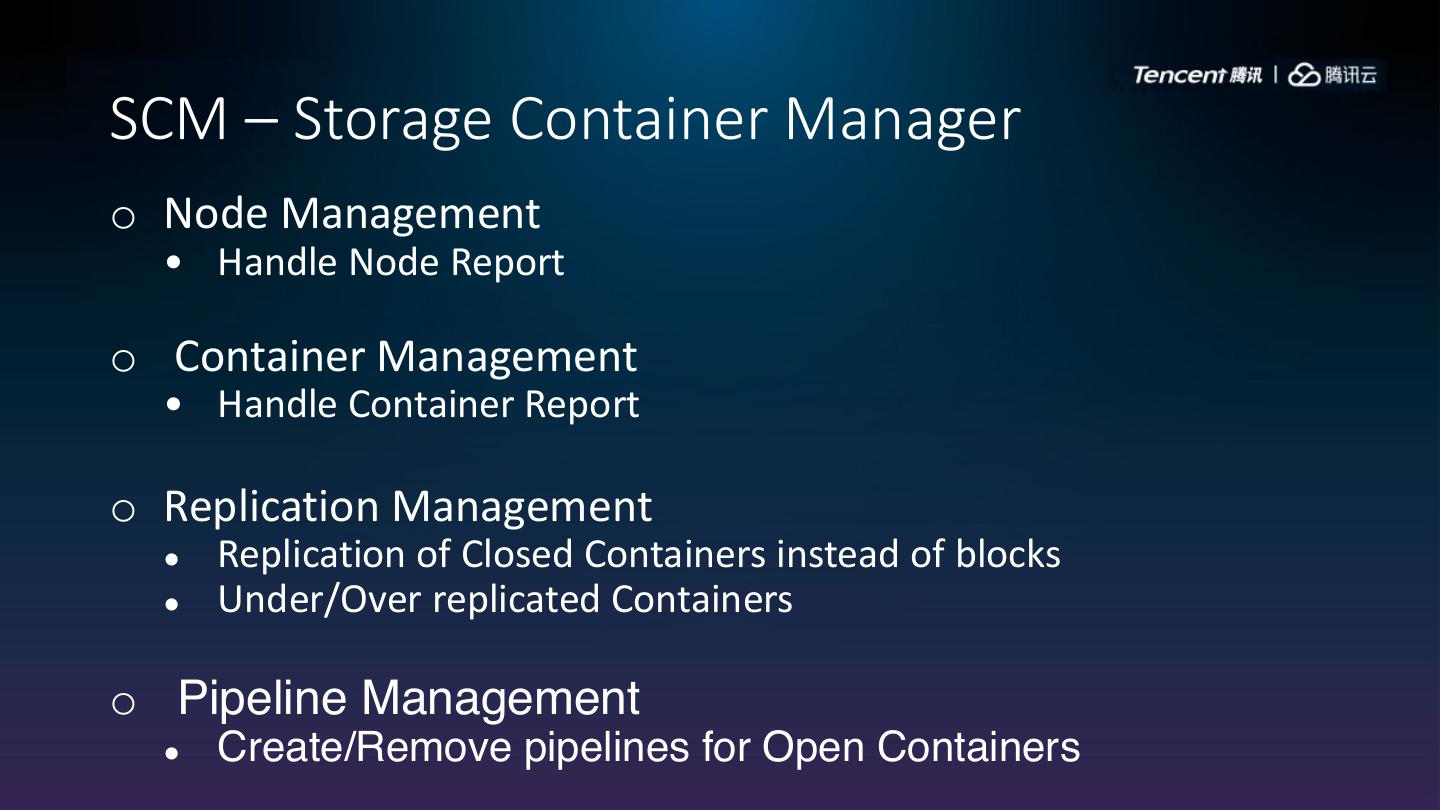

14. SCM: Storage Container Manager
    - Node Management
        - Handle Node Report
    - Container Management
        - Handle Container Report
    - Replication Management
        - Replication of Closed Containers instead of blocks
        - Under/Over replicated Containers
    - Pipeline Management
        - Create/Remove pipelines for Open Containers
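As a rough illustration of the replication-management bullet, the check below classifies closed containers as under- or over-replicated from a set of reported replicas. The function name, the report shape (container id mapped to the set of datanodes holding a replica), and the default replication factor of 3 are assumptions made for this sketch, not SCM's actual interfaces.

```python
def classify_containers(replica_reports, replication_factor=3):
    """Split closed containers into under- and over-replicated groups.

    replica_reports: dict mapping container id -> set of datanode ids
    that reported a replica (hypothetical shape).
    Returns (under, over): dicts mapping container id -> number of
    replicas to add or remove.
    """
    under, over = {}, {}
    for container_id, nodes in replica_reports.items():
        delta = replication_factor - len(nodes)
        if delta > 0:
            under[container_id] = delta
        elif delta < 0:
            over[container_id] = -delta
    return under, over
```

Because the unit here is a whole container rather than a block, the number of entries the manager has to track is far smaller than a per-block report would be.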

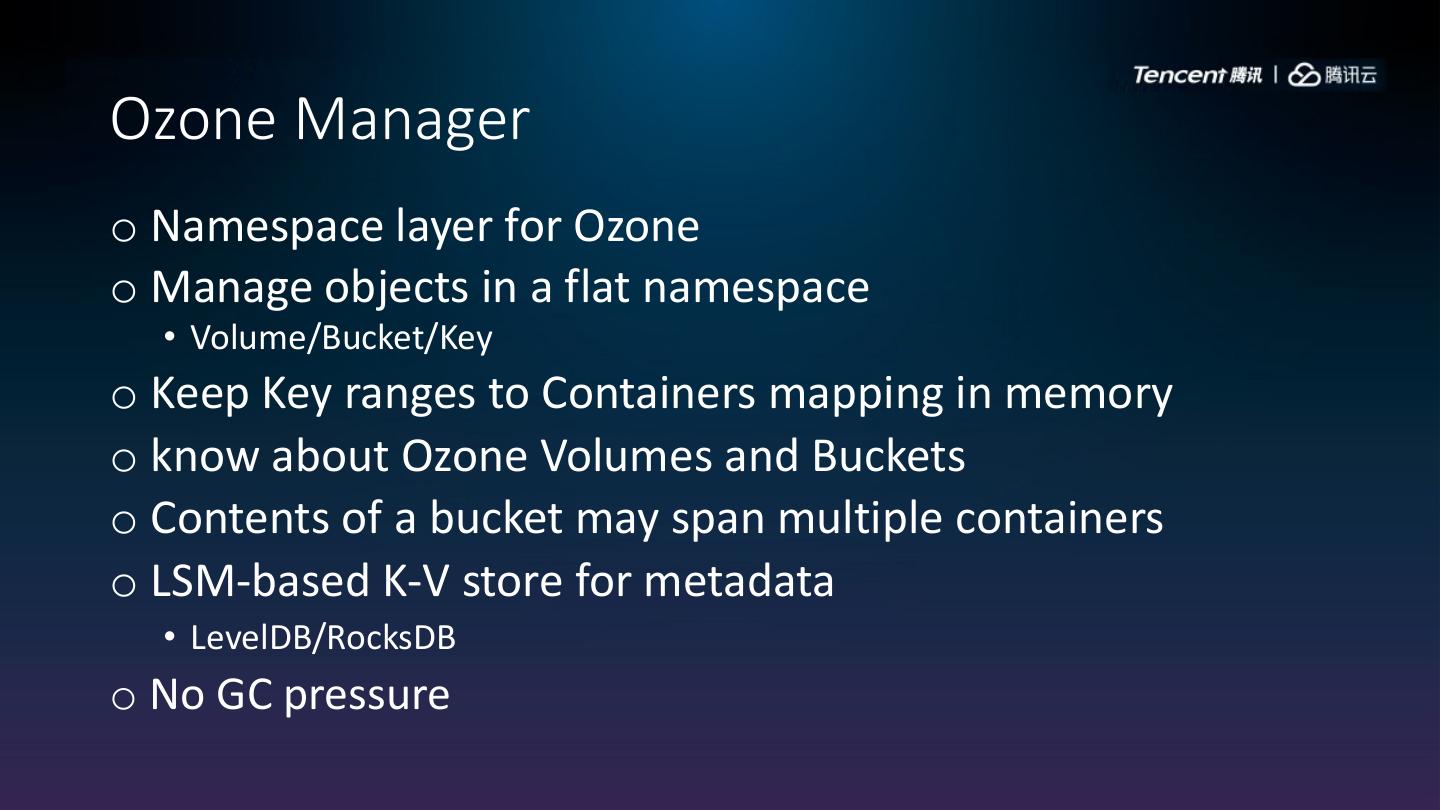

15. Ozone Manager
    - Namespace layer for Ozone
    - Manages objects in a flat namespace
        - Volume/Bucket/Key
    - Keeps the mapping from key ranges to Containers in memory
    - Knows about Ozone Volumes and Buckets
    - Contents of a bucket may span multiple containers
    - LSM-based K-V store for metadata
        - LevelDB/RocksDB
    - No GC pressure

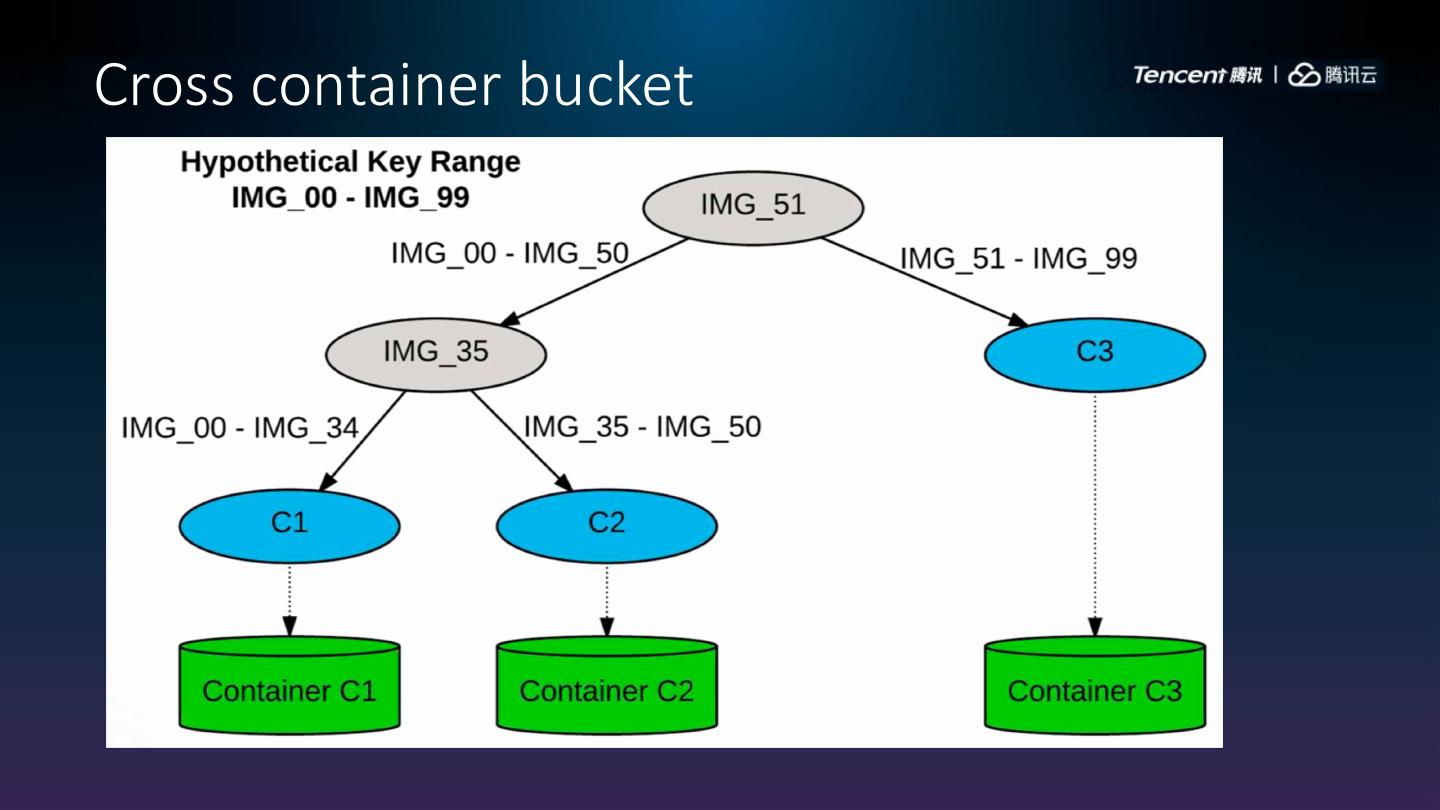

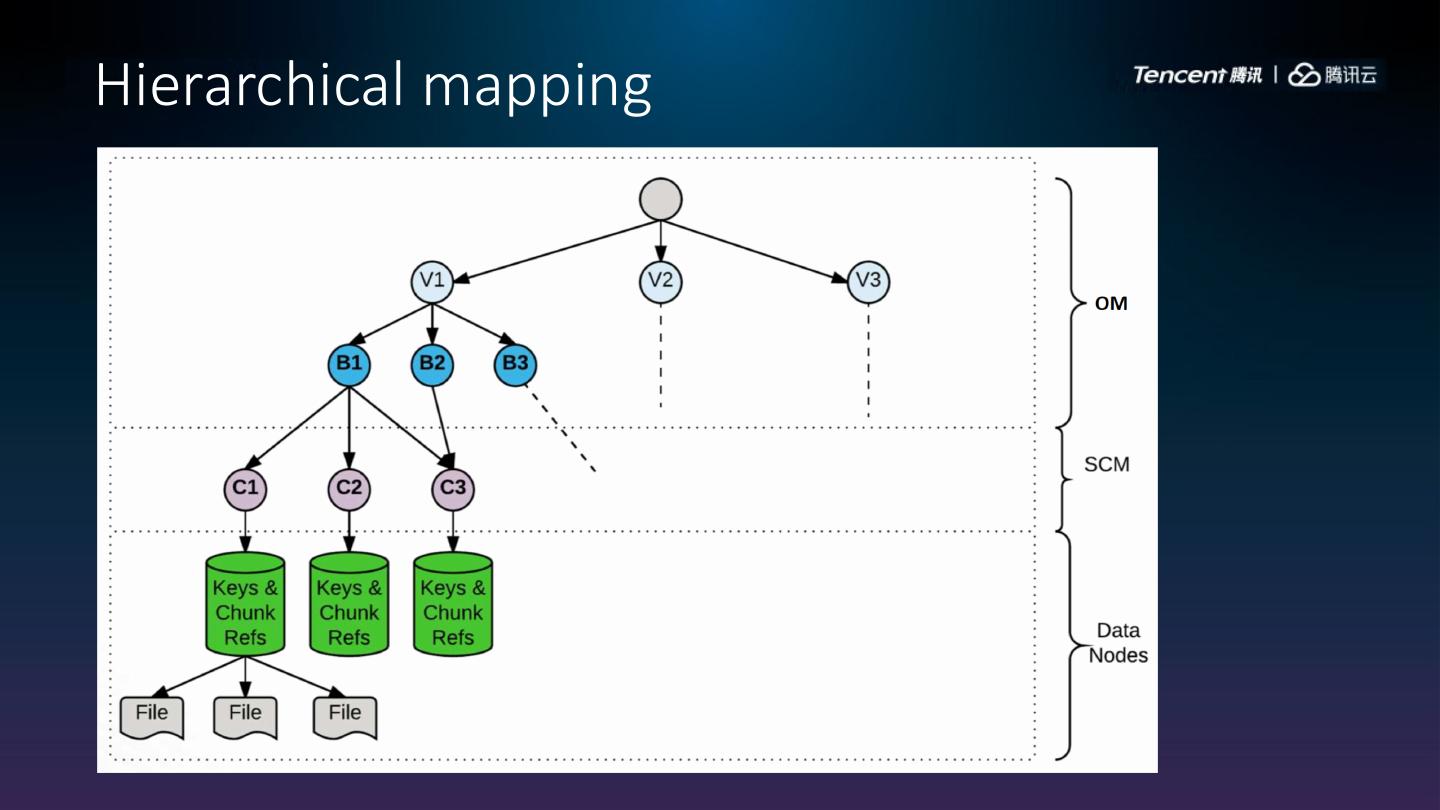

16. Cross container bucket

17. Ozone object URL
    http://hostname/myvolume/mybucket/mykey
    - Storage Volume
        - Similar to an account
        - Controls on usage of the object store, e.g. storage quota
        - Created and managed by admins only
    - Bucket
        - Consists of keys and objects
        - Similar to a bucket in S3 or a container in Azure
        - ACLs
    - Storage Key
        - Unique in a bucket
    - Object
        - Values in a bucket
        - Each corresponds to a unique key within a bucket
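To make the URL layout concrete, here is a small sketch that splits an object URL of the form shown on this slide into volume, bucket, and key. The helper name `parse_ozone_url` is hypothetical, and treating everything after the bucket as the key (so a key may itself contain `/`) is an assumption of this sketch.

```python
from urllib.parse import urlsplit


def parse_ozone_url(url):
    """Split http://host/volume/bucket/key into (volume, bucket, key)."""
    path = urlsplit(url).path.lstrip("/")
    # maxsplit=2 keeps any further "/" inside the key (assumed allowed).
    parts = path.split("/", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected /volume/bucket/key in {url!r}")
    volume, bucket, key = parts
    return volume, bucket, key
```

For the slide's example, `parse_ozone_url("http://hostname/myvolume/mybucket/mykey")` yields the three names in order.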

18. Hierarchical mapping

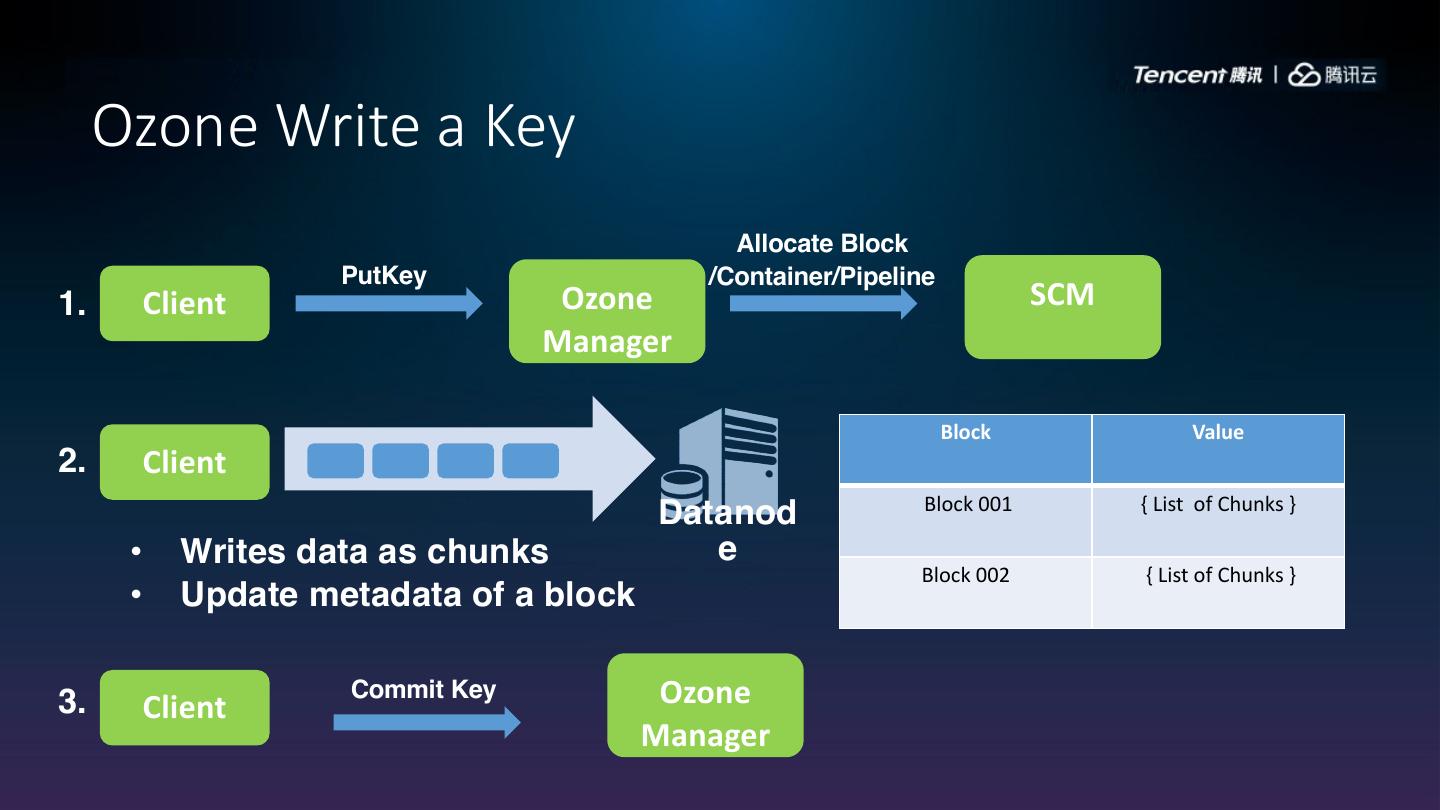

19. Ozone: Write a Key
    1. Client → Ozone Manager → SCM: PutKey, Allocate Block, returning Block/Container/Pipeline
    2. Client → Datanode
        - Writes data as chunks
        - Updates the metadata of a block
    3. Client → Ozone Manager: Commit Key

    Block → Value on the datanode: Block 001 → { List of Chunks }, Block 002 → { List of Chunks }

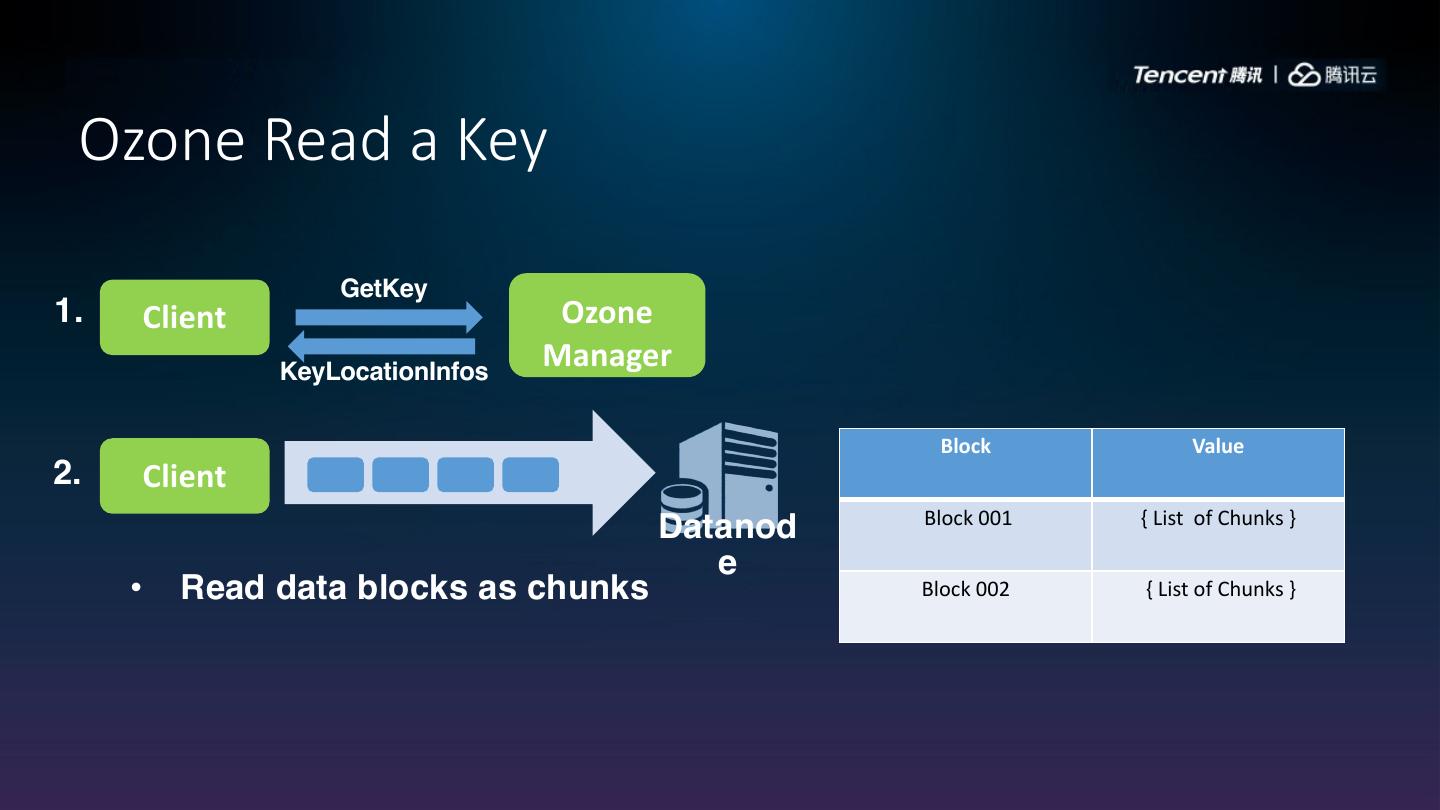

20. Ozone: Read a Key
    1. Client → Ozone Manager: GetKey, returning KeyLocationInfos
    2. Client → Datanode
        - Reads data blocks as chunks

    Block → Value on the datanode: Block 001 → { List of Chunks }, Block 002 → { List of Chunks }
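The write and read paths on the last two slides can be traced end to end with a toy model. All class and function names below (`ToySCM`, `ToyOzoneManager`, `ToyDatanode`, `write_key`, `read_key`) are hypothetical, block and chunk sizes are tiny on purpose, and replication, pipelines, and Ratis are omitted; the sketch only shows who talks to whom and when a key becomes visible.

```python
import itertools


class ToySCM:
    """Hands out block ids and picks a datanode round-robin."""

    def __init__(self, datanode_names):
        self._ids = itertools.count(1)
        self._datanodes = itertools.cycle(datanode_names)

    def allocate_block(self):
        return {"block_id": next(self._ids), "datanode": next(self._datanodes)}


class ToyDatanode:
    def __init__(self):
        self.blocks = {}  # block_id -> list of chunks

    def write_chunks(self, block_id, chunks):
        self.blocks[block_id] = list(chunks)

    def read_chunks(self, block_id):
        return self.blocks[block_id]


class ToyOzoneManager:
    def __init__(self, scm):
        self._scm = scm
        self._committed = {}  # key -> list of block locations

    def allocate_block(self):
        return self._scm.allocate_block()  # OM asks SCM on the client's behalf

    def commit_key(self, key, locations):
        self._committed[key] = list(locations)  # key becomes visible here

    def get_key(self, key):
        return list(self._committed[key])


def write_key(om, datanodes, key, data, block_size=8, chunk_size=4):
    locations = []
    for off in range(0, len(data), block_size):
        block = data[off:off + block_size]
        loc = om.allocate_block()                                # step 1
        chunks = [block[i:i + chunk_size]
                  for i in range(0, len(block), chunk_size)]
        datanodes[loc["datanode"]].write_chunks(loc["block_id"], chunks)  # step 2
        locations.append(loc)
    om.commit_key(key, locations)                                # step 3


def read_key(om, datanodes, key):
    return b"".join(
        chunk
        for loc in om.get_key(key)                               # step 1
        for chunk in datanodes[loc["datanode"]].read_chunks(loc["block_id"])  # step 2
    )
```

Note that the data itself never passes through the manager: it only hands out and records locations, while chunks flow directly between client and datanodes.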

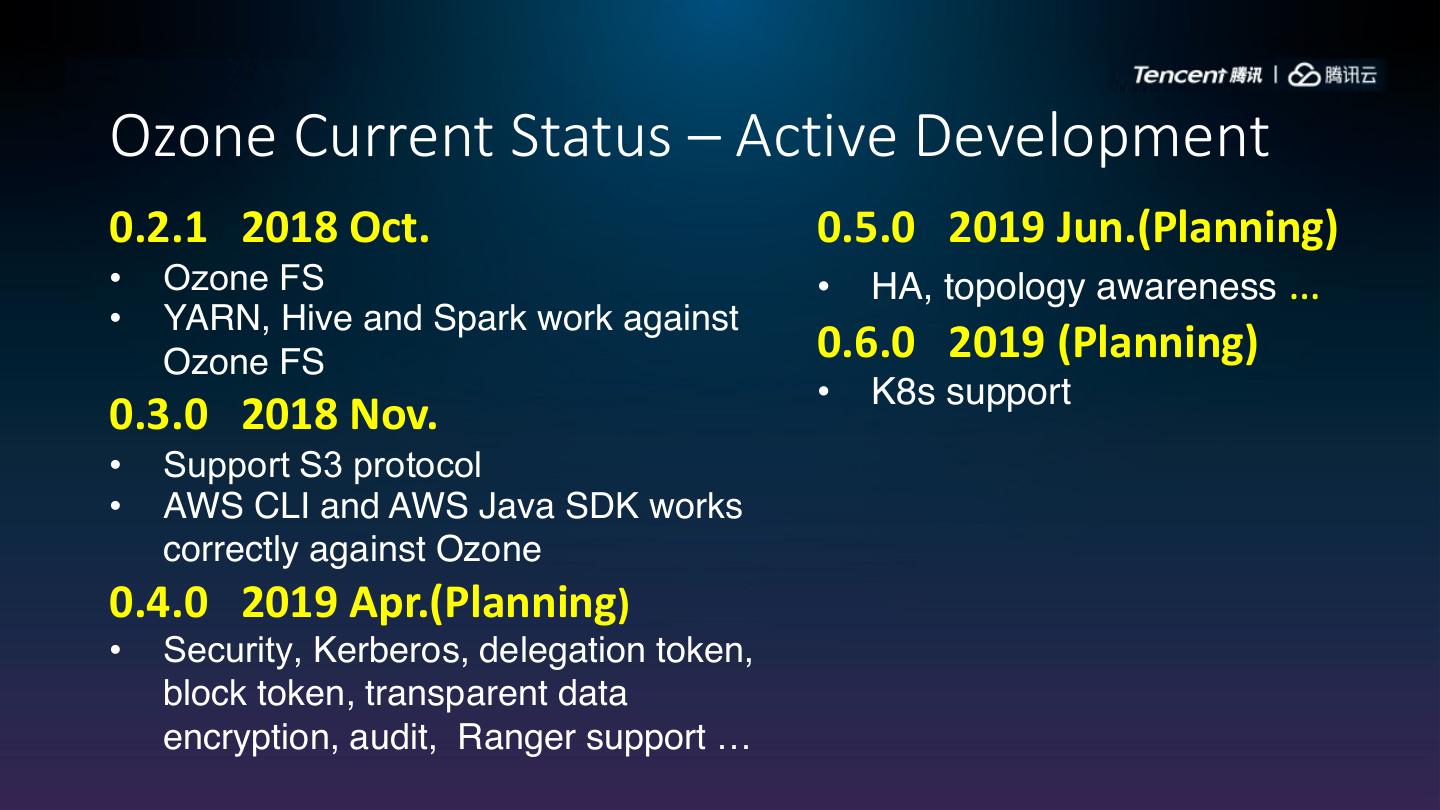

21. Ozone Current Status: Active Development
    - 0.2.1, Oct. 2018
        - Ozone FS
        - YARN, Hive and Spark work against Ozone FS
    - 0.3.0, Nov. 2018
        - Support S3 protocol
        - AWS CLI and AWS Java SDK work correctly against Ozone
    - 0.4.0, Apr. 2019 (Planning)
        - Security, Kerberos, delegation token, block token, transparent data encryption, audit, Ranger support …
    - 0.5.0, Jun. 2019 (Planning)
        - HA, topology awareness …
    - 0.6.0, 2019 (Planning)
        - K8s support

22. Contribution to Ozone
    - Tencent started contributing to Ozone from 0.4.0
    - Currently leads development of the container placement with network topology awareness feature

    https://hadoop.apache.org/ozone

    Welcome to contribute and try it out!

24. Thank You!