TensorFlow Extended: An End-to-End Machine Learning Platform for TensorFlow

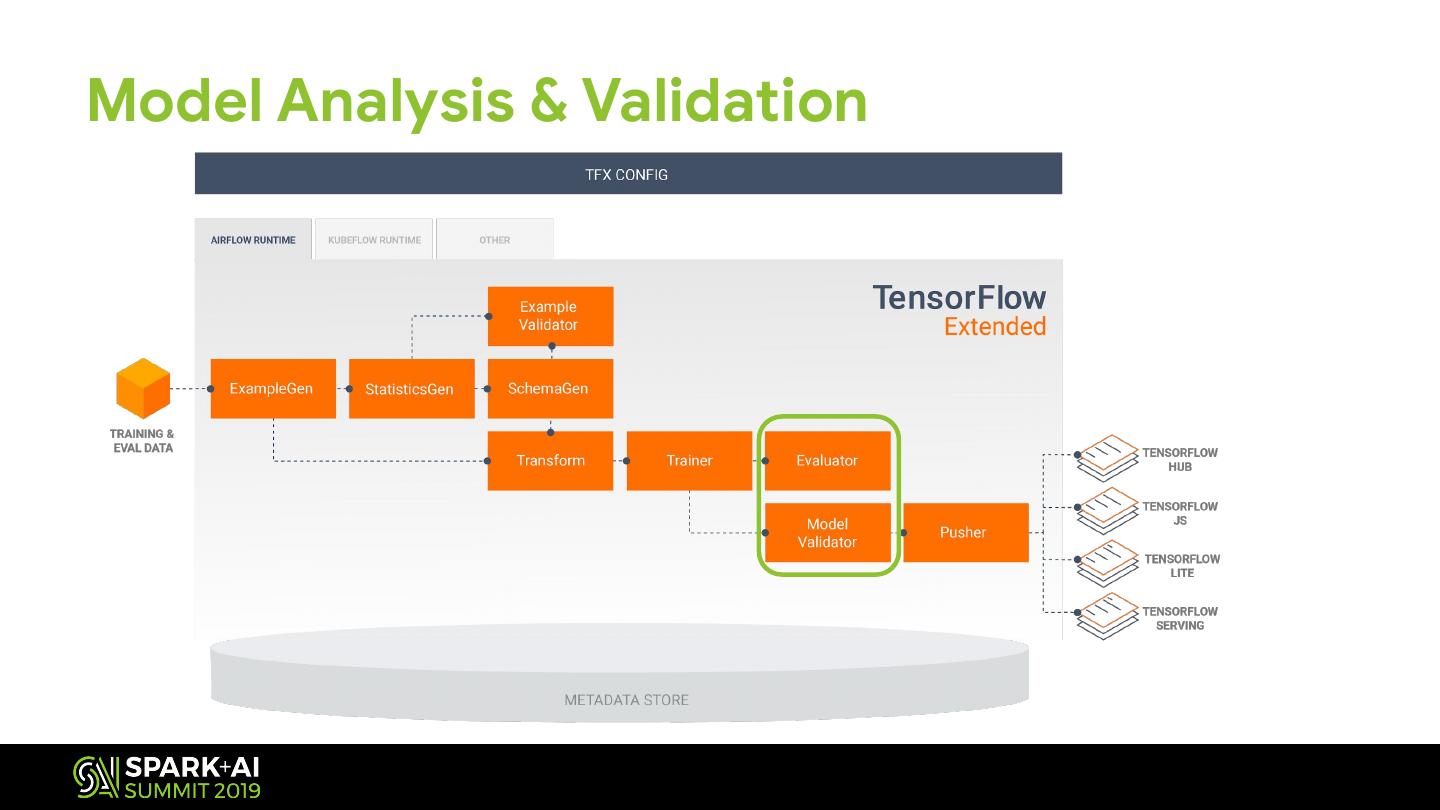

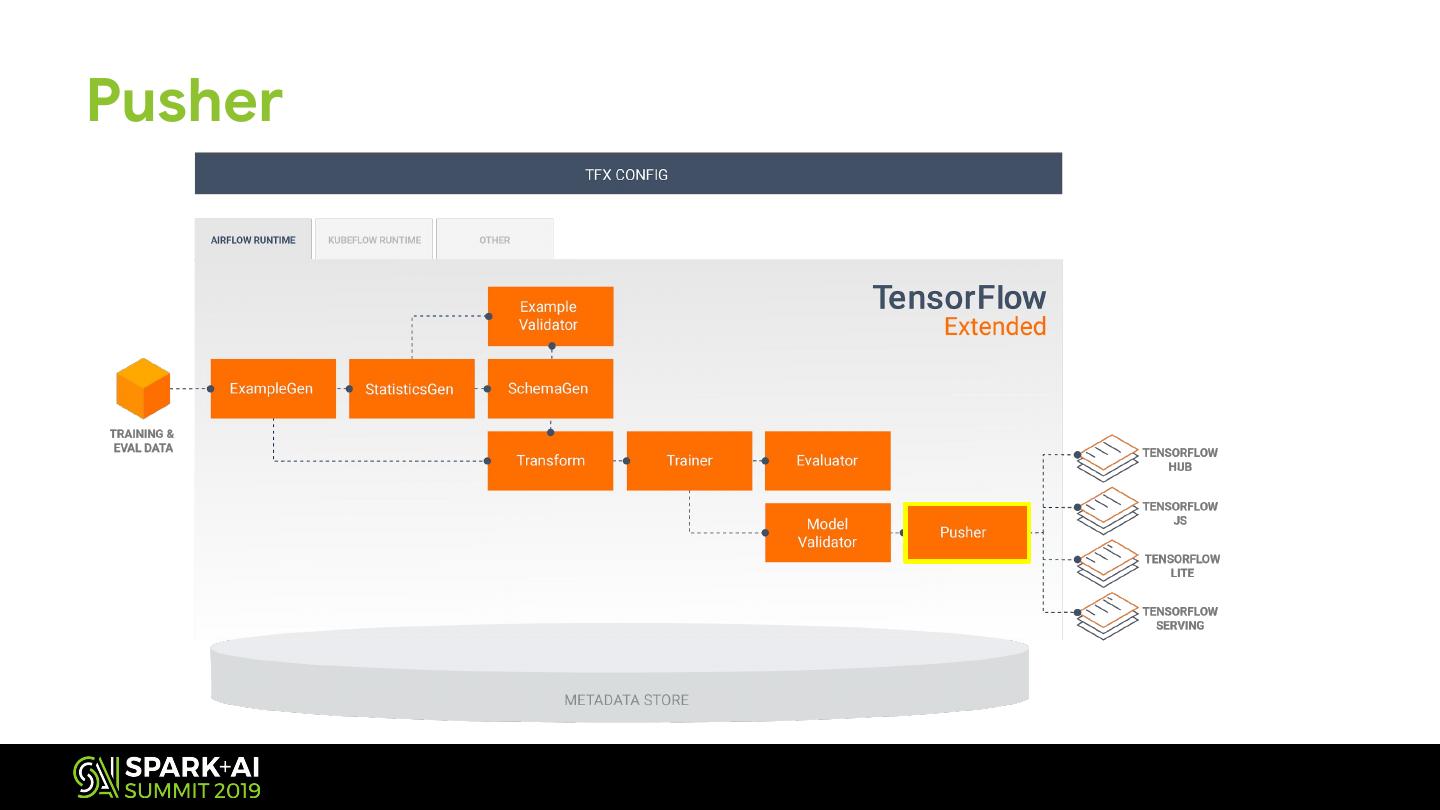

1. TensorFlow Extended (TFX): An End-to-End ML Platform. Konstantinos (Gus) Katsiapis (Google), Ahmet Altay (Google)

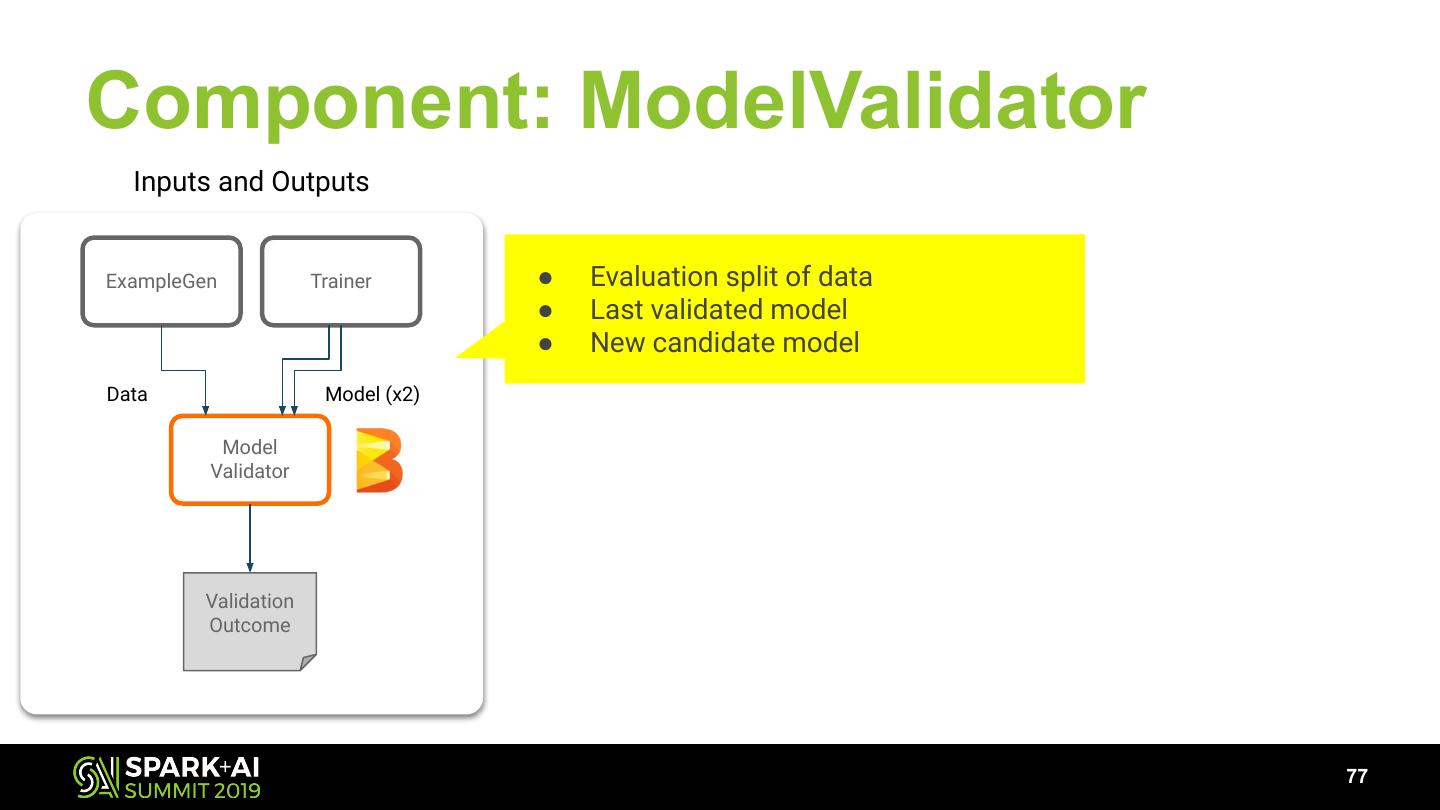

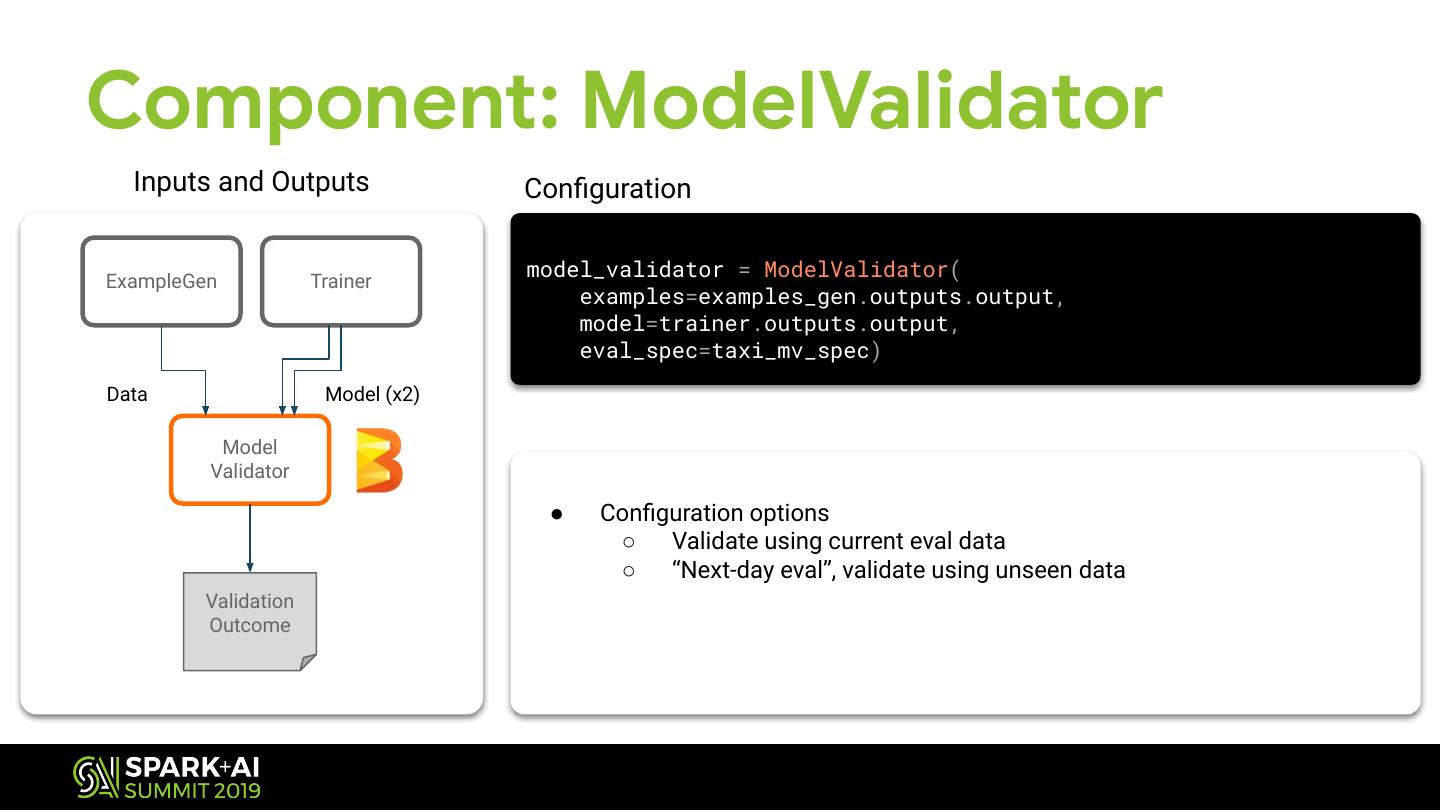

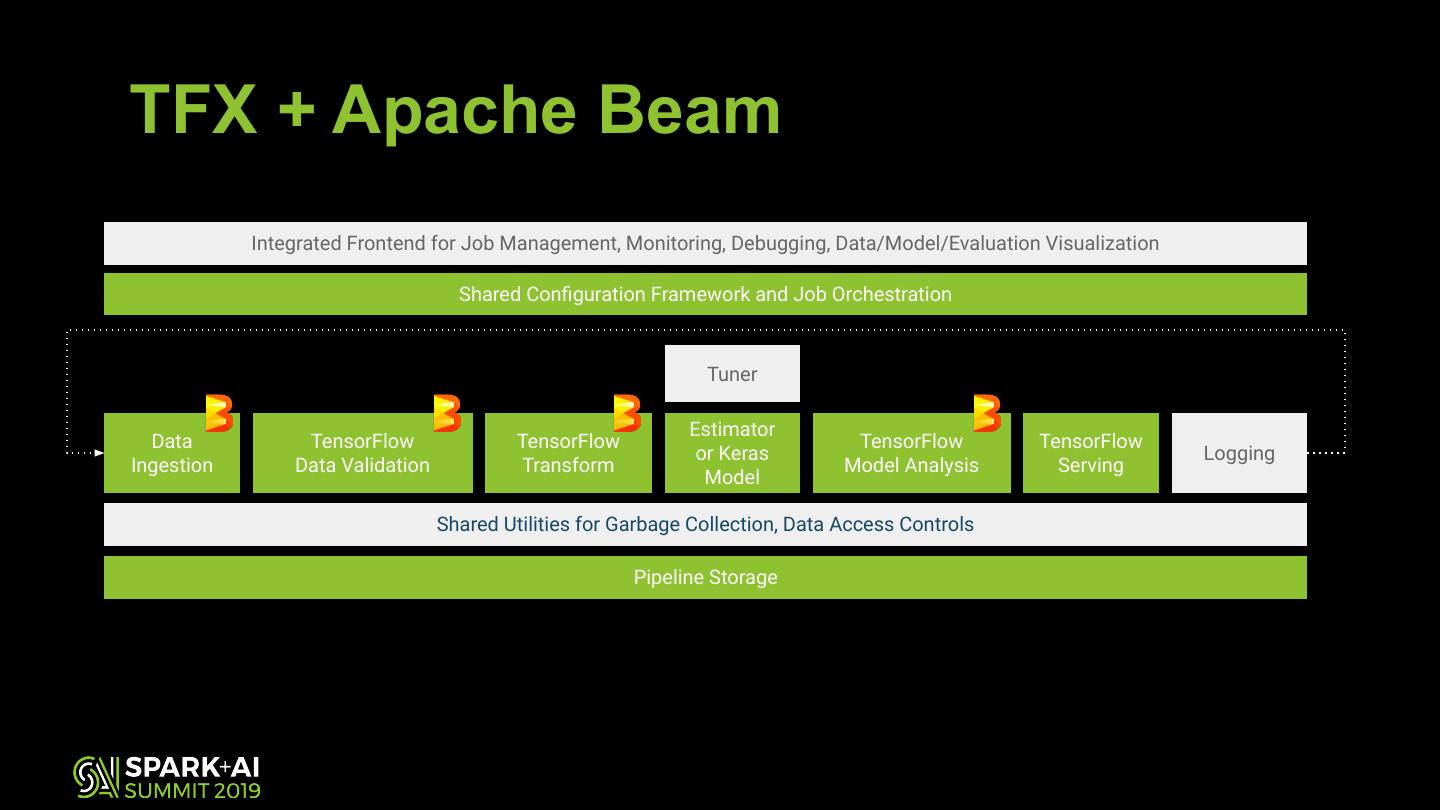

2. TensorFlow Extended (TFX) is an end-to-end ML pipeline for TensorFlow. The diagram shows these layers:
   - Integrated Frontend for Job Management, Monitoring, Debugging, and Data/Model/Evaluation Visualization
   - Shared Configuration Framework and Job Orchestration
   - Components: Tuner, Data Ingestion, Data Analysis + Validation, Feature Engineering, Trainer, Model Evaluation and Validation, Serving, Logging
   - Shared Utilities for Garbage Collection and Data Access Controls
   - Pipeline Storage

3. TFX powers our most important bets and products... AlphaBets; Major Products (incl. )

4. TensorFlow Extended (TFX) is an end-to-end ML pipeline for TensorFlow (the diagram from slide 2, repeated).

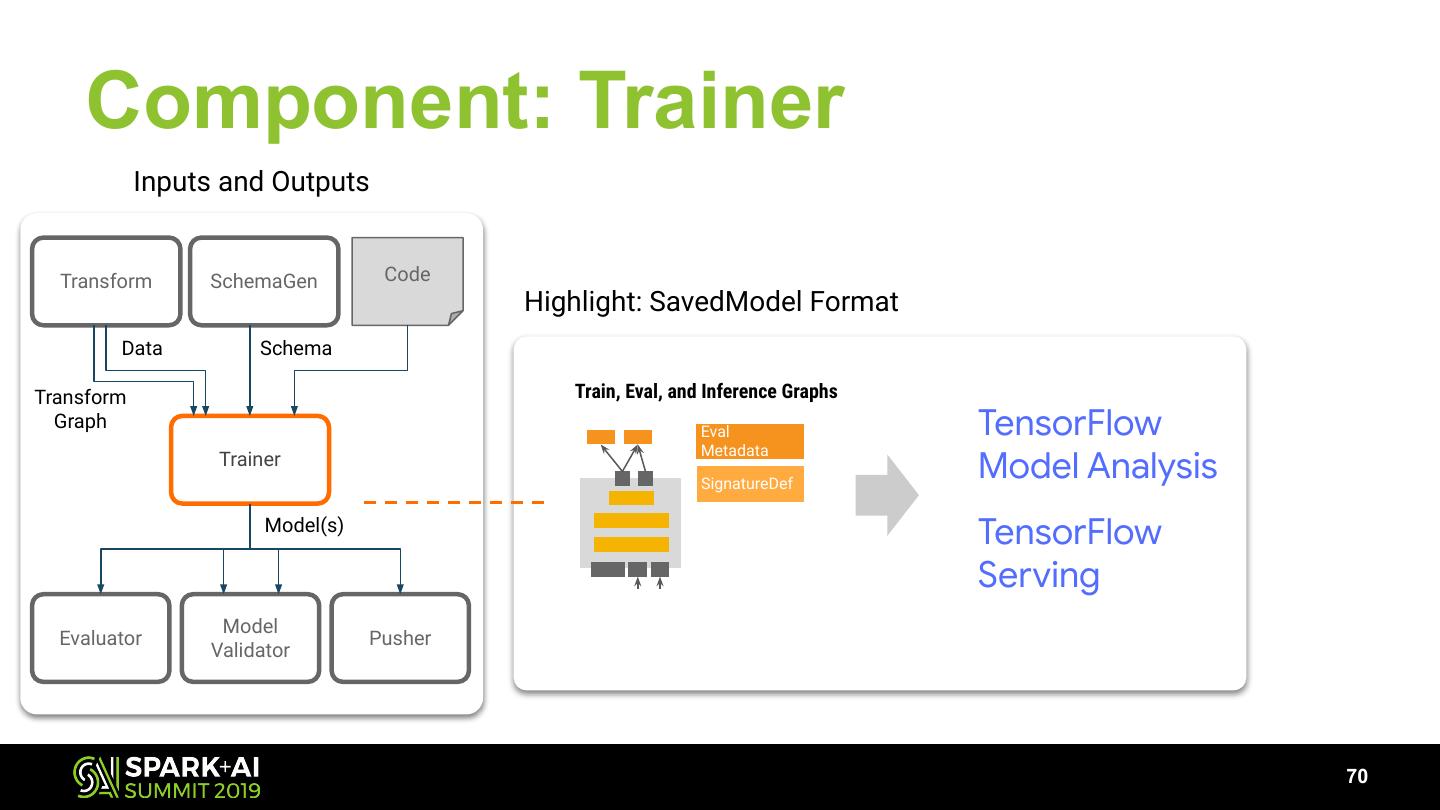

5. … and some of our most important partners.

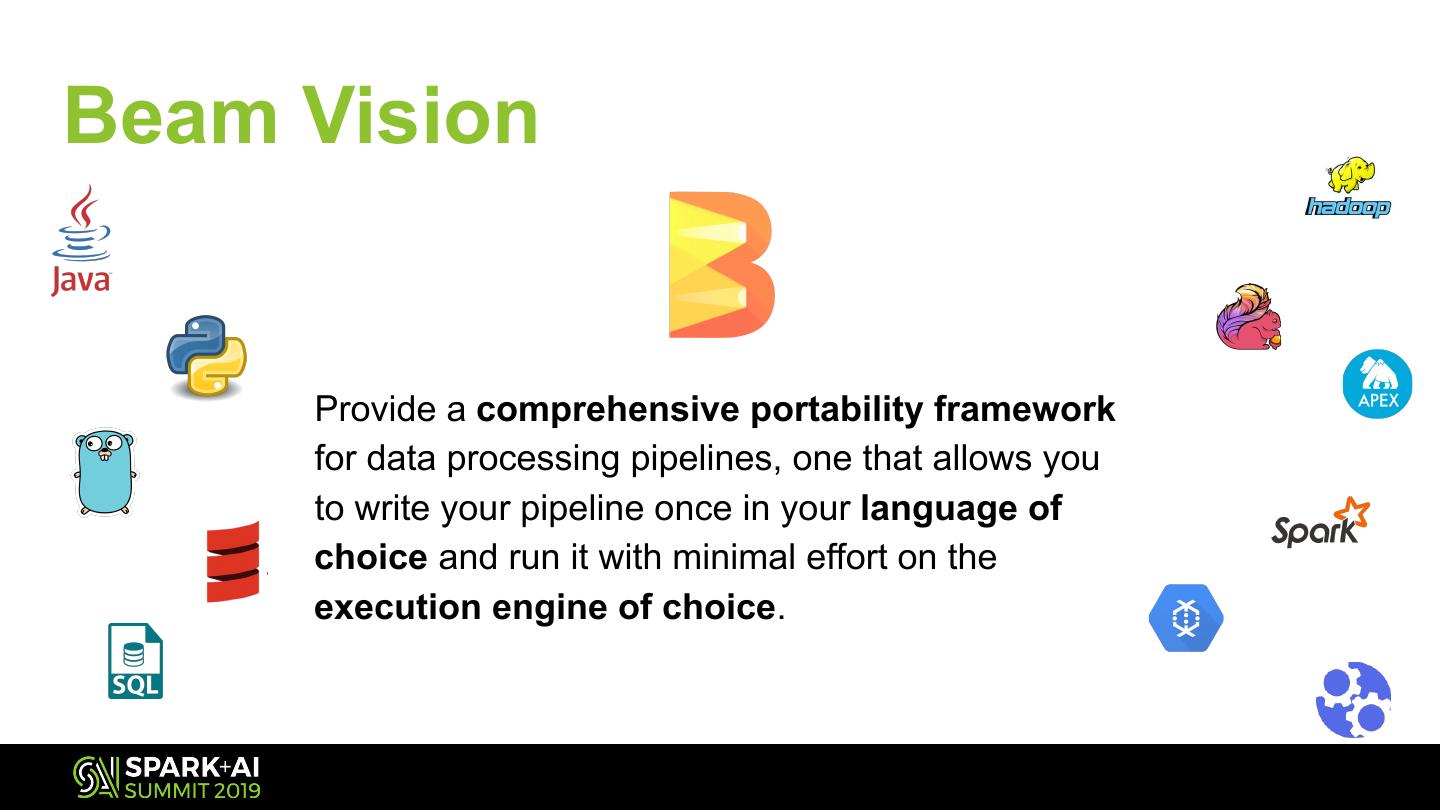

6. What is Apache Beam?
   - A unified batch and stream distributed processing API
   - A set of SDK frontends: Java, Python, Go, Scala, SQL
   - A set of Runners that can execute Beam jobs on various backends: Local, Apache Flink, Apache Spark, Apache Gearpump, Apache Samza, Apache Hadoop, Google Cloud Dataflow, …
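The slide describes Beam as one programming model with interchangeable runners. The following is a minimal sketch of that separation in plain Python. It is not Beam's API: `Pipeline`, `LocalRunner`, and `ThreadedRunner` are hypothetical names. With the real Beam SDK you would build the pipeline from Beam transforms and select a backend through pipeline options such as `--runner=DirectRunner` or `--runner=DataflowRunner`.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List

# A step behaves like a FlatMap: one input element -> zero or more outputs.
Step = Callable[[object], Iterable[object]]


class Pipeline:
    """Runner-agnostic pipeline definition: just an ordered list of steps."""

    def __init__(self) -> None:
        self.steps: List[Step] = []

    def flat_map(self, fn: Step) -> "Pipeline":
        self.steps.append(fn)
        return self

    def map(self, fn: Callable[[object], object]) -> "Pipeline":
        return self.flat_map(lambda x: [fn(x)])

    def filter(self, pred: Callable[[object], bool]) -> "Pipeline":
        return self.flat_map(lambda x: [x] if pred(x) else [])


class LocalRunner:
    """Executes every step sequentially in the current process."""

    def run(self, pipeline: Pipeline, data: Iterable[object]) -> List[object]:
        elements = list(data)
        for step in pipeline.steps:
            elements = [out for x in elements for out in step(x)]
        return elements


class ThreadedRunner:
    """Executes each step in parallel over elements (stand-in for a distributed backend)."""

    def __init__(self, workers: int = 4) -> None:
        self.workers = workers

    def run(self, pipeline: Pipeline, data: Iterable[object]) -> List[object]:
        elements = list(data)
        with ThreadPoolExecutor(max_workers=self.workers) as pool:
            for step in pipeline.steps:
                # pool.map keeps input order, so results match LocalRunner's.
                chunks = pool.map(lambda x, s=step: list(s(x)), elements)
                elements = [out for chunk in chunks for out in chunk]
        return elements


# The same pipeline definition runs unchanged on either runner.
words = ["beam", "tfx", "serving", "tf"]
pipeline = Pipeline().filter(lambda w: len(w) > 2).map(str.upper)
print(LocalRunner().run(pipeline, words))      # ['BEAM', 'TFX', 'SERVING']
print(ThreadedRunner(2).run(pipeline, words))  # ['BEAM', 'TFX', 'SERVING']
```

Keeping the pipeline a plain description, with execution delegated to a runner object, is what allows the same user code to target different backends.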

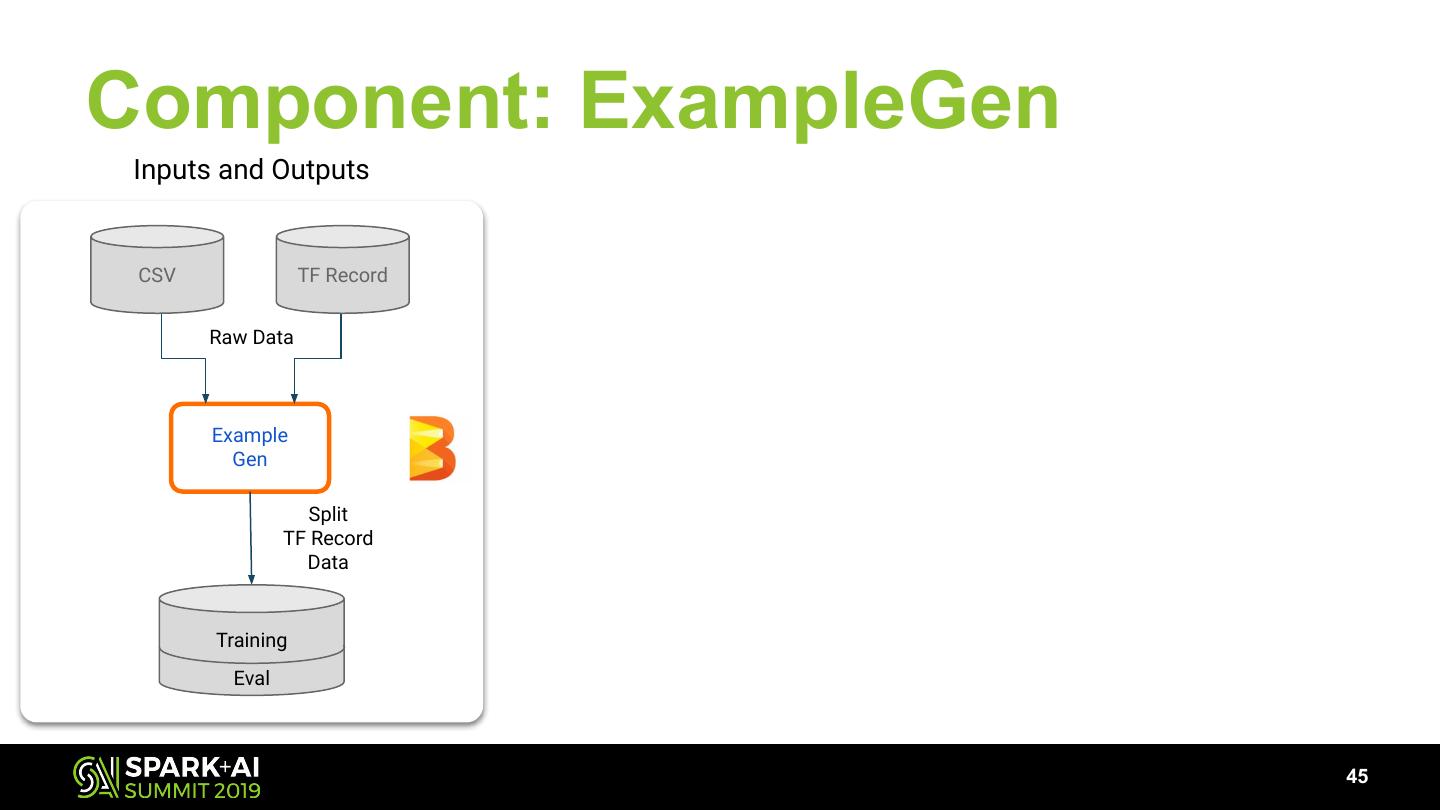

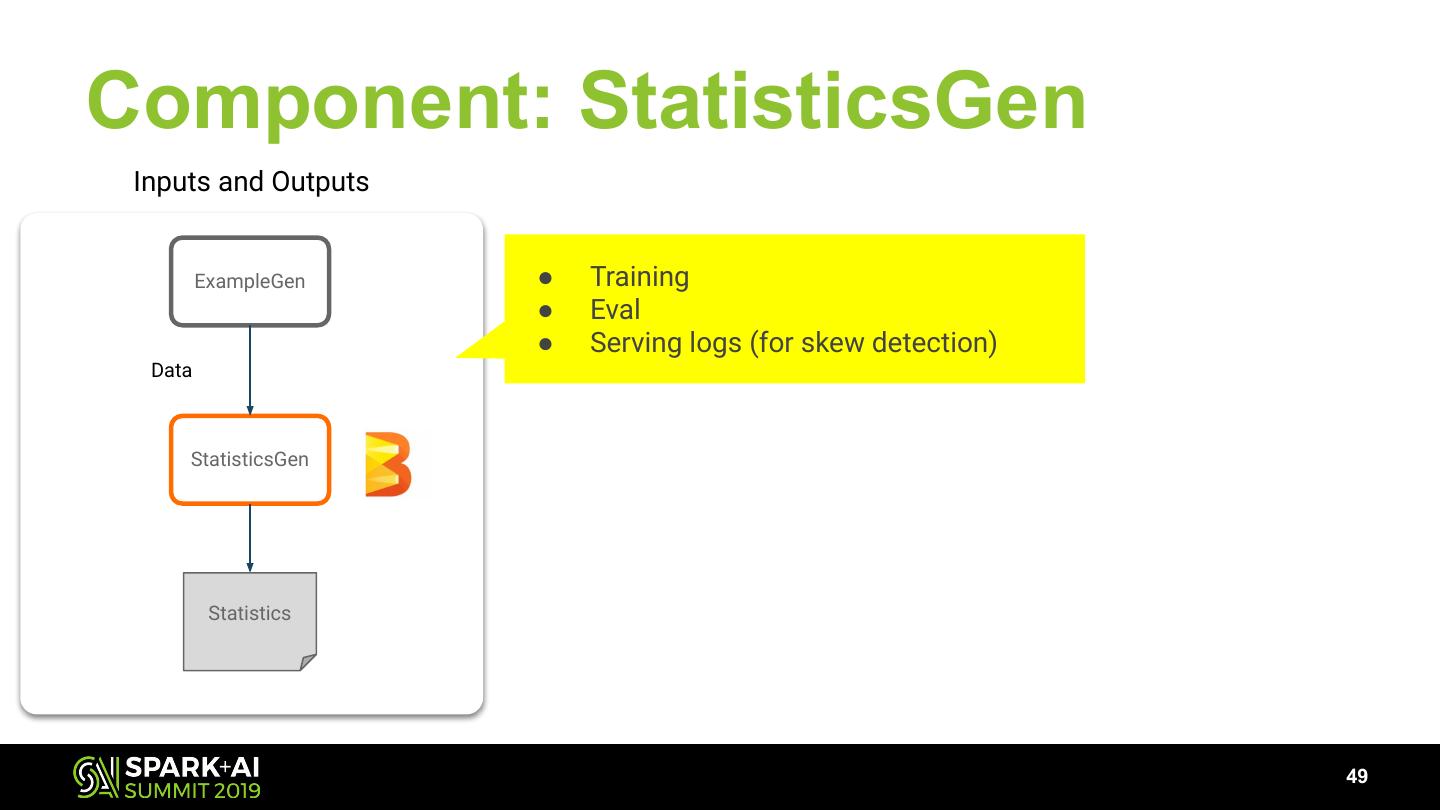

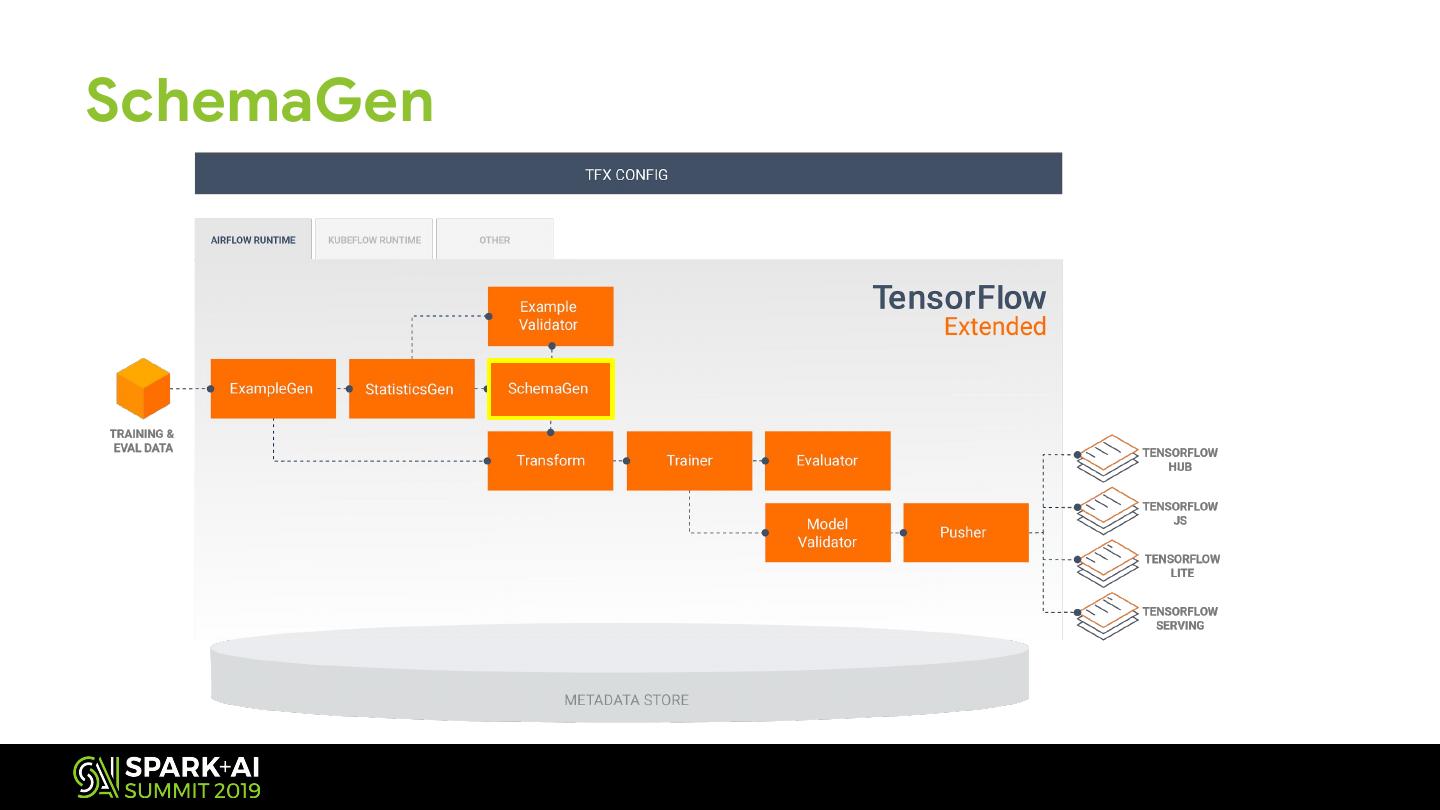

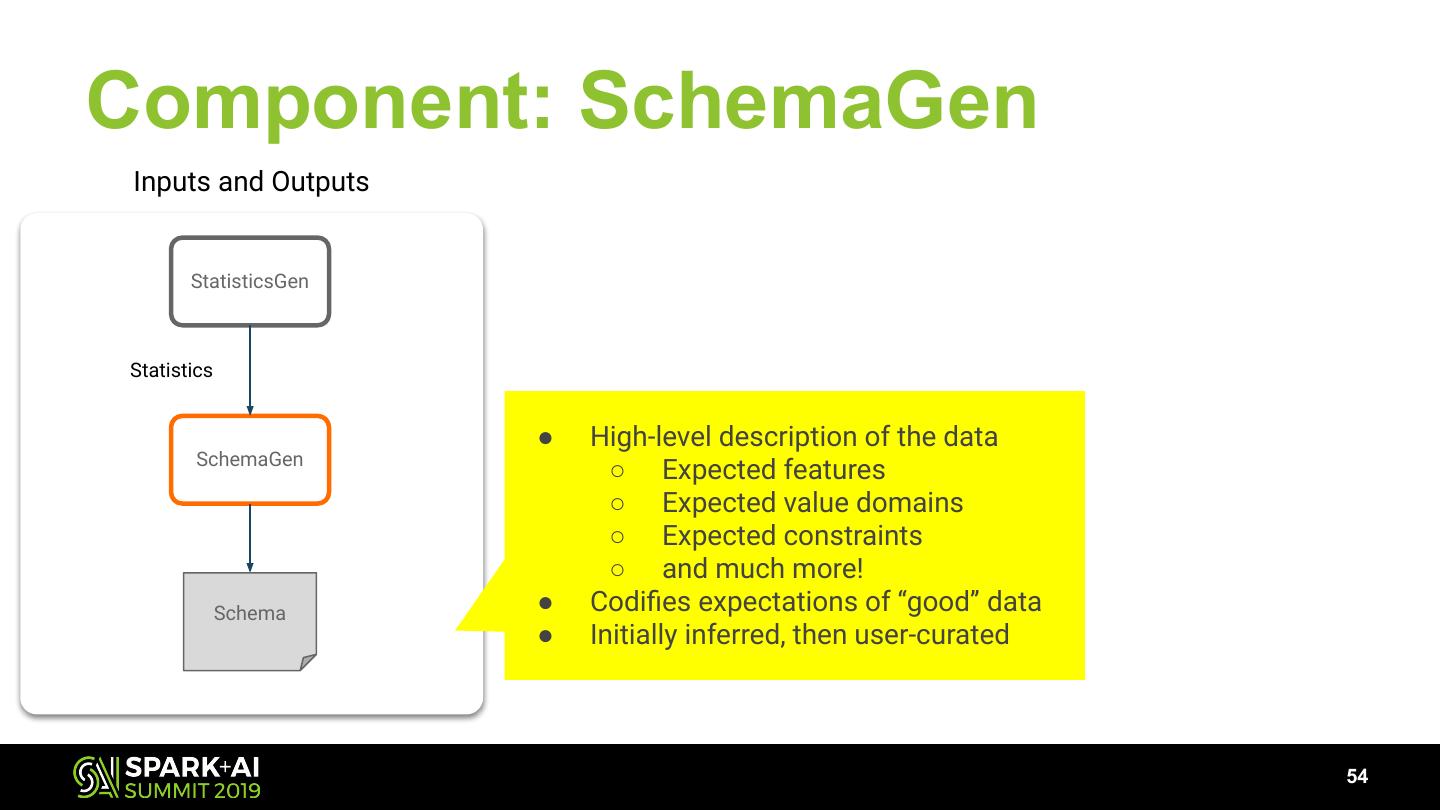

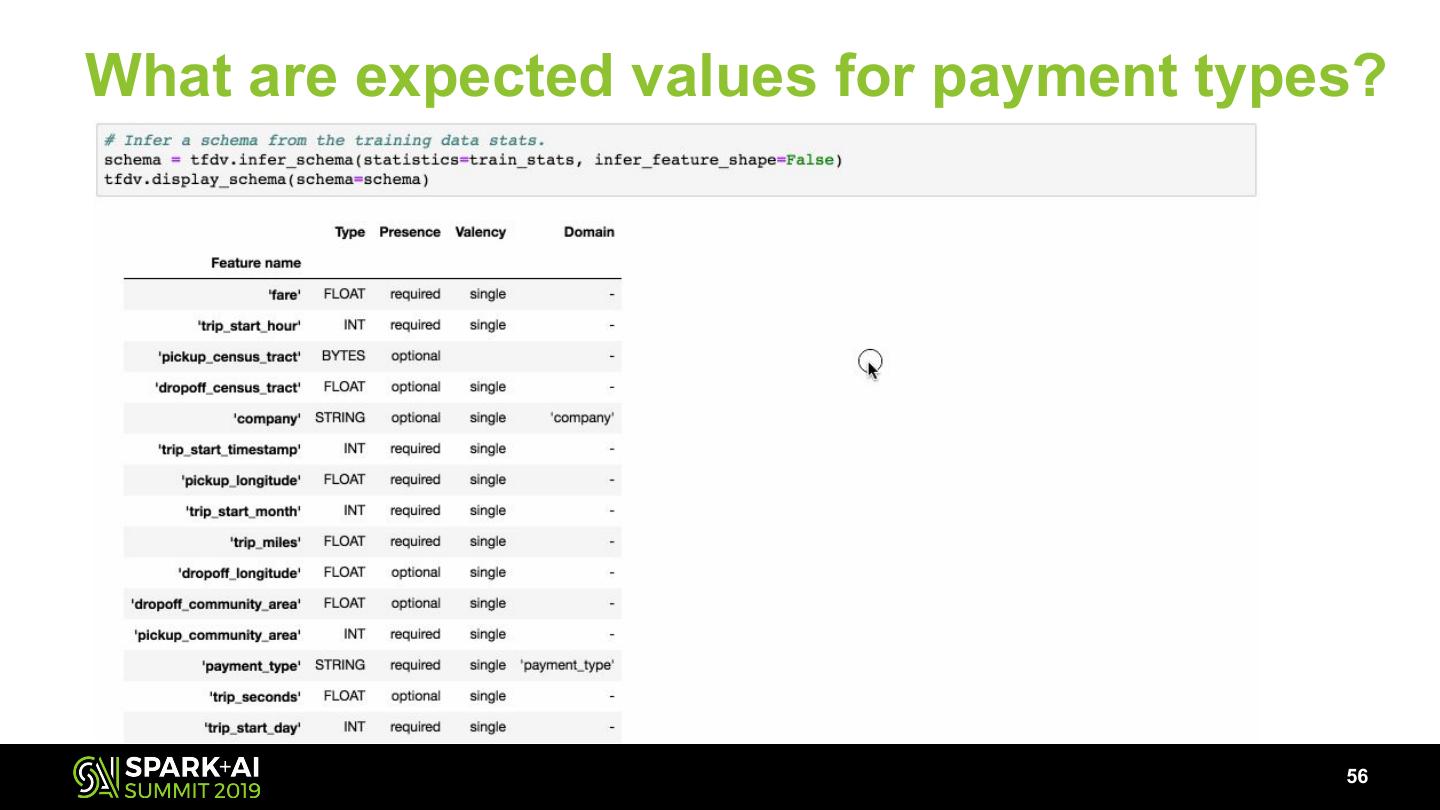

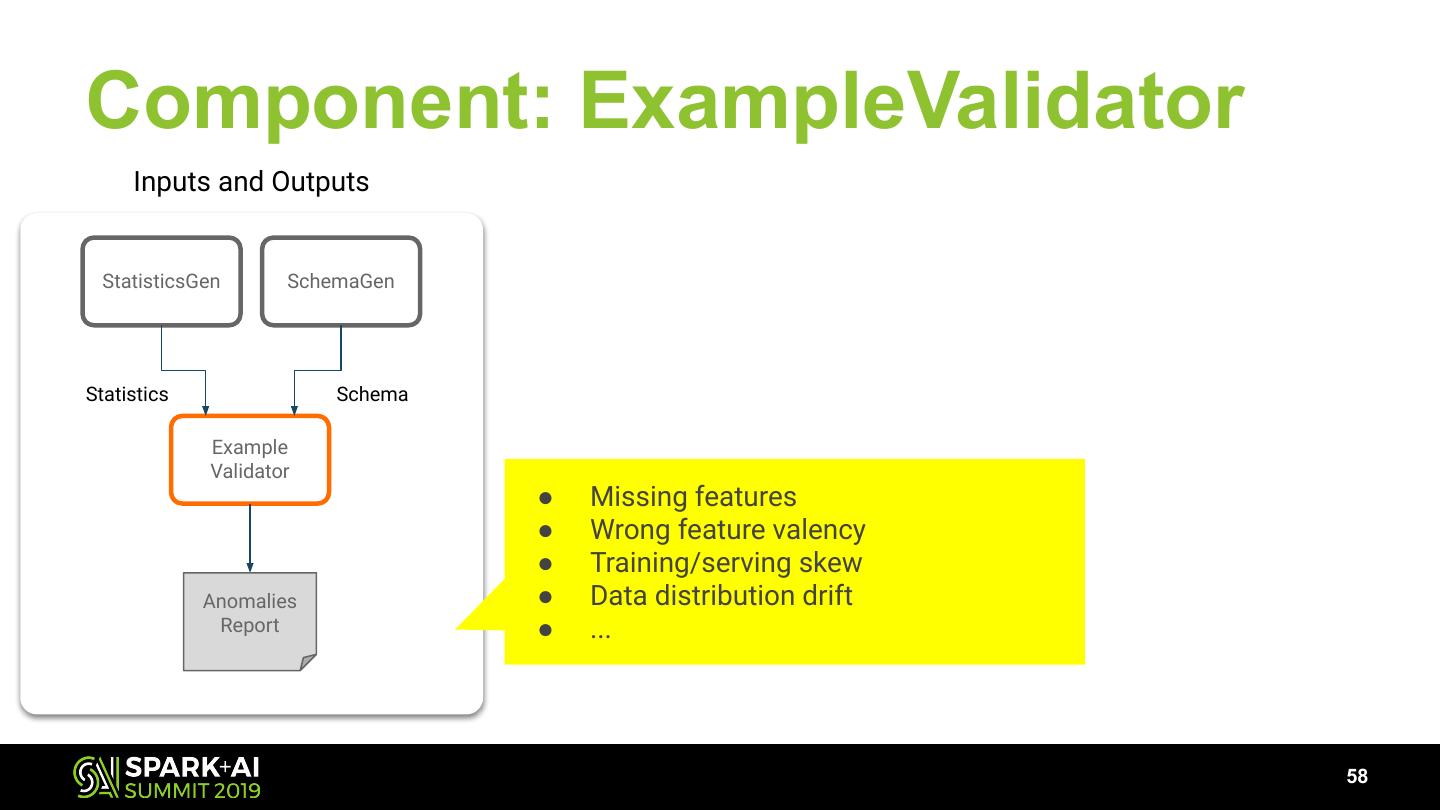

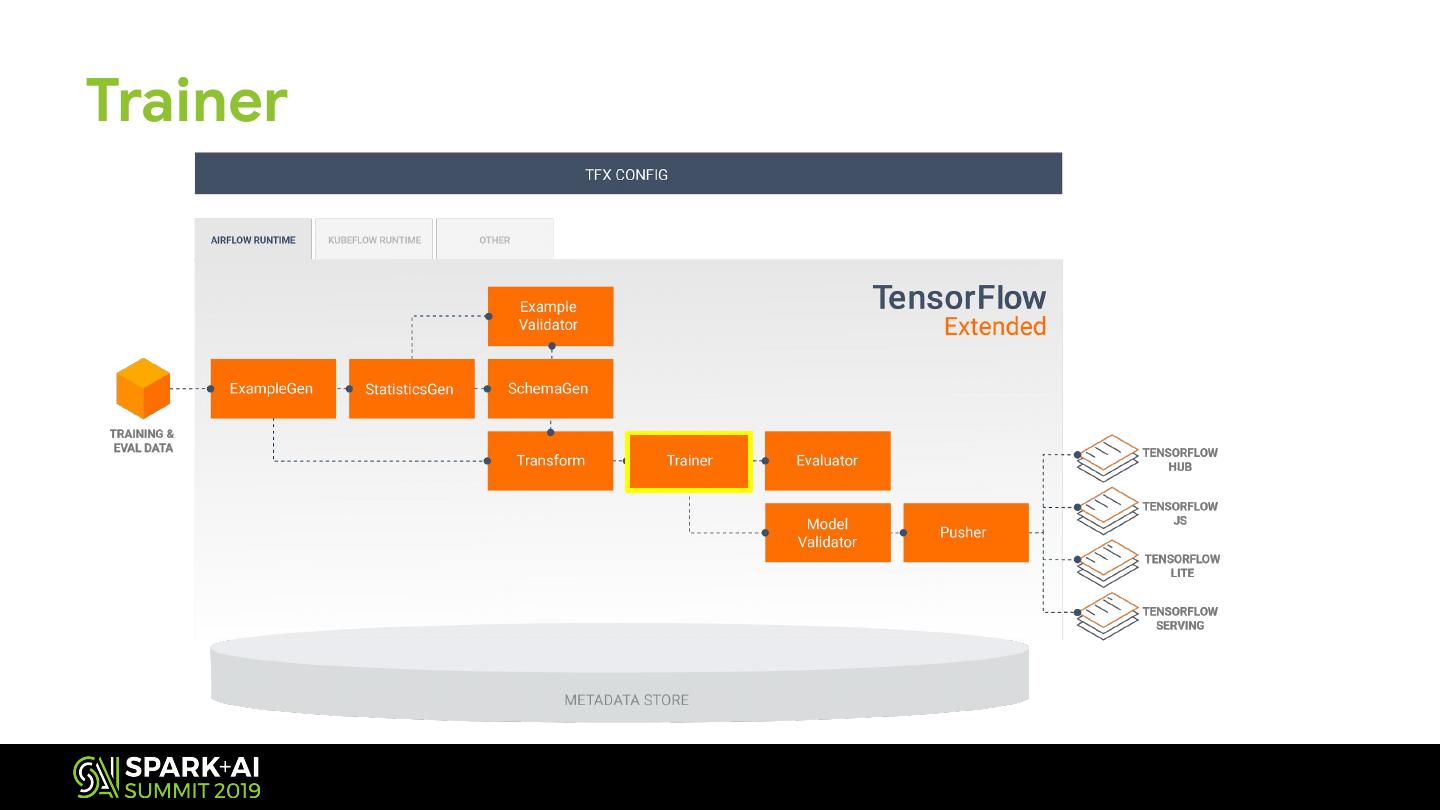

7. Building Components out of Libraries
   - Data Ingestion → ExampleGen
   - TensorFlow Data Validation → StatisticsGen, SchemaGen, Example Validator
   - TensorFlow Transform → Transform
   - Estimator Model → Trainer
   - TensorFlow Model Analysis → Evaluator, Model Validator
   - Honoring Validation Outcomes → Pusher
   - TensorFlow Serving → Model Server

   The slide marks the Beam-based libraries as "Powered by Beam".
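The components above form a dependency graph that an orchestrator must run in a valid order. Below is a sketch using Python's standard `graphlib` module (Python 3.9+). The edges are an assumption, based on our reading of the usual TFX data flow rather than on the slide, and the code does not use TFX's own pipeline API.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: component -> components whose outputs it consumes.
COMPONENT_DEPS = {
    "ExampleGen": set(),
    "StatisticsGen": {"ExampleGen"},
    "SchemaGen": {"StatisticsGen"},
    "ExampleValidator": {"StatisticsGen", "SchemaGen"},
    "Transform": {"ExampleGen", "SchemaGen"},
    "Trainer": {"Transform", "SchemaGen"},
    "Evaluator": {"ExampleGen", "Trainer"},
    "ModelValidator": {"ExampleGen", "Trainer"},
    "Pusher": {"Trainer", "ModelValidator"},  # honors the validation outcome
    "ModelServer": {"Pusher"},
}


def execution_order(deps):
    """Return one valid run order; raises graphlib.CycleError if deps has a cycle."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(COMPONENT_DEPS)
print(order)
```

Several orders are valid (for example, ExampleValidator can run at any point after SchemaGen). An orchestrator can use that freedom to run independent components in parallel.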

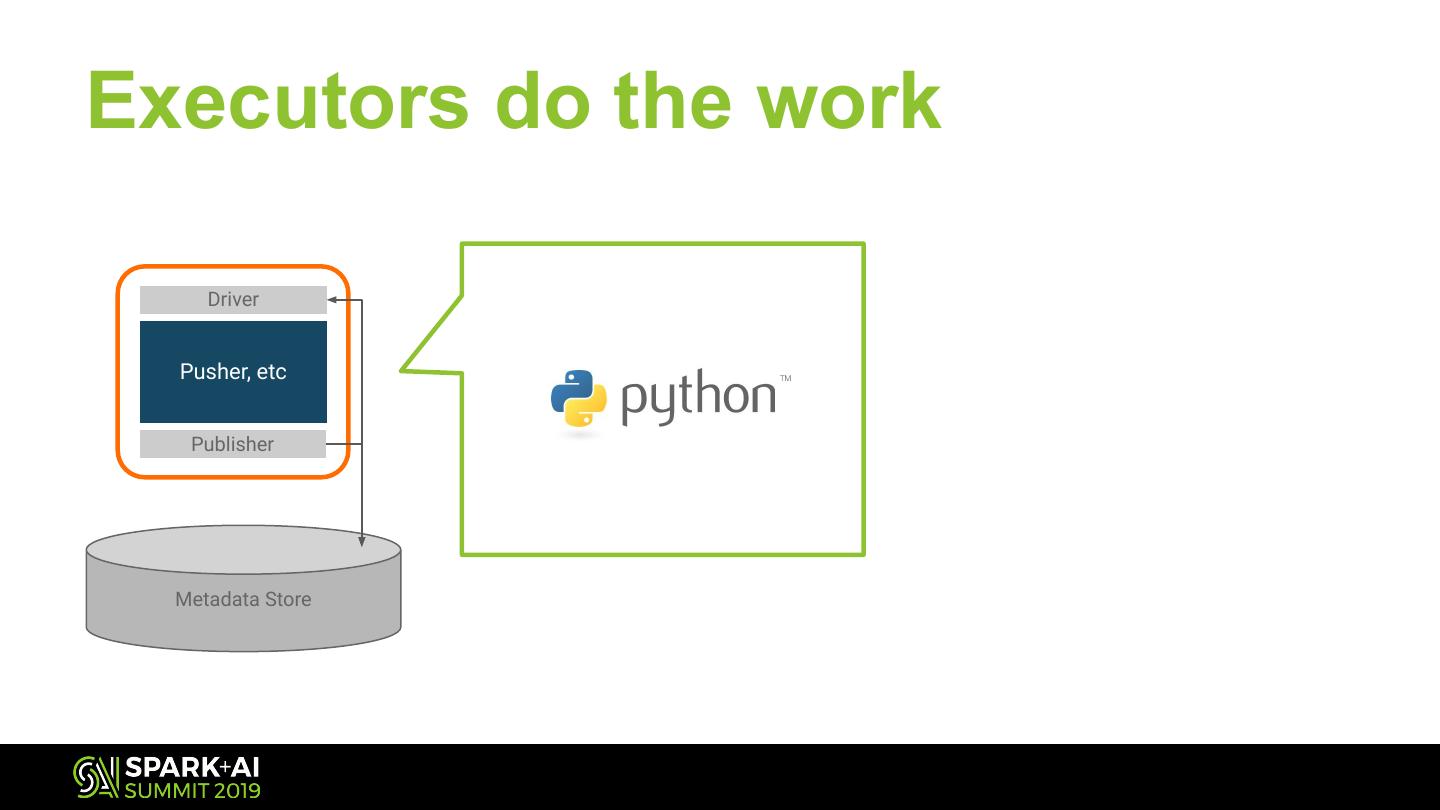

8. What makes a Component: a packaged binary or container (example: Model Validator)

9. What makes a Component: well-defined inputs and outputs (the Model Validator takes the Last Validated Model and the New (Candidate) Model and emits a Validation Outcome)

10. What makes a Component: well-defined configuration (a Config feeds the Model Validator, which compares the Last Validated Model with the New (Candidate) Model and emits a Validation Outcome)
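Slides 8 to 10 describe a component as a unit with declared inputs, outputs, and configuration. The following minimal sketch shows the Model Validator in that shape. The class names, the single-metric comparison, the `max_regression` parameter, and the policy of blessing the first model when no baseline exists are all hypothetical. This is not TFX's implementation.

```python
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Model:                      # input artifact
    uri: str
    metrics: Mapping[str, float]


@dataclass(frozen=True)
class ValidatorConfig:            # well-defined configuration
    metric: str = "accuracy"
    max_regression: float = 0.0   # largest allowed drop vs. the last validated model


@dataclass(frozen=True)
class ValidationOutcome:          # output artifact
    blessed: bool
    reason: str


def validate_model(candidate: Model, last_validated: Optional[Model],
                   config: ValidatorConfig) -> ValidationOutcome:
    """Bless the candidate unless it regresses beyond the configured tolerance."""
    new = candidate.metrics[config.metric]
    if last_validated is None:
        # Assumed policy: with no baseline, the first model is blessed.
        return ValidationOutcome(True, "no baseline to compare against")
    old = last_validated.metrics[config.metric]
    if new >= old - config.max_regression:
        return ValidationOutcome(True, f"{config.metric} {new:.3f} vs. baseline {old:.3f}")
    return ValidationOutcome(False, f"{config.metric} regressed from {old:.3f} to {new:.3f}")


baseline = Model("models/1", {"accuracy": 0.91})
candidate = Model("models/2", {"accuracy": 0.90})
outcome = validate_model(candidate, baseline, ValidatorConfig(max_regression=0.005))
print(outcome)  # ValidationOutcome(blessed=False, reason='accuracy regressed from 0.910 to 0.900')
```

Because the function's inputs, outputs, and configuration are explicit types, an orchestrator can wire it up, record it, and rerun it without knowing anything about its internals.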

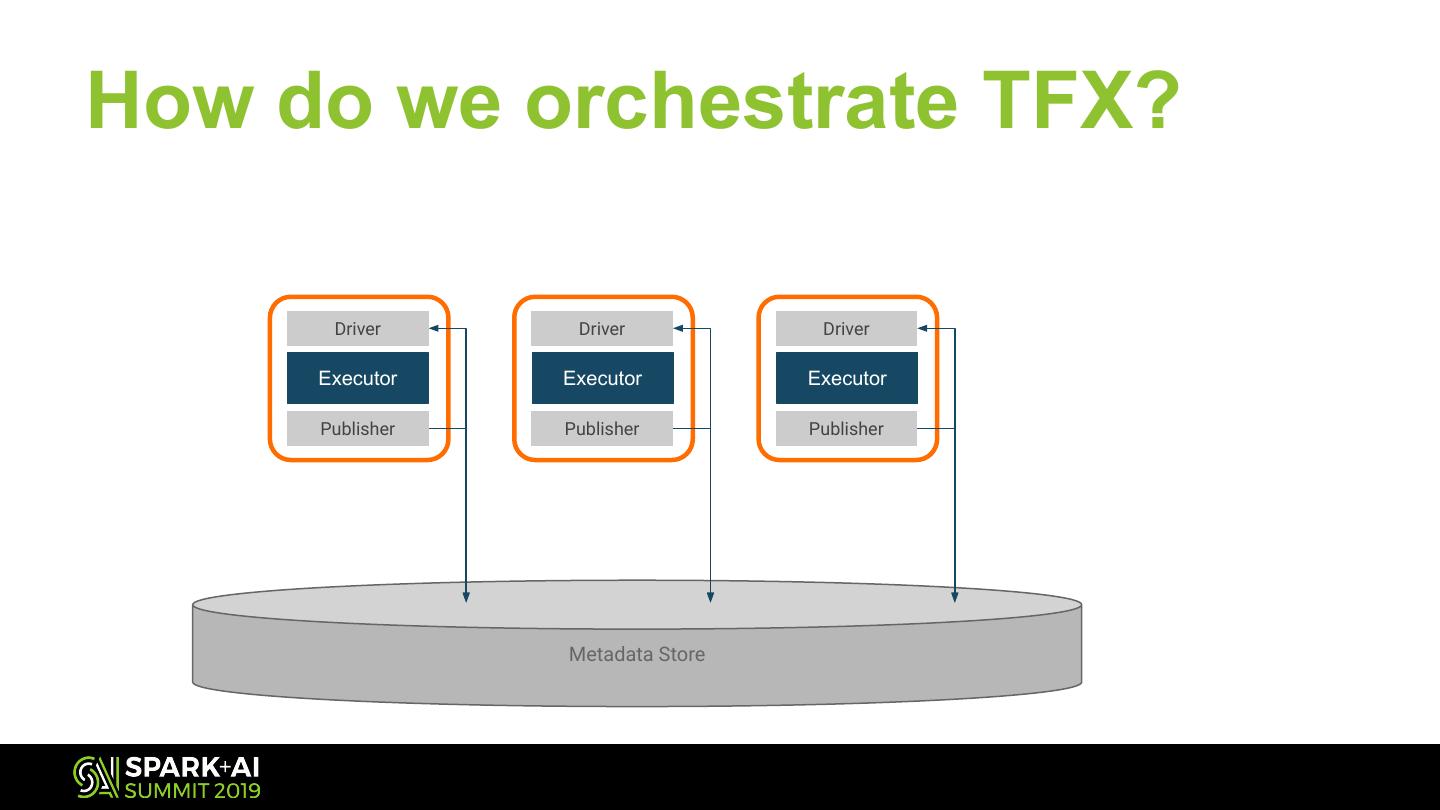

11. What makes a Component: context (a Config and the Metadata Store feed the Model Validator, which compares the Last Validated Model with the New (Candidate) Model and emits a Validation Outcome)

12. What makes a Component: the Trainer emits a New (Candidate) Model; the Model Validator compares it with the Last Validated Model and emits a Validation Outcome; the Pusher honors that outcome and pushes the New Model to the deployment targets (TensorFlow Serving, TensorFlow Lite, TensorFlow JS, TensorFlow Hub). All of these components are connected to the Metadata Store.
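The Pusher's role on this slide is to honor the Validation Outcome, deploying only blessed models. Below is a sketch under that assumption. The names are hypothetical, and in-memory targets stand in for the real deployment destinations. A real push would also export a target-specific model format, which is omitted here.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DeploymentTarget:
    name: str
    deployed: List[str] = field(default_factory=list)  # model URIs pushed so far


def push(model_uri: str, blessed: bool, targets: List[DeploymentTarget]) -> Dict[str, str]:
    """Deploy model_uri to every target, but only if the validator blessed it."""
    status = {}
    for target in targets:
        if blessed:
            target.deployed.append(model_uri)
            status[target.name] = "pushed"
        else:
            status[target.name] = "skipped: not blessed"
    return status


targets = [DeploymentTarget(n) for n in
           ("TensorFlow Serving", "TensorFlow Lite", "TensorFlow JS", "TensorFlow Hub")]
print(push("models/2", blessed=False, targets=targets))  # every target skipped
print(push("models/3", blessed=True, targets=targets))   # every target pushed
```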

13. Metadata Store? That’s new.

14. Metadata Store? That’s new. Task-Aware Pipelines: Transform → Trainer

15. Metadata Store? That’s new.
    - Task-Aware Pipelines: Transform → Trainer
    - Task- and Data-Aware Pipelines: Input Data → Transform → Transformed Data → Trainer → Trained Models → Deployment, with the Training Data and all artifacts kept in Pipeline + Metadata Storage
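The difference between the two pipeline styles on slide 15 can be sketched as follows. A task-aware pipeline reruns Transform and Trainer on every pass. In this hypothetical data-aware version, each component remembers which input artifacts it has already consumed (the role the metadata store plays) and runs only when new data arrives. Training one model per data span is a simplification for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class DataAwareComponent:
    """Runs only for input artifacts it has not processed before (hypothetical)."""
    name: str
    fn: Callable[[str], str]            # input artifact id -> output artifact id
    processed: Set[str] = field(default_factory=set)

    def maybe_run(self, available: List[str]) -> List[str]:
        new_outputs = []
        for artifact in available:
            if artifact not in self.processed:
                new_outputs.append(self.fn(artifact))
                self.processed.add(artifact)
        return new_outputs


transform = DataAwareComponent("Transform", lambda a: f"transformed({a})")
trainer = DataAwareComponent("Trainer", lambda a: f"model({a})")
transformed: List[str] = []  # stands in for the artifact store


def tick(input_spans: List[str]) -> List[str]:
    """One scheduler pass: returns the models trained in this pass."""
    transformed.extend(transform.maybe_run(input_spans))
    return trainer.maybe_run(transformed)


print(tick(["span-1"]))            # ['model(transformed(span-1))']
print(tick(["span-1"]))            # [] : no new data, so nothing reruns
print(tick(["span-1", "span-2"]))  # ['model(transformed(span-2))']
```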

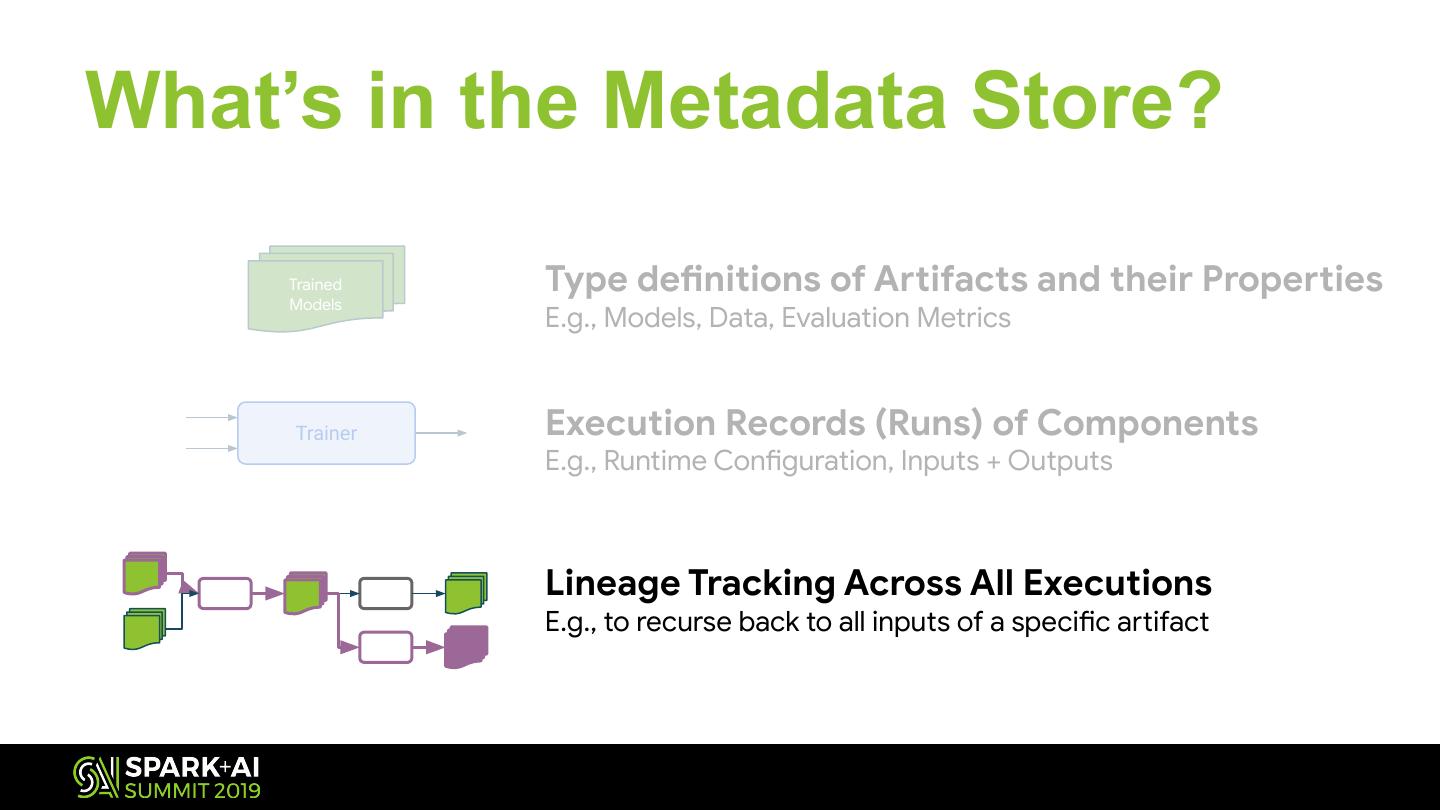

16. What’s in the Metadata Store?
    - Type definitions of Artifacts and their Properties (e.g., Models, Data, Evaluation Metrics)

17. What’s in the Metadata Store?
    - Type definitions of Artifacts and their Properties (e.g., Models, Data, Evaluation Metrics)
    - Execution Records (Runs) of Components (e.g., Runtime Configuration, Inputs + Outputs)

18. What’s in the Metadata Store?
    - Type definitions of Artifacts and their Properties (e.g., Models, Data, Evaluation Metrics)
    - Execution Records (Runs) of Components (e.g., Runtime Configuration, Inputs + Outputs)
    - Lineage Tracking Across All Executions (e.g., to recurse back to all inputs of a specific artifact)
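Slides 16 to 18 list three kinds of records. The following minimal in-memory sketch models those records and the lineage query ("recurse back to all inputs of a specific artifact"). All names are hypothetical. TFX itself stores this information with the ML Metadata (MLMD) library, whose API is different.

```python
from dataclasses import dataclass
from itertools import count
from typing import Dict, List, Set


@dataclass
class Artifact:
    id: int
    type: str                     # e.g. "Examples", "Schema", "Model"
    properties: Dict[str, object]


@dataclass
class Execution:
    id: int
    component: str
    config: Dict[str, object]     # runtime configuration of this run
    inputs: List[int]             # artifact ids consumed
    outputs: List[int]            # artifact ids produced


class MetadataStore:
    """Hypothetical in-memory store of artifacts, executions, and lineage."""

    def __init__(self) -> None:
        self._artifact_ids = count(1)
        self._execution_ids = count(1)
        self.artifacts: Dict[int, Artifact] = {}
        self.executions: List[Execution] = []
        self._producer: Dict[int, Execution] = {}  # artifact id -> producing run

    def put_artifact(self, type_: str, **properties: object) -> int:
        aid = next(self._artifact_ids)
        self.artifacts[aid] = Artifact(aid, type_, properties)
        return aid

    def record_execution(self, component: str, config: Dict[str, object],
                         inputs: List[int], outputs: List[int]) -> Execution:
        run = Execution(next(self._execution_ids), component, dict(config),
                        list(inputs), list(outputs))
        self.executions.append(run)
        for aid in outputs:
            self._producer[aid] = run
        return run

    def upstream(self, artifact_id: int) -> Set[int]:
        """Lineage: recurse back to every artifact the given one depends on."""
        ancestors: Set[int] = set()
        stack = [artifact_id]
        while stack:
            run = self._producer.get(stack.pop())
            if run is None:
                continue  # an original input, e.g. ingested data
            for aid in run.inputs:
                if aid not in ancestors:
                    ancestors.add(aid)
                    stack.append(aid)
        return ancestors


store = MetadataStore()
examples = store.put_artifact("Examples", span=1)
schema = store.put_artifact("Schema")
# Simplified: the schema is inferred directly from the examples.
store.record_execution("SchemaGen", {}, [examples], [schema])
transformed = store.put_artifact("Examples", split="transformed")
store.record_execution("Transform", {}, [examples, schema], [transformed])
model = store.put_artifact("Model", uri="models/1")
store.record_execution("Trainer", {"train_steps": 1000}, [transformed, schema], [model])
print(sorted(store.upstream(model)))  # [1, 2, 3]: examples, schema, transformed
```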

19. List all training runs and attributes

20. Visualize lineage of a specific model (callout: the model artifact that was created)

21. Visualize data a model was trained on

22. Visualize sliced eval metrics associated with a model

23. Launch TensorBoard for a specific model run

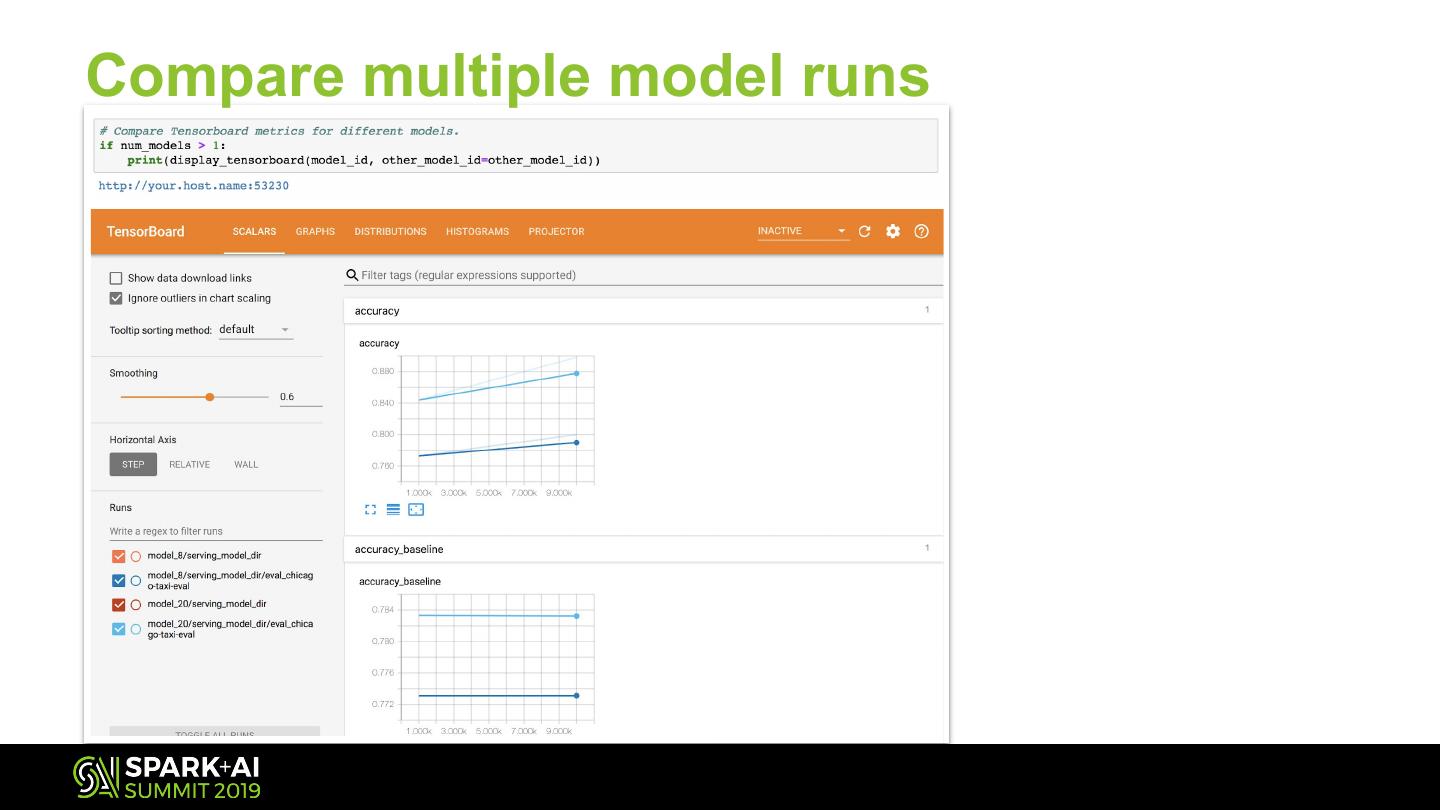

24. Compare multiple model runs
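Comparing runs amounts to querying the recorded executions and their metrics side by side. Below is a sketch with hypothetical record types and made-up numbers. It ranks runs by one metric and keeps only the configuration keys that actually differ between runs, so that the differences stand out.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Run:
    run_id: str
    config: Dict[str, object]    # runtime configuration recorded for the run
    metrics: Dict[str, float]    # evaluation metrics of the resulting model


def compare_runs(runs: List[Run], metric: str) -> List[Tuple[str, float, Dict[str, object]]]:
    """Rank runs by `metric` (best first), showing only config keys that differ."""
    keys = set().union(*(r.config for r in runs))
    varying = sorted(k for k in keys if len({repr(r.config.get(k)) for r in runs}) > 1)
    ranked = sorted(runs, key=lambda r: r.metrics[metric], reverse=True)
    return [(r.run_id, r.metrics[metric], {k: r.config.get(k) for k in varying})
            for r in ranked]


# Made-up runs for illustration only.
runs = [
    Run("run-1", {"optimizer": "adam", "learning_rate": 0.1, "batch_size": 64}, {"auc": 0.81}),
    Run("run-2", {"optimizer": "adam", "learning_rate": 0.01, "batch_size": 64}, {"auc": 0.84}),
    Run("run-3", {"optimizer": "adam", "learning_rate": 0.01, "batch_size": 128}, {"auc": 0.83}),
]
for row in compare_runs(runs, "auc"):
    print(row)
# ('run-2', 0.84, {'batch_size': 64, 'learning_rate': 0.01})
# ('run-3', 0.83, {'batch_size': 128, 'learning_rate': 0.01})
# ('run-1', 0.81, {'batch_size': 64, 'learning_rate': 0.1})
```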

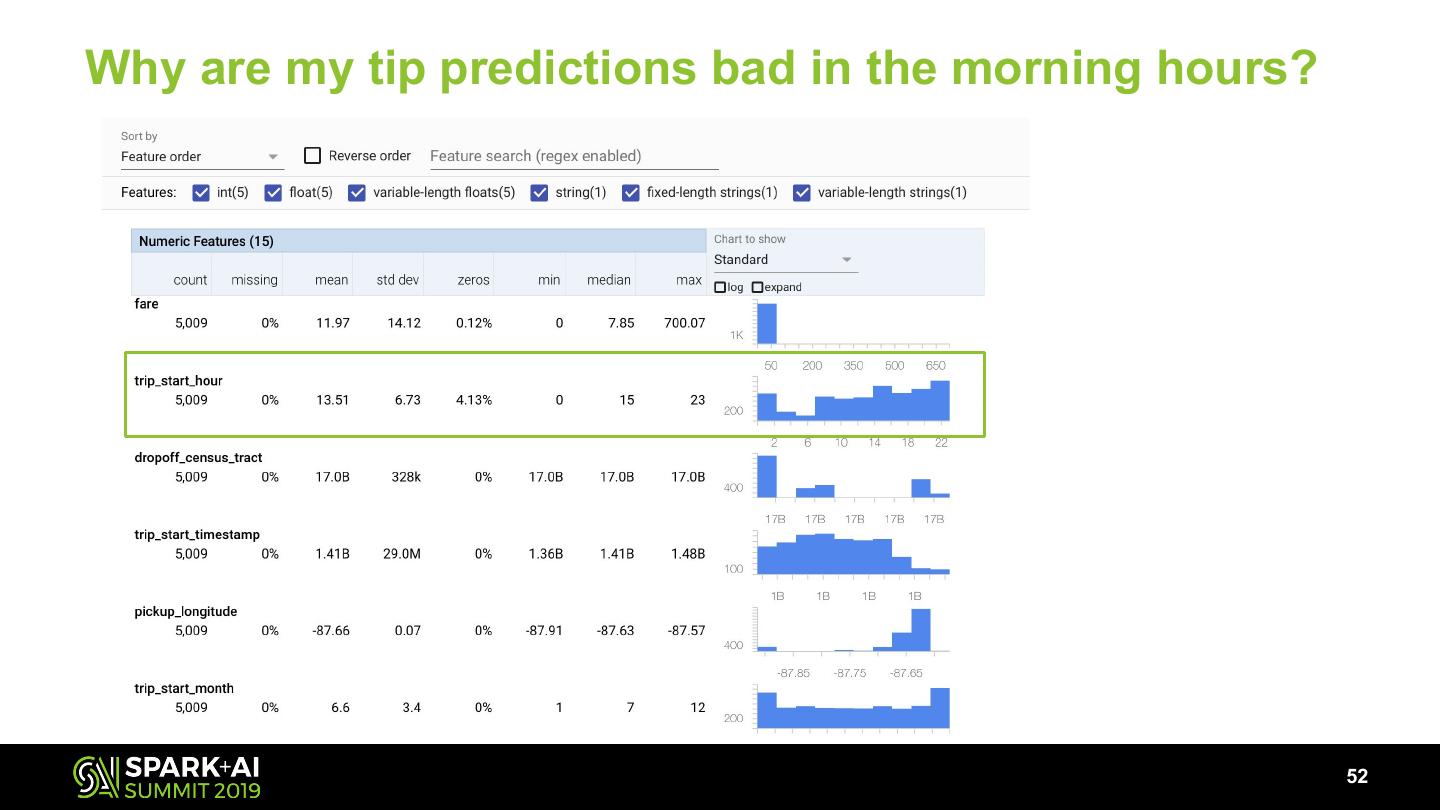

25. Compare data statistics for multiple models

26. Examples of Metadata-Powered Functionality: use-cases enabled by lineage tracking

27. Examples of Metadata-Powered Functionality: use-cases enabled by lineage tracking
    - Compare previous model runs

28. Examples of Metadata-Powered Functionality: use-cases enabled by lineage tracking
    - Compare previous model runs
    - Carry over state from previous models

29. Examples of Metadata-Powered Functionality: use-cases enabled by lineage tracking
    - Compare previous model runs
    - Carry over state from previous models
    - Re-use previously computed outputs
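Re-using previously computed outputs is possible because the store knows which component ran with which configuration on which input artifacts. The following hypothetical sketch implements the idea as a cache keyed on a fingerprint of those three things. It assumes components are deterministic; otherwise a cached output would not be equivalent to a fresh run. The artifact ids and the `num_buckets` parameter are illustrative.

```python
import hashlib
import json
from typing import Callable, Dict, List


class ExecutionCache:
    """Reuse outputs when component, config, and input artifacts are unchanged."""

    def __init__(self) -> None:
        self._outputs: Dict[str, List[str]] = {}
        self.hits = 0

    @staticmethod
    def key(component: str, config: Dict[str, object], inputs: Dict[str, str]) -> str:
        # sort_keys makes the fingerprint independent of dict ordering.
        payload = json.dumps({"component": component, "config": config, "inputs": inputs},
                             sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def run(self, component: str, config: Dict[str, object], inputs: Dict[str, str],
            fn: Callable[[Dict[str, str]], List[str]]) -> List[str]:
        k = self.key(component, config, inputs)
        if k in self._outputs:
            self.hits += 1
            return self._outputs[k]
        outputs = fn(inputs)
        self._outputs[k] = outputs
        return outputs


calls: List[Dict[str, str]] = []


def fake_transform(inputs: Dict[str, str]) -> List[str]:
    calls.append(inputs)
    return [f"transformed-{inputs['examples']}"]


cache = ExecutionCache()
inputs = {"examples": "examples-1", "schema": "schema-1"}
cache.run("Transform", {"num_buckets": 10}, inputs, fake_transform)  # computed
cache.run("Transform", {"num_buckets": 10}, inputs, fake_transform)  # reused
cache.run("Transform", {"num_buckets": 20}, inputs, fake_transform)  # config changed: computed
print(len(calls), cache.hits)  # 2 1
```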