- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Tackling Network Bottlenecks with Hardware Accelerations: Cloud vs. On-Premise

展开查看详情

1 .Tackling Network Bottlenecks with Hardware Accelerations: Cloud vs. On-Premise Yuval Degani, LinkedIn Dr. Jithin Jose, Microsoft Azure #UnifiedAnalytics #SparkAISummit

2 .Intro • Infinite loop of removing performance road blocks • With faster storage devices (DRAM, NVMe, SSD) and stronger than ever processing power (CPU, GPU, ASIC), a traditional network just can’t keep up with I/O flow • Upgrading to higher wire speeds will rarely do the trick • This is where co-designed hardware acceleration can be used to truly utilize the power of a compute cluster #UnifiedAnalytics #SparkAISummit 2

3 .Previous talks Spark Summit Europe 2017 First open-source stand-alone RDMA accelerated shuffle plugin for Spark (SparkRDMA) Spark+AI Summit North America 2018 First preview of SparkRDMA on Azure HPC nodes, demonstrating x2.6 job speed-up on cloud VMs #UnifiedAnalytics #SparkAISummit 3

4 .Network Bottlenecks in the Wild #UnifiedAnalytics #SparkAISummit 4

5 .Network Bottlenecks in the Wild • Not always caused by lack of bandwidth • Network I/O imposes overhead in many system components: – Memory management – Memory copy – Garbage Collection – Serialization/Compression/Encryption • Overhead=CPU cycles, cycles that are not available for the actual job at hand • Hardware acceleration can reduce overhead and allow better utilization of compute and network resources #UnifiedAnalytics #SparkAISummit 5

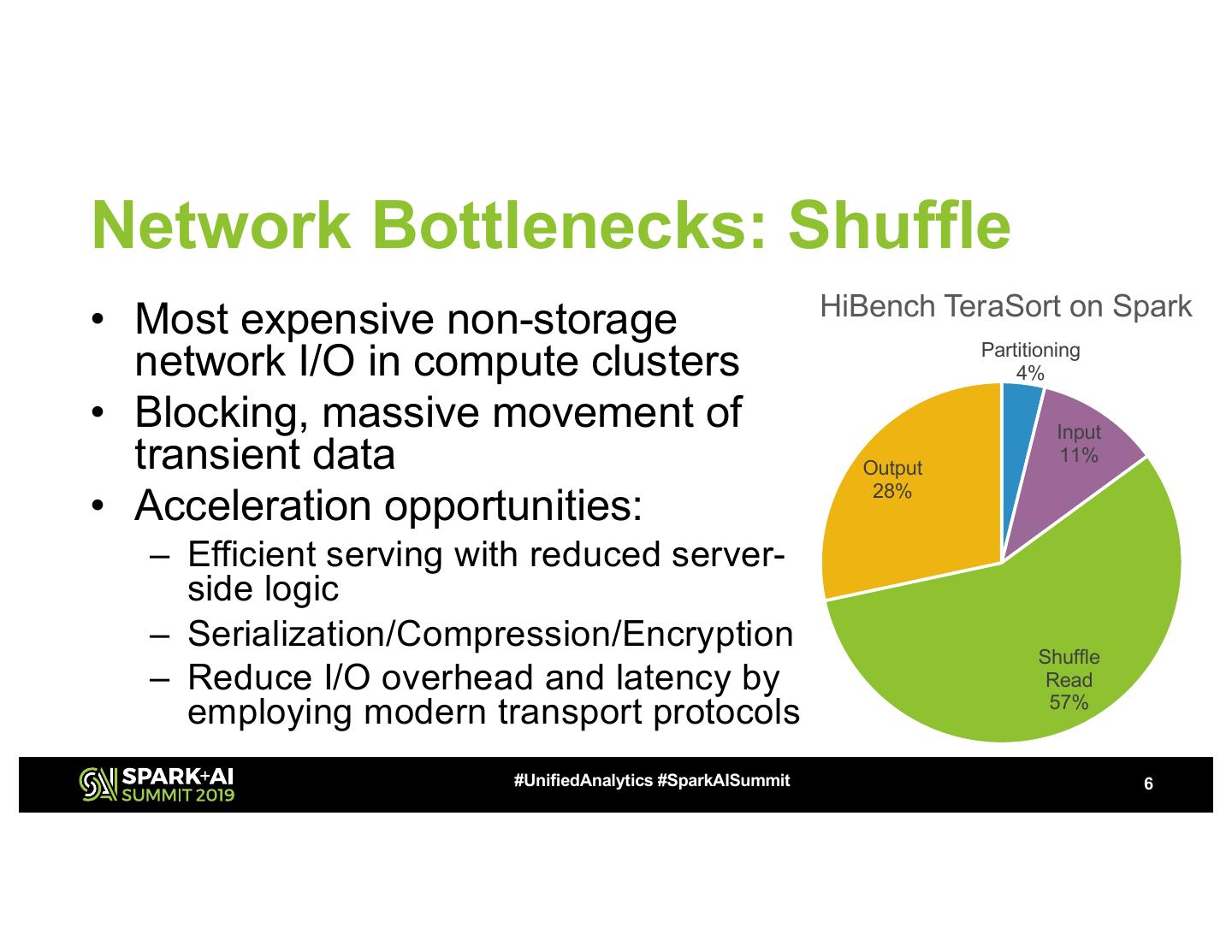

6 .Network Bottlenecks: Shuffle HiBench TeraSort on Spark • Most expensive non-storage network I/O in compute clusters Partitioning 4% • Blocking, massive movement of Input transient data Output 11% 28% • Acceleration opportunities: – Efficient serving with reduced server- side logic – Serialization/Compression/Encryption Shuffle – Reduce I/O overhead and latency by Read 57% employing modern transport protocols #UnifiedAnalytics #SparkAISummit 6

7 . Network Bottlenecks: Distributed Training ResNet 269* • Model updates create massive network traffic V100 • Model update frequency rises as GPUs get faster M60 • Acceleration opportunities: K80 – Inter-GPU RDMA communication – Lower latency network transport Total Time GPU Active Time – Collectives offloads * “Parameter Hub: High Performance Parameter Servers for Efficient Distributed Deep Neural Network Training” by Luo et al. #UnifiedAnalytics #SparkAISummit 7

8 .Network Bottlenecks: Storage • Massive data movement • Premium devices (DRAM, Flash) provide storage access speeds that were never seen before • Acceleration opportunities: – Higher bandwidth – Reduced transport overhead – OS/CPU bypass – direct storage access from network devices #UnifiedAnalytics #SparkAISummit 8

9 .Major Hardware Acceleration Technologies #UnifiedAnalytics #SparkAISummit 9

10 . Effect of network speed Speeds 800 on workload runtime* • 1, 10, 25, 40, 100, 200Gbps 700 600 • Faster network doesn’t 500 necessarily mean a faster 400 runtime 300 200 • Many workloads consist of 100 relatively short bursts rather 0 than sustainable throughput: Flink TeraSort Flink PowerGraph Timely PageRank PageRank PageRank higher bandwidth may not have 1GbE 10GbE 40GbE any effect * “On The [Ir]relevance of Network Performance for Data Processing” by Trivedi et al. #UnifiedAnalytics #SparkAISummit 10

11 . TOP500 Supercomputers InfiniBand Interconnect Performance Share* Proprietary • De-facto standard in the HPC world 1% • FDR: 56Gbps, EDR: 100Gbps, HDR: 200Gbps Omnipath 10% Ethernet • Sub-microsecond latency 23% • Native support for RDMA • HW accelerated transport layer Custom • True SDN: standard fabric components are 28% developed as open-source and are cross- platform InfiniBand • Native support for Switch collectives offload 38% * www.top500.org #UnifiedAnalytics #SparkAISummit 11

12 . Java app RDMA buffer Socket RDMA • Remote Direct Memory Access – Read/write from/to remote memory locations • Zero-copy Context switch • Direct hardware interface – bypasses the kernel and TCP/IP in IO path OS • Flow control and reliability is offloaded in Sockets hardware TCP/IP • Supported on almost all mid-range/high- end network adapters: both InfiniBand Driver and Ethernet Network Adapter #UnifiedAnalytics #SparkAISummit 12

13 .NVIDIA GPUDirect CPU • Direct DMA over PCIe • RDMA devices can write/read Non-GPUDirect directly to/from GPU memory over the network NIC GPU • No CPU overhead • Zero-copy GPUDirect #UnifiedAnalytics #SparkAISummit 13

14 .“Smart NIC” – FPGA/ASIC Offloads • FPGA – tailor-made accelerations • ASIC – less flexibility, better performance • Common use cases: – I/O: Serialization, compression, encryption offloads – Data: Aggregation, sorting, group-by, reduce • Deployment options: – Pipeline – Look-aside – Bump-on-the-wire #UnifiedAnalytics #SparkAISummit 14

15 .“Smart Switch” • In-network processing – Data reduction during movement – Wire-speed • Generic: MPI Switch Collectives Offloads (e.g. Mellanox SHArP) • Per-workload: Programmable switches (e.g. Barefoot Tofino) – Example: Network-Accelerated Query Processing #UnifiedAnalytics #SparkAISummit 15

16 .NVMeOF CPU • Network protocol for NVM express disks (PCIe) Traditional • Uses RDMA to provide direct NIC<->Disk access NIC • Completely bypasses the host • Minimal latency differences between local and remote access NVMeOF #UnifiedAnalytics #SparkAISummit 16

17 .Azure Network Acceleration Offering #UnifiedAnalytics #SparkAISummit 17

18 . Offer ‘Bare Metal’ Experience – Azure HPC Solution Eliminate Jitter Full Network Experience Transparent Exposure of Hardware Host holdback is a start, but must Enable customers to use Mellanox or Core N in guest VM should = completely isolate guest from host OFED drivers Core N in silicon Minroot & CPU Groups; separated Supports all MPI types and versions 1:1 between physical pNUMA host and guest VM sandboxes Leverage hardware offload to topology and vNUMA topology Mellanox InfiniBand ASIC #UnifiedAnalytics #SparkAISummit 18

19 .Latest Azure HPC Offerings – HB/HC HB Series (AMD EPYC) HC Series (Intel Xeon Platinum) Workloads Targets Bandwidth Intensive Compute Intensive Core Count 60 44 System Memory 240 GB 352 GB Network 100 Gbps EDR InfiniBand, 40 Gbps Ethernet Storage Support Standard / Premium Azure Storage, and 700GB Local SSD OS Support for RDMA CentOS/RHEL, Ubuntu, SLES 12, Windows OpenMPI, HPC-X, MVAPICH2, MPICH, MPI Support Intel MPI, PlatformMPI, Microsoft MPI Hardware Collectives Enabled Azure CLI, ARM template, Azure CycleCloud, Access Model Azure Batch, Partner Platform #UnifiedAnalytics #SparkAISummit 19

20 .Other Azure HPC Highlights • SR-IOV going broad – All HPC SKUs will support SR-IOV – Driver/SKU Performance Optimizations • GPUs – Latest NDv2 Series • 8 Nvidia Tesla v100 NVLINK interconnected GPUs • Intel Skylake, 672 GB Memory • Excellent platform for HPC and AI workloads • Azure FPGA – Based on Project Brainwave – Deploy model to Azure FPGA, Reconfigure for different models – Supports ResNet 50, ResNet 152, DenseNet-121, and VGG-16 #UnifiedAnalytics #SparkAISummit 20

21 .Accelerate Your Framework #UnifiedAnalytics #SparkAISummit 21

22 . MPI Microbenchmarks MPI Latency MPI Bandwidth 90 14000 12 GB/s 80 12000 Bandwidth (MB/s) Ethernet (40 Gbps) 70 10000 60 IPoIB (100 Gbps) Time (us) Ethernet (40 Gbps) 8000 50 RDMA (100 Gbps) IPoIB (100 Gbps) 6000 40 RDMA (100 Gbps) 4000 30 20 2000 10 1.77 us 0 16K 32K 64K 128 256 512 128K 256K 512K 1 2 4 8 1K 2K 4K 8K 1M 2M 4M 16 32 64 0 0 1 2 4 8 16 32 64 128 256 512 1K 2K Message Size (bytes) Message Size (bytes) • Experiments on HC cluster • MPI Latency (4 B) – 1.77us • OSU Benchmarks 5.6.1 • Getting even better later this year • OpenMPI (4.0.0) + UCX (1.5.0) • MPI Bandwidth (4 MB) – 12.06 GB/s • MPI ranks pinned nearer to HCA #UnifiedAnalytics #SparkAISummit 22

23 .SparkRDMA • RDMA-powered ShuffleManager PageRank 19GB plugin for Apache Spark • Similarly spec 8 node cluster: TeraSort 320GB – On-prem: 100GbE RoCE – Cloud: Azure ”h16mr” instances with 56Gbps InfiniBand 0 1000 2000 On-prem non-RDMA 100GbE On-prem RDMA 100GbE • https://github.com/Mellanox/SparkRDMA Azure IPoIB 56Gbps Azure RDMA 56Gbps #UnifiedAnalytics #SparkAISummit 23

24 .SparkRDMA on Azure • Azure HC cluster: PageRank - 19GB – 100 Gbps InfiniBand – 16 Spark Workers/HDFS DataNodes RDMA (100 Gbps) – Separate NameNode IPoIB (100 Gbps) TeraSort - 320 GB – Data folder hosted on SSD – HiBench Benchmarks (gigantic) • Spark 2.4.0, Hadoop 2.7.7, SparkRDMA 3.1 0 100 200 300 400 500 600 Execution Time (s) #UnifiedAnalytics #SparkAISummit 24

25 . HDFS-RDMA on Azure TestDFSIO (Write) Execution Time 400 • OSU HDFS RDMA 0.9.1 Ethernet (40 Gbps) 350 • Based on Hadoop 3.0.0 IPoIB (100 Gbps) RDMA (100 Gbps) • http://hibd.cse.ohio-state.edu/#hadoop3 300 • HDFS on HC cluster 250 Time (sec) • 1 NameNode 200 • 16 DataNodes 150 • Data folder hosted on SSD 100 • Packet Size: 128KB 50 • Containers per Node: 32 0 512GB 640GB 768GB 896GB 1TB Size (bytes) #UnifiedAnalytics #SparkAISummit 25

26 . Memcached-RDMA on Azure Memcached SET Memcached GET 180 180 Ethernet (40 Gbps) IPoIB (100 Gbps) 160 160 RDMA (100 Gbps) 140 Latency (us) 140 120 Latency (us) 120 100 100 80 80 60 60 40 40 20 20 0 0 1 2 4 8 16 32 64 128 256 512 1K 2K 4K 1 2 4 8 16 32 64 128 256 512 1K 2K 4K Message Size (bytes) Message Size (bytes) • OSU Memcached RDMA 0.9.6 • Experiment run on HC Nodes • Based on Memcached 1.5.3 and • Memcached GET (8 B) Latency – 5.5us libmemcached 1.0.18 • Memcached SET (8 B) Latency – 6.45us • http://hibd.cse.ohio-state.edu/#memcached #UnifiedAnalytics #SparkAISummit 26

27 . Kafka-RDMA on Azure Kafka Producer Latency Kafka Producer Bandwidth 400 70 IPoIB (100 Gbps) RDMA (100 Gbps) IPoIB (100 Gbps) RDMA (100 Gbps) 350 60 Bandwidth (MB/s) 300 50 Time (s) 250 40 200 30 150 100 20 50 10 0 0 Producer Producer • HC cluster • OSU Kafka RDMA 0.9.1 • Broker with 100 GB Ramdisk • Based on Apache Kafka 1.0.0 • Record Size – 100 bytes • http://hibd.cse.ohio-state.edu/#kafka • Number of Records – 500000 #UnifiedAnalytics #SparkAISummit 27

28 .Horovod on Azure 1600 100.00 100.00 98.86 98.37 96.78 96.94 • Tensorflow 1.13 1400 95.58 94.93 95.00 – ResNet-50 Training 90.00 1200 – Partial ImageNet Data IPoIB (100 Gbps) 85.00 RDMA (100 Gbps) – Batch Size = 64 per worker Images/second 1000 % Efficiency IPoIB Efficiency 80.00 – 2 workers per node 800 RDMA Efficiency 75.00 – Total batches 100 70.00 – CPU only version 600 65.00 • HC Cluster 400 60.00 – OpenMPI 4.0 + UCX 1.5 200 – Singularity container 55.00 • ~97% Scaling efficiency 0 2 4 8 16 50.00 # nodes #UnifiedAnalytics #SparkAISummit 28

29 .Wrapping up #UnifiedAnalytics #SparkAISummit 29