Horizon: Deep Reinforcement Learning at Scale

1 .Horizon: Deep Reinforcement Learning at Scale
Jason Gauci, Applied RL, Facebook AI

2 .About Me
- Recommender systems @ Google/Apple/Facebook
- TLM on Horizon, a framework for large-scale RL: https://github.com/facebookresearch/Horizon
- Eternal Terminal, a replacement for ssh/mosh: https://mistertea.github.io/EternalTerminal/
- Programming Throwdown, a tech podcast: https://itunes.apple.com/us/podcast/programming-throwdown/id427166321?mt=2

3 .Recommender Systems in ~~20~~ 10 Minutes

4 .Recommender Systems
1. Retrieval: Matrix Factorization, Two Tower DNN
2. Event Prediction: DNN, GBDT, Convnets, Seq2seq, etc.
3. Ranking: Black Box Optimization, Bandits, RL
4. Data Science: A/B Tests, Query Engines, User Studies

Source: https://www.mailmunch.com/blog/sales-funnel/

5 .Recommender Systems are Control Systems
1. Retrieval: Control
2. Event Prediction: Signal Processing
3. Ranking: Control
4. Data Science: Causal Analysis

6 .Recommender Systems are Control Systems
- Control the user experience
  - Explore/exploit
  - Freshness
  - Slate optimization
- Control future models' data
  - Break feedback loops
  - De-bias the model

7 .Classification Versus Decision Making

| Classification | Decision Making |
|---|---|
| "What" questions (What will happen?) | "How" questions (How can we do better?) |
| Trained on ground truth (Hotdog / Not Hotdog) | Trained from another policy (usually a worse one) |
| Evaluated via accuracy (F1, AUC, NE) | Counterfactual evaluation (IPS, DR, MAGIC) |
| Assume data is perfect | Assume data is flawed (explore/exploit) |
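
Counterfactual evaluation estimates how a new policy would have performed using only logs collected under an old one. The simplest of the estimators named above is inverse propensity scoring (IPS). A minimal sketch, assuming every logged decision records the probability (propensity) with which the logging policy chose its action; the log records and both target policies are hypothetical.

```python
from typing import Callable, List, Tuple

# One logged decision: (context, action, reward, propensity of the logging policy).
LogRecord = Tuple[str, int, float, float]


def ips_estimate(logs: List[LogRecord], target_prob: Callable[[str, int], float]) -> float:
    """Inverse propensity scoring (IPS) estimate of a target policy's average reward.

    Each logged reward is reweighted by target_prob / logging_prob, i.e. by how much
    more (or less) likely the target policy is to take the logged action.
    """
    total = 0.0
    for context, action, reward, logging_prob in logs:
        total += target_prob(context, action) / logging_prob * reward
    return total / len(logs)


# Hypothetical logs from a uniform-random policy over two actions.
logs: List[LogRecord] = [
    ("user_a", 0, 1.0, 0.5),
    ("user_a", 1, 0.0, 0.5),
    ("user_b", 0, 0.0, 0.5),
    ("user_b", 1, 1.0, 0.5),
]


def personalized(context: str, action: int) -> float:
    """Hypothetical deterministic target policy: action 0 for user_a, 1 for user_b."""
    best = {"user_a": 0, "user_b": 1}[context]
    return 1.0 if action == best else 0.0


def uniform(context: str, action: int) -> float:
    """The logging policy itself: each of the two actions with probability 0.5."""
    return 0.5


print(ips_estimate(logs, uniform))       # 0.5, the logged average reward
print(ips_estimate(logs, personalized))  # 1.0, estimated without deploying it
```

The estimate is only meaningful when the logging policy gave nonzero probability to every action the target policy might take, which is one reason deployed policies keep exploring.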

8 .Framework For Recommendation
- Action features: $X_a \in \mathbb{R}^d$
- Context features: $X_c \in \mathbb{R}^d$
- Session features: $X_s \in \mathbb{R}^d$
- Event predictors: $E(X_a, X_c, X_s) \to \mathbb{R}$

Greedy Slate Recommendation:
- Value function: $V(X_a, X_c, X_s, E_1, E_2, \ldots, E_n) \to \mathbb{R}$
- Control function: $\pi(V_0, V_1, \ldots, V_n) \to \{0, \ldots, n\}$
- Transition function: $T(X_a, X_c, X_s, E_1, E_2, \ldots, E_n, \pi) \to X_s'$
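
A minimal sketch of the greedy slate loop described by these functions. Everything concrete is an assumption for illustration: two hypothetical event predictors (`p_click`, `p_like`), a value function that is a hand-weighted sum of their outputs, a control function that takes the argmax, and a transition function that only counts how many items the session has shown so far. None of it is Horizon's API.

```python
from typing import Callable, List

Vector = List[float]
EventPredictor = Callable[[Vector, Vector, Vector], float]


def p_click(x_a: Vector, x_c: Vector, x_s: Vector) -> float:
    """Hypothetical event predictor: affinity between item and context."""
    return sum(a * c for a, c in zip(x_a, x_c))


def p_like(x_a: Vector, x_c: Vector, x_s: Vector) -> float:
    """Hypothetical event predictor that decays as the session gets longer."""
    return x_a[1] / (1.0 + x_s[0])


PREDICTORS: List[EventPredictor] = [p_click, p_like]
WEIGHTS: List[float] = [1.0, 1.5]  # hypothetical hand-tuned weights


def value(x_a: Vector, x_c: Vector, x_s: Vector) -> float:
    """V(X_a, X_c, X_s, E_1, ..., E_n): a weighted sum of event predictions."""
    return sum(w * e(x_a, x_c, x_s) for w, e in zip(WEIGHTS, PREDICTORS))


def control(values: List[float]) -> int:
    """pi(V_0, ..., V_n) -> index of the highest-value candidate."""
    return max(range(len(values)), key=lambda i: values[i])


def transition(x_s: Vector, x_a: Vector) -> Vector:
    """T(...) -> X_s': this toy version only counts how many items were shown."""
    return [x_s[0] + 1.0]


def greedy_slate(candidates: List[Vector], x_c: Vector, slate_size: int) -> List[int]:
    x_s: Vector = [0.0]  # session features at the start of the slate
    remaining = list(range(len(candidates)))
    slate: List[int] = []
    while remaining and len(slate) < slate_size:
        values = [value(candidates[i], x_c, x_s) for i in remaining]
        chosen = remaining.pop(control(values))
        slate.append(chosen)
        x_s = transition(x_s, candidates[chosen])
    return slate


candidates = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
print(greedy_slate(candidates, x_c=[1.0, 0.0], slate_size=3))  # [1, 0, 2]
```

Candidate 2 outranks candidate 0 for the first slot but not for the second, because the session features changed in between. Ranking every item once with the initial session features would give `[1, 2, 0]` instead, which is the difference the transition function captures.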

9 .Discovering The Value Function
- What should we optimize for?
  - Ads: Clicks? Conversions? Impressions?
  - Feed/Search: Clicks? Time spent? Favorable user surveys?
  - Answer: All of the above.
- How to combine?
- How to assign credit?
- Differentiable?

10 .Tuning The Value Function

11 .Searching Through Value Functions

12 .Learning Value Functions
- Search is limited
  - Curse of dimensionality
- Value models are sequential
  - Optimize for long-term value
- Value models should be personalized
  - The relationship between event predictors and utility is contextual
- Optimizing metrics is counterfactual
  - "If I chose action a', would metric m increase?"

13 .Learning Value Functions
- Reinforcement learning is designed around agents that make decisions and improve their actions over time

Hypothesis: we can use RL to learn better value functions.

14 .Intro to RL

15 .Reinforcement Learning (RL)
- Agent: the recommendation system
- Reward: user behavior
- State: context (including historical context)
- Action: content

Source: https://becominghuman.ai/the-very-basics-of-reinforcement-learning-154f28a79071

16 .RL Terms
- State ($S$)
  - Every piece of data needed to decide a single action
  - Example: user/post/session features
- Action ($A$)
  - A decision to be made by the system
  - Example: which post to show
- Reward ($R(S, A)$)
  - A measure of utility computed from the current state and action

17 .RL Terms
- Transition ($T(S, A) \to S'$)
  - A function that maps a state-action pair to the next state
  - Bandit: $T(S, A) = T(S)$, i.e. the action does not affect the next state
- Policy ($\pi(S, A_0, A_1, \ldots, A_n) \to \{0, \ldots, n\}$)
  - A function that, given a state, chooses an action
- Episode
  - A sequence of state-action pairs for a single run (e.g. a complete game of Go)

18 .Value Optimization
- Value ($Q(S, A)$)
  - The cumulative discounted reward given a state and action
  - $Q(s_t, a_t) = r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \gamma^3 r_{t+3} + \cdots$, where $\gamma$ is the discount factor
- A good policy becomes: $\pi(s) = \arg\max_a Q(s, a)$
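
Both formulas on this slide fit in a few lines. A minimal sketch, assuming a finite list of observed rewards and a small dictionary of hypothetical Q-values for one state; note that the policy returns the argmax (the action), not the max (the value).

```python
from typing import Dict, List


def discounted_return(rewards: List[float], gamma: float) -> float:
    """r_t + gamma * r_{t+1} + gamma^2 * r_{t+2} + ... for the rewards from step t on."""
    return sum((gamma ** k) * r for k, r in enumerate(rewards))


def greedy_policy(q_values: Dict[str, float]) -> str:
    """pi(s) = argmax_a Q(s, a): returns the best action, not its value."""
    return max(q_values, key=lambda a: q_values[a])


print(discounted_return([1.0, 0.0, 2.0], gamma=0.5))   # 1.5
print(greedy_policy({"post_a": 0.3, "post_b": 0.7}))  # post_b
```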

19 .Value Regression
- $Q(s_t, a_t) = r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \gamma^3 r_{t+3} + \cdots$
- Collect historical data
- Solve with linear regression
- Problem: $r_{t+1}$ also depends on $a_{t+1}$
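
Value regression can be sketched as two steps: turn each logged episode into discounted return targets, then regress those targets on state-action features. A minimal sketch, assuming a single scalar feature per step and ordinary least squares with one weight and a bias; the episodes are made up.

```python
from typing import List, Tuple


def returns_to_go(rewards: List[float], gamma: float) -> List[float]:
    """Discounted return from every step of one logged episode, computed backwards:
    G_t = r_t + gamma * G_{t+1}."""
    out = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        out[t] = running
    return out


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y ~ w * x + b with a single feature."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    return w, mean_y - w * mean_x


# Hypothetical logged episodes: one (state-action feature, reward) pair per step.
episodes = [[(2.0, 1.0), (1.0, 1.0)], [(0.0, 0.5)]]
xs: List[float] = []
ys: List[float] = []
for episode in episodes:
    xs += [feature for feature, _ in episode]
    ys += returns_to_go([reward for _, reward in episode], gamma=0.5)

print(ys)                # [1.5, 1.0, 0.5]
print(fit_line(xs, ys))  # (0.5, 0.5)
```

Each target still bakes in the rewards produced by whatever actions the logging policy happened to take after step $t$, which is exactly the problem the last bullet raises.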

20 .Credit Assignment Problem
- Current state/action
  - X's turn to move
- What is the value?
  - Pretty high

21 .Credit Assignment Problem
- Next state/action
- Now what is the value?
  - Low
- Future actions change the value of earlier states and actions

22 .State Action Reward State Action (SARSA)
- Value regression
  - $Q(s_t, a_t) = r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \cdots$
- SARSA
  - $Q(s_t, a_t) = r_t + \gamma Q(s_{t+1}, a_{t+1})$
  - Idea borrowed from dynamic programming
  - Bootstrapping from the estimated future Q is more robust (lower variance) than summing observed rewards
  - The value is still highly influenced by the current policy
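
A minimal tabular sketch of the SARSA update. The slide gives the fixed-point equation; this sketch assumes the usual learning rate `alpha`, which moves $Q(s_t, a_t)$ part of the way toward the target $r_t + \gamma Q(s_{t+1}, a_{t+1})$ on each logged transition. State and action names are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, Optional, Tuple

QTable = DefaultDict[Tuple[str, str], float]


def sarsa_update(q: QTable, s: str, a: str, r: float, s_next: str,
                 a_next: Optional[str], gamma: float = 0.9, alpha: float = 0.5) -> None:
    """Move Q(s, a) toward r + gamma * Q(s', a'), where a' is the next action the
    logging policy actually took. a_next=None marks the end of the episode."""
    future = 0.0 if a_next is None else q[(s_next, a_next)]
    target = r + gamma * future
    q[(s, a)] += alpha * (target - q[(s, a)])


q: QTable = defaultdict(float)
q[("s1", "show_b")] = 2.0
# Logged transition: in s0 we showed post a, got reward 1, then showed post b in s1.
sarsa_update(q, "s0", "show_a", 1.0, "s1", "show_b")
print(q[("s0", "show_a")])  # about 1.4 = 0.5 * (1.0 + 0.9 * 2.0)
```

Because the target uses the action that was actually logged next, the learned values describe the logging policy, which is the policy dependence noted in the last bullet.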

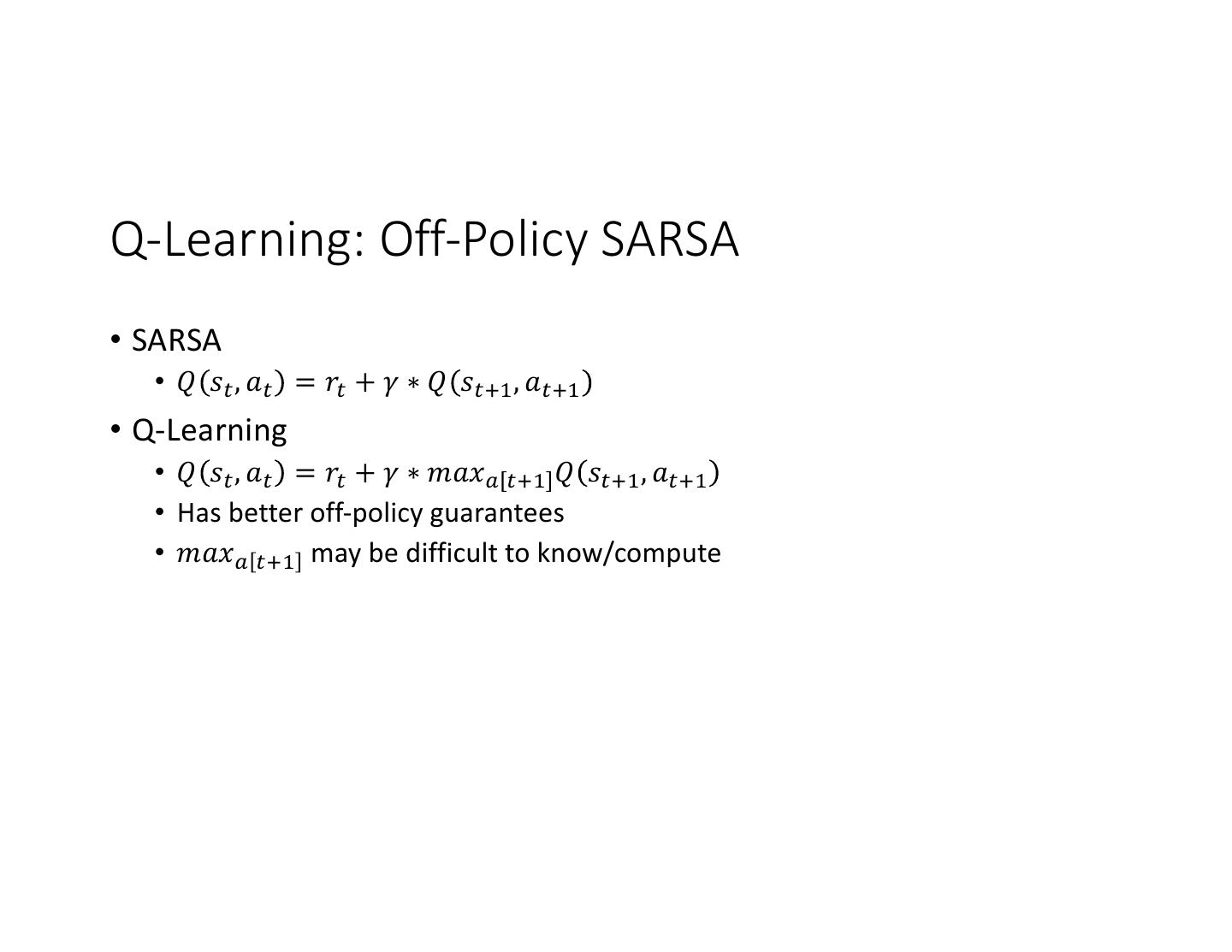

23 .Q-Learning: Off-Policy SARSA
- SARSA
  - $Q(s_t, a_t) = r_t + \gamma Q(s_{t+1}, a_{t+1})$
- Q-Learning
  - $Q(s_t, a_t) = r_t + \gamma \max_{a_{t+1}} Q(s_{t+1}, a_{t+1})$
  - Has better off-policy guarantees
  - $\max_{a_{t+1}}$ may be difficult to know or compute, e.g. with large or continuous action spaces
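
A minimal tabular sketch of the Q-learning update, assuming a small discrete action set so that $\max_{a_{t+1}}$ can be computed by enumeration, and a learning rate `alpha` that the slide does not specify. State and action names are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, Iterable, Tuple

QTable = DefaultDict[Tuple[str, str], float]


def q_learning_update(q: QTable, s: str, a: str, r: float, s_next: str,
                      next_actions: Iterable[str], gamma: float = 0.9,
                      alpha: float = 0.5, done: bool = False) -> None:
    """Move Q(s, a) toward r + gamma * max_a' Q(s', a'). The max is taken by
    enumerating next_actions, which only works for small discrete action sets."""
    future = 0.0 if done else max(q[(s_next, a2)] for a2 in next_actions)
    target = r + gamma * future
    q[(s, a)] += alpha * (target - q[(s, a)])


q: QTable = defaultdict(float)
q[("s1", "show_a")] = 2.0
q[("s1", "show_b")] = 3.0
# Whatever the logging policy did next, the target uses the best next action.
q_learning_update(q, "s0", "show_a", 1.0, "s1", ["show_a", "show_b"])
print(q[("s0", "show_a")])  # about 1.85 = 0.5 * (1.0 + 0.9 * 3.0)
```

Ignoring the logged next action is what makes the update off-policy; it is also why the enumeration in `max(...)` becomes the bottleneck once the action space is large or continuous.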

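24 .Policy Gradients
- Q-Learning: $Q(s_t, a_t) = r_t + \gamma \max_{a_{t+1}} [Q(s_{t+1}, a_{t+1})]$
- What if we can't compute $\max_{a_{t+1}} [\ldots]$?
- Policy Gradient
  - Approximate $\max_{a_{t+1}} [Q(s_{t+1}, a_{t+1})]$ with a learned actor $A(s_{t+1})$ that proposes the next action
  - $Q(s_t, a_t) = r_t + \gamma Q(s_{t+1}, A(s_{t+1}))$
  - Learn $A(s_{t+1})$ assuming $Q$ is perfect:
    - Deep Deterministic Policy Gradient (DDPG): with $a_{t+1} = A(s_{t+1})$, minimize $L(A(s_{t+1})) = -Q(s_{t+1}, a_{t+1})$
    - Soft Actor Critic (SAC): with $a_{t+1}$ sampled from $A(s_{t+1})$, minimize $L(A(s_{t+1})) = \log P(A(s_{t+1}) = a_{t+1}) - Q(s_{t+1}, a_{t+1})$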
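
A toy, dependency-free sketch of the DDPG-style actor step ("learn $A$ assuming $Q$ is perfect"). It assumes a fixed, hypothetical critic $Q(s, a) = -(a - 2s)^2$, whose best action is $a = 2s$, and a one-parameter actor $A(s) = w \cdot s$. The gradient is written out by hand where a real implementation would use neural networks and autograd; SAC's log-probability term is not shown.

```python
from typing import List


def critic(s: float, a: float) -> float:
    """Hypothetical, already-trained Q(s, a); for each s it peaks at a = 2 * s."""
    return -(a - 2.0 * s) ** 2


def actor(w: float, s: float) -> float:
    """Deterministic policy A(s) = w * s with a single parameter w."""
    return w * s


def train_actor(states: List[float], w: float = 0.0, lr: float = 0.05,
                steps: int = 200) -> float:
    """Gradient descent on the mean DDPG actor loss L(w) = -Q(s, A(s)).

    With this critic, -Q(s, w * s) = (w * s - 2 * s) ** 2, so
    dL/dw = 2 * (w * s - 2 * s) * s, averaged over the batch of states.
    """
    for _ in range(steps):
        grad = sum(2.0 * (actor(w, s) - 2.0 * s) * s for s in states) / len(states)
        w -= lr * grad
    return w


w = train_actor([1.0, -0.5, 2.0])
print(round(w, 6))  # 2.0: the actor now proposes the action the critic rates highest
```

The critic never changes here; the actor simply climbs it. In full DDPG the critic is trained at the same time from the bootstrapped target on this slide, so the actor chases a moving estimate.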

25 .Applying RL at Scale

27 .Prior State of Applied RL
- Small-scale
  - Notable exceptions: ELF OpenGo, OpenAI Five, AlphaGo
- Simulation-driven
  - Simulators are often deterministic and stationary

Can we train personalized, large-scale RL models and bring them to billions of people?

28 .Applying RL at Scale
- Batch feature normalization & training
  - Because the loss target is dynamic, normalization is critical
- Distributed training
  - Synchronous SGD (PASGD should be fine)
- Fixed (but stochastic) policies
  - ε-greedy, softmax, Thompson sampling
  - Fixed policies allow for massive deployment
  - No need for checkpointing or online parameter servers
- Counterfactual policy evaluation
  - Detect anomalies and gain insights offline
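
A minimal sketch of two of the fixed-but-stochastic policies listed above, ε-greedy and softmax, assuming the per-candidate scores come from an already-trained model that stays frozen while deployed. Each sampled action is returned with its probability, because logging that propensity is what later makes counterfactual policy evaluation possible. Thompson sampling is omitted, and the function names and scores are hypothetical.

```python
import math
import random
from typing import List, Tuple


def epsilon_greedy_probs(scores: List[float], epsilon: float) -> List[float]:
    """With probability epsilon pick uniformly at random, otherwise the top-scoring action."""
    n = len(scores)
    best = max(range(n), key=lambda i: scores[i])
    probs = [epsilon / n] * n
    probs[best] += 1.0 - epsilon
    return probs


def softmax_probs(scores: List[float], temperature: float = 1.0) -> List[float]:
    """Pick actions in proportion to exp(score / temperature)."""
    m = max(scores)  # subtracting the max avoids overflow and leaves the result unchanged
    exps = [math.exp((s - m) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(probs: List[float], rng: random.Random) -> Tuple[int, float]:
    """Draw an action and return it with its propensity, which should be logged."""
    action = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    return action, probs[action]


rng = random.Random(0)
scores = [0.1, 0.9, 0.3]  # hypothetical model scores for three candidates
print(epsilon_greedy_probs(scores, epsilon=0.3))  # about [0.1, 0.8, 0.1]
print(sample_action(softmax_probs(scores), rng))  # (action, its probability)
```

Since the randomness lives entirely in the serving-time sampling step, the model itself can be shipped as a fixed artifact, with no online updates to coordinate across machines.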

29 .Horizon: Applied RL Platform
- Robust
- Massively parallel
- Open source
- Built on high-performance platforms
  - Spark
  - PyTorch
  - ONNX
- OpenAI Gym & Gridworld integration tests