- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Data Agility—A Journey to Advanced Analytics and Machine Learning at Scale

展开查看详情

1 .Data Agility - A Journey to Advanced Analytics and Machine Learning at Scale Spark Summit, April 2019 Hari Subramanian, Engineering Manager

2 .About me Engineering Manager (also Engineer, Product Manager, Entrepreneur) Previously: Amazon Web Services, VMware, Startups Until Recently: Led Big Data Analytics & Data Science Workbench at Uber Currently: Customer Obsession Engineering at Uber

3 .Agenda 00 The Uber Scale 01 Uber’s Data Platform 02 Data Science Workbench 03 DS & ML - Uber Toolset 04 Customer Obsession - a case study 05 Lessons learned 06 Wrap-up

4 .Uber’s mission is to ignite opportunity by setting the world in motion. Livelihood Impacts Global for Millions Millions of footprint of drivers Riders NYC

5 .Data informs every decision in the company

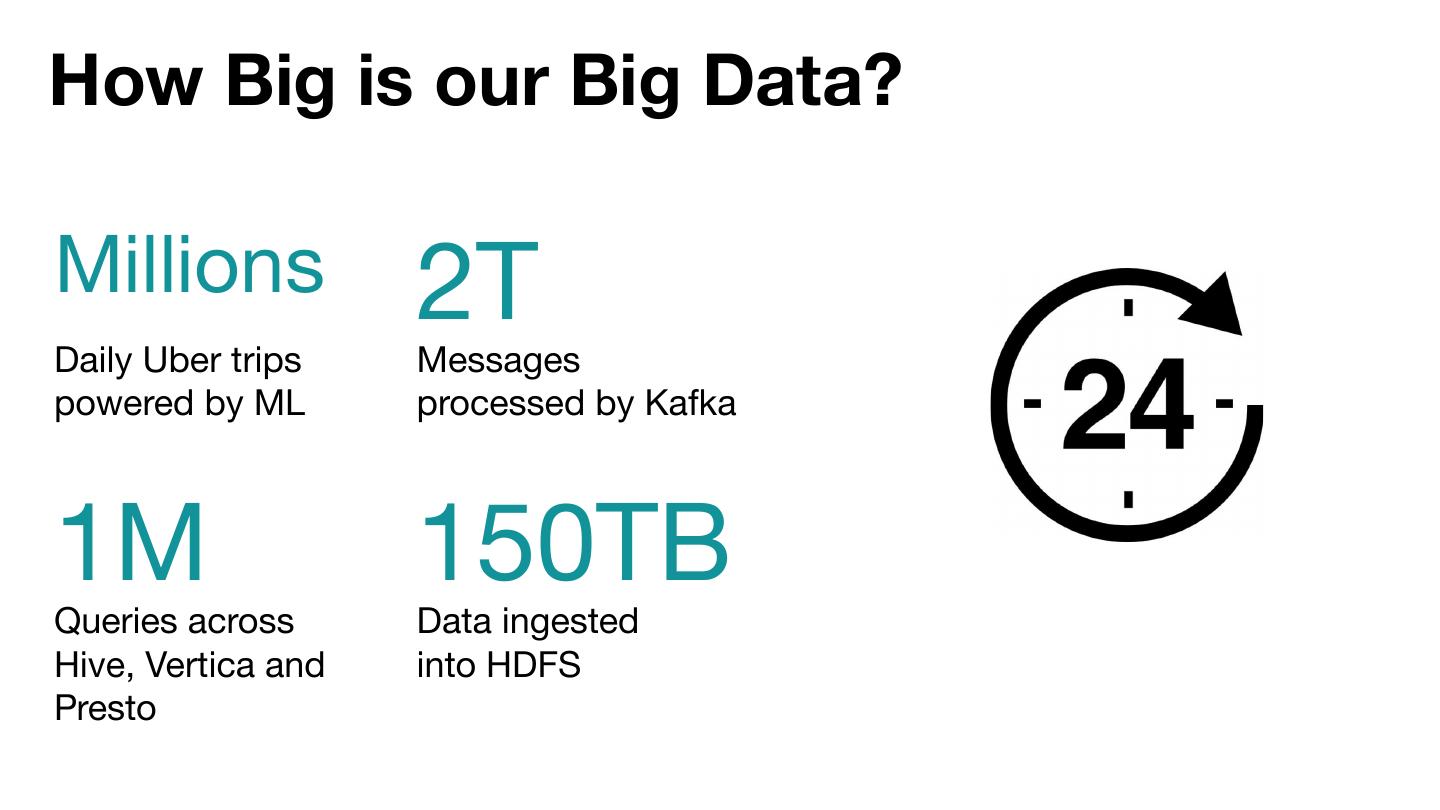

6 .How Big is our Big Data? Millions 2T Daily Uber trips Messages powered by ML processed by Kafka 1M Queries across 150TB Data ingested Hive, Vertica and into HDFS Presto

7 .Overview of Uber’s Data Platform Dashboarding Alerting CONSUMING BUSINESS INSIGHTS Monitoring DATA SCIENCE MINING BUSINESS EXPERIMENTATION Data Exploration INSIGHTS MACHINE Query Engines LEARNING Knowledge Bases MODELED TABLES CUSTOM DATA SETS ETL Frameworks Data Integrity Storage RAW DATA Infrastructure DATA SOURCES

8 .What is DSW?

9 .Rapid growth growing pains Getting started Accessing data Collaboration was was hard & services was difficult complicated

10 .Many stakeholders, many needs Varied Different users infrastructure needs Single window Cost and compliance access requirements

11 .Data Science Workbench Democratize data science by enabling access to reliable infrastructure and advanced tooling in a community-driven learning environment

12 .Our world today Getting Started Fully hosted 1-click Jupyter Notebook & RStudio IDE Data Access All internal data sources / Multi-DC / Secure / Log/Audit capabilities Shared Standards Pre-baked Environments Collaboration Sharing options on notebooks; 1-click Shiny dashboard publication Various session sizes, types (CPU, GPU)/access to compute Scalability engines Available Features Documentation Support

13 .Key features Interactive Advanced Business process workspaces dashboards automations ● Data exploration ● Visualizing rich insights ● Automating complex ● Data preparation derived from complex processes ● Ad-hoc analyses analytics ● Small model training ● Model exploration ● Displaying business metrics ● Scheduling data pulls

14 .

15 .What problem does DSW solve?

16 .

17 .Advanced data science & complex analytics Data Scientists Ops Analysts Contractors

18 .Business process automation Ops Managers S&P Analysts Contractors

19 .Exploratory ML, Uber Eats Support model-training, & Restaurant NLP model for support recommendations tickets production Risk Safety Driver account check Trip classification Referral risk scoring Operations Lifetime value (LTV) Engineers Data Scientists ML Researchers model

20 .Unique fit in a mature Data Platform Experimentation BI Tools DS Platform ML Platform Data processing Platform Summary | Query | Dash | Piper | Metron | WatchTower | XP | Mentana DSW Michelangelo Map | Chart Builder Marmaray | Kirby | Databook Query Gateway Services All Active and HiveSync Efficiency and Capacity Hive as a Service Observability Spark as a Service Security Presto Peloton YARN Mesos HDFS (Hadoop Data Lake)

21 .DS & ML - Uber Toolkit Ingestion & Dispersal (Hoover, Marmaray - uses Spark, Hive) Data preparation (Databook, QB/QR - uses Spark, Presto, Hive) Data Analytics (BI tools, DSW - numPy, scikit-learn, pandas) ML and DL (Spark MLLib, xgboost, TF, keras, pytorch, Horovod) Model serving (PyML, Michelangelo, Peloton) Workflows, Exploration (AirFlow/Piper, Data Science Workbench)

22 .Case study COTA - Customer Obsession Ticketing Assistant A Deep Learning Model developed and deployed using Uber’s Data Platform

23 .What is the challenge? As Uber grows, so does our volume of support tickets Thousands of Millions of tickets different types of from riders / drivers / issues users may eaters per week encounter This slide was adapted from a talk by Huaixiu Zheng, Uber

24 .Bliss - Uber’s Customer Support Platform Write response using User Response Select Action a Reply Template Lookup info & Select Flow Node Policies Contact Select Write Message CSR Ticket Contact Type This slide was adapted from a talk by Huaixiu Zheng, Uber

25 .The Problem Resolving a ticket is not easy (or cheap) 1000+ types in a hierarchy depth: 3~6 10+ actions (adjust fare, add appeasement, …) 1000+ reply templates This slide was adapted from a talk by Huaixiu Zheng, Uber

26 .COTA: The Solution A collaborative effort from Uber Risk, CO Eng, and Data Platform teams ML Layer CO Eng routing engagement User Info TYPE ROUTING Trip Info COTA v2.1 REPLY (wordCNN) Ticket Text ACTION Ticket Metadata Recommend + Fraud DS Default embedment + Risk Features English Auto-resolution Spanish Portuguese

27 .Typical Machine Learning Workflow 1. define 4. measure Launch and Iterate 2. prototype 3. productionize

28 .Exploration and prototyping 1. define SQL, Spark 4. measure GET DATA Validation Computational cost EVALUATE MODELS 2. prototype DATA PREPARATION Interpretability Data cleansing and pre-processing, 3. productionize R / Python TRAIN MODELS CPU or GPU

29 .Vision: Build in DSW, run in prod platforms Easy ML experimentation, quick production ● Iterate on model quickly with tweaks to parameters and configuration ● Flexible development - custom code + leverage existing modules for data prep, ETLs, train, predict, and visualize ● Jupyter notebook running on a GPU or CPU session ● Pre-packaged Spark, tensorflow, keras, pandas, numpy, scipy etc. ● Interactive Spark exec through Uber’s Spark as a Service - Drogon ● API integrations to production ML platform - Michelangelo ● API integrations to data workflow management - Piper ● Develop and test locally, deploy in the cluster when ready