- 快召唤伙伴们来围观吧

- 微博 QQ QQ空间 贴吧

- 文档嵌入链接

- 复制

- 微信扫一扫分享

- 已成功复制到剪贴板

Cloud Storage Spring Cleaning: A Treasure Hunt

展开查看详情

1 .WIFI SSID:SparkAISummit | Password: UnifiedAnalytics

2 .Cloud Storage Spring Cleaning: A Treasure Hunt Zach Musgrave and Steven Moy Yelp Inc #UnifiedAnalytics #SparkAISummit

3 .Agenda today • What is our treasure? • Where can we find some clues? • How do we uncover the treasure map? • What can we find with it? !3

4 .Motivation • Insight into cloud storage patterns • Justification to delete or transition data • Classification of data via real-world accesses !4

5 .Motivation • In the beginning, there was infinite storage. • Yelp said, "Yes, please!" • Now, we don't have a problem, per se... #UnifiedAnalytics #SparkAISummit !5

6 .Motivation • Now, we don't have a problem, per se... • We have dozens of PBs worth of problems :/ #UnifiedAnalytics #SparkAISummit !6

7 .What is our treasure? Spark+AI Summit ztm & smoy !7

8 .What is our treasure? We keep many things in S3, and we want to lifecycle data into the right cost tier. How do we make the right decision? For example, should we archive after 365 days? 400 days? !8

9 .Motivation • Cloud object storage: It's everywhere! • S3 continues to eat the world's storage workloads • No more filing tickets to add hard drives • As long as you pay the monthly bill, it’s easy... • Put(key, object) • Get(key) #UnifiedAnalytics #SparkAISummit !9

10 .Eventually, leadership says... Why are we spending so much money? Do we really need tens of petabytes stored in the cloud? !10

11 .Eventually, leadership says... Why are we spending so much money? Do we really need tens of petabytes for immediate access at all times? !11

12 .Eventually, leadership says... Why are we spending so much money? Do we really access all data equally all the time? !12

13 .And then we say... If only we knew something - anything! - about engineers' access patterns... !13

14 .We need a map... !14

15 .What is the treasure? • Large storage savings, measured in $$$ • You may end up in your org's quarterly slide deck • Security implications • Principle of least privilege made easy easier • Access trails • Make decisions without rolling a 12-sided dice !15

16 .Where are the clues? • We use AWS S3 as our example • Your storage provider likely has similar features • S3 Server Side Logging • Apache-like access logs for every operation !16

17 .Where are the clues? • Use S3? • Get access logs. • Where do those go? • ... Inside S3 ... • If you enable them. !17

18 .Where are the clues? analyze the S3 access logs... !18

19 .How do we do that? Let's look at the contents of these logs. !19

20 .S3 Access Logs • Non-compressed • Row-based format • Files can be very small • Range from KB to MB • Dozens - to many thousands - of rows • Require a complex regex to parse !20

21 .S3 Access Logs: Example 79a59df900b949e55d96a1e698fbacedfd6e09d98eacf8f8d521 8e7cd47ef2be awsexamplebucket [06/Feb/2019:00:00:38 +0000] 192.0.2.3 79a59df900b949e55d96a1e698fbacedfd6e09d98eacf8f8d521 8e7cd47ef2be 3E57427F3EXAMPLE REST.GET.VERSIONING - "GET /awsexamplebucket?versioning HTTP/1.1" 200 - 113 - 7 - "-" "S3Console/0.4" - s9lzHYrFp76ZVxRcpX9+5cjAnEH2ROuNkd2BHfIa6UkFVdtjf5mK R3/eTPFvsiP/XV/VLi31234= SigV2 ECDHE-RSA-AES128-GCM- SHA256 AuthHeader awsexamplebucket.s3.amazonaws.com !21

22 .Persistence and structure • Conversion is a bit expensive • No need to repeat conversion over 10+ years of data • May as well store a queryable result • Determinism: Easy to replicate prior results. !22

23 .Before we go further All queries, code fragments, snippets, etc. we show you Are included in our Github repository! github.com/Yelp/ aws_logs_to_parquet_converter So, no need to photograph each slide :) !23

24 .Where are the clues? !24

25 . SQL Analytics (AWS Athena) Columnar Files (Parquet) Batch Conversion (Spark + Jupyter) S3 Prefix Scan (boto3 + RDD -> dataframe) !25

26 .Clue #1 - SQL declarative analysis • Our developers and analysts speak SQL • We can execute SQL against flat files • Lots of different ways to do this • AWS Athena is easiest for us !26

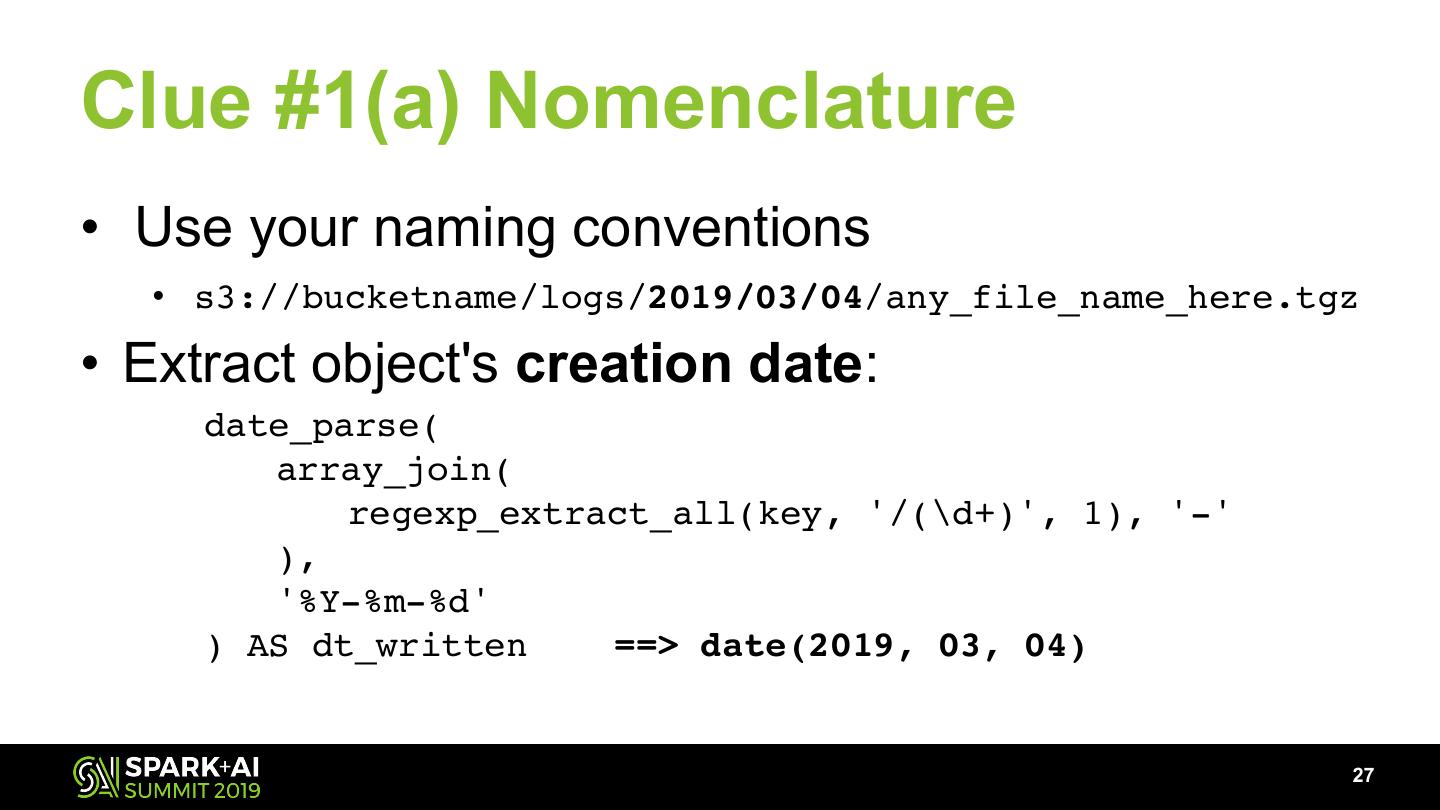

27 .Clue #1(a) Nomenclature • Use your naming conventions • s3://bucketname/logs/2019/03/04/any_file_name_here.tgz • Extract object's creation date: date_parse( array_join( regexp_extract_all(key, '/(\d+)', 1), '-' ), '%Y-%m-%d' ) AS dt_written ==> date(2019, 03, 04) !27

28 .Clue #1(a) Nomenclature !28

29 .Clue #1(b) Days Apart • We don't care when accesses occur • We do care how far in the past the access is date_diff( 'day', date_parse(array_join(regexp_extract_all(...... , date_trunc('day', request_time) ) AS days_apart, !29