Best Practices for Hyperparameter Tuning with MLflow

1 .Best Practices for Hyperparameter Tuning with MLflow
Joseph Bradley
April 24, 2019
Spark + AI Summit

2 .About me
Joseph Bradley
• Software engineer at Databricks
• Apache Spark committer & PMC member

3 .About Databricks
TEAM: Started Spark project (now Apache Spark) at UC Berkeley in 2009
MISSION: Making Big Data Simple
PRODUCT: Unified Analytics Platform
Try for free today: databricks.com

4 .Hyperparameters
• Express high-level concepts, such as statistical assumptions
• Are fixed before training or are hard to learn from data
• Affect objective, test time performance, computational cost
E.g.:
• Linear Regression: regularization, # iterations of optimization
• Neural Network: learning rate, # hidden layers

5 .Tuning hyperparameters
E.g.: Fitting a polynomial
Common goals:
• More flexible modeling process
• Reduced generalization error
• Faster training
• Plug & play ML

6 .Challenges in tuning
• Curse of dimensionality
• Non-convex optimization
• Computational cost
• Unintuitive hyperparameters

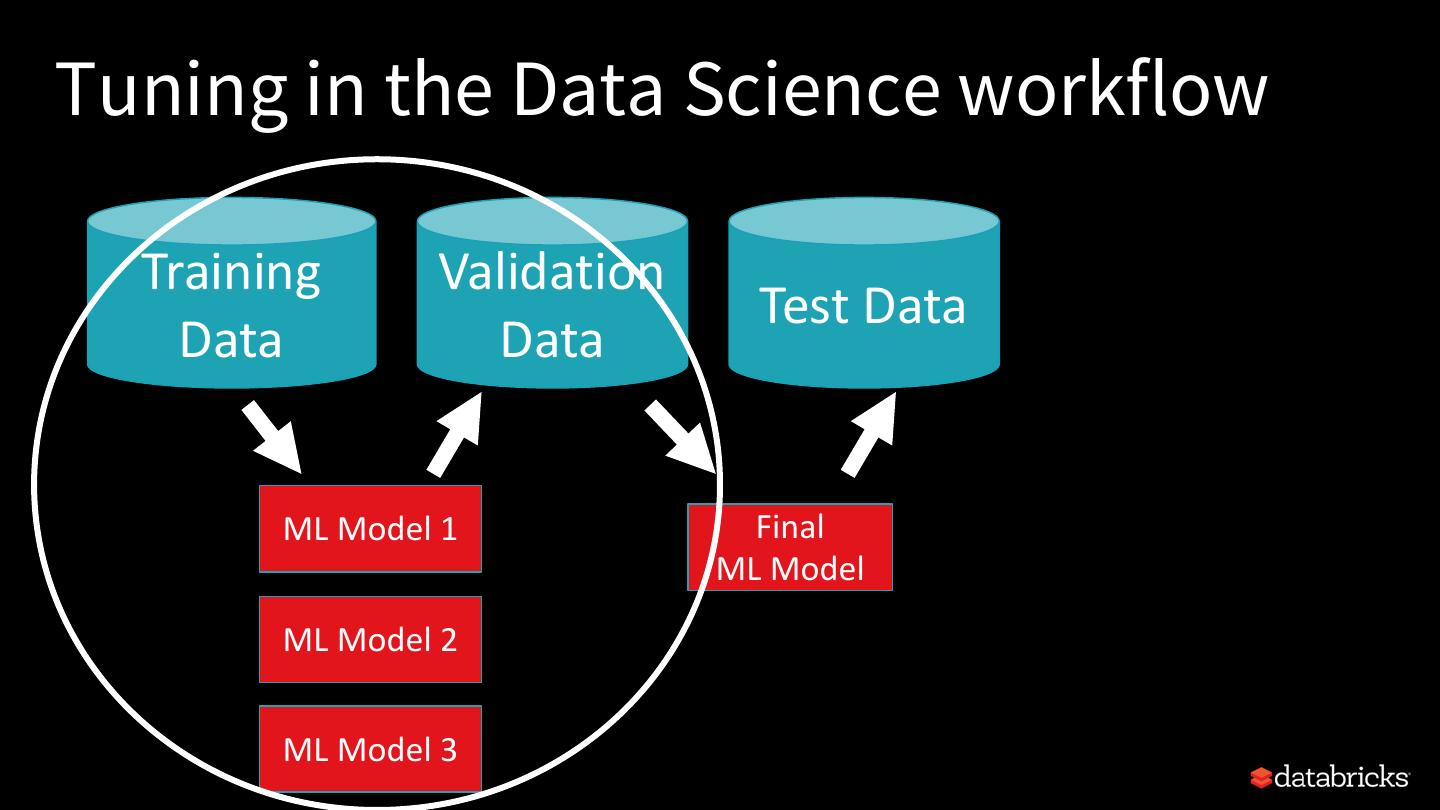

7 .Tuning in the Data Science workflow Data

8 .Tuning in the Data Science workflow
Data: Training Data, Test Data
Model: ML Model

9 .Tuning in the Data Science workflow
Data: Training Data, Validation Data, Test Data
Candidate models: ML Model 1, ML Model 2, ML Model 3
Result: Final ML Model
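The three-way split on this slide can be sketched in a few lines. This is a minimal illustration, not code from the talk: the synthetic data, the one-dimensional ridge regression, the helper names `fit_ridge_1d` and `mse`, and the three candidate regularization values are all assumptions chosen to keep the example self-contained. Each candidate is trained on the training data, the validation data picks the winner, and the test data is touched only once for the final model.

```python
import random


def fit_ridge_1d(xs, ys, lam):
    """Closed-form 1-D ridge regression without intercept: w = sum(xy) / (sum(x^2) + lam)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def mse(w, xs, ys):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Synthetic data (assumption): y = 2x plus Gaussian noise.
rng = random.Random(0)
xs = [rng.uniform(-1, 1) for _ in range(300)]
ys = [2.0 * x + rng.gauss(0, 0.1) for x in xs]

# Three disjoint splits: training, validation, test.
train = (xs[:200], ys[:200])
val = (xs[200:250], ys[200:250])
test = (xs[250:], ys[250:])

# One model per hyperparameter setting, all trained on the training data only.
candidates = [0.0, 1.0, 100.0]
models = {lam: fit_ridge_1d(*train, lam) for lam in candidates}

# The validation data selects the final model.
val_errors = {lam: mse(w, *val) for lam, w in models.items()}
best_lam = min(val_errors, key=val_errors.get)

# The test data is used once, to estimate the final model's generalization error.
test_error = mse(models[best_lam], *test)
print(f"selected lam={best_lam}, validation MSE={val_errors[best_lam]:.4f}, test MSE={test_error:.4f}")
```

Keeping the test data out of the selection step is what makes the final error estimate trustworthy; selecting on the test data would bias it downward.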

10 .Tuning in the Data Science workflow
ML Model:
• Featurization
• Model family selection
• Hyperparameter tuning
“AutoML” includes hyperparameter tuning.

11 .This talk
Popular methods for hyperparameter tuning
• Overview of methods
• Comparing methods
• Open-source tools
Tuning in practice with MLflow
• Instrument tuning
• Analyze results
• Productionize models
Beyond this talk

13 .Overview of tuning methods
• Manual search
• Grid search
• Random search
• Population-based algorithms
• Bayesian algorithms

14 .Manual search
Select hyperparameter settings to try based on human intuition.
2 hyperparameters:
• [0, ..., 5]
• {A, B, ..., F}
Expert knowledge tells us to try: (2,C), (2,D), (2,E), (3,C), (3,D), (3,E)
[Figure: search-space plot, x-axis A to F, y-axis 0 to 5]

15 .Grid Search
Try points on a grid defined by ranges and step sizes
• X-axis: {A,...,F}
• Y-axis: 0-5, step = 1
[Figure: search-space plot, x-axis A to F, y-axis 0 to 5]
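As a rough sketch of grid search over the slide's two hyperparameters, the snippet below enumerates every combination of {A,...,F} and 0-5 with step 1. The function `toy_loss` is a hypothetical stand-in for "train a model with these settings and measure validation loss"; it is not from the talk and is chosen only so the example runs on its own.

```python
import itertools

CATEGORIES = ["A", "B", "C", "D", "E", "F"]


def toy_loss(category, level):
    """Hypothetical loss with its minimum at ("D", 3); stands in for train-then-validate."""
    return (CATEGORIES.index(category) - 3) ** 2 + (level - 3) ** 2


# Cartesian product of both ranges: 6 categories x 6 levels = 36 grid points.
grid = list(itertools.product(CATEGORIES, range(0, 6)))

# Evaluate every grid point and keep the one with the lowest loss.
best = min(grid, key=lambda point: toy_loss(*point))
print(f"evaluated {len(grid)} points, best = {best}, loss = {toy_loss(*best)}")
```

The number of grid points is the product of the per-axis counts, so each added hyperparameter multiplies the cost of the search.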

16 .Random Search
Sample from distributions over ranges
• X-axis: Uniform({A,...,F})
• Y-axis: Uniform([0,5])
[Figure: search-space plot, x-axis A to F, y-axis 0 to 5]
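Random search over the same space can be sketched by drawing each hyperparameter from the distribution named on the slide. The trial budget of 36 and the `toy_loss` function are assumptions for illustration; `toy_loss` is a hypothetical stand-in for training and validating a model.

```python
import random

CATEGORIES = ["A", "B", "C", "D", "E", "F"]


def toy_loss(category, level):
    """Hypothetical loss with its minimum at ("D", 3.0); stands in for train-then-validate."""
    return (CATEGORIES.index(category) - 3) ** 2 + (level - 3.0) ** 2


rng = random.Random(42)


def sample():
    """Draw one setting: X ~ Uniform({A,...,F}), Y ~ Uniform([0, 5])."""
    return rng.choice(CATEGORIES), rng.uniform(0.0, 5.0)


# Fixed trial budget (assumed here to match the 36 points of a 6 x 6 grid).
trials = [sample() for _ in range(36)]
best = min(trials, key=lambda point: toy_loss(*point))
print(f"best = {best}, loss = {toy_loss(*best):.3f}")
```

Unlike the grid, the continuous axis is not restricted to a fixed step size, so the same budget tries many more distinct values of that hyperparameter.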

17 .Population Based Algorithms
Start with random search, then iterate:
• Use the previous “generation” to inform the next generation
• E.g., sample from best performers & then perturb them
[Figure: search-space plot, x-axis A to F, y-axis 0 to 5]
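One simple way to realize "sample from best performers and then perturb them" is sketched below. The population size, the number of survivors, the perturbation rule in `perturb`, and the decision to carry survivors over unchanged are all assumptions for illustration, as is the hypothetical `toy_loss`; real population-based methods differ in these details.

```python
import random

CATEGORIES = ["A", "B", "C", "D", "E", "F"]


def toy_loss(category, level):
    """Hypothetical loss with its minimum at ("D", 3.0); stands in for train-then-validate."""
    return (CATEGORIES.index(category) - 3) ** 2 + (level - 3.0) ** 2


def perturb(point, rng):
    """Move at most one category left or right and add Gaussian noise to the level."""
    category, level = point
    idx = CATEGORIES.index(category) + rng.choice([-1, 0, 1])
    idx = min(max(idx, 0), len(CATEGORIES) - 1)
    level = min(max(level + rng.gauss(0, 0.5), 0.0), 5.0)
    return CATEGORIES[idx], level


def population_search(generations=5, size=12, keep=4, seed=0):
    rng = random.Random(seed)
    # Generation 0 is plain random search.
    population = [(rng.choice(CATEGORIES), rng.uniform(0.0, 5.0)) for _ in range(size)]
    history = []  # best loss seen at the start of each generation
    for _ in range(generations):
        ranked = sorted(population, key=lambda p: toy_loss(*p))
        history.append(toy_loss(*ranked[0]))
        survivors = ranked[:keep]
        # Next generation: the survivors plus perturbed copies of randomly chosen survivors.
        children = [perturb(rng.choice(survivors), rng) for _ in range(size - keep)]
        population = survivors + children
    best = min(population, key=lambda p: toy_loss(*p))
    return best, history


best, history = population_search()
print(f"best = {best}, loss = {toy_loss(*best):.3f}")
print("best loss per generation:", [round(h, 3) for h in history])
```

Because the survivors are carried over unchanged, the best loss can never get worse from one generation to the next.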

20 .Bayesian Optimization
Model the loss function: Hyperparameters → loss
Iteratively search space, trading off between exploration and exploitation
[Figure: search-space plot, x-axis A to F, y-axis 0 to 5]
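The loop described on this slide can be sketched for a single continuous hyperparameter. The slide does not say which surrogate model or acquisition rule is used, so everything specific here is an assumption: a Gaussian-process surrogate with an RBF kernel, a lower-confidence-bound rule (`mean - 2 * std`) to trade off exploitation against exploration, a fixed candidate grid, and a hypothetical `toy_loss`. It is written in pure Python for self-containment and is not how a production library implements the method.

```python
import math


def toy_loss(level):
    """Hypothetical loss with its minimum at level = 3.0; stands in for train-then-validate."""
    return (level - 3.0) ** 2


def rbf(a, b, length=1.0):
    """RBF (squared-exponential) kernel with unit prior variance."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def gp_posterior(xs, ys, x_new, jitter=1e-4):
    """Posterior mean and standard deviation of the surrogate at x_new."""
    K = [[rbf(a, b) + (jitter if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new) for a in xs]
    mean_y = sum(ys) / len(ys)  # constant prior mean set to the observed average
    alpha = solve(K, [y - mean_y for y in ys])
    v = solve(K, k_star)
    mean = mean_y + sum(k * a for k, a in zip(k_star, alpha))
    var = max(1.0 - sum(k * vi for k, vi in zip(k_star, v)), 0.0)
    return mean, math.sqrt(var)


def bayes_opt(n_iter=10, kappa=2.0):
    candidates = [i * 0.05 for i in range(101)]  # grid over [0, 5]
    xs = [candidates[0], candidates[-1]]         # two initial evaluations
    ys = [toy_loss(x) for x in xs]
    for _ in range(n_iter):
        def lower_confidence_bound(x):
            mean, std = gp_posterior(xs, ys, x)
            return mean - kappa * std  # low mean = exploit, high std = explore
        untried = [c for c in candidates if c not in xs]
        x_next = min(untried, key=lower_confidence_bound)
        xs.append(x_next)
        ys.append(toy_loss(x_next))
    best = min(range(len(xs)), key=ys.__getitem__)
    return xs[best], ys[best], xs


best_x, best_y, evaluated = bayes_opt()
print(f"best level = {best_x:.2f}, loss = {best_y:.4f}, evaluations = {len(evaluated)}")
```

Each iteration spends one expensive evaluation where the surrogate is either promising (low predicted loss) or uncertain (high predicted standard deviation), which is the exploration and exploitation trade-off the slide refers to.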

21-29 .Bayesian Optimization
[Figure sequence: Performance plotted over Parameter Space]