A View of Cloud Computing

practice
doi:10.1145/1721654.1721672
Communications of the ACM | April 2010 | Vol. 53 | No. 4

Clearing the clouds away from the true potential and obstacles posed by this computing capability.

by Michael Armbrust, Armando Fox, Rean Griffith, Anthony D. Joseph, Randy Katz, Andy Konwinski, Gunho Lee, David Patterson, Ariel Rabkin, Ion Stoica, and Matei Zaharia

Illustration by Jon Han

Cloud computing, the long-held dream of computing as a utility, has the potential to transform a large part of the IT industry, making software even more attractive as a service and shaping the way IT hardware is designed and purchased. Developers with innovative ideas for new Internet services no longer require the large capital outlays in hardware to deploy their service or the human expense to operate it. They need not be concerned about overprovisioning for a service whose popularity does not meet their predictions, thus wasting costly resources, or underprovisioning for one that becomes wildly popular, thus missing potential customers and revenue. Moreover, companies with large batch-oriented tasks can get results as quickly as their programs can scale, since using 1,000 servers for one hour costs no more than using one server for 1,000 hours. This elasticity of resources, without paying a premium for large scale, is unprecedented in the history of IT.

As a result, cloud computing is a popular topic for blogging and white papers and has been featured in the title of workshops, conferences, and even magazines. Nevertheless, confusion remains about exactly what it is and when it’s useful, causing Oracle’s CEO Larry Ellison to vent his frustration: “The interesting thing about cloud computing is that we’ve redefined cloud computing to include everything that we already do…. I don’t understand what we would do differently in the light of cloud computing other than change the wording of some of our ads.”

Our goal in this article is to reduce that confusion by clarifying terms, providing simple figures to quantify comparisons between cloud and conventional computing, and identifying the top technical and non-technical obstacles and opportunities of cloud computing. (Armbrust et al.[4] is a more detailed version of this article.)

Defining Cloud Computing

Cloud computing refers to both the applications delivered as services over the Internet and the hardware and systems software in the data centers that provide those services. The services themselves have long been referred to as Software as a Service (SaaS).[a] Some vendors use terms such as IaaS (Infrastructure as a Service) and PaaS (Platform as a Service) to describe their products, but we eschew these because accepted definitions for them still vary widely. The line between “low-level” infrastructure and a higher-level “platform” is not crisp. We believe the two are more alike than different, and we consider them together. Similarly, the related term “grid computing,” from the high-performance computing community, suggests protocols to offer shared computation and storage over long distances, but those protocols did not lead to a software environment that grew beyond its community.

[a] For the purposes of this article, we use the term Software as a Service to mean applications delivered over the Internet. The broadest definition would encompass any on-demand software, including those that run software locally but control use via remote software licensing.

The data center hardware and software is what we will call a cloud. When a cloud is made available in a pay-as-you-go manner to the general public, we call it a public cloud; the service being sold is utility computing. We use the term private cloud to refer to internal data centers of a business or other organization, not made available to the general public, when they are large enough to benefit from the advantages of cloud computing that we discuss here. Thus, cloud computing is the sum of SaaS and utility computing, but does not include small or medium-sized data centers, even if these rely on virtualization for management. People can be users or providers of SaaS, or users or providers of utility computing. We focus on SaaS providers (cloud users) and cloud providers, which have received less attention than SaaS users. Figure 1 makes provider-user relationships clear. In some cases, the same actor can play multiple roles. For instance, a cloud provider might also host its own customer-facing services on cloud infrastructure.

From a hardware provisioning and pricing point of view, three aspects are new in cloud computing.

- The appearance of infinite computing resources available on demand, quickly enough to follow load surges, thereby eliminating the need for cloud computing users to plan far ahead for provisioning.
- The elimination of an up-front commitment by cloud users, thereby allowing companies to start small and increase hardware resources only when there is an increase in their needs.[b]
- The ability to pay for use of computing resources on a short-term basis as needed (for example, processors by the hour and storage by the day) and release them as needed, thereby rewarding conservation by letting machines and storage go when they are no longer useful.

[b] Note, however, that upfront commitments can still be used to reduce per-usage charges. For example, Amazon Web Services also offers long-term rental of servers, which they call reserved instances.
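The claim that 1,000 servers for one hour cost no more than one server for 1,000 hours follows directly from billing purely per server-hour. The sketch below illustrates that arithmetic under stated assumptions: the price per server-hour and the function name are hypothetical, chosen only for illustration, and real price lists may add charges (storage, bandwidth) that are ignored here.

```python
from fractions import Fraction

# Hypothetical price, for illustration only (not a quoted rate).
PRICE_PER_SERVER_HOUR = Fraction(10, 100)  # dollars per server-hour


def pay_as_you_go_cost(servers: int, hours: int) -> Fraction:
    """Cost under pure per-server-hour billing, with no premium for scale."""
    return servers * hours * PRICE_PER_SERVER_HOUR


# Same total server-hours, spread very differently over time.
wide = pay_as_you_go_cost(servers=1000, hours=1)
narrow = pay_as_you_go_cost(servers=1, hours=1000)

print(f"1,000 servers x 1 hour : ${float(wide):.2f}")
print(f"1 server x 1,000 hours : ${float(narrow):.2f}")
print("Same cost:", wide == narrow)
```

Exact fractions are used instead of floats so that the equality check is not affected by rounding; only the product of servers and hours matters, which is why the two bills match.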

Figure 1. Users and providers of cloud computing. We focus on cloud computing’s effects on cloud providers and SaaS providers/cloud users. The top level can be recursive, in that SaaS providers can also be SaaS users via mashups.

[Figure 1 diagram: Cloud Provider, supplying utility computing to the SaaS Provider/Cloud User, which supplies Web applications to the SaaS User.]

We argue that the construction and operation of extremely large-scale, commodity-computer data centers at low-cost locations was the key necessary enabler of cloud computing, for they uncovered the factors of 5 to 7 decrease in cost of electricity, network bandwidth, operations, software, and hardware available at these very large economies of scale. These factors, combined with statistical multiplexing to increase utilization compared to traditional data centers, meant that cloud computing could offer services below the costs of a medium-sized data center and yet still make a good profit.

Our proposed definition allows us to clearly identify certain installations as examples and non-examples of cloud computing. Consider a public-facing Internet service hosted by an ISP that can allocate more machines to the service given four hours’ notice. Since load surges on the public Internet can happen much more quickly than that (Animoto saw its load double every 12 hours for nearly three days), this is not cloud computing. In contrast, consider an internal enterprise data center whose applications are modified only with significant advance notice to administrators. In this scenario, large load surges on the scale of minutes are highly unlikely, so as long as allocation can track expected load increases, this scenario fulfills one of the necessary conditions for operating as a cloud. The enterprise data center may still fail to meet other conditions for being a cloud, however, such as the appearance of infinite resources or fine-grained billing. A private data center may also not benefit from the economies of scale that make public clouds financially attractive.

Omitting private clouds from cloud computing has led to considerable debate in the blogosphere. We believe the confusion and skepticism illustrated by Larry Ellison’s quote occurs when the advantages of public clouds are also claimed for medium-sized data centers. Except for extremely large data centers of hundreds of thousands of machines, such as those that might be operated by Google or Microsoft, most data centers enjoy only a subset of the potential advantages of public clouds, as Table 1 shows. We therefore believe that including traditional data centers in the definition of cloud computing will lead to exaggerated claims for smaller, so-called private clouds, which is why we exclude them. However, here we describe how so-called private clouds can get more of the benefits of public clouds through surge computing or hybrid cloud computing.

Classes of Utility Computing

Any application needs a model of computation, a model of storage, and a model of communication. The statistical multiplexing necessary to achieve elasticity and the appearance of infinite capacity available on demand requires automatic allocation and management. In practice, this is done with virtualization of some sort. Our view is that different utility computing offerings will be distinguished based on the cloud system software’s level of abstraction and the level of management of the resources.

Amazon EC2 is at one end of the spectrum. An EC2 instance looks much like physical hardware, and users can control nearly the entire software stack, from the kernel upward. This low level makes it inherently difficult for Amazon to offer automatic scalability and failover because the semantics associated with replication and other state management issues are highly application-dependent. At the other extreme of the spectrum are application domain-specific platforms such as Google AppEngine, which is targeted exclusively at traditional Web applications, enforcing an application structure of clean separation between a stateless computation tier and a stateful storage tier. AppEngine’s impressive automatic scaling and high-availability mechanisms, and the proprietary MegaStore data storage available to AppEngine applications, all rely on these constraints. Applications for Microsoft’s Azure are written using the .NET libraries, and compiled to the Common Language Runtime, a language-independent managed environment. The framework is significantly more flexible than AppEngine’s, but still constrains the user’s choice of storage model and application structure. Thus, Azure is intermediate between application frameworks like AppEngine and hardware virtual machines like EC2.

Cloud Computing Economics

We see three particularly compelling use cases that favor utility computing over conventional hosting. A first case is when demand for a service varies with time. For example, provisioning a data center for the peak load it must sustain a few days per month leads to underutilization at other times. Instead, cloud computing lets an organization pay by the hour for computing resources, potentially leading to cost savings even if the hourly rate to rent a machine from a cloud provider is higher than the rate to own one. A second case is when demand is unknown in advance. For example, a Web startup will need to support a spike in demand when it becomes popular, followed potentially by a reduction once some visitors turn away. Finally, organizations that perform batch analytics can use the “cost associativity” of cloud computing to finish computations faster: using 1,000 EC2 machines for one hour costs the same as using one machine for 1,000 hours.

Although the economic appeal of

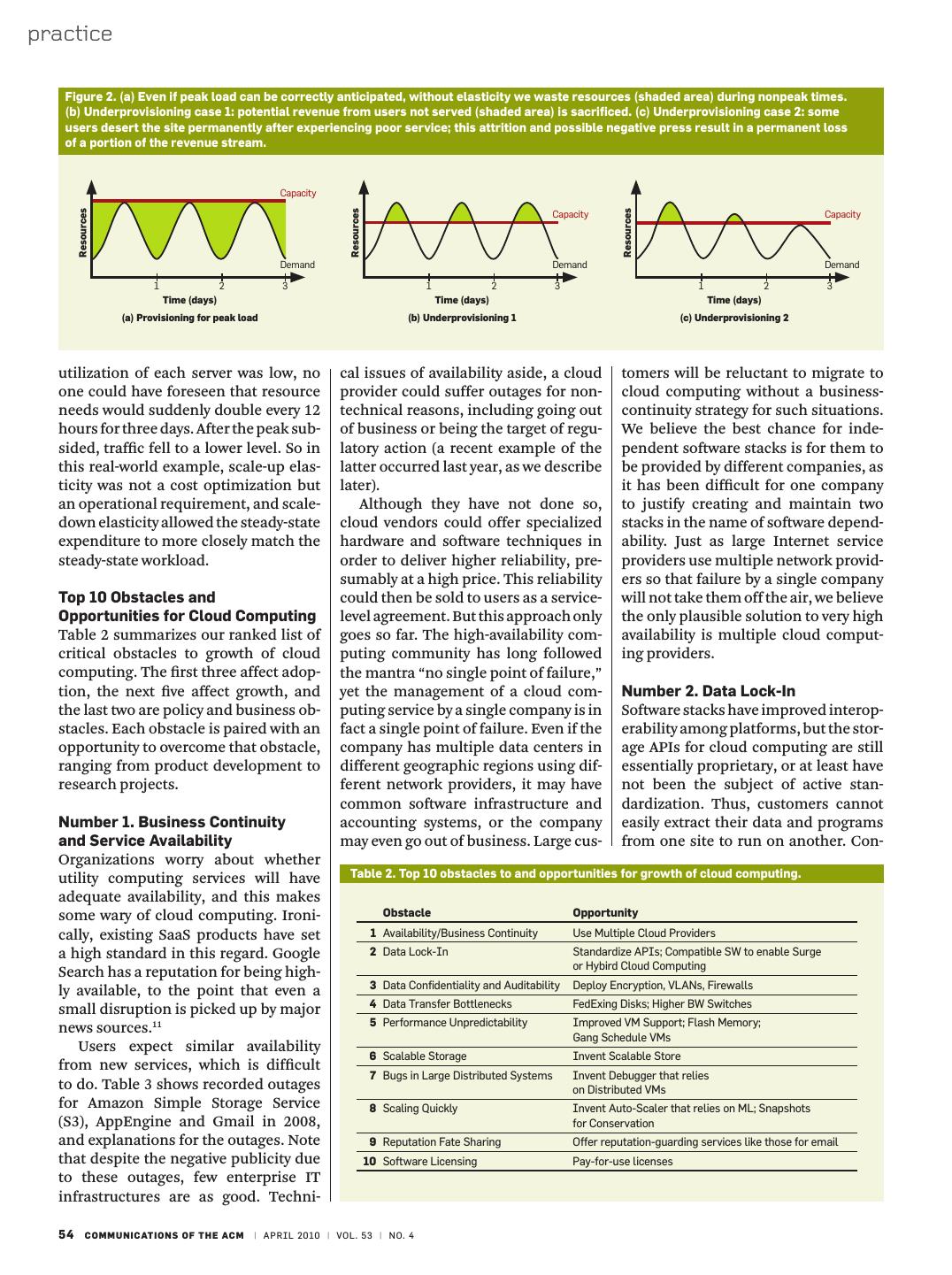

cloud computing is often described as “converting capital expenses to operating expenses” (CapEx to OpEx), we believe the phrase “pay as you go” more directly captures the economic benefit to the buyer. Hours purchased via cloud computing can be distributed non-uniformly in time (for example, use 100 server-hours today and no server-hours tomorrow, and still pay only for 100); in the networking community, this way of selling bandwidth is already known as usage-based pricing.[c] In addition, the absence of up-front capital expense allows capital to be redirected to core business investment.

[c] Usage-based pricing is not renting. Renting a resource involves paying a negotiated cost to have the resource over some time period, whether or not you use the resource. Pay-as-you-go involves metering usage and charging based on actual use, independently of the time period over which the usage occurs.

Therefore, even if Amazon’s pay-as-you-go pricing was more expensive than buying and depreciating a comparable server over the same period, we argue that the cost is outweighed by the extremely important cloud computing economic benefits of elasticity and transference of risk, especially the risks of overprovisioning (underutilization) and underprovisioning (saturation).

We start with elasticity. The key observation is that cloud computing’s ability to add or remove resources at a fine grain (one server at a time with EC2) and with a lead time of minutes rather than weeks allows matching resources to workload much more closely. Real-world estimates of average server utilization in data centers range from 5% to 20%.[15,17] This may sound shockingly low, but it is consistent with the observation that for many services the peak workload exceeds the average by factors of 2 to 10. Since few users deliberately provision for less than the expected peak, resources are idle at nonpeak times. The more pronounced the variation, the more the waste.

For example, Figure 2a assumes our service has a predictable demand where the peak requires 500 servers at noon but the trough requires only 100 servers at midnight. As long as the average utilization over a whole day is 300 servers, the actual cost per day (area under the curve) is 300 × 24 = 7,200 server-hours; but since we must provision to the peak of 500 servers, we pay for 500 × 24 = 12,000 server-hours, a factor of 1.7 more. Therefore, as long as the pay-as-you-go cost per server-hour over three years (typical amortization time) is less than 1.7 times the cost of buying the server, utility computing is cheaper.

In fact, this example underestimates the benefits of elasticity, because in addition to simple diurnal patterns, most services also experience seasonal or other periodic demand variation (for example, e-commerce in December and photo sharing sites after holidays) as well as some unexpected demand bursts due to external events (for example, news events). Since it can take weeks to acquire and rack new equipment, to handle such spikes you must provision for them in advance. We already saw that even if service operators predict the spike sizes correctly, capacity is wasted, and if they overestimate the spike they provision for, it’s even worse.

They may also underestimate the spike (Figure 2b), however, accidentally turning away excess users. While the cost of overprovisioning is easily measured, the cost of underprovisioning is more difficult to measure yet potentially equally serious: not only do rejected users generate zero revenue, they may never come back. For example, Friendster’s decline in popularity relative to competitors Facebook and MySpace is believed to have resulted partly from user dissatisfaction with slow response times (up to 40 seconds).[16] Figure 2c aims to capture this behavior: Users will desert an underprovisioned service until the peak user load equals the data center’s usable capacity, at which point users again receive acceptable service.

For a simplified example, assume that users of a hypothetical site fall into two classes: active users (those who use the site regularly) and defectors (those who abandon the site or are turned away from the site due to poor performance). Further, suppose that 10% of active users who receive poor service due to underprovisioning are “permanently lost” opportunities (become defectors), that is, users who would have remained regular visitors with a better experience. The site is initially provisioned to handle an expected peak of 400,000 users (1,000 users per server × 400 servers), but unexpected positive press drives 500,000 users in the first hour. Of the 100,000 who are turned away or receive bad service, by our assumption 10,000 of them are permanently lost, leaving an active user base of 390,000. The next hour sees 250,000 new unique users. The first 10,000 do fine, but the site is still overcapacity by 240,000 users. This results in 24,000 additional defections, leaving 376,000 permanent users. If this pattern continues, after lg(500,000) or 19 hours, the number of new users will approach zero and the site will be at capacity in steady state. Clearly, the service operator has collected less than 400,000 users’ worth of steady revenue during those 19 hours, however, again illustrating the underutilization argument, to say nothing of the bad reputation from the disgruntled users.

Table 1. Comparing public clouds and private data centers.

| Advantage | Public Cloud | Conventional Data Center |
| --- | --- | --- |
| Appearance of infinite computing resources on demand | Yes | No |
| Elimination of an up-front commitment by cloud users | Yes | No |
| Ability to pay for use of computing resources on a short-term basis as needed | Yes | No |
| Economies of scale due to very large data centers | Yes | Usually not |
| Higher utilization by multiplexing of workloads from different organizations | Yes | Depends on company size |
| Simplify operation and increase utilization via resource virtualization | Yes | No |

Do such scenarios really occur in practice? When Animoto[3] made its service available via Facebook, it experienced a demand surge that resulted in growing from 50 servers to 3,500 servers in three days. Even if the average

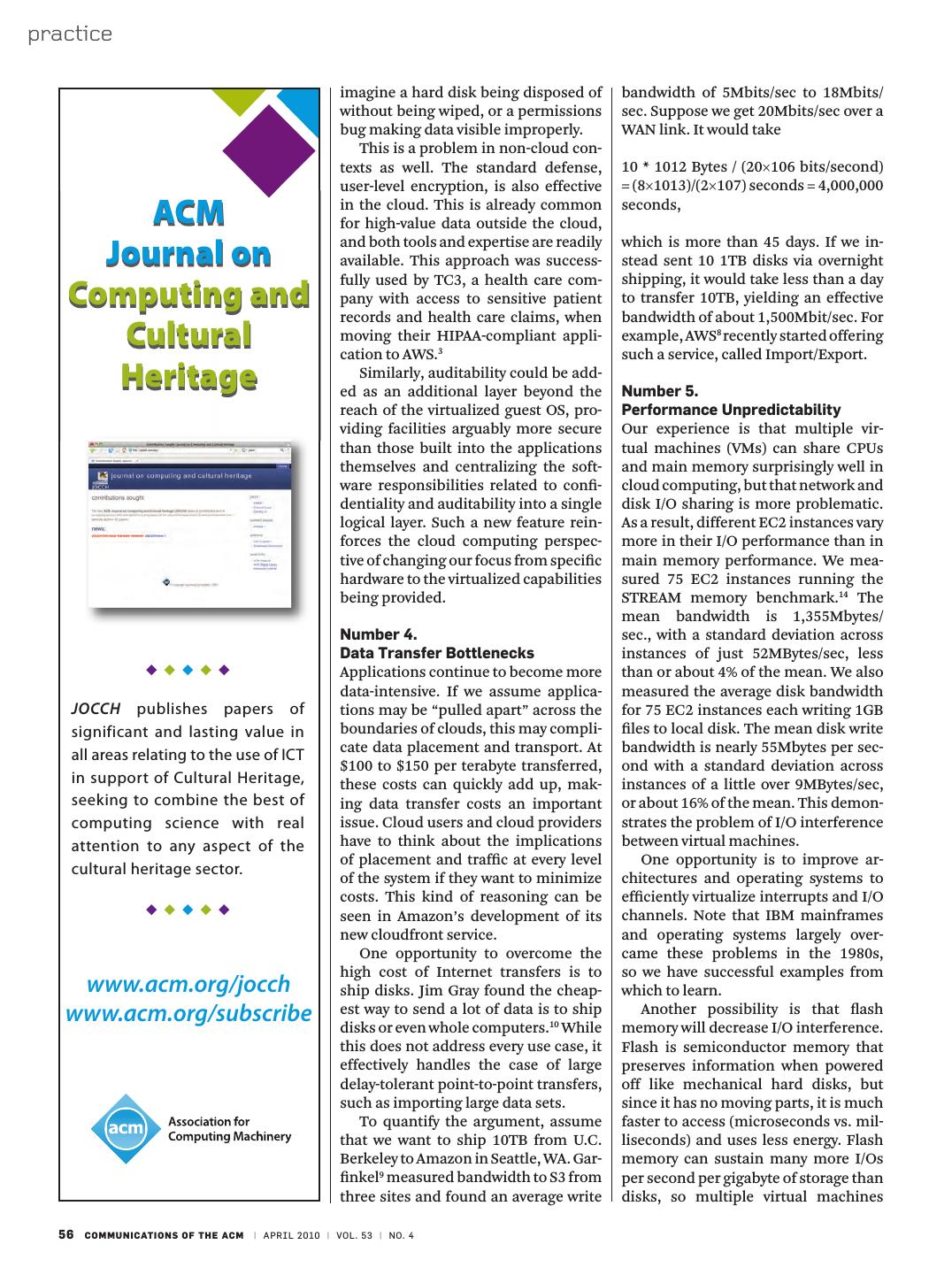

utilization of each server was low, no one could have foreseen that resource needs would suddenly double every 12 hours for three days. After the peak subsided, traffic fell to a lower level. So in this real-world example, scale-up elasticity was not a cost optimization but an operational requirement, and scale-down elasticity allowed the steady-state expenditure to more closely match the steady-state workload.

Figure 2. (a) Even if peak load can be correctly anticipated, without elasticity we waste resources (shaded area) during nonpeak times. (b) Underprovisioning case 1: potential revenue from users not served (shaded area) is sacrificed. (c) Underprovisioning case 2: some users desert the site permanently after experiencing poor service; this attrition and possible negative press result in a permanent loss of a portion of the revenue stream.

[Figure 2 panels: (a) Provisioning for peak load, (b) Underprovisioning 1, (c) Underprovisioning 2. Each panel plots Resources against Time (days), with Capacity and Demand curves.]

Top 10 Obstacles and Opportunities for Cloud Computing

Table 2 summarizes our ranked list of critical obstacles to growth of cloud computing. The first three affect adoption, the next five affect growth, and the last two are policy and business obstacles. Each obstacle is paired with an opportunity to overcome that obstacle, ranging from product development to research projects.

Table 2. Top 10 obstacles to and opportunities for growth of cloud computing.

| | Obstacle | Opportunity |
| --- | --- | --- |
| 1 | Availability/Business Continuity | Use Multiple Cloud Providers |
| 2 | Data Lock-In | Standardize APIs; Compatible SW to enable Surge or Hybrid Cloud Computing |
| 3 | Data Confidentiality and Auditability | Deploy Encryption, VLANs, Firewalls |
| 4 | Data Transfer Bottlenecks | FedExing Disks; Higher BW Switches |
| 5 | Performance Unpredictability | Improved VM Support; Flash Memory; Gang Schedule VMs |
| 6 | Scalable Storage | Invent Scalable Store |
| 7 | Bugs in Large Distributed Systems | Invent Debugger that relies on Distributed VMs |
| 8 | Scaling Quickly | Invent Auto-Scaler that relies on ML; Snapshots for Conservation |
| 9 | Reputation Fate Sharing | Offer reputation-guarding services like those for email |
| 10 | Software Licensing | Pay-for-use licenses |

Number 1. Business Continuity and Service Availability

Organizations worry about whether utility computing services will have adequate availability, and this makes some wary of cloud computing. Ironically, existing SaaS products have set a high standard in this regard. Google Search has a reputation for being highly available, to the point that even a small disruption is picked up by major news sources.[11] Users expect similar availability from new services, which is difficult to provide. Table 3 shows recorded outages for Amazon Simple Storage Service (S3), AppEngine and Gmail in 2008, and explanations for the outages. Note that despite the negative publicity due to these outages, few enterprise IT infrastructures are as good. Technical issues of availability aside, a cloud provider could suffer outages for nontechnical reasons, including going out of business or being the target of regulatory action (a recent example of the latter occurred last year, as we describe later).

Table 3. Outages in AWS, AppEngine, and Gmail.

| Service and Outage | Duration | Date |
| --- | --- | --- |
| S3 outage: authentication service overload leading to unavailability[17] | 2 hours | 2/15/08 |
| S3 outage: single bit error leading to gossip protocol blowup[18] | 6–8 hours | 7/20/08 |
| AppEngine partial outage: programming error[19] | 5 hours | 6/17/08 |
| Gmail: site unavailable due to outage in contacts system[11] | 1.5 hours | 8/11/08 |

Although they have not done so, cloud vendors could offer specialized hardware and software techniques in order to deliver higher reliability, presumably at a high price. This reliability could then be sold to users as a service-level agreement. But this approach only goes so far. The high-availability computing community has long followed the mantra “no single point of failure,” yet the management of a cloud computing service by a single company is in fact a single point of failure. Even if the company has multiple data centers in different geographic regions using different network providers, it may have common software infrastructure and accounting systems, or the company may even go out of business. Large customers will be reluctant to migrate to cloud computing without a business-continuity strategy for such situations. We believe the best chance for independent software stacks is for them to be provided by different companies, as it has been difficult for one company to justify creating and maintaining two stacks in the name of software dependability. Just as large Internet service providers use multiple network providers so that failure by a single company will not take them off the air, we believe the only plausible solution to very high availability is multiple cloud computing providers.

Number 2. Data Lock-In

Software stacks have improved interoperability among platforms, but the storage APIs for cloud computing are still essentially proprietary, or at least have not been the subject of active standardization. Thus, customers cannot easily extract their data and programs from one site to run on another. Concern about the difficulty of extracting data from the cloud is preventing some organizations from adopting cloud computing. Customer lock-in may be attractive to cloud computing providers, but their users are vulnerable to price increases, to reliability problems, or even to providers going out of business.

For example, an online storage service called The Linkup shut down on Aug. 8, 2008 after losing access to as much as 45% of customer data.[6] The Linkup, in turn, had relied on the online storage service Nirvanix to store customer data, which led to finger pointing between the two organizations as to why customer data was lost. Meanwhile, The Linkup’s 20,000 users were told the service was no longer available and were urged to try out another storage site.

One solution would be to standardize the APIs[d] in such a way that a SaaS developer could deploy services and data across multiple cloud computing providers so that the failure of a single company would not take all copies of customer data with it. One might worry that this would lead to a “race-to-the-bottom” of cloud pricing and flatten the profits of cloud computing providers. We offer two arguments to allay this fear.

[d] Data Liberation Front; http://dataliberation.org

First, the quality of a service matters as well as the price, so customers may not jump to the lowest-cost service. Some Internet service providers today cost a factor of 10 more than others because they are more dependable and offer extra services to improve usability.

Second, in addition to mitigating data lock-in concerns, standardization of APIs enables a new usage model in which the same software infrastructure can be used in an internal data center and in a public cloud. Such an option could enable hybrid cloud computing or surge computing in which the public cloud is used to capture the extra tasks that cannot be easily run in the data center (or private cloud) due to temporarily heavy workloads. This option could significantly expand the cloud computing market. Indeed, open-source reimplementations of proprietary cloud APIs, such as Eucalyptus and HyperTable, are first steps in enabling surge computing.

Number 3. Data Confidentiality/Auditability

Despite most companies outsourcing payroll and many companies using external email services to hold sensitive information, security is one of the most often-cited objections to cloud computing; analysts and skeptical companies ask “who would trust their essential data out there somewhere?” There are also requirements for auditability, in the sense of Sarbanes-Oxley and Health and Human Services Health Insurance Portability and Accountability Act (HIPAA) regulations that must be provided for corporate data to be moved to the cloud.

Cloud users face security threats both from outside and inside the cloud. Many of the security issues involved in protecting clouds from outside threats are similar to those already facing large data centers. In the cloud, however, this responsibility is divided among potentially many parties, including the cloud user, the cloud vendor, and any third-party vendors that users rely on for security-sensitive software or configurations.

The cloud user is responsible for application-level security. The cloud provider is responsible for physical security, and likely for enforcing external firewall policies. Security for intermediate layers of the software stack is shared between the user and the operator; the lower the level of abstraction exposed to the user, the more responsibility goes with it. Amazon EC2 users have more technical responsibility (that is, must implement or procure more of the necessary functionality themselves) for their security than do Azure users, who in turn have more responsibilities than AppEngine customers. This user responsibility, in turn, can be outsourced to third parties who sell specialty security services. The homogeneity and standardized interfaces of platforms like EC2 make it possible for a company to offer, say, configuration management or firewall rule analysis as value-added services.

While cloud computing may make external-facing security easier, it does pose the new problem of internal-facing security. Cloud providers must guard against theft or denial-of-service attacks by users. Users need to be protected from one another.

The primary security mechanism in today’s clouds is virtualization. It is a powerful defense, and protects against most attempts by users to attack one another or the underlying cloud infrastructure. However, not all resources are virtualized and not all virtualization environments are bug-free. Virtualization software has been known to contain bugs that allow virtualized code to “break loose” to some extent. Incorrect network virtualization may allow user code access to sensitive portions of the provider’s infrastructure, or to the resources of other users. These challenges, though, are similar to those involved in managing large non-cloud data centers, where different applications need to be protected from one another. Any large Internet service will need to ensure that a single security hole doesn’t compromise everything else.

One last security concern is protecting the cloud user against the provider. The provider will by definition control the “bottom layer” of the software stack, which effectively circumvents most known security techniques. Absent radical improvements in security technology, we expect that users will use contracts and courts, rather than clever security engineering, to guard against provider malfeasance. The one important exception is the risk of inadvertent data loss. It’s difficult to imagine Amazon spying on the contents of virtual machine memory; it’s easy to

imagine a hard disk being disposed of without being wiped, or a permissions bug making data visible improperly. This is a problem in non-cloud contexts as well. The standard defense, user-level encryption, is also effective in the cloud. This is already common for high-value data outside the cloud, and both tools and expertise are readily available. This approach was successfully used by TC3, a health care company with access to sensitive patient records and health care claims, when moving their HIPAA-compliant application to AWS.[3]

Similarly, auditability could be added as an additional layer beyond the reach of the virtualized guest OS, providing facilities arguably more secure than those built into the applications themselves and centralizing the software responsibilities related to confidentiality and auditability into a single logical layer. Such a new feature reinforces the cloud computing perspective of changing our focus from specific hardware to the virtualized capabilities being provided.

Number 4. Data Transfer Bottlenecks

Applications continue to become more data-intensive. If we assume applications may be “pulled apart” across the boundaries of clouds, this may complicate data placement and transport. At $100 to $150 per terabyte transferred, these costs can quickly add up, making data transfer costs an important issue. Cloud users and cloud providers have to think about the implications of placement and traffic at every level of the system if they want to minimize costs. This kind of reasoning can be seen in Amazon’s development of its new CloudFront service.

One opportunity to overcome the high cost of Internet transfers is to ship disks. Jim Gray found the cheapest way to send a lot of data is to ship disks or even whole computers.[10] While this does not address every use case, it effectively handles the case of large delay-tolerant point-to-point transfers, such as importing large data sets.

To quantify the argument, assume that we want to ship 10TB from U.C. Berkeley to Amazon in Seattle, WA. Garfinkel[9] measured bandwidth to S3 from three sites and found an average write bandwidth of 5Mbits/sec to 18Mbits/sec. Suppose we get 20Mbits/sec over a WAN link. It would take

$$\frac{10 \times 10^{12}\ \text{bytes}}{20 \times 10^{6}\ \text{bits/second}} = \frac{8 \times 10^{13}\ \text{bits}}{2 \times 10^{7}\ \text{bits/second}} = 4{,}000{,}000\ \text{seconds},$$

which is more than 45 days. If we instead sent 10 1TB disks via overnight shipping, it would take less than a day to transfer 10TB, yielding an effective bandwidth of about 1,500Mbit/sec. For example, AWS[8] recently started offering such a service, called Import/Export.

Number 5. Performance Unpredictability

Our experience is that multiple virtual machines (VMs) can share CPUs and main memory surprisingly well in cloud computing, but that network and disk I/O sharing is more problematic. As a result, different EC2 instances vary more in their I/O performance than in main memory performance. We measured 75 EC2 instances running the STREAM memory benchmark.[14] The mean bandwidth is 1,355MBytes/sec, with a standard deviation across instances of just 52MBytes/sec, less than or about 4% of the mean. We also measured the average disk bandwidth for 75 EC2 instances each writing 1GB files to local disk. The mean disk write bandwidth is nearly 55MBytes/sec with a standard deviation across instances of a little over 9MBytes/sec, or about 16% of the mean. This demonstrates the problem of I/O interference between virtual machines.

One opportunity is to improve architectures and operating systems to efficiently virtualize interrupts and I/O channels. Note that IBM mainframes and operating systems largely overcame these problems in the 1980s, so we have successful examples from which to learn.

Another possibility is that flash memory will decrease I/O interference. Flash is semiconductor memory that preserves information when powered off like mechanical hard disks, but since it has no moving parts, it is much faster to access (microseconds vs. milliseconds) and uses less energy. Flash memory can sustain many more I/Os per second per gigabyte of storage than disks, so multiple virtual machines

with conflicting random I/O workloads could coexist better on the same physical computer without the interference we see with mechanical disks.

Another unpredictability obstacle concerns the scheduling of virtual machines for some classes of batch processing programs, specifically for high-performance computing. Given that high-performance computing (HPC) is used to justify government purchases of $100M supercomputer centers with 10,000 to 1,000,000 processors, there are many tasks with parallelism that can benefit from elastic computing. Today, many of these tasks are run on small clusters, which are often poorly utilized. There could be significant savings in running these tasks on large clusters in the cloud instead. Cost associativity means there is no cost penalty for using 20 times as much computing for 1/20th the time. Potential applications that could benefit include those with very high potential financial returns (financial analysis, petroleum exploration, movie animation) that would value a 20x speedup even if there were a cost premium.

The obstacle to attracting HPC is not the use of clusters; most parallel computing today is done in large clusters using the message-passing interface MPI. The problem is that many HPC applications need to ensure that all the threads of a program are running simultaneously, and today's virtual machines and operating systems do not provide a programmer-visible way to ensure this. Thus, the opportunity to overcome this obstacle is to offer something like "gang scheduling" for cloud computing. The relatively tight timing coordination expected in traditional gang scheduling may be challenging to achieve in a cloud computing environment due to the performance unpredictability just described.

Number 6: Scalable Storage

Earlier, we identified three properties whose combination gives cloud computing its appeal: short-term usage (which implies scaling down as well as up when demand drops), no upfront cost, and infinite capacity on demand. While it's straightforward what this means when applied to computation, it's less clear how to apply it to persistent storage.

There have been many attempts to answer this question, varying in the richness of the query and storage APIs, the performance guarantees offered, and the resulting consistency semantics. The opportunity, which is still an open research problem, is to create a storage system that would not only meet existing programmer expectations in regard to durability, high availability, and the ability to manage and query data, but combine them with the cloud advantages of scaling arbitrarily up and down on demand.

Number 7: Bugs in Large-Scale Distributed Systems

One of the difficult challenges in cloud computing is removing errors in these very large-scale distributed systems. A common occurrence is that these bugs cannot be reproduced in smaller configurations, so the debugging must occur at scale in the production data centers.

One opportunity may be the reliance on virtual machines in cloud computing. Many traditional SaaS providers developed their infrastructure without using VMs, either because they preceded the recent popularity of VMs or because they felt they could not afford the performance hit of VMs. Since VMs are de rigueur in utility computing, that level of virtualization may make it possible to capture valuable information in ways that are implausible without VMs.

Number 8: Scaling Quickly

Pay-as-you-go certainly applies to storage and to network bandwidth, both of which count bytes used. Computation is slightly different, depending on the virtualization level. Google AppEngine automatically scales in response to load increases and decreases, and users are charged by the cycles used. AWS charges by the hour for the number of instances you occupy, even if your machine is idle.

The opportunity is then to automatically scale quickly up and down in response to load in order to save money, but without violating service-level agreements. Indeed, one focus of the UC Berkeley Reliable Adaptive Distributed Systems Laboratory is the pervasive and aggressive use of statistical machine learning as a diagnostic and predictive tool to allow dynamic scaling, automatic reaction to performance and correctness problems, and automatic management of many other aspects of these systems.

Another reason for scaling is to conserve resources as well as money. Since an idle computer uses about two-thirds of the power of a busy computer, careful use of resources could reduce the impact of data centers on the environment, which is currently receiving a great deal of negative attention. Cloud computing providers already perform careful and low-overhead accounting of resource consumption. By imposing fine-grained costs, utility computing encourages programmers to pay attention to efficiency (that is, releasing and acquiring resources only when necessary), and allows more direct measurement of operational and development inefficiencies.

Being aware of costs is the first step to conservation, but configuration hassles make it tempting to leave machines idle overnight so that startup time is zero when developers return to work the next day. A fast and easy-to-use snapshot/restart tool might further encourage conservation of computing resources.

Number 9: Reputation Fate Sharing

One customer's bad behavior can affect the reputation of others using the same cloud. For instance, blacklisting of EC2 IP addresses¹³ by spam-prevention services may limit which applications can be effectively hosted. An opportunity would be to create reputation-guarding services similar to the "trusted email" services currently offered (for a fee) to services hosted on smaller ISPs, which experience a microcosm of this problem.

Another legal issue is the question of transfer of legal liability: cloud computing providers would want customers to be liable and not them (for example, the company sending the spam should be held liable, not Amazon). In March 2009, the FBI raided a Dallas data center because a company whose services were hosted there was being investigated for possible criminal activity, but a number of "innocent bystander" companies hosted in the same facility suffered days of unexpected downtime, and some went out of business.⁷

Number 10: Software Licensing

Current software licenses commonly restrict the computers on which the software can run. Users pay for the software and then pay an annual maintenance fee. Indeed, SAP announced that it would increase its annual maintenance fee to at least 22% of the purchase price of the software, which is close to Oracle's pricing.¹⁷ Hence, many cloud computing providers originally relied on open source software in part because the licensing model for commercial software is not a good match to utility computing.

The primary opportunity is either for open source to remain popular or simply for commercial software companies to change their licensing structure to better fit cloud computing. For example, Microsoft and Amazon now offer pay-as-you-go software licensing for Windows Server and SQL Server on EC2. An EC2 instance running Microsoft Windows costs $0.15 per hour instead of $0.10 per hour for the open source alternative. IBM also announced pay-as-you-go pricing for hosted IBM software in conjunction with EC2, at prices ranging from $0.38 per hour for DB2 Express to $6.39 per hour for IBM WebSphere with Lotus Web Content Management Server.

Conclusion

We predict cloud computing will grow, so developers should take it into account. Regardless of whether a cloud provider sells services at a low level of abstraction like EC2 or a higher level like AppEngine, we believe computing, storage, and networking must all focus on horizontal scalability of virtualized resources rather than on single node performance. Moreover:

1. Applications software needs to scale down rapidly as well as scale up, which is a new requirement. Such software also needs a pay-for-use licensing model to match the needs of cloud computing.

2. Infrastructure software must be aware that it is no longer running on bare metal but on VMs. Moreover, metering and billing need to be built in from the start.

3. Hardware systems should be designed at the scale of a container (at least a dozen racks), which will be the minimum purchase size. Cost of operation will match performance and cost of purchase in importance, rewarding energy proportionality⁵ by putting idle portions of the memory, disk, and network into low-power mode. Processors should work well with VMs, flash memory should be added to the memory hierarchy, and LAN switches and WAN routers must improve in bandwidth and cost.

Acknowledgments

This research is supported in part by gifts from Google, Microsoft, Sun Microsystems, Amazon Web Services, Cisco Systems, Cloudera, eBay, Facebook, Fujitsu, Hewlett-Packard, Intel, Network Appliances, SAP, VMware, Yahoo! and by matching funds from the University of California Industry/University Cooperative Research Program (UC Discovery) grant COM07-10240 and by the National Science Foundation Grant #CNS-0509559.

The authors are associated with the UC Berkeley Reliable Adaptive Distributed Systems Laboratory (RAD Lab).

References

1. Amazon.com CEO Jeff Bezos on Animoto (Apr. 2008); http://blog.animoto.com/2008/04/21/amazon-ceo-jeff-bezos-on-animoto/.
2. Amazon S3 Team. Amazon S3 Availability Event (July 20, 2008); http://status.aws.amazon.com/s3-20080720.html.
3. Amazon Web Services. TC3 Health Case Study; http://aws.amazon.com/solutions/case-studies/tc3-health/.
4. Armbrust, M., et al. Above the clouds: A Berkeley view of cloud computing. Tech. Rep. UCB/EECS-2009-28, EECS Department, U.C. Berkeley, Feb. 2009.
5. Barroso, L.A., and Hölzle, U. The case for energy-proportional computing. IEEE Computer 40, 12 (Dec. 2007).
6. Brodkin, J. Loss of customer data spurs closure of online storage service 'The Linkup.' Network World (Aug. 2008).
7. Fink, J. FBI agents raid Dallas computer business (Apr. 2009); http://cbs11tv.com/local/Core.IP.Networks.2.974706.html.
8. Freedom OSS. Large data set transfer to the cloud (Apr. 2009); http://freedomoss.com/clouddataingestion.
9. Garfinkel, S. An Evaluation of Amazon's Grid Computing Services: EC2, S3 and SQS. Tech. Rep. TR-08-07, Harvard University, Aug. 2007.
10. Gray, J., and Patterson, D. A conversation with Jim Gray. ACM Queue 1, 4 (2003), 8–17.
11. Helft, M. Google confirms problems with reaching its services (May 14, 2009).
12. Jackson, T. We feel your pain, and we're sorry (Aug. 2008); http://gmailblog.blogspot.com/2008/08/we-feel-your-pain-and-were-sorry.html.
13. Krebs, B. Amazon: Hey spammers, Get off my cloud! Washington Post (July 1, 2008).
14. McCalpin, J. Memory bandwidth and machine balance in current high performance computers. IEEE Technical Committee on Computer Architecture Newsletter (1995), 19–25.
15. Rangan, K. The Cloud Wars: $100+ Billion at Stake. Tech. Rep., Merrill Lynch, May 2008.
16. Rivlin, G. Wallflower at the Web Party (Oct. 15, 2006).
17. Siegele, L. Let It Rise: A Special Report on Corporate IT. The Economist (Oct. 2008).
18. Stern, A. Update from Amazon Regarding Friday's S3 Downtime. CenterNetworks (Feb. 2008); http://www.centernetworks.com/amazon-s3-downtime-update.
19. Wilson, S. AppEngine Outage. CIO Weblog (June 2008); http://www.cio-weblog.com/50226711/appengine\outage.php.

© 2010 ACM 0001-0782/10/0400 $10.00
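The shipping-versus-WAN estimate under Number 4 (Data Transfer Bottlenecks) can be re-derived with a short script. This is only a sketch of that back-of-the-envelope arithmetic: the function names are illustrative, and the 24-hour door-to-door transit time is an assumption, since the article says only "less than a day".

```python
def wan_transfer_seconds(num_bytes: int, link_bits_per_sec: int) -> float:
    """Seconds needed to push num_bytes over a link of the given bit rate."""
    return num_bytes * 8 / link_bits_per_sec


def effective_mbits_per_sec(num_bytes: int, transit_seconds: float) -> float:
    """Effective bandwidth of physically shipping num_bytes in transit_seconds."""
    return num_bytes * 8 / transit_seconds / 1e6


TEN_TB = 10 * 10**12      # bytes (decimal terabytes, as in the article)
WAN_RATE = 20 * 10**6     # bits per second, the article's assumed WAN rate

wan_seconds = wan_transfer_seconds(TEN_TB, WAN_RATE)
wan_days = wan_seconds / 86_400

# Assumption: a full 24 hours in transit (the article says "less than a day").
shipping_mbps = effective_mbits_per_sec(TEN_TB, 24 * 3600)

# Transit time implied by the article's quoted ~1,500 Mbits/sec figure.
implied_hours = TEN_TB * 8 / 1_500e6 / 3600

print(f"WAN transfer: {wan_seconds:,.0f} s (about {wan_days:.1f} days)")
print(f"Shipping in 24 h: about {shipping_mbps:,.0f} Mbits/sec effective")
print(f"1,500 Mbits/sec implies about {implied_hours:.1f} h in transit")
```

Under these assumptions a full 24-hour transit works out to roughly 900 Mbits/sec, so the quoted figure of about 1,500 Mbits/sec corresponds to a door-to-door time closer to 15 hours; either way, shipping beats the assumed WAN link by well over an order of magnitude.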

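The variability figures quoted under Number 5 (Performance Unpredictability) are standard deviations expressed as a fraction of the mean. A minimal sketch of that calculation follows; the helper name is illustrative, and the inputs are the rounded values from the text ("nearly 55" and "a little over 9" MBytes/sec for disk), so the results are approximate.

```python
def relative_std_dev(mean: float, std_dev: float) -> float:
    """Standard deviation as a fraction of the mean (coefficient of variation)."""
    return std_dev / mean


# Rounded figures from the text, in MBytes/sec across 75 EC2 instances.
memory_cv = relative_std_dev(mean=1355, std_dev=52)   # STREAM memory bandwidth
disk_cv = relative_std_dev(mean=55, std_dev=9)        # local disk write bandwidth

print(f"memory bandwidth: {memory_cv:.1%} of the mean")
print(f"disk write bandwidth: {disk_cv:.1%} of the mean")
print(f"disk is about {disk_cv / memory_cv:.1f}x more variable than memory")
```

Comparing the two ratios, rather than the raw standard deviations, is what supports the claim that instances differ more in I/O performance than in memory performance, since the two measurements have very different means.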