Building a High-Performance HDFS Cache with Intel Optane DC Persistent Memory to Accelerate Big Data Analytics

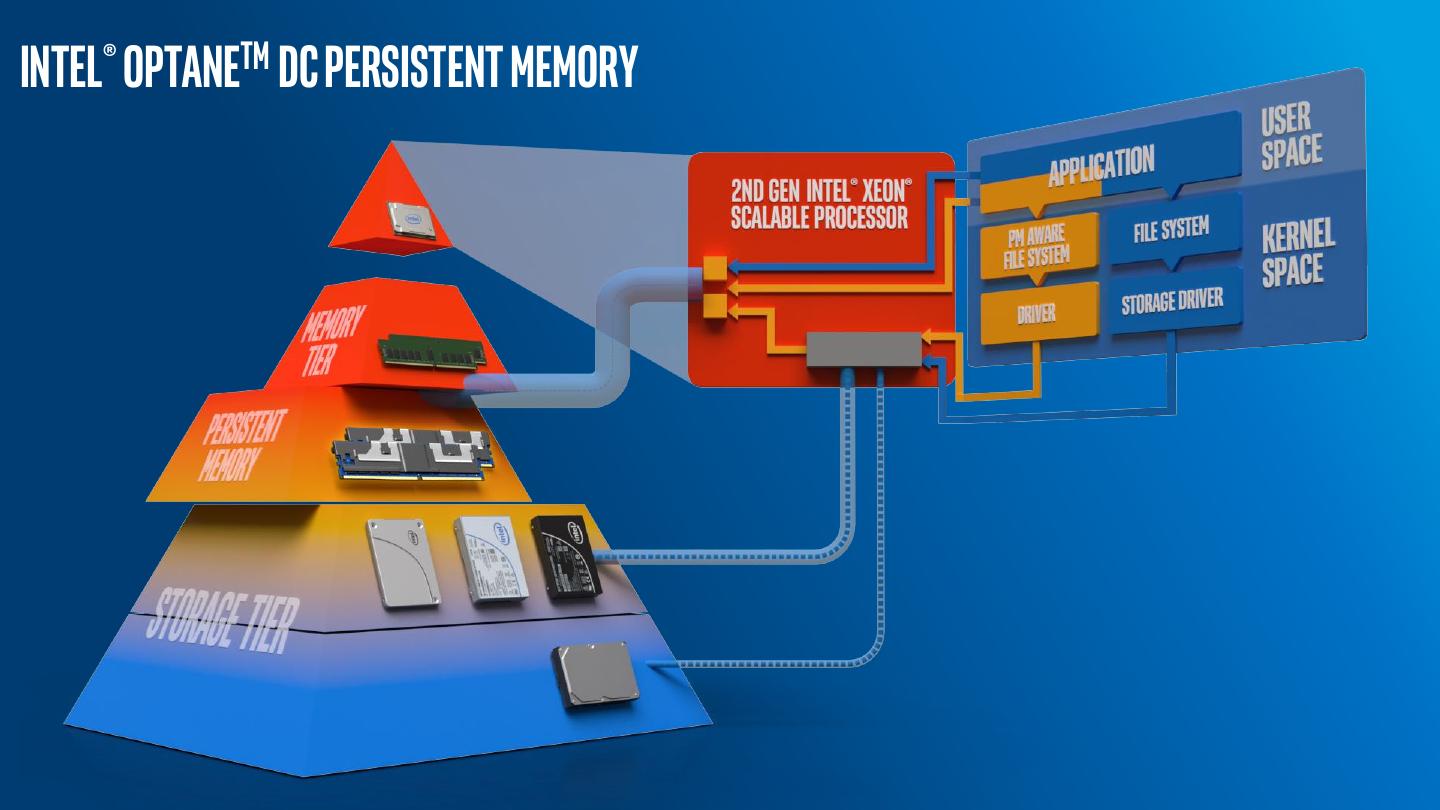

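HDFS cache is a centralized caching mechanism: users specify the paths of the files to cache in order to speed up access to frequently used data. However, because HDFS cache is DRAM-based, it competes with user programs for memory, and the cache must be warmed up again whenever a DataNode restarts, so its usage scenarios are quite limited. Persistent memory represents a new class of memory and storage technology. It offers higher performance and larger capacity at an affordable price while also providing data persistence. This talk describes how to build a high-performance HDFS cache with persistent memory. We first cover the design and implementation of the HDFS persistent memory cache, then present its performance advantages, and finally discuss how persistent memory applies to other big data analytics scenarios.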

1. Jian Zhang, Software Engineer Manager, October 2019

2 .Disclaimers https://legal.intel.com/Benchmarking/Pages/FTC-and-Security-Update-Disclaimer-Usage.aspx Performance results are based on testing as of September 5th, 2019 and may not reflect all publicly available security updates. See configuration disclosure on [page/slide 23] for details. No product can be absolutely secure. For more complete information about performance and benchmark results, visit www.intel.com/benchmarks. Optimization Notice: Intel's compilers may or may not optimize to the same degree for non-Intel microprocessors for optimizations that are not unique to Intel microprocessors. These optimizations include SSE2, SSE3, and SSSE3 instruction sets and other optimizations. Intel does not guarantee the availability, functionality, or effectiveness of any optimization on microprocessors not manufactured by Intel. Microprocessor-dependent optimizations in this product are intended for use with Intel microprocessors. Certain optimizations not specific to Intel microarchitecture are reserved for Intel microprocessors. Please refer to the applicable product User and Reference Guides for more information regarding the specific instruction sets covered by this notice. Notice Revision #20110804 Optimization Notice Copyright © 2019, Intel Corporation. All rights reserved. 2 *Other names and brands may be claimed as the property of others.

3 .Notices & Disclaimers Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software or service activation. Performance varies depending on system configuration. Intel processors of the same SKU may vary in frequency or power as a result of natural variability in the production process. Tests document performance of components on a particular test, in specific systems. Differences in hardware, software, or configuration will affect actual performance. For more complete information about performance and benchmark results, visit http://www.intel.com/benchmarks. Performance results are based on testing as of September 2019 and may not reflect all publicly available security updates. See configuration disclosure for details. No product or component can be absolutely secure. Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors. Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. For more complete information visit http://www.intel.com/benchmarks. Intel® Advanced Vector Extensions (Intel® AVX)* provides higher throughput to certain processor operations. Due to varying processor power characteristics, utilizing AVX instructions may cause a) some parts to operate at less than the rated frequency and b) some parts with Intel® Turbo Boost Technology 2.0 to not achieve any or maximum turbo frequencies. Performance varies depending on hardware, software, and system configuration and you can learn more at http://www.intel.com/go/turbo. 
Intel's compilers may or may not optimize to the same degree for non-Intel microprocessors for optimizations that are not unique to Intel microprocessors. These optimizations include SSE2, SSE3, and SSSE3 instruction sets and other optimizations. Intel does not guarantee the availability, functionality, or effectiveness of any optimization on microprocessors not manufactured by Intel. Microprocessor-dependent optimizations in this product are intended for use with Intel microprocessors. Certain optimizations not specific to Intel microarchitecture are reserved for Intel microprocessors. Please refer to the applicable product User and Reference Guides for more information regarding the specific instruction sets covered by this notice. Notice #20110804 Cost reduction scenarios described are intended as examples of how a given Intel-based product, in the specified circumstances and configurations, may affect future costs and provide cost savings. Circumstances will vary. Intel does not guarantee any costs or cost reduction. Intel® Optane™ DC persistent memory pricing & DRAM pricing referenced in TCO calculations is provided for guidance and planning purposes only and does not constitute a final offer. Pricing guidance is subject to change and may revise up or down based on market dynamics. Please contact your OEM/distributor for actual pricing. Intel does not control or audit third-party benchmark data or the web sites referenced in this document. You should visit the referenced web site and confirm whether referenced data are accurate. © 2019 Intel Corporation. The Intel logo, Xeon, and Optane are trademarks of Intel Corporation in the United States and other countries. *Other names and brands may be claimed as property of others.

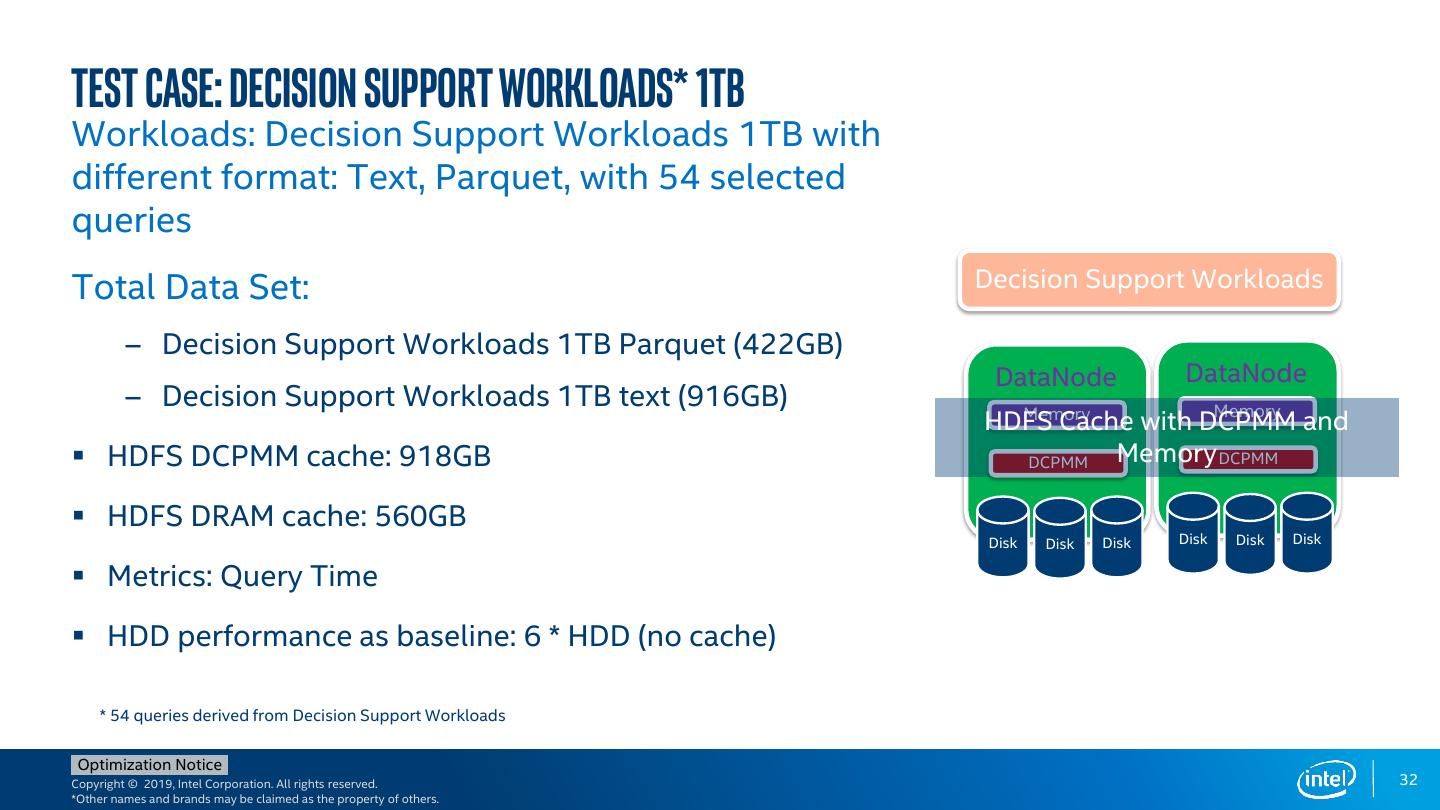

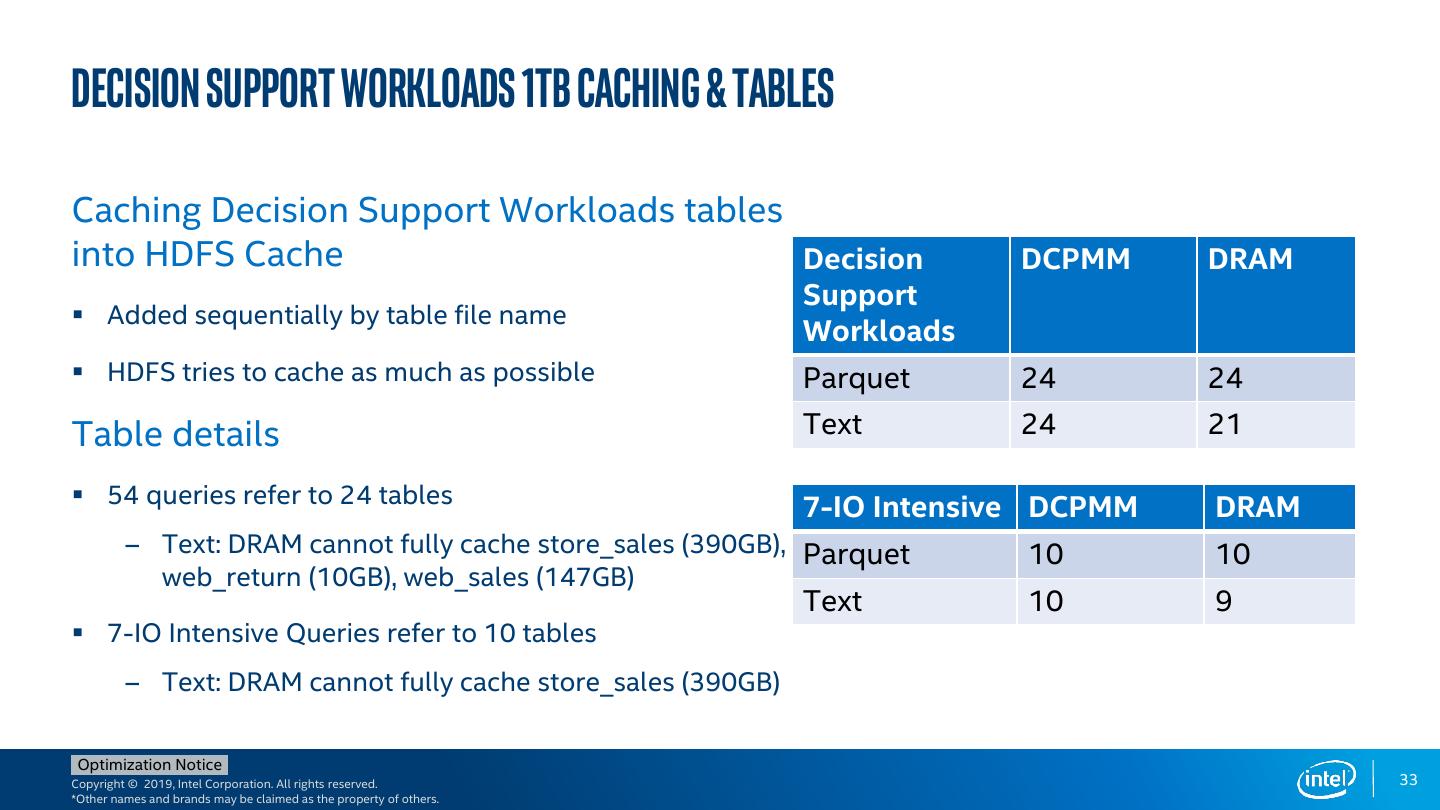

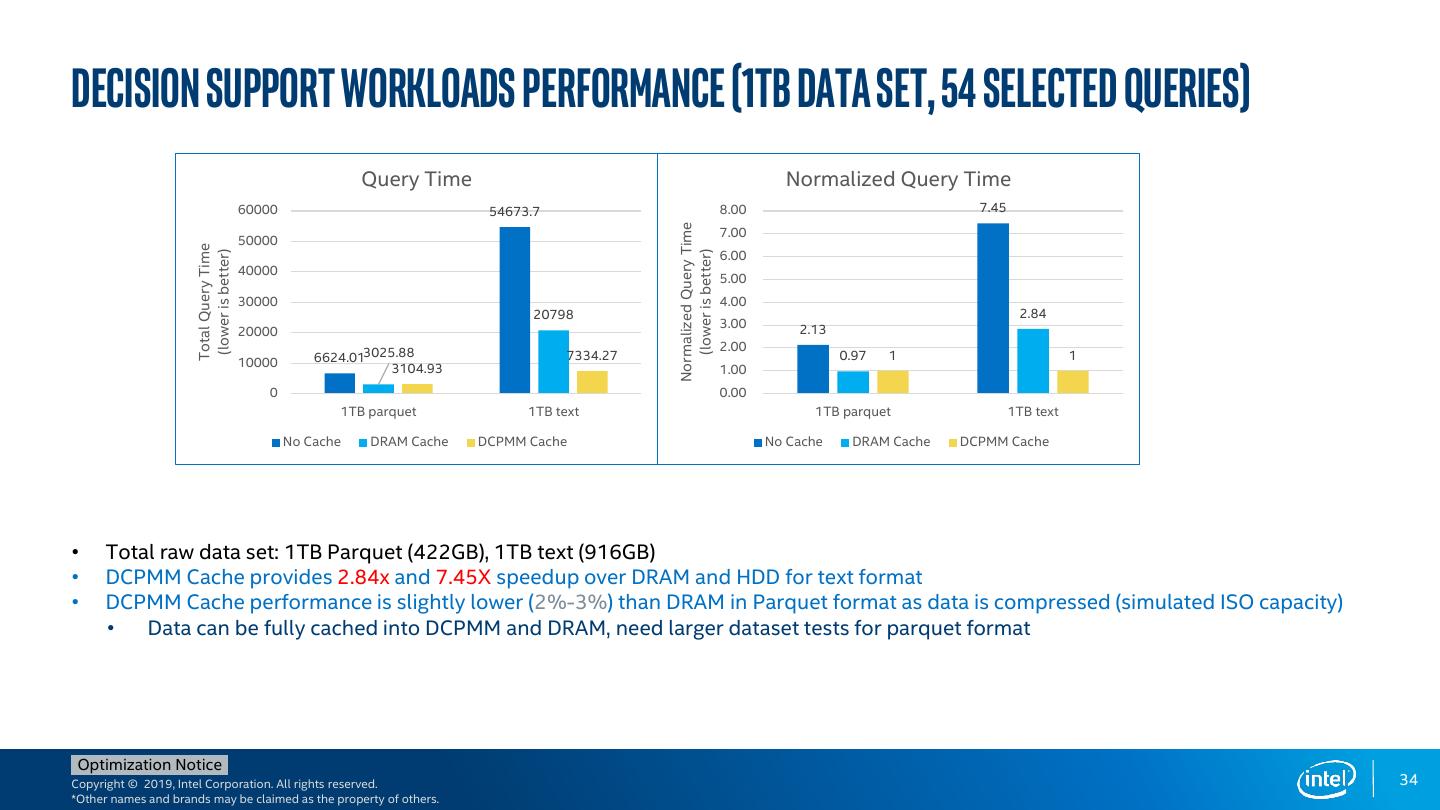

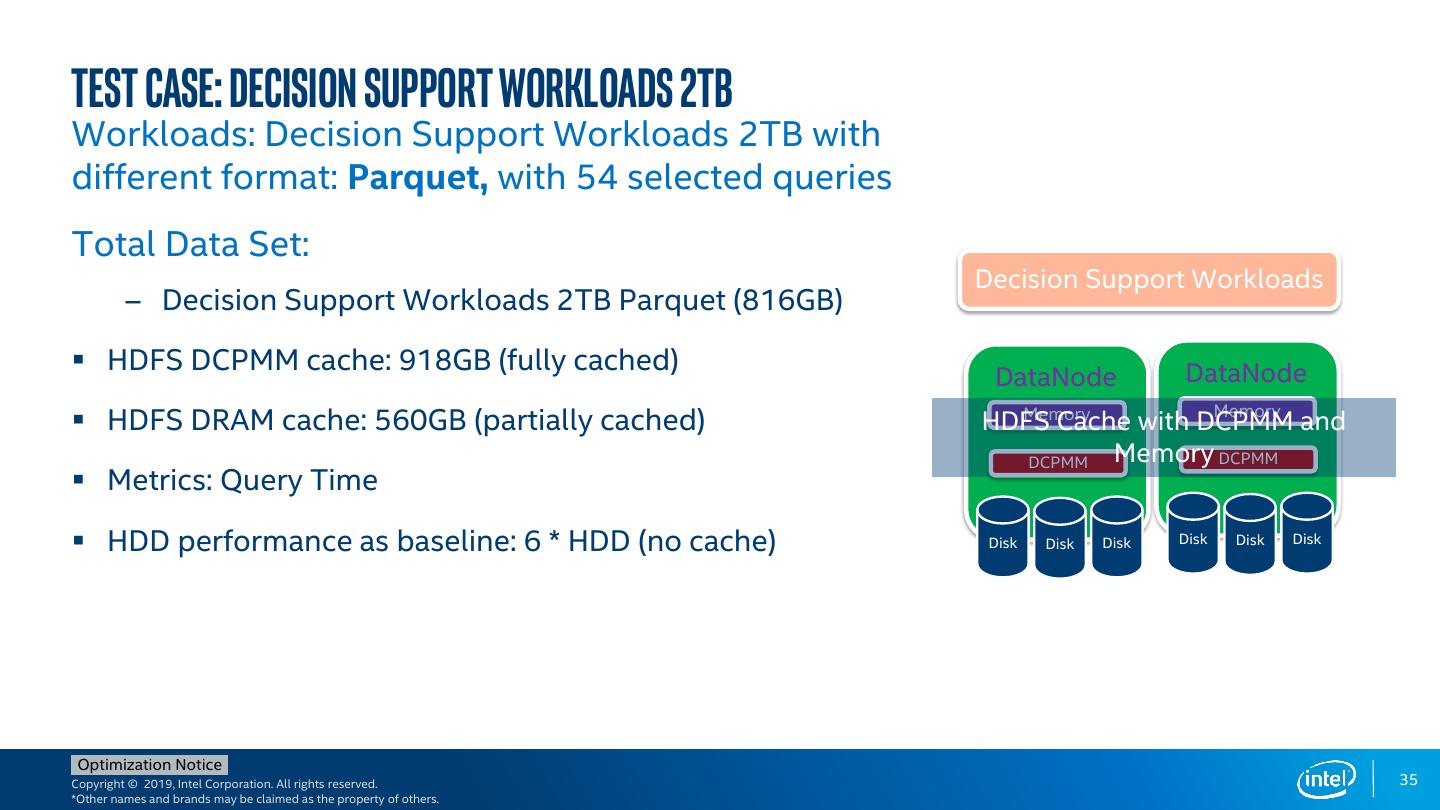

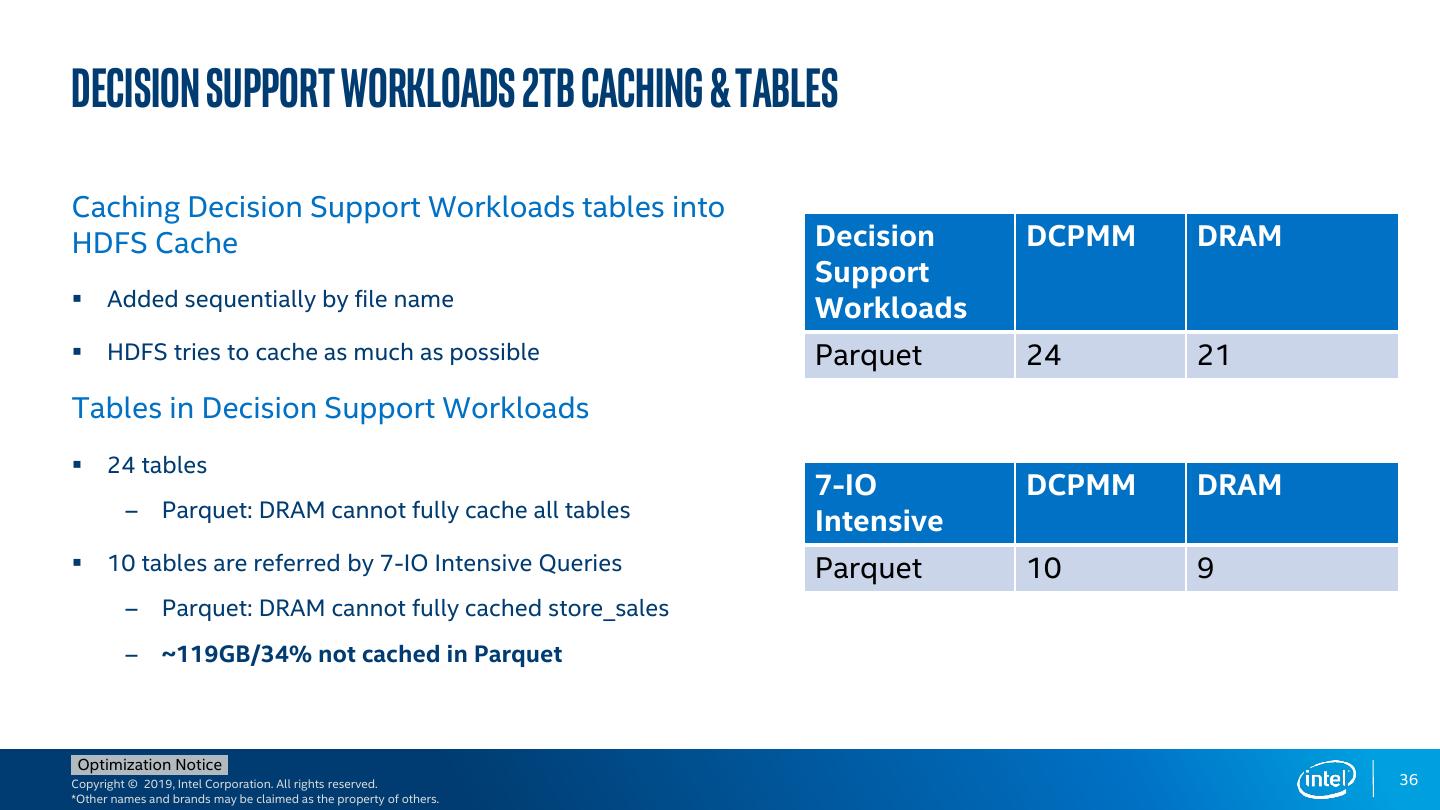

4. Executive Summary
- HDFS Cache
  - HDFS Cache is a centralized, memory-based cache management mechanism in HDFS. It provides performance and scalability benefits in many production environments.
  - HDFS cache is used to accelerate queries and other jobs where some tables or partitions are frequently accessed.
  - HDFS cache is a natural fit for DCPMM, which brings higher performance and lower cost for users already using HDFS cache.
- HDFS Cache enabling with Intel® Optane™ DCPMM
  - Patch HDFS-13762 has been merged upstream, tested and validated with industrial decision support workloads, and validated with CDH 5.16 & 6.2. It will be released in Hadoop 3.3.0.
- HDFS Cache performance with Intel® Optane™ DCPMM (HDFS DCPMM Cache), ISO-cost
  - DFSIO 1TB
    - HDFS DCPMM Cache delivers 11.02X (random) and 16.64X (sequential) speedup compared to no cache (HDD).
    - HDFS DCPMM Cache delivers 3.16X (random) and 6.09X (sequential) speedup compared to DRAM cache.
  - Decision Support Workloads 1TB
    - HDFS DCPMM Cache delivers 7.45X speedup compared to no cache (HDD).
    - HDFS DCPMM Cache delivers 2.84X speedup compared to DRAM cache (partial cache, Text format).
  - Decision Support Workloads 2TB
    - HDFS DCPMM Cache delivers 2.44X speedup compared to no cache (HDD) for Parquet format.
    - HDFS DCPMM Cache delivers 1.23X speedup compared to DRAM cache (partial cache, Parquet).

5. Agenda
- HDFS and HDFS Cache introduction
- Intel® Optane™ DCPMM introduction
- HDFS DCPMM Cache design and implementation
- HDFS DCPMM Cache performance
- HDFS DCPMM Cache value proposition
- Summary

7. HDFS
HDFS is the primary distributed storage used by Hadoop applications.
- HDFS is a distributed file system that is fault tolerant, scalable and extremely easy to expand.
- HDFS is highly fault-tolerant and is designed to be deployed on commodity hardware.
- HDFS relaxes a few POSIX requirements to enable streaming access to file system data.
- HDFS provides high throughput access to application data and is suitable for applications that have large data sets.

8. HDFS Cache
- Centralized cache management in HDFS is an explicit caching mechanism that lets you specify paths to directories or files that will be cached by HDFS.
  - Avoid eviction: explicit pinning prevents frequently used data from being evicted from memory.
  - Better locality: co-locating a task with a cached block replica improves read performance.
  - Higher performance: clients can use a new, more efficient, zero-copy read API for cached blocks.
  - Better memory utilization: explicitly pinning only m of the n replicas saves memory.
- Drawbacks
  - The cache competes with computation for memory resources, which might lead to performance regression for memory-intensive workloads.
  - Usage scenarios are limited by insufficient memory capacity.
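As a rough illustration of explicit pinning, the sketch below builds the `hdfs cacheadmin` command lines an administrator might run to create a cache pool and pin a path, with `-replication` expressing the "m of n replicas" idea. The pool name `hot_tables` and the path `/warehouse/sales/2019` are hypothetical, and the helper functions are illustrative only. The commands are printed, not executed.

```python
def add_pool_cmd(pool):
    """Build the command that creates a cache pool."""
    return ["hdfs", "cacheadmin", "-addPool", pool]


def add_directive_cmd(path, pool, replication=None):
    """Build the command that pins `path` into `pool`.

    `replication` is the number of replicas to cache (the "m" in "m of n").
    """
    cmd = ["hdfs", "cacheadmin", "-addDirective", "-path", path, "-pool", pool]
    if replication is not None:
        cmd += ["-replication", str(replication)]
    return cmd


# Hypothetical pool and path, used only for illustration.
commands = [
    add_pool_cmd("hot_tables"),
    add_directive_cmd("/warehouse/sales/2019", "hot_tables", replication=2),
]

for cmd in commands:
    print(" ".join(cmd))
```

To actually run these against a cluster, each list could be passed to `subprocess.run(cmd, check=True)` on a host with the Hadoop client installed.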

9. HDFS Distributed Read Cache
(Diagram: an Admin and several DFSClients talk to the NameNode; each DataNode has memory and several disks. The numbered arrows are:)
1. The Admin sends a command to the NameNode (NN) to cache a path.
2. DataNodes send heartbeats to the NameNode.
3. The NameNode replies with cache block commands.
4. Each DataNode caches the data from disk to memory.
5. DFSClients read directly from memory (zero-copy short-circuit read).
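The five-step flow on this slide can be mimicked with a small toy model. This is only a sketch for intuition: the `NameNode` and `DataNode` classes below are hypothetical in-memory stand-ins, not Hadoop classes, and real HDFS handles replication, pools, and failure cases that are ignored here.

```python
class NameNode:
    """Toy NameNode: tracks cache directives and which blocks are cached."""

    def __init__(self):
        self.directives = []   # paths the admin asked to cache
        self.block_map = {}    # path -> list of (datanode_id, block_id)
        self.cached = set()    # (datanode_id, block_id) pairs reported as cached

    def add_file(self, path, placements):
        self.block_map[path] = placements

    def add_directive(self, path):
        # Step 1: the admin asks the NameNode to cache a path.
        self.directives.append(path)

    def heartbeat(self, datanode_id, cache_report):
        # Step 2: the DataNode heartbeat carries its cache report.
        self.cached.update((datanode_id, b) for b in cache_report)
        # Step 3: reply with the blocks this DataNode should still cache.
        return [
            block_id
            for path in self.directives
            for (dn, block_id) in self.block_map[path]
            if dn == datanode_id and (dn, block_id) not in self.cached
        ]


class DataNode:
    """Toy DataNode: a dict for disk and a dict for the memory cache."""

    def __init__(self, dn_id, disk):
        self.id = dn_id
        self.disk = disk
        self.memory = {}

    def heartbeat(self, namenode):
        for block_id in namenode.heartbeat(self.id, list(self.memory)):
            # Step 4: copy the block from disk into the memory cache.
            self.memory[block_id] = self.disk[block_id]

    def read(self, block_id):
        # Step 5: serve from memory when cached, otherwise from disk.
        if block_id in self.memory:
            return self.memory[block_id], "memory"
        return self.disk[block_id], "disk"


nn = NameNode()
dn = DataNode("dn1", {"b1": b"hello", "b2": b"world"})
nn.add_file("/tables/hot", [("dn1", "b1")])

before = dn.read("b1")        # served from disk: nothing is cached yet
nn.add_directive("/tables/hot")
dn.heartbeat(nn)
after = dn.read("b1")         # served from memory after the cache command
print(before[1], after[1])
```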

11. Intel® Optane™ DC Persistent Memory

12. Intel® Optane™ DC Persistent Memory Overview
(Diagram: a Cascade Lake CPU with two integrated memory controllers (IMC), DDR4 DRAM and a non-volatile memory pool. DIMM population shown as an example only.)
- DIMM capacity: 128, 256, 512GB
- Speed: 2666 MT/sec
- DDR4 electrical & physical
- Close to DRAM latency
- Cache line size access
- Platform capacity: 6TB (3TB per CPU)
- Flexible, usage-specific partitions:
  - Memory mode (DRAM as cache): large memory at lower cost
  - App Direct mode: low latency persistent memory; persistent data for rapid recovery
  - Storage over App Direct: fast direct-attach storage

14. HDFS DCPMM Cache
(Diagram: an Admin and DFSClients talk to the NameNode; each DataNode holds an HDFS cache backed by DRAM and DCPMM, on top of its disks.)
- HDFS Cache is a centralized, memory-based cache management mechanism in HDFS. It provides performance and scalability benefits in many production environments.
- HDFS cache is used to accelerate queries and other jobs where some tables or partitions are frequently accessed.
- DCPMM is a natural fit for HDFS Cache: it brings higher performance and lower cost for users already using HDFS cache, and it can also be used to accelerate an existing cluster by adding several new nodes with DCPMM cache.

15. HDFS Cache DCPMM enabling
(Diagram: same topology as the HDFS distributed read cache, with DCPMM added to each DataNode next to memory. The numbered arrows are:)
1. The Admin sends a command to the NameNode to cache a path.
2. DataNodes send heartbeats to the NameNode.
3. The NameNode replies with cache block commands.
4. Each DataNode caches the data from disk to DCPMM.
5. DFSClients read directly from DCPMM.

Result: a lower-cost cache with acceptable performance.

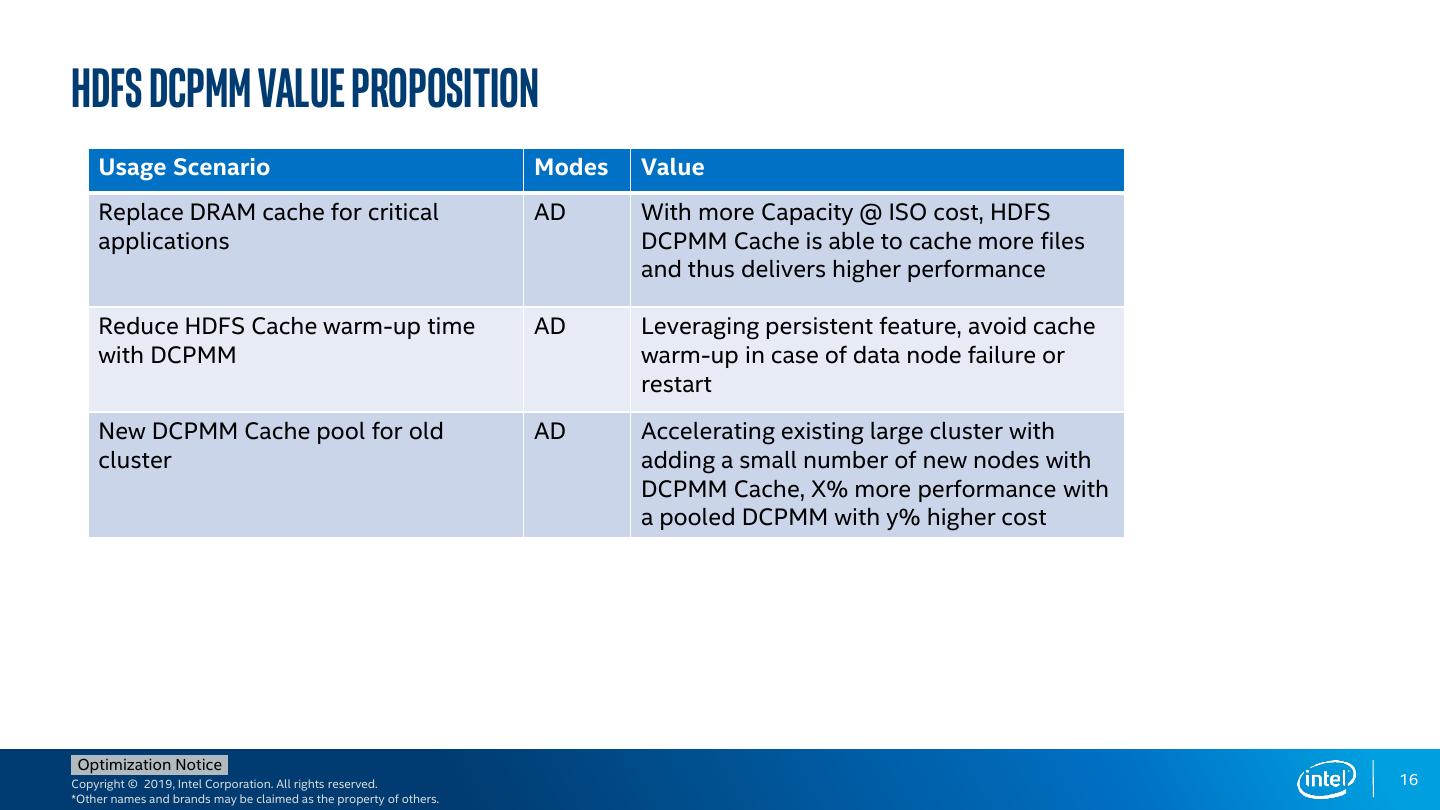

16. HDFS DCPMM Value Proposition

| Usage Scenario | Modes | Value |
| --- | --- | --- |
| Replace DRAM cache for critical HDFS applications | AD | With more capacity @ ISO cost, DCPMM Cache is able to cache more files and thus delivers higher performance |
| Reduce HDFS Cache warm-up time with DCPMM | AD | Leveraging the persistent feature, avoid cache warm-up in case of data node failure or restart |
| New DCPMM Cache pool for old cluster | AD | Accelerating an existing large cluster by adding a small number of new nodes with DCPMM Cache: X% more performance with a pooled DCPMM at y% higher cost |

17. HDFS Read Cache with DCPMM
(Architecture diagram, top to bottom:)
- Workloads: DFSIO etc., MapReduce. They get the file location from the NameNode and read the file from the DataNodes.
- HDFS Cache command: HDFS cache/uncache requests go to the NameNode.
- NameNode (Java process): keeps the cached block list and the cache directives list.
- DataNodes (Java process): send cache reports to the NameNode.
- User space: DataNodes call through JNI into PMDK (libpmem C library).
- Kernel space: DAX load through a pmem-aware file system, with MMU mapping.
- Hardware: DCPMM DIMMs holding the block files.

HDFS-13762: Support non-volatile storage class memory (SCM) in HDFS cache directives
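The key idea in this stack is that a cached block file is memory-mapped so reads become plain loads from the mapping. The sketch below shows the same pattern with Python's standard `mmap` module on an ordinary temporary file. It is an analogy only: it does not use PMDK or a DAX mount, and the file name `blk_0001` and the helper `read_block_mapped` are made up for illustration.

```python
import mmap
import os
import tempfile


def read_block_mapped(path, offset, length):
    """Map a block file read-only and copy out `length` bytes at `offset`."""
    with open(path, "rb") as f:
        # Length 0 maps the whole file; ACCESS_READ gives a read-only mapping.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
            return bytes(mapped[offset:offset + length])


with tempfile.TemporaryDirectory() as cache_dir:
    # Stand-in for a block file that would live on a pmem-aware file system.
    block_path = os.path.join(cache_dir, "blk_0001")
    with open(block_path, "wb") as f:
        f.write(b"0123456789" * 100)

    data = read_block_mapped(block_path, 10, 5)

print(data)
```

On a file system mounted with DAX, a mapping like this would be backed directly by persistent memory instead of the page cache, which is the effect the diagram's "DAX load" and "MMU mapping" layers describe.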

18. HDFS DCPMM Cache Implementations
(Flow diagram of the DataNode's response to a cache request:)
- BPServiceActor.processCommand() receives the cache command with the BlockPoolId and BlockIds.
- FsDatasetImpl.cache() passes the BlockPoolId and BlockIds to FsDatasetCache, which runs a CachingTask.
- The CachingTask branches on whether dfs.datanode.cache.pmem.dirs is configured:
  - Not configured: MemoryMappedBlock.load() caches the block to memory.
  - Configured: PmemMappedBlock.load() caches the block to DCPMM through JNI (NativeIO.c) and PMDK.
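The branch on `dfs.datanode.cache.pmem.dirs` can be sketched as a small selection function. This is a simplified model, not the Hadoop source: the function `pick_loader` is hypothetical, the configuration is a plain dict, the loader names are returned as strings, and treating the value as a comma-separated list of directories is an assumption.

```python
PMEM_DIRS_KEY = "dfs.datanode.cache.pmem.dirs"


def pick_loader(conf):
    """Return (loader_name, pmem_dirs) for a DataNode configuration dict.

    Assumption: the property holds a comma-separated list of directories.
    """
    raw = conf.get(PMEM_DIRS_KEY, "")
    pmem_dirs = [d.strip() for d in raw.split(",") if d.strip()]
    if pmem_dirs:
        # Persistent memory directories are configured: cache to DCPMM.
        return "PmemMappedBlock", pmem_dirs
    # Nothing configured: fall back to the DRAM cache.
    return "MemoryMappedBlock", []


# Hypothetical mount points, used only for illustration.
with_pmem = pick_loader({PMEM_DIRS_KEY: "/mnt/pmem0, /mnt/pmem1"})
without_pmem = pick_loader({})

print(with_pmem)
print(without_pmem)
```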

19. HDFS DCPMM Cache upstream progress

| Patch | Status |
| --- | --- |
| HDFS-14354, Refactor MappableBlock to align with the implementation of SCM cache | Merged on 15th Mar, 2019 |
| HDFS-14393, Refactor FsDatasetCache for SCM cache implementation | Merged on 28th Mar, 2019 |
| HDFS-14355, Implement HDFS cache on SCM by using pure java mapped byte buffer | Merged on 31st Mar, 2019 |
| HDFS-14401, Optimize for DCPMM Cache volume management | Merged on 8th May, 2019 |
| HDFS-14402, Use FileChannel.transferTo() method for transferring block to SCM cache | Merged on 26th May, 2019 |
| HDFS-14356, PMDK based implementation for HDFS DCPMM Cache | Merged on 5th June, 2019 |
| HDFS-14458, Report pmem stats to NameNode | Merged on 15th July, 2019 |
| HDFS-14357, Update the relevant HDFS docs for DCPMM cache | Merged on 15th July, 2019 |

- HDFS-13762: Support non-volatile storage class memory (SCM) in HDFS cache directives is merged and targeted for Hadoop 3.3.0.
- Backport to Hadoop 3.1.4 is ready; 3.2 is in progress.
- Validated with CDH 5.16.0 & 6.2.0.

21. ISO-cost memory configurations (DIMM population diagram, two CLX sockets per system)
- DCPMM 1TB 2-2-1 system: 8 x 128 GB DCPMM (1 TB) plus 12 x 16 GB DRAM
- DRAM ISO-cost system: 24 x 32 GB DRAM (768 GB)

22. Test Configuration

| | DRAM | HDD (no cache) | DCPMM 2-2-1 Config |
| --- | --- | --- | --- |
| System | CLX-2S | CLX-2S | CLX-2S |
| CPU | CLX 6240, HT on | CLX 6240, HT on | CLX 6240, HT on |
| CPU per node | 18 cores/socket, 2 sockets, 2 threads per core | 18 cores/socket, 2 sockets, 2 threads per core | 18 cores/socket, 2 sockets, 2 threads per core |
| Memory | DDR4 dual rank 768GB = 24 * 32GB | DDR4 dual rank 192GB = 12 * 16GB | DDR4 dual rank 192GB = 12 * 16GB |
| DCPMM | | | 8 * 128GB ES2 |
| Network | 10GbE | 10GbE | 10GbE |
| Storage Type | 1x SATA SSD for OS; 1TB SATA SSD for namenode; 2 P4500 for Spark Shuffle; 6x 1TB HDD on datanode | 1x SATA SSD for OS; 1TB SATA SSD for namenode; 2 P4500 for Spark Shuffle; 6x 1TB HDD on datanode | 1x SATA SSD for OS; 1TB SATA SSD for namenode; 2 P4500 for Spark Shuffle; 6x 1TB HDD on datanode |
| BIOS | SE5C620.86B.02.01.0008.031920191559 | SE5C620.86B.02.01.0008.031920191559 | SE5C620.86B.02.01.0008.031920191559 |
| OS/Hypervisor/SW | OS: Fedora 29; Java 1.8, Hadoop 3.1.2, MySQL 5.7 | OS: Fedora 29; Java 1.8, Hadoop 3.1.2, MySQL 5.7 | OS: Fedora 29; Java 1.8, Hadoop 3.1.2, MySQL 5.7 |
| WL Version | DFSIO, Decision Support Workloads | DFSIO, Decision Support Workloads | DFSIO, Decision Support Workloads |
| Input Data Set | Total data set 1TB, 2TB | Total data set 1TB, 2TB | Total data set 1TB, 2TB |
| Special Patches | HDFS/cache replication factor=2 | HDFS/cache replication factor=2 | HDFS/cache replication factor=2 |
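The memory rows of this configuration reduce to simple arithmetic. The short sketch below recomputes the per-node totals from the DIMM counts and sizes given on the slide; the dictionary layout and variable names are just one way to organize the numbers.

```python
# DIMM populations per node, taken from the test configuration slide.
configs = {
    "DRAM": {"dram": (24, 32), "dcpmm": (0, 0)},
    "HDD (no cache)": {"dram": (12, 16), "dcpmm": (0, 0)},
    "DCPMM 2-2-1": {"dram": (12, 16), "dcpmm": (8, 128)},
}

totals = {}
for name, dimms in configs.items():
    dram_count, dram_size_gb = dimms["dram"]
    pmem_count, pmem_size_gb = dimms["dcpmm"]
    totals[name] = {
        "dram_gb": dram_count * dram_size_gb,
        "dcpmm_gb": pmem_count * pmem_size_gb,
    }

for name, t in totals.items():
    print(f"{name}: {t['dram_gb']} GB DRAM, {t['dcpmm_gb']} GB DCPMM")
```

Under the ISO-cost framing of the talk, the DCPMM node trades 768 GB of DRAM for 192 GB of DRAM plus 1024 GB of persistent memory.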

24. DFSIO Read (1TB)
(Diagram: DFSIO reading from DataNodes that use HDFS Cache with DCPMM and memory, on top of disks.)
- Workloads: DFSIO random & sequential read
- Total data set: 128 * 8GB = 1024GB
- HDFS DCPMM cache: 918G (almost fully cached)
- HDFS DRAM cache: 560GB (partially cached)
- Metrics: throughput (MB/s)
- HDD performance as baseline: 6 * HDD (no cache)
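To make "almost fully cached" versus "partially cached" concrete, the sketch below computes what fraction of the 1024 GB data set each cache size covers. It uses only the sizes quoted on the slide and treats "918G" as 918 GB, which is an assumption about the unit.

```python
DATASET_GB = 128 * 8  # 128 files of 8 GB each

# Cache sizes from the slide; "918G" is assumed to mean 918 GB.
cache_gb = {"DCPMM": 918, "DRAM": 560}

coverage = {name: size / DATASET_GB for name, size in cache_gb.items()}
uncached_gb = {name: DATASET_GB - size for name, size in cache_gb.items()}

for name in cache_gb:
    print(f"{name}: {coverage[name]:.1%} cached, {uncached_gb[name]} GB left on HDD")
```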

25. DFSIO 1TB Performance

DFSIO read throughput per job in MB/s, with throughput normalized to no cache in parentheses:

| Workload | No Cache | DCPMM Cache | DRAM Cache |
| --- | --- | --- | --- |
| 128 * 8GB files (sequential) | 5.99 (1.00) | 99.68 (16.64) | 16.37 (2.73) |
| 128 * 8GB files (random) | 4.33 (1.00) | 47.71 (11.02) | 15.05 (3.48) |

- DCPMM Cache vs. DRAM Cache vs. HDD (no cache)
- HDFS DCPMM cache: 918G (partial cache); HDFS DRAM cache: 560GB (partially cached)
- 1TB: HDFS DCPMM Cache delivers up to 3.16x speedup for random read and 6.09x speedup for sequential read compared with DRAM cache in the ISO-cost configuration, and 16.64x (sequential read) and 11.02x (random read) speedup over no cache.
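The speedups quoted on this slide follow from dividing the per-job throughputs. The sketch below redoes that division. The assignment of each throughput value to a configuration is read off the flattened chart and cross-checked against the normalized values, so treat it as an inference. Computed from the rounded throughputs, the random-read ratio against DRAM comes out near 3.17, slightly above the quoted 3.16, which is most likely a rounding difference.

```python
# Per-job read throughput in MB/s (values from the chart on the slide).
throughput = {
    "sequential": {"no_cache": 5.99, "dcpmm": 99.68, "dram": 16.37},
    "random": {"no_cache": 4.33, "dcpmm": 47.71, "dram": 15.05},
}

speedups = {}
for pattern, t in throughput.items():
    speedups[pattern] = {
        "dcpmm_vs_no_cache": t["dcpmm"] / t["no_cache"],
        "dram_vs_no_cache": t["dram"] / t["no_cache"],
        "dcpmm_vs_dram": t["dcpmm"] / t["dram"],
    }

for pattern, s in speedups.items():
    print(
        f"{pattern}: DCPMM {s['dcpmm_vs_no_cache']:.2f}x and "
        f"DRAM {s['dram_vs_no_cache']:.2f}x over no cache, "
        f"DCPMM {s['dcpmm_vs_dram']:.2f}x over DRAM"
    )
```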

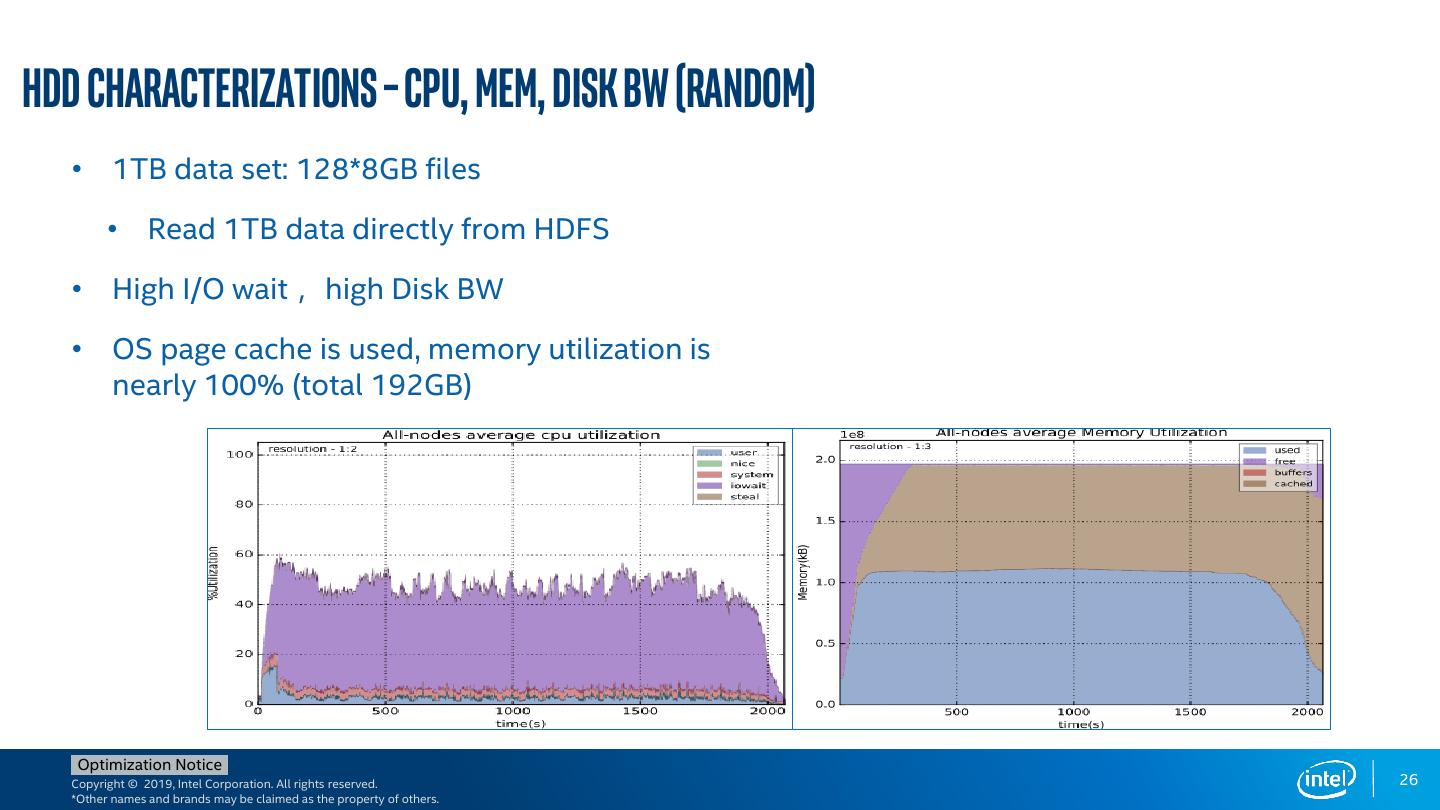

26. HDD Characterizations: CPU, Mem, Disk BW (random)
- 1TB data set: 128 * 8GB files
- Read 1TB data directly from HDFS
- High I/O wait, high disk BW
- OS page cache is used; memory utilization is nearly 100% (total 192GB)

27. DFSIO Rand Read Characterizations: Non_cache, Disk Details
- HDD is fully used (high IOPS and latency)
- Charts: disk bandwidth, disk IO latencies, disk IO requests
- HDD is the bottleneck: high IOPS & await time

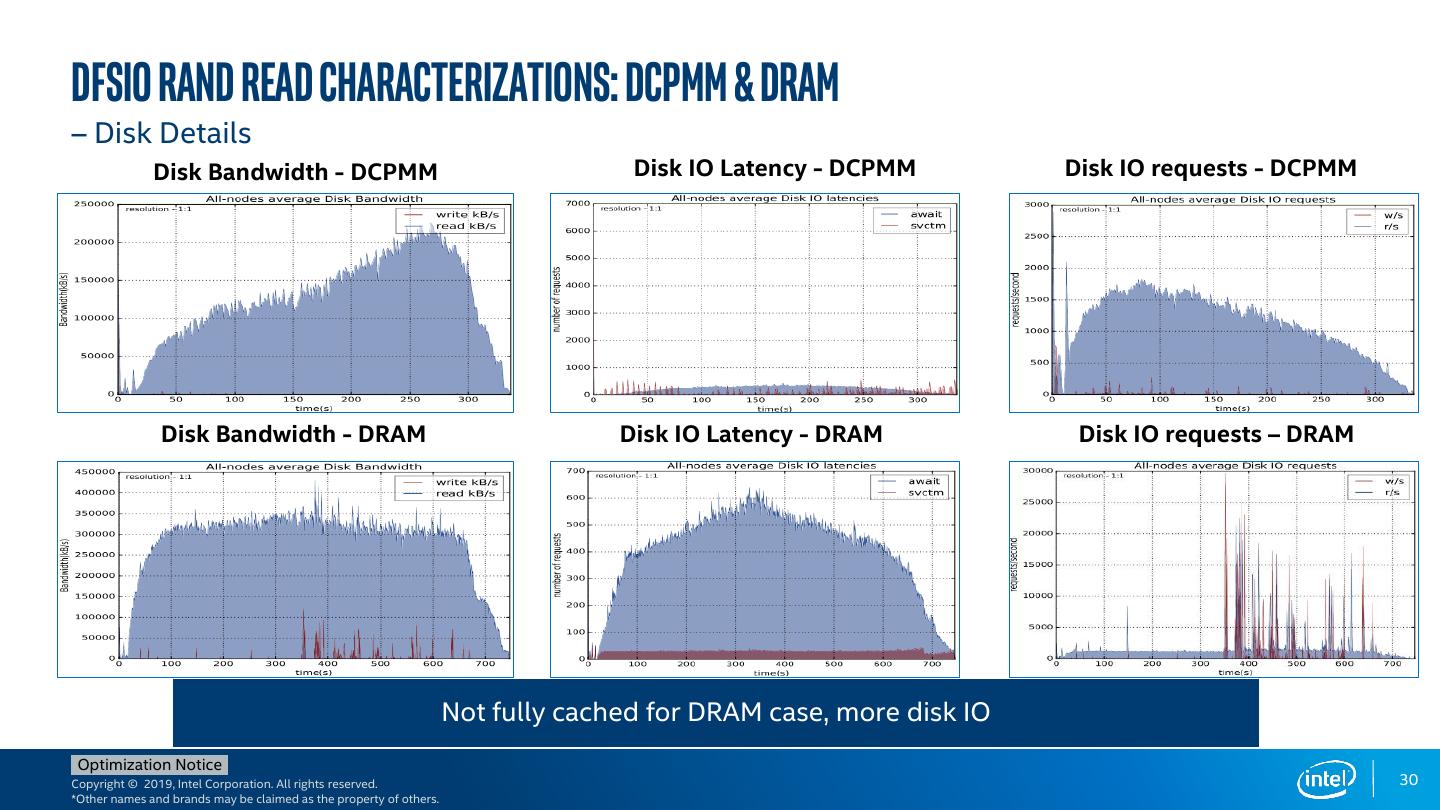

28. DFSIO Rand Read Characterizations: DCPMM & DRAM, CPU
- Significant speedup with DCPMM
  - Nearly 3/8 of the running time on the same datasets
- Higher CPU usage on DCPMM
  - Because more data is read in the same interval
- Charts: CPU utilization for DCPMM and DRAM; DCPMM bandwidth

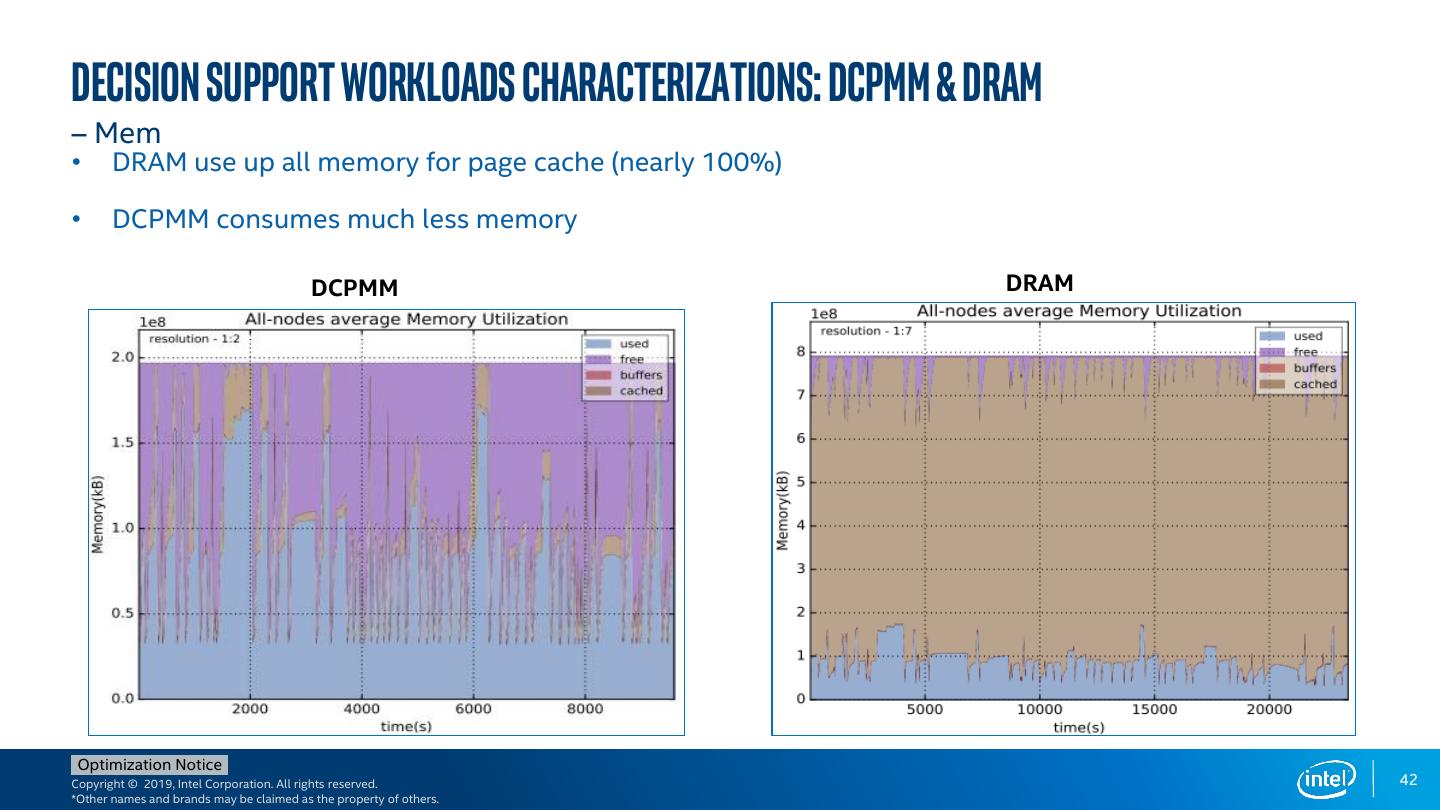

29. DFSIO Rand Read Characterizations: DCPMM & DRAM, Mem
- The DRAM configuration used up all free memory (~100GB) for page cache (caused by HDD reads)
- The DCPMM configuration used little page cache, because only a small amount of data (~80GB) could not be cached
- Charts: memory utilization for DCPMM and DRAM