gohbase: HBase Go client

1. gohbase: Pure Go HBase Client

Andrey Elenskiy • Bug Breeder at Arista Networks

2. What’s so special?

- A (sorta) fully functional driver for HBase written in Go
- Kinda based on the AsyncHBase Java client
- Fast enough
- Small and simple codebase (for now)
- No Java (not a single AbstractFactoryObserverService)

3. Top contributors (2,000 ++)

- Timoha (Andrey Elenskiy)
- tsuna (Benoit Sigoure)
- dgonyeo (Derek Gonyeo)
- CurleySamuel (Sam Curley)

4. So much failure

- HBase's feature set is huge → a bunch of small projects → bugs
- Async, wannabe lock-free architecture + handling failures = :coding_horror:
- Benchmarking is tricky
- Found some HBase issues in the process

5. Go is pretty cool, I guess

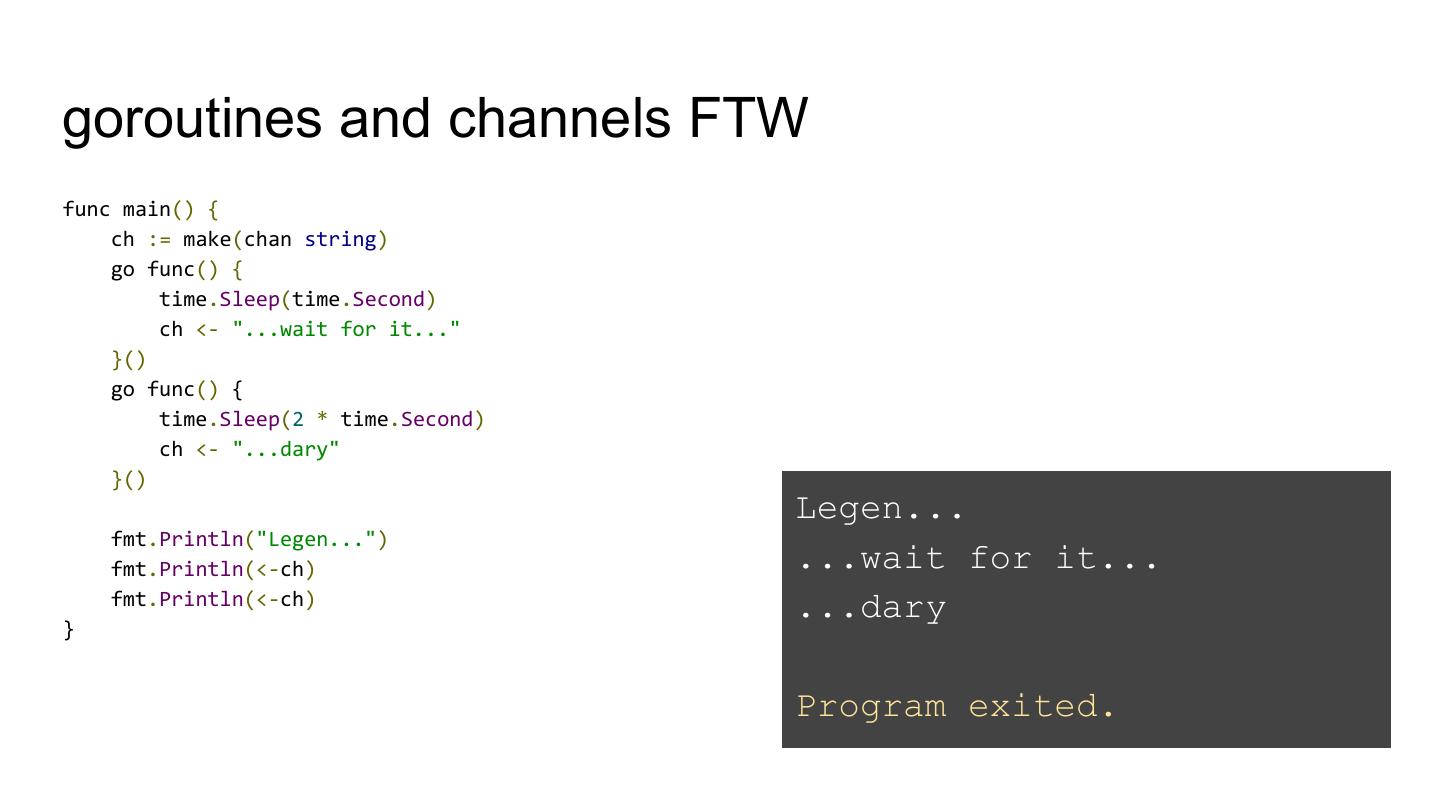

6. goroutines and channels FTW

```go
package main

import (
	"fmt"
	"time"
)

func main() {
	ch := make(chan string)
	go func() {
		time.Sleep(time.Second)
		ch <- "...wait for it..."
	}()
	go func() {
		time.Sleep(2 * time.Second)
		ch <- "...dary"
	}()
	fmt.Println("Legen...")
	fmt.Println(<-ch) // arrives after 1 s
	fmt.Println(<-ch) // arrives after 2 s
}
```

Output:

```text
Legen...
...wait for it...
...dary
```

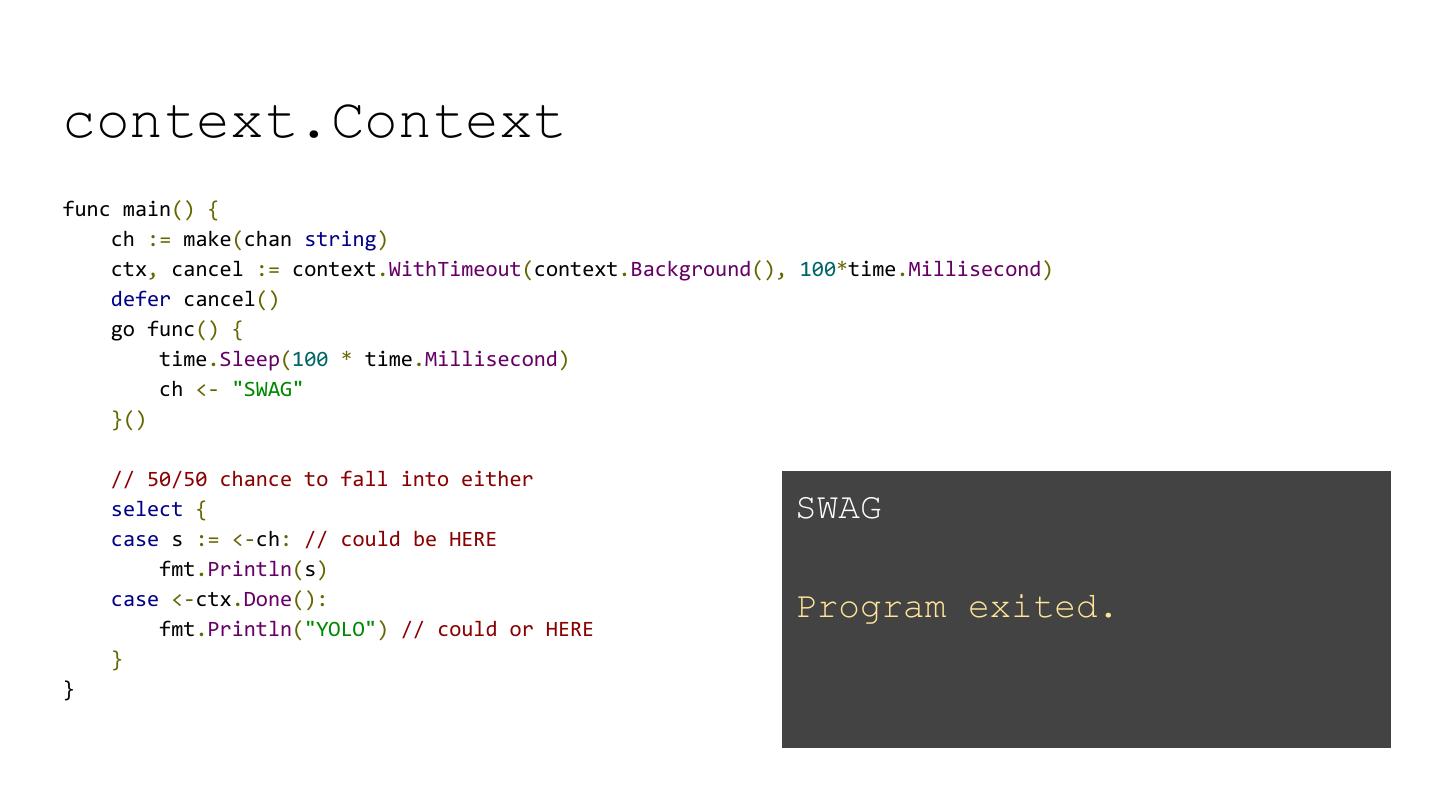

7. context.Context

```go
package main

import (
	"context"
	"fmt"
	"time"
)

func main() {
	ch := make(chan string)
	ctx, cancel := context.WithTimeout(context.Background(), 100*time.Millisecond)
	defer cancel()
	go func() {
		time.Sleep(100 * time.Millisecond)
		ch <- "SWAG"
	}()
	// 50/50 chance to fall into either case: both fire after ~100 ms
	select {
	case s := <-ch:
		fmt.Println(s) // could be HERE
	case <-ctx.Done():
		fmt.Println("YOLO") // or could be HERE
	}
}
```

Output of one run:

```text
SWAG
```

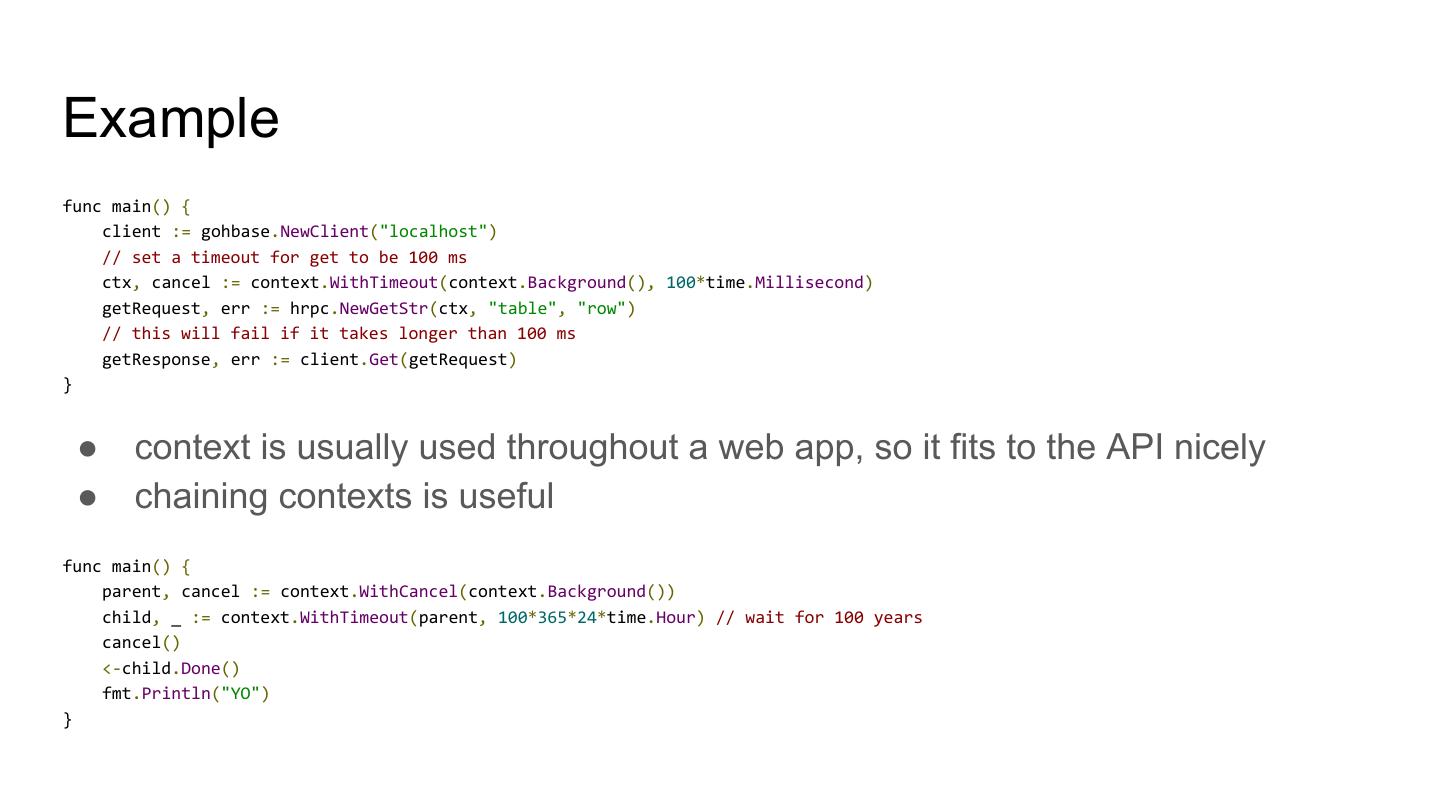

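8. Example

```go
package main

import (
	"context"
	"log"
	"time"

	"github.com/tsuna/gohbase"
	"github.com/tsuna/gohbase/hrpc"
)

func main() {
	client := gohbase.NewClient("localhost")
	// set a timeout of 100 ms for the Get
	ctx, cancel := context.WithTimeout(context.Background(), 100*time.Millisecond)
	defer cancel()
	getRequest, err := hrpc.NewGetStr(ctx, "table", "row")
	if err != nil {
		log.Fatal(err)
	}
	// this fails if the Get takes longer than 100 ms
	getResponse, err := client.Get(getRequest)
	if err != nil {
		log.Fatal(err)
	}
	log.Println(getResponse)
}
```

- context is usually passed throughout a web app, so it fits the API nicely
- Chaining contexts is useful: cancelling a parent also cancels every context derived from it

```go
package main

import (
	"context"
	"fmt"
	"time"
)

func main() {
	parent, cancel := context.WithCancel(context.Background())
	child, childCancel := context.WithTimeout(parent, 100*365*24*time.Hour) // wait for 100 years
	defer childCancel()
	cancel()
	<-child.Done() // returns immediately: the parent was cancelled
	fmt.Println("YO")
}
```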

9. Internal architecture in a nutshell

10. Case A: Normal (95%)

11. Step 1: Get the region from the B+tree

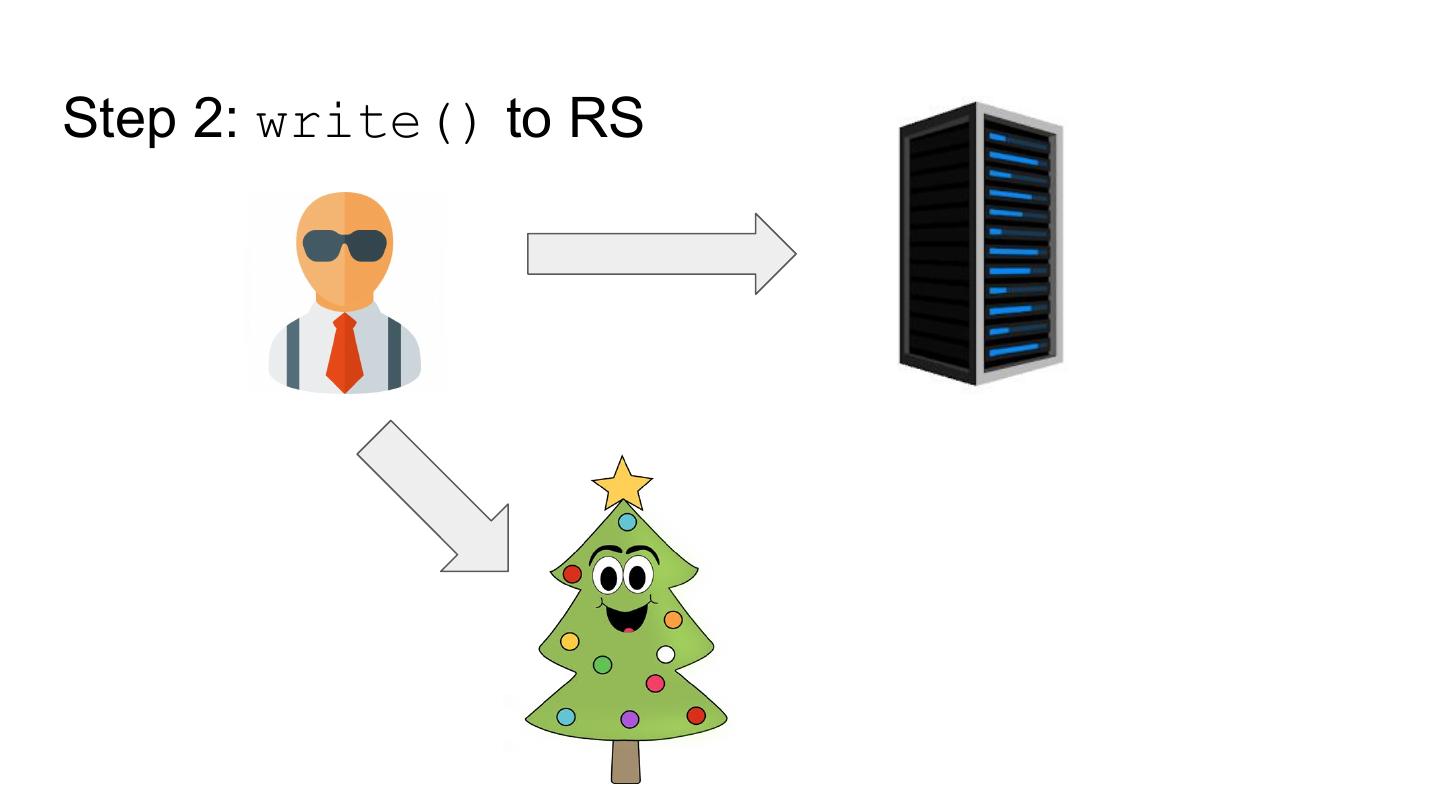
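Step 1 is a "floor" query: find the cached region with the greatest start key that is <= the RPC's row key, then check that the key is below that region's stop key. The sketch below assumes a single table and a hypothetical regionInfo type, and swaps a sorted slice with sort.Search in for the B+tree because it answers the same query in a few lines. It illustrates the lookup only and is not gohbase's actual cache code.

```go
package main

import (
	"bytes"
	"fmt"
	"sort"
)

// regionInfo is a hypothetical, trimmed-down region descriptor. It serves
// row keys in [startKey, stopKey); an empty stopKey means "to the end".
type regionInfo struct {
	name     string
	startKey []byte
	stopKey  []byte
}

// regionCache keeps one table's regions sorted by startKey. A sorted slice
// plus binary search stands in for the B+tree: both answer the same
// "greatest startKey <= key" query.
type regionCache struct {
	regions []regionInfo
}

// lookup returns the cached region whose key range contains key.
func (c *regionCache) lookup(key []byte) (regionInfo, bool) {
	// Index of the first region whose startKey is strictly greater than key.
	i := sort.Search(len(c.regions), func(i int) bool {
		return bytes.Compare(c.regions[i].startKey, key) > 0
	})
	if i == 0 {
		return regionInfo{}, false // key sorts before every cached region
	}
	r := c.regions[i-1] // greatest startKey <= key
	if len(r.stopKey) > 0 && bytes.Compare(key, r.stopKey) >= 0 {
		return regionInfo{}, false // key falls in a gap: a cache miss (Case B)
	}
	return r, true
}

func main() {
	cache := &regionCache{regions: []regionInfo{
		{name: "r1", startKey: nil, stopKey: []byte("g")},
		{name: "r2", startKey: []byte("g"), stopKey: []byte("p")},
		{name: "r3", startKey: []byte("p"), stopKey: nil},
	}}
	for _, k := range []string{"apple", "grape", "zebra"} {
		r, ok := cache.lookup([]byte(k))
		fmt.Println(k, r.name, ok)
	}
}
```

Running it prints `apple r1 true`, `grape r2 true` and `zebra r3 true`; a key that lands in a hole between cached regions returns false, which is where Case B takes over.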

12. Step 2: write() to the RegionServer (RS)

13. Step 3: receiveRPCs()

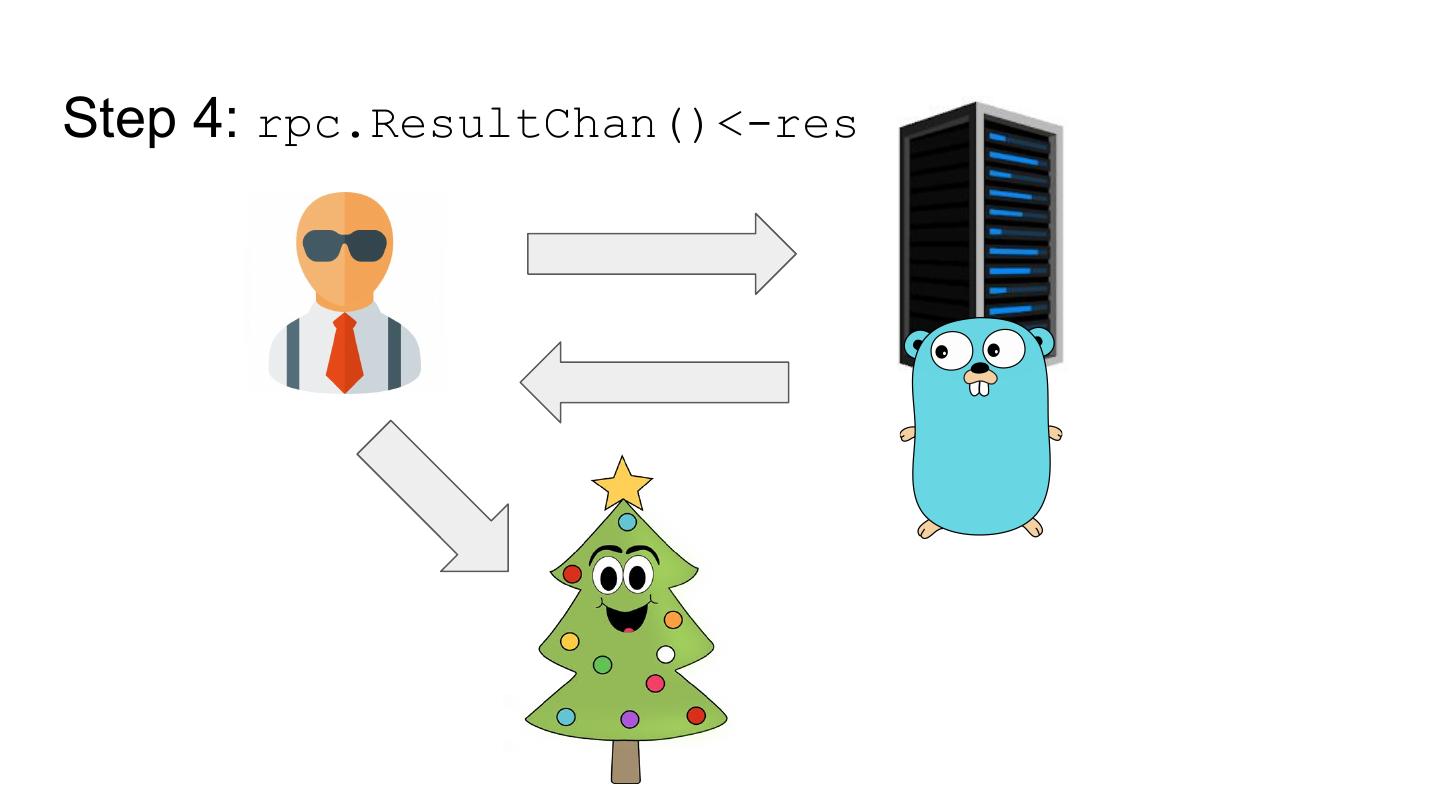

14. Step 4: rpc.ResultChan() <- res

15. Case A: Normal

- The client's goroutine writes the RPC to the RS connection
- One goroutine per RegionClient reads from the RS connection
- Asynchronous internals, synchronous API

16. Case B: Cache miss/failure

1. Go to the B+tree cache for the RPC's region
2-100. ...Magic...
101. rpc.ResultChan() <- res

17. Magic?

2. Mark the region as unavailable in the cache
3. Block all new RPCs for the region by reading on its "availability" channel

```go
package main

import (
	"fmt"
	"time"
)

func main() {
	ch := make(chan struct{})
	go func() {
		fmt.Println(time.Now(), "sleeping")
		time.Sleep(time.Second)
		close(ch) // closing wakes up every receiver at once
	}()
	<-ch // blocks until ch is closed
	fmt.Println(time.Now(), "done")
}
```

Output on the Go Playground (which uses a fixed clock):

```text
2009-11-10 23:00:00 +0000 UTC sleeping
2009-11-10 23:00:01 +0000 UTC done
```

4. Start a goroutine to re-establish the region
5. Replace all overlapping regions in the cache with the newly looked-up region
6. Connect to the RegionServer
7. Probe the region to see if it's being served
8. Close the "availability" channel to unblock RPCs and let them find the new region in the cache
9. write() to the RS

10-100. PROFIT!!!

18. How do you benchmark this stuff?

Requirements:

- No disk IO
- No network

Tried:

- Standalone
- Pseudo-distributed (MiniHBaseCluster from HBaseTestingUtility)
- Distributed on the same node with Docker
- 16-node HBase cluster

19. I want my cores

- The client side uses 70% CPU per RegionServer
- The RegionServer is chilling and not using all of its CPUs per connection
- Where's the bottleneck?

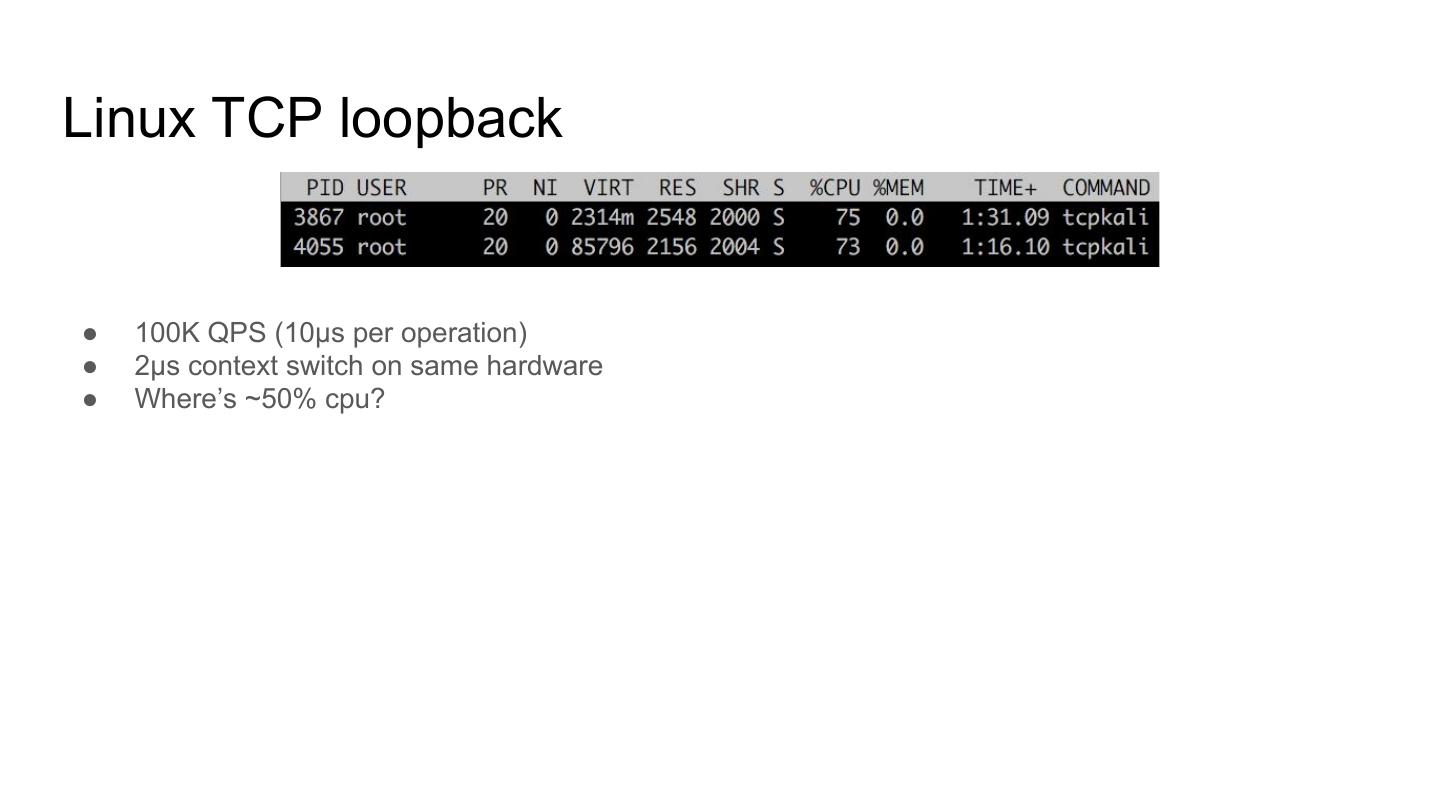

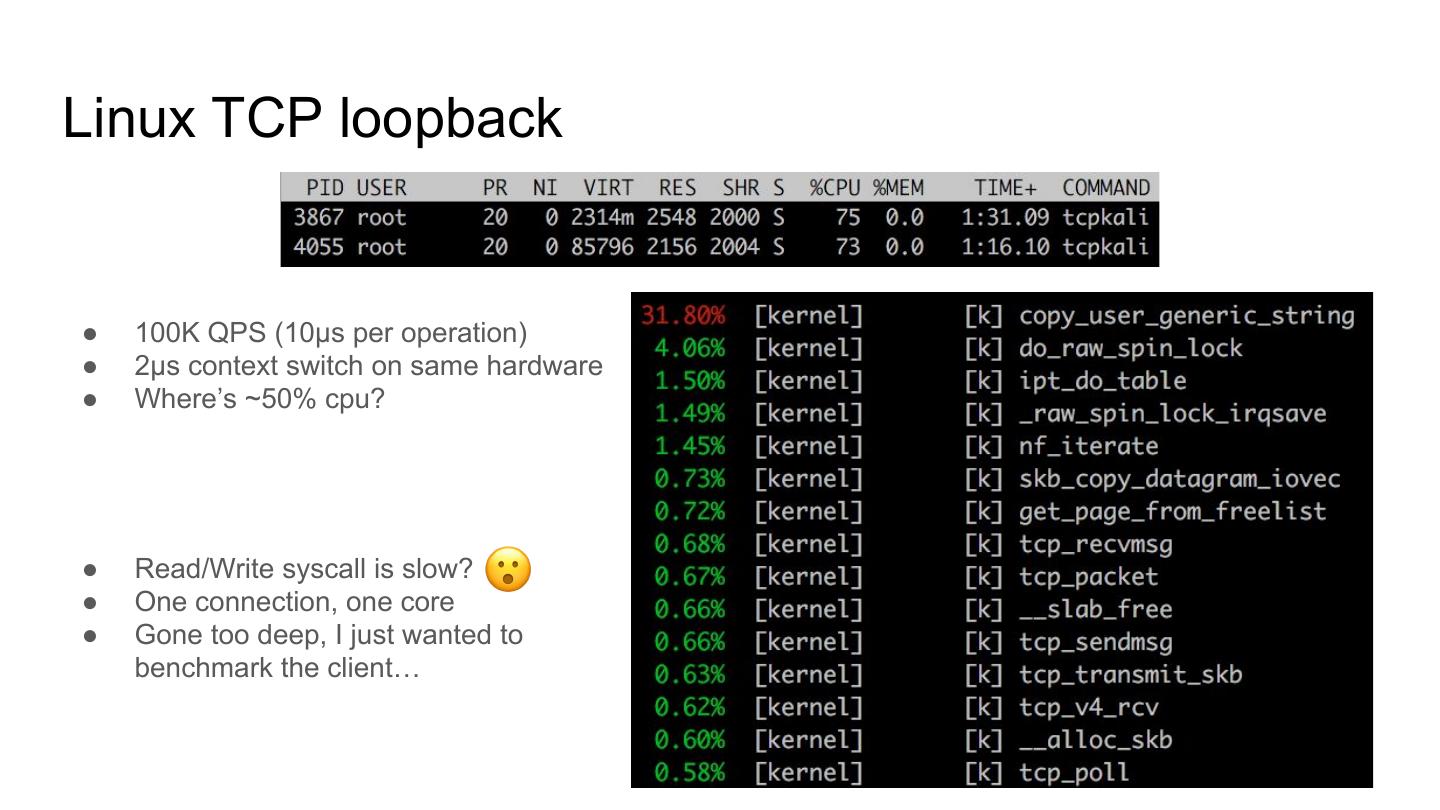

22. Linux TCP loopback

- 100K QPS (10 μs per operation)
- 2 μs context switch on the same hardware
- Where's the ~50% CPU going?
- Are read/write syscalls slow?
- One connection, one core
- Gone too deep, I just wanted to benchmark the client…
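To see what a single loopback connection can do on a given machine, a ping-pong test is a reasonable starting point: one TCP connection over 127.0.0.1, with a 1-byte message echoed back before the next one is sent. This is a hypothetical sketch, not the benchmark behind the numbers above, and results vary with kernel and hardware. Go enables TCP_NODELAY by default, so each 1-byte write goes out immediately.

```go
package main

import (
	"fmt"
	"io"
	"net"
	"time"
)

// pingPong runs n round trips of a 1-byte message over one TCP loopback
// connection and returns the total elapsed time.
func pingPong(n int) (time.Duration, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return 0, err
	}
	defer ln.Close()

	// Echo server: read one byte, write it back, until the client hangs up.
	go func() {
		conn, err := ln.Accept()
		if err != nil {
			return
		}
		defer conn.Close()
		b := make([]byte, 1)
		for {
			if _, err := io.ReadFull(conn, b); err != nil {
				return
			}
			if _, err := conn.Write(b); err != nil {
				return
			}
		}
	}()

	conn, err := net.Dial("tcp", ln.Addr().String())
	if err != nil {
		return 0, err
	}
	defer conn.Close()

	buf := []byte{0}
	start := time.Now()
	for i := 0; i < n; i++ {
		if _, err := conn.Write(buf); err != nil {
			return 0, err
		}
		if _, err := io.ReadFull(conn, buf); err != nil {
			return 0, err
		}
	}
	return time.Since(start), nil
}

func main() {
	const n = 100000
	elapsed, err := pingPong(n)
	if err != nil {
		panic(err)
	}
	fmt.Printf("%d round trips in %v: %v per op, %.0f ops/sec\n",
		n, elapsed, elapsed/n, float64(n)/elapsed.Seconds())
}
```

Dividing the elapsed time by the round-trip count gives a per-operation latency that can be compared against the 10 μs figure on the slide.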

23. fast.patch [HBASE-15594]

24 clients doing 1M random reads each against one HBase 1.3.1 RegionServer

24. fast.patch [HBASE-15594]

- With it, the same 75% / 75% CPU utilization per connection
- Without it, the RegionServer is at 100% CPU per connection, probably wasting time context switching
- More threads, more throughput
- More connections, even more throughput

25. Benchmark Results

- 30M rows with 26-byte keys and 100-byte values
- 200 regions
- 3 runs of each benchmark
- 16 RegionServers with 32 cores and 64 GB RAM
- One Arista switch ;)
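For scale, the dataset size and region layout work out as follows. This counts raw key and value bytes only and ignores HBase's per-cell overhead (column family, qualifier, timestamp); the per-RegionServer figure assumes the regions are spread evenly, which the slide does not state.

$$
\begin{aligned}
\text{raw data} &= 30 \times 10^{6}\ \text{rows} \times (26 + 100)\ \text{B/row} = 3.78 \times 10^{9}\ \text{B} \approx 3.78\ \text{GB} \\
\text{rows per region} &= \frac{30 \times 10^{6}}{200} = 1.5 \times 10^{5} \\
\text{regions per RegionServer} &= \frac{200}{16} = 12.5
\end{aligned}
$$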

26. RandomRead

- With lots of threads, gohbase is 10% faster
- And it allocates 3 times less memory

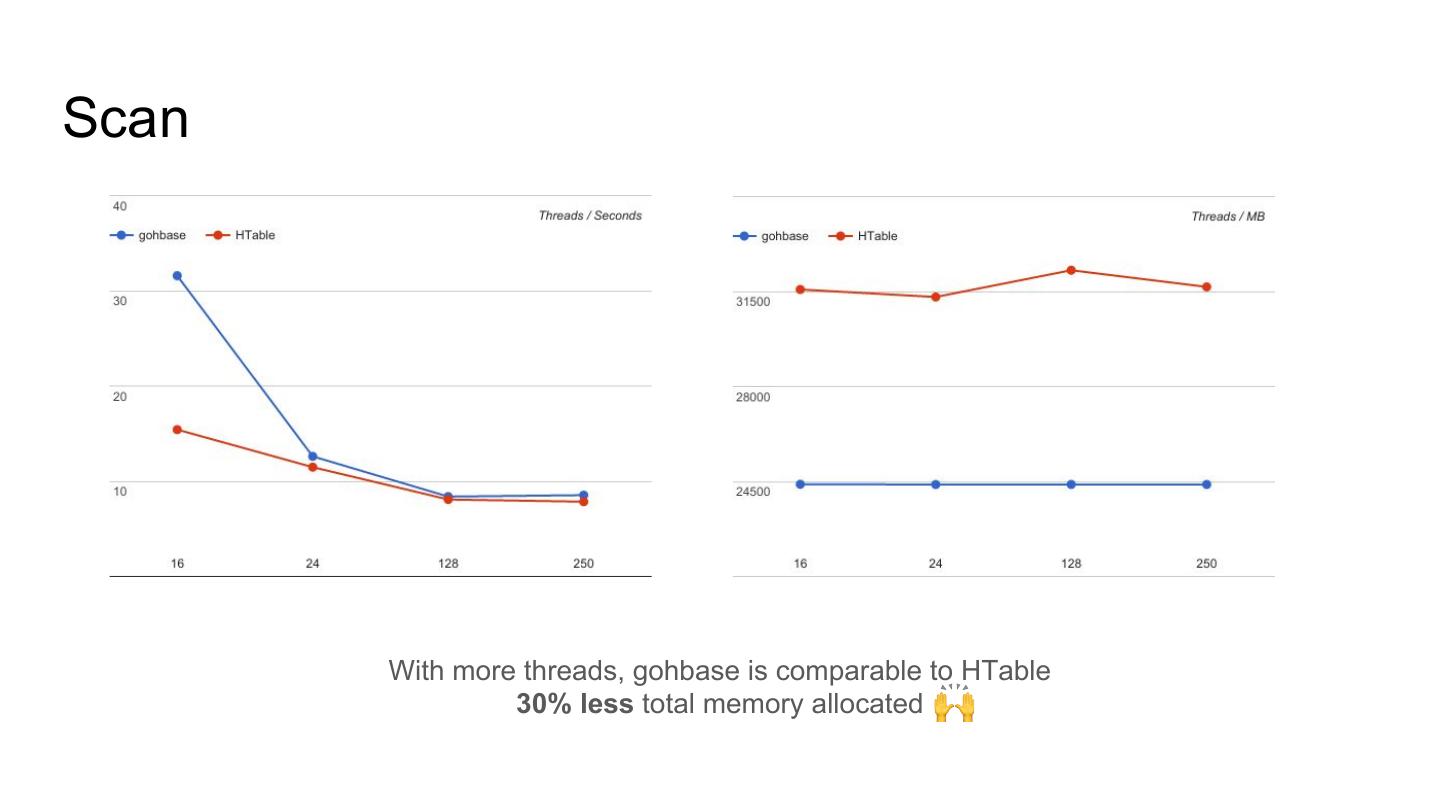

27. Scan

- With more threads, gohbase is comparable to HTable
- 30% less total memory allocated

28. RandomWrite? (gohbase only)

- Best: 250 threads, 270 s, 165,218 MB total

29. Benchmarking was “entertaining”