19_08 - CassandraTokenManagement

A talk on running Cassandra at cluster scale; Facebook has deployments with more than 1k nodes.


1. Token Management @ Scale
   Jay Zhuang - Software Engineer @ Instagram

2. About me
   - Software Engineer @ Instagram Cassandra Team
   - Cassandra Committer
   - Before:
     - Uber Cassandra Team
     - Amazon AWS

3. Overview
   1. Challenges
   2. Improvements
   3. Future Work

4. Challenges

5. Token Management & Gossip Protocol
   - Consistent Hashing Ring
   - Gossip Protocol based Token Management
     - Adding New Node
     - Decommission Existing Node
     - Replacing Down Node
     - etc.
   - Eventual Consistency
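
As a rough illustration of the consistent hashing ring mentioned on this slide, the sketch below maps a key to the node that owns the first token at or after the key's hash, wrapping around at the end of the ring. The `Ring` class, the node names, and the use of MD5 as the hash are assumptions made for illustration; this is not Cassandra's token metadata code, and Cassandra's default partitioner is Murmur3 rather than MD5.

```python
import bisect
import hashlib


def hash_key(key):
    """Hash a string key to a signed 64-bit token (MD5 used only for illustration)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big", signed=True)


class Ring:
    """Toy consistent hashing ring: a sorted token list, each token owned by one node."""

    def __init__(self):
        self._tokens = []   # sorted list of tokens
        self._owners = {}   # token -> node name

    def add_node(self, node, tokens):
        """Adding a node only inserts its tokens; other nodes' tokens stay put."""
        for token in tokens:
            if token in self._owners:
                raise ValueError(f"token {token} is already owned")
            bisect.insort(self._tokens, token)
            self._owners[token] = node

    def remove_node(self, node):
        """Decommissioning a node drops its tokens; its ranges fall to the next owner."""
        self._tokens = [t for t in self._tokens if self._owners[t] != node]
        self._owners = {t: n for t, n in self._owners.items() if n != node}

    def owner_of_token(self, token):
        """Return the node owning the first token >= `token`, wrapping around."""
        if not self._tokens:
            raise LookupError("ring is empty")
        idx = bisect.bisect_left(self._tokens, token) % len(self._tokens)
        return self._owners[self._tokens[idx]]

    def owner_of_key(self, key):
        return self.owner_of_token(hash_key(key))
```

With tokens `[0, 100]` on node `a` and `[50]` on node `b`, token 10 belongs to `b`; after `b` is removed, the same token falls through to `a`.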

6. Challenges
   - Large Number of Tokens
     - Expensive Token Operations
     - Inefficient Replicas Computation
   - Gossip Convergence Time
   - Strong Consistency

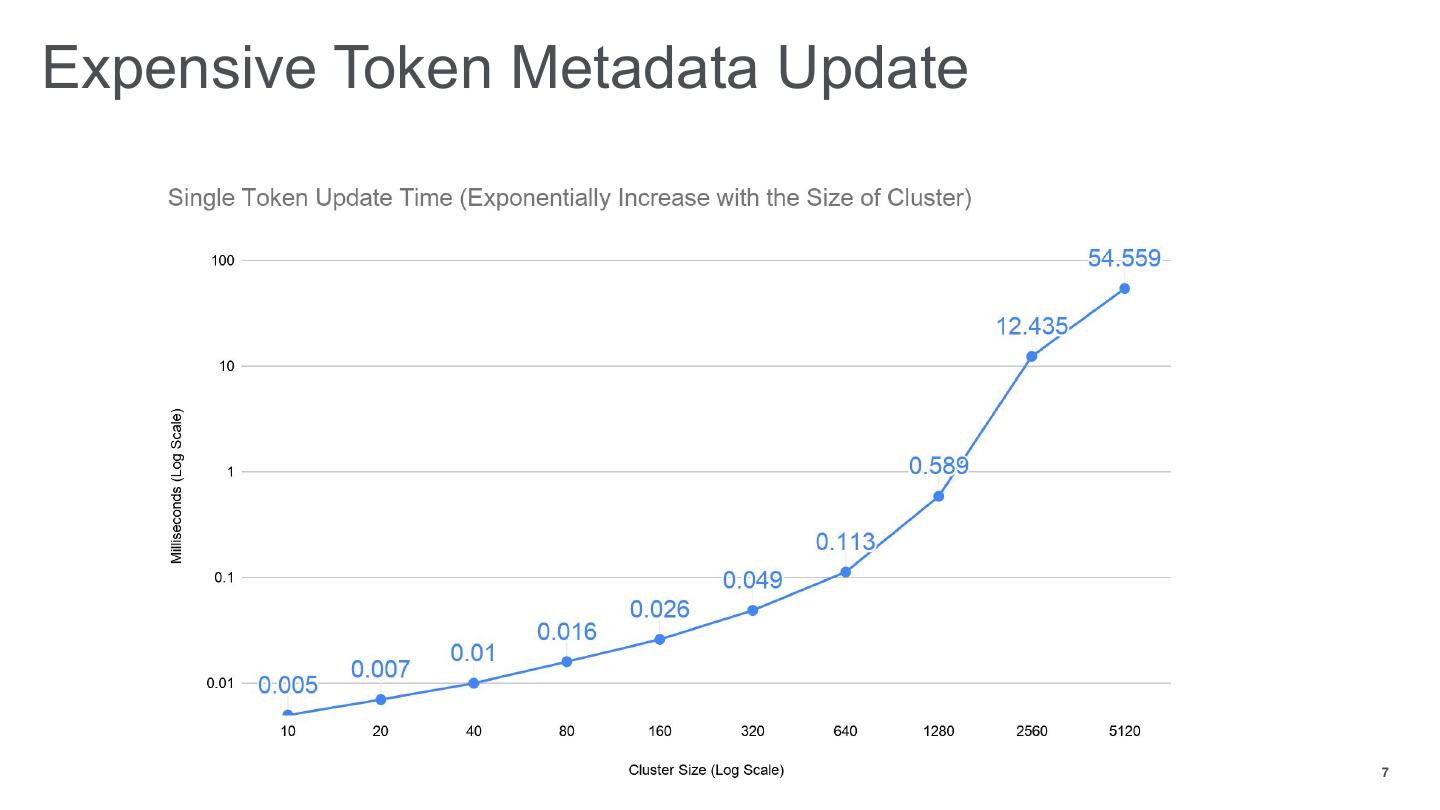

7. Expensive Token Metadata Update

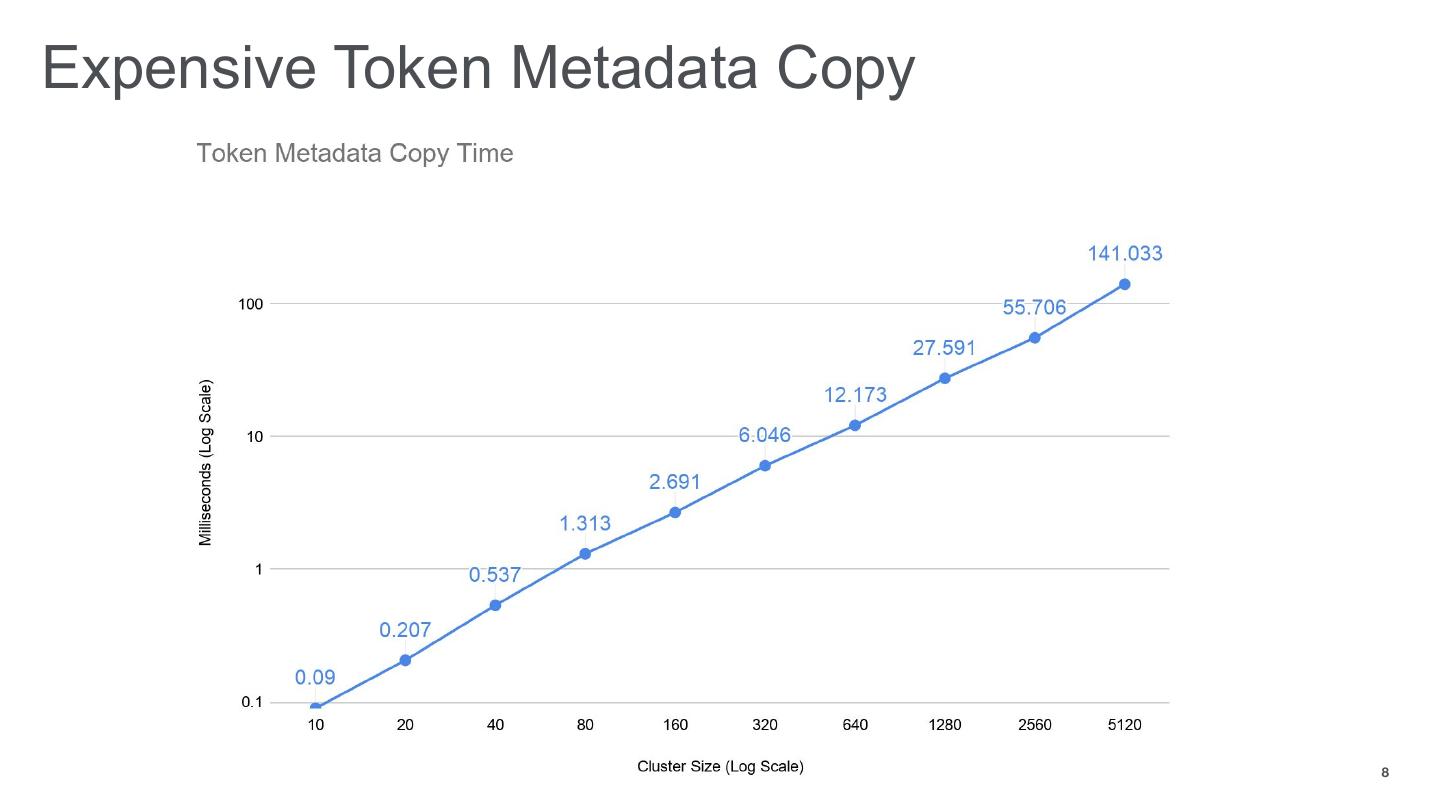

8. Expensive Token Metadata Copy

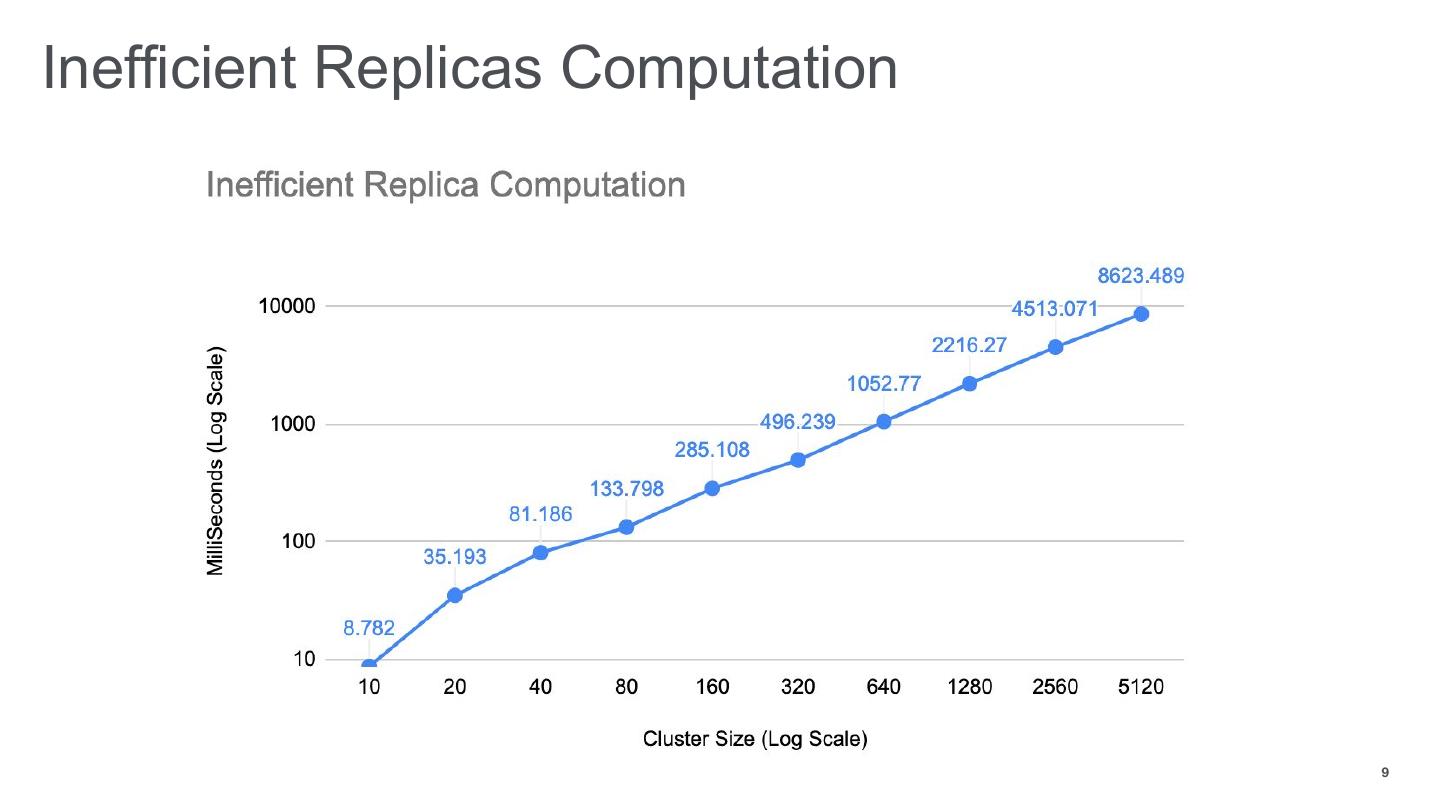

9. Inefficient Replicas Computation

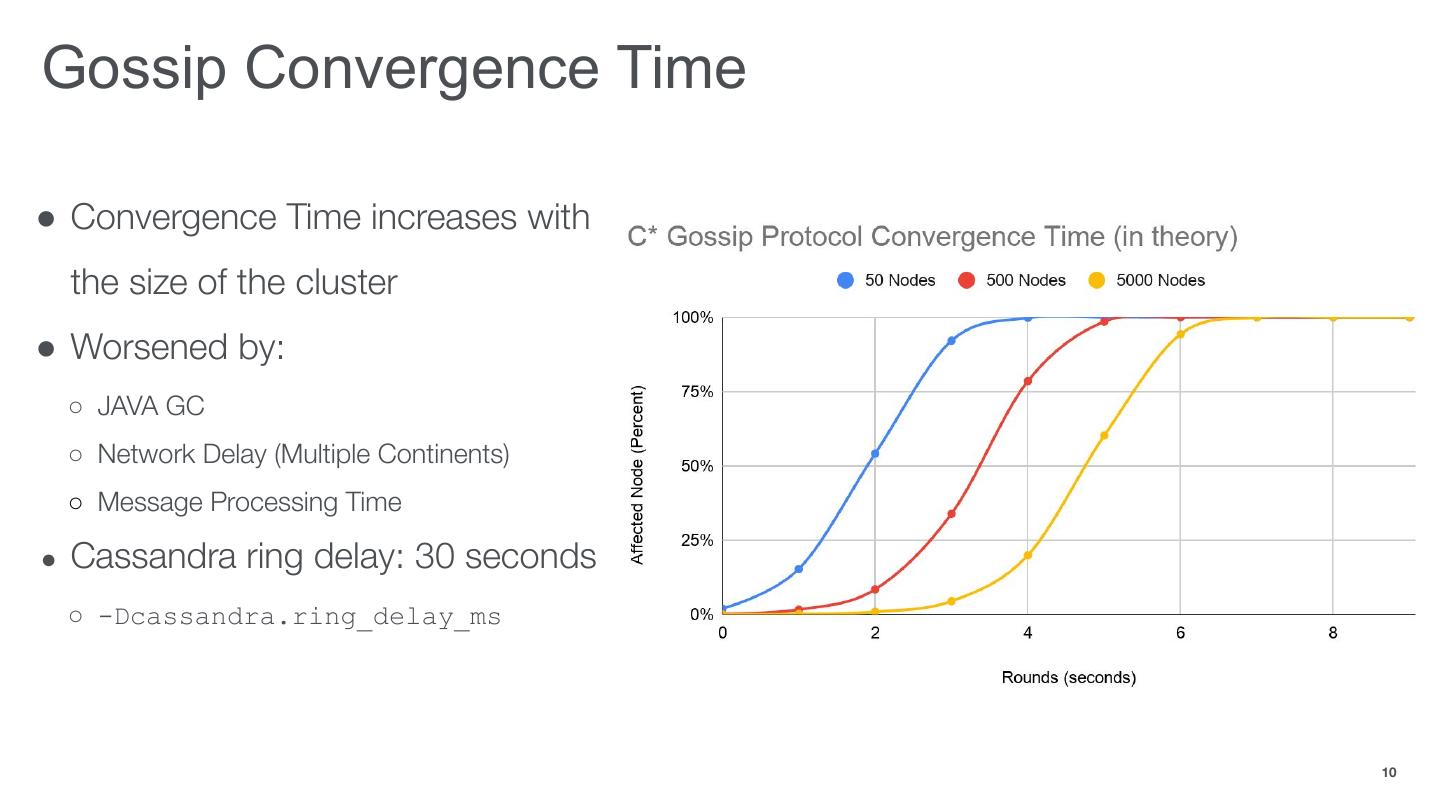

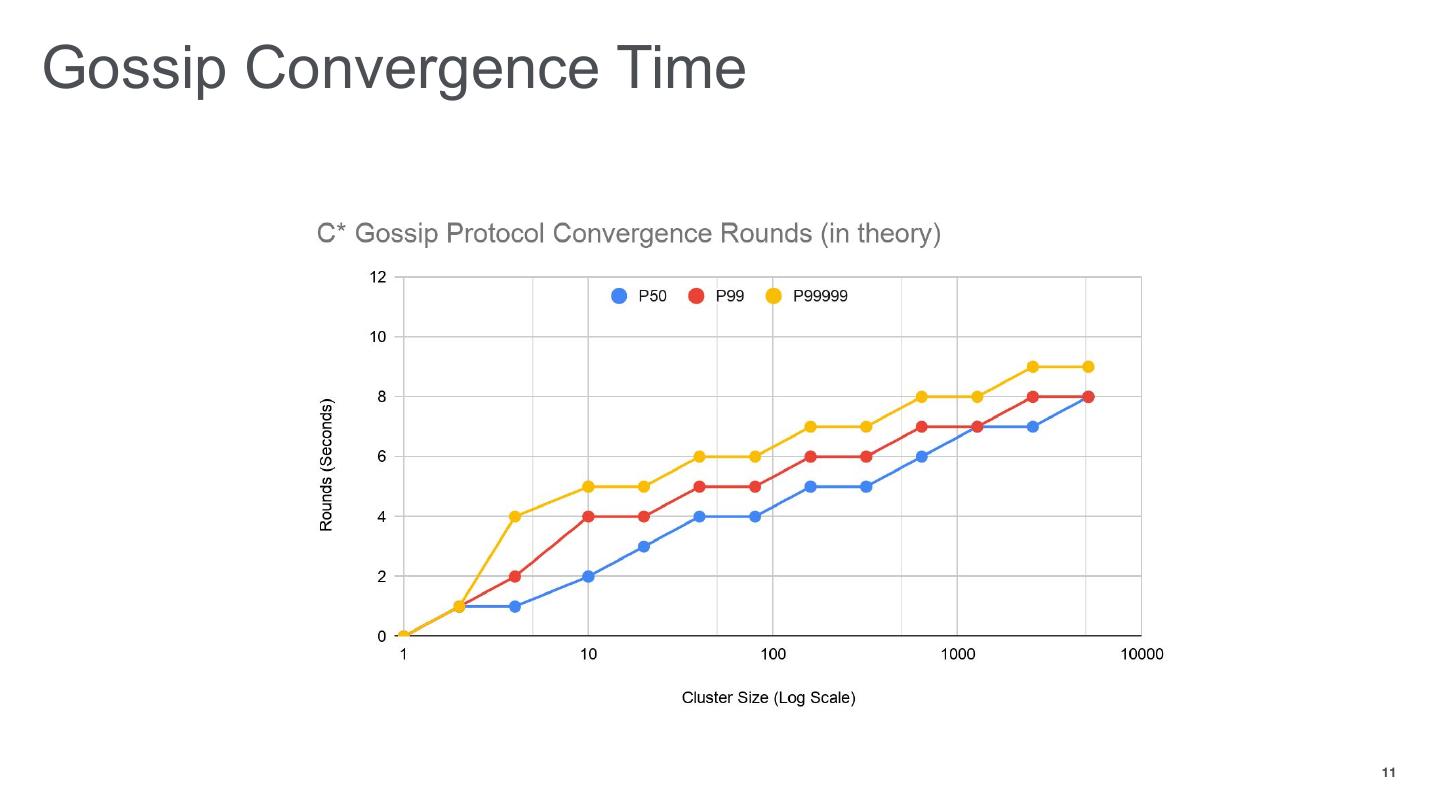

10. Gossip Convergence Time
   - Convergence time increases with the size of the cluster
   - Worsened by:
     - Java GC
     - Network delay (multiple continents)
     - Message processing time
   - Cassandra ring delay: 30 seconds
     - Tunable with `-Dcassandra.ring_delay_ms`
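
To make the first bullet concrete, here is a minimal simulation of push-style gossip: in each round every informed node tells one randomly chosen peer, and the function counts the rounds until every node has heard the update. This is a deliberately simplified model, not Cassandra's Gossiper; the function name `gossip_rounds` and the one-peer-per-round rule are assumptions for illustration. It ignores GC pauses, network delay, and message processing time, all of which the slide lists as making things worse.

```python
import random


def gossip_rounds(num_nodes, seed=0):
    """Count push-gossip rounds until all nodes know an update that starts at node 0."""
    rng = random.Random(seed)
    informed = {0}
    rounds = 0
    while len(informed) < num_nodes:
        # Every informed node pushes the update to one random peer this round.
        contacted = {rng.randrange(num_nodes) for _ in range(len(informed))}
        informed |= contacted
        rounds += 1
    return rounds


if __name__ == "__main__":
    for size in (10, 100, 1000):
        print(size, gossip_rounds(size))
```

Because the informed set can at most double per round, the round count is bounded below by log2 of the cluster size, so even this idealized model needs more rounds as the cluster grows.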

11. Gossip Convergence Time

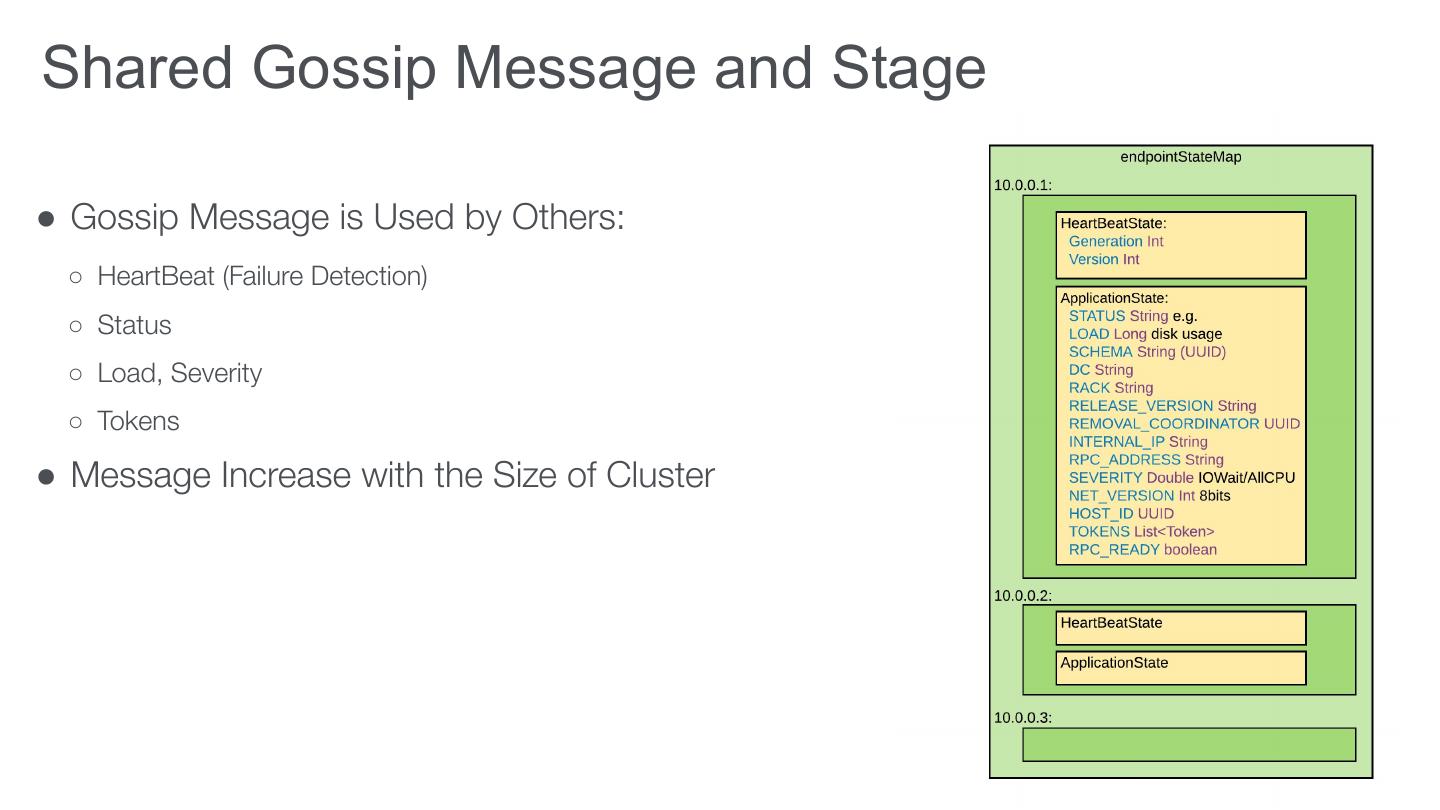

12. Shared Gossip Message and Stage
   - Gossip messages carry more than token state:
     - HeartBeat (Failure Detection)
     - Status
     - Load, Severity
     - Tokens
   - Message size increases with the size of the cluster

13. Improvements

14. More Efficient Token Update
   - Improve Token Metadata Update Speed
     - CASSANDRA-14660: Improve Token Metadata cache populating performance for large cluster
     - CASSANDRA-15097: Avoid updating unchanged gossip state
     - CASSANDRA-15098: Avoid token metadata copy during update
     - CASSANDRA-15133: Node restart causes unnecessary token metadata update
     - CASSANDRA-15290: Avoid token cache invalidation for removing proxy node
     - CASSANDRA-15291: Batch the token metadata update to improve the speed
   - Improve Token Ownership Calculation
     - CASSANDRA-15141: Faster token ownership calculation for NetworkTopologyStrategy
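
Two of the ideas listed here, skipping unchanged state and batching updates, can be sketched in a few lines. The toy `TokenMetadata` class below is hypothetical and is not the code from the tickets above; it only shows why the two ideas reduce work. An update that changes nothing leaves the derived cache alone, and a batch of many endpoint updates invalidates that cache once instead of once per endpoint.

```python
class TokenMetadata:
    """Toy token metadata store that counts how often its derived cache is invalidated."""

    def __init__(self):
        self._tokens_by_endpoint = {}   # endpoint -> frozenset of tokens
        self.cache_invalidations = 0    # stands in for expensive recomputation

    def _invalidate_cache(self):
        self.cache_invalidations += 1

    def update(self, endpoint, tokens):
        """Apply a single endpoint update; return True if anything changed."""
        return self.update_batch({endpoint: tokens})

    def update_batch(self, updates):
        """Apply many endpoint updates, invalidating the cache at most once."""
        changed = False
        for endpoint, tokens in updates.items():
            new_tokens = frozenset(tokens)
            if self._tokens_by_endpoint.get(endpoint) == new_tokens:
                continue  # unchanged state: nothing to write, nothing to invalidate
            self._tokens_by_endpoint[endpoint] = new_tokens
            changed = True
        if changed:
            self._invalidate_cache()
        return changed
```

In this sketch, replaying the same tokens for a restarted node returns `False` and leaves `cache_invalidations` untouched.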

15. Token Update Lock
   - Token metadata update is faster
   - No unnecessary token updates
   - 10x faster Cassandra startup for large clusters

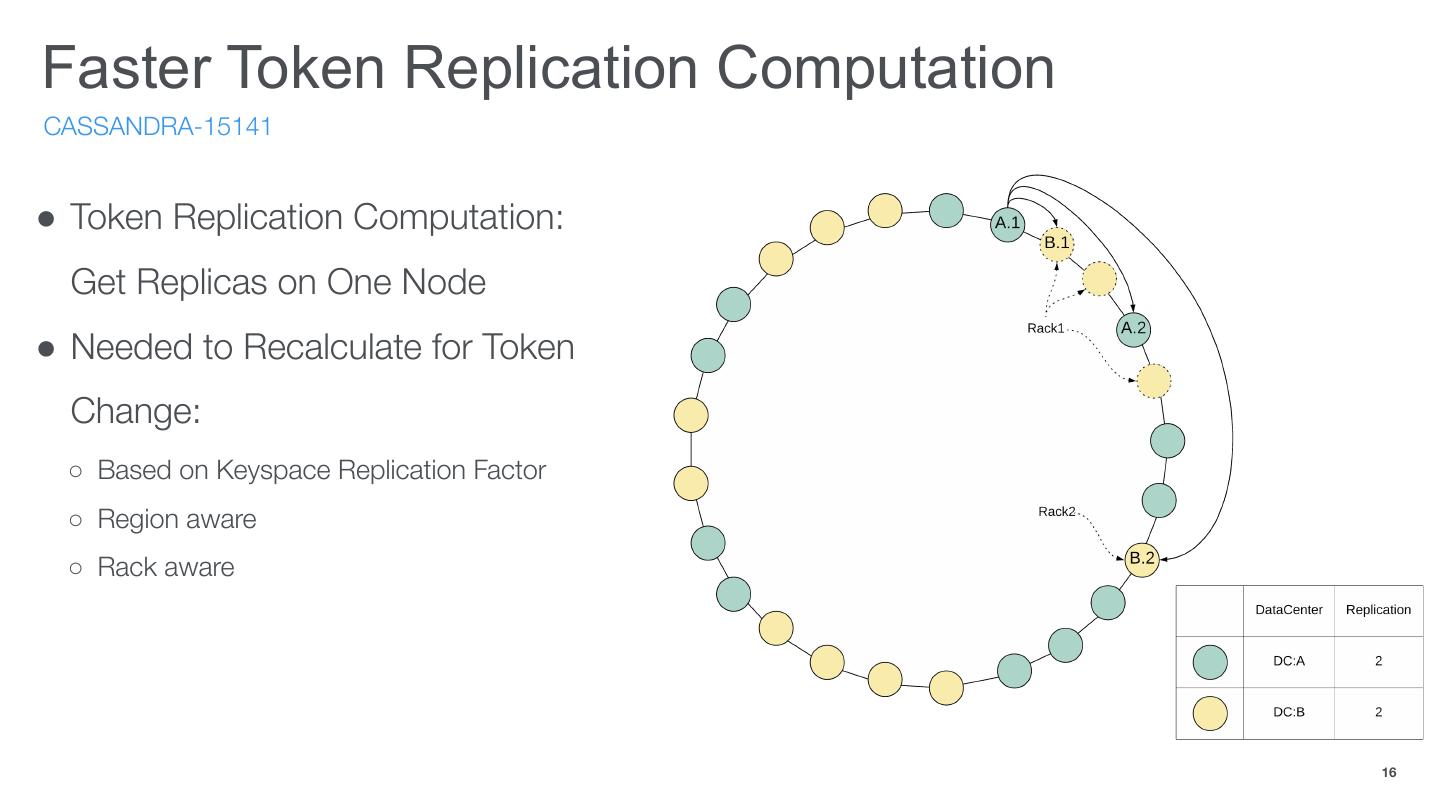

16. Faster Token Replication Computation (CASSANDRA-15141)
   - Token replication computation: get the replicas held on one node
   - Must be recalculated on every token change:
     - Based on keyspace replication factor
     - Region-aware
     - Rack-aware
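
The rack-aware part of this computation can be sketched as a clockwise walk around the ring that prefers endpoints on racks not yet used, then falls back to skipped endpoints if the replication factor is still unmet. This is a simplified, single-datacenter approximation written for illustration; the function name and data shapes are assumptions, and it is neither Cassandra's NetworkTopologyStrategy implementation nor the optimization in CASSANDRA-15141.

```python
import bisect


def replicas_for_token(ring, racks, token, rf):
    """Pick up to `rf` replicas for `token`.

    ring:  list of (token, endpoint) pairs sorted by token
    racks: dict mapping endpoint -> rack name
    """
    if not ring or rf <= 0:
        return []
    tokens = [t for t, _ in ring]
    start = bisect.bisect_left(tokens, token) % len(ring)

    replicas, seen_racks, skipped = [], set(), []
    for i in range(len(ring)):
        if len(replicas) >= rf:
            break
        endpoint = ring[(start + i) % len(ring)][1]
        if endpoint in replicas or endpoint in skipped:
            continue  # an endpoint with several tokens is considered only once
        if racks[endpoint] not in seen_racks:
            replicas.append(endpoint)
            seen_racks.add(racks[endpoint])
        else:
            skipped.append(endpoint)  # same rack as an earlier replica

    # Not enough distinct racks: fill from skipped endpoints in ring order.
    for endpoint in skipped:
        if len(replicas) >= rf:
            break
        replicas.append(endpoint)
    return replicas
```

Each call walks the ring linearly, so repeating it for every token after every ring change grows quickly with the number of tokens, which is in line with the cost the slides describe.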

17. Replication Computation Time

18. Future Work

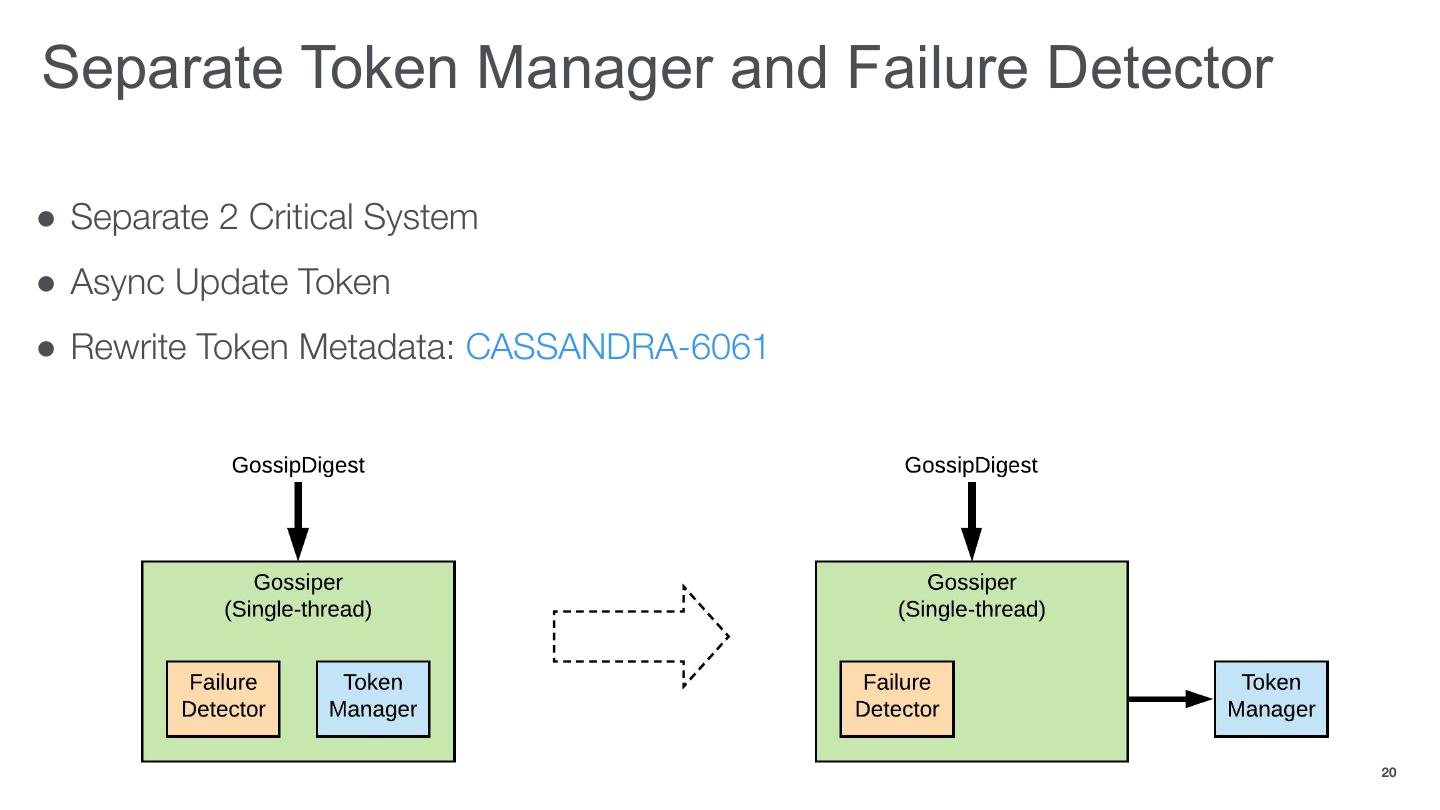

19. Future Work
   - Separate Token Manager and Failure Detector
   - Consistent Token Management
   - Pre-Allocate Tokens
   - Pluggable Token Management

20. Separate Token Manager and Failure Detector
   - Separate two critical systems
   - Update tokens asynchronously
   - Rewrite Token Metadata: CASSANDRA-6061

21. Consistent Token Management
   - CASSANDRA-9667: Strongly Consistent Membership and Ownership
     - A token change can only be committed if a majority of nodes agree (with a consensus transaction such as Paxos)
     - Stable and Consistent Membership at Scale with Rapid
   - Verify data ownership on the local read/write path
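
The majority rule in the first bullet comes down to a simple acceptance check, sketched below. This shows only the quorum arithmetic, not a consensus protocol: a real implementation such as Paxos also has to handle competing proposals, retries, and node failures. The function names and the vote dictionary are hypothetical.

```python
def majority(cluster_size):
    """Smallest number of nodes that forms a strict majority."""
    return cluster_size // 2 + 1


def can_commit_token_change(votes):
    """Return True if a strict majority of nodes accepted the proposed change.

    votes: dict mapping node name -> True (accept) or False (reject / no answer)
    """
    accepted = sum(1 for vote in votes.values() if vote)
    return accepted >= majority(len(votes))
```

For a five-node cluster the change needs three acceptances; an even split in a four-node cluster is not enough.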

22. Pre-Allocate Tokens
   - Generate fixed tokens for N nodes (even before cluster creation)
   - Even better for replication-aware token allocation, which suffers from token imbalance if a node is decommissioned at random
   - Other token computations could also be pre-computed
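
One simple way to picture pre-allocation is to space tokens evenly over the signed 64-bit range used by the Murmur3 partitioner and deal them out to nodes round-robin, before any node exists. The sketch below assumes exactly that; the function name, the integer node ids, and the even-spacing policy are illustrative choices, not the allocation scheme proposed in the talk, and the sketch does not attempt to be replication-aware.

```python
MIN_TOKEN = -(2 ** 63)   # lowest token in the signed 64-bit range
RING_SIZE = 2 ** 64      # number of positions on the ring


def preallocate_tokens(num_nodes, tokens_per_node):
    """Return {node_id: [tokens]} with tokens evenly spaced and interleaved across nodes."""
    total = num_nodes * tokens_per_node
    if num_nodes <= 0 or tokens_per_node <= 0:
        raise ValueError("num_nodes and tokens_per_node must be positive")
    step = RING_SIZE // total
    allocation = {node: [] for node in range(num_nodes)}
    for i in range(total):
        # Interleave so that neighbouring ranges belong to different nodes.
        allocation[i % num_nodes].append(MIN_TOKEN + i * step)
    return allocation
```

Since the table depends only on the two counts, it can be computed once and stored, and a node joining later just looks up its row.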

23. Pluggable Token Management
   - Integrate with Other Consistent Shard Management System:
     - ZooKeeper
     - ShardManager

24. Summary
   1. Challenges
   2. Improvements
   3. Future Work
