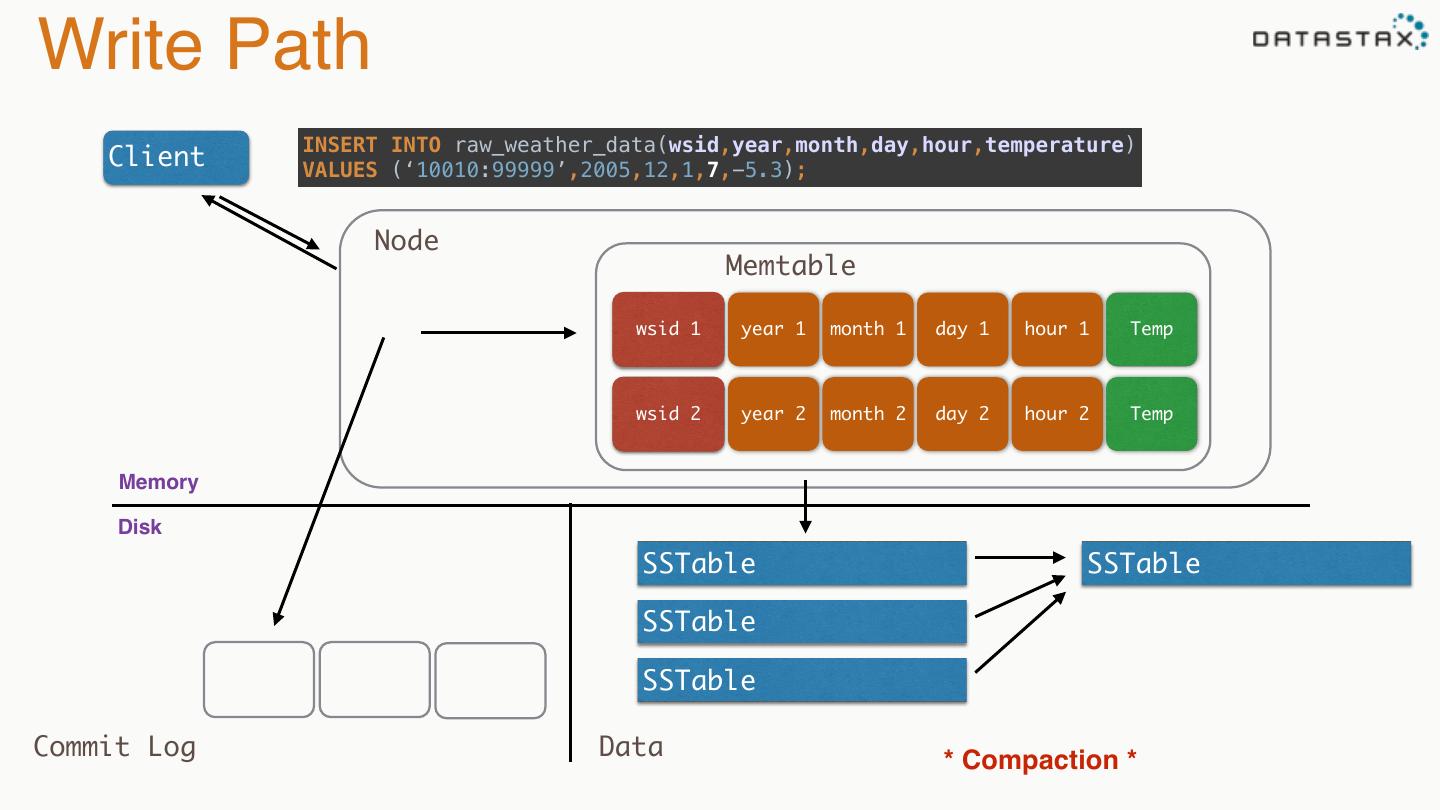

16/06 Introduction to Cassandra Architecture

1 .Intro to Cassandra Architecture

Nick Bailey, @nickmbailey

2 .4.1 Cassandra - Introduction

3 .Why does Cassandra Exist?

4 .Dynamo Paper (2007)

- How do we build a data store that is:
  - Reliable
  - Performant
  - “Always On”
- Nothing new and shiny
- 24 papers cited

Also the basis for Riak and Voldemort

5 .BigTable (2006)

- Richer data model
- 1 key, lots of values
- Fast sequential access
- 38 papers cited

6 .Cassandra (2008)

- Distributed features of Dynamo
- Data model and storage from BigTable
- Graduated to a top-level Apache project on February 17, 2010

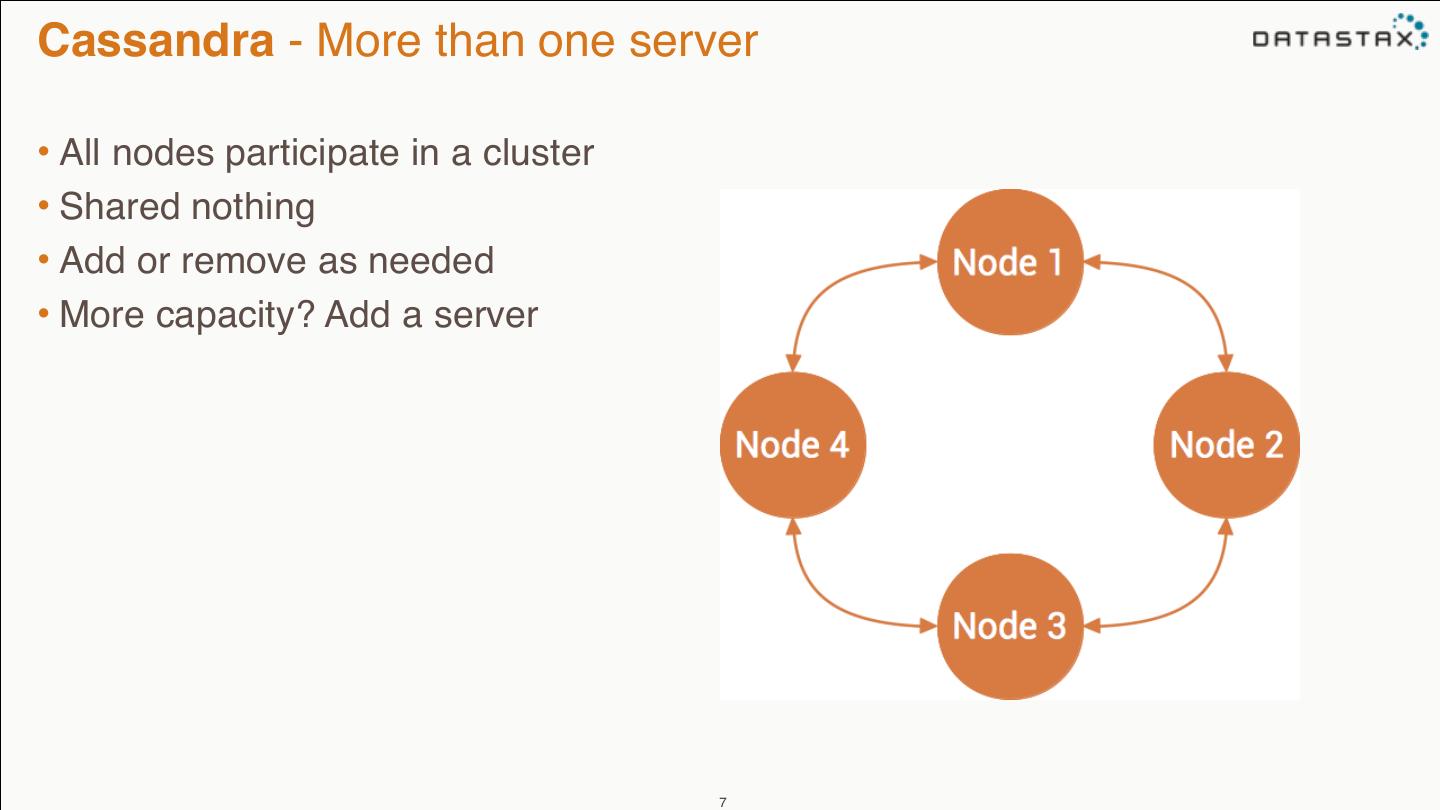

7 .Cassandra - More than one server

- All nodes participate in a cluster
- Shared nothing
- Add or remove as needed
- More capacity? Add a server

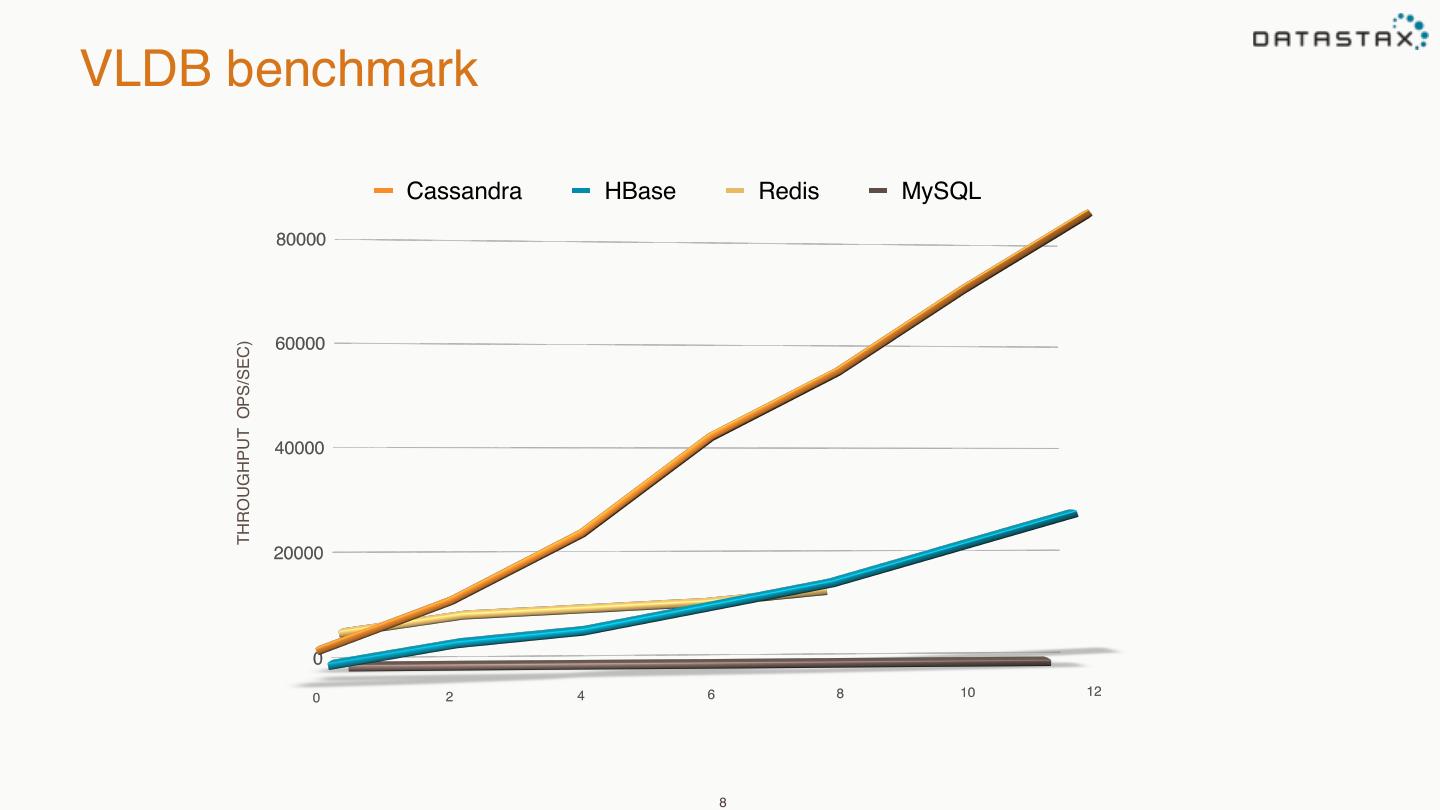

8 .VLDB benchmark

[Chart: throughput (ops/sec) for Cassandra, HBase, Redis, and MySQL]

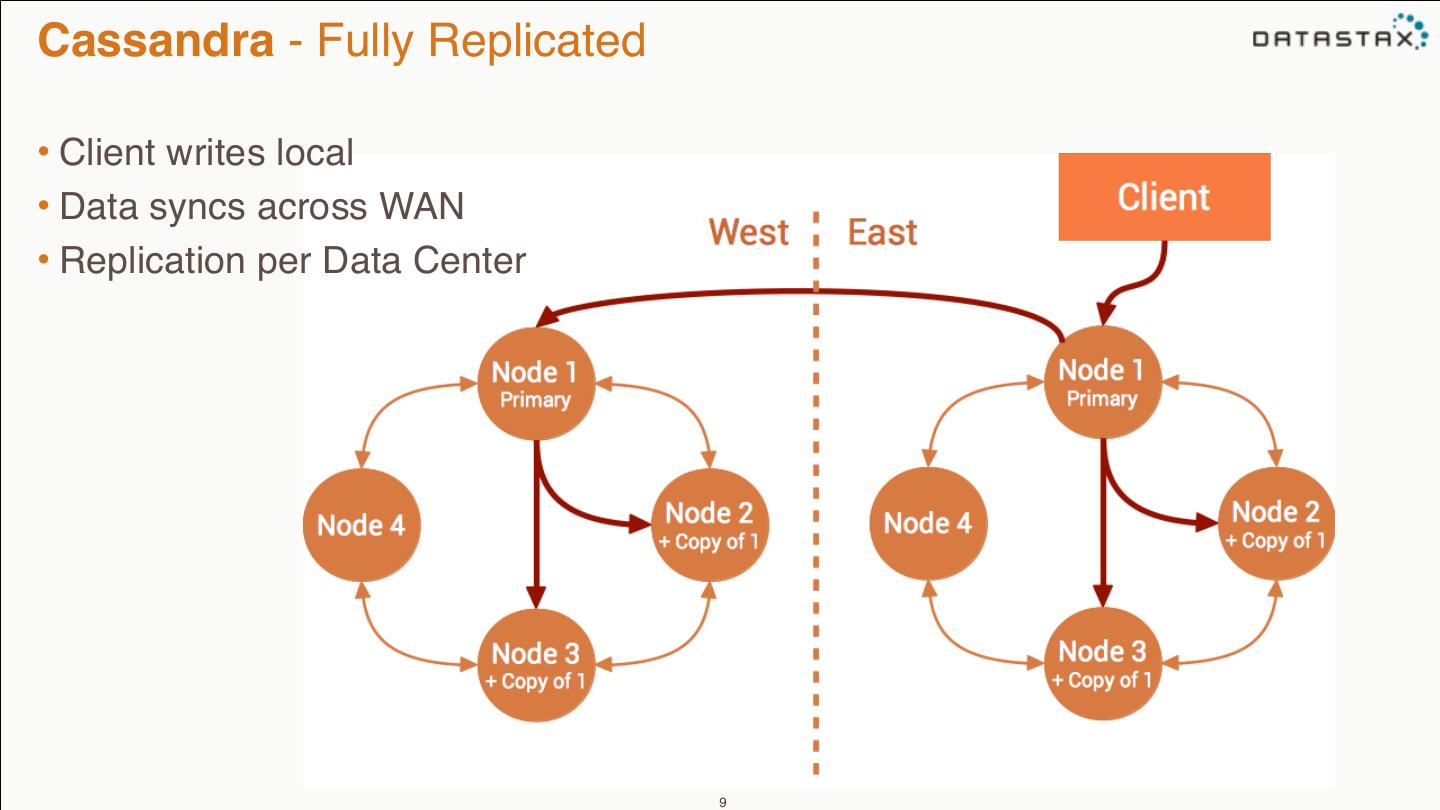

9 .Cassandra - Fully Replicated

- Client writes local
- Data syncs across WAN
- Replication per data center

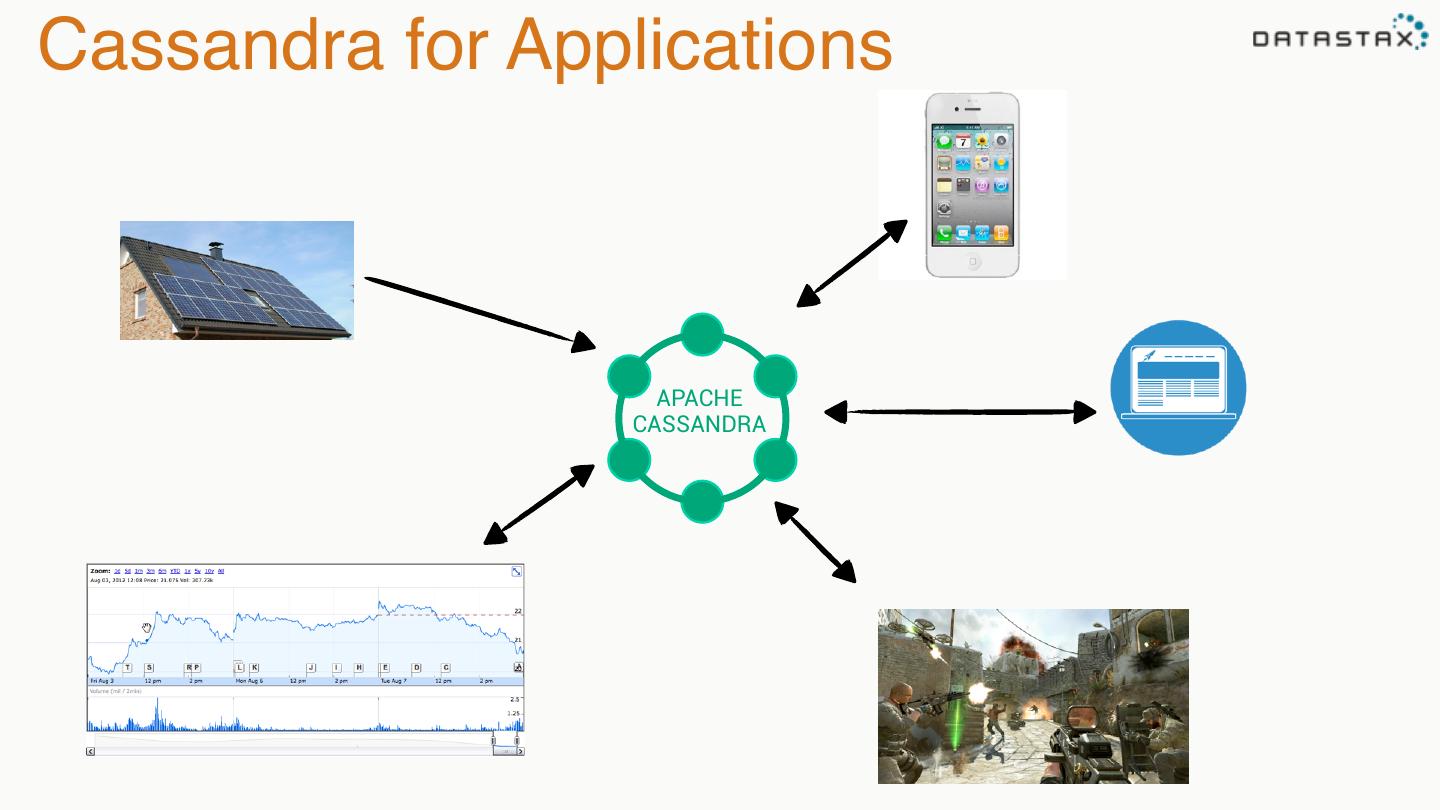

10 .Cassandra for Applications

[Diagram label: Apache Cassandra]

11 .Summary

- The evolution of the internet and online data created new problems
- Apache Cassandra was based on a variety of technologies to solve these problems
- The goals of Apache Cassandra are all about staying online and performant
- Apache Cassandra is a database best used for applications, close to your users

12 .4.1.2 Cassandra - Basic Architecture

13 .Row

| Partition Key | Column | Column | Column | Column |
|---|---|---|---|---|
| 1 | 1 | 2 | 3 | 4 |

14 .Partition

| Partition Key | Column | Column | Column | Column |
|---|---|---|---|---|
| 1 | 1 | 2 | 3 | 4 |
| 1 | 1 | 2 | 3 | 4 |
| 1 | 1 | 2 | 3 | 4 |
| 1 | 1 | 2 | 3 | 4 |

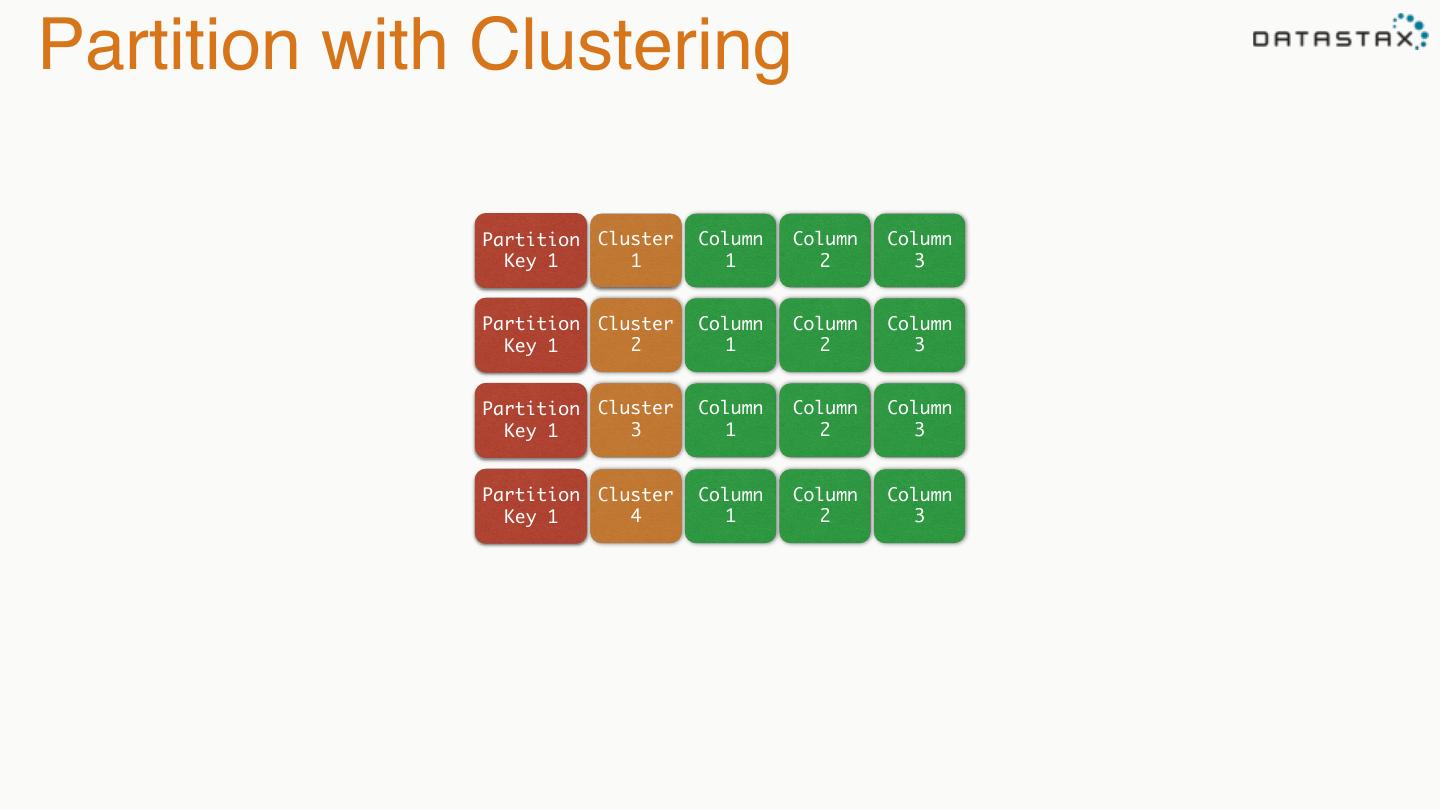

15 .Partition with Clustering

| Partition Key | Cluster | Column | Column | Column |
|---|---|---|---|---|
| 1 | 1 | 1 | 2 | 3 |
| 1 | 2 | 1 | 2 | 3 |
| 1 | 3 | 1 | 2 | 3 |
| 1 | 4 | 1 | 2 | 3 |
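The layout on this slide can be mimicked with a small in-memory model. This is only a sketch for illustration: the names `table`, `upsert`, and `read_partition` are hypothetical and are not part of Cassandra or any driver. It shows rows grouped under one partition key and returned in clustering-key order.

```python
# Hypothetical model: partition key -> {clustering key -> column values}.
table = {}

def upsert(partition_key, clustering_key, columns):
    """Store a row; a row with the same keys is overwritten."""
    table.setdefault(partition_key, {})[clustering_key] = columns

def read_partition(partition_key):
    """Return the rows of one partition, ordered by clustering key."""
    rows = table.get(partition_key, {})
    return [(ck, rows[ck]) for ck in sorted(rows)]

# Four rows in partition 1, written out of order.
for cluster in (3, 1, 4, 2):
    upsert(1, cluster, {"column 1": "a", "column 2": "b", "column 3": "c"})

for clustering_key, columns in read_partition(1):
    print(clustering_key, columns)
```

The rows come back sorted by the clustering key regardless of write order, which is the property the clustering column adds to a partition.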

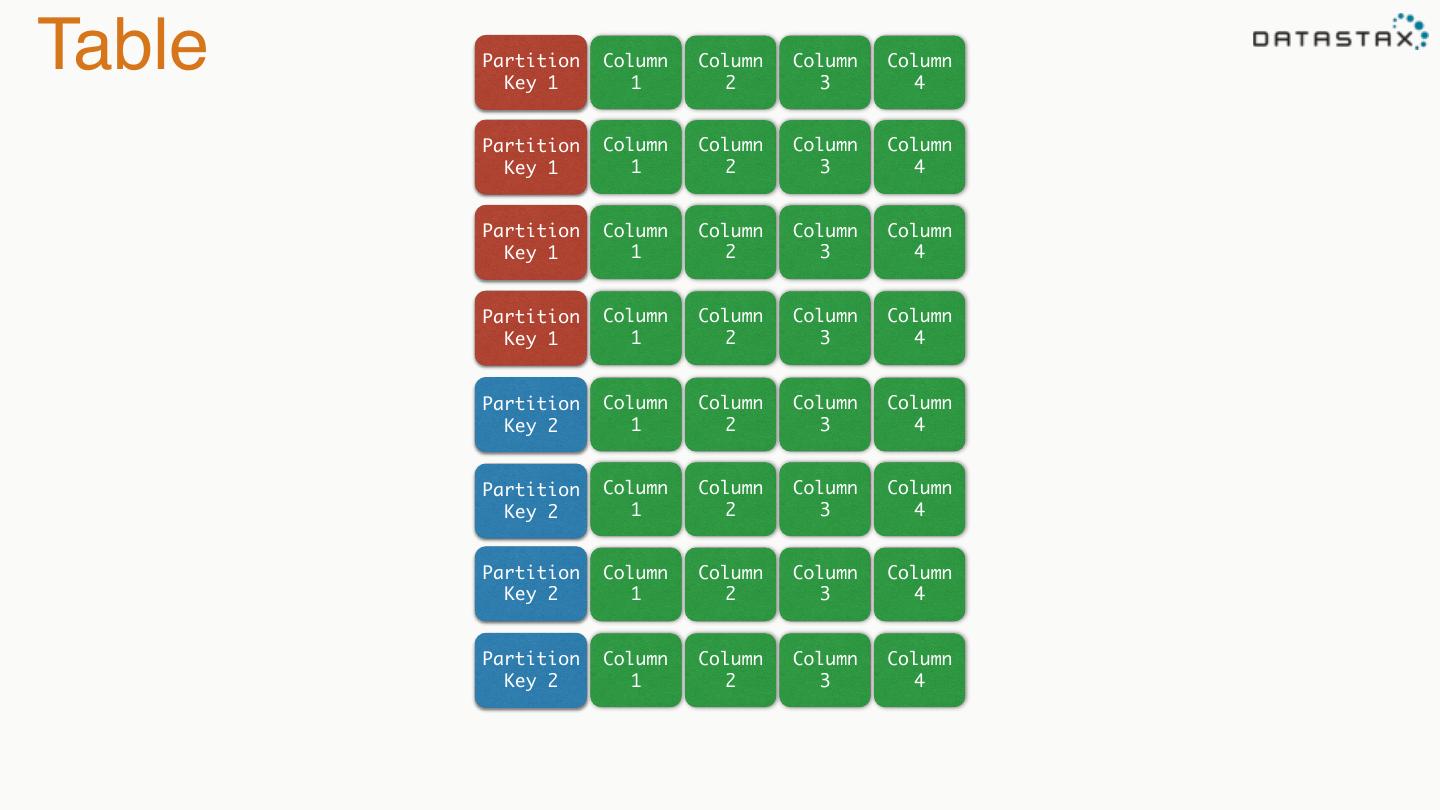

16 .Table

| Partition Key | Column | Column | Column | Column |
|---|---|---|---|---|
| 1 | 1 | 2 | 3 | 4 |
| 1 | 1 | 2 | 3 | 4 |
| 1 | 1 | 2 | 3 | 4 |
| 1 | 1 | 2 | 3 | 4 |
| 2 | 1 | 2 | 3 | 4 |
| 2 | 1 | 2 | 3 | 4 |
| 2 | 1 | 2 | 3 | 4 |
| 2 | 1 | 2 | 3 | 4 |

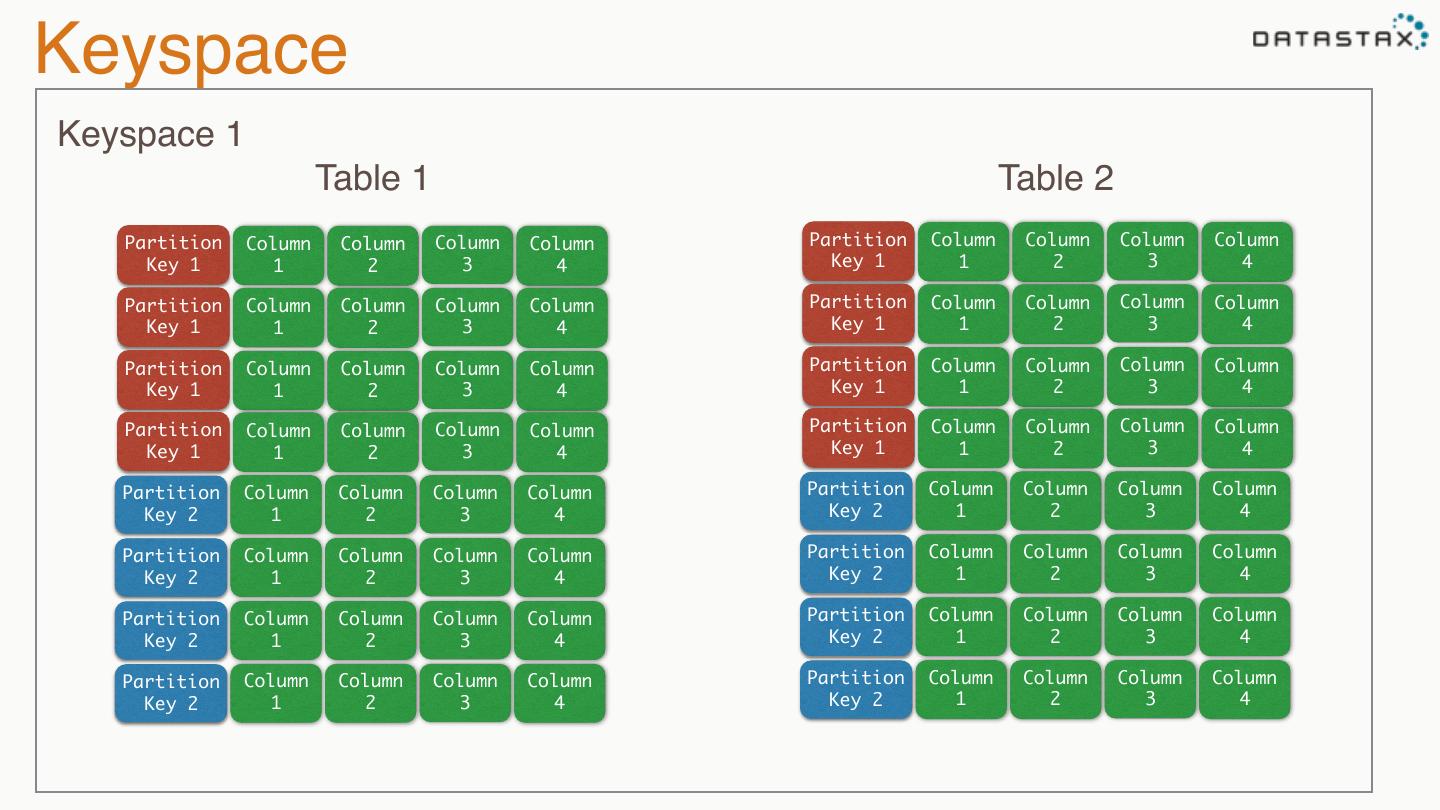

17 .Keyspace

[Diagram: Keyspace 1 contains Table 1 and Table 2. Each table holds eight rows with Columns 1 to 4: four rows under Partition Key 1 and four rows under Partition Key 2.]

18 .Node Server

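19 .Token

- Each partition key is hashed to a 128 bit value
- Tokens from the consistent hash fall between -2^63 and 2^63 - 1 (the range of the default Murmur3Partitioner)
- Each node owns a range of those values
- A node's token marks the beginning of its range, which runs up to the next node's token value
- Virtual nodes break these ranges down further

[Diagram labels: Server, Token Range, 0 …, Data]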
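A rough sketch of how a partition key could be turned into a token. It assumes a signed 64-bit token space and uses MD5 from the Python standard library purely as a stand-in hash; Cassandra's own partitioners use different hashing, so the values produced here will not match a real cluster. The name `token_for` is hypothetical.

```python
import hashlib

# Assumed token space for this sketch: signed 64-bit integers.
MIN_TOKEN = -2**63
MAX_TOKEN = 2**63 - 1

def token_for(partition_key: str) -> int:
    """Hash a partition key to a 128-bit digest, then keep 64 bits as the token."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()  # 16 bytes
    return int.from_bytes(digest[:8], byteorder="big", signed=True)

for key in ("user:1", "user:2", "user:3"):
    print(key, token_for(key))
```

The same key always produces the same token, so any node can work out where a partition belongs without consulting a central directory.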

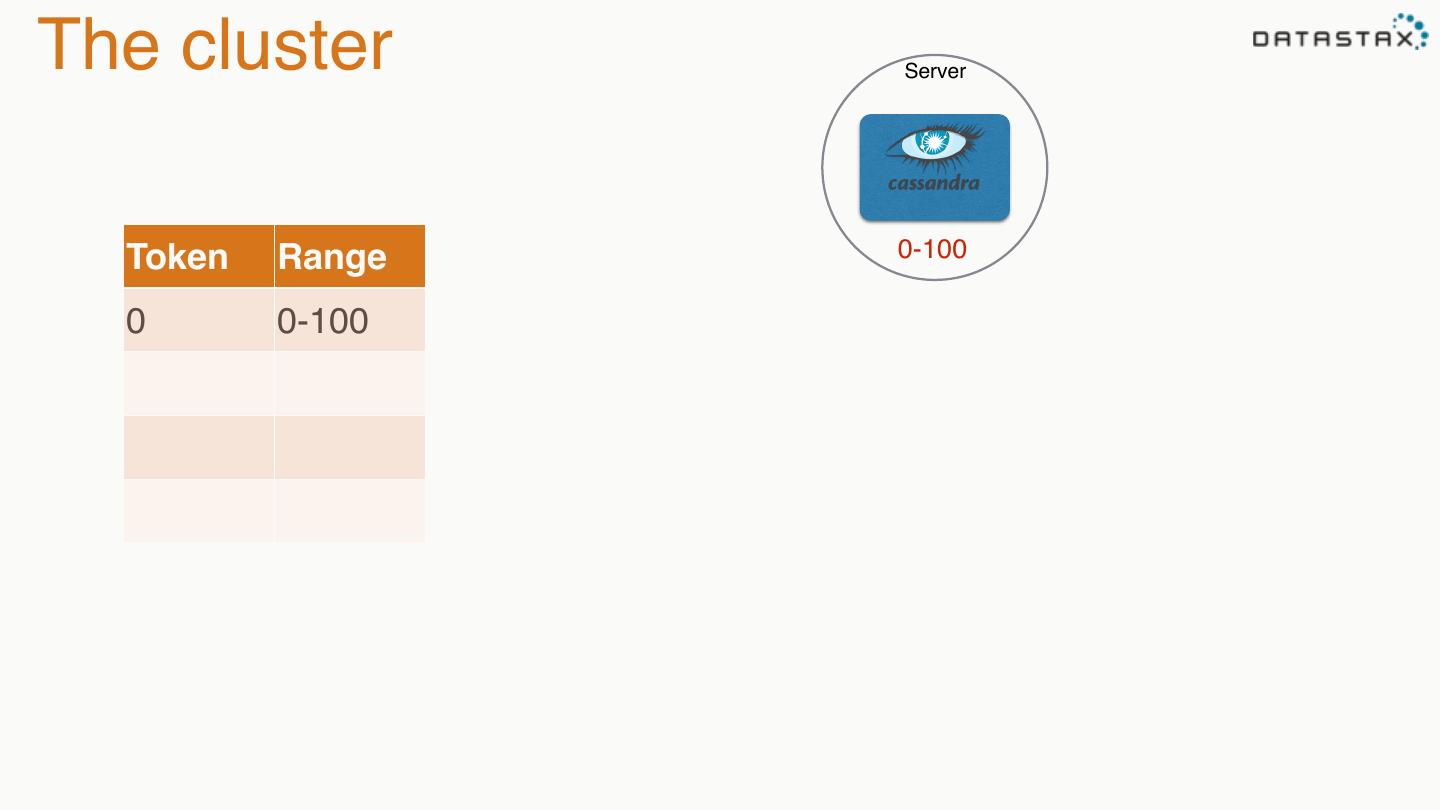

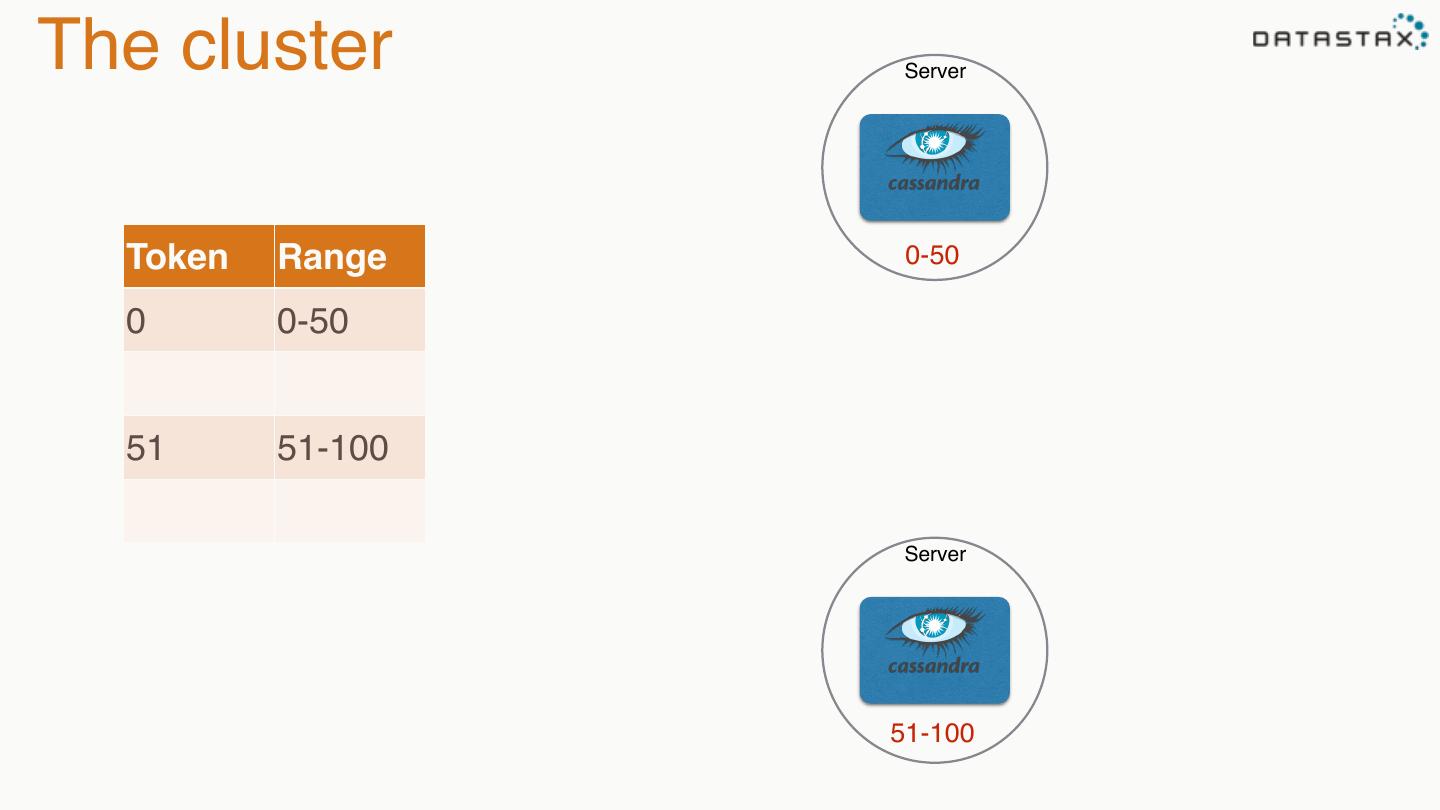

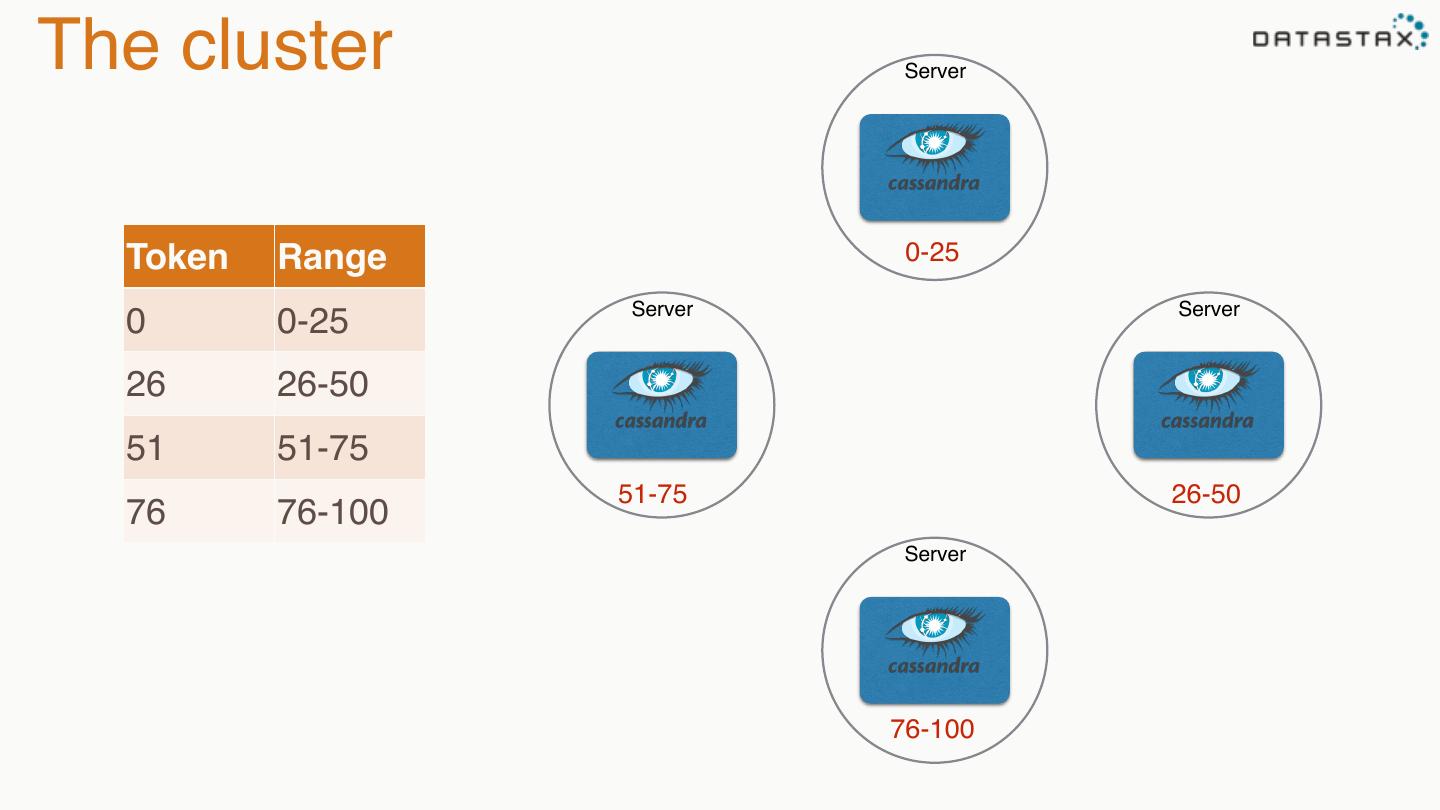

20 .The cluster (one server)

| Token | Range |
|---|---|
| 0 | 0-100 |

21 .The cluster (two servers)

| Token | Range |
|---|---|
| 0 | 0-50 |
| 51 | 51-100 |

22 .The cluster (four servers)

| Token | Range |
|---|---|
| 0 | 0-25 |
| 26 | 26-50 |
| 51 | 51-75 |
| 76 | 76-100 |
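The three cluster slides can be reproduced with a few lines of code. This sketch uses the simplified 0 to 100 token space from the slides and the slides' convention that a token marks the start of a range; the function name `ranges` is hypothetical.

```python
def ranges(tokens, max_value=100):
    """Map each token to the (start, end) range it owns, using the slides'
    convention that a range runs from a token up to just before the next one."""
    ordered = sorted(tokens)
    ends = [nxt - 1 for nxt in ordered[1:]] + [max_value]
    return list(zip(ordered, ends))

# One, two, and four servers, as on the slides.
for tokens in ([0], [0, 51], [0, 26, 51, 76]):
    print(tokens, ranges(tokens))
```

Each added token splits an existing range, which is why adding a server reduces the share of data every other server is responsible for.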

23 .Summary

- Tables store rows of data by column
- Partitions are similar data grouped by a partition key
- Keyspaces contain tables and are grouped by data center
- Tokens show node placement in the range of cluster data

24 .4.1.3 Cassandra - Replication, High Availability and Multi-datacenter

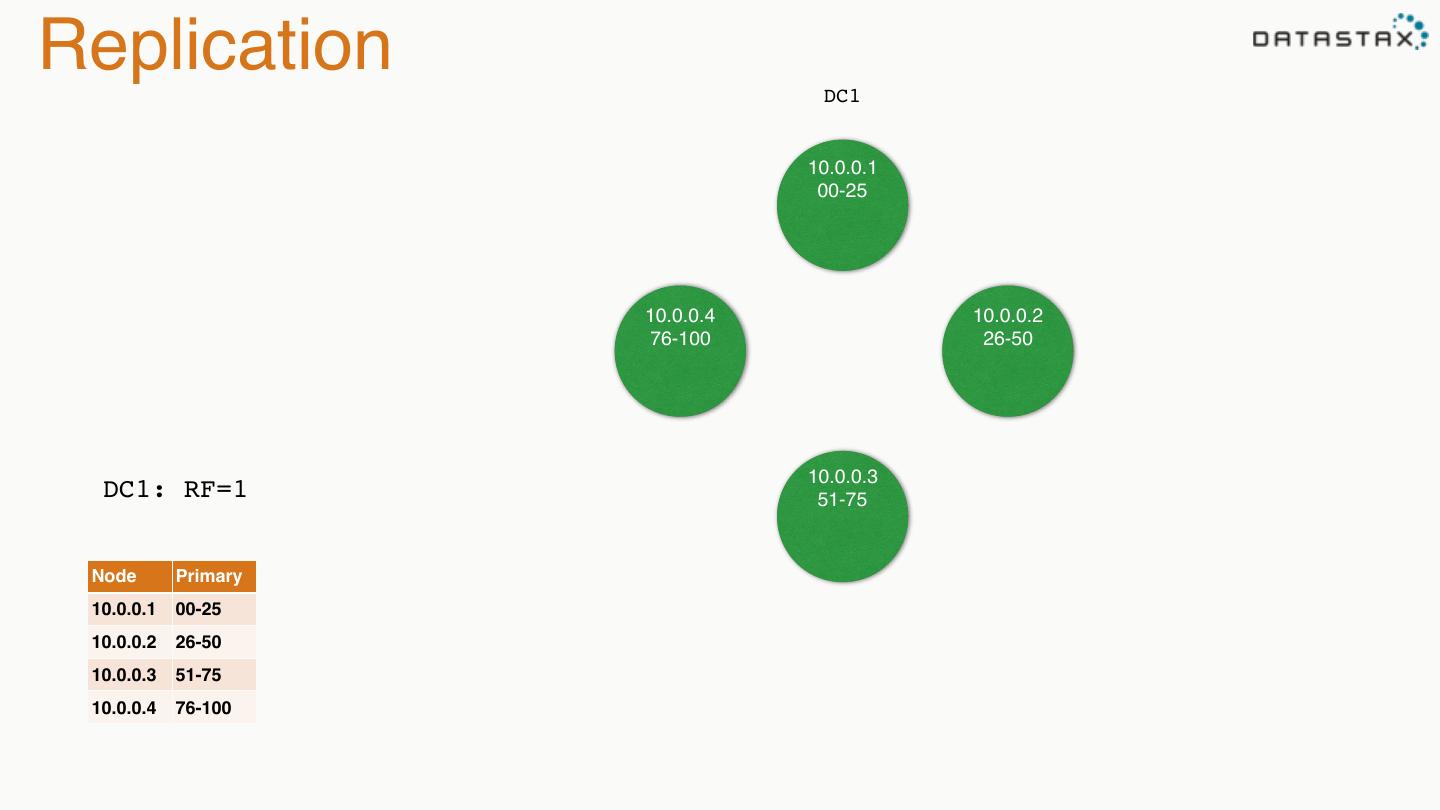

25 .Replication

DC1: RF=1

| Node | Primary |
|---|---|
| 10.0.0.1 | 00-25 |
| 10.0.0.2 | 26-50 |
| 10.0.0.3 | 51-75 |
| 10.0.0.4 | 76-100 |

26 .Replication

DC1: RF=2

| Node | Primary | Replica |
|---|---|---|
| 10.0.0.1 | 00-25 | 76-100 |
| 10.0.0.2 | 26-50 | 00-25 |
| 10.0.0.3 | 51-75 | 26-50 |
| 10.0.0.4 | 76-100 | 51-75 |

27 .Replication

DC1: RF=3

| Node | Primary | Replica | Replica |
|---|---|---|---|
| 10.0.0.1 | 00-25 | 76-100 | 51-75 |
| 10.0.0.2 | 26-50 | 00-25 | 76-100 |
| 10.0.0.3 | 51-75 | 26-50 | 00-25 |
| 10.0.0.4 | 76-100 | 51-75 | 26-50 |

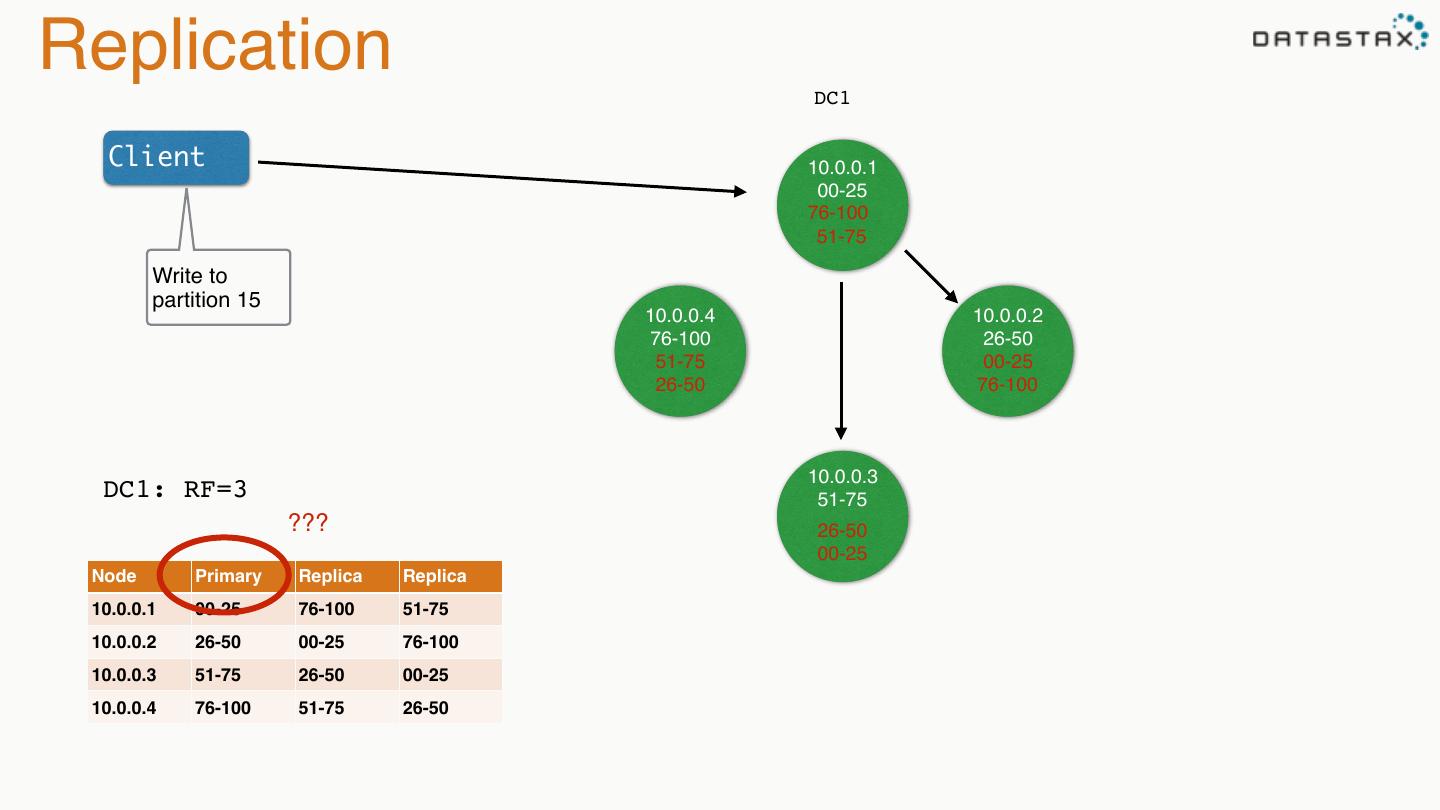

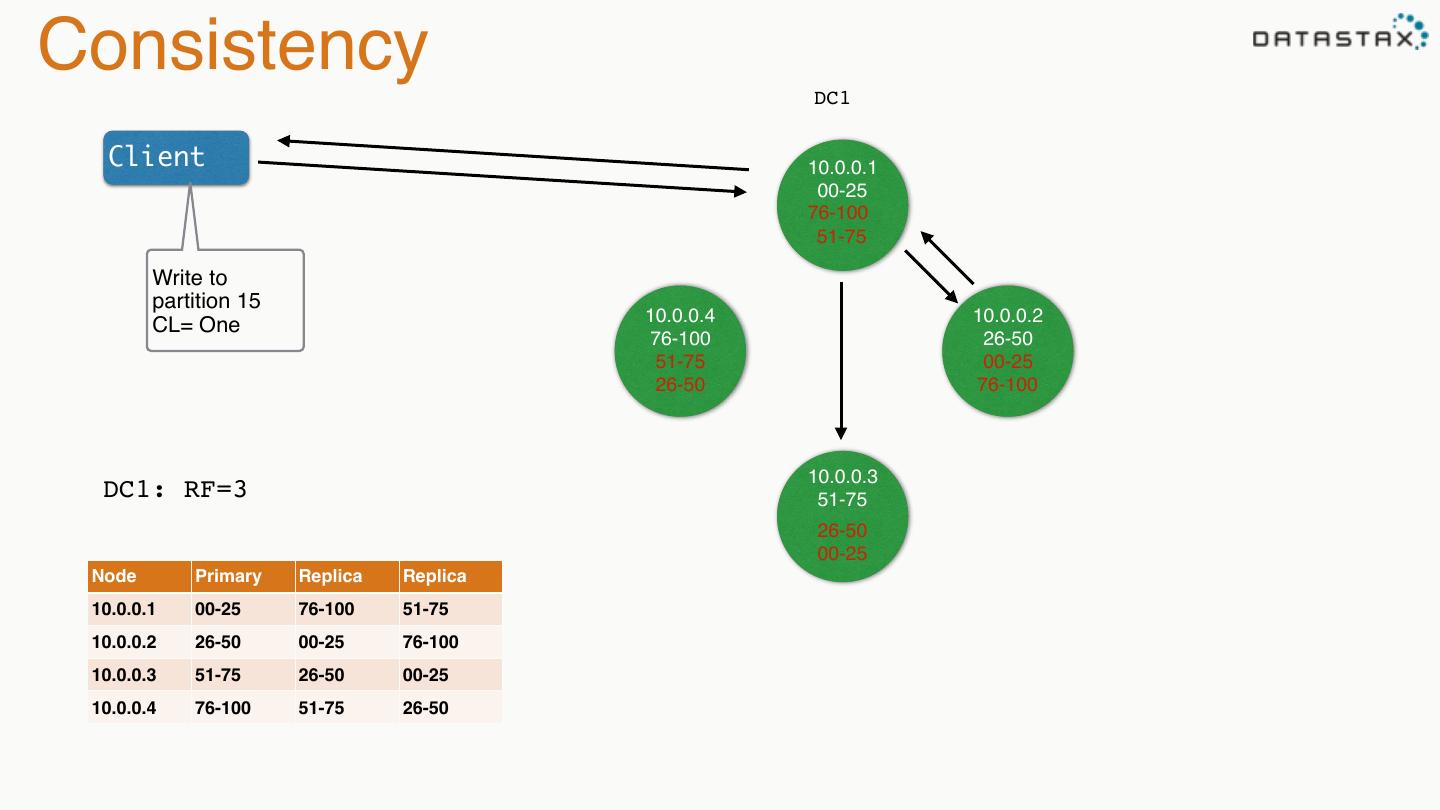

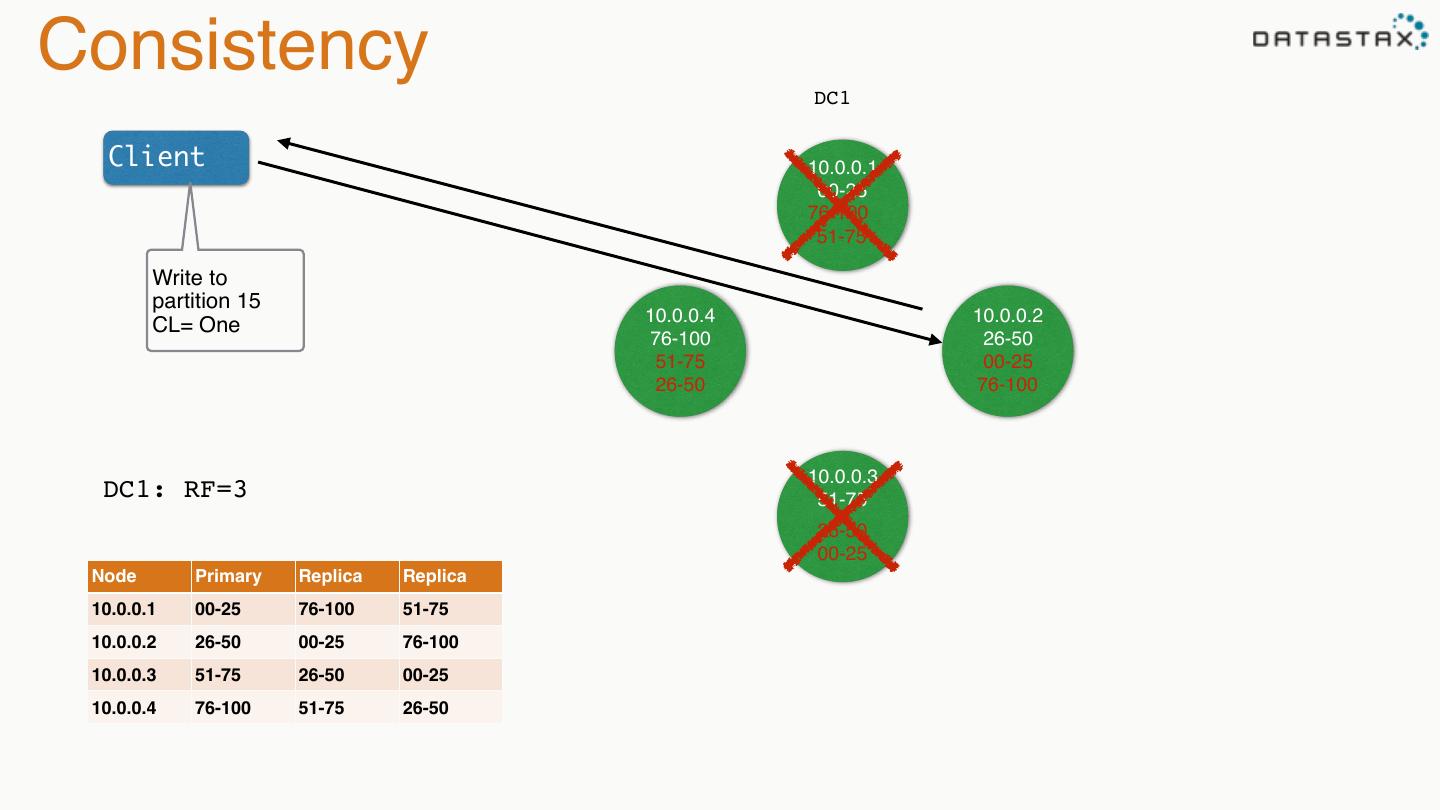

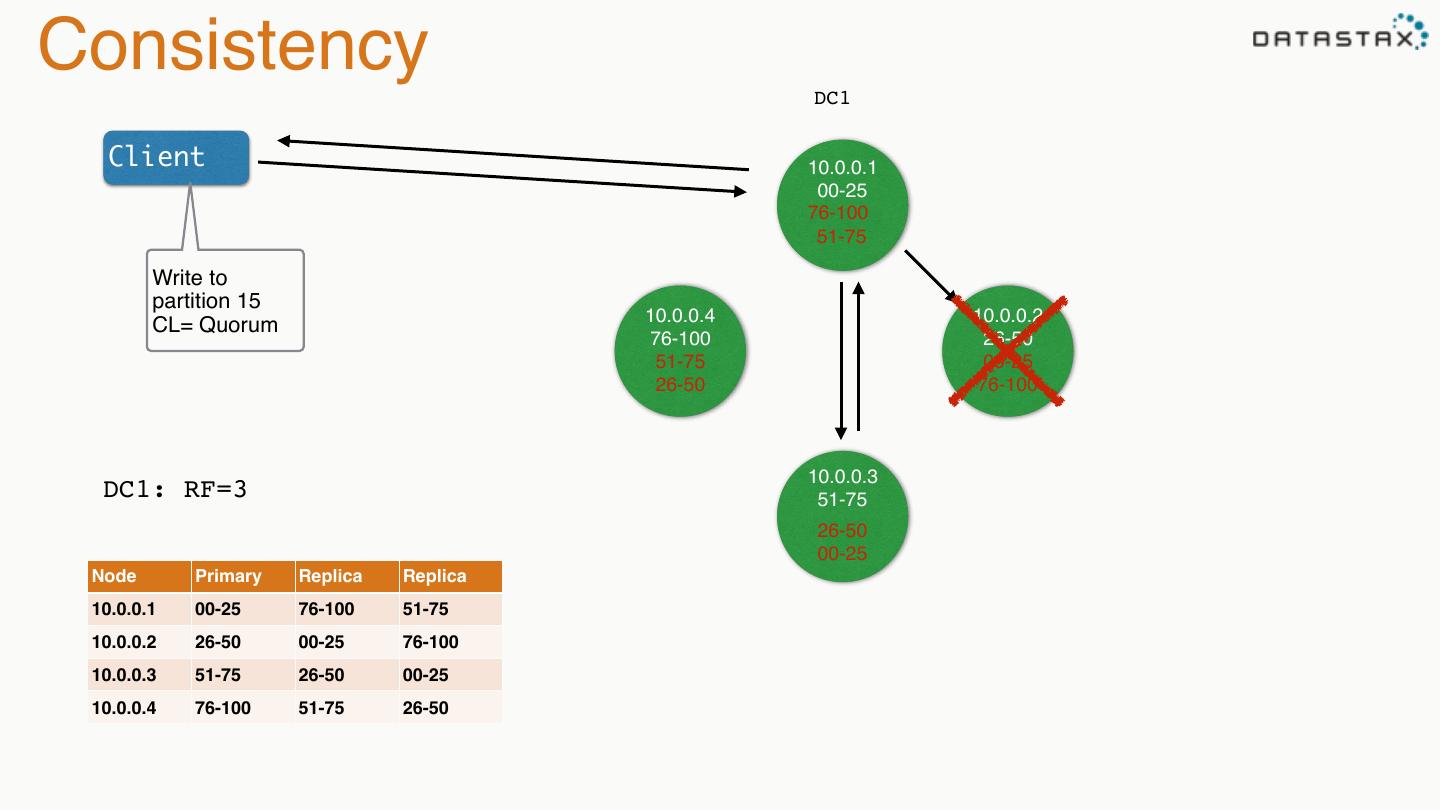

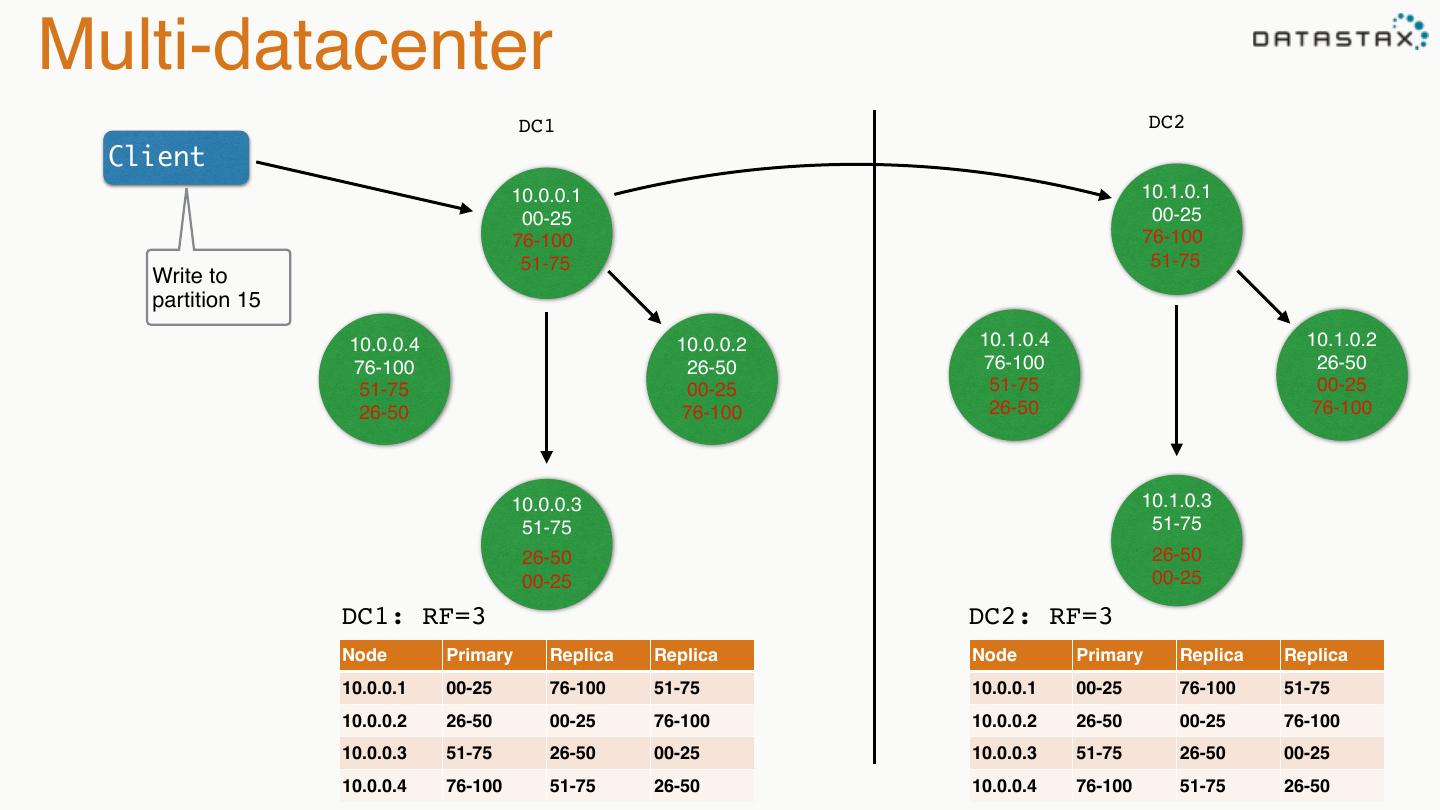

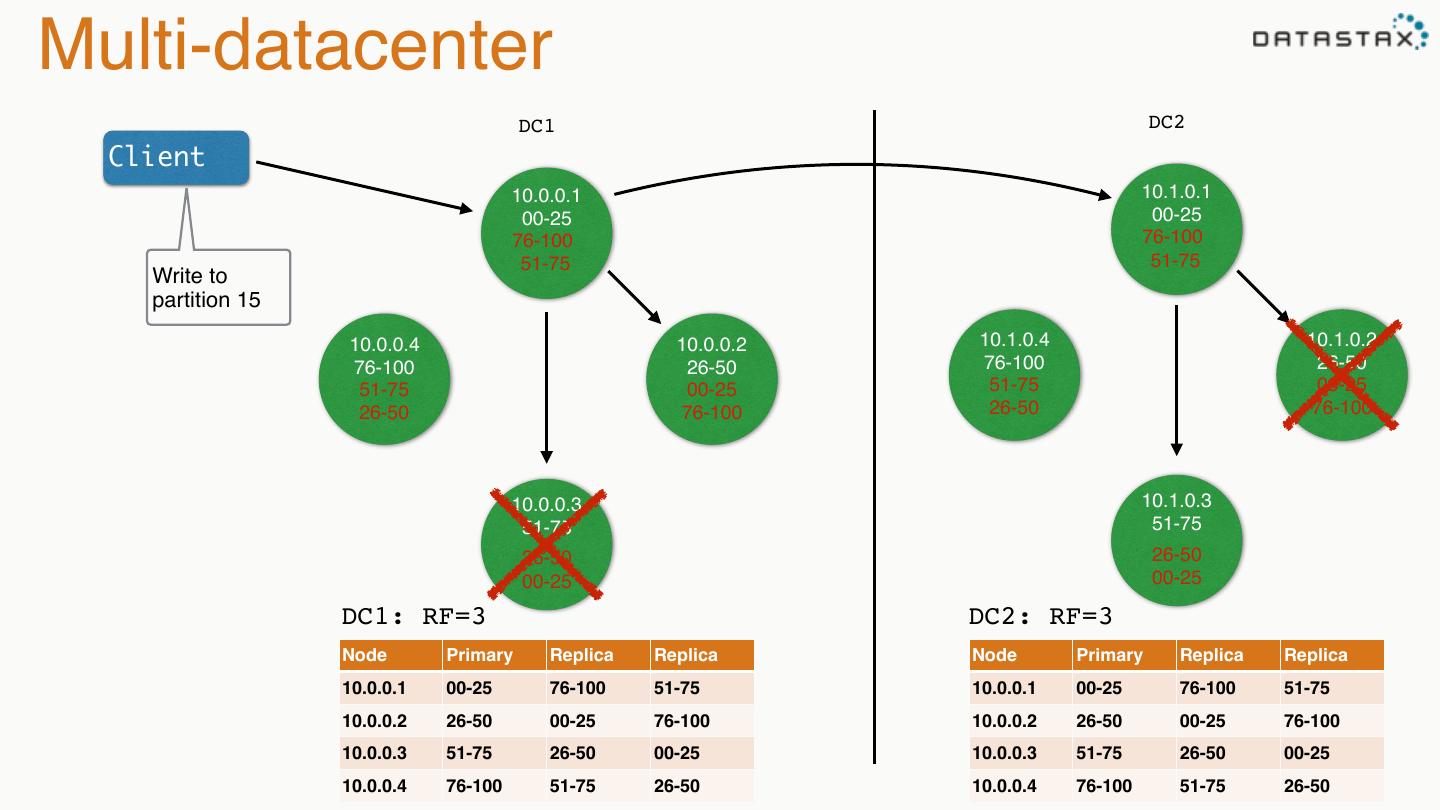

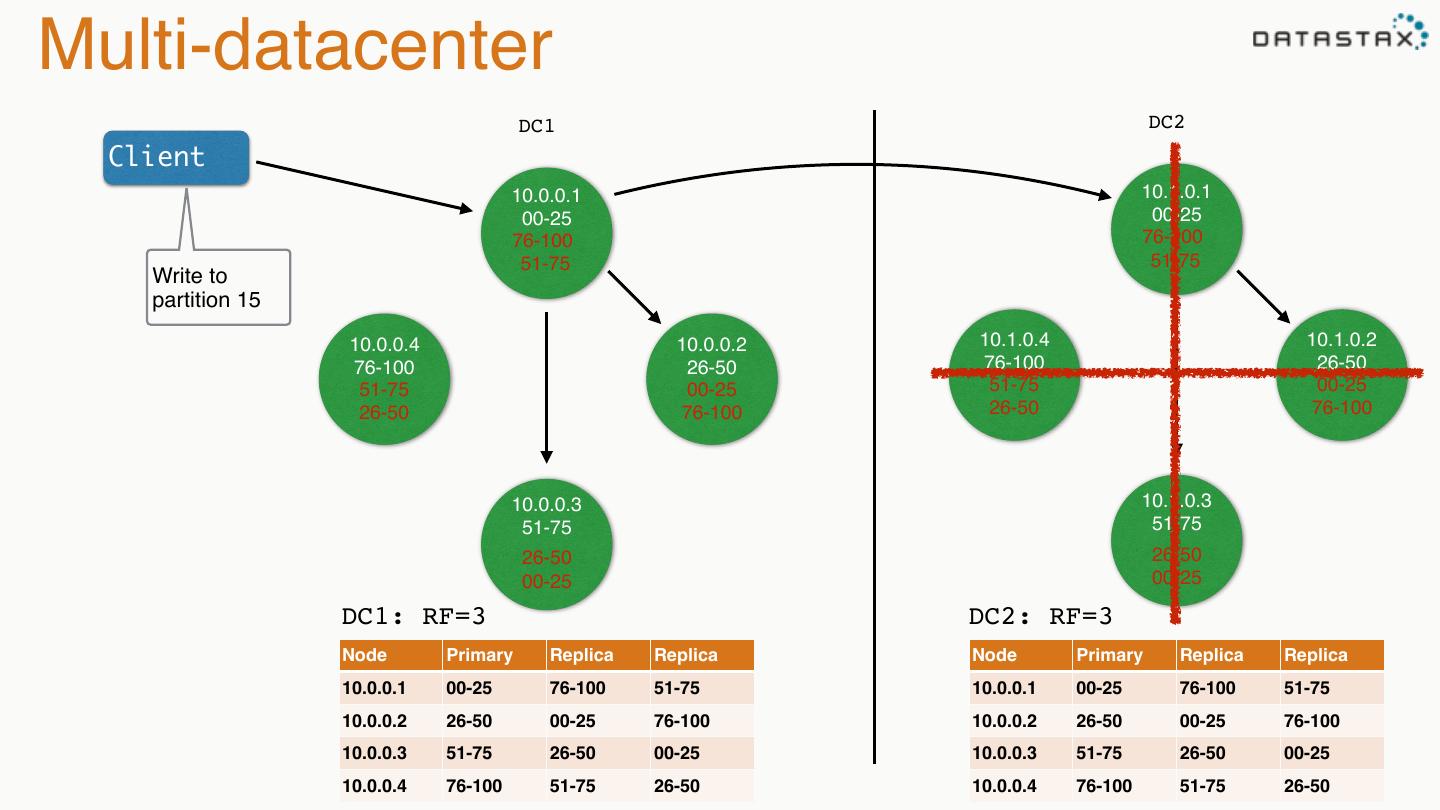

28 .Replication

DC1: RF=3

Client: Write to partition 15. Destination: ???

| Node | Primary | Replica | Replica |
|---|---|---|---|
| 10.0.0.1 | 00-25 | 76-100 | 51-75 |
| 10.0.0.2 | 26-50 | 00-25 | 76-100 |
| 10.0.0.3 | 51-75 | 26-50 | 00-25 |
| 10.0.0.4 | 76-100 | 51-75 | 26-50 |
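One way to work out which nodes hold partition 15 is to find the node whose range contains it and then walk the ring to the following nodes until RF replicas are collected. The sketch below assumes that simple ring-walk placement and reuses the addresses and the 0 to 100 token space from the slide; `RING` and `replicas` are hypothetical names, not Cassandra APIs.

```python
from bisect import bisect_right

# (token, node) pairs from the slide; a token marks the start of a node's range.
RING = [(0, "10.0.0.1"), (26, "10.0.0.2"), (51, "10.0.0.3"), (76, "10.0.0.4")]

def replicas(partition_value, rf):
    """Return the primary node for a value, followed by the next rf - 1 nodes
    on the ring (wrapping around at the end)."""
    tokens = [token for token, _ in RING]
    primary = bisect_right(tokens, partition_value) - 1
    count = min(rf, len(RING))
    return [RING[(primary + i) % len(RING)][1] for i in range(count)]

print(replicas(15, rf=3))
```

For partition 15 this yields 10.0.0.1, 10.0.0.2, and 10.0.0.3, which agrees with the table: those are the three nodes that list 00-25 as a primary or replica range.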

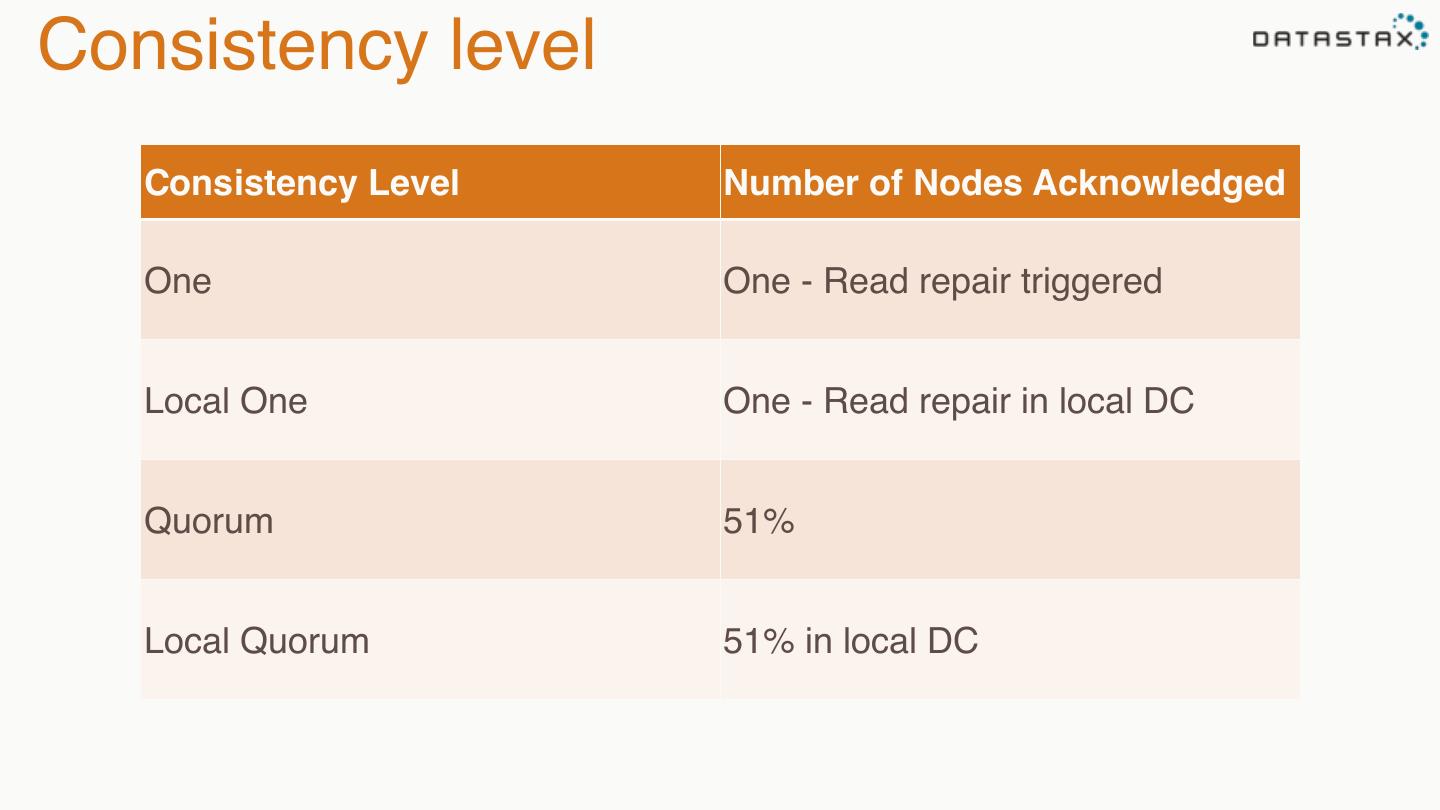

29 .Consistency level

| Consistency Level | Number of Nodes Acknowledged |
|---|---|
| One | One - Read repair triggered |
| Local One | One - Read repair in local DC |
| Quorum | 51% |
| Local Quorum | 51% in local DC |
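The "51%" entries amount to a majority of replicas, which is commonly computed as half the replication factor, rounded down, plus one. The sketch below turns the table into acknowledgement counts under that assumption; `quorum` and `required_acks` are hypothetical helper names, and the per-data-center replication factors are made-up inputs.

```python
def quorum(replication_factor):
    """Smallest number of replicas that forms a majority."""
    return replication_factor // 2 + 1

def required_acks(level, rf_by_dc, local_dc):
    """Number of replica acknowledgements needed for a consistency level.

    rf_by_dc maps a data center name to its replication factor.
    """
    if level in ("ONE", "LOCAL_ONE"):
        return 1
    if level == "QUORUM":
        return quorum(sum(rf_by_dc.values()))
    if level == "LOCAL_QUORUM":
        return quorum(rf_by_dc[local_dc])
    raise ValueError(f"unsupported consistency level: {level}")

rf = {"DC1": 3, "DC2": 3}  # illustrative values only
for level in ("ONE", "LOCAL_ONE", "QUORUM", "LOCAL_QUORUM"):
    print(level, required_acks(level, rf, local_dc="DC1"))
```

With three replicas in each of two data centers, Quorum needs four acknowledgements across the cluster, while Local Quorum needs only two from the local data center.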