
Improving HBase reliability at Pinterest

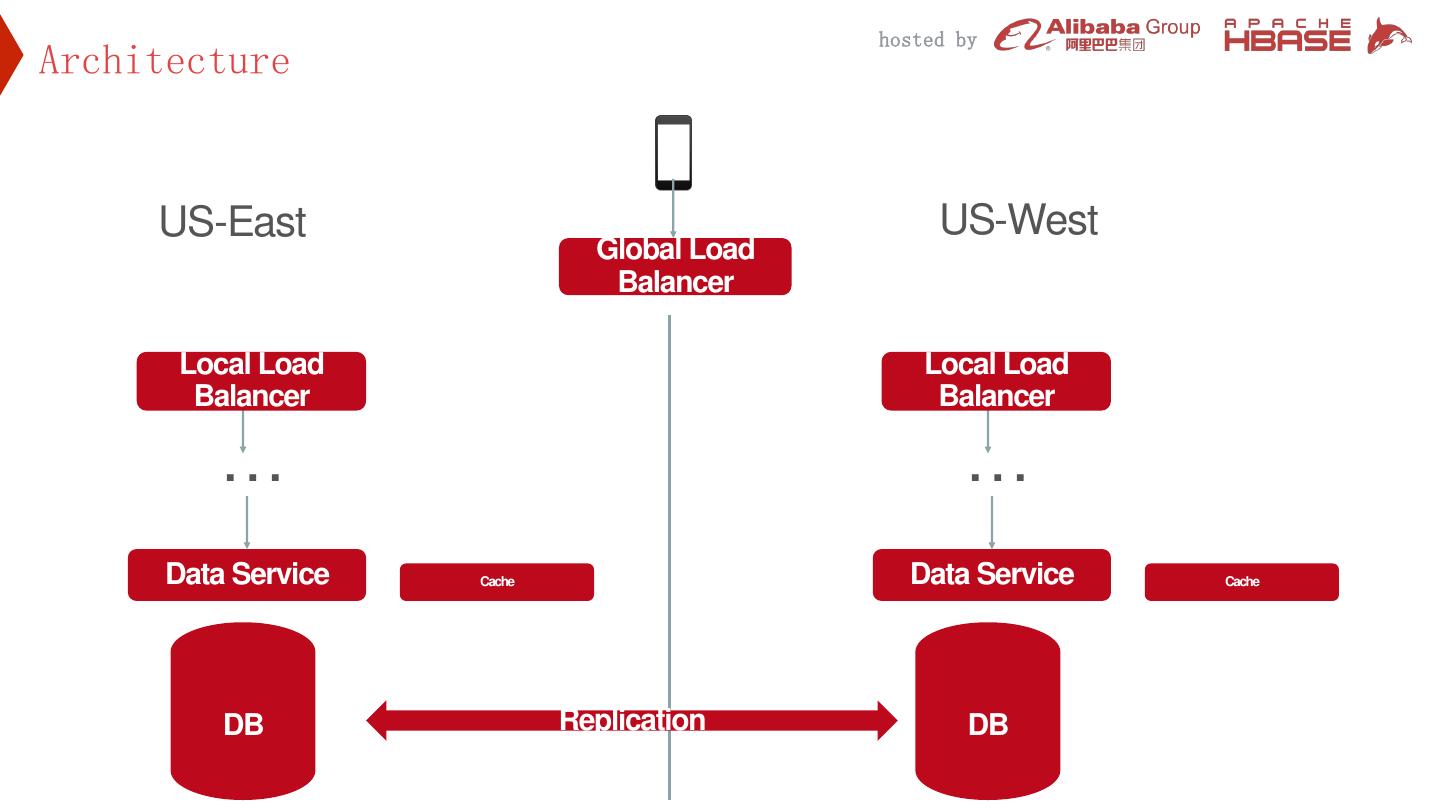

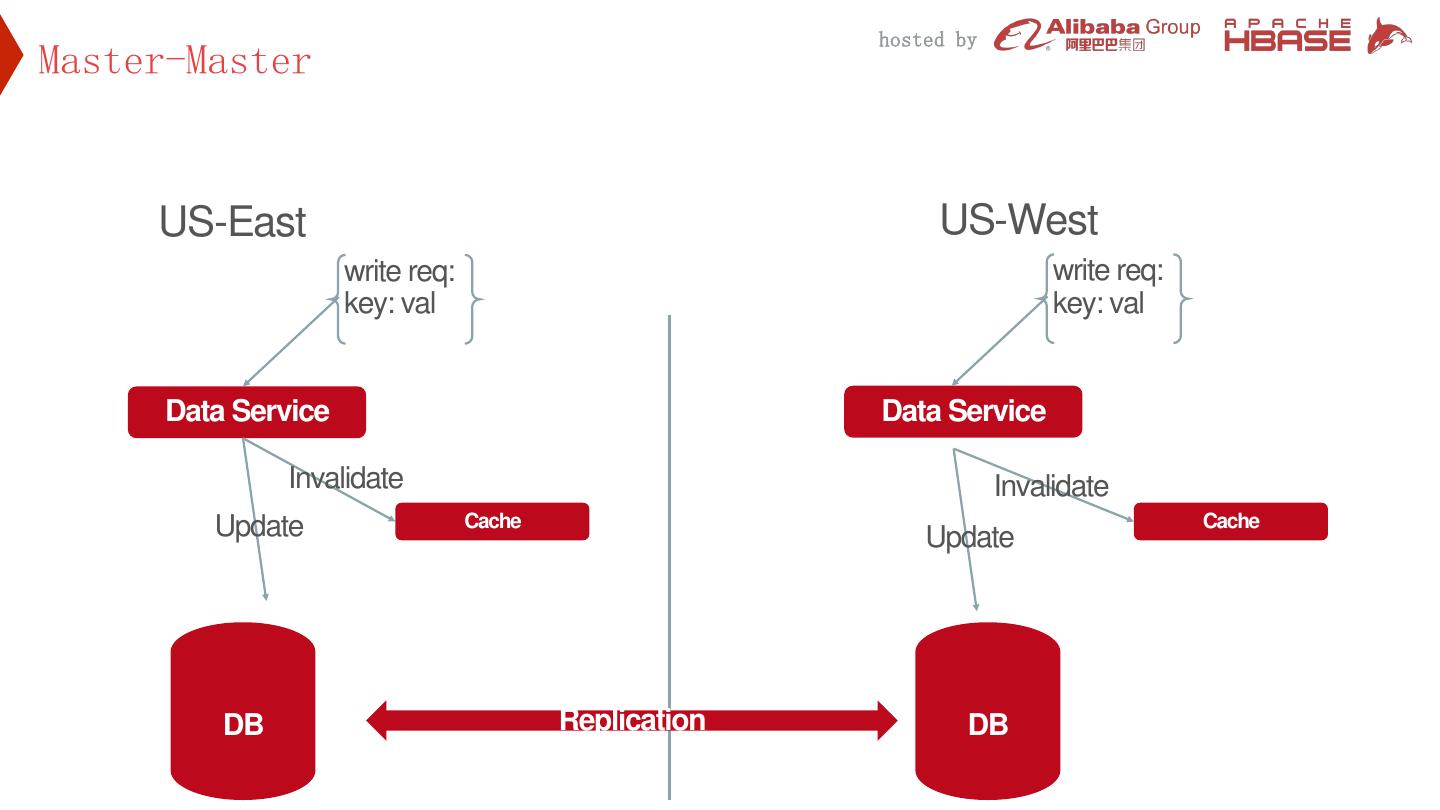

1. Improving HBase Reliability at Pinterest with Geo-replication and Efficient Backup

Chenji Pan, Lianghong Xu
Storage & Caching, Pinterest
August 17, 2018

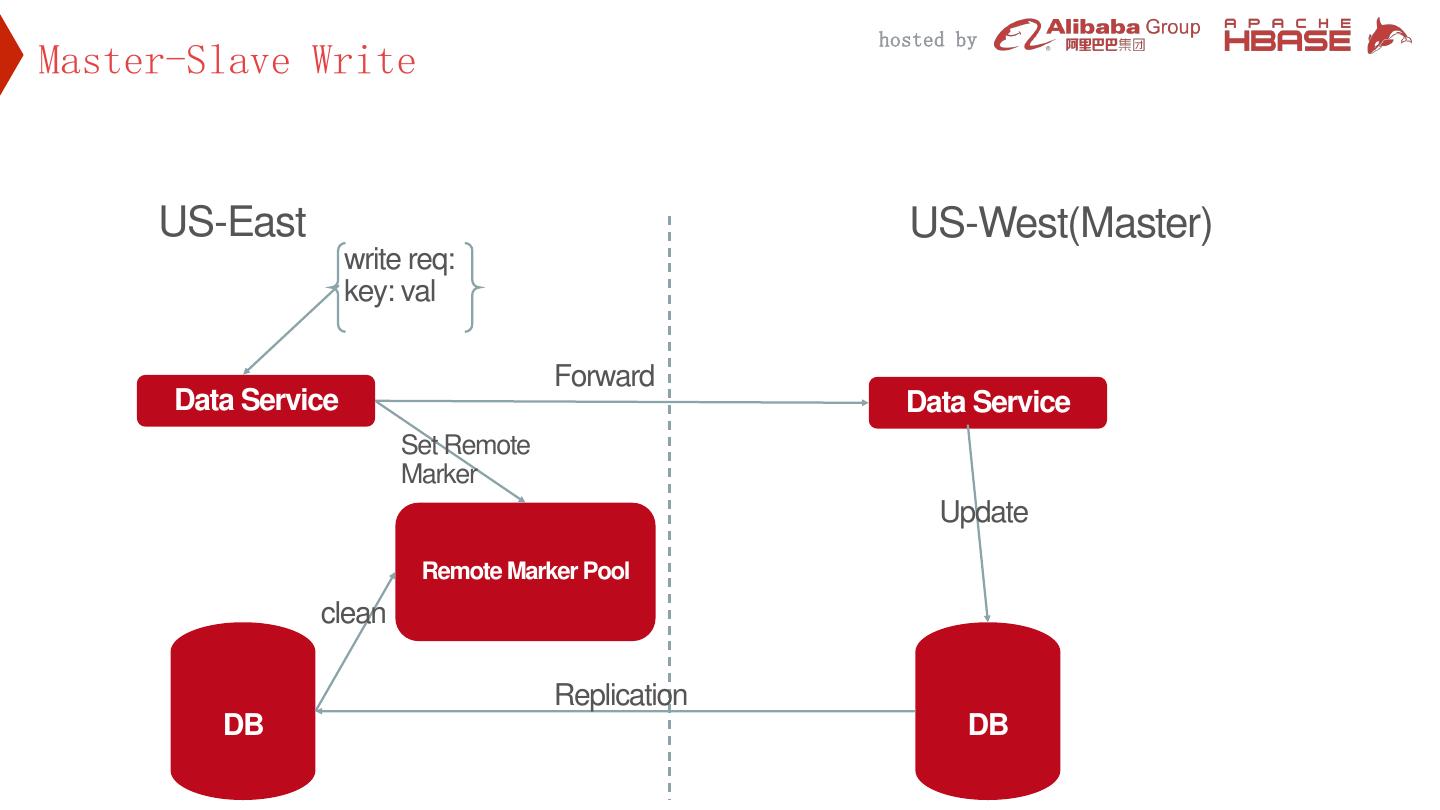

2. Contents

- 01 HBase in Pinterest
- 02 Multicell
- 03 Backup

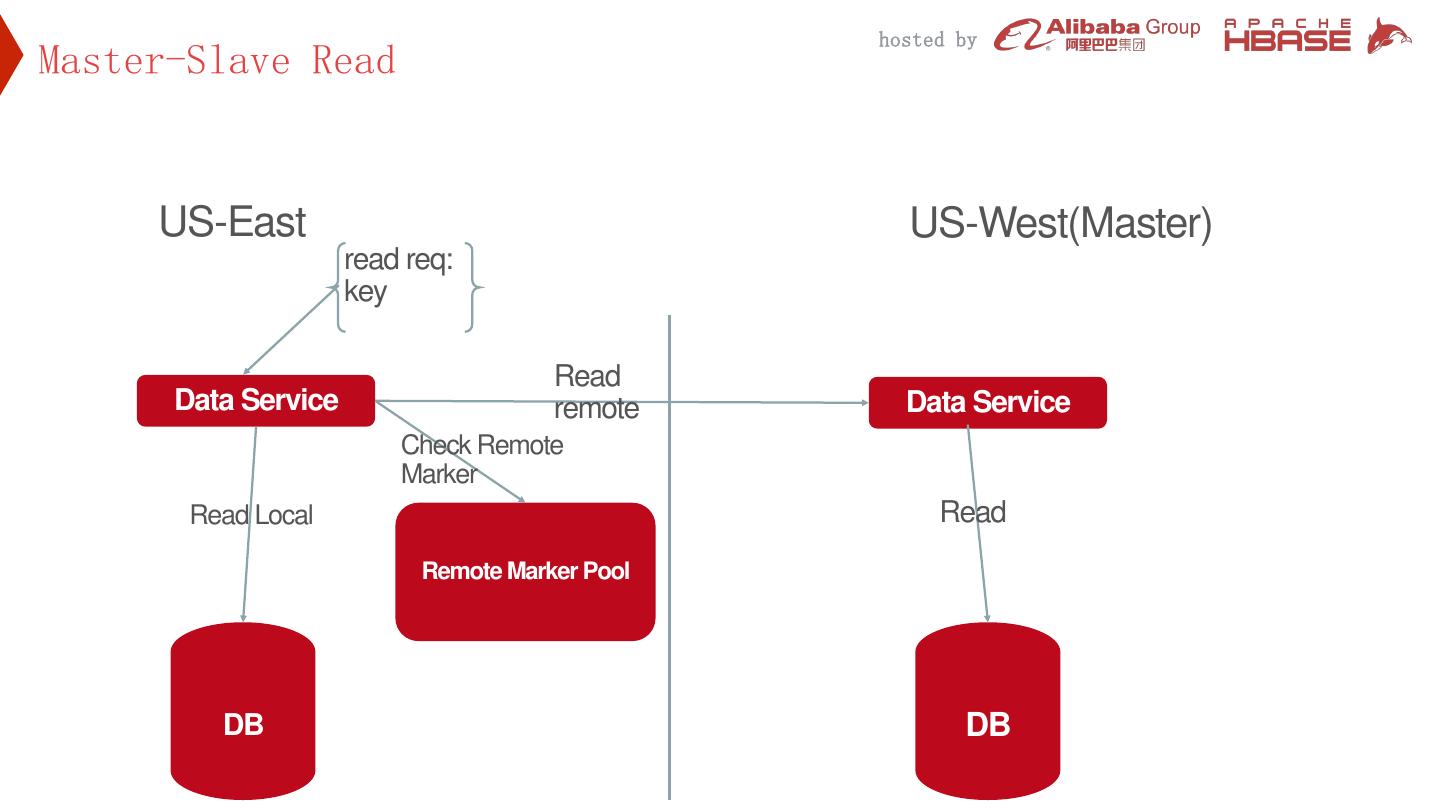

3. Section 01: HBase in Pinterest

4. HBase in Pinterest

- Used for online services since 2013
- Backs data abstraction layers such as Zen and UMS
- ~50 HBase 1.2 clusters
- Internal repo with ZSTD, CCSMAP, BucketCache, timestamp, etc.

5. Section 02: Multicell

6. Why Multicell?

[Figure: timeline spanning 2011 to 2016]

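7. Architecture

[Diagram: a Global Load Balancer routes traffic to two regions, US-East and US-West. In each region a Local Load Balancer sits in front of the Data Service instances, which use a Cache and a DB. The DBs in the two regions are kept in sync by DB replication.]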

8. Master-Master

[Diagram: both US-East and US-West accept write requests (key: val). In each region the Data Service updates its DB and invalidates its Cache; DB replication copies writes between the regions.]

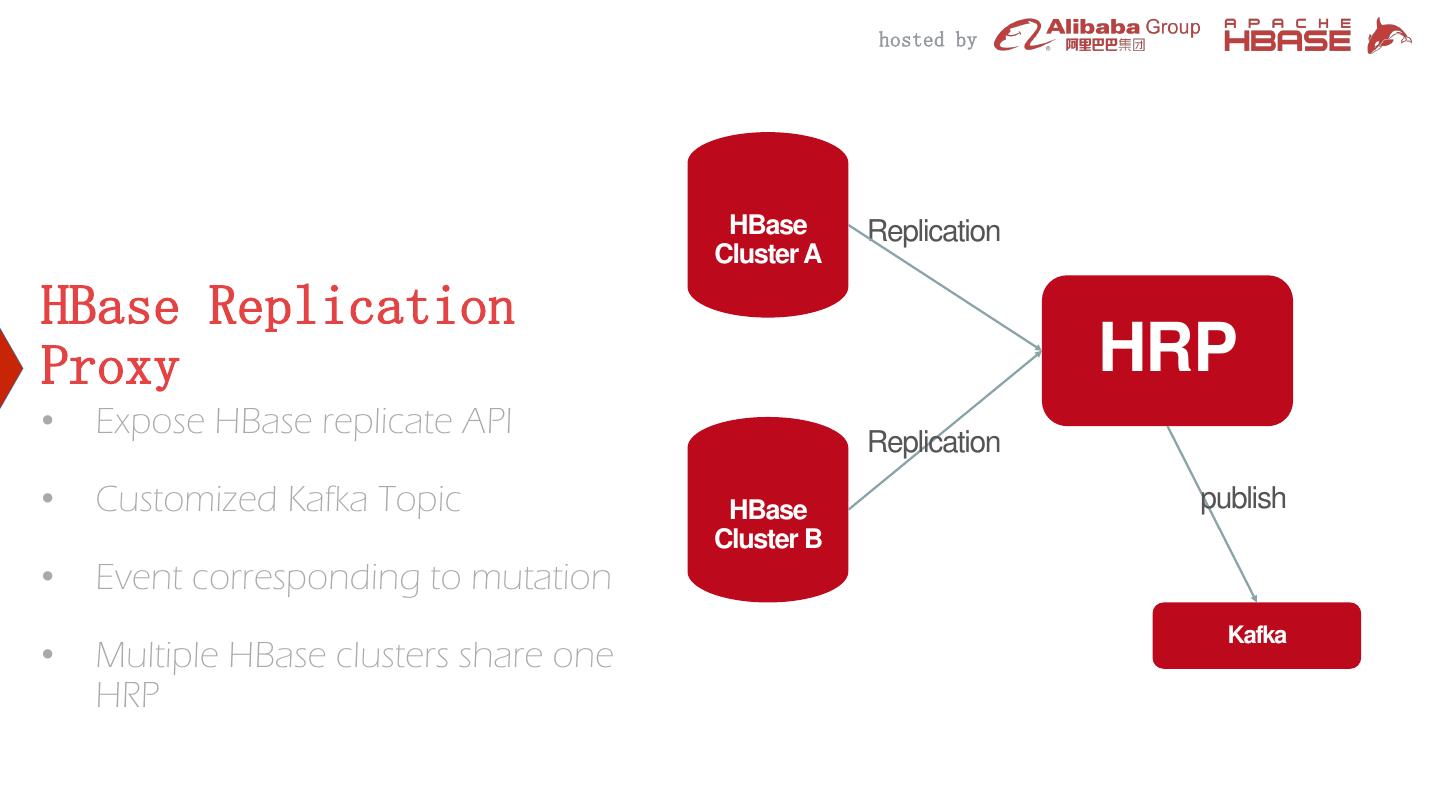

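9. Master-Slave Write

[Diagram: US-West is the master. A write request (key: val) arriving in US-East is forwarded by the US-East Data Service to the US-West Data Service, and a remote marker for the key is set in the Remote Marker Pool. US-West updates the master DB, which replicates to US-East, and the marker is then cleaned from the pool.]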

10. Master-Slave Read

[Diagram: a read request (key) arrives in US-East. The Data Service checks the Remote Marker Pool: if a marker exists for the key, it reads remotely through the US-West (master) Data Service; otherwise it reads from the local DB.]
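
The remote-marker read/write routing in slides 9 and 10 can be sketched as below. This is a minimal in-memory model, not Pinterest's Data Service: `Region`, `MasterSlaveRouter` and `on_replicated` are hypothetical names, dicts stand in for the DBs, and a Python set stands in for the Remote Marker Pool.

```python
from dataclasses import dataclass, field


@dataclass
class Region:
    """One region's view of the data: a dict standing in for its DB."""
    name: str
    is_master: bool
    db: dict = field(default_factory=dict)


class MasterSlaveRouter:
    """Hypothetical Data Service routing logic for one region.

    Writes from a non-master region are forwarded to the master and the key
    gets a remote marker. Reads of marked keys go to the master, because the
    local DB may not have received the write through replication yet.
    """

    def __init__(self, local: Region, master: Region):
        self.local = local
        self.master = master
        self.remote_markers = set()  # stands in for the Remote Marker Pool

    def write(self, key, val):
        if self.local.is_master:
            self.local.db[key] = val  # the master region writes locally
            return
        self.remote_markers.add(key)  # set the remote marker first
        self.master.db[key] = val     # then forward the write to the master

    def read(self, key):
        if key in self.remote_markers:
            return self.master.db.get(key)  # read remote: local copy may be stale
        return self.local.db.get(key)       # read local

    def on_replicated(self, key, val):
        """Apply a write that arrived via DB replication, then clean the marker."""
        self.local.db[key] = val
        self.remote_markers.discard(key)
```

In this sketch the marker is cleaned on any replicated write for the key; with concurrent writes to the same key, a real marker pool would need something extra (for example, versioned or expiring markers) so a marker is not cleaned before the latest write has replicated.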

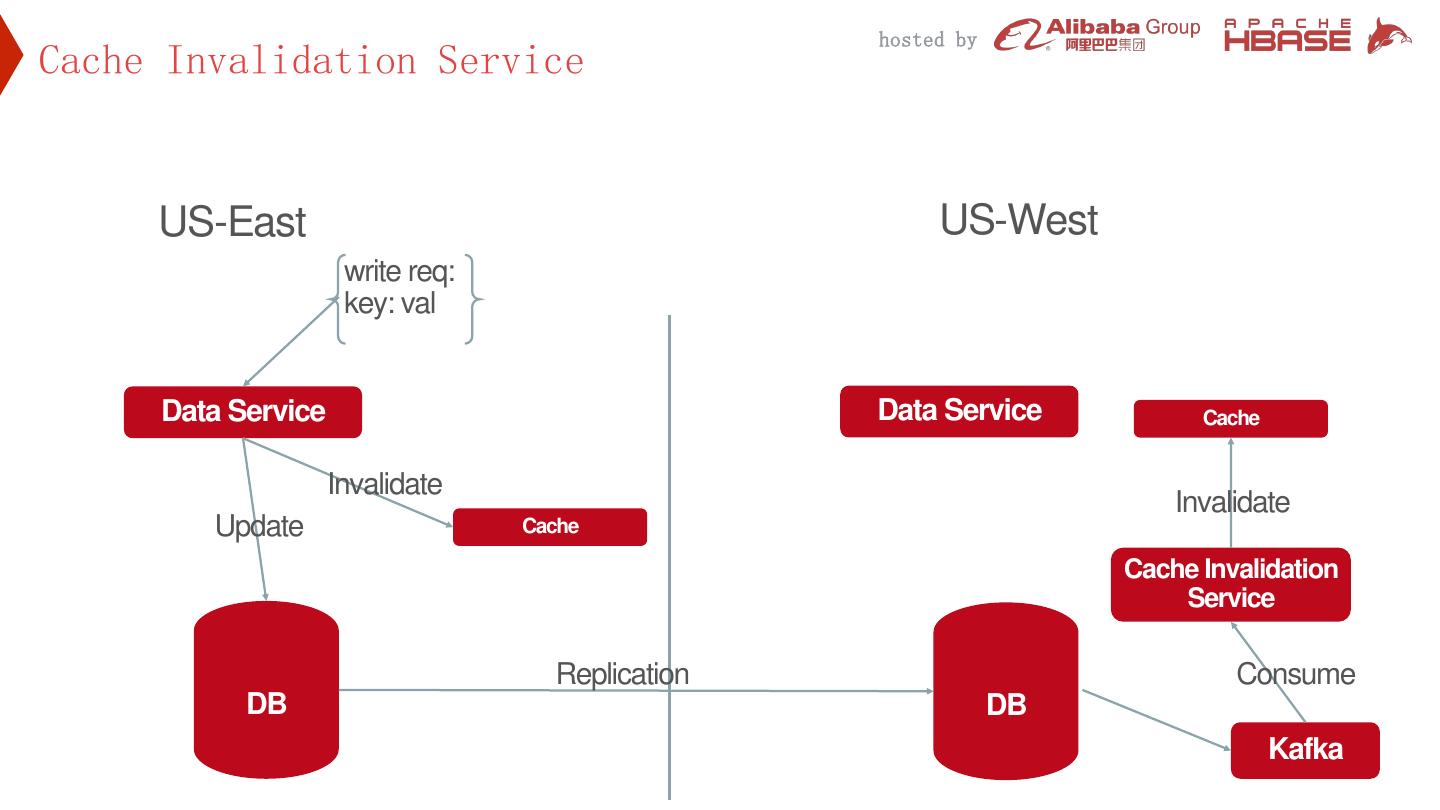

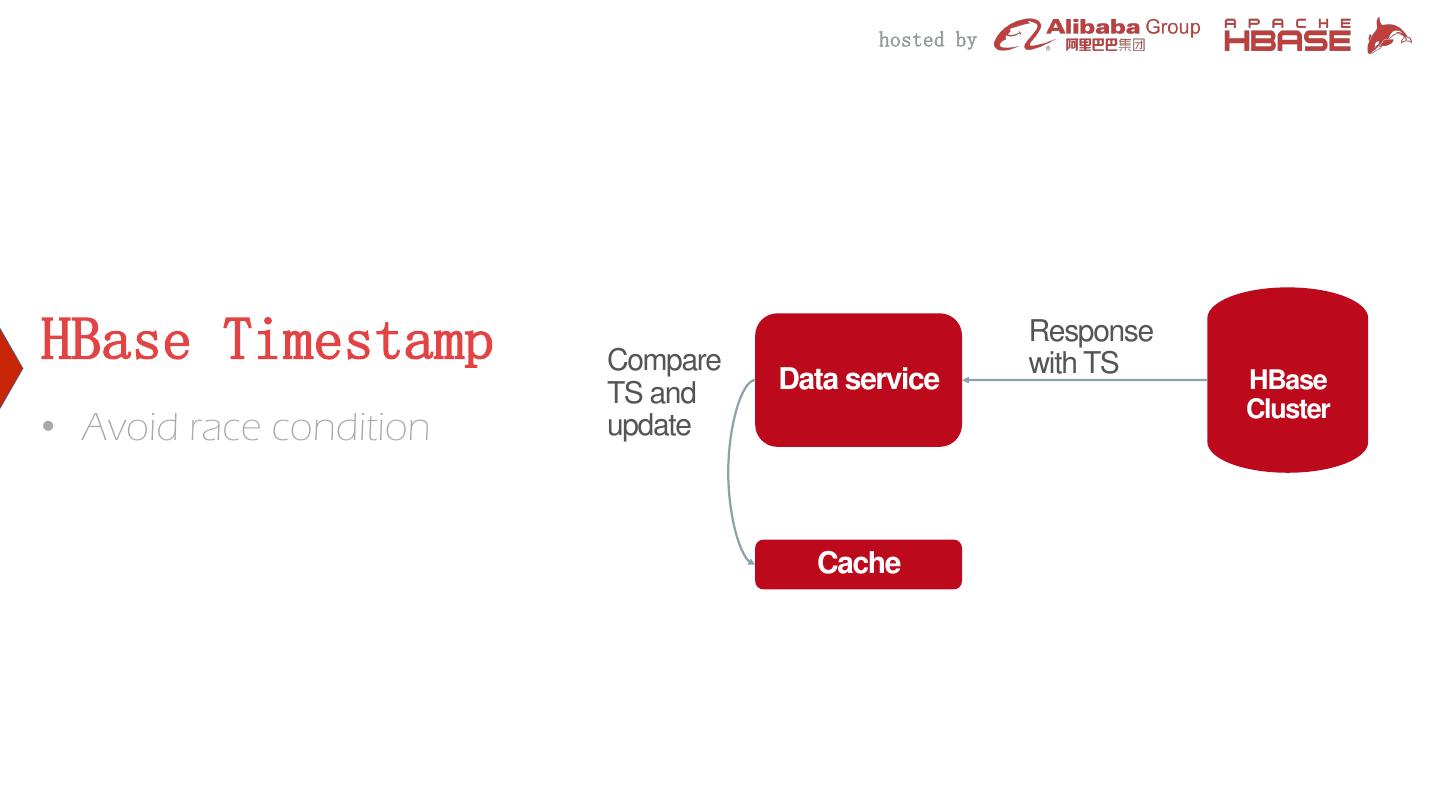

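11. Cache Invalidation Service

[Diagram: a write request (key: val) in US-East makes the Data Service update the DB and invalidate its Cache. DB replication carries the change to US-West, and DB changes are also published to Kafka, which a Cache Invalidation Service consumes in order to invalidate the Caches.]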
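
A minimal sketch of the consuming side of the Cache Invalidation Service, assuming each Kafka event carries the changed key. `ChangeEvent` and `CacheInvalidationService` are hypothetical names, plain dicts stand in for the caches, and an iterable of events stands in for a Kafka consumer.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class ChangeEvent:
    """Hypothetical change event read from Kafka: which key changed."""
    key: str


class CacheInvalidationService:
    """Deletes changed keys from every registered cache."""

    def __init__(self, caches: List[dict]):
        self.caches = caches  # e.g. one dict per region's cache

    def consume(self, events: Iterable[ChangeEvent]) -> int:
        """Process a batch of events; return how many cache entries were dropped."""
        dropped = 0
        for event in events:  # a real service would poll a Kafka consumer here
            for cache in self.caches:
                if event.key in cache:
                    del cache[event.key]  # invalidate; the next read refills from the DB
                    dropped += 1
        return dropped
```

Deleting instead of writing the new value keeps the consumer simple: a delete is idempotent, so replayed or reordered events cannot leave an older value in the cache.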

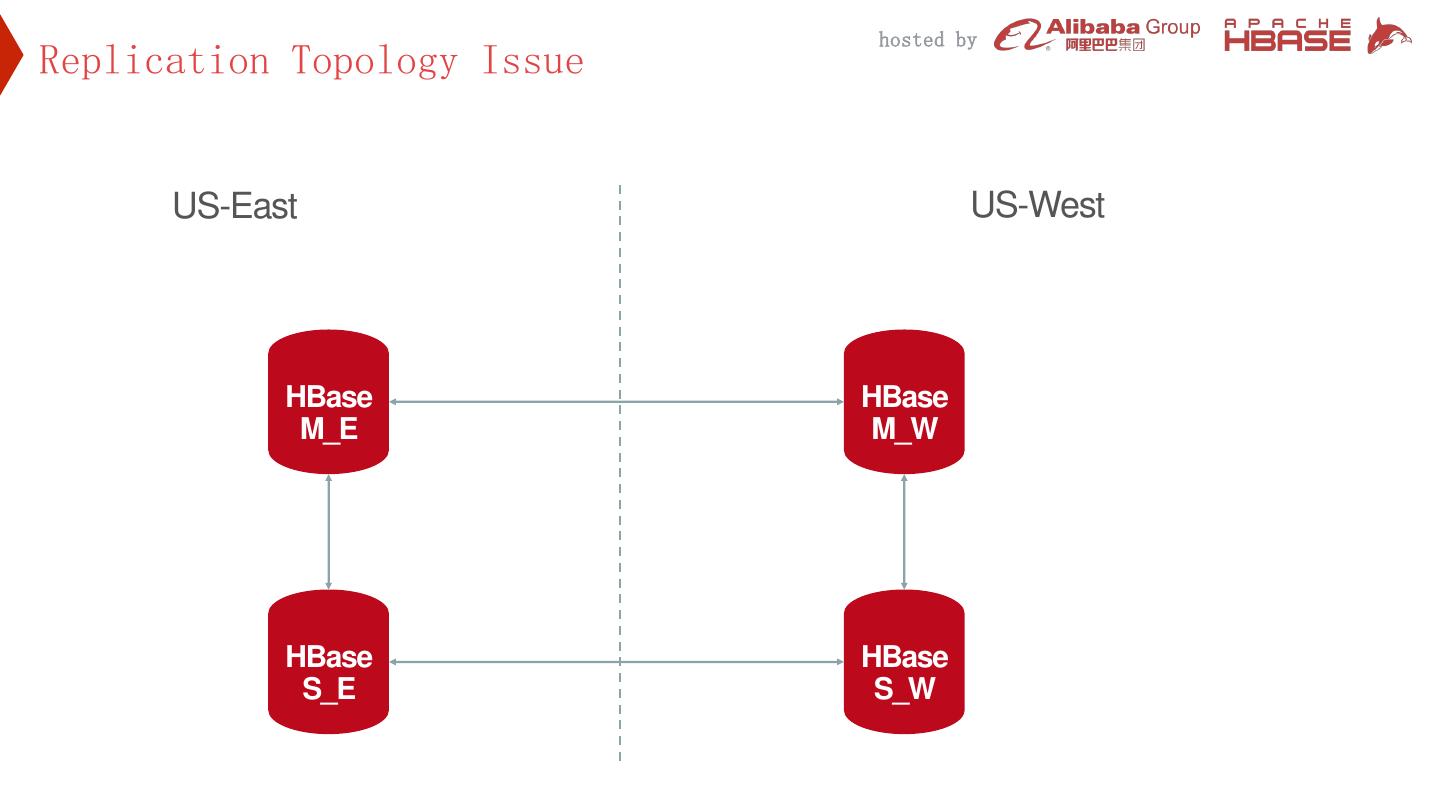

12. DB: MySQL and HBase

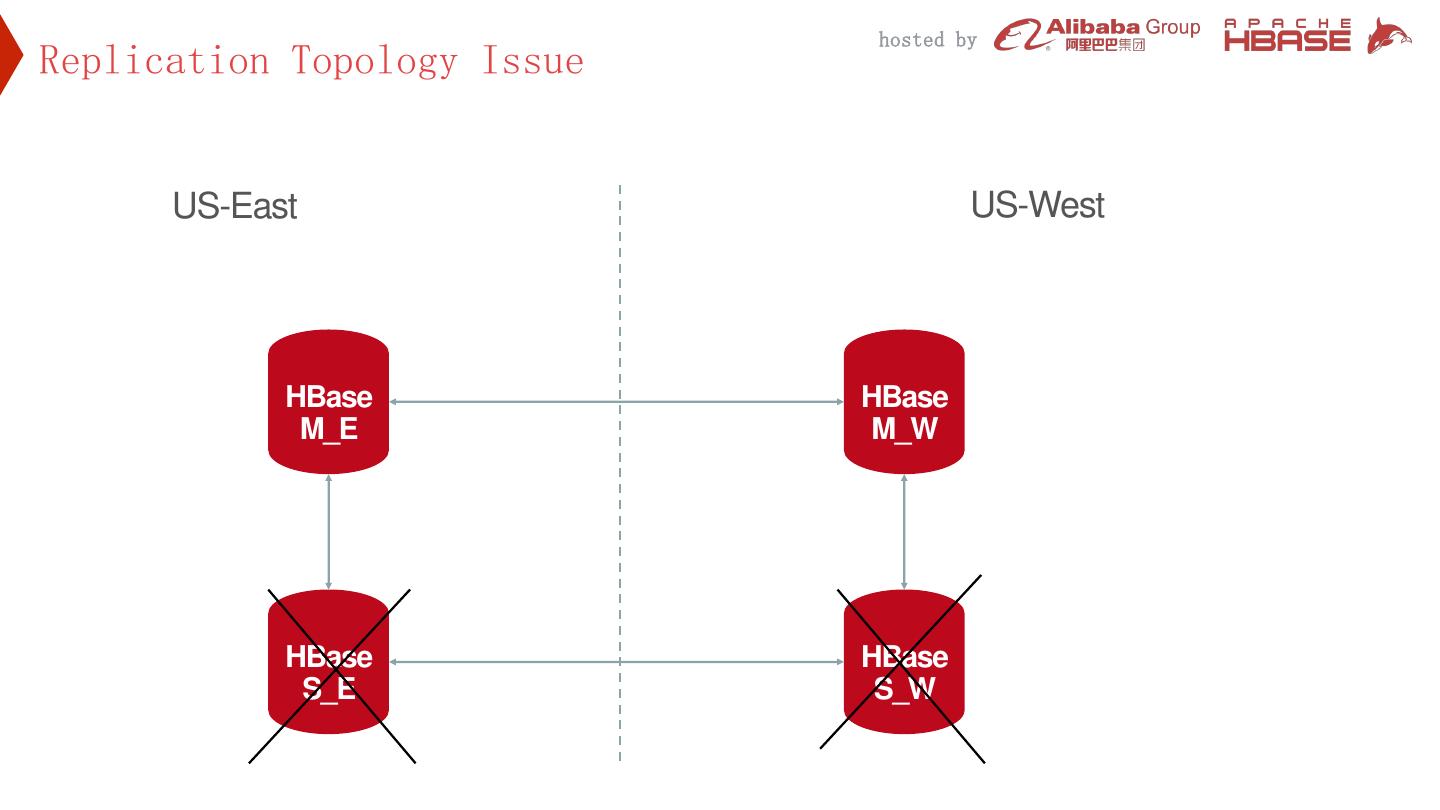

13. MySQL

[Diagram: MySQL → Maxwell → "MySQL Comment"]

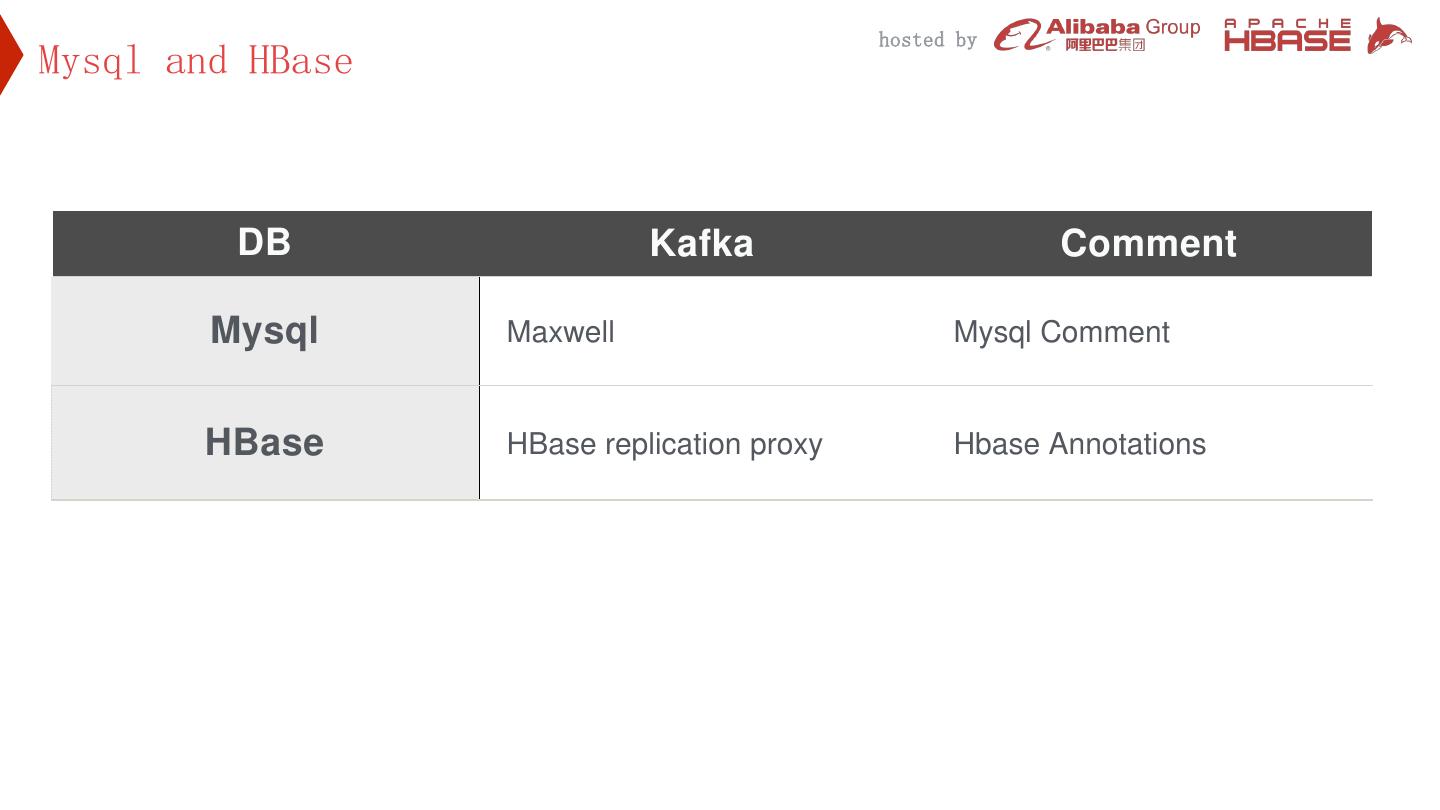

15. MySQL and HBase

[Diagram: DB changes flow into Kafka. MySQL changes go through Maxwell (label: "MySQL Comment"); HBase changes go through the HBase Replication Proxy (label: "HBase Annotations").]

16. HBase Replication Proxy (HRP)

[Diagram: HBase Cluster A replicates to HBase Cluster B and also to the HRP, which publishes to Kafka.]

- Exposes the HBase replicate API
- Publishes to a customized Kafka topic
- Each event corresponds to one mutation
- Multiple HBase clusters share one HRP
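
The HRP's core job, turning replicated WAL entries into one event per mutation, can be sketched as follows. This models the data flow only and is not the HBase replication API: `Mutation`, `WalEntry`, `ReplicationProxy`, the topic naming scheme and the `publish` callback (which a Kafka producer would back in practice) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Mutation:
    row: str
    column: str  # "family:qualifier"
    value: bytes
    ts: int


@dataclass
class WalEntry:
    table: str
    mutations: List[Mutation]


class ReplicationProxy:
    """Hypothetical HRP-style sink: it receives what a replication peer would
    receive and turns every mutation into one event on a Kafka topic."""

    def __init__(self, publish: Callable[[str, dict], None]):
        self.publish = publish  # (topic, event) -> None; a Kafka producer in practice

    def replicate(self, source_cluster: str, entries: List[WalEntry]) -> int:
        """Publish one event per mutation; return the number of events sent."""
        sent = 0
        for entry in entries:
            topic = f"{source_cluster}.{entry.table}"  # hypothetical topic naming
            for m in entry.mutations:
                # value omitted: consumers such as cache invalidation only need the key
                self.publish(topic, {"row": m.row, "column": m.column, "ts": m.ts})
                sent += 1
        return sent
```

Because the source cluster is a parameter of `replicate`, one proxy instance can serve several clusters, matching the "multiple HBase clusters share one HRP" point.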

17. HBase Annotations

- Part of the Mutate request
- Written to the WAL, but not to the MemStore
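
The point of slide 17 is where an annotation ends up. Here is a toy model of a region's write path, assuming an annotation is an optional dict attached to a put: it is appended to the WAL entry (which replication, and therefore the HRP, reads) but never stored in the MemStore, so normal reads never see it. `Put`, `RegionStore` and the annotation contents are hypothetical; this is not HBase's internal API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Put:
    row: str
    value: str
    annotation: Optional[dict] = None  # hypothetical metadata, e.g. the writing service


@dataclass
class RegionStore:
    wal: List[dict] = field(default_factory=list)
    memstore: Dict[str, str] = field(default_factory=dict)

    def apply(self, put: Put) -> None:
        entry = {"row": put.row, "value": put.value}
        if put.annotation is not None:
            entry["annotation"] = put.annotation  # travels with the WAL entry only
        self.wal.append(entry)              # replication tails the WAL
        self.memstore[put.row] = put.value  # the annotation is not stored here
```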

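18. HBase Timestamp

[Diagram: the Data Service writes to the HBase Cluster, which responds with the write's timestamp (TS); the Data Service compares TS before updating the Cache.]

- Avoids race conditions when updating the cache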
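
A minimal sketch of the timestamp comparison, assuming the cache stores each value together with the HBase timestamp returned for its write. `TimestampedCache` is a hypothetical name. An update is applied only if its timestamp is newer than the one already cached, so a slow response for an older write cannot overwrite a newer value.

```python
import threading


class TimestampedCache:
    """Keeps (value, ts) per key and ignores updates that are not newer."""

    def __init__(self):
        self._data = {}
        self._lock = threading.Lock()  # makes compare-and-set atomic

    def update(self, key, value, ts) -> bool:
        with self._lock:
            current = self._data.get(key)
            if current is not None and current[1] >= ts:
                return False  # stale response from a slower writer; drop it
            self._data[key] = (value, ts)
            return True

    def get(self, key):
        with self._lock:
            entry = self._data.get(key)
        return None if entry is None else entry[0]
```

For example, if write A gets TS 100 and write B gets TS 101 but B's response arrives first, `update("k", "B", 101)` succeeds and the later `update("k", "A", 100)` is rejected, leaving "B" cached.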

19. Replication Topology Issue

[Diagram: US-East has HBase clusters M_E (master) and S_E (slave); US-West has HBase clusters M_W (master) and S_W (slave).]

22. Replication Topology Issue

[Diagram: the same US-East/US-West topology (M_E, S_E, M_W, S_W), now with ZK in each region.]

- Get the remote master's region server list
- Update ZK with the master's region server list
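
The two steps above can be sketched as a small sync function. This is only an illustration: a dict stands in for a ZooKeeper client, and the znode path and the `fetch_remote_master_servers` callable are hypothetical, since the slides do not say which znode is updated or how the list is fetched. Sorting and deduplicating the list keeps the comparison stable, so ZK is written only when the membership actually changes.

```python
from typing import Callable, Dict, List


def sync_master_region_servers(
    zk: Dict[str, List[str]],
    znode: str,
    fetch_remote_master_servers: Callable[[], List[str]],
) -> bool:
    """Copy the remote master's region server list into a local znode.

    `zk` is a dict standing in for a ZooKeeper client; `znode` and the fetch
    callable are hypothetical. Returns True if the znode was updated.
    """
    servers = sorted(set(fetch_remote_master_servers()))  # canonical order
    if zk.get(znode) == servers:
        return False  # already up to date; skip a needless write
    zk[znode] = servers
    return True
```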

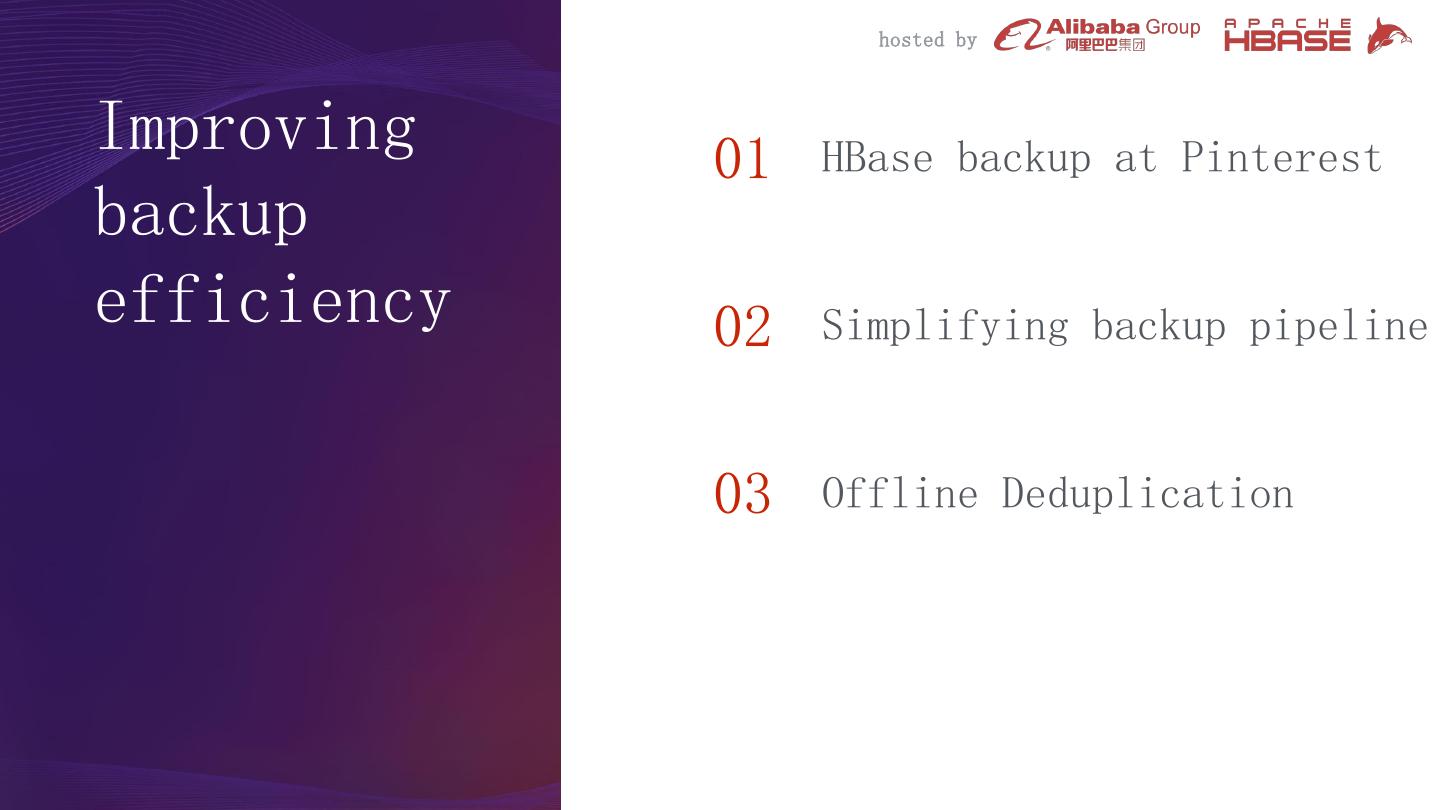

23. Improving Backup Efficiency

- 01 HBase backup at Pinterest
- 02 Simplifying the backup pipeline
- 03 Offline deduplication

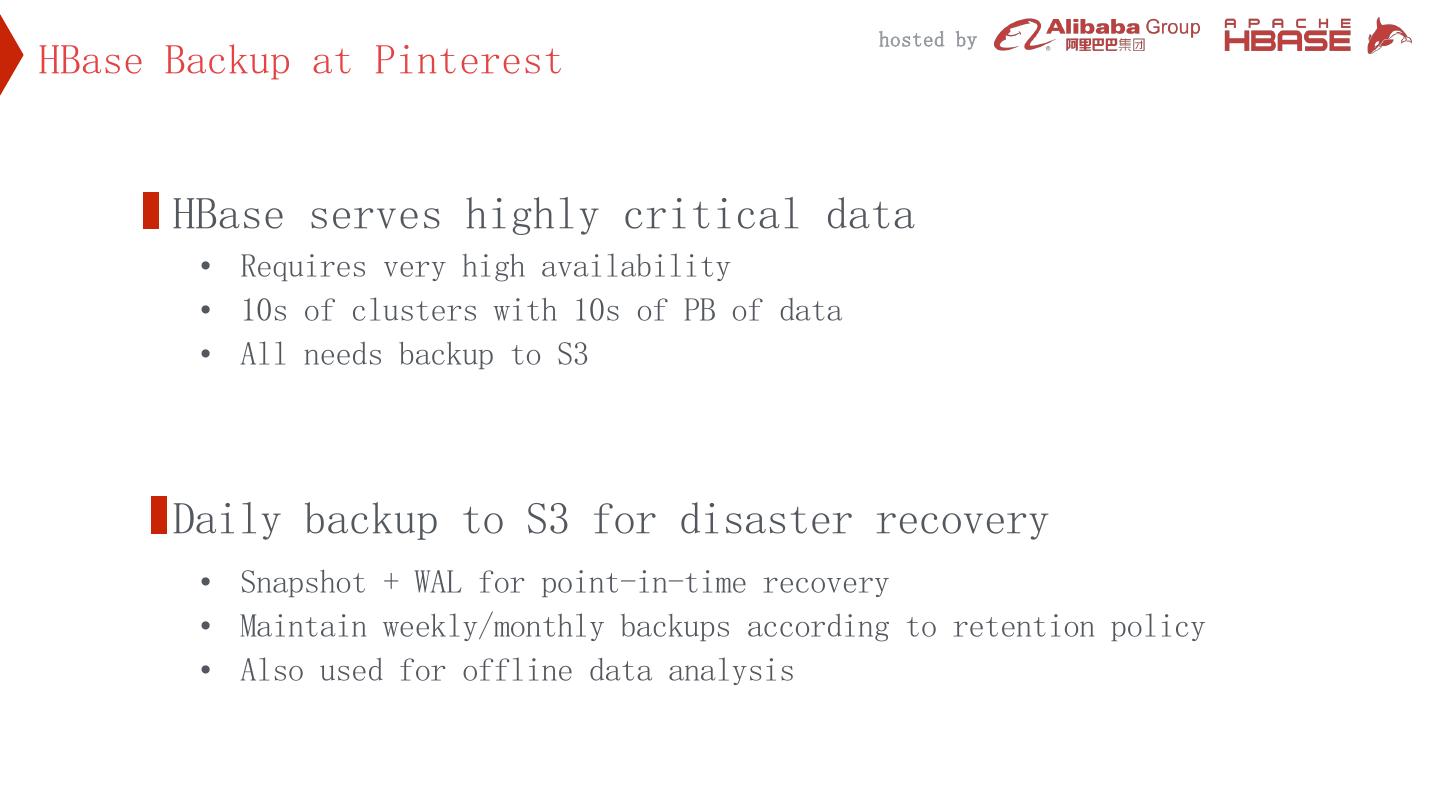

24. HBase Backup at Pinterest

HBase serves highly critical data:

- Requires very high availability
- 10s of clusters with 10s of PB of data
- All of it needs to be backed up to S3

Daily backup to S3 for disaster recovery:

- Snapshot + WAL for point-in-time recovery
- Weekly/monthly backups maintained according to the retention policy
- Also used for offline data analysis
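
How "snapshot + WAL" gives point-in-time recovery can be sketched as a restore planner: pick the newest snapshot taken at or before the target time, then replay every WAL segment that overlaps the window after that snapshot, up to the target. Timestamps are plain integers here, and representing WAL segments as `(start_ts, end_ts)` pairs is an assumption for illustration. During replay, edits in the last segment would still be filtered to `ts <= target`.

```python
from bisect import bisect_right
from typing import List, Tuple


def plan_point_in_time_restore(
    snapshot_times: List[int],
    wal_segments: List[Tuple[int, int]],
    target: int,
) -> Tuple[int, List[Tuple[int, int]]]:
    """Return (base snapshot time, WAL segments to replay) for a restore to `target`."""
    snaps = sorted(snapshot_times)
    i = bisect_right(snaps, target)  # number of snapshots taken at or before target
    if i == 0:
        raise ValueError("no snapshot at or before the target time")
    base = snaps[i - 1]
    # A segment is needed if it overlaps the interval (base, target].
    replay = [(s, e) for s, e in sorted(wal_segments) if e > base and s <= target]
    return base, replay
```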

25. Legacy Backup Problem

HBase 0.94 does not support exporting to S3, so backups used a two-step pipeline:

- HBase → HDFS backup cluster
- HDFS → S3

[Diagram: many HBase clusters send snapshots and WALs to an HDFS backup cluster, which uploads them to AWS S3.]

Problems with the HDFS backup cluster:

- Infrastructure cost grows as data volume increases
- Operational pain on failure

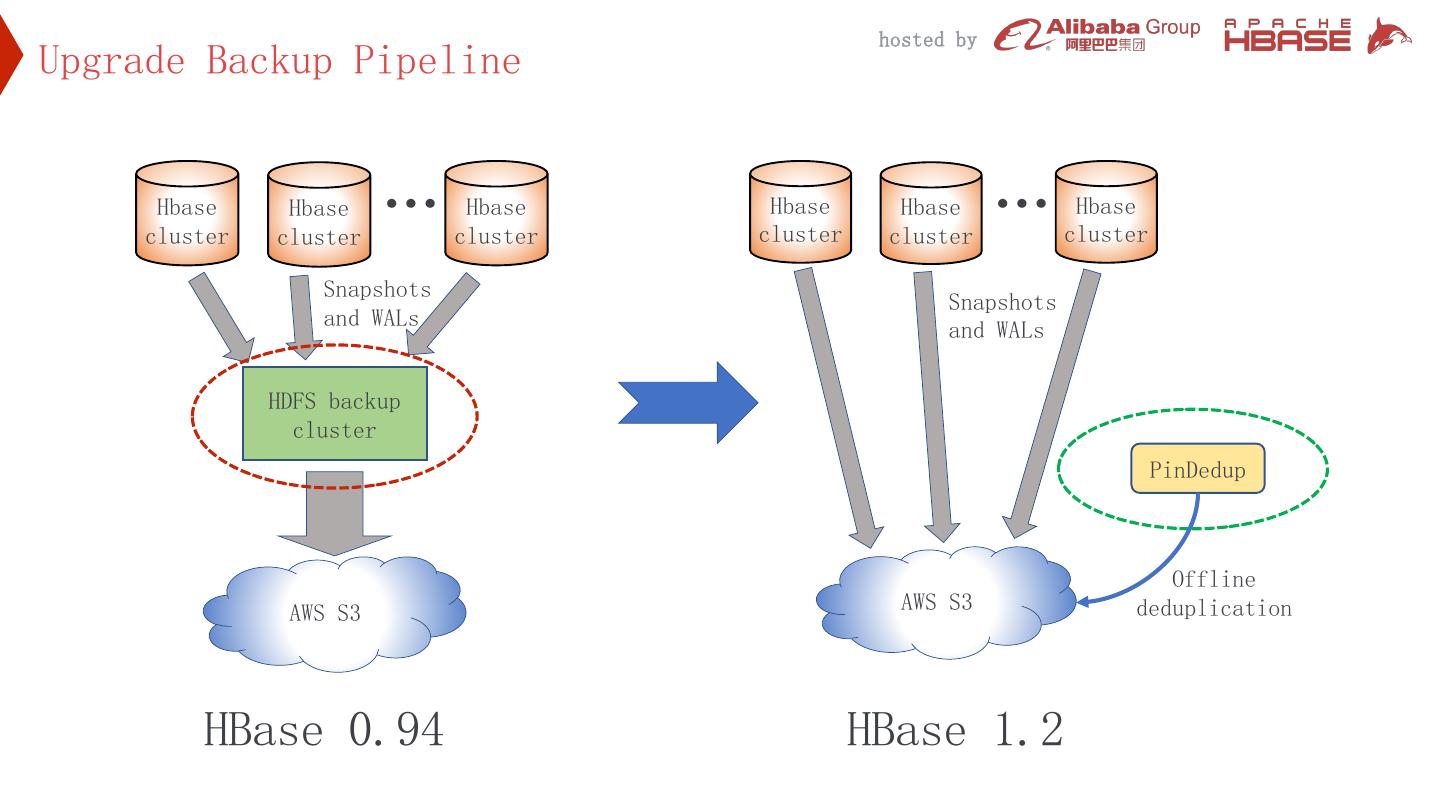

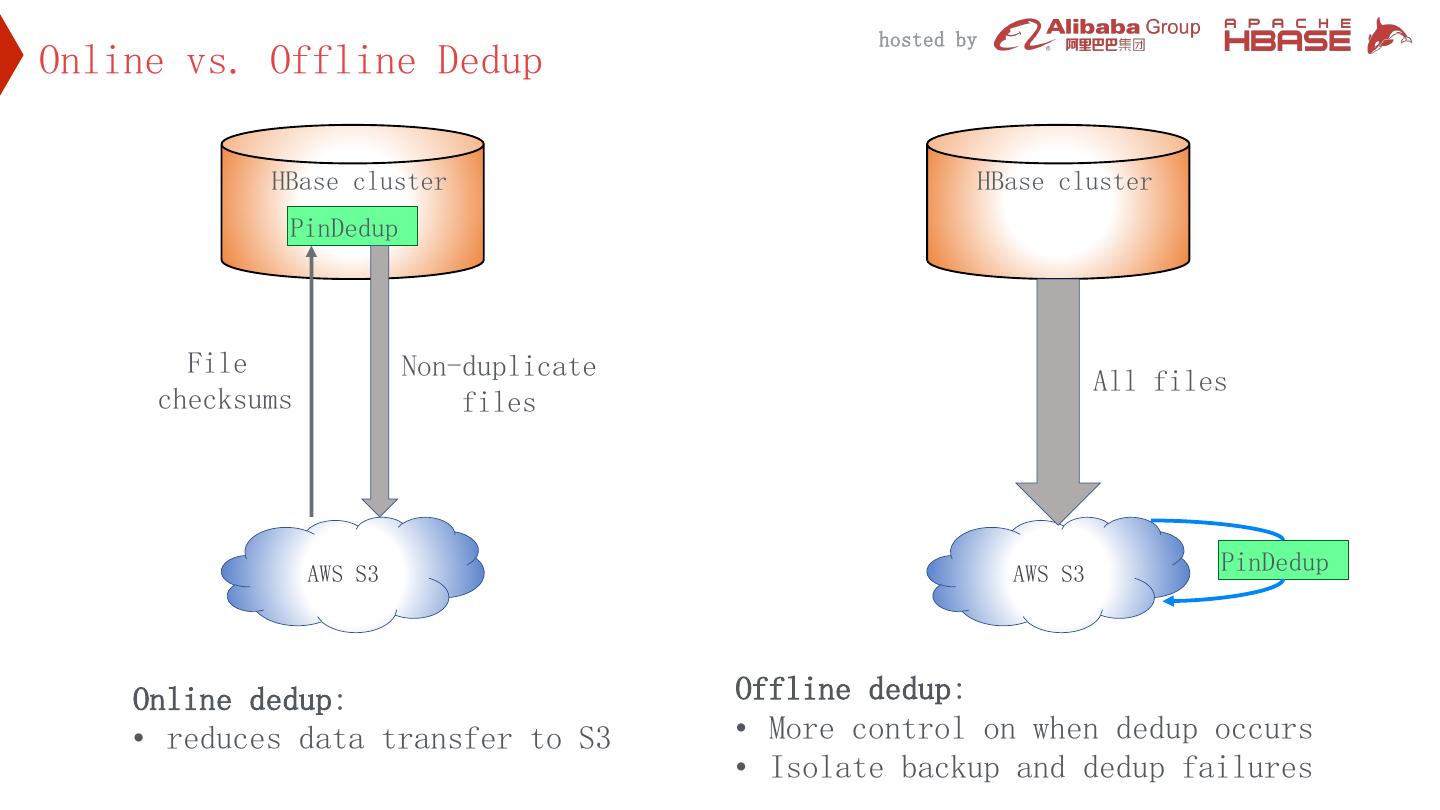

26. Upgrade Backup Pipeline

[Diagram: before (HBase 0.94), HBase clusters send snapshots and WALs to an HDFS backup cluster, which uploads to AWS S3. After (HBase 1.2), HBase clusters send snapshots and WALs directly to AWS S3, followed by PinDedup offline deduplication.]

27. Challenge and Approach

Directly export HBase backups to S3:

- Table export done using a variant of distcp
- Use the S3A client with the fast upload option

Direct S3 upload is very CPU-intensive:

- Large HFiles are broken down into smaller chunks
- Each chunk must be hashed and signed before upload

Minimize impact on production HBase clusters:

- Constrain the max number of threads and YARN containers per host
- Max CPU overhead during backup < 30%
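
To see why chunked upload costs CPU, and how a thread cap bounds that cost, here is a sketch of just the hashing half. It splits a file's bytes into fixed-size parts and MD5s each part with a limited thread pool. It makes no S3 calls and does not reproduce the S3A client's internals; `chunk_md5s` and its parameters are hypothetical, and `max_threads` plays the role of the per-host thread limit mentioned above.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import List


def chunk_md5s(data: bytes, chunk_size: int, max_threads: int) -> List[str]:
    """Split `data` into `chunk_size`-byte parts and MD5 each part, in order,
    using at most `max_threads` worker threads."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=max_threads) as pool:
        # pool.map preserves input order, so part i's digest is at index i
        return list(pool.map(lambda c: hashlib.md5(c).hexdigest(), chunks))
```

Lowering `max_threads` trades backup speed for less CPU contention with the serving workload, which is the trade-off behind the "< 30% CPU overhead" target.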

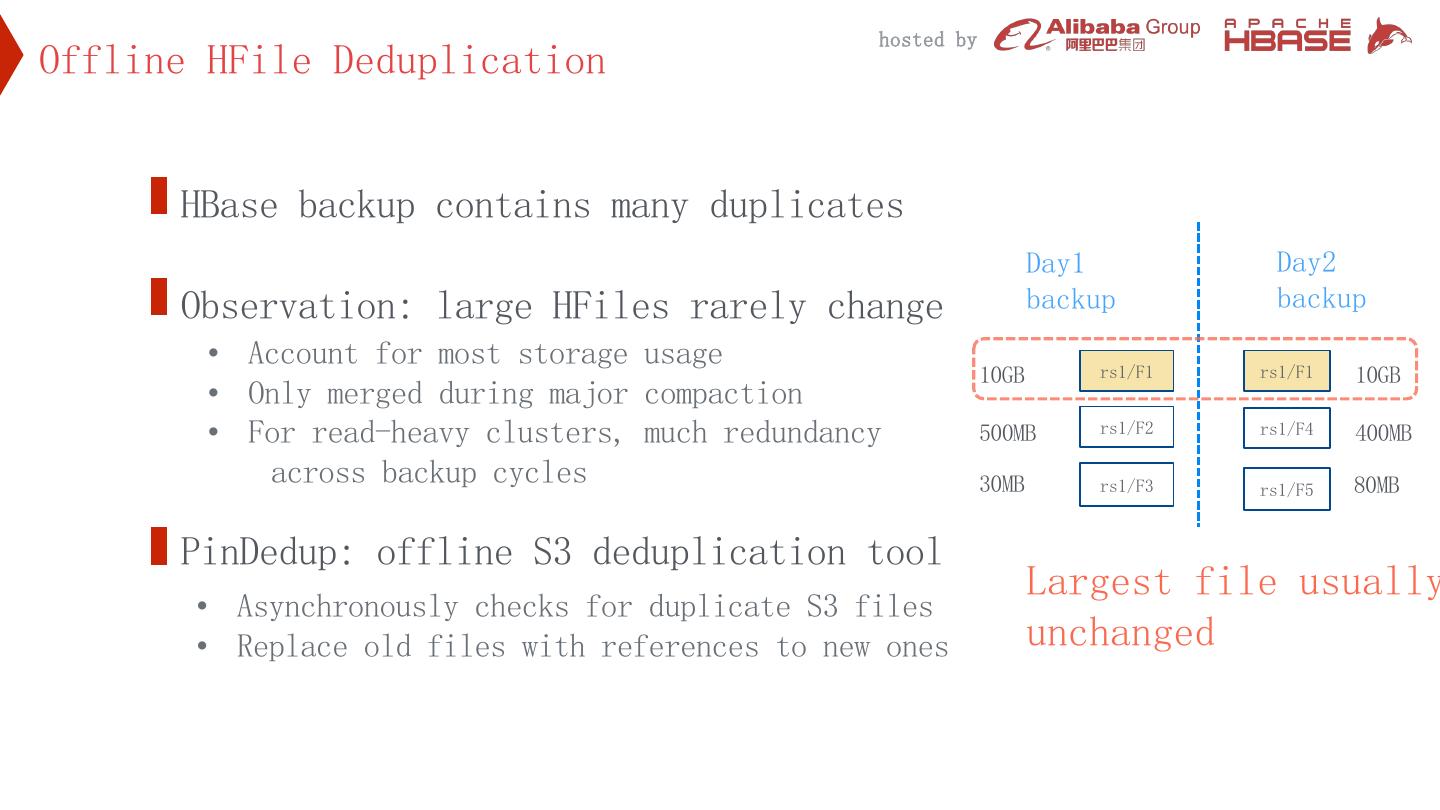

28. Offline HFile Deduplication

HBase backups contain many duplicates. Observation: large HFiles rarely change.

- They account for most storage usage
- They are only merged during major compaction
- For read-heavy clusters, there is much redundancy across backup cycles

[Figure: the Day 1 backup holds rs1/F1 (10GB), rs1/F2 (500MB) and rs1/F3 (30MB); the Day 2 backup holds rs1/F1 (10GB), rs1/F4 (400MB) and rs1/F5 (80MB). The largest file is usually unchanged.]

PinDedup: an offline S3 deduplication tool

- Asynchronously checks for duplicate S3 files
- Replaces old files with references to new ones
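
"Replace old files with references to new ones" can be sketched over an in-memory object store. A dict stands in for S3 and a `Ref` string subclass marks an object whose body was swapped for a pointer to the newer copy. `Ref`, `replace_with_reference` and `resolve` are hypothetical names, and comparing full bodies is a simplification of PinDedup's name-plus-checksum test. Readers, such as a restore job, follow references until they reach real data, so chains like day 1 → day 2 → day 3 still resolve.

```python
from typing import Dict, Union


class Ref(str):
    """Marks an object whose body was replaced by a pointer to another key."""


Store = Dict[str, Union[bytes, Ref]]


def replace_with_reference(store: Store, old_key: str, new_key: str) -> int:
    """Swap the older copy for a reference to the newer one; return bytes freed."""
    old = store[old_key]
    if isinstance(old, Ref) or store.get(new_key) != old:
        return 0  # already deduplicated, or not an exact duplicate
    store[old_key] = Ref(new_key)
    return len(old)


def resolve(store: Store, key: str) -> bytes:
    """Follow references until real data is found."""
    value = store[key]
    while isinstance(value, Ref):
        value = store[value]  # Ref hashes and compares like a plain str key
    return value
```

One consequence of this design is that retention cleanup must not delete a newer object while older backups still reference it.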

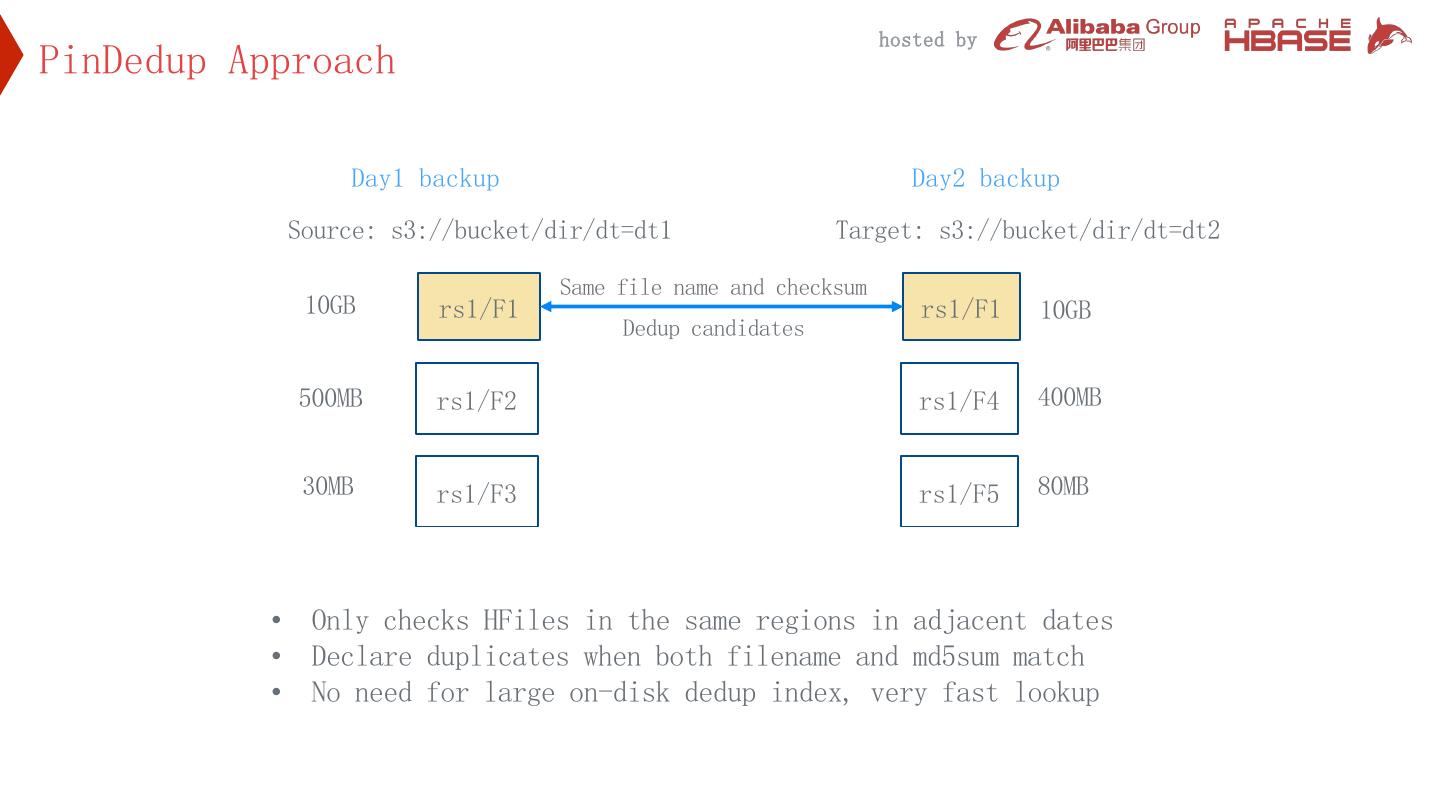

29. PinDedup Approach

[Figure: source s3://bucket/dir/dt=dt1 (Day 1 backup) vs. target s3://bucket/dir/dt=dt2 (Day 2 backup). rs1/F1 (10GB) has the same file name and checksum on both days, so it is a dedup candidate; rs1/F2 (500MB) and rs1/F3 (30MB) on Day 1 and rs1/F4 (400MB) and rs1/F5 (80MB) on Day 2 are not.]

- Only checks HFiles in the same regions on adjacent dates
- Declares duplicates when both file name and md5sum match
- No need for a large on-disk dedup index; lookups are very fast
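
The matching rule above fits in a dict lookup per file. This sketch represents each day's backup as a listing from region-relative path to (size in MB, md5). The sizes are the slide's example, treating 10GB as 10,000 MB, and the checksums are placeholders. Because only the same path (same region, same file name) on the adjacent day is checked, no global dedup index is needed.

```python
from typing import Dict, List, Tuple

# One day's backup listing: region-relative path -> (size in MB, md5)
Listing = Dict[str, Tuple[int, str]]


def dedup_candidates(source: Listing, target: Listing) -> List[str]:
    """Paths present on both adjacent days with matching name and md5sum."""
    return sorted(
        path
        for path, (_, md5) in source.items()
        if path in target and target[path][1] == md5
    )


# Sizes from the slide's example; checksums are placeholders.
day1: Listing = {"rs1/F1": (10_000, "md5-F1"), "rs1/F2": (500, "md5-F2"), "rs1/F3": (30, "md5-F3")}
day2: Listing = {"rs1/F1": (10_000, "md5-F1"), "rs1/F4": (400, "md5-F4"), "rs1/F5": (80, "md5-F5")}

dups = dedup_candidates(day1, day2)        # ['rs1/F1']
saved_mb = sum(day1[p][0] for p in dups)   # 10000
```

In this example, deduplicating the single large unchanged HFile removes 10,000 MB of the 10,530 MB Day 1 backup, which is why focusing on large, rarely compacted HFiles pays off.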